Discourses of dependency and the history of a social problem

Terminology

The term "welfare dependency" is itself controversial, often carrying derogatory connotations or insinuations that the recipient is unwilling to work. Historian Michael B. Katz discussed the discourses surrounding poverty in his 1989 book The Undeserving Poor, where he elaborated upon the distinctions Americans make between so-called “deserving” recipients of aid, such as widows, and “undeserving” ones, like single-parent mothers, with the distinction being that the former have fallen upon hard times through no fault of their own whereas the latter are seen as having chosen to live on the public purse. Drawing this dichotomy diverts attention from the structural factors that cause and entrench poverty, such as economic change. Instead of focusing on how to tackle the root causes of poverty, people focus on attacking the supposed poor character of the recipient.

While the term “welfare dependence” is in itself politically neutral and merely describes a state of drawing benefits, in conventional usage it has taken on a very negative meaning that blames welfare recipients for social ills and insinuates they are morally deficient. In his 1995 book The War Against the Poor, Columbia University sociology professor Herbert Gans asserted that the label “welfare recipient,” when used to malign a poor person, transforms the individual’s experience of being in poverty into a personal failing while ignoring positive aspects of their character. For example, Gans writes, “That a welfare recipient may be a fine mother becomes irrelevant; the label assumes that she, like all others in her family, is a bad mother, and she is given no chance to prove otherwise.” In this way, structural factors that cause a person to be reliant on benefit payments for the majority of his or her income are in essence ignored because the problem is seen as situated within the person, not society. To describe a person as welfare dependent can therefore be interpreted as "blaming the victim," depending on context.

The term "welfare-reliant," as used by Edin and Lein (1996), can describe the same concept with potentially fewer negative connotations.

Welfare, long-term reliance, and policy

There is a great deal of overlap between discourses of welfare dependency and the stereotype of the welfare queen: long-term welfare recipients are often seen as draining public resources they have done nothing to earn, and are stereotyped as doing nothing to improve their situation and as choosing to draw benefits when alternatives are available. This contributes to the stigmatization of welfare recipients. While the stereotype of a long-term welfare recipient involves not wanting to work, in reality a large proportion of welfare recipients are engaged in some form of paid work but still cannot make ends meet.

Attention was drawn to the issue of long-term reliance on welfare in the Moynihan Report. Assistant Secretary of Labor Daniel Patrick Moynihan argued that in the wake of the 1964 Civil Rights Act, urban Black Americans would still suffer disadvantage and remain entrenched in poverty due to the decay of the family structure. Moynihan wrote, “The steady expansion of welfare programs can be taken as a measure of the steady disintegration of the Negro family structure over the past generation in the United States.” The relatively high proportion of Black families headed by single-parent mothers, along with the high proportion of children born out of wedlock, was seen as a pernicious social problem – one leading to long-term poverty and consequently reliance on welfare benefits for income, as there would be no male breadwinner working while the mother took care of her children.

From 1960 to 1975, both the percentage of families headed by single-parent mothers and reliance on welfare payments increased. At the same time, research began indicating that the majority of people living below the poverty line experienced only short spells of poverty, casting doubt on the notion of an entrenched underclass. For example, a worker who lost a job might be categorized as poor for a few months prior to re-entering full-time employment, and would be much less likely to end up in a situation of long-term poverty than a single-parent mother with little formal education, even if both were considered “poor” for statistical purposes.

In 1983, researchers Mary Jo Bane and David T. Ellwood used the Panel Study of Income Dynamics to examine the duration of spells of poverty (defined as continuous periods spent with income under the poverty line), looking specifically at entry and exit. They found that while three in five people who were just beginning a spell of poverty came out of it within three years, only one-quarter of people who had already been poor for three years were able to exit poverty within the next two. The probability that a person will be able to exit poverty declines as the spell lengthens. A small but significant group of recipients remained on welfare for much longer, forming the bulk of poverty at any one point in time and requiring the most in government resources. At any one time, if a cross-sectional sample of poor people in the United States were taken, about 60% would be in a spell of poverty that would last at least eight years. Interest thus arose in studying the determinants of long-term receipt of welfare. Bane & Ellwood found that only 37% of poor people in their sample became poor as a result of the head of household’s wages decreasing, and that their average spell of poverty lasted less than four years. On the other hand, spells of poverty that began when a woman became head of household lasted on average for more than five years. Children born into poverty were particularly likely to remain poor.
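The apparent tension between "most new spells are short" and "most people who are poor at a given moment are in long spells" follows from length-weighted sampling. The sketch below illustrates the arithmetic under a steady-state assumption. The spell-length distribution in `NEW_SPELL_SHARES` is hypothetical, chosen only so that three in five new spells end within three years; it is not Bane and Ellwood's data, and the function names are illustrative.

```python
from fractions import Fraction

# Hypothetical shares of completed spell lengths (in years) among people who
# START a spell of poverty. Illustrative assumptions, not published estimates.
NEW_SPELL_SHARES = {
    1: Fraction(35, 100),
    2: Fraction(15, 100),
    3: Fraction(10, 100),
    5: Fraction(15, 100),
    8: Fraction(10, 100),
    12: Fraction(15, 100),
}


def point_in_time_shares(new_spell_shares):
    """Convert shares of new spells into shares of people poor at one moment.

    Assumes a steady state with a constant inflow of new spells: a spell that
    lasts L years is in progress for L years, so its weight in a
    cross-sectional sample is proportional to (share of new spells) * L.
    """
    weights = {length: share * length for length, share in new_spell_shares.items()}
    total = sum(weights.values())
    return {length: weight / total for length, weight in weights.items()}


def share_at_least(shares, min_years):
    """Total share of spells lasting at least `min_years` years."""
    return sum(share for length, share in shares.items() if length >= min_years)


if __name__ == "__main__":
    cross_section = point_in_time_shares(NEW_SPELL_SHARES)
    print("Long spells among new spells:", float(share_at_least(NEW_SPELL_SHARES, 8)))
    print("Long spells in a cross-section:", float(share_at_least(cross_section, 8)))
```

With these assumed shares, spells of eight years or more make up a quarter of new spells but roughly three-fifths of the spells observed in a cross-section, because long spells accumulate in the stock of poor people while short spells cycle out quickly.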

Reform: the rise of workfare

In the popular imagination, welfare came to be seen as something that the poor had made into a lifestyle rather than a safety net. The federal government had been urging single-parent mothers to take on paid work in an effort to reduce welfare rolls since the introduction of the WIN Program in 1967, but in the 1980s this emphasis became central to welfare policy. Emphasis turned toward personal responsibility and the attainment of self-sufficiency through work.

Conservative views of welfare dependency, coming from the perspective of classical economics, argued that individual behaviors and the policies that reward them lead to the entrenchment of poverty. Lawrence M. Mead's 1986 book Beyond Entitlement: The Social Obligations of Citizenship argued that American welfare was too permissive, giving out benefit payments without demanding anything from poor people in return, particularly not requiring the recipient to work. Mead viewed this as directly linked to the higher incidence of social problems among poor Americans, more as a cause than an effect of poverty:

- "[F]ederal programs have special difficulties in setting standards for their recipients. They seem to shield their clients from the threats and rewards that stem from private society – particularly the marketplace – while providing few sanctions of their own. The recipients seldom have to work or otherwise function to earn whatever income, service, or benefit a program gives; meager though it may be, they receive it essentially as an entitlement. Their place in American society is defined by their need and weakness, not their competence. This lack of accountability is among the reasons why nonwork, crime, family breakup, and other problems are much commoner among recipients than Americans generally."

Charles Murray argued that American social policy ignored people's inherent tendency to avoid hard work and be amoral, and that from the War on Poverty onward the government had given welfare recipients disincentives to work, marry, or have children in wedlock. His 1984 book Losing Ground was also highly influential in the welfare reforms of the 1990s.

In 1983, Bane & Ellwood found that one-third of single-parent mothers exited poverty through work, indicating that it was possible for employment to form a route out of reliance on welfare even for this particular group. Overall, four in five exits from poverty could be explained by an increase in earnings, according to their data. The idea of combining welfare reform with work programs in order to reduce long-term dependency received bipartisan support during the 1980s, culminating in the signing of the Family Support Act in 1988. This Act aimed to reduce the number of AFDC recipients, enforce child support payments, and establish a welfare-to-work program. One major component was the Job Opportunities and Basic Skills Training (JOBS) program, which provided remedial education and was specifically targeted to teenage mothers and recipients who had been on welfare for six years or more – those populations considered most likely to be welfare dependent. JOBS was to be administered by the states, with federal government matching up to a capped level of funding. A lack of resources, particularly in relation to financing and case management, stymied JOBS. However, in 1990, expansion of the Earned Income Tax Credit (EITC), first enacted in 1975, offered working poor families with children an incentive to remain in work. Also in that year, federal legislation aimed at providing child care to families who would otherwise be dependent on welfare aided single-parent mothers in particular.

Welfare reform during the Clinton presidency placed time limits on benefit receipt, replacing Aid to Families with Dependent Children and the JOBS program with Temporary Assistance for Needy Families (TANF) and requiring that recipients begin to work after two years of receiving these payments. Such measures were intended to decrease welfare dependence: The House Ways and Means Committee stated that the goal of the Personal Responsibility and Work Opportunity Reconciliation Act was to "reduce the length of welfare spells by attacking dependency while simultaneously preserving the function of welfare as a safety net for families experiencing temporary financial problems." This was a direct continuation of the line of thinking that had been prevalent in the 1980s, where personal responsibility was emphasized. TANF was administered by individual states, with funding coming from federal block grants. However, resources were not adjusted for inflation, caseload changes, or state spending changes. Unlike its predecessor AFDC, TANF had as its explicit goal the formation and maintenance of two-parent families and the prevention of out-of-wedlock births, reflecting the discourses that had come to surround long-term welfare receipt.

One shortcoming of workfare-based reform was that it did not take into account the fact that, due to welfare benefits often not paying enough to meet basic needs, a significant proportion of mothers on welfare already worked "off the books" to generate extra income without losing their welfare entitlements. Neither welfare nor work alone could provide enough money for daily expenses; only by combining the two could the recipients provide for themselves and their children. Even though working could make a woman eligible for the Earned Income Tax Credit, the amount was not enough to make up for the rest of her withdrawn welfare benefits. Work also brought with it related costs, such as transportation and child care. Without fundamental changes in the skill profile of the average single-parent mother on welfare to address structural changes in the economy, or a significant increase in pay for low-skilled work, withdrawing welfare benefits and leaving women with only work income meant that many faced a decline in overall income. Sociologists Kathryn Edin and Laura Lein interviewed mothers on welfare in Chicago, Charleston, Boston, and San Antonio, and found that while working mothers generally had more income left over after paying rent and food than welfare mothers did, the former were still worse-off financially because of the costs associated with work. Despite strong support for the idea that work will provide the income and opportunity to help people become self-sufficient, this approach has not alleviated the need for welfare payments in the first place: In 2005, approximately 52% of TANF recipients lived in a family with at least one working adult.

Measuring dependency

The United States Department of Health and Human Services defines ten indicators of welfare dependency:

- Indicator 1: Degree of Dependence, which can be measured by the percentage of total income from means-tested benefits. If greater than 50%, the recipient of welfare is considered to be dependent on it for the purposes of official statistics.

- Indicator 2: Receipt of Means-Tested Assistance and Labor Force Attachment, or what percentage of recipients are in families with different degrees of labor force participation.

- Indicator 3: Rates of Receipt of Means-Tested Assistance, or the percentage of the population receiving TANF, food stamps, and SSI.

- Indicator 4: Rates of Participation in Means-Tested Assistance Programs, or the percentage of people eligible for welfare benefits who are actually claiming them.

- Indicator 5: Multiple Program Receipt, or the percentage of recipients who are receiving at least two of TANF, food stamps, or SSI.

- Indicator 6: Dependence Transitions, which breaks down recipients by demographic characteristics and the level of income that welfare benefits represented for them in previous years.

- Indicator 7: Program Spell Duration, or for how long recipients draw the three means-tested benefits.

- Indicator 8: Welfare Spell Duration with No Labor Force Attachment, which measures how long recipients with no one working in their family remain on welfare.

- Indicator 9: Long Term Receipt, which breaks down spells on TANF by how long a person has been in receipt.

- Indicator 10: Events Associated with the Beginning and Ending of Program Spells, such as an increase in personal or household income, marriage, children no longer being eligible for a benefit, and/or transfer onto other benefits.

In 2005, the Department estimated that 3.8% of the American population could be considered dependent on welfare, calculated as having more than half of their family’s income coming from TANF, food stamps, and/or SSI payments, down from 5.2% in 1996. As 15.3% of the population was in receipt of welfare benefits in 2005, it follows that approximately one-quarter of welfare recipients were considered dependent as per the official measures. In general, measures of welfare dependence are assessed alongside the statistics for poverty in general.

Government measures of welfare dependence include welfare benefits associated with work. If such benefits were excluded from calculations, the dependency rate would be lower.
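The Indicator 1 threshold and the "approximately one-quarter" figure above can be checked with a few lines of arithmetic. The following is a minimal sketch: the function names are illustrative rather than part of any official methodology, and the only figures used are the 50% threshold and the 2005 rates quoted in this section.

```python
def is_dependent(means_tested_income, total_income, threshold=0.5):
    """Return True if MORE than `threshold` of total income is means-tested.

    Mirrors the Indicator 1 rule described above; exactly half does not count.
    """
    if total_income <= 0:
        raise ValueError("total_income must be positive")
    return means_tested_income / total_income > threshold


def dependent_share_of_recipients(dependency_rate, recipiency_rate):
    """Share of benefit recipients who are dependent.

    Both arguments are percentages of the total population, so their ratio is
    the fraction of recipients classified as dependent.
    """
    return dependency_rate / recipiency_rate


if __name__ == "__main__":
    # 2005 figures quoted in the text: 3.8% dependent, 15.3% receiving benefits.
    share = dependent_share_of_recipients(3.8, 15.3)
    print(f"Dependent share of recipients: {share:.1%}")
```

Dividing 3.8 by 15.3 gives a little under 25%, which is the basis for the one-quarter estimate.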

Risk factors

Demographic

Welfare dependence in the United States is typically associated with female-headed households with children. Mothers who have never been married are more likely to stay on welfare for long periods of time than their counterparts who have ever been married, including women who became separated or divorced from their partners. In her study using data from the 1984 Survey of Income and Program Participation, Patricia Ruggles found that 40% of never-married mothers remained on welfare for more than two years, and that while the median time spent on welfare for ever-married women was only 8 months, for never-married women it was between 17 and 18 months. Statistics from 2005 show that while only 1% of people living in married-couple families could be classified as welfare-dependent as per the government definition, 14% of people in families headed by single-parent mothers were dependent.

Teenage mothers in particular are susceptible to having to rely on welfare for long periods of time because their interruption in schooling combined with the responsibilities of childrearing prevent them from gaining employment; there is no significant difference between single-parent and married teenage mothers because their partners are likely to be poor as well. While many young and/or single-parent mothers do seek work, their relatively low skill levels along with the burdens of finding appropriate childcare hurt their chances of remaining employed.

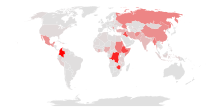

Black women are more likely than their White counterparts to be lone parents, which partially explains their higher rate of welfare dependency. At the time of the Moynihan Report, approximately one-quarter of Black households were headed by women, compared to about one in ten White households. Ruggles’ data analysis found that, in 1984, the median time on welfare for nonwhite recipients was just under 16 months, while for White recipients it was approximately 8 months. One year earlier, Bane & Ellwood found that the average duration of a new spell of poverty for a Black American was approximately seven years, compared to four years for Whites. In 2005, official statistics stated that 10.2% of Black Americans were welfare dependent, compared to 5.7% of Hispanics and 2.2% of non-Hispanic Whites.

William Julius Wilson, in The Truly Disadvantaged, explained that a shrinking pool of “marriageable” Black men, thanks to increasing unemployment brought about by structural changes in the economy, leads to more Black women remaining unmarried. However, there is no evidence that welfare payments themselves provide an incentive for teenage girls to have children or for Black women to remain unmarried.

There is an association between a parent's welfare dependency and that of her children; a mother's welfare participation increases the likelihood that her daughter, when grown, will also be dependent on welfare. The mechanisms through which this happens may include the child's lessened feelings of stigma related to being on welfare, lack of job opportunities because he or she did not observe a parent's participation in the labor market, and detailed knowledge of how the welfare system works imprinted from a young age. In some cases, the unemployment trap may function as a perverse incentive to remain dependent on welfare payments: because welfare benefits are withdrawn as earnings rise, returning to work would not significantly increase household income, and the associated costs and stressors would outweigh any benefits. This trap can be eliminated through the addition of work subsidies.
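The unemployment trap described above can be made concrete with a stylized calculation. The sketch below is illustrative only: the benefit level, withdrawal rate, work costs, and subsidy rate are hypothetical numbers, and `net_income` is an assumed helper that does not reproduce the rules of any actual program.

```python
def net_income(earnings, base_benefit, withdrawal_rate, work_costs, subsidy_rate=0.0):
    """Monthly disposable income under a stylized benefit schedule.

    - The benefit is reduced by `withdrawal_rate` per unit of earnings and
      never falls below zero.
    - `work_costs` (e.g. childcare, transport) apply only when earnings > 0.
    - `subsidy_rate` is an optional work subsidy paid per unit of earnings.
    """
    benefit = max(0.0, base_benefit - withdrawal_rate * earnings)
    costs = work_costs if earnings > 0 else 0.0
    subsidy = subsidy_rate * earnings
    return earnings + benefit + subsidy - costs


if __name__ == "__main__":
    # Hypothetical household: 800 in benefits, withdrawn one-for-one with
    # earnings, and 300 in work-related costs.
    not_working = net_income(0, base_benefit=800, withdrawal_rate=1.0, work_costs=300)
    working = net_income(900, base_benefit=800, withdrawal_rate=1.0, work_costs=300)
    subsidized = net_income(900, base_benefit=800, withdrawal_rate=1.0,
                            work_costs=300, subsidy_rate=0.4)
    print(not_working, working, subsidized)
```

Under these assumed numbers, taking a job that pays 900 leaves the household worse off than not working, because the benefit is fully withdrawn and work costs are incurred. Adding the hypothetical subsidy reverses the comparison.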

Other factors which entrench welfare dependency, particularly for women, include lack of affordable childcare, low education and skill levels, and unavailability of suitable jobs. Research has found that women who have been incarcerated also have high rates of social welfare receipt, especially if they were incarcerated in state prison rather than in county jail.

Structural economic factors

Kasarda and Ting (1996) argue that poor people become trapped in dependency on welfare due to a lack of skills along with spatial mismatch. Post-WWII, American cities have produced a surplus of high-skilled jobs which are beyond the reach of most urban welfare recipients, who do not have the appropriate skills. This is in large part due to fundamental inequalities in the quality of public education, which are themselves traceable to class disparities because school funding is heavily reliant on local property taxes. Meanwhile, low-skilled jobs have decreased within the city, moving out toward more economically advantageous suburban locations. Under the spatial mismatch hypothesis, reductions in urban welfare dependence, particularly among Blacks, would rely on giving potential workers access to suitable jobs in affluent suburbs. This would require changes in policies related not only to welfare, but to housing and transportation, to break down barriers to employment.

Without appropriate jobs, it can be argued using rational choice theory that welfare recipients would make the decision to do what is economically advantageous to them, which often means not taking low-paid work that would require expensive childcare and lengthy commutes. This would explain dependence on welfare over work. However, a large proportion of welfare recipients are also in some form of work, which casts doubt on this viewpoint.

The persistence of racism

One perspective argues that structural problems, particularly persistent racism, have concentrated disadvantage among urban Black residents and thus caused their need to rely on long-term welfare payments. Housing policies segregated Black Americans into impoverished neighborhoods and formally blocked avenues to quality education and high-paying employment. Economic growth in the 1980s and 1990s did not alleviate poverty, largely because wages remained stagnant while low-skilled but decent-paying jobs disappeared from American urban centers. Poverty could be alleviated by better-targeted economic policies as well as concerted efforts to penalize racial discrimination. However, William Julius Wilson, in The Truly Disadvantaged, urges caution in initiating race-based programs as there is evidence they may not benefit the poorest Black people, which would include people who have been on welfare for long periods of time.

Cultural

Oscar Lewis introduced a theory of a culture of poverty in the late 1950s, initially in the context of anthropological studies in Mexico. The idea subsequently gained wider currency and influenced the Moynihan Report. This perspective argues that poverty is perpetuated by a value system different from that of mainstream society, influenced by the material deprivation of one's surroundings and the experiences of family and friends. There are both liberal and conservative interpretations of the culture of poverty: the former argues that lack of work and opportunities for mobility have concentrated disadvantage and left people feeling as if they have no way out of their situation; the latter holds that welfare payments and government intervention normalize and incentivize relying on welfare, not working, and having children out of wedlock, and consequently transmit social norms supporting dependency to future generations.

Reducing poverty or reducing dependence?

Reducing poverty and reducing dependence are not equivalent goals. Cutting the number of individuals receiving welfare payments does not mean that poverty itself has been proportionally reduced, because many people with incomes below the official poverty line may not be receiving the transfer payments they may have been entitled to in previous years. For example, in the early 1980s there was a particularly large discrepancy between the official poverty rate and the number of AFDC recipients due to major government cuts in AFDC provision. As a result, many people who previously would have been entitled to welfare benefits no longer received them – an example of increasing official measures of poverty but decreasing dependence. While official welfare rolls were halved between 1996 and 2000, many working poor families were still reliant on government aid in the form of unemployment insurance, Medicaid, and assistance with food and childcare.

Changes in the practices surrounding the administration of welfare may obscure continuing problems with poverty and failures to change the discourse in the face of new evidence. Whereas in the 1980s and much of the 1990s discussions of problems with welfare centered on dependency, the focus in more recent years has come to rest on working poverty. The behavior of this particular group of poor people has changed, but their poverty has not been eliminated. Poverty rates in the United States have risen since the implementation of welfare reform. States that maintain more generous welfare benefits tend to have fewer people living below the poverty line, even if only pre-transfer income is considered.

In the United Kingdom

The Conservative/Liberal Democrat coalition government that took office in May 2010 set out to reduce welfare dependency, primarily relying on workfare and initiatives targeted to specific groups, such as disabled people, who are more likely to spend long periods of time receiving welfare payments. The Department for Work and Pensions has released a report claiming that Disability Living Allowance, the main payment given to people who are severely disabled, "can act as a barrier to work" and causes some recipients to become dependent on it as a source of income rather than looking for a suitable job. Iain Duncan Smith, Secretary of State for Work and Pensions, has argued that the United Kingdom has a culture of welfare dependency and a "broken" welfare system where a person would be financially better off living on state benefits than taking a job paying less than £15,000 annually. Critics argue that this is the government’s excuse to execute large-scale cuts in services, and that it perpetuates the stereotype that people on Incapacity Benefit or Disability Living Allowance are unwilling to work, faking their condition, or otherwise being "scroungers".

The previous Labour government introduced active labour market policies intended to reduce welfare dependency, an example of the Third Way philosophy favored by Prime Minister Tony Blair. The New Deal programs, targeted towards different groups of long-term unemployed people such as lone parents, young people, disabled people, and musicians, gave the government the ability to stop the benefit payments of people who did not accept reasonable offers of employment.