Multisensory integration, also known as multimodal integration, is the study of how information from the different sensory modalities (such as sight, sound, touch, smell, self-motion, and taste) may be integrated by the nervous system. A coherent representation of objects combining modalities enables animals to have meaningful perceptual experiences. Indeed, multisensory integration is central to adaptive behavior because it allows animals to perceive a world of coherent perceptual entities. Multisensory integration also deals with how different sensory modalities interact with one another and alter each other's processing.

General introduction

Multimodal perception is how animals form coherent, valid, and robust perception by processing sensory stimuli from various modalities. Surrounded by multiple objects and receiving multiple sensory stimulations, the brain is faced with the decision of how to categorize the stimuli resulting from different objects or events in the physical world. The nervous system is thus responsible for deciding whether to integrate or segregate certain groups of temporally coincident sensory signals based on the degree of spatial and structural congruence of those stimulations. Multimodal perception has been widely studied in cognitive science, behavioral science, and neuroscience.

Stimuli and sensory modalities

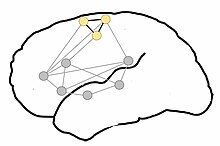

There are four attributes of a stimulus: modality, intensity, location, and duration. The neocortex in the mammalian brain has parcellations that primarily process sensory input from one modality, for example the primary visual area (V1) or the primary somatosensory area (S1). These areas mostly deal with low-level stimulus features such as brightness, orientation, and intensity. They have extensive connections to each other as well as to higher association areas that further process the stimuli and are believed to integrate sensory input from various modalities. However, multisensory effects have recently been shown to occur in primary sensory areas as well.

Binding problem

The relationship between the binding problem and multisensory perception can be thought of as that between a question (the binding problem) and a potential solution (multisensory perception). The binding problem stemmed from unanswered questions about how mammals (particularly higher primates) generate a unified, coherent perception of their surroundings from the cacophony of electromagnetic waves, chemical interactions, and pressure fluctuations that forms the physical basis of the world around us. It was investigated initially in the visual domain (colour, motion, depth, and form), then in the auditory domain, and recently in the multisensory areas. It can therefore be said that the binding problem is central to multisensory perception.

However, considerations of how unified conscious representations are formed are not the full focus of multisensory integration research. It is clearly important for the senses to interact in order to maximize how efficiently people interact with the environment. For perceptual experience and behavior to benefit from the simultaneous stimulation of multiple sensory modalities, integration of the information from these modalities is necessary. Some of the mechanisms mediating this phenomenon and its subsequent effects on cognitive and behavioural processes will be examined hereafter. Perception is often defined as one's conscious experience, and thereby combines inputs from all relevant senses and prior knowledge. Perception is also defined and studied in terms of feature extraction, which is several hundred milliseconds away from conscious experience. Notwithstanding the existence of Gestalt psychology schools that advocate a holistic approach to the operation of the brain, the physiological processes underlying the formation of percepts and conscious experience have been vastly understudied. Nevertheless, burgeoning neuroscience research continues to enrich our understanding of the many details of the brain, including neural structures implicated in multisensory integration such as the superior colliculus (SC) and various cortical structures such as the superior temporal gyrus (STG) and visual and auditory association areas. Although the structure and function of the SC are well known, the cortex and the relationship between its constituent parts are presently the subject of much investigation. Concurrently, the recent emphasis on integration has enabled investigation into perceptual phenomena such as the ventriloquism effect, rapid localization of stimuli, and the McGurk effect, culminating in a more thorough understanding of the human brain and its functions.

History

Studies of sensory processing in humans and other animals have traditionally been performed one sense at a time, and to the present day, numerous academic societies and journals are largely restricted to considering sensory modalities separately ('Vision Research', 'Hearing Research', etc.). However, there is also a long and parallel history of multisensory research. An example is Stratton's (1896) experiments on the somatosensory effects of wearing vision-distorting prism glasses. Multisensory interactions, or crossmodal effects, in which the perception of a stimulus is influenced by the presence of another type of stimulus, have been reported since very early on. They were reviewed by Hartmann in a fundamental book where, among several references to different types of multisensory interactions, reference is made to the work of Urbantschitsch in 1888, who reported on the improvement of visual acuity by auditory stimuli in subjects with brain damage. This effect was later also found in healthy subjects by Krakov and Hartmann, as was the fact that visual acuity could be improved by other types of stimuli. Also noteworthy is the amount of work in the early 1930s on intersensory relations in the Soviet Union, reviewed by London. A remarkable body of multisensory research is the extensive work of Gonzalo in the 1940s on the characterization of a multisensory syndrome in patients with parieto-occipital cortical lesions. In this syndrome, all the sensory functions are affected, and with symmetric bilaterality, in spite of the lesion being unilateral and not involving the primary areas. A feature of this syndrome is the great permeability to crossmodal effects between visual, tactile, and auditory stimuli, as well as the effect of muscular effort in improving perception and decreasing reaction times. The improvement by crossmodal effect was found to be greater as the primary stimulus to be perceived was weaker, and as the cortical lesion was greater (Vol. I and II of reference). Gonzalo interpreted these phenomena under a dynamic physiological concept, and from a model based on functional gradients through the cortex and scaling laws of dynamical systems, thus highlighting the functional unity of the cortex. According to the functional cortical gradients, the specificity of the cortex would be distributed in gradation, and the overlap of different specific gradients would be related to multisensory interactions.

Multisensory research has recently gained enormous interest and popularity.

Example of spatial and structural congruence

When we hear a car honk, we determine which car produced the sound by seeing which car is spatially closest to it. This is an example of spatial congruence in the combination of visual and auditory stimuli. On the other hand, the sound and the pictures of a TV program are integrated as structurally congruent visual and auditory stimuli. However, if the sound and the pictures did not meaningfully fit, we would segregate the two stimuli. Therefore, spatial or structural congruence arises not only from combining the stimuli but is also determined by our understanding.

Theories and approaches

Visual dominance

Literature on spatial crossmodal biases suggests that the visual modality often influences information from other senses. Some research indicates that vision dominates what we hear when the degree of spatial congruency is varied. This is known as the ventriloquist effect. In cases of visual and haptic integration, children younger than 8 years of age show visual dominance when required to identify object orientation. However, haptic dominance occurs when the factor to identify is object size.

Modality appropriateness

According to Welch and Warren (1980), the Modality Appropriateness Hypothesis states that the influence of perception in each modality in multisensory integration depends on that modality's appropriateness for the given task. Thus, vision has a greater influence on integrated localization than hearing, and hearing and touch have a greater bearing on timing estimates than vision.

More recent studies refine this early qualitative account of multisensory integration. Alais and Burr (2004) found that following progressive degradation in the quality of a visual stimulus, participants' perception of spatial location was determined progressively more by a simultaneous auditory cue. However, they also progressively changed the temporal uncertainty of the auditory cue, eventually concluding that it is the uncertainty of individual modalities that determines to what extent information from each modality is considered when forming a percept. This conclusion is similar in some respects to the 'inverse effectiveness rule'. The extent to which multisensory integration occurs may vary according to the ambiguity of the relevant stimuli. In support of this notion, a recent study shows that weak senses such as olfaction can even modulate the perception of visual information as long as the reliability of visual signals is adequately compromised.

Bayesian integration

The theory of Bayesian integration is based on the fact that the brain must deal with a number of inputs, which vary in reliability. In dealing with these inputs, it must construct a coherent representation of the world that corresponds to reality. The Bayesian integration view is that the brain uses a form of Bayesian inference. This view has been backed up by computational modeling of such a Bayesian inference from signals to coherent representation, which shows similar characteristics to integration in the brain.
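As a rough illustration of how inputs of differing reliability might be combined, the sketch below fuses two cues by inverse-variance weighting. It assumes a single common source, independent Gaussian noise on each cue, and a flat prior; the function name `combine_gaussian_cues` and all numerical values are hypothetical and not taken from any particular study.

```python
import math


def combine_gaussian_cues(mu_a, sigma_a, mu_v, sigma_v):
    """Fuse two independent Gaussian cues about one source (flat prior assumed).

    Each cue is weighted by its reliability, i.e. its inverse variance.
    Returns the fused estimate and its standard deviation.
    """
    rel_a = 1.0 / sigma_a ** 2  # reliability of the auditory cue
    rel_v = 1.0 / sigma_v ** 2  # reliability of the visual cue
    fused_mu = (rel_a * mu_a + rel_v * mu_v) / (rel_a + rel_v)
    fused_sigma = math.sqrt(1.0 / (rel_a + rel_v))
    return fused_mu, fused_sigma


# Hypothetical locations in degrees: audition says 10, vision says 0.
# Sharp vision (sigma 1) against blurry audition (sigma 4).
sharp = combine_gaussian_cues(mu_a=10.0, sigma_a=4.0, mu_v=0.0, sigma_v=1.0)
# Degraded vision (sigma 8): the fused estimate shifts toward the auditory cue.
blurred = combine_gaussian_cues(mu_a=10.0, sigma_a=4.0, mu_v=0.0, sigma_v=8.0)
```

Under these assumptions the fused estimate lies close to whichever cue is more reliable, and its standard deviation is never larger than that of the better single cue.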

Cue combination vs. causal inference models

Under the assumption of independence between the various sources, the traditional cue combination model is successful at modality integration. However, depending on the discrepancies between modalities, there may be different forms of stimulus fusion: integration, partial integration, and segregation. To fully understand the latter two, we have to use a causal inference model, which drops the assumption made by the cue combination model. This freedom allows a general combination of any number of signals and modalities, using Bayes' rule to make causal inferences about sensory signals.
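One way to make the causal inference step concrete is to compute the posterior probability that two measurements share a common cause. The sketch below assumes one visual and one auditory measurement, Gaussian sensory noise, and a Gaussian prior over source location; the function name `posterior_common_cause` and its parameters are illustrative assumptions rather than a specific published implementation.

```python
import math


def posterior_common_cause(x_v, x_a, sigma_v, sigma_a, sigma_p,
                           mu_p=0.0, p_common=0.5):
    """Posterior probability that a visual and an auditory measurement share one cause."""
    var_v, var_a, var_p = sigma_v ** 2, sigma_a ** 2, sigma_p ** 2

    # Likelihood of both measurements given a single shared source (C = 1),
    # obtained by integrating out the unknown source location.
    det_1 = var_v * var_a + var_v * var_p + var_a * var_p
    quad_1 = ((x_v - x_a) ** 2 * var_p
              + (x_v - mu_p) ** 2 * var_a
              + (x_a - mu_p) ** 2 * var_v) / det_1
    like_1 = math.exp(-0.5 * quad_1) / (2.0 * math.pi * math.sqrt(det_1))

    # Likelihood given two independent sources (C = 2).
    det_2 = (var_v + var_p) * (var_a + var_p)
    quad_2 = ((x_v - mu_p) ** 2 / (var_v + var_p)
              + (x_a - mu_p) ** 2 / (var_a + var_p))
    like_2 = math.exp(-0.5 * quad_2) / (2.0 * math.pi * math.sqrt(det_2))

    # Bayes' rule over the two causal structures.
    return like_1 * p_common / (like_1 * p_common + like_2 * (1.0 - p_common))


# Hypothetical measurements in degrees: coincident cues vs. widely separated cues.
near = posterior_common_cause(0.0, 0.0, sigma_v=1.0, sigma_a=2.0, sigma_p=10.0)
far = posterior_common_cause(0.0, 15.0, sigma_v=1.0, sigma_a=2.0, sigma_p=10.0)
```

In this sketch a high posterior favours integration, a low posterior favours segregation, and intermediate values correspond to partial integration if the final estimate averages over both causal structures.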

The hierarchical vs. non-hierarchical models

The difference between the two models is that the hierarchical model can explicitly make causal inferences to predict a certain stimulus, while the non-hierarchical model can only predict the joint probability of stimuli. However, the hierarchical model is actually a special case of the non-hierarchical model, obtained by setting the joint prior to a weighted average of the priors for common and independent causes, each weighted by its prior probability. Based on the correspondence between these two models, we can also say that the hierarchical model is a mixture model of the non-hierarchical model.

Independence of likelihoods and priors

In a Bayesian model, the prior and the likelihood generally represent the statistics of the environment and the sensory representations, respectively. The independence of priors and likelihoods is not assured, since the prior may vary with the likelihood through the representations alone. However, this independence has been demonstrated by Shams through a series of parameter manipulations in multisensory perception experiments.

Principles

The contributions of Barry Stein, Alex Meredith, and their colleagues (e.g. "The Merging of the Senses", 1993) are widely considered to be the groundbreaking work in the modern field of multisensory integration. Through detailed long-term study of the neurophysiology of the superior colliculus, they distilled three general principles by which multisensory integration may best be described.

- The spatial rule states that multisensory integration is more likely or stronger when the constituent unisensory stimuli arise from approximately the same location.

- The temporal rule states that multisensory integration is more likely or stronger when the constituent unisensory stimuli arise at approximately the same time.

- The principle of inverse effectiveness states that multisensory integration is more likely or stronger when the constituent unisensory stimuli evoke relatively weak responses when presented in isolation.
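The principle of inverse effectiveness is often quantified with an enhancement index that compares the response to the combined stimulus with the best unisensory response. The sketch below uses that percent-change form; the function name `multisensory_enhancement` and the spike counts are hypothetical values chosen only to illustrate the trend.

```python
def multisensory_enhancement(combined, unisensory):
    """Percent change of the combined response over the best unisensory response."""
    best = max(unisensory)
    if best <= 0:
        raise ValueError("best unisensory response must be positive")
    return 100.0 * (combined - best) / best


# Hypothetical mean spike counts per trial (not measured data).
# Weak unisensory responses: large proportional gain when combined.
weak = multisensory_enhancement(combined=9.0, unisensory=[2.0, 3.0])
# Strong unisensory responses: small proportional gain when combined.
strong = multisensory_enhancement(combined=30.0, unisensory=[20.0, 25.0])
```

With these illustrative numbers the weakly effective stimuli yield a much larger proportional enhancement than the strongly effective ones, which is the pattern the principle describes.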

Perceptual and behavioral consequences

A unimodal approach dominated scientific literature until the beginning of this century. Although this enabled rapid progression of neural mapping, and an improved understanding of neural structures, the investigation of perception remained relatively stagnant, with a few exceptions. The recently revitalized enthusiasm for perceptual research is indicative of a substantial shift away from reductionism and toward gestalt methodologies. Gestalt theory, dominant in the late 19th and early 20th centuries, espoused two general principles: the 'principle of totality', in which conscious experience must be considered globally, and the 'principle of psychophysical isomorphism', which states that perceptual phenomena are correlated with cerebral activity. These very ideas had already been applied by Justo Gonzalo in his work on brain dynamics, where a sensory-cerebral correspondence is considered in the formulation of the "development of the sensory field due to a psychophysical isomorphism" (p. 23 of the English translation of ref.). Both ideas, the 'principle of totality' and 'psychophysical isomorphism', are particularly relevant in the current climate and have driven researchers to investigate the behavioural benefits of multisensory integration.

Decreasing sensory uncertainty

It has been widely acknowledged that uncertainty in sensory domains results in an increased dependence on multisensory integration. Hence, it follows that cues from multiple modalities that are both temporally and spatially synchronous are viewed neurally and perceptually as emanating from the same source. The degree of synchrony that is required for this 'binding' to occur is currently being investigated in a variety of approaches. The integrative function only occurs up to a point, beyond which the subject can differentiate them as two opposing stimuli. Concurrently, a significant intermediate conclusion can be drawn from the research thus far: multisensory stimuli that are bound into a single percept are also bound on the same receptive fields of multisensory neurons in the SC and cortex.

Decreasing reaction time

Responses to multiple simultaneous sensory stimuli can be faster than responses to the same stimuli presented in isolation. Hershenson (1962) presented a light and tone simultaneously and separately, and asked human participants to respond as rapidly as possible to them. As the asynchrony between the onsets of both stimuli was varied, it was observed that for certain degrees of asynchrony, reaction times were decreased. These levels of asynchrony were quite small, perhaps reflecting the temporal window that exists in multisensory neurons of the SC. Further studies have analysed the reaction times of saccadic eye movements; and more recently correlated these findings to neural phenomena. In patients studied by Gonzalo, with lesions in the parieto-occipital cortex, the decrease in the reaction time to a given stimulus by means of intersensory facilitation was shown to be very remarkable.

Redundant target effects

The redundant target effect is the observation that people typically respond faster to double targets (two targets presented simultaneously) than to either of the targets presented alone. This difference in latency is termed the redundancy gain (RG).

In a study done by Forster, Cavina-Pratesi, Aglioti, and Berlucchi (2001), normal observers responded faster to simultaneous visual and tactile stimuli than to single visual or tactile stimuli. RT to simultaneous visual and tactile stimuli was also faster than RT to simultaneous dual visual or tactile stimuli. The advantage for RT to combined visual-tactile stimuli over RT to the other types of stimulation could be accounted for by intersensory neural facilitation rather than by probability summation. These effects can be ascribed to the convergence of tactile and visual inputs onto neural centers which contain flexible multisensory representations of body parts.
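One common way to distinguish neural facilitation from probability summation is Miller's race model inequality, which bounds the cumulative distribution of bimodal reaction times by the sum of the two unimodal distributions. The sketch below checks that bound on empirical distributions; the function names and the reaction times are hypothetical and are not data from the study described above.

```python
def ecdf(samples, t):
    """Empirical probability that a reaction time is less than or equal to t."""
    return sum(1 for s in samples if s <= t) / len(samples)


def race_model_violations(rt_a, rt_b, rt_ab, times):
    """Return the time points where bimodal responses are faster than a race allows.

    Probability summation predicts F_ab(t) <= F_a(t) + F_b(t) at every t,
    so any t where the bimodal CDF exceeds that bound is a violation.
    """
    violations = []
    for t in times:
        bound = min(1.0, ecdf(rt_a, t) + ecdf(rt_b, t))
        if ecdf(rt_ab, t) > bound:
            violations.append(t)
    return violations


# Hypothetical reaction times in milliseconds.
rt_visual = [300, 320, 340, 360]
rt_tactile = [310, 330, 350, 370]
rt_fast_bimodal = [250, 260, 270, 280]  # faster than either single modality allows
rt_race_bimodal = [300, 310, 320, 330]  # compatible with probability summation
probe_times = [260, 300, 400]

fast_result = race_model_violations(rt_visual, rt_tactile, rt_fast_bimodal, probe_times)
race_result = race_model_violations(rt_visual, rt_tactile, rt_race_bimodal, probe_times)
```

Violations at some time points are usually taken as evidence against a pure race between independent channels; real analyses work with many trials per condition and apply statistical tests across participants.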

Multisensory illusions

McGurk effect

It has been found that two converging bimodal stimuli can produce a perception that is not only different in magnitude from the sum of its parts, but also quite different in quality. In a classic study of what is now labeled the McGurk effect, a person's phoneme production was dubbed onto a video of that person speaking a different phoneme. The end result was the perception of a third, different phoneme. McGurk and MacDonald (1976) explained that phonemes such as ba, da, ka, ta, ga and pa can be divided into four groups: those that can be visually confused, i.e. (da, ga, ka, ta) and (ba and pa), and those that can be audibly confused. Hence, when a ba voice and ga lips are processed together, the visual modality sees ga or da, and the auditory modality hears ba or da, combining to form the percept da.

Ventriloquism

Ventriloquism has been used as evidence for the modality appropriateness hypothesis. Ventriloquism is the situation in which auditory location perception is shifted toward a visual cue. The original study describing this phenomenon was conducted by Howard and Templeton (1966), after which several studies have replicated and built upon the conclusions they reached. In conditions in which the visual cue is unambiguous, visual capture reliably occurs. Thus, to test the influence of sound on perceived location, the visual stimulus must be progressively degraded. Furthermore, given that auditory stimuli are more attuned to temporal changes, recent studies have tested the ability of temporal characteristics to influence the spatial location of visual stimuli. Some types of electronic voice phenomenon (EVP), mainly those using sound bubbles, are considered a kind of modern ventriloquism technique and are performed using sophisticated software, computers, and sound equipment.

Double-flash illusion

The double-flash illusion was reported as the first illusion to show that visual stimuli can be qualitatively altered by audio stimuli. In the standard paradigm, participants are presented with combinations of one to four flashes accompanied by zero to four beeps. They are then asked to say how many flashes they perceived. Participants perceive illusory flashes when there are more beeps than flashes. fMRI studies have shown that there is crossmodal activation in early, low-level visual areas, which is qualitatively similar to the perception of a real flash. This suggests that the illusion reflects subjective perception of the extra flash. Further, studies suggest that the timing of multisensory activation in unisensory cortices is too fast to be mediated by higher-order integration, suggesting feedforward or lateral connections. One study has revealed the same effect from vision to audition, as well as fission rather than fusion effects, although the level of the auditory stimulus was reduced to make it less salient for those illusions affecting audition.

Rubber hand illusion

In the rubber hand illusion (RHI), human participants view a dummy hand being stroked with a paintbrush, while they feel a series of identical brushstrokes applied to their own hand, which is hidden from view. If this visual and tactile information is applied synchronously, and if the visual appearance and position of the dummy hand are similar to those of one's own hand, then people may feel that the touches on their own hand are coming from the dummy hand, and even that the dummy hand is, in some way, their own hand. This is an early form of body transfer illusion. The RHI is an illusion of vision, touch, and posture (proprioception), but a similar illusion can also be induced with touch and proprioception. It has also been found that the illusion may not require tactile stimulation at all, but can be completely induced using mere vision of the rubber hand being in a congruent posture with the hidden real hand. The very first report of this kind of illusion may have been as early as 1937 (Tastevin, 1937).

Body transfer illusion

Body transfer illusion typically involves the use of virtual reality devices to induce the illusion in the subject that the body of another person or being is the subject's own body.

Neural mechanisms

Subcortical areas

Superior colliculus

The superior colliculus (SC) or optic tectum (OT) is part of the tectum, located in the midbrain, superior to the brainstem and inferior to the thalamus. It contains seven layers of alternating white and grey matter; the superficial layers contain topographic maps of the visual field, and the deeper layers contain overlapping spatial maps of the visual, auditory and somatosensory modalities. The structure receives afferents directly from the retina, as well as from various regions of the cortex (primarily the occipital lobe), the spinal cord and the inferior colliculus. It sends efferents to the spinal cord, cerebellum, thalamus and occipital lobe via the lateral geniculate nucleus (LGN). The structure contains a high proportion of multisensory neurons and plays a role in the motor control of orientation behaviours of the eyes, ears and head.

Receptive fields from somatosensory, visual and auditory modalities converge in the deeper layers to form a two-dimensional multisensory map of the external world. Here, objects straight ahead are represented rostrally and objects on the periphery are represented caudally. Similarly, locations in superior sensory space are represented medially, and inferior locations are represented laterally.

However, in contrast to simple convergence, the SC integrates information to create an output that differs from the sum of its inputs. Following a phenomenon labelled the 'spatial rule', neurons are excited if stimuli from multiple modalities fall on the same or adjacent receptive fields, but are inhibited if the stimuli fall on disparate fields. Excited neurons may then proceed to innervate various muscles and neural structures to orient an individual's behaviour and attention toward the stimulus. Neurons in the SC also adhere to the 'temporal rule', in which stimulation must occur within close temporal proximity to excite neurons. However, due to the varying processing time between modalities and the slower speed of sound relative to light, it has been found that the neurons may be optimally excited when stimulated some time apart.

Putamen

Single neurons in the macaque putamen have been shown to have visual and somatosensory responses closely related to those in the polysensory zone of the premotor cortex and area 7b in the parietal lobe.

Cortical areas

Multisensory neurons exist in a large number of locations, often integrated with unimodal neurons. They have recently been discovered in areas previously thought to be modality specific, such as the somatosensory cortex; as well as in clusters at the borders between the major cerebral lobes, such as the occipito-parietal space and the occipito-temporal space.

However, in order to undergo such physiological changes, there must exist continuous connectivity between these multisensory structures. It is generally agreed that information flow within the cortex follows a hierarchical configuration. Hubel and Wiesel showed that receptive fields, and thus the function of cortical structures, become increasingly complex and specialized as one proceeds out from V1 along the visual pathways. From this it was postulated that information flowed outwards in a feedforward fashion, with the complex end products eventually binding to form a percept. However, via fMRI and intracranial recording technologies, it has been observed that the activation time of successive levels of the hierarchy does not correlate with a feedforward structure. That is, late activation has been observed in the striate cortex, markedly after activation of the prefrontal cortex in response to the same stimulus.

Complementing this, afferent nerve fibres have been found that project to early visual areas such as the lingual gyrus from late in the dorsal (action) and ventral (perception) visual streams, as well as from the auditory association cortex. Feedback projections have also been observed in the opossum directly from the auditory association cortex to V1. This last observation highlights a current point of controversy within the neuroscientific community. Sadato et al. (2004) concluded, in line with Bernstein et al. (2002), that the primary auditory cortex (A1) was functionally distinct from the auditory association cortex, in that it was void of any interaction with the visual modality. They hence concluded that A1 would not be affected at all by cross-modal plasticity. This concurs with Jones and Powell's (1970) contention that primary sensory areas are connected only to other areas of the same modality.

In contrast, the dorsal auditory pathway, projecting from the temporal lobe, is largely concerned with processing spatial information, and contains receptive fields that are topographically organized. Fibers from this region project directly to neurons governing corresponding receptive fields in V1. The perceptual consequences of this have not yet been established empirically. However, it can be hypothesized that these projections may be the precursors of increased acuity and emphasis of visual stimuli in relevant areas of perceptual space. Consequently, this finding contradicts Jones and Powell's (1970) hypothesis and thus is in conflict with Sadato et al.'s (2004) findings. One possible resolution of this discrepancy is that primary sensory areas cannot be classified as a single group, and thus may differ far more than was previously thought.

The multisensory syndrome with symmetric bilaterality, characterized by Gonzalo and called by this author the 'central syndrome of the cortex', originated from a unilateral parieto-occipital cortical lesion equidistant from the visual, tactile, and auditory projection areas (the middle of area 19, the anterior part of area 18 and the most posterior part of area 39, in Brodmann terminology), a region that was called the 'central zone'. The gradation observed between syndromes led this author to propose a functional gradient scheme in which the specificity of the cortex is distributed with a continuous variation, and in which the overlap of the specific gradients would be high or maximal in that 'central zone'.

Further research is necessary for a definitive resolution.

Frontal lobe

Area F4 in macaques

Area F5 in macaques

Polysensory zone of premotor cortex (PZ) in macaques

Occipital lobe

Primary visual cortex (V1)

Lingual gyrus in humans

Lateral occipital complex (LOC), including lateral occipital tactile visual area (LOtv)

Parietal lobe

Ventral intraparietal sulcus (VIP) in macaques

Lateral intraparietal sulcus (LIP) in macaques

Area 7b in macaques

Second somatosensory cortex (SII)

Temporal lobe

Primary auditory cortex (A1)

Superior temporal cortex (STG/STS/PT)

Audio-visual cross-modal interactions are known to occur in the auditory association cortex, which lies directly inferior to the Sylvian fissure in the temporal lobe. Plasticity was observed in the superior temporal gyrus (STG) by Petitto et al. (2000). Here, it was found that the STG was more active during stimulation in native deaf signers compared to hearing non-signers. Concurrently, further research has revealed differences in the activation of the planum temporale (PT) in response to non-linguistic lip movements between the hearing and deaf, as well as progressively increasing activation of the auditory association cortex as previously deaf participants gain hearing experience via a cochlear implant.

Anterior ectosylvian sulcus (AES) in cats

Rostral lateral suprasylvian sulcus (rLS) in cats

Cortical-subcortical interactions

The most significant interaction between these two systems (corticotectal interactions) is the connection between the anterior ectosylvian sulcus (AES), which lies at the junction of the parietal, temporal and frontal lobes, and the SC. The AES is divided into three unimodal regions with multisensory neurons at the junctions between these sections (Jiang & Stein, 2003). Neurons from the unimodal regions project to the deep layers of the SC and influence the multiplicative integration effect. That is, although SC neurons can receive inputs from all modalities as normal, the SC cannot enhance or depress the effect of multisensory stimulation without input from the AES.

Concurrently, the multisensory neurons of the AES, although also integrally connected to unimodal AES neurons, are not directly connected to the SC. This pattern of division is reflected in other areas of the cortex, resulting in the observation that cortical and tectal multisensory systems are somewhat dissociated. Stein, London, Wilkinson and Price (1996) analysed the perceived luminance of an LED in the context of spatially disparate auditory distracters of various types. A significant finding was that a sound increased the perceived brightness of the light, regardless of their relative spatial locations, provided the light's image was projected onto the fovea. Here, the apparent absence of the spatial rule further differentiates cortical and tectal multisensory neurons. Little empirical evidence exists to justify this dichotomy. Nevertheless, the idea of cortical neurons governing perception and a separate subcortical system governing action (orientation behavior) parallels the perception-action hypothesis of the visual stream. Further investigation into this field is necessary before any substantial claims can be made.

Dual "what" and "where" multisensory routes

Research suggests the existence of two multisensory routes, for "what" and "where". The "what" route, which identifies things, involves Brodmann area 9 in the right inferior frontal gyrus and right middle frontal gyrus, Brodmann area 13 and Brodmann area 45 in the right insula-inferior frontal gyrus area, and Brodmann area 13 bilaterally in the insula. The "where" route, which detects their spatial attributes, involves Brodmann area 40 in the right and left inferior parietal lobule, Brodmann area 7 in the right precuneus-superior parietal lobule, and Brodmann area 7 in the left superior parietal lobe.

Development of multisensory operations

Theories of development

All species equipped with multiple sensory systems utilize them in an integrative manner to achieve action and perception. However, in most species, especially higher mammals and humans, the ability to integrate develops in parallel with physical and cognitive maturity. Children do not show mature integration patterns until certain ages. Classically, two opposing views that are principally modern manifestations of the nativist/empiricist dichotomy have been put forth. The integration (empiricist) view states that at birth, sensory modalities are not at all connected. Hence, it is only through active exploration that plastic changes can occur in the nervous system to initiate holistic perceptions and actions. Conversely, the differentiation (nativist) perspective asserts that the young nervous system is highly interconnected, and that during development, modalities are gradually differentiated as relevant connections are rehearsed and the irrelevant are discarded.

Using the SC as a model, the nature of this dichotomy can be analysed. In the newborn cat, deep layers of the SC contain only neurons responding to the somatosensory modality. Within a week, auditory neurons begin to occur, but it is not until two weeks after birth that the first multisensory neurons appear. Further changes continue, with the arrival of visual neurons after three weeks, until the SC has achieved its fully mature structure after three to four months. In monkey species, by contrast, newborns are endowed with a significant complement of multisensory cells; however, as in cats, no integration effect is apparent until much later. This delay is thought to be the result of the relatively slower development of cortical structures including the AES, which, as stated above, is essential for the existence of the integration effect.

Furthermore, Wallace (2004) found that cats raised in a light-deprived environment had severely underdeveloped visual receptive fields in deep layers of the SC. Although receptive field size has been shown to decrease with maturity, the above finding suggests that integration in the SC is a function of experience. Nevertheless, the existence of visual multisensory neurons, despite a complete lack of visual experience, highlights the apparent relevance of nativist viewpoints. Multisensory development in the cortex has been studied to a lesser extent; however, a study similar to that presented above was performed on cats whose optic nerves had been severed. These cats displayed a marked improvement in their ability to localize stimuli through audition and, consequently, also showed increased neural connectivity between V1 and the auditory cortex. Such plasticity in early childhood allows for greater adaptability, and thus more normal development in other areas for those with a sensory deficit.

In contrast, following the initial formative period, the SC does not appear to display any neural plasticity. Despite this, long-term habituation and sensitisation are known to exist in orientation behaviors. This apparent plasticity in function has been attributed to the adaptability of the AES. That is, although neurons in the SC have a fixed magnitude of output per unit input, and essentially operate an all-or-nothing response, the level of neural firing can be more finely tuned by variations in input from the AES.

Although there is evidence for either perspective of the integration/differentiation dichotomy, a significant body of evidence also exists for a combination of factors from either view. Thus, analogous to the broader nativist/empiricist argument, it is apparent that rather than a dichotomy, there exists a continuum, such that the integration and differentiation hypotheses are extremes at either end.

Psychophysical development of integration

Not much is known about the development of the ability to integrate multiple estimates, such as those from vision and touch. Some multisensory abilities are present from early infancy, but it is not until children are eight years or older that they use multiple modalities to reduce sensory uncertainty.
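The idea of using multiple modalities to reduce sensory uncertainty is often formalised as a reliability-weighted (inverse-variance) average of the single-cue estimates. The sketch below illustrates that standard model under the assumption that each cue gives an independent, unbiased, Gaussian-distributed estimate; the function name, the parameter names, and the numbers are hypothetical and are not taken from the studies discussed in this section.

```python
def combine_estimates(visual, haptic, var_visual, var_haptic):
    """Reliability-weighted average of two independent estimates.

    Assumes each estimate is unbiased with Gaussian noise of known
    variance (an illustrative modelling assumption).
    """
    # Reliability is defined here as inverse variance.
    reliability_visual = 1.0 / var_visual
    reliability_haptic = 1.0 / var_haptic
    total_reliability = reliability_visual + reliability_haptic

    # Each cue is weighted in proportion to its reliability.
    weight_visual = reliability_visual / total_reliability
    weight_haptic = reliability_haptic / total_reliability

    combined = weight_visual * visual + weight_haptic * haptic
    # Reliabilities add, so the combined variance is never larger
    # than the variance of the better single cue.
    combined_variance = 1.0 / total_reliability
    return combined, combined_variance


# Hypothetical size judgement: vision says 10.0, touch says 12.0,
# and vision is four times more reliable than touch.
estimate, variance = combine_estimates(10.0, 12.0, var_visual=1.0, var_haptic=4.0)
print(estimate, variance)
```

Under this model the combined estimate lies closer to the more reliable cue, and its variance is lower than that of either cue alone. An observer who instead relies on a single dominant sense keeps the variance of that sense unchanged.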

One study demonstrated that cross-modal visual and auditory integration is present within the first year of life. This study measured response time for orienting towards a source. Infants who were 8–10 months old showed significantly decreased response times when the source was presented through both visual and auditory information compared to a single modality. Younger infants, however, showed no such change in response times to these different conditions. Indeed, the results of the study indicate that children potentially have the capacity to integrate sensory sources at any age. However, in certain cases, for example visual cues, intermodal integration is avoided.

Another study found that cross-modal integration of touch and vision for distinguishing size and orientation is available from at least 8 years of age. For pre-integration age groups, one sense dominates depending on the characteristic discerned (see visual dominance).

A study investigating sensory integration within a single modality (vision) found that it cannot be established until age 12 and above. This particular study assessed the integration of disparity and texture cues to resolve surface slant. Though younger age groups showed a somewhat better performance when combining disparity and texture cues compared to using only disparity or texture cues, this difference was not statistically significant. In adults, the sensory integration can be mandatory, meaning that they no longer have access to the individual sensory sources.

Acknowledging these variations, several hypotheses have been proposed to explain why these observations are task-dependent. Given that different senses develop at different rates, it has been proposed that cross-modal integration does not appear until both modalities have reached maturity. The human body undergoes significant physical transformation throughout childhood. Not only is there growth in size and stature (affecting viewing height), but there is also change in inter-ocular distance and eyeball length. Therefore, sensory signals need to be constantly re-evaluated to account for these various physiological changes. Some support comes from animal studies that explore the neurobiology behind integration. Adult monkeys have deep inter-neuronal connections within the superior colliculus providing strong, accelerated visuo-auditory integration. Young animals, conversely, do not have this enhancement until unimodal properties are fully developed.

Additionally, to rationalize sensory dominance, Gori et al. (2008) propose that the brain utilises the most direct source of information during sensory immaturity. In this case, orientation is primarily a visual characteristic. It can be derived directly from the object image that forms on the retina, irrespective of other visual factors. In fact, data show that a functional property of neurons within primate visual cortices is their selectivity for orientation. In contrast, haptic orientation judgements are recovered from combined patterns of stimulation, evidently an indirect source susceptible to interference. Likewise, when size is concerned, haptic information coming from the positions of the fingers is more immediate. Visual size perceptions, alternatively, have to be computed using parameters such as slant and distance. Considering this, sensory dominance is a useful instinct to assist with calibration. During sensory immaturity, the simpler and more robust information source could be used to tweak the accuracy of the alternate source. Follow-up work by Gori et al. (2012) showed that, at all ages, visual size perceptions are near perfect when viewing objects within the haptic workspace (i.e. at arm's reach). However, systematic errors in perception appeared when the object was positioned beyond this zone. Children younger than 14 years tended to underestimate object size, whereas adults overestimated it. However, if the object was returned to the haptic workspace, those visual biases disappeared. These results support the hypothesis that haptic information may educate visual perceptions. If sources are used for cross-calibration they cannot, therefore, be combined (integrated). Maintaining access to individual estimates is a trade-off of accuracy for extra plasticity, which could be beneficial for the developing body.

Alternatively, Ernst (2008) proposes that efficient integration initially relies upon establishing correspondence, that is, determining which sensory signals belong together. Indeed, studies have shown that visuo-haptic integration fails in adults when there is a perceived spatial separation, suggesting that the sensory information is coming from different targets. Furthermore, if the separation can be explained, for example by viewing an object through a mirror, integration is re-established and can even be optimal. Ernst (2008) suggests that adults can draw on previous experiences to quickly determine which sensory sources depict the same target, but young children could be deficient in this area. Once there is a sufficient bank of experiences, the confidence to correctly integrate sensory signals can then be introduced into their behaviour.

Lastly, Nardini et al. (2010) hypothesised that young children have optimized their sensory appreciation for speed over accuracy. When information is presented in two forms, children may derive an estimate from the fastest available source, subsequently ignoring the alternate, even if it contains redundant information. Nardini et al. (2010) provide evidence that the response latencies of children (aged 6 years) are significantly lower when stimuli are presented in multi-cue rather than single-cue conditions. Conversely, adults showed no change between these conditions. Indeed, adults display mandatory fusion of signals, and therefore they can only ever aim for maximum accuracy. However, the overall mean latencies for children were not faster than those of adults, which suggests that speed optimization merely enables them to keep up with the mature pace. Considering the haste of real-world events, this strategy may prove necessary to counteract the generally slower processing of children and maintain effective vision-action coupling. Ultimately, the developing sensory system may preferentially adapt for different goals (speed and detecting sensory conflicts), those typical of objective learning.

The late development of efficient integration has also been investigated from a computational point of view. Daee et al. (2014) showed that having one dominant sensory source at an early age, rather than integrating all sources, facilitates the overall development of cross-modal integration.

Applications

Prosthesis

Prosthetics designers should carefully consider how the dimensionality of sensorimotor signaling to and from the CNS changes when designing prosthetic devices. As reported in the literature, neural signaling from the CNS to the motor system is organized so that the dimensionality of the signals gradually increases as they approach the muscles, an arrangement known as muscle synergies. By the same principle, but in the opposite order, signals from the sensory receptors are gradually integrated, and so reduced in dimensionality, as they approach the CNS, an arrangement known as sensory synergies. This bow-tie-like signaling formation enables the CNS to process only abstract yet valuable information. Such a process decreases the complexity of the data, handles noise, and helps keep the energy consumption of the CNS low. However, current commercially available prosthetic devices mainly focus on the motor side, simply using EMG sensors to switch between different activation states of the prosthesis. Very few works have proposed systems that integrate the sensory side. The integration of tactile sense and proprioception is regarded as essential for implementing the ability to perceive environmental input.

Visual rehabilitation

Multisensory integration has also been shown to ameliorate visual hemianopia. Through the repeated presentation of multisensory stimuli in the blind hemifield, the ability to respond to purely visual stimuli gradually returns to that hemifield in a central to peripheral manner. These benefits persist even after the explicit multisensory training ceases.