Resilience in the built environment is the capacity of buildings and infrastructure to keep adapting to existing and emerging threats, such as severe windstorms or earthquakes, by building robustness and redundancy into their design. The term also provides a way to address how changing conditions affect the effectiveness of different approaches to design and planning.

Origin of the term resilience

In its general dictionary sense, resilience means "the ability to recover from difficulties or disturbance." The word derives from the Latin 'resilio', which means to spring back or to return to a former state. The word resilience, formed from this root, dates from the 1640s; in the mechanics of materials it came to mean "the ability of a material to absorb energy when it is elastically deformed and to release that energy upon unloading". By 1824, the term had developed to encompass the meaning of ‘elasticity’.

19th century resilience

Thomas Tredgold was the first to introduce the concept of resilience, in England in 1818. He used the term to describe a property of the strength of timber: beams could bend and deform to support heavy loads. Tredgold found the timber durable and slow to burn, even when the trees had grown in poor soil and exposed climates. Mallett refined the concept in 1856, relating it to the capacity of specific materials to withstand specific disturbances. These definitions suit engineering resilience because they describe a single material with a stable equilibrium regime, rather than the complex adaptive stability of larger systems.

20th century resilience

In the 1970s, researchers studied resilience in child psychology in relation to exposure to certain risks. Resilience was used to describe people who have “the ability to recover from adversity.” One of these researchers was Professor Sir Michael Rutter, who studied combinations of risk experiences and their relative outcomes.

In his 1973 paper Resilience and Stability of Ecological Systems, Holling first explored resilience through its application to ecology. He defined ecological resilience as a “measure of the persistence of systems and of their ability to absorb change and disturbance and still maintain the same relationships between state variables.” Holling found that this framework could be applied to other forms of resilience, and its application to ecosystems was later extended to human, cultural and social domains. The random events Holling described are not only climatic: natural systems can also be destabilized by fires, changes in forest communities or fishing. Stability, by contrast, is the ability of a system to return to an equilibrium state after a temporary disturbance. Because the world is a heterogeneous space with varied biological, physical and chemical characteristics, multiple-state systems and conditions, rather than isolated objects, should be studied. Unlike material and engineering resilience, ecological and social resilience focus on the redundancy and persistence of multiple equilibrium states to maintain function.

Engineering resilience

Engineering resilience refers to the functionality of a system in relation to hazard mitigation. Within this framework, resilience is measured by the time a system takes to return to a single equilibrium state. Researchers at MCEER (Multi-Hazard Earthquake Engineering research center) have identified four properties of resilience: robustness, resourcefulness, redundancy and rapidity.

- Robustness: the ability of systems to withstand a certain level of stress without suffering loss of function.

- Resourcefulness: the ability to identify problems and resources when threats may disrupt the system.

- Redundancy: the availability of alternative paths in a system through which forces can be transferred, enabling continued function.

- Rapidity: the ability to meet priorities and goals in time to prevent losses and future disruptions.
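Because engineering resilience is measured by how long a system takes to return to equilibrium, it is often summarized by a "resilience triangle" associated with MCEER researchers: the area between full functionality (100%) and the functionality curve Q(t), from the disturbance until full recovery. A smaller area means a more resilient system. The Python sketch below assumes functionality has been sampled as a percentage at known times and integrates the gap with the trapezoidal rule; the function names and the sample record are illustrative, not part of any MCEER tool.

```python
def resilience_loss(times, quality):
    """Area between 100% functionality and Q(t), in percent x time units.

    times: sample times in increasing order (e.g. days after the event)
    quality: functionality Q(t) at each time, as a percentage (0-100)
    Uses the trapezoidal rule between consecutive samples.
    """
    if len(times) != len(quality) or len(times) < 2:
        raise ValueError("need at least two (time, quality) samples")
    loss = 0.0
    for i in range(1, len(times)):
        dt = times[i] - times[i - 1]
        gap_before = 100.0 - quality[i - 1]
        gap_after = 100.0 - quality[i]
        loss += 0.5 * (gap_before + gap_after) * dt
    return loss


def recovery_time(times, quality, threshold=100.0):
    """Time from the first sample below `threshold` until functionality regains it.

    Returns None if functionality never drops below the threshold or has not
    recovered by the last sample.
    """
    start = None
    for t, q in zip(times, quality):
        if start is None:
            if q < threshold:
                start = t
        elif q >= threshold:
            return t - start
    return None


# Hypothetical record: functionality drops to 40% at day 0 and recovers by day 30.
days = [0, 10, 20, 30]
functionality = [40, 60, 80, 100]
print(resilience_loss(days, functionality))  # 900.0
print(recovery_time(days, functionality))    # 30
```

In this hypothetical record the loss is the triangle ½ × 60 × 30 = 900 percent-days. Robustness shows up as a shallower drop and rapidity as a shorter recovery, and both shrink the area.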

Social-ecological resilience

Also known as adaptive resilience, social-ecological resilience is a newer concept that shifts the focus to combining the social, ecological and technical domains of resilience. The adaptive model emphasizes that the stable state of a system can itself be transformed. In adaptive buildings, both short-term and long-term resilience are addressed so that the system can withstand disturbances through its social and physical capacities. Because buildings operate at multiple scales and under varied conditions, constant change in architecture should be expected. Laboy and Fannon recognize that the resilience model is shifting, and have applied the four MCEER properties of resilience to the planning, design and operation phases of architecture. Rather than using four properties to describe resilience, Laboy and Fannon propose a 6R model that adds Risk Avoidance for the planning phase and Recovery for the operation phase of a building. In the planning phase, site selection, building placement and site conditions are crucial for risk avoidance, and early planning can help prepare and design the built environment for the forces we understand and perceive. In the operation phase, a disturbance does not mark the end of resilience; instead, a recovery plan should guide future adaptations. Disturbances should be used as learning opportunities to assess mistakes and outcomes and to reconfigure the building for future needs.

Applications

Resilience in the International Building Code

The International Building Code (IBC) provides minimum requirements for buildings using performance-based standards. The 2018 edition of the IBC, released by the International Code Council (ICC), focuses on standards that protect public health, safety and welfare without restricting the use of particular building methods. The code addresses several categories and is updated every three years to incorporate new technologies and changes. Building codes are fundamental to the resilience of communities and their buildings, as “Resilience in the built environment starts with strong, regularly adopted and properly administered building codes.” Adopting codes also has measurable benefits: the National Institute of Building Sciences (NIBS) found that adopting the International Building Code provides an $11 benefit for every $1 invested.

The International Code Council focuses on ensuring that a community’s buildings support its resilience ahead of disasters. The process presented by the ICC includes understanding the risks, identifying strategies for those risks, and implementing the strategies. Risks vary with communities, geographies and other factors. The American Institute of Architects created a list of shocks and stresses related to particular community characteristics. Shocks are natural hazards (floods, earthquakes), while stresses are more chronic conditions that can develop over a longer period of time (affordability, drought). It is important to understand how resilient design applies to both shocks and stresses, as buildings can play a part in addressing them. Although the IBC is a model code, it is adopted by various state and local governments to regulate specific building areas. Most of its approaches to minimizing risk are organized around building use and occupancy. In addition, requirements for materials, frames and structural systems determine the safety of a structure and can provide a high level of protection for occupants. Specific requirements and strategies are provided for individual shocks and stresses, such as tsunamis, fires and earthquakes.

U.S. Resiliency Council

The U.S. Resiliency Council (USRC), a non-profit organization, created the USRC Rating System, which describes the expected impacts of a natural disaster on new and existing buildings. The rating evaluates the building, prior to its use, through its structure, mechanical-electrical systems and material usage. Currently, the program is in its pilot stage, focusing primarily on earthquake preparedness and resilience. For earthquake hazards, the rating relies heavily on the design requirements set by building codes. Buildings can obtain one of two types of USRC rating:

USRC Verified Rating System

The Verified Rating System is used for marketing and publicity purposes through badges. The rating is easy to understand, credible and transparent, as it is awarded by professionals. The USRC building rating system rates buildings from one to five stars across three dimensions: Safety, Damage and Recovery. Safety describes the prevention of potential harm to people after an event. Damage describes the estimated repairs required for replacements and losses. Recovery is calculated from the time it takes the building to regain function after a shock. The following rating certifications can be achieved:

- USRC Platinum: less than 5% of expected damage

- USRC Gold: less than 10% of expected damage

- USRC Silver: less than 20% of expected damage

- USRC Certified: less than 40% of expected damage
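The damage thresholds above amount to an ordered lookup. The Python sketch below assumes the expected damage has already been estimated as a percentage by a seismic analysis and simply applies the thresholds as listed. The `StarRating` record for the three dimensions is a hypothetical data structure, not a USRC schema, and the rules the USRC uses to combine the dimensions are not modelled.

```python
from dataclasses import dataclass

# Thresholds from the certification list: (upper bound on expected damage in %, label).
# Checked from strictest to loosest, so the first bound the value is under wins.
DAMAGE_TIERS = [
    (5.0, "USRC Platinum"),
    (10.0, "USRC Gold"),
    (20.0, "USRC Silver"),
    (40.0, "USRC Certified"),
]


def damage_tier(expected_damage_pct):
    """Return the certification label for an expected-damage percentage, or None."""
    if not 0.0 <= expected_damage_pct <= 100.0:
        raise ValueError("expected damage must be between 0 and 100 percent")
    for bound, label in DAMAGE_TIERS:
        if expected_damage_pct < bound:
            return label
    return None  # 40% or more: outside the listed certifications


@dataclass(frozen=True)
class StarRating:
    """Hypothetical record of the three USRC dimensions, each rated 1 to 5 stars."""
    safety: int
    damage: int
    recovery: int

    def __post_init__(self):
        # Reject star counts outside the one-to-five range.
        for name in ("safety", "damage", "recovery"):
            stars = getattr(self, name)
            if not 1 <= stars <= 5:
                raise ValueError(f"{name} must be 1-5 stars, got {stars}")


print(damage_tier(3.2))  # USRC Platinum
print(damage_tier(12))   # USRC Silver
rating = StarRating(safety=5, damage=4, recovery=3)
```

Because each bound is a strict "less than", a building at exactly 10% expected damage lands in Silver rather than Gold.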

An earthquake building rating can be obtained through hazard evaluation and seismic testing. A CRP prepares the seismic analysis and applies for a USRC rating with the required documentation, which also undergoes technical review by the USRC. The USRC plans to create similar standards for other natural hazards, such as floods, storms and high winds.

USRC Transaction Rating System

The Transaction Rating System provides a report on a building’s risk exposure and on possible investments and their benefits. This rating remains confidential with the USRC and is not used to publicize or market the building.

Disadvantages of the USRC rating system

Because of its current focus on seismic interventions, the USRC rating does not consider several aspects of a building. It does not account for changes to the building’s design made after the rating is awarded, so changes that impair the building’s resilience would not affect its rating. Likewise, changes in building use after certification, which might include the use of hazardous materials, would not affect the certification. The damage rating does not include pipe breakage, building upgrades or damage to furnishings. The recovery rating does not cover fully restoring all building functions and repairing all damage, only a certain level of recovery.

The 100 Resilient Cities Program

In 2013, the 100 Resilient Cities Program was initiated by the Rockefeller Foundation with the goal of helping cities become more resilient to physical, social and economic shocks and stresses. The program facilitates resilience plans in cities around the world through access to tools, funding and global network partners such as ARUP and the AIA. Of the 1,000 cities that applied to join the program, 100 were selected, with challenges ranging from aging populations and cyber attacks to severe storms and drug abuse.

Many cities are members of the program, but in their article Building up resilience in cities worldwide, Spaans and Waterhout focus on Rotterdam, comparing the city’s resilience before and after its participation in the program. The authors found that the program broadened the scope of Rotterdam’s resilience plan and improved it by including access to water and data, clean air, cyber robustness and safe water. The program also addresses other social stresses that can weaken the resilience of cities, such as violence and unemployment. As a result, cities are able to reflect on their current situation and plan to adapt to new shocks and stresses. The article’s findings support an understanding of resilience at a larger urban scale, which requires an integrated approach coordinated across multiple levels of government, time scales and fields. In addition to integrating resilience into building codes and building certification programs, the 100 Resilient Cities Program provides other forms of support that can help increase awareness through non-profit organizations.

After more than six years of growth and change, the existing 100 Resilient Cities organization concluded on July 31, 2019.

RELi Rating System

RELi is a set of design criteria used to develop resilience at multiple scales of the built environment, such as buildings, neighborhoods and infrastructure. It was developed by the Institute for Market Transformation to Sustainability (MTS) to help designers plan for hazards. RELi is very similar to LEED but focuses on resilience. RELi is now owned by the U.S. Green Building Council (USGBC) and is available to projects seeking LEED certification. The first version of RELi was released in 2014; it is currently still in the pilot phase, with no points allocated to specific credits. RELi accreditation is not required, and use of the credit information is voluntary, so the point system is still to be determined and has no tangible value yet. RELi provides a credit catalog that serves as a reference guide for building design and states the RELi definition of resilience as follows:

Resilient Design pursues Buildings + Communities that are shock resistant, healthy, adaptable and regenerative through a combination of diversity, foresight and the capacity for self-organization and learning. A Resilient Society can withstand shocks and rebuild itself when necessary. It requires humans to embrace their capacity to anticipate, plan and adapt for the future.

RELi Credit Catalog

The RELi Catalog considers multiple scales of intervention, with requirements for a panoramic approach, risk adaptation and mitigation for acute events, and comprehensive adaptation and mitigation for the present and future. RELi’s framework places strong emphasis on social issues for community resilience, such as providing community spaces and organizations. RELi also combines specific hazard design, such as flood preparedness, with general strategies for energy and water efficiency. The following categories organize the RELi credit list:

- Panoramic Approach to Planning, Design, Maintenance and Operations

- Hazard Preparedness

- Hazard Adaptation and Mitigation

- Community Cohesion, Social and Economic Vitality

- Productivity, Health and Diversity

- Energy, Water, Food

- Materials and Artifacts

- Applied Creativity, Innovation and Exploration

The RELi program complements and expands on other popular rating systems such as LEED, Envision and the Living Building Challenge. The menu format of the catalog allows users to navigate the credits easily and recognize the goals RELi achieves. References to the other rating systems used can help increase awareness of RELi and the credibility of its use. The reference for each credit is listed in the catalog for ease of access.

LEED Pilot Credits

In 2018, three new LEED pilot credits were released to increase awareness of specific natural and man-made disasters. The pilot credits are found in the Integrative Process category and apply to all Building Design and Construction rating systems.

- The first credit, IPpc98: Assessment and Planning for Resilience, includes a prerequisite hazard assessment of the site. It is crucial to take into account the site conditions and how they change with variations in the climate. Projects can either prepare a climate-related risk plan or complete planning forms provided by the Red Cross.

- The second credit, IPpc99: Design for Enhanced Resilience, requires projects to prioritize the top three hazards identified in the assessment from the first credit. Specific mitigation strategies must be identified and implemented for each hazard. Other resilience programs, such as the USRC, should be referenced to support the choice of hazards.

- The third credit, IPpc100: Passive Survivability and Functionality During Emergencies, focuses on maintaining livable and functional conditions during a disturbance. Projects can demonstrate the ability to provide emergency power for high-priority functions, maintain livable temperatures for a certain period of time, and provide access to water. For thermal resilience, projects should refer to thermal modeling using a comfort tool’s psychrometric chart to support the building’s thermal performance over a given period. For emergency power, backup power must be sized to last according to the critical loads and needs of the building’s use type.
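The backup-power requirement reduces to an energy calculation: the energy needed (kWh) is the sum of the critical loads (kW) multiplied by the number of hours they must be carried. The Python sketch below assumes constant loads and adds an illustrative 25% design margin; the load names, duration and margin are hypothetical, and the pilot credit itself does not prescribe this formula.

```python
def required_backup_energy_kwh(critical_loads_kw, hours, reserve_factor=1.25):
    """Energy (kWh) a backup source must supply to carry critical loads.

    critical_loads_kw: mapping of load name -> constant demand in kW
    hours: outage duration the loads must be carried through
    reserve_factor: assumed design margin (1.25 = 25% extra); illustrative only
    """
    if hours <= 0:
        raise ValueError("hours must be positive")
    if reserve_factor < 1.0:
        raise ValueError("reserve_factor should not reduce the requirement")
    total_kw = sum(critical_loads_kw.values())
    # Energy = power x time, scaled by the design margin.
    return total_kw * hours * reserve_factor


# Hypothetical critical loads for a small residential building.
loads = {"refrigeration": 2.0, "lighting": 1.5, "water pump": 0.5}
print(required_backup_energy_kwh(loads, hours=72))                      # 360.0
print(required_backup_energy_kwh(loads, hours=72, reserve_factor=1.0))  # 288.0
```

Here 4 kW of critical load carried for 72 hours needs 288 kWh, or 360 kWh with the assumed margin; real sizing would also account for load profiles and equipment efficiency.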

Because LEED credits overlap with RELi credits, the USGBC has been refining RELi to align better with the LEED resilient design pilot credits.

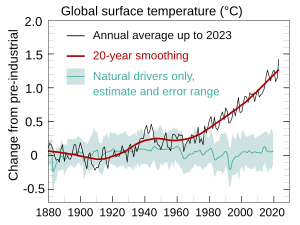

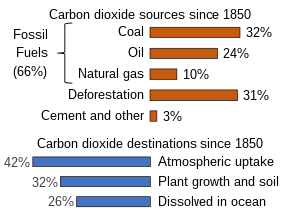

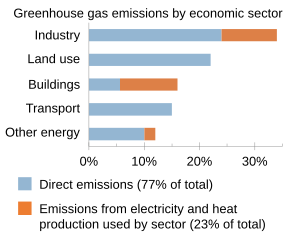

Design based on climate change

It is important to assess current climate data and to design in anticipation of changes or threats to the environment. Resilience plans and passive design strategies differ depending on whether a climate is too wet, too dry or too hot. The following are general climate-responsive design strategies for these three conditions:

Too Wet

- Use of natural solutions: mangroves and other shoreline plants can act as barriers to flooding.

- Creating a dike system: in areas with extreme floods, dikes can be integrated into the urban landscape to protect buildings.

- Using permeable paving: porous pavement surfaces absorb runoff in parking lots, roads and sidewalks.

- Rainwater harvesting: collect and store rainwater for domestic or landscape purposes.
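Rainwater harvesting lends itself to a quick yield estimate: 1 mm of rain falling on 1 m² of catchment is 1 litre of water, reduced by a runoff coefficient that accounts for losses. The Python sketch below uses an assumed coefficient of 0.8 for illustration; appropriate values depend on the roof or paving material and should come from local guidance.

```python
def harvestable_rainwater_litres(catchment_m2, rainfall_mm, runoff_coefficient=0.8):
    """Estimated rainwater yield in litres for one rainfall event or period.

    1 mm of rain on 1 m^2 of catchment equals 1 litre; the runoff coefficient
    (an assumed 0.8 here) discounts losses such as splash, evaporation and
    first-flush diversion.
    """
    if catchment_m2 < 0 or rainfall_mm < 0:
        raise ValueError("area and rainfall must be non-negative")
    if not 0.0 <= runoff_coefficient <= 1.0:
        raise ValueError("runoff coefficient must be between 0 and 1")
    return catchment_m2 * rainfall_mm * runoff_coefficient


# Hypothetical 150 m^2 roof during a 25 mm storm.
print(harvestable_rainwater_litres(150, 25))  # 3000.0
```

Under these assumptions a 150 m² roof collects about 3,000 litres from a 25 mm storm, which gives a first sense of the storage volume worth providing.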

Too Dry

- Use of drought-tolerant plants: reduce water use in landscaping.

- Filtration of wastewater: recycle wastewater for landscaping or toilet flushing.

- Use of courtyard layout: minimize the area affected by solar radiation and use water and plants for evaporative cooling.

Too Hot

- Use of vegetation: Trees can help cool the environment by reducing the urban heat island effect through evapotranspiration.

- Use of passive design strategies: operable windows and thermal mass can cool the building naturally.

- Window shading strategies: control the amount of sunlight entering the building to minimize heat gains during the day.

- Reducing or shading adjacent external thermal masses: surfaces such as pavers absorb heat and re-radiate it into the building.

Design based on hazards

Hazard assessment

Determining and assessing the vulnerabilities of the built environment at specific locations is crucial for creating a resilience plan. Disasters lead to a wide range of consequences, such as damaged buildings and ecosystems and loss of life. For example, the 2008 earthquake in Wenchuan County triggered major landslides, and entire districts such as Old Beichuan had to be relocated. The following are some natural hazards and potential resilience strategies for each.

Fire

- Use fire-rated materials.

- Provide fire-resistant stairwells for evacuation.

- Provide universal escape methods that also serve people with disabilities.

Hurricanes

There are multiple strategies for protecting structures against hurricanes, based on wind and rain loads.

- Openings should be protected from flying debris.

- Structures should be elevated above possible water intrusion and flooding.

- Building enclosures should be sealed and secured with specific nailing patterns.

- Materials such as metal, tile or masonry should be used to resist wind loads.

Earthquakes

Earthquakes can cause structural damage and building collapse because of high stresses on building frames.

- Secure appliances, such as heaters, and furniture to prevent injuries and fires.

- Use expansion joints in the building structure to accommodate seismic shaking.

- Create flexible systems with base isolation to reduce the impact of ground motion.

- Provide earthquake preparedness kits with the resources needed during an event.
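Hazard assessment can be sketched as ranking a site's hazards and pairing the highest-priority ones with the strategies listed for fire, hurricanes and earthquakes, in the spirit of the LEED pilot credit that asks projects to prioritize their top three hazards. The Python sketch below assumes a simple risk-matrix score (likelihood × consequence, each on a 1 to 5 scale); that scoring scheme, the hazard keys and the example scores are illustrative assumptions, not part of LEED, RELi or the ICC process.

```python
# Strategies condensed from the Fire, Hurricanes and Earthquakes lists.
STRATEGIES = {
    "fire": [
        "fire-rated materials",
        "fire-resistant evacuation stairwells",
        "universal escape methods",
    ],
    "hurricane": [
        "protect openings from flying debris",
        "elevate above flooding and water intrusion",
        "seal enclosures with specified nailing patterns",
        "wind-resistant metal, tile or masonry",
    ],
    "earthquake": [
        "secure appliances and furniture",
        "expansion joints",
        "base isolation",
        "preparedness kits",
    ],
}


def prioritize_hazards(scores, top_n=3):
    """Rank hazards by likelihood x consequence and keep the top_n.

    scores: mapping of hazard -> (likelihood, consequence), each on an
    assumed 1-5 scale. Ties keep their input order (sorted is stable).
    """
    ranked = sorted(scores, key=lambda h: scores[h][0] * scores[h][1], reverse=True)
    return ranked[:top_n]


def resilience_plan(scores, top_n=3):
    """Map each priority hazard to its catalogued strategies (empty if none)."""
    return {hazard: STRATEGIES.get(hazard, []) for hazard in prioritize_hazards(scores, top_n)}


# Hypothetical site scores: (likelihood, consequence).
site = {"earthquake": (4, 5), "fire": (3, 4), "hurricane": (2, 5), "flood": (3, 3)}
for hazard, actions in resilience_plan(site).items():
    print(hazard, "->", ", ".join(actions))
```

With these example scores, earthquake (20), fire (12) and hurricane (10) outrank flood (9). A priority hazard missing from the catalogue maps to an empty list, flagging where site-specific strategies still need to be identified.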

Resilience and sustainability

It is difficult to compare the concepts of resilience and sustainability because of the various scholarly definitions used in the field over the years. Many policies and academic publications on both topics either provide their own definitions of the two concepts or lack a clear definition of the type of resilience they seek. Even though sustainability is a well-established term, interpretations of the concept and its focus are often generic. Sanchez et al. proposed a new characterization, ‘sustainable resilience’, which expands social-ecological resilience to include more sustained, long-term approaches. Sustainable resilience focuses not only on outcomes but also on the processes and policy structures involved in implementation.

Both concepts share essential assumptions and goals, such as passive survivability and the persistence of system operation over time and in response to disturbances. They also share a focus on climate change mitigation, as both appear in larger frameworks such as building codes and building certification programs. Holling and Walker argue that “a resilient social-ecological system is synonymous with a region that is ecologically, economically and socially sustainable.” Other scholars, such as Perrings, state that “a development strategy is not sustainable if it is not resilient.” The two concepts are therefore intertwined and cannot succeed individually, as they depend on one another. For example, in RELi, LEED and other building certifications, providing access to safe water and an energy source is crucial before, during and after a disturbance.

Some scholars argue that resilience and sustainability tactics target different goals. Paula Melton argues that resilience focuses on designing for the unpredictable, while sustainability focuses on climate-responsive design. Some forms of resilience, such as adaptive resilience, focus on designs that can adapt and change in response to a shock event; sustainable design, on the other hand, focuses on systems that are efficient and optimized.