A search engine is a software system designed to carry out web searches (Internet searches): it searches the World Wide Web in a systematic way for particular information specified in a textual web search query. The search results are generally presented in a line of results, often referred to as search engine results pages (SERPs). The information may be a mix of links to web pages, images, videos, infographics, articles, research papers, and other types of files. Some search engines also mine data available in databases or open directories. Unlike web directories, which are maintained only by human editors, search engines also maintain real-time information by running an algorithm on a web crawler. Internet content that cannot be searched by a web search engine is generally described as the deep web.

History

| Year | Engine | Current status |
|---|---|---|
| 1993 | W3Catalog | Active |
| 1993 | Aliweb | Active |
| 1993 | JumpStation | Inactive |
| 1993 | WWW Worm | Inactive |
| 1994 | WebCrawler | Active |
| 1994 | Go.com | Inactive, redirects to Disney |
| 1994 | Lycos | Active |
| 1994 | Infoseek | Inactive, redirects to Disney |
| 1995 | Yahoo! Search | Active, initially a search function for Yahoo! Directory |
| 1995 | Daum | Active |
| 1995 | Magellan | Inactive |
| 1995 | Excite | Active |
| 1995 | SAPO | Active |
| 1995 | MetaCrawler | Active |
| 1995 | AltaVista | Inactive, acquired by Yahoo! in 2003, since 2013 redirects to Yahoo! |
| 1996 | RankDex | Inactive, incorporated into Baidu in 2000 |
| 1996 | Dogpile | Active, Aggregator |
| 1996 | Inktomi | Inactive, acquired by Yahoo! |
| 1996 | HotBot | Active |
| 1996 | Ask Jeeves | Active (rebranded ask.com) |
| 1997 | AOL NetFind | Active (rebranded AOL Search since 1999) |
| 1997 | Northern Light | Inactive |
| 1997 | Yandex | Active |
| 1998 | Google | Active |
| 1998 | Ixquick | Active as Startpage.com |
| 1998 | MSN Search | Active as Bing |
| 1998 | empas | Inactive (merged with NATE) |
| 1999 | AlltheWeb | Inactive (URL redirected to Yahoo!) |
| 1999 | GenieKnows | Active, rebranded Yellowee (redirection to justlocalbusiness.com) |
| 1999 | Naver | Active |
| 1999 | Teoma | Active (© APN, LLC) |
| 2000 | Baidu | Active |
| 2000 | Exalead | Inactive |
| 2000 | Gigablast | Active |
| 2001 | Kartoo | Inactive |
| 2003 | Info.com | Active |
| 2003 | Scroogle | Inactive |
| 2004 | A9.com | Inactive |
| 2004 | Clusty | Active (as Yippy) |
| 2004 | Mojeek | Active |
| 2004 | Sogou | Active |
| 2005 | SearchMe | Inactive |
| 2005 | KidzSearch | Active, Google Search |
| 2006 | Soso | Inactive, merged with Sogou |
| 2006 | Quaero | Inactive |
| 2006 | Search.com | Active |
| 2006 | ChaCha | Inactive |
| 2006 | Ask.com | Active |
| 2006 | Live Search | Active as Bing, rebranded MSN Search |
| 2007 | wikiseek | Inactive |
| 2007 | Sproose | Inactive |
| 2007 | Wikia Search | Inactive |
| 2007 | Blackle.com | Active, Google Search |
| 2008 | Powerset | Inactive (redirects to Bing) |
| 2008 | Picollator | Inactive |
| 2008 | Viewzi | Inactive |
| 2008 | Boogami | Inactive |
| 2008 | LeapFish | Inactive |
| 2008 | Forestle | Inactive (redirects to Ecosia) |
| 2008 | DuckDuckGo | Active |
| 2009 | Bing | Active, rebranded Live Search |
| 2009 | Yebol | Inactive |
| 2009 | Mugurdy | Inactive due to a lack of funding |
| 2009 | Scout (Goby) | Active |
| 2009 | NATE | Active |
| 2009 | Ecosia | Active |
| 2009 | Startpage.com | Active, sister engine of Ixquick |
| 2010 | Blekko | Inactive, sold to IBM |
| 2010 | Cuil | Inactive |
| 2010 | Yandex (English) | Active |
| 2010 | Parsijoo | Active |
| 2011 | YaCy | Active, P2P |
| 2012 | Volunia | Inactive |
| 2013 | Qwant | Active |
| 2014 | Egerin | Active, Kurdish / Sorani |
| 2014 | Swisscows | Active |
| 2015 | Yooz | Active |
| 2015 | Cliqz | Inactive |
| 2016 | Kiddle | Active, Google Search |

Pre-1990s

In 1945, Vannevar Bush described a system for locating published information, intended to overcome the ever-increasing difficulty of finding information in ever-growing centralized indices of scientific work. In an article in The Atlantic Monthly titled "As We May Think", he envisioned libraries of research with connected annotations not unlike modern hyperlinks. Link analysis would eventually become a crucial component of search engines through algorithms such as Hyper Search and PageRank.

1990s: Birth of search engines

The first internet search engines predate the debut of the Web in December 1990: WHOIS user search dates back to 1982, and the Knowbot Information Service multi-network user search was first implemented in 1989. The first well-documented search engine that searched content files, namely FTP files, was Archie, which debuted on 10 September 1990.

Prior to September 1993, the World Wide Web was entirely indexed by hand. There was a list of webservers edited by Tim Berners-Lee and hosted on the CERN webserver. One snapshot of the list in 1992 remains, but as more and more web servers went online the central list could no longer keep up. On the NCSA site, new servers were announced under the title "What's New!"

The first tool used for searching content (as opposed to users) on the Internet was Archie. The name stands for "archive" without the "v". It was created by Alan Emtage, a computer science student at McGill University in Montreal, Quebec, Canada. The program downloaded the directory listings of all the files located on public anonymous FTP (File Transfer Protocol) sites, creating a searchable database of file names; however, Archie did not index the contents of these sites, since the amount of data was so limited that it could be readily searched manually.

The rise of Gopher (created in 1991 by Mark McCahill at the University of Minnesota) led to two new search programs, Veronica and Jughead. Like Archie, they searched the file names and titles stored in Gopher index systems. Veronica (Very Easy Rodent-Oriented Net-wide Index to Computerized Archives) provided a keyword search of most Gopher menu titles in the entire Gopher listings. Jughead (Jonzy's Universal Gopher Hierarchy Excavation And Display) was a tool for obtaining menu information from specific Gopher servers. While the name of the search engine "Archie Search Engine" was not a reference to the Archie comic book series, "Veronica" and "Jughead" are characters in the series, thus referencing their predecessor.

In the summer of 1993, no search engine existed for the web, though numerous specialized catalogues were maintained by hand. Oscar Nierstrasz at the University of Geneva wrote a series of Perl scripts that periodically mirrored these pages and rewrote them into a standard format. This formed the basis for W3Catalog, the web's first primitive search engine, released on September 2, 1993.

In June 1993, Matthew Gray, then at MIT, produced what was probably the first web robot, the Perl-based World Wide Web Wanderer, and used it to generate an index called "Wandex". The purpose of the Wanderer was to measure the size of the World Wide Web, which it did until late 1995. The web's second search engine, Aliweb, appeared in November 1993. Aliweb did not use a web robot, but instead depended on website administrators notifying it of the existence, at each site, of an index file in a particular format.

JumpStation (created in December 1993 by Jonathon Fletcher) used a web robot to find web pages and to build its index, and used a web form as the interface to its query program. It was thus the first WWW resource-discovery tool to combine the three essential features of a web search engine (crawling, indexing, and searching) as described below. Because of the limited resources available on the platform it ran on, its indexing and hence searching were limited to the titles and headings found in the web pages the crawler encountered.

One of the first "all text" crawler-based search engines was WebCrawler, which came out in 1994. Unlike its predecessors, it allowed users to search for any word in any webpage, which has since become the standard for all major search engines. It was also a search engine that became widely known to the public. Also in 1994, Lycos (which started at Carnegie Mellon University) was launched and became a major commercial endeavor.

The first popular search engine on the Web was Yahoo! Search. The first product from Yahoo!, founded by Jerry Yang and David Filo in January 1994, was a Web directory called Yahoo! Directory. In 1995, a search function was added, allowing users to search Yahoo! Directory. It became one of the most popular ways for people to find web pages of interest, but its search function operated on its web directory rather than on full-text copies of web pages.

Soon after, a number of search engines appeared and vied for popularity. These included Magellan, Excite, Infoseek, Inktomi, Northern Light, and AltaVista. Information seekers could also browse the directory instead of doing a keyword-based search.

In 1996, Robin Li developed the RankDex site-scoring algorithm for search engine results page ranking and received a US patent for the technology. It was the first search engine that used hyperlinks to measure the quality of the websites it was indexing, predating the very similar algorithm patent filed by Google two years later in 1998. Larry Page referenced Li's work in some of his U.S. patents for PageRank. Li later used his RankDex technology for the Baidu search engine, which he founded in China and launched in 2000.

In 1996, Netscape was looking to give a single search engine an exclusive deal as the featured search engine on Netscape's web browser. There was so much interest that instead Netscape struck deals with five of the major search engines: for $5 million a year, each search engine would be in rotation on the Netscape search engine page. The five engines were Yahoo!, Magellan, Lycos, Infoseek, and Excite.

Google adopted the idea of selling search terms in 1998 from a small search engine company named goto.com. This move had a significant effect on the search engine business, which went from struggling to one of the most profitable businesses on the Internet.

Search engines were also known as some of the brightest stars in the Internet investing frenzy that occurred in the late 1990s. Several companies entered the market spectacularly, receiving record gains during their initial public offerings. Some, such as Northern Light, have taken down their public search engine and are marketing enterprise-only editions. Many search engine companies were caught up in the dot-com bubble, a speculation-driven market boom that peaked in early 2000.

2000s-present: Post dot-com bubble

Around 2000, Google's search engine rose to prominence. The company achieved better results for many searches with an algorithm called PageRank, as was explained in the paper "Anatomy of a Search Engine" written by Sergey Brin and Larry Page, who later founded Google. This iterative algorithm ranks web pages based on the number and PageRank of other web sites and pages that link there, on the premise that good or desirable pages are linked to more than others. Larry Page's patent for PageRank cites Robin Li's earlier RankDex patent as an influence. Google also maintained a minimalist interface to its search engine. In contrast, many of its competitors embedded a search engine in a web portal. In fact, the Google search engine became so popular that spoof engines such as Mystery Seeker emerged.
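The iterative ranking idea described above can be illustrated with a minimal sketch. It is not Google's implementation: the function name `pagerank`, the damping factor of 0.85, the fixed iteration count, and the handling of pages without outgoing links are all assumptions made for illustration.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Approximate PageRank by repeated redistribution of rank.

    links: dict mapping each page to the list of pages it links to.
    damping and iterations are illustrative assumptions.
    """
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}  # start with a uniform rank

    for _ in range(iterations):
        # Every page receives a small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page passes its rank on to the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical three-page web: "a" and "b" both link to "c", "c" links to "a".
example_links = {"a": ["c"], "b": ["c"], "c": ["a"]}
example_ranks = pagerank(example_links)
```

In this toy graph, page "c" ends up with the highest rank because two pages link to it, and "b" ends up lowest because nothing links to it.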

By 2000, Yahoo! was providing search services based on Inktomi's search engine. Yahoo! acquired Inktomi in 2002, and Overture (which owned AlltheWeb and AltaVista) in 2003. Yahoo! switched to Google's search engine until 2004, when it launched its own search engine based on the combined technologies of its acquisitions.

Microsoft first launched MSN Search in the fall of 1998 using search results from Inktomi. In early 1999 the site began to display listings from Looksmart, blended with results from Inktomi. For a short time in 1999, MSN Search used results from AltaVista instead. In 2004, Microsoft began a transition to its own search technology, powered by its own web crawler (called msnbot).

Microsoft's rebranded search engine, Bing, was launched on June 1, 2009. On July 29, 2009, Yahoo! and Microsoft finalized a deal in which Yahoo! Search would be powered by Microsoft Bing technology.

As of 2019, active search engine crawlers include those of Google, Sogou, Baidu, Bing, Gigablast, Mojeek, DuckDuckGo and Yandex.

Approach

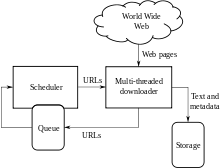

A search engine maintains the following processes in near real time: web crawling, indexing, and searching.

Web search engines get their information by web crawling from site to site. The "spider" checks for the standard filename robots.txt, addressed to it. The robots.txt file contains directives for search spiders, telling them which pages to crawl and which pages not to crawl. After checking for robots.txt and either finding it or not, the spider sends certain information back to be indexed depending on many factors, such as the titles, page content, JavaScript, Cascading Style Sheets (CSS), headings, or its metadata in HTML meta tags. After a certain number of pages crawled, amount of data indexed, or time spent on the website, the spider stops crawling and moves on. "[N]o web crawler may actually crawl the entire reachable web. Due to infinite websites, spider traps, spam, and other exigencies of the real web, crawlers instead apply a crawl policy to determine when the crawling of a site should be deemed sufficient. Some websites are crawled exhaustively, while others are crawled only partially".
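The crawl behaviour described above, consulting robots.txt and stopping after a page budget, can be sketched as follows. This is a simplified illustration rather than how any particular engine crawls: the in-memory `SITE`, the `ExampleBot` user agent, and the `max_pages` budget are hypothetical, and no network requests are made. Only Python's standard `urllib.robotparser` module is used to interpret the directives.

```python
from collections import deque
from urllib.robotparser import RobotFileParser

# Hypothetical site held in memory: path -> paths it links to.
SITE = {
    "/": ["/a", "/b", "/private/x"],
    "/a": ["/b", "/c"],
    "/b": ["/"],
    "/c": [],
    "/private/x": [],
}

# Hypothetical robots.txt content for that site.
ROBOTS_TXT = "User-agent: *\nDisallow: /private/\n"


def crawl(site, robots_txt, agent="ExampleBot", max_pages=3):
    """Breadth-first crawl that obeys robots.txt and a page budget."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())

    seen = {"/"}
    queue = deque(["/"])
    crawled = []
    while queue and len(crawled) < max_pages:  # crawl policy: stop at the budget
        path = queue.popleft()
        if not rules.can_fetch(agent, path):
            continue  # disallowed by robots.txt
        crawled.append(path)
        for link in site.get(path, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return crawled
```

With the default budget of three pages the sketch stops early, so the site is crawled only partially; with a larger budget every allowed page is visited, while `/private/x` is skipped in both cases.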

Indexing means associating words and other definable tokens found on web pages with their domain names and HTML-based fields. The associations are made in a public database, made available for web search queries. A query from a user can be a single word, multiple words or a sentence. The index helps find information relating to the query as quickly as possible. Some of the techniques for indexing and caching are trade secrets, whereas web crawling is a straightforward process of visiting all sites on a systematic basis.
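As a rough illustration of indexing, the sketch below builds a small index that maps each token to the pages containing it and answers a multi-word query by intersecting those sets. The page addresses and texts are made up, the tokenizer is deliberately naive, and production indexes store far more (positions, fields, weights).

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each token to the set of page addresses that contain it."""
    index = defaultdict(set)
    for address, text in pages.items():
        for token in tokenize(text):
            index[token].add(address)
    return index


def lookup(index, query):
    """Return the pages that contain every token of the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


# Hypothetical pages used only for illustration.
PAGES = {
    "example.org/archie": "Archie indexed FTP file names",
    "example.org/veronica": "Veronica indexed Gopher menu titles",
    "example.org/webcrawler": "WebCrawler indexed every word of every page",
}
INDEX = build_index(PAGES)
```

Because the index is built ahead of time, answering a query needs only a few set lookups instead of a scan of every page.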

Between visits by the spider, the cached version of the page (some or all of the content needed to render it) stored in the search engine's working memory is quickly sent to an inquirer. If a visit is overdue, the search engine can just act as a web proxy instead. In this case, the page may differ from the search terms indexed. The cached page holds the appearance of the version whose words were previously indexed, so a cached version of a page can be useful to the web site when the actual page has been lost, although this problem is also considered a mild form of linkrot.

Typically, when a user enters a query into a search engine, it consists of a few keywords. The index already has the names of the sites containing the keywords, and these are instantly obtained from the index. The real processing load is in generating the web pages that make up the search results list: every page in the entire list must be weighted according to information in the indexes. Then the top search result items require the lookup, reconstruction, and markup of the snippets showing the context of the keywords matched. These are only part of the processing each search results web page requires, and further pages (those after the top page) require more of this post-processing.
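The two stages described above, weighting every matching page and then building snippets only for the results actually shown, might be sketched like this. The scoring rule (a plain count of keyword occurrences), the snippet width, and the function names are assumptions chosen for brevity, not a description of any real engine.

```python
import re


def score(text, terms):
    """Weight a page by how often the query terms occur in it."""
    words = re.findall(r"\w+", text.lower())
    return sum(words.count(term) for term in terms)


def snippet(text, terms, width=30):
    """Cut out the text around the first matched keyword."""
    lowered = text.lower()
    positions = [lowered.find(term) for term in terms if term in lowered]
    if not positions:
        return text[: 2 * width]
    first = min(positions)
    return text[max(first - width, 0): first + width]


def results_page(pages, query, top_k=2):
    """Weight all pages, then build snippets only for the top results."""
    terms = query.lower().split()
    weights = {address: score(text, terms) for address, text in pages.items()}
    matching = [address for address in pages if weights[address] > 0]
    ranked = sorted(matching, key=lambda address: (-weights[address], address))
    return [(address, snippet(pages[address], terms)) for address in ranked[:top_k]]


# Hypothetical pages used only for illustration.
SAMPLE_PAGES = {
    "a": "search engines crawl the web and index the web",
    "b": "a web directory is edited by hand",
    "c": "gopher menus",
}
```

Snippet construction is deferred until after ranking, so its cost grows with the number of results displayed rather than with the number of pages matched.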

Beyond simple keyword lookups, search engines offer their own GUI- or command-driven operators and search parameters to refine the search results. These give users the controls they need for the feedback loop of filtering and weighting results, starting from the initial pages of the first search results. For example, from 2007 the Google.com search engine has allowed one to filter by date by clicking "Show search tools" in the leftmost column of the initial search results page, and then selecting the desired date range. It is also possible to weight by date because each page has a modification time. Most search engines support the use of the Boolean operators AND, OR and NOT to help end users refine the search query. Boolean operators are for literal searches that allow the user to refine and extend the terms of the search. The engine looks for the words or phrases exactly as entered. Some search engines provide an advanced feature called proximity search, which allows users to define the distance between keywords. There is also concept-based searching, where the search involves statistical analysis of pages containing the words or phrases the user searches for. In addition, natural language queries allow the user to type a question in the same form one would ask it of a human. Ask.com is an example of such a site.
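A small sketch can make the Boolean operators and proximity search concrete. The parameter names `must`, `any_of`, and `must_not` stand in for AND, OR, and NOT, and the distance in `near` is counted in words; both choices, like the sample documents, are assumptions for illustration and do not reflect any engine's actual query syntax.

```python
import re


def tokens(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def boolean_search(docs, must=(), any_of=(), must_not=()):
    """Return the documents matching AND (must), OR (any_of), NOT (must_not)."""
    hits = []
    for name, text in docs.items():
        words = set(tokens(text))
        if not all(term in words for term in must):
            continue
        if any_of and not any(term in words for term in any_of):
            continue
        if any(term in words for term in must_not):
            continue
        hits.append(name)
    return sorted(hits)


def near(text, first, second, distance):
    """Proximity search: are the two words within `distance` words of each other?"""
    words = tokens(text)
    first_positions = [i for i, word in enumerate(words) if word == first]
    second_positions = [i for i, word in enumerate(words) if word == second]
    return any(abs(i - j) <= distance for i in first_positions for j in second_positions)


# Hypothetical documents used only for illustration.
DOCS = {
    "d1": "Archie searched FTP file names",
    "d2": "Veronica searched Gopher menu titles",
    "d3": "Jughead searched specific Gopher servers",
}
```

For example, requiring "searched", accepting either "gopher" or "ftp", and excluding "jughead" selects `d1` and `d2`.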

The usefulness of a search engine depends on the relevance of the result set it gives back. While there may be millions of web pages that include a particular word or phrase, some pages may be more relevant, popular, or authoritative than others. Most search engines employ methods to rank the results to provide the "best" results first. How a search engine decides which pages are the best matches, and what order the results should be shown in, varies widely from one engine to another. The methods also change over time as Internet usage changes and new techniques evolve. Two main types of search engine have evolved: one is a system of predefined and hierarchically ordered keywords that humans have programmed extensively. The other is a system that generates an "inverted index" by analyzing texts it locates. This second form relies much more heavily on the computer itself to do the bulk of the work.
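Since ranking methods vary from engine to engine, the following is only one textbook possibility: a TF-IDF style score, in which a term counts for more when it is frequent in a page and rare across the collection. The smoothed formula, the function names, and the sample documents are assumptions; the text above does not say that any engine ranks this way.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def rank(docs, query):
    """Order matching documents by a simple smoothed TF-IDF score."""
    total_docs = len(docs)
    counts = {name: Counter(tokenize(text)) for name, text in docs.items()}

    # Document frequency: in how many documents does each term occur?
    doc_freq = Counter()
    for term_counts in counts.values():
        doc_freq.update(term_counts.keys())

    scores = {}
    for name, term_counts in counts.items():
        length = sum(term_counts.values()) or 1
        value = 0.0
        for term in tokenize(query):
            if term_counts[term]:
                tf = term_counts[term] / length
                idf = math.log((1 + total_docs) / (1 + doc_freq[term])) + 1.0
                value += tf * idf
        if value > 0:
            scores[name] = value
    return sorted(scores, key=lambda name: (-scores[name], name))


# Hypothetical documents used only for illustration.
RANK_DOCS = {
    "a": "web search engine ranks web pages",
    "b": "web directory edited by hand",
    "c": "gopher menu titles",
}
```

Here a query for "web search" places `a` ahead of `b`, since `a` mentions "web" more densely and also contains the rarer term "search", while `c` is not returned at all.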

Most Web search engines are commercial ventures supported by advertising revenue and thus some of them allow advertisers to have their listings ranked higher in search results for a fee. Search engines that do not accept money for their search results make money by running search related ads alongside the regular search engine results. The search engines make money every time someone clicks on one of these ads.

Local search

Local search is the process of optimizing the efforts of local businesses to be found in search results. Such businesses focus on keeping their information up to date so that all searches return consistent results. This matters because many people decide where to go and what to buy based on their searches.

As of February 2021, Google was the world's most used search engine, with a market share of 92.04%.

Russia and East Asia

In Russia, Yandex has a market share of 61.9%, compared to Google's 28.3%. In China, Baidu is the most popular search engine. South Korea's homegrown search portal, Naver, is used for 70% of online searches in the country. Yahoo! Japan and Yahoo! Taiwan are the most popular avenues for Internet searches in Japan and Taiwan, respectively. China is one of the few countries where Google is not in the top three web search engines for market share. Google was previously a top search engine in China, but had to withdraw after failing to follow China's laws.

Europe

Most countries' markets in Europe are dominated by Google, except for the Czech Republic, where Seznam is a strong competitor.

Search engine bias

Although search engines are programmed to rank websites based on some combination of their popularity and relevancy, empirical studies indicate various political, economic, and social biases in the information they provide and in the underlying assumptions about the technology. These biases can be a direct result of economic and commercial processes (e.g., companies that advertise with a search engine can also become more popular in its organic search results) and political processes (e.g., the removal of search results to comply with local laws). For example, Google will not surface certain neo-Nazi websites in France and Germany, where Holocaust denial is illegal.

Biases can also be a result of social processes, as search engine algorithms are frequently designed to exclude non-normative viewpoints in favor of more "popular" results. Indexing algorithms of major search engines skew towards coverage of U.S.-based sites, rather than websites from non-U.S. countries.

Google bombing is one example of an attempt to manipulate search results for political, social or commercial reasons.

Several scholars have studied the cultural changes triggered by search engines, and the representation of certain controversial topics in their results, such as terrorism in Ireland, climate change denial, and conspiracy theories.

Customized results and filter bubbles

Many search engines such as Google and Bing provide customized results based on the user's activity history. This leads to an effect that has been called a filter bubble. The term describes a phenomenon in which websites use algorithms to selectively guess what information a user would like to see, based on information about the user (such as location, past click behaviour and search history). As a result, websites tend to show only information that agrees with the user's past viewpoint. This puts the user in a state of intellectual isolation without contrary information. Prime examples are Google's personalized search results and Facebook's personalized news stream. According to Eli Pariser, who coined the term, users get less exposure to conflicting viewpoints and are isolated intellectually in their own informational bubble. Pariser related an example in which one user searched Google for "BP" and got investment news about British Petroleum while another searcher got information about the Deepwater Horizon oil spill, and noted that the two search results pages were "strikingly different". The bubble effect may have negative implications for civic discourse, according to Pariser. Since this problem was identified, competing search engines such as DuckDuckGo have emerged that seek to avoid it by not tracking or "bubbling" users. Other scholars do not share Pariser's view, finding the evidence in support of his thesis unconvincing.

Religious search engines

The global growth of the Internet and electronic media in the Arab and Muslim world during the last decade has encouraged Islamic adherents in the Middle East and the Asian subcontinent to attempt their own search engines: filtered search portals that would enable users to perform safe searches. Going beyond the usual safe search filters, these Islamic web portals categorize websites as either "halal" or "haram", based on interpretation of the "Law of Islam". ImHalal came online in September 2011. Halalgoogling came online in July 2013. These use haram filters on the collections from Google and Bing (and others).

While a lack of investment and the slow pace of technology in the Muslim world have hindered progress and thwarted the success of an Islamic search engine aimed mainly at Islamic adherents, projects like Muxlim, a Muslim lifestyle site, did receive millions of dollars from investors like Rite Internet Ventures; it also faltered. Other religion-oriented search engines are Jewogle, the Jewish version of Google, and SeekFind.org, which is Christian. SeekFind filters sites that attack or degrade their faith.

Search engine submission

Web search engine submission is a process in which a webmaster submits a website directly to a search engine. While search engine submission is sometimes presented as a way to promote a website, it generally is not necessary because the major search engines use web crawlers that will eventually find most web sites on the Internet without assistance. Webmasters can either submit one web page at a time, or they can submit the entire site using a sitemap, but it is normally only necessary to submit the home page of a web site, as search engines are able to crawl a well-designed website. There are two remaining reasons to submit a web site or web page to a search engine: to add an entirely new web site without waiting for a search engine to discover it, and to have a web site's record updated after a substantial redesign.
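To show what submitting an entire site with a sitemap can involve, here is a minimal sketch that generates a sitemap document in the XML format defined by the sitemaps.org protocol. The URLs are placeholders and the function name `build_sitemap` is hypothetical; how the resulting file is then submitted differs between search engines and is not covered here.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a sitemap XML string with one <url><loc> entry per address."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for address in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = address
    return ET.tostring(urlset, encoding="unicode")


# Placeholder URLs used only for illustration.
SITEMAP_XML = build_sitemap(["https://example.com/", "https://example.com/about"])
```

The string would typically be saved as a file such as `sitemap.xml` on the site itself.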

Some search engine submission software not only submits websites to multiple search engines, but also adds links to websites from their own pages. This could appear helpful in increasing a website's ranking, because external links are one of the most important factors determining a website's ranking. However, John Mueller of Google has stated that this "can lead to a tremendous number of unnatural links for your site" with a negative impact on site ranking.