Speculative realism is a movement in contemporary Continental-inspired philosophy (also known as post-Continental philosophy) that defines itself loosely in its stance of metaphysical realism against its interpretation of the dominant forms of post-Kantian philosophy (or what it terms "correlationism").

Speculative realism takes its name from a conference held at Goldsmiths College, University of London in April 2007. The conference was moderated by Alberto Toscano of Goldsmiths College, and featured presentations by Ray Brassier of American University of Beirut (then at Middlesex University), Iain Hamilton Grant of the University of the West of England, Graham Harman of the American University in Cairo, and Quentin Meillassoux of the École Normale Supérieure in Paris. Credit for the name "speculative realism" is generally ascribed to Brassier, though Meillassoux had already used the term "speculative materialism" to describe his own position.

A second conference, entitled "Speculative Realism/Speculative Materialism", took place at UWE Bristol on Friday 24 April 2009, two years after the original event at Goldsmiths. The line-up consisted of Ray Brassier, Iain Hamilton Grant, Graham Harman, and (in place of Meillassoux, who was unable to attend) Alberto Toscano.

Critique of correlationism

While often in disagreement over basic philosophical issues, the speculative realist thinkers have a shared resistance to what they interpret as philosophies of human finitude inspired by the tradition of Immanuel Kant.

What unites the four core members of the movement is an attempt to overcome both "correlationism" and "philosophies of access". In After Finitude, Meillassoux defines correlationism as "the idea according to which we only ever have access to the correlation between thinking and being, and never to either term considered apart from the other." Philosophies of access are any of those philosophies which privilege the human being over other entities. Both ideas represent forms of anthropocentrism.

All four of the core thinkers within speculative realism work to overturn these forms of philosophy which privilege the human being, favouring distinct forms of realism against the dominant forms of idealism in much of contemporary Continental philosophy.

Variations

Although they share the goal of overturning the dominant strands of post-Kantian thought in Continental philosophy, the core members of the speculative realist movement and their followers differ in important ways.

Speculative materialism

In his critique of correlationism, Quentin Meillassoux (who uses the term speculative materialism to describe his position) identifies two principles as the locus of Kant's philosophy. The first is the principle of correlation itself, which claims essentially that we can only know the correlate of Thought and Being; what lies outside that correlate is unknowable. The second is termed by Meillassoux the principle of factiality, which states that things could be otherwise than what they are. This principle is upheld by Kant in his defence of the thing-in-itself as unknowable but imaginable. We can imagine reality as being fundamentally different even if we never know such a reality. According to Meillassoux, the defence of both principles leads to "weak" correlationism (such as that of Kant and Husserl), while the rejection of the thing-in-itself leads to the "strong" correlationism of thinkers such as the later Ludwig Wittgenstein and the later Martin Heidegger, for whom it makes no sense to suppose that there is anything outside of the correlate of Thought and Being, and so the principle of factiality is eliminated in favour of a strengthened principle of correlation.

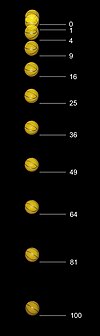

Meillassoux follows the opposite tactic, rejecting the principle of correlation for the sake of a bolstered principle of factiality in his post-Kantian return to Hume. By arguing in favour of such a principle, Meillassoux is led to reject the necessity not only of all physical laws of nature, but of all logical laws except the principle of non-contradiction (since eliminating this would undermine the principle of factiality, which claims that things can always be otherwise than what they are). Once the principle of sufficient reason is rejected, there can be no justification for the necessity of physical laws, meaning that while the universe may be ordered in such and such a way, there is no reason it could not be otherwise. Meillassoux rejects the Kantian a priori in favour of a Humean a priori, claiming that the lesson to be learned from Hume on the subject of causality is that "the same cause may actually bring about 'a hundred different events' (and even many more)."

Object-oriented ontology

The central tenet of Graham Harman and Levi Bryant's object-oriented ontology (OOO) is that objects have been neglected in philosophy in favour of a "radical philosophy" that either tries to "undermine" objects by saying that they are the crusts over a deeper underlying reality, whether in the form of monism or of a perpetual flux, or tries to "overmine" objects by saying that the idea of a whole object is a form of folk ontology. According to Harman, everything is an object, whether it be a mailbox, electromagnetic radiation, curved spacetime, the Commonwealth of Nations, or a propositional attitude; all things, whether physical or fictional, are equally objects. Sympathetic to panpsychism, Harman proposes a new philosophical discipline called "speculative psychology" dedicated to investigating the "cosmic layers of psyche" and "ferreting out the specific psychic reality of earthworms, dust, armies, chalk, and stone".

Harman defends a version of the Aristotelian notion of substance. Unlike Leibniz, for whom there were both substances and aggregates, Harman maintains that when objects combine, they create new objects. In this way, he defends an a priori metaphysics that claims that reality is made up only of objects and that there is no "bottom" to the series of objects. For Harman, an object is in itself an infinite recess, unknowable and inaccessible by any other thing. This leads to his account of what he terms "vicarious causality". Inspired by the occasionalists of medieval Islamic philosophy, Harman maintains that no two objects can ever interact save through the mediation of a "sensual vicar". There are two types of objects, then, for Harman: real objects and the sensual objects that allow for interaction. The former are the things of everyday life, while the latter are the caricatures that mediate interaction. For example, when fire burns cotton, Harman argues that the fire does not touch the essence of that cotton which is inexhaustible by any relation, but that the interaction is mediated by a caricature of the cotton which causes it to burn.

Transcendental materialism

Iain Hamilton Grant defends a position he calls transcendental materialism. He argues against what he terms "somatism", the philosophy and physics of bodies. In his Philosophies of Nature After Schelling, Grant tells a new history of philosophy from Plato onward based on the definition of matter. Aristotle distinguished between Form and Matter in such a way that Matter was invisible to philosophy, whereas Grant argues for a return to the Platonic Matter as not only the basic building blocks of reality, but the forces and powers that govern our reality. He traces this same argument to the post-Kantian German Idealists Johann Gottlieb Fichte and Friedrich Wilhelm Joseph Schelling, claiming that the distinction between Matter as substantive versus useful fiction persists to this day and that we should end our attempts to overturn Plato and instead attempt to overturn Kant and return to "speculative physics" in the Platonic tradition, that is, not a physics of bodies, but a "physics of the All".

Eugene Thacker has examined both how the concept of "life itself" is determined within regional philosophy and how "life itself" comes to acquire metaphysical properties. His book After Life shows how the ontology of life operates by way of a split between "Life" and "the living," making possible a "metaphysical displacement" in which life is thought via another metaphysical term, such as time, form, or spirit: "Every ontology of life thinks of life in terms of something-other-than-life...that something-other-than-life is most often a metaphysical concept, such as time and temporality, form and causality, or spirit and immanence." Thacker traces this theme from Aristotle, to Scholasticism and mysticism/negative theology, to Spinoza and Kant, showing how this three-fold displacement is also alive in philosophy today (life as time in process philosophy and Deleuzianism, life as form in biopolitical thought, life as spirit in post-secular philosophies of religion). Thacker examines the relation of speculative realism to the ontology of life, arguing for a "vitalist correlation": "Let us say that a vitalist correlation is one that fails to conserve the correlationist dual necessity of the separation and inseparability of thought and object, self and world, and which does so based on some ontologized notion of 'life'." Ultimately Thacker argues for a skepticism regarding "life": "Life is not only a problem of philosophy, but a problem for philosophy."

Other thinkers have emerged within this group, united in their allegiance to what has been known as "process philosophy", rallying around such thinkers as Schelling, Bergson, Whitehead, and Deleuze, among others. A recent example is found in Steven Shaviro's book Without Criteria: Kant, Whitehead, Deleuze, and Aesthetics, which argues for a process-based approach that entails panpsychism as much as it does vitalism or animism. For Shaviro, it is Whitehead's philosophy of prehensions and nexus that offers the best combination of continental and analytical philosophy. Another recent example is found in Jane Bennett's book Vibrant Matter, which argues for a shift from human relations to things, to a "vibrant matter" that cuts across the living and non-living, human bodies and non-human bodies. Leon Niemoczynski, in his book Charles Sanders Peirce and a Religious Metaphysics of Nature, invokes what he calls "speculative naturalism" so as to argue that nature can afford lines of insight into its own infinitely productive "vibrant" ground, which he identifies as natura naturans.

Transcendental nihilism

In Nihil Unbound: Extinction and Enlightenment, Ray Brassier defends transcendental nihilism. He maintains that philosophy has avoided the traumatic idea of extinction, instead attempting to find meaning in a world conditioned by the very idea of its own annihilation. Thus Brassier critiques both the phenomenological and hermeneutic strands of continental philosophy as well as the vitalism of thinkers like Gilles Deleuze, who work to ingrain meaning in the world and stave off the "threat" of nihilism. Instead, drawing on thinkers such as Alain Badiou, François Laruelle, Paul Churchland and Thomas Metzinger, Brassier defends a view of the world as inherently devoid of meaning. That is, rather than avoiding nihilism, Brassier embraces it as the truth of reality. Brassier concludes from his readings of Badiou and Laruelle that the universe is founded on the nothing, but also that philosophy is the "organon of extinction," that it is only because life is conditioned by its own extinction that there is thought at all. Brassier then defends a radically anti-correlationist philosophy proposing that Thought is conjoined not with Being, but with Non-Being.

Controversy about the term

In an interview with Kronos magazine published in March 2011, Ray Brassier denied that there is any such thing as a "speculative realist movement" and firmly distanced himself from those who continue to attach themselves to the brand name:

The "speculative realist movement" exists only in the imaginations of a group of bloggers promoting an agenda for which I have no sympathy whatsoever: actor-network theory spiced with pan-psychist metaphysics and morsels of process philosophy. I don't believe the internet is an appropriate medium for serious philosophical debate; nor do I believe it is acceptable to try to concoct a philosophical movement online by using blogs to exploit the misguided enthusiasm of impressionable graduate students. I agree with Deleuze's remark that ultimately the most basic task of philosophy is to impede stupidity, so I see little philosophical merit in a "movement" whose most signal achievement thus far is to have generated an online orgy of stupidity.

Publications

Speculative realism has close ties to the journal Collapse, which published the proceedings of the inaugural conference at Goldsmiths and has featured numerous other articles by "speculative realist" thinkers. The same is true of the academic journal Pli, which is edited and produced by members of the Graduate School of the Department of Philosophy at the University of Warwick. The journal Speculations, founded in 2010 and published by Punctum Books, regularly features articles related to speculative realism. Edinburgh University Press publishes a book series called Speculative Realism.