From Wikipedia, the free encyclopedia

Nanorobotics is an emerging technology field creating machines or robots whose components are at or near the scale of a nanometer (10⁻⁹ meters). More specifically, nanorobotics (as opposed to microrobotics) refers to the nanotechnology engineering discipline of designing and building nanorobots, with devices ranging in size from 0.1 to 10 micrometres and constructed of nanoscale or molecular components. The terms nanobot, nanoid, nanite, nanomachine, or nanomite have also been used to describe such devices currently under research and development.

Nanomachines are largely in the research and development phase, but some primitive molecular machines and nanomotors have been tested. An example is a sensor having a switch approximately 1.5 nanometers across, able to count specific molecules in a chemical sample. The first useful applications of nanomachines may be in nanomedicine. For example, biological machines could be used to identify and destroy cancer cells.

Another potential application is the detection of toxic chemicals, and the measurement of their concentrations, in the environment. Rice University has demonstrated a single-molecule car developed by a chemical process and including Buckminsterfullerenes (buckyballs) for wheels. It is actuated by controlling the environmental temperature and by positioning a scanning tunneling microscope tip.

Another definition is a robot that allows precise interactions with nanoscale objects, or that can manipulate with nanoscale resolution. Such devices are more closely related to microscopy or scanning probe microscopy than to the description of nanorobots as molecular machines. Using the microscopy definition, even a large apparatus such as an atomic force microscope can be considered a nanorobotic instrument when configured to perform nanomanipulation. From this viewpoint, macroscale robots or microrobots that can move with nanoscale precision can also be considered nanorobots.

Nanorobotics theory

According to Richard Feynman, it was his former graduate student and collaborator Albert Hibbs who originally suggested to him (circa 1959) the idea of a medical use for Feynman's theoretical micro-machines (see biological machine). Hibbs suggested that certain repair machines might one day be reduced in size to the point that it would, in theory, be possible to (as Feynman put it) "swallow the surgeon". The idea was incorporated into Feynman's 1959 essay There's Plenty of Room at the Bottom.

Since nano-robots would be microscopic in size, it would probably be necessary for very large numbers of them to work together to perform microscopic and macroscopic tasks. These nano-robot swarms, both those unable to replicate (as in utility fog) and those able to replicate unconstrained in the natural environment (as in grey goo and synthetic biology), are found in many science fiction stories, such as the Borg nano-probes in Star Trek and The Outer Limits episode "The New Breed".

Some proponents of nano-robotics, in reaction to the grey goo scenarios that they earlier helped to propagate, hold the view that nano-robots able to replicate outside of a restricted factory environment do not form a necessary part of a purported productive nanotechnology, and that the process of self-replication, were it ever to be developed, could be made inherently safe. They further assert that their current plans for developing and using molecular manufacturing do not in fact include free-foraging replicators.

A detailed theoretical discussion of nanorobotics, including specific design issues such as sensing, power, communication, navigation, manipulation, locomotion, and onboard computation, has been presented in the medical context of nanomedicine by Robert Freitas. Some of these discussions remain at the level of unbuildable generality and do not approach the level of detailed engineering.

Legal and ethical implications

Open technology

A document with a proposal on nanobiotech development using open design technology methods, as in open-source hardware and open-source software, has been addressed to the United Nations General Assembly. According to the document sent to the United Nations, in the same way that open source has in recent years accelerated the development of computer systems, a similar approach should benefit society at large and accelerate nanorobotics development. The use of nanobiotechnology should be established as a human heritage for the coming generations, and developed as an open technology based on ethical practices for peaceful purposes. Open technology is stated to be a fundamental key to such an aim.

Nanorobot race

In the same way that technology research and development drove the space race and the nuclear arms race, a race for nanorobots is occurring. There are ample grounds for including nanorobots among the emerging technologies. Large corporations, such as General Electric, Hewlett-Packard, Synopsys, Northrop Grumman and Siemens, have recently been working on the research and development of nanorobots; surgeons are getting involved and starting to propose ways to apply nanorobots to common medical procedures; universities and research institutes have been granted funds by government agencies exceeding $2 billion for research on developing nanodevices for medicine; and bankers are strategically investing with the intent to acquire in advance the rights and royalties on future nanorobot commercialisation. Some aspects of nanorobot litigation and related issues linked to monopoly have already arisen.

A large number of patents on nanorobots have been granted recently, mostly to patent agents, companies specializing solely in building patent portfolios, and lawyers. After a long series of patents and eventual litigation (see, for example, the invention of radio or the war of the currents), emerging fields of technology tend to become monopolies, which are normally dominated by large corporations.

Manufacturing approaches

Manufacturing nanomachines assembled from molecular components is a very challenging task. Because of the level of difficulty, many engineers and scientists continue working cooperatively across multidisciplinary approaches to achieve breakthroughs in this new area of development. The following distinct techniques are currently applied towards manufacturing nanorobots:

Biochip

The joint use of nanoelectronics, photolithography, and new biomaterials provides a possible approach to manufacturing nanorobots for common medical uses, such as surgical instrumentation, diagnosis, and drug delivery. This method of manufacturing at the nanotechnology scale has been in use in the electronics industry since 2008. Practical nanorobots should therefore be integrated as nanoelectronic devices, which will allow tele-operation and advanced capabilities for medical instrumentation.

Nubots

A nucleic acid robot (nubot) is an organic molecular machine at the nanoscale. DNA structure can provide means to assemble 2D and 3D nanomechanical devices. DNA-based machines can be activated using small molecules, proteins and other molecules of DNA. Biological circuit gates based on DNA materials have been engineered as molecular machines to allow in vitro drug delivery for targeted health problems. Such material-based systems would work most like smart biomaterial drug delivery systems, while not allowing precise in vivo teleoperation of such engineered prototypes.

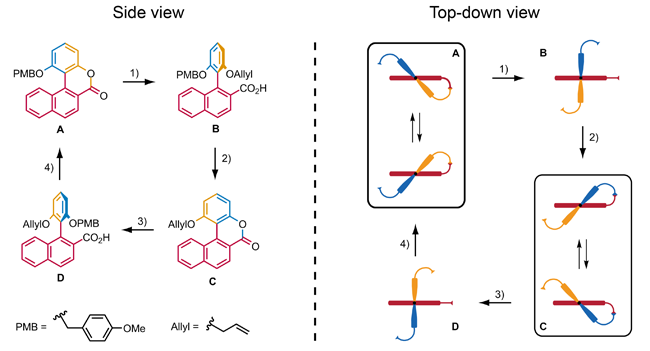

Surface-bound systems

Several reports have demonstrated the attachment of synthetic molecular motors to surfaces. These primitive nanomachines have been shown to undergo machine-like motions when confined to the surface of a macroscopic material. The surface-anchored motors could potentially be used to move and position nanoscale materials on a surface in the manner of a conveyor belt.

Positional nanoassembly

Nanofactory Collaboration, founded by Robert Freitas and Ralph Merkle in 2000 and involving 23 researchers from 10 organizations and 4 countries, focuses on developing a practical research agenda specifically aimed at developing positionally-controlled diamond mechanosynthesis and a diamondoid nanofactory that would have the capability of building diamondoid medical nanorobots.

Biohybrids

The emerging field of bio-hybrid systems combines biological and synthetic structural elements for biomedical or robotic applications. The constituent elements of bio-nanoelectromechanical systems (BioNEMS) are of nanoscale size, for example DNA, proteins or nanostructured mechanical parts. Thiol-ene e-beam resist allows the direct writing of nanoscale features, followed by the functionalization of the natively reactive resist surface with biomolecules. Other approaches use a biodegradable material attached to magnetic particles that allow them to be guided around the body.

Bacteria-based

This approach proposes the use of biological microorganisms, such as the bacteria Escherichia coli and Salmonella typhimurium. The model thus uses a flagellum for propulsion. Electromagnetic fields normally control the motion of this kind of biologically integrated device.

Chemists at the University of Nebraska have created a humidity gauge by fusing a bacterium to a silicon computer chip.

Virus-based

Retroviruses can be retrained to attach to cells and replace DNA. They go through a process called reverse transcription to deliver genetic packaging in a vector. Usually, these devices use the pol and gag genes of the virus for the capsid and delivery system. This process is called retroviral gene therapy, and it can re-engineer cellular DNA through the use of viral vectors. This approach has appeared in the form of retroviral, adenoviral, and lentiviral gene delivery systems. These gene therapy vectors have been used in cats to deliver genes into the genetically modified organism (GMO), causing it to display the trait.

3D printing

3D printing is the process by which a three-dimensional structure is built through the various processes of additive manufacturing. Nanoscale 3D printing involves many of the same processes, applied at a much smaller scale. To print a structure in the 5-400 µm scale, the precision of the 3D printing machine needs to be improved greatly. A two-step process, combining 3D printing with laser-etched plates, was introduced as an improvement.

To be more precise at the nanoscale, the 3D printing process uses a laser etching machine, which etches the details needed for the segments of nanorobots into each plate. The plate is then transferred to the 3D printer, which fills the etched regions with the desired nanoparticle. The 3D printing process is repeated until the nanorobot is built from the bottom up. This 3D printing process has many benefits. First, it increases the overall accuracy of the printing process. Second, it has the potential to create functional segments of a nanorobot.
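The etch, fill, and repeat cycle described above can be sketched in a few lines of Python. This is only an illustrative toy model, not a description of any real printer's control software: the function names `etch_plate`, `fill_plate`, and `build_layers`, and the representation of each plate as a grid of characters, are assumptions introduced here.

```python
from typing import List

Plate = List[List[str]]  # hypothetical plate model: a grid of cells

EMPTY, ETCHED, FILLED = ".", "e", "#"


def etch_plate(pattern: List[str]) -> Plate:
    """Step 1 (laser etching): mark every cell that the pattern flags with 'x'."""
    return [[ETCHED if ch == "x" else EMPTY for ch in row] for row in pattern]


def fill_plate(plate: Plate) -> Plate:
    """Step 2 (3D printer): fill etched cells with material, leave the rest."""
    return [[FILLED if cell == ETCHED else cell for cell in row] for row in plate]


def build_layers(patterns: List[List[str]]) -> List[Plate]:
    """Repeat etch-then-fill for each layer, stacking from the bottom up."""
    stack: List[Plate] = []
    for pattern in patterns:  # patterns[0] is the bottom layer
        stack.append(fill_plate(etch_plate(pattern)))
    return stack


if __name__ == "__main__":
    # Two made-up layer patterns; 'x' marks regions to etch and fill.
    layers = build_layers([["xxx", "x.x", "xxx"], [".x.", "xxx", ".x."]])
    for height, plate in enumerate(layers):
        print(f"layer {height}:")
        for row in plate:
            print("  " + "".join(row))
```

Keeping etching and filling as separate functions mirrors the two-step division in the text: the etched pattern alone decides where material ends up, which is where the claimed gain in accuracy would come from.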

The 3D printer uses a liquid resin, which is hardened at precisely the correct spots by a focused laser beam. The focal point of the laser beam is guided through the resin by movable mirrors and leaves behind a hardened line of solid polymer, just a few hundred nanometers wide. This fine resolution enables the creation of intricately structured sculptures as tiny as a grain of sand. The process relies on photoactive resins, which are hardened by the laser at an extremely small scale to create the structure, and it is quick by nanoscale 3D printing standards. Ultra-small features can be made with the 3D micro-fabrication technique used in multiphoton photopolymerisation. This approach uses a focused laser to trace the desired 3D object into a block of gel. Due to the nonlinear nature of photoexcitation, the gel is cured to a solid only in the places where the laser was focused, and the remaining gel is then washed away. Feature sizes of under 100 nm are easily produced, as well as complex structures with moving and interlocked parts.
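The role of the nonlinearity can be illustrated with a small numerical sketch. It assumes a Gaussian beam profile and a two-photon process whose excitation rate scales with the square of the local intensity; the beam waist and the curing threshold below are made-up values, and the function names are hypothetical. Under these assumptions, squaring the intensity narrows the excited region by a factor of √2, and a curing threshold narrows the solidified region further.

```python
import math


def intensity(r: float, w: float) -> float:
    """Normalized Gaussian beam intensity at radial distance r (waist w)."""
    return math.exp(-2.0 * r**2 / w**2)


def two_photon_rate(r: float, w: float) -> float:
    """Two-photon excitation rate, assumed proportional to intensity squared."""
    return intensity(r, w) ** 2


def width_above(level: float, w: float, order: int) -> float:
    """Full width of the region where intensity**order exceeds `level`.

    Solves exp(-2 * order * r^2 / w^2) = level for r, then doubles it.
    """
    if not 0.0 < level < 1.0:
        raise ValueError("level must be between 0 and 1")
    return 2.0 * w * math.sqrt(math.log(1.0 / level) / (2.0 * order))


if __name__ == "__main__":
    waist_nm = 400.0  # hypothetical beam waist, for illustration only
    one_photon = width_above(0.5, waist_nm, order=1)
    two_photon = width_above(0.5, waist_nm, order=2)
    cured = width_above(0.9, waist_nm, order=2)  # hypothetical curing threshold
    print(f"one-photon FWHM: {one_photon:.0f} nm")
    print(f"two-photon FWHM: {two_photon:.0f} nm")
    print(f"cured width at 90% threshold: {cured:.0f} nm")
```

The sketch covers only the lateral profile at the focal plane. It ignores the resin chemistry and the axial extent of the focus, so the numbers it prints are not predictions for any real system.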

Potential uses

Nanomedicine

Potential uses for nanorobotics in medicine include early diagnosis and targeted drug-delivery for cancer, biomedical instrumentation, surgery, pharmacokinetics, monitoring of diabetes, and health care.

In such plans, future medical nanotechnology is expected to employ nanorobots injected into the patient to perform work at a cellular level. Such nanorobots intended for use in medicine should be non-replicating, as replication would needlessly increase device complexity, reduce reliability, and interfere with the medical mission.

Nanotechnology provides a wide range of new technologies for developing customized means to optimize the delivery of pharmaceutical drugs. Today, harmful side effects of treatments such as chemotherapy are commonly a result of drug delivery methods that do not pinpoint their intended target cells accurately. Researchers at Harvard and MIT, however, have been able to attach special RNA strands, measuring nearly 10 nm in diameter, to nanoparticles, filling them with a chemotherapy drug. These RNA strands are attracted to cancer cells. When the nanoparticle encounters a cancer cell, it adheres to it and releases the drug into the cancer cell. This directed method of drug delivery has great potential for treating cancer patients while avoiding the negative effects commonly associated with improper drug delivery.

The first demonstration of nanomotors operating in living organisms was carried out in 2014 at the University of California, San Diego. MRI-guided nanocapsules are one potential precursor to nanorobots.

Another useful application of nanorobots is assisting in the repair of tissue cells alongside white blood cells. Recruiting inflammatory cells or white blood cells (which include neutrophil granulocytes, lymphocytes, monocytes, and mast cells) to the affected area is the first response of tissues to injury. Because of their small size, nanorobots could attach themselves to the surface of recruited white cells, to squeeze their way out through the walls of blood vessels and arrive at the injury site, where they can assist in the tissue repair process. Certain substances could possibly be used to accelerate the recovery.

The science behind this mechanism is quite complex. Passage of cells across the blood endothelium, a process known as transmigration, is a mechanism involving engagement of cell surface receptors with adhesion molecules, active force exertion, dilation of the vessel walls, and physical deformation of the migrating cells. By attaching themselves to migrating inflammatory cells, the robots can in effect "hitch a ride" across the blood vessels, bypassing the need for a complex transmigration mechanism of their own.

As of 2016, in the United States, the Food and Drug Administration (FDA) regulates nanotechnology on the basis of size.

Nanocomposite particles that are controlled remotely by an electromagnetic field were also developed. This series of nanorobots, now listed in the Guinness World Records, can be used to interact with biological cells. Scientists suggest that this technology can be used for the treatment of cancer.

Cultural references

The Nanites are characters on the TV show Mystery Science Theater 3000. They're self-replicating, bio-engineered organisms that work on the ship and reside in the SOL's computer systems. They made their first appearance in Season 8.

Nanites are used in a number of episodes in the Netflix series "Travelers". They can be programmed and injected into injured people to perform repairs. They first appear in season 1.

Nanites also feature in the Rise of Iron 2016 expansion for Destiny, in which SIVA, a self-replicating nanotechnology, is used as a weapon.

Nanites (referred to more often as Nanomachines) are often referenced in Konami's "Metal Gear" series, where they are used to enhance and regulate abilities and body functions.

In the Star Trek franchise TV shows, nanites serve as an important plot device. Starting with "Evolution" in the third season of The Next Generation, Borg Nanoprobes perform the function of maintaining the Borg cybernetic systems, as well as repairing damage to the organic parts of a Borg. They generate new technology inside a Borg when needed, as well as protecting them from many forms of disease.

Nanites play a role in the video game Deus Ex, being the basis of the nano-augmentation technology which gives augmented people superhuman abilities.

Nanites are also mentioned in the Arc of a Scythe book series by Neal Shusterman and are used to heal all nonfatal injuries, regulate bodily functions, and considerably lessen pain.