The formation and evolution of the Solar System began about 4.6 billion years ago with the gravitational collapse of a small part of a giant molecular cloud. Most of the collapsing mass collected in the center, forming the Sun, while the rest flattened into a protoplanetary disk out of which the planets, moons, asteroids, and other small Solar System bodies formed.

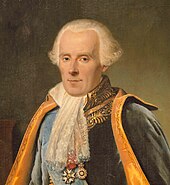

This model, known as the nebular hypothesis, was first developed in the 18th century by Emanuel Swedenborg, Immanuel Kant, and Pierre-Simon Laplace. Its subsequent development has interwoven a variety of scientific disciplines including astronomy, chemistry, geology, physics, and planetary science. Since the dawn of the space age in the 1950s and the discovery of extrasolar planets in the 1990s, the model has been both challenged and refined to account for new observations.

The Solar System has evolved considerably since its initial formation. Many moons have formed from circling discs of gas and dust around their parent planets, while other moons are thought to have formed independently and later to have been captured by their planets. Still others, such as Earth's Moon, may be the result of giant collisions. Collisions between bodies have occurred continually up to the present day and have been central to the evolution of the Solar System. The positions of the planets might have shifted due to gravitational interactions. This planetary migration is now thought to have been responsible for much of the Solar System's early evolution.

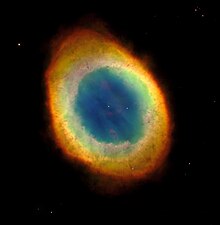

In roughly 5 billion years, the Sun will cool and expand outward to many times its current diameter (becoming a red giant), before casting off its outer layers as a planetary nebula and leaving behind a stellar remnant known as a white dwarf. In the far distant future, the gravity of passing stars will gradually reduce the Sun's retinue of planets. Some planets will be destroyed, others ejected into interstellar space. Ultimately, over the course of tens of billions of years, it is likely that the Sun will be left with none of the original bodies in orbit around it.

History

Ideas concerning the origin and fate of the world date from the earliest known writings; however, for almost all of that time, there was no attempt to link such theories to the existence of a "Solar System", simply because it was not generally thought that the Solar System, in the sense we now understand it, existed. The first step toward a theory of Solar System formation and evolution was the general acceptance of heliocentrism, which placed the Sun at the centre of the system and the Earth in orbit around it. This concept had developed for millennia (Aristarchus of Samos had suggested it as early as 250 BC), but was not widely accepted until the end of the 17th century. The first recorded use of the term "Solar System" dates from 1704.

The current standard theory for Solar System formation, the nebular hypothesis, has fallen into and out of favour since its formulation by Emanuel Swedenborg, Immanuel Kant, and Pierre-Simon Laplace in the 18th century. The most significant criticism of the hypothesis was its apparent inability to explain the Sun's relative lack of angular momentum when compared to the planets. However, since the early 1980s studies of young stars have shown them to be surrounded by cool discs of dust and gas, exactly as the nebular hypothesis predicts, which has led to its re-acceptance.

Understanding of how the Sun is expected to continue to evolve required an understanding of the source of its power. Arthur Stanley Eddington's confirmation of Albert Einstein's theory of relativity led to his realisation that the Sun's energy comes from nuclear fusion reactions in its core, fusing hydrogen into helium. In 1935, Eddington went further and suggested that other elements also might form within stars. Fred Hoyle elaborated on this premise by arguing that evolved stars called red giants created many elements heavier than hydrogen and helium in their cores. When a red giant finally casts off its outer layers, these elements would then be recycled to form other star systems.

Formation

Presolar nebula

The nebular hypothesis says that the Solar System formed from the gravitational collapse of a fragment of a giant molecular cloud. The cloud was about 20 parsecs (65 light-years) across, while the fragments were roughly 1 parsec (three and a quarter light-years) across. The further collapse of the fragments led to the formation of dense cores 0.01–0.1 parsecs (2,000–20,000 AU) in size. One of these collapsing fragments (known as the presolar nebula) formed what became the Solar System. The composition of this region, with a mass just over that of the Sun (M☉), was about the same as that of the Sun today, with hydrogen, along with helium and trace amounts of lithium produced by Big Bang nucleosynthesis, forming about 98% of its mass. The remaining 2% of the mass consisted of heavier elements that were created by nucleosynthesis in earlier generations of stars. Late in their lives, these stars ejected the heavier elements into the interstellar medium.
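The size figures quoted above can be cross-checked with a short unit conversion. This is a minimal sketch: the conversion factors (1 parsec ≈ 3.2616 light-years ≈ 206,265 AU) are standard values rather than numbers taken from this article, and the function names are illustrative.

```python
# Standard conversion factors (assumed; not stated in the article itself).
LY_PER_PARSEC = 3.2616      # light-years in one parsec
AU_PER_PARSEC = 206_265     # astronomical units in one parsec


def parsec_to_ly(parsecs: float) -> float:
    """Convert a length in parsecs to light-years."""
    return parsecs * LY_PER_PARSEC


def parsec_to_au(parsecs: float) -> float:
    """Convert a length in parsecs to astronomical units."""
    return parsecs * AU_PER_PARSEC


if __name__ == "__main__":
    print(f"cloud:    20 pc   = {parsec_to_ly(20):.0f} ly")
    print(f"fragment: 1 pc    = {parsec_to_ly(1):.2f} ly")
    print(f"core:     0.01 pc = {parsec_to_au(0.01):,.0f} AU")
    print(f"core:     0.1 pc  = {parsec_to_au(0.1):,.0f} AU")
```

The results agree with the rounded values in the text: about 65 light-years for the cloud, and roughly 2,000 to 20,000 AU for the dense cores.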

The oldest inclusions found in meteorites, thought to trace the first solid material to form in the presolar nebula, are 4568.2 million years old, which is one definition of the age of the Solar System. Studies of ancient meteorites reveal traces of stable daughter nuclei of short-lived isotopes, such as iron-60, that only form in exploding, short-lived stars. This indicates that one or more supernovae occurred nearby. A shock wave from a supernova may have triggered the formation of the Sun by creating relatively dense regions within the cloud, causing these regions to collapse. Because only massive, short-lived stars produce supernovae, the Sun must have formed in a large star-forming region that produced massive stars, possibly similar to the Orion Nebula. Studies of the structure of the Kuiper belt and of anomalous materials within it suggest that the Sun formed within a cluster of between 1,000 and 10,000 stars with a diameter of between 6.5 and 19.5 light years and a collective mass of 3,000 M☉. This cluster began to break apart between 135 million and 535 million years after formation. Several simulations of our young Sun interacting with close-passing stars over the first 100 million years of its life produce anomalous orbits observed in the outer Solar System, such as detached objects.

Because of the conservation of angular momentum, the nebula spun faster as it collapsed. As the material within the nebula condensed, the atoms within it began to collide with increasing frequency, converting their kinetic energy into heat. The center, where most of the mass collected, became increasingly hotter than the surrounding disc. Over about 100,000 years, the competing forces of gravity, gas pressure, magnetic fields, and rotation caused the contracting nebula to flatten into a spinning protoplanetary disc with a diameter of about 200 AU and form a hot, dense protostar (a star in which hydrogen fusion has not yet begun) at the centre.
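The spin-up described above follows from a simple scaling. As a rough sketch, assume a parcel of gas of fixed mass keeps its angular momentum L = m·r²·ω while its distance r from the rotation axis shrinks; its angular velocity ω then scales as 1/r². The function name is illustrative, and the model ignores the magnetic fields and gas pressure that the text notes also shape the collapse.

```python
def spin_up_factor(initial_radius: float, final_radius: float) -> float:
    """Factor by which angular velocity grows when a parcel contracts.

    Assumes the parcel's angular momentum L = m * r**2 * omega is conserved
    and its mass is unchanged, so omega scales as 1 / r**2.
    Both radii must be given in the same unit.
    """
    if initial_radius <= 0 or final_radius <= 0:
        raise ValueError("radii must be positive")
    return (initial_radius / final_radius) ** 2


if __name__ == "__main__":
    # Illustrative contraction ratios only; not values from the article.
    for shrink in (2.0, 10.0, 100.0):
        factor = spin_up_factor(shrink, 1.0)
        print(f"radius shrinks {shrink:.0f}x -> spins {factor:.0f}x faster")
```

Under this idealisation, halving the radius quadruples the spin rate, which is why even a slowly rotating cloud ends up as a rapidly rotating disc.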

At this point in its evolution, the Sun is thought to have been a T Tauri star. Studies of T Tauri stars show that they are often accompanied by discs of pre-planetary matter with masses of 0.001–0.1 M☉. These discs extend to several hundred AU—the Hubble Space Telescope has observed protoplanetary discs of up to 1000 AU in diameter in star-forming regions such as the Orion Nebula—and are rather cool, reaching a surface temperature of only about 1,000 K (730 °C; 1,340 °F) at their hottest. Within 50 million years, the temperature and pressure at the core of the Sun became so great that its hydrogen began to fuse, creating an internal source of energy that countered gravitational contraction until hydrostatic equilibrium was achieved. This marked the Sun's entry into the prime phase of its life, known as the main sequence. Main-sequence stars derive energy from the fusion of hydrogen into helium in their cores. The Sun remains a main-sequence star today. As the early Solar System continued to evolve, it eventually drifted away from its siblings in the stellar nursery, and continued orbiting the Milky Way's center on its own.

Formation of the planets

The various planets are thought to have formed from the solar nebula, the disc-shaped cloud of gas and dust left over from the Sun's formation. The currently accepted method by which the planets formed is accretion, in which the planets began as dust grains in orbit around the central protostar. Through direct contact and self-organization, these grains formed into clumps up to 200 m (660 ft) in diameter, which in turn collided to form larger bodies (planetesimals) of ~10 km (6.2 mi) in size. These gradually increased through further collisions, growing at the rate of centimetres per year over the course of the next few million years.

The inner Solar System, the region of the Solar System inside 4 AU, was too warm for volatile molecules like water and methane to condense, so the planetesimals that formed there could only form from compounds with high melting points, such as metals (like iron, nickel, and aluminium) and rocky silicates. These rocky bodies would become the terrestrial planets (Mercury, Venus, Earth, and Mars). These compounds are quite rare in the Universe, comprising only 0.6% of the mass of the nebula, so the terrestrial planets could not grow very large. The terrestrial embryos grew to about 0.05 Earth masses (M⊕) and ceased accumulating matter about 100,000 years after the formation of the Sun; subsequent collisions and mergers between these planet-sized bodies allowed terrestrial planets to grow to their present sizes (see Terrestrial planets below).

When the terrestrial planets were forming, they remained immersed in a disk of gas and dust. The gas was partially supported by pressure and so did not orbit the Sun as rapidly as the planets. The resulting drag and, more importantly, gravitational interactions with the surrounding material caused a transfer of angular momentum, and as a result the planets gradually migrated to new orbits. Models show that density and temperature variations in the disk governed this rate of migration, but the net trend was for the inner planets to migrate inward as the disk dissipated, leaving the planets in their current orbits.

The giant planets (Jupiter, Saturn, Uranus, and Neptune) formed further out, beyond the frost line, which is the point between the orbits of Mars and Jupiter where the material is cool enough for volatile icy compounds to remain solid. The ices that formed the Jovian planets were more abundant than the metals and silicates that formed the terrestrial planets, allowing the giant planets to grow massive enough to capture hydrogen and helium, the lightest and most abundant elements. Planetesimals beyond the frost line accumulated up to 4 M⊕ within about 3 million years. Today, the four giant planets comprise just under 99% of all the mass orbiting the Sun. Theorists believe it is no accident that Jupiter lies just beyond the frost line. Because the frost line accumulated large amounts of water via evaporation from infalling icy material, it created a region of lower pressure that increased the speed of orbiting dust particles and halted their motion toward the Sun. In effect, the frost line acted as a barrier that caused material to accumulate rapidly at ~5 AU from the Sun. This excess material coalesced into a large embryo (or core) on the order of 10 M⊕, which began to accumulate an envelope via accretion of gas from the surrounding disc at an ever-increasing rate. Once the envelope mass became about equal to the solid core mass, growth proceeded very rapidly, reaching about 150 Earth masses ~10⁵ years thereafter and finally topping out at 318 M⊕. Saturn may owe its substantially lower mass simply to having formed a few million years after Jupiter, when there was less gas available to consume.

T Tauri stars like the young Sun have far stronger stellar winds than more stable, older stars. Uranus and Neptune are thought to have formed after Jupiter and Saturn did, when the strong solar wind had blown away much of the disc material. As a result, those planets accumulated little hydrogen and helium—not more than 1 M⊕ each. Uranus and Neptune are sometimes referred to as failed cores. The main problem with formation theories for these planets is the timescale of their formation. At the current locations it would have taken millions of years for their cores to accrete. This means that Uranus and Neptune may have formed closer to the Sun—near or even between Jupiter and Saturn—and later migrated or were ejected outward (see Planetary migration below). Motion in the planetesimal era was not all inward toward the Sun; the Stardust sample return from Comet Wild 2 has suggested that materials from the early formation of the Solar System migrated from the warmer inner Solar System to the region of the Kuiper belt.

After between three and ten million years, the young Sun's solar wind would have cleared away all the gas and dust in the protoplanetary disc, blowing it into interstellar space, thus ending the growth of the planets.

Subsequent evolution

The planets were originally thought to have formed in or near their current orbits. This has been questioned during the last 20 years. Currently, many planetary scientists think that the Solar System might have looked very different after its initial formation: several objects at least as massive as Mercury were present in the inner Solar System, the outer Solar System was much more compact than it is now, and the Kuiper belt was much closer to the Sun.

Terrestrial planets

At the end of the planetary formation epoch the inner Solar System was populated by 50–100 Moon- to Mars-sized planetary embryos. Further growth was possible only because these bodies collided and merged, which took less than 100 million years. These objects would have gravitationally interacted with one another, tugging at each other's orbits until they collided, growing larger until the four terrestrial planets we know today took shape. One such giant collision is thought to have formed the Moon (see Moons below), while another removed the outer envelope of the young Mercury.

One unresolved issue with this model is that it cannot explain how the initial orbits of the proto-terrestrial planets, which would have needed to be highly eccentric to collide, produced the remarkably stable and nearly circular orbits they have today. One hypothesis for this "eccentricity dumping" is that the terrestrials formed in a disc of gas still not expelled by the Sun. The "gravitational drag" of this residual gas would have eventually lowered the planets' energy, smoothing out their orbits. However, such gas, if it existed, would have prevented the terrestrial planets' orbits from becoming so eccentric in the first place. Another hypothesis is that gravitational drag occurred not between the planets and residual gas but between the planets and the remaining small bodies. As the large bodies moved through the crowd of smaller objects, the smaller objects, attracted by the larger planets' gravity, formed a region of higher density, a "gravitational wake", in the larger objects' path. As they did so, the increased gravity of the wake slowed the larger objects down into more regular orbits.

Asteroid belt

The outer edge of the terrestrial region, between 2 and 4 AU from the Sun, is called the asteroid belt. The asteroid belt initially contained more than enough matter to form 2–3 Earth-like planets, and, indeed, a large number of planetesimals formed there. As with the terrestrials, planetesimals in this region later coalesced and formed 20–30 Moon- to Mars-sized planetary embryos; however, the proximity of Jupiter meant that after this planet formed, 3 million years after the Sun, the region's history changed dramatically. Orbital resonances with Jupiter and Saturn are particularly strong in the asteroid belt, and gravitational interactions with more massive embryos scattered many planetesimals into those resonances. Jupiter's gravity increased the velocity of objects within these resonances, causing them to shatter upon collision with other bodies, rather than accrete.

As Jupiter migrated inward following its formation (see Planetary migration below), resonances would have swept across the asteroid belt, dynamically exciting the region's population and increasing their velocities relative to each other. The cumulative action of the resonances and the embryos either scattered the planetesimals away from the asteroid belt or excited their orbital inclinations and eccentricities. Some of those massive embryos too were ejected by Jupiter, while others may have migrated to the inner Solar System and played a role in the final accretion of the terrestrial planets. During this primary depletion period, the effects of the giant planets and planetary embryos left the asteroid belt with a total mass equivalent to less than 1% that of the Earth, composed mainly of small planetesimals. This is still 10–20 times more than the current mass in the main belt, which is now about 0.0005 M⊕. A secondary depletion period that brought the asteroid belt down close to its present mass is thought to have followed when Jupiter and Saturn entered a temporary 2:1 orbital resonance (see below).

The inner Solar System's period of giant impacts probably played a role in the Earth acquiring its current water content (~6×10²¹ kg) from the early asteroid belt. Water is too volatile to have been present at Earth's formation and must have been subsequently delivered from outer, colder parts of the Solar System. The water was probably delivered by planetary embryos and small planetesimals thrown out of the asteroid belt by Jupiter. A population of main-belt comets discovered in 2006 has also been suggested as a possible source for Earth's water. In contrast, comets from the Kuiper belt or farther regions delivered not more than about 6% of Earth's water. The panspermia hypothesis holds that life itself may have been deposited on Earth in this way, although this idea is not widely accepted.

Planetary migration

According to the nebular hypothesis, the outer two planets may be in the "wrong place". Uranus and Neptune (known as the "ice giants") exist in a region where the reduced density of the solar nebula and longer orbital times render their formation there highly implausible. The two are instead thought to have formed in orbits near Jupiter and Saturn (known as the "gas giants"), where more material was available, and to have migrated outward to their current positions over hundreds of millions of years.

Figure panels: (a) before the Jupiter/Saturn 2:1 resonance; (b) scattering of Kuiper belt objects into the Solar System after the orbital shift of Neptune; (c) after ejection of Kuiper belt bodies by Jupiter.

The migration of the outer planets is also necessary to account for the existence and properties of the Solar System's outermost regions. Beyond Neptune, the Solar System continues into the Kuiper belt, the scattered disc, and the Oort cloud, three sparse populations of small icy bodies thought to be the points of origin for most observed comets. At their distance from the Sun, accretion was too slow to allow planets to form before the solar nebula dispersed, and thus the initial disc lacked enough mass density to consolidate into a planet. The Kuiper belt lies between 30 and 55 AU from the Sun, while the farther scattered disc extends to over 100 AU, and the distant Oort cloud begins at about 50,000 AU. Originally, however, the Kuiper belt was much denser and closer to the Sun, with an outer edge at approximately 30 AU. Its inner edge would have been just beyond the orbits of Uranus and Neptune, which were in turn far closer to the Sun when they formed (most likely in the range of 15–20 AU), and in 50% of simulations ended up in opposite locations, with Uranus farther from the Sun than Neptune.

According to the Nice model, after the formation of the Solar System, the orbits of all the giant planets continued to change slowly, influenced by their interaction with the large number of remaining planetesimals. After 500–600 million years (about 4 billion years ago) Jupiter and Saturn fell into a 2:1 resonance: Saturn orbited the Sun once for every two Jupiter orbits. This resonance created a gravitational push against the outer planets, possibly causing Neptune to surge past Uranus and plough into the ancient Kuiper belt. The planets scattered the majority of the small icy bodies inwards, while themselves moving outwards. These planetesimals then scattered off the next planet they encountered in a similar manner, moving the planets' orbits outwards while they moved inwards. This process continued until the planetesimals interacted with Jupiter, whose immense gravity sent them into highly elliptical orbits or even ejected them outright from the Solar System. This caused Jupiter to move slightly inward. Those objects scattered by Jupiter into highly elliptical orbits formed the Oort cloud; those objects scattered to a lesser degree by the migrating Neptune formed the current Kuiper belt and scattered disc. This scenario explains the Kuiper belt's and scattered disc's present low mass. Some of the scattered objects, including Pluto, became gravitationally tied to Neptune's orbit, forcing them into mean-motion resonances. Eventually, friction within the planetesimal disc made the orbits of Uranus and Neptune circular again.
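The 2:1 resonance mentioned above fixes the spacing of the two orbits through Kepler's third law (P² ∝ a³ for bodies orbiting the same star). The sketch below converts between period ratios and semi-major-axis ratios; the function names are illustrative, and the present-day semi-major axes used in the demonstration are approximate values assumed here, not figures from this article.

```python
def axis_ratio_from_period_ratio(period_ratio: float) -> float:
    """Semi-major-axis ratio (outer / inner) implied by a period ratio.

    Uses Kepler's third law, P**2 proportional to a**3, for two bodies
    orbiting the same star: a_outer / a_inner = (P_outer / P_inner) ** (2/3).
    """
    if period_ratio <= 0:
        raise ValueError("period ratio must be positive")
    return period_ratio ** (2.0 / 3.0)


def period_ratio_from_axis_ratio(axis_ratio: float) -> float:
    """Inverse relation: P_outer / P_inner = (a_outer / a_inner) ** 1.5."""
    if axis_ratio <= 0:
        raise ValueError("axis ratio must be positive")
    return axis_ratio ** 1.5


if __name__ == "__main__":
    ratio = axis_ratio_from_period_ratio(2.0)
    print(f"2:1 resonance -> Saturn at about {ratio:.3f} x Jupiter's distance")

    # Approximate present-day semi-major axes in AU (assumed values).
    jupiter_au, saturn_au = 5.20, 9.58
    today = period_ratio_from_axis_ratio(saturn_au / jupiter_au)
    print(f"present-day period ratio: about {today:.2f}")
```

In a 2:1 resonance Saturn would orbit at roughly 1.59 times Jupiter's distance. With the assumed present-day axes the period ratio comes out near 2.5, consistent with the planets having moved on from that resonance.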

In contrast to the outer planets, the inner planets are not thought to have migrated significantly over the age of the Solar System, because their orbits have remained stable following the period of giant impacts.

Another question is why Mars came out so small compared with Earth. A study by Southwest Research Institute, San Antonio, Texas, published June 6, 2011 (called the Grand tack hypothesis), proposes that Jupiter had migrated inward to 1.5 AU. After Saturn formed, migrated inward, and established the 2:3 mean motion resonance with Jupiter, the study assumes that both planets migrated back to their present positions. Jupiter thus would have consumed much of the material that would have created a bigger Mars. The same simulations also reproduce the characteristics of the modern asteroid belt, with dry asteroids and water-rich objects similar to comets. However, it is unclear whether conditions in the solar nebula would have allowed Jupiter and Saturn to move back to their current positions, and according to current estimates this possibility appears unlikely. Moreover, alternative explanations for the small mass of Mars exist.

Late Heavy Bombardment and after

Gravitational disruption from the outer planets' migration would have sent large numbers of asteroids into the inner Solar System, severely depleting the original belt until it reached today's extremely low mass. This event may have triggered the Late Heavy Bombardment that occurred approximately 4 billion years ago, 500–600 million years after the formation of the Solar System. This period of heavy bombardment lasted several hundred million years and is evident in the cratering still visible on geologically dead bodies of the inner Solar System such as the Moon and Mercury. The oldest known evidence for life on Earth dates to 3.8 billion years ago—almost immediately after the end of the Late Heavy Bombardment.

Impacts are thought to be a regular (if currently infrequent) part of the evolution of the Solar System. That they continue to happen is evidenced by the collision of Comet Shoemaker–Levy 9 with Jupiter in 1994, the 2009 Jupiter impact event, the Tunguska event, the Chelyabinsk meteor and the impact that created Meteor Crater in Arizona. The process of accretion, therefore, is not complete, and may still pose a threat to life on Earth.

Over the course of the Solar System's evolution, comets were ejected out of the inner Solar System by the gravity of the giant planets, and sent thousands of AU outward to form the Oort cloud, a spherical outer swarm of cometary nuclei at the farthest extent of the Sun's gravitational pull. Eventually, after about 800 million years, the gravitational disruption caused by galactic tides, passing stars and giant molecular clouds began to deplete the cloud, sending comets into the inner Solar System. The evolution of the outer Solar System also appears to have been influenced by space weathering from the solar wind, micrometeorites, and the neutral components of the interstellar medium.

The evolution of the asteroid belt after Late Heavy Bombardment was mainly governed by collisions. Objects with large mass have enough gravity to retain any material ejected by a violent collision. In the asteroid belt this usually is not the case. As a result, many larger objects have been broken apart, and sometimes newer objects have been forged from the remnants in less violent collisions. Moons around some asteroids currently can only be explained as consolidations of material flung away from the parent object without enough energy to entirely escape its gravity.

Moons

Moons have come to exist around most planets and many other Solar System bodies. These natural satellites originated by one of three possible mechanisms:

- Co-formation from a circumplanetary disc (only in the cases of the giant planets);

- Formation from impact debris (given a large enough impact at a shallow angle); and

- Capture of a passing object.

Jupiter and Saturn have several large moons, such as Io, Europa, Ganymede and Titan, which may have originated from discs around each giant planet in much the same way that the planets formed from the disc around the Sun. This origin is indicated by the large sizes of the moons and their proximity to the planet. These attributes are impossible to achieve via capture, while the gaseous nature of the primaries also makes formation from collision debris unlikely. The outer moons of the giant planets tend to be small and have eccentric orbits with arbitrary inclinations. These are the characteristics expected of captured bodies. Most such moons orbit in the direction opposite the rotation of their primary. The largest irregular moon is Neptune's moon Triton, which is thought to be a captured Kuiper belt object.

Moons of solid Solar System bodies have been created by both collisions and capture. Mars's two small moons, Deimos and Phobos, are thought to be captured asteroids. The Earth's Moon is thought to have formed as a result of a single, large head-on collision. The impacting object probably had a mass comparable to that of Mars, and the impact probably occurred near the end of the period of giant impacts. The collision kicked into orbit some of the impactor's mantle, which then coalesced into the Moon. The impact was probably the last in the series of mergers that formed the Earth. It has been further hypothesized that the Mars-sized object may have formed at one of the stable Earth–Sun Lagrangian points (either L4 or L5) and drifted from its position. The moons of trans-Neptunian objects Pluto (Charon) and Orcus (Vanth) may also have formed by means of a large collision: the Pluto–Charon, Orcus–Vanth and Earth–Moon systems are unusual in the Solar System in that the satellite's mass is at least 1% that of the larger body.

Future

Astronomers estimate that the current state of the Solar System will not change drastically until the Sun has fused almost all the hydrogen fuel in its core into helium, beginning its evolution from the main sequence of the Hertzsprung–Russell diagram and into its red-giant phase. The Solar System will continue to evolve until then.

Long-term stability

The Solar System is chaotic over million- and billion-year timescales, with the orbits of the planets open to long-term variations. One notable example of this chaos is the Neptune–Pluto system, which lies in a 3:2 orbital resonance. Although the resonance itself will remain stable, it becomes impossible to predict the position of Pluto with any degree of accuracy more than 10–20 million years (the Lyapunov time) into the future. Another example is Earth's axial tilt, which, due to friction raised within Earth's mantle by tidal interactions with the Moon, becomes incomputable at some point between 1.5 and 4.5 billion years from now.

The outer planets' orbits are chaotic over longer timescales, with a Lyapunov time in the range of 2–230 million years. In all cases this means that the position of a planet along its orbit ultimately becomes impossible to predict with any certainty (so, for example, the timing of winter and summer becomes uncertain), but in some cases the orbits themselves may change dramatically. Such chaos manifests most strongly as changes in eccentricity, with some planets' orbits becoming significantly more, or less, elliptical.
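A Lyapunov time is the timescale over which a small uncertainty in a chaotic system grows by a factor of e. The following sketch assumes purely exponential divergence, which is an idealisation, and shows how quickly an initial error is amplified. The function names and the 100-million-year horizon are illustrative choices, not values from the text.

```python
import math


def error_growth_factor(elapsed: float, lyapunov_time: float) -> float:
    """Factor by which an initial uncertainty grows after `elapsed` time.

    Assumes idealised exponential divergence: delta(t) = delta_0 * exp(t / tau).
    Both arguments must use the same time unit.
    """
    if lyapunov_time <= 0:
        raise ValueError("Lyapunov time must be positive")
    return math.exp(elapsed / lyapunov_time)


def time_to_grow(factor: float, lyapunov_time: float) -> float:
    """Time needed for an uncertainty to grow by `factor` (factor >= 1)."""
    if factor < 1 or lyapunov_time <= 0:
        raise ValueError("factor must be >= 1 and Lyapunov time positive")
    return lyapunov_time * math.log(factor)


if __name__ == "__main__":
    horizon_myr = 100.0  # illustrative look-ahead, in millions of years
    for tau_myr in (10.0, 20.0):  # the range quoted for Pluto
        growth = error_growth_factor(horizon_myr, tau_myr)
        print(f"tau = {tau_myr:.0f} Myr: error grows about {growth:,.0f}x "
              f"over {horizon_myr:.0f} Myr")
```

Because the growth is exponential, improving the initial measurement by a large factor extends the prediction horizon only by a modest number of Lyapunov times.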

Ultimately, the Solar System is stable in that none of the planets are likely to collide with each other or be ejected from the system in the next few billion years. Beyond this, within five billion years or so Mars's eccentricity may grow to around 0.2, such that it lies on an Earth-crossing orbit, leading to a potential collision. In the same timescale, Mercury's eccentricity may grow even further, and a close encounter with Venus could theoretically eject it from the Solar System altogether or send it on a collision course with Venus or Earth. This could happen within a billion years, according to numerical simulations in which Mercury's orbit is perturbed.

Moon–ring systems

The evolution of moon systems is driven by tidal forces. A moon will raise a tidal bulge in the object it orbits (the primary) due to the differential gravitational force across the diameter of the primary. If a moon is revolving in the same direction as the planet's rotation and the planet's rotation period is shorter than the moon's orbital period, the bulge will constantly be pulled ahead of the moon. In this situation, angular momentum is transferred from the rotation of the primary to the revolution of the satellite. The moon gains energy and gradually spirals outward, while the primary rotates more slowly over time.

The Earth and its Moon are one example of this configuration. Today, the Moon is tidally locked to the Earth; one of its revolutions around the Earth (currently about 29 days) is equal to one of its rotations about its axis, so it always shows one face to the Earth. The Moon will continue to recede from Earth, and Earth's spin will continue to slow gradually. Other examples are the Galilean moons of Jupiter (as well as many of Jupiter's smaller moons) and most of the larger moons of Saturn.

A different scenario occurs when the moon is either revolving around the primary faster than the primary rotates, or is revolving in the direction opposite the planet's rotation. In these cases, the tidal bulge lags behind the moon in its orbit. In the former case, the direction of angular momentum transfer is reversed, so the rotation of the primary speeds up while the satellite's orbit shrinks. In the latter case, the angular momenta of the rotation and revolution have opposite signs, so the transfer reduces the magnitude of each (the two reductions cancel each other out). In both cases, tidal deceleration causes the moon to spiral in towards the primary until it either is torn apart by tidal stresses, potentially creating a planetary ring system, or crashes into the planet's surface or atmosphere. Such a fate awaits the moons Phobos of Mars (within 30 to 50 million years), Triton of Neptune (in 3.6 billion years), and at least 16 small satellites of Uranus and Neptune. Uranus's Desdemona may even collide with one of its neighboring moons.

A third possibility is where the primary and moon are tidally locked to each other. In that case, the tidal bulge stays directly under the moon, there is no transfer of angular momentum, and the orbital period will not change. Pluto and Charon are an example of this type of configuration.
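The three tidal scenarios described above reduce to a small decision rule based on the moon's orbital period, the primary's rotation period, and the direction of the orbit. The sketch below encodes only that qualitative rule. The names `TidalOutcome` and `tidal_outcome` are hypothetical, and the periods used in the examples are approximate values in days assumed for illustration rather than figures from this article.

```python
import math
from enum import Enum


class TidalOutcome(Enum):
    RECEDES = "moon spirals outward; primary's rotation slows"
    DECAYS = "moon spirals inward toward the primary"
    LOCKED = "mutually locked; orbital period does not change"


def tidal_outcome(orbital_period: float, rotation_period: float,
                  prograde: bool = True, rel_tol: float = 1e-3) -> TidalOutcome:
    """Classify a moon's long-term tidal evolution (qualitative rule only).

    orbital_period  -- moon's orbital period around the primary
    rotation_period -- primary's rotation period (same unit)
    prograde        -- True if the moon orbits in the primary's spin direction
    """
    if orbital_period <= 0 or rotation_period <= 0:
        raise ValueError("periods must be positive")
    if not prograde:
        return TidalOutcome.DECAYS      # retrograde orbit: bulge lags
    if math.isclose(orbital_period, rotation_period, rel_tol=rel_tol):
        return TidalOutcome.LOCKED      # bulge stays directly under the moon
    if rotation_period < orbital_period:
        return TidalOutcome.RECEDES     # primary spins faster: bulge leads
    return TidalOutcome.DECAYS          # moon orbits faster: bulge lags


if __name__ == "__main__":
    # (name, orbital period, primary rotation period, prograde?), in days.
    examples = [
        ("Moon / Earth", 27.3, 1.0, True),
        ("Phobos / Mars", 0.32, 1.03, True),
        ("Triton / Neptune", 5.88, 0.67, False),
        ("Charon / Pluto", 6.39, 6.39, True),
    ]
    for name, p_orbit, p_spin, prograde in examples:
        print(f"{name}: {tidal_outcome(p_orbit, p_spin, prograde).value}")
```

The rule says nothing about rates: how quickly an orbit expands or decays depends on the masses, distances, and internal dissipation of the bodies, which this sketch does not model.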

There is no consensus as to the mechanism of formation of the rings of Saturn. Although theoretical models indicated that the rings were likely to have formed early in the Solar System's history, data from the Cassini–Huygens spacecraft suggest they formed relatively late.

The Sun and planetary environments

In the long term, the greatest changes in the Solar System will come from changes in the Sun itself as it ages. As the Sun burns through its supply of hydrogen fuel, it gets hotter and burns the remaining fuel even faster. As a result, the Sun is growing brighter at a rate of ten percent every 1.1 billion years. In about 600 million years, the Sun's brightness will have disrupted the Earth's carbon cycle to the point where trees and forests (C3 photosynthetic plant life) will no longer be able to survive, and in around 800 million years, the Sun will have killed all complex life on the Earth's surface and in the oceans. In 1.1 billion years' time, the Sun's increased radiation output will cause its circumstellar habitable zone to move outwards, making the Earth's surface too hot for liquid water to exist there naturally. At this point, all life will be reduced to single-celled organisms. Evaporation of water, a potent greenhouse gas, from the oceans' surface could accelerate the temperature increase, potentially ending all life on Earth even sooner. During this time, it is possible that as Mars's surface temperature gradually rises, carbon dioxide and water currently frozen under the surface regolith will be released into the atmosphere, creating a greenhouse effect that will heat the planet until it reaches conditions comparable to those on Earth today, providing a potential future abode for life. By 3.5 billion years from now, Earth's surface conditions will be similar to those of Venus today.
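To give a feel for the brightening rate quoted above, the sketch below evaluates the Sun's relative luminosity at the milestones mentioned in the paragraph. It assumes the "ten percent every 1.1 billion years" figure can be treated as a linear trend over this interval; that linearity, and the function name `relative_luminosity`, are assumptions made for illustration only.

```python
def relative_luminosity(gyr_from_now, rate=0.10, interval_gyr=1.1):
    """Sun's luminosity relative to today, under an assumed linear trend."""
    return 1.0 + rate * gyr_from_now / interval_gyr


# Milestones from the paragraph above, in billions of years from now.
for t in (0.6, 0.8, 1.1):
    print(f"{t} Gyr: {relative_luminosity(t):.3f} x present luminosity")
```

Under this assumption the changes that end complex life correspond to an increase of only a few percent in solar output.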

Around 5.4 billion years from now, the core of the Sun will become hot enough to trigger hydrogen fusion in its surrounding shell. This will cause the outer layers of the star to expand greatly, and the star will enter a phase of its life in which it is called a red giant. Within 7.5 billion years, the Sun will have expanded to a radius of 1.2 AU, 256 times its current radius. At the tip of the red-giant branch, as a result of the vastly increased surface area, the Sun's surface will be much cooler (about 2,600 K) than now and its luminosity much higher, up to 2,700 current solar luminosities. For part of its red-giant life, the Sun will have a strong stellar wind that will carry away around 33% of its mass. During these times, it is possible that Saturn's moon Titan could achieve surface temperatures necessary to support life.
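The radius, temperature, and luminosity figures above are linked by the Stefan–Boltzmann law, under which luminosity scales as radius squared times effective temperature to the fourth power. The following sketch is a rough consistency check, assuming the Sun radiates as a black body and using nominal present-day solar values (the constants below are assumptions not given in the text).

```python
AU_KM = 149_597_870.7   # astronomical unit in km
R_SUN_KM = 695_700.0    # nominal present-day solar radius in km (assumed)
T_SUN_K = 5772.0        # nominal present-day effective temperature in K (assumed)


def radius_in_solar_radii(radius_au):
    """Convert a radius in AU to multiples of the present solar radius."""
    return radius_au * AU_KM / R_SUN_KM


def luminosity_ratio(radius_ratio, temperature_k):
    """L / L_sun from the Stefan-Boltzmann scaling L ~ R^2 * T^4."""
    return radius_ratio ** 2 * (temperature_k / T_SUN_K) ** 4


# 1.2 AU expressed in solar radii: close to the quoted factor of 256.
print(radius_in_solar_radii(1.2))

# 256 solar radii at 2,600 K: close to the quoted 2,700 solar luminosities.
print(luminosity_ratio(256, 2600))
```

A surface less than half as hot as today's radiates far less per unit area, but the roughly 65,000-fold increase in surface area more than compensates.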

As the Sun expands, it will swallow the planets Mercury and Venus. Earth's fate is less clear; although the Sun will envelop Earth's current orbit, the star's loss of mass (and thus weaker gravity) will cause the planets' orbits to move farther out. If it were only for this, Venus and Earth would probably escape incineration, but a 2008 study suggests that Earth will likely be swallowed up as a result of tidal interactions with the Sun's weakly bound outer envelope.

After the expansion phase, the habitable zone will shift deeper into the outer Solar System and the Kuiper belt. Surface temperatures on Pluto and Charon will then reach about 0 °C, high enough for water ice to sublimate into vapor (at low atmospheric pressures, water ice sublimates rather than melts). By that time, Pluto will already have lost its methane shell to sublimation. Pluto is too small to retain a thick atmosphere, and it lacks a magnetic field to shield that atmosphere from high-energy ions, particularly as solar activity increases drastically when the Sun dies. Pluto and Charon will lose their diffuse water atmospheres to space, leaving exposed rocky cores. Both will lose 30–40% of their mass as a result.

Gradually, the hydrogen burning in the shell around the solar core will increase the mass of the core until it reaches about 45% of the present solar mass. At this point the density and temperature will become so high that the fusion of helium into carbon will begin, leading to a helium flash; the Sun will shrink from around 250 to 11 times its present (main-sequence) radius. Consequently, its luminosity will decrease from around 3,000 to 54 times its current level, and its surface temperature will increase to about 4,770 K. The Sun will become a horizontal-branch star, burning helium in its core in a stable fashion much as it burns hydrogen today. The helium-fusing stage will last only 100 million years. Eventually, it will have to again resort to the reserves of hydrogen and helium in its outer layers and will expand a second time, becoming what is known as an asymptotic-giant-branch star. Here the luminosity of the Sun will increase again, reaching about 2,090 present luminosities, and it will cool to about 3,500 K. This phase lasts about 30 million years, after which, over the course of a further 100,000 years, the Sun's remaining outer layers will fall away, ejecting a vast stream of matter into space and forming a halo known (misleadingly) as a planetary nebula. The ejected material will contain the helium and carbon produced by the Sun's nuclear reactions, continuing the enrichment of the interstellar medium with heavy elements for future generations of stars.

This is a relatively peaceful event, nothing akin to a supernova, which the Sun is too small to undergo as part of its evolution. Any observer present to witness this occurrence would see a massive increase in the speed of the solar wind, but not enough to destroy a planet completely. However, the star's loss of mass could send the orbits of the surviving planets into chaos, causing some to collide, others to be ejected from the Solar System, and still others to be torn apart by tidal interactions. Afterwards, all that will remain of the Sun is a white dwarf, an extraordinarily dense object with 54% of its original mass but only the size of the Earth. Initially, this white dwarf may be 100 times as luminous as the Sun is now. It will consist entirely of degenerate carbon and oxygen, but will never reach temperatures hot enough to fuse these elements. Thus the white dwarf Sun will gradually cool, growing dimmer and dimmer.

As the Sun dies, its gravitational pull on orbiting bodies such as planets, comets, and asteroids will weaken due to its mass loss. All remaining planets' orbits will expand; if Venus, Earth, and Mars still exist, their orbits will lie roughly at 1.4 AU (210,000,000 km), 1.9 AU (280,000,000 km), and 2.8 AU (420,000,000 km). They and the other remaining planets will become dark, frigid hulks, completely devoid of any form of life. They will continue to orbit their star, their speed slowed by their increased distance from the Sun and the Sun's reduced gravity. Two billion years later, when the Sun has cooled to the 6,000–8,000 K range, the carbon and oxygen in the Sun's core will freeze, with over 90% of its remaining mass assuming a crystalline structure. Eventually, after roughly 1 quadrillion years, the Sun will finally cease to shine altogether, becoming a black dwarf.
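The expanded orbits quoted above can be roughly reproduced from the Sun's mass loss alone. The sketch below assumes the mass is shed slowly and evenly in all directions, in which case the product of orbital radius and stellar mass stays approximately constant; it also assumes a final mass of 54% of the present value and uses present-day semi-major axes. The helper name `expanded_orbit_au` is hypothetical, and tidal drag and planet-planet interactions are ignored.

```python
AU_KM = 149_597_870.7  # astronomical unit in km


def expanded_orbit_au(a_now_au, remaining_mass_fraction=0.54):
    """Orbital radius after slow, isotropic stellar mass loss (a * M constant)."""
    return a_now_au / remaining_mass_fraction


# Present-day semi-major axes in AU (assumed approximate values).
present_orbits = {"Venus": 0.723, "Earth": 1.000, "Mars": 1.524}

for name, a_now in present_orbits.items():
    a_new = expanded_orbit_au(a_now)
    print(f"{name}: {a_new:.2f} AU ({a_new * AU_KM / 1e6:.0f} million km)")
```

The results land within about 0.1 AU of the figures in the text, which suggests the quoted distances follow mainly from this mass-loss scaling.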

Galactic interaction

The Solar System travels alone through the Milky Way in a circular orbit approximately 30,000 light years from the Galactic Centre. Its speed is about 220 km/s. The period required for the Solar System to complete one revolution around the Galactic Centre, the galactic year, is in the range of 220–250 million years. Since its formation, the Solar System has completed roughly 20 such revolutions.
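The galactic year can be estimated from the distance and speed given above by treating the orbit as a perfect circle traversed at constant speed. The sketch below makes exactly that assumption; the function name is hypothetical, and the age of 4.6 billion years used for the revolution count is the figure given elsewhere in this article.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s


def galactic_year_myr(radius_ly, speed_km_s):
    """Orbital period in millions of years for a circular orbit.

    Light covers one light year per year, so a body moving at speed v
    needs (c / v) years to cover one light year.
    """
    circumference_ly = 2 * math.pi * radius_ly
    years = circumference_ly * C_KM_S / speed_km_s
    return years / 1e6


period = galactic_year_myr(30_000, 220)
print(f"Estimated galactic year: {period:.0f} million years")

# Revolutions completed in 4.6 billion years for the quoted 220-250 Myr range.
for p in (220, 250):
    print(f"Period {p} Myr: {4600 / p:.1f} revolutions")
```

With these rounded inputs the estimate comes out slightly above the upper end of the quoted range, which is the kind of discrepancy expected when both the distance and the speed are approximate.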

Various scientists have speculated that the Solar System's path through the galaxy is a factor in the periodicity of mass extinctions observed in the Earth's fossil record. One hypothesis supposes that vertical oscillations made by the Sun as it orbits the Galactic Centre cause it to regularly pass through the galactic plane. When the Sun's orbit takes it outside the galactic disc, the influence of the galactic tide is weaker; as it re-enters the galactic disc, as it does every 20–25 million years, it comes under the influence of the far stronger "disc tides", which, according to mathematical models, increase the flux of Oort cloud comets into the Solar System by a factor of 4, leading to a massive increase in the likelihood of a devastating impact.

However, others argue that the Sun is currently close to the galactic plane, and yet the last great extinction event was 15 million years ago. They conclude that the Sun's vertical position cannot alone explain such periodic extinctions, and propose that extinctions instead occur when the Sun passes through the galaxy's spiral arms. Spiral arms are home not only to larger numbers of molecular clouds, whose gravity may distort the Oort cloud, but also to higher concentrations of bright blue giants, which live for relatively short periods and then explode violently as supernovae.

Galactic collision and planetary disruption

Although the vast majority of galaxies in the Universe are moving away from the Milky Way, the Andromeda Galaxy, the largest member of the Local Group of galaxies, is heading toward it at about 120 km/s. In 4 billion years, Andromeda and the Milky Way will collide, causing both to deform as tidal forces distort their outer arms into vast tidal tails. If this initial disruption occurs, astronomers calculate a 12% chance that the Solar System will be pulled outward into the Milky Way's tidal tail and a 3% chance that it will become gravitationally bound to Andromeda and thus a part of that galaxy. After a further series of glancing blows, during which the likelihood of the Solar System's ejection rises to 30%, the galaxies' supermassive black holes will merge. Eventually, in roughly 6 billion years, the Milky Way and Andromeda will complete their merger into a giant elliptical galaxy. During the merger, if there is enough gas, the increased gravity will force the gas to the centre of the forming elliptical galaxy. This may lead to a short period of intensive star formation called a starburst. In addition, the infalling gas will feed the newly formed black hole, transforming it into an active galactic nucleus. The force of these interactions will likely push the Solar System into the new galaxy's outer halo, leaving it relatively unscathed by the radiation from these collisions.

It is a common misconception that this collision will disrupt the orbits of the planets in the Solar System. Although it is true that the gravity of passing stars can detach planets into interstellar space, distances between stars are so great that the likelihood of the Milky Way–Andromeda collision causing such disruption to any individual star system is negligible. Although the Solar System as a whole could be affected by these events, the Sun and planets are not expected to be disturbed.

However, over time, the cumulative probability of a chance encounter with a star increases, and disruption of the planets becomes all but inevitable. Assuming that the Big Crunch or Big Rip scenarios for the end of the Universe do not occur, calculations suggest that the gravity of passing stars will have completely stripped the dead Sun of its remaining planets within 1 quadrillion (10^15) years. This point marks the end of the Solar System. Although the Sun and planets may survive, the Solar System, in any meaningful sense, will cease to exist.

Chronology

The time frame of the Solar System's formation has been determined using radiometric dating. Scientists estimate that the Solar System is 4.6 billion years old. The oldest known mineral grains on Earth are approximately 4.4 billion years old. Rocks this old are rare, as Earth's surface is constantly being reshaped by erosion, volcanism, and plate tectonics. To estimate the age of the Solar System, scientists use meteorites, which were formed during the early condensation of the solar nebula. Almost all meteorites (see the Canyon Diablo meteorite) are found to have an age of 4.6 billion years, suggesting that the Solar System must be at least this old.
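The principle behind radiometric dating can be illustrated with the basic decay-age relation, in which the age follows from the measured ratio of daughter to parent atoms and the parent's half-life. The sketch below assumes the simplest case: a closed system with no daughter atoms present when the sample formed. Actual meteorite dating relies on more elaborate isochron methods, and the function name and example half-life are illustrative assumptions.

```python
import math


def radiometric_age(daughter_to_parent, half_life):
    """Age from a daughter/parent atom ratio, in the units of half_life.

    Assumes a closed system with no initial daughter atoms:
    D / P = exp(lambda * t) - 1, so t = ln(1 + D / P) / lambda.
    """
    decay_constant = math.log(2) / half_life
    return math.log(1.0 + daughter_to_parent) / decay_constant


# When daughter and parent atoms are equally abundant, exactly one
# half-life has elapsed (here 4.468 billion years, roughly that of U-238).
print(radiometric_age(1.0, 4.468))
```

Because the age depends only logarithmically on the measured ratio, long-lived isotopes with half-lives of billions of years are the ones suited to dating material as old as the Solar System.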

Studies of discs around other stars have also done much to establish a time frame for Solar System formation. Stars between one and three million years old have discs rich in gas, whereas discs around stars more than 10 million years old have little to no gas, suggesting that giant planets within them have ceased forming.