In modern physics, antimatter is defined as matter composed of the antiparticles (or "partners") of the corresponding particles in "ordinary" matter. Minuscule numbers of antiparticles are generated daily at particle accelerators—total production has been only a few nanograms—and in natural processes like cosmic ray collisions and some types of radioactive decay, but only a tiny fraction of these have successfully been bound together in experiments to form anti-atoms. No macroscopic amount of antimatter has ever been assembled due to the extreme cost and difficulty of production and handling.

Theoretically, a particle and its anti-particle (for example, a proton and an antiproton) have the same mass, but opposite electric charge, and other differences in quantum numbers. For example, a proton has positive charge while an antiproton has negative charge.

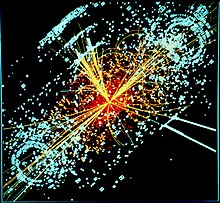

A collision between any particle and its anti-particle partner leads to their mutual annihilation, giving rise to various proportions of intense photons (gamma rays), neutrinos, and sometimes less-massive particle–antiparticle pairs. The majority of the total energy of annihilation emerges in the form of ionizing radiation. If surrounding matter is present, the energy content of this radiation will be absorbed and converted into other forms of energy, such as heat or light. The amount of energy released is usually proportional to the total mass of the collided matter and antimatter, in accordance with the notable mass–energy equivalence equation, $E = mc^2$.

Antimatter particles bind with each other to form antimatter, just as ordinary particles bind to form normal matter. For example, a positron (the antiparticle of the electron) and an antiproton (the antiparticle of the proton) can form an antihydrogen atom. The nuclei of antihelium have been artificially produced, albeit with difficulty, and are the most complex anti-nuclei so far observed. Physical principles indicate that complex antimatter atomic nuclei are possible, as well as anti-atoms corresponding to the known chemical elements.

There is strong evidence that the observable universe is composed almost entirely of ordinary matter, as opposed to an equal mixture of matter and antimatter. This asymmetry of matter and antimatter in the visible universe is one of the great unsolved problems in physics. The process by which this inequality between matter and antimatter particles developed is called baryogenesis.

Definitions

Antimatter particles can be defined by their negative baryon number or lepton number, while "normal" (non-antimatter) matter particles have a positive baryon or lepton number. These two classes of particles are the antiparticle partners of each other. A "positron" is the antimatter equivalent of the "electron".

The French term contra-terrene led to the initialism "C.T." and the science fiction term "seetee", as used in such novels as Seetee Ship.

Conceptual history

The idea of negative matter appears in past theories of matter that have now been abandoned. Using the once popular vortex theory of gravity, the possibility of matter with negative gravity was discussed by William Hicks in the 1880s. Between the 1880s and the 1890s, Karl Pearson proposed the existence of "squirts" and sinks of the flow of aether. The squirts represented normal matter and the sinks represented negative matter. Pearson's theory required a fourth dimension for the aether to flow from and into.

The term antimatter was coined by Arthur Schuster in two rather whimsical letters to Nature in 1898. He hypothesized antiatoms, as well as whole antimatter solar systems, and discussed the possibility of matter and antimatter annihilating each other. Schuster's ideas were not a serious theoretical proposal, merely speculation, and like the previous ideas, differed from the modern concept of antimatter in that his antimatter possessed negative gravity.

The modern theory of antimatter began in 1928, with a paper by Paul Dirac. Dirac realised that his relativistic version of the Schrödinger wave equation for electrons predicted the possibility of antielectrons. These were discovered by Carl D. Anderson in 1932 and named positrons from "positive electron". Although Dirac did not himself use the term antimatter, its use follows on naturally enough from antielectrons, antiprotons, etc. A complete periodic table of antimatter was envisaged by Charles Janet in 1929.

The Feynman–Stueckelberg interpretation states that antimatter and antiparticles are regular particles traveling backward in time.

Notation

One way to denote an antiparticle is by adding a bar over the particle's symbol. For example, the proton and antiproton are denoted as $p$ and $\bar{p}$, respectively. The same rule applies if one were to address a particle by its constituent components. A proton is made up of $uud$ quarks, so an antiproton must therefore be formed from $\bar{u}\bar{u}\bar{d}$ antiquarks. Another convention is to distinguish particles by positive and negative electric charge. Thus, the electron and positron are denoted simply as $e^-$ and $e^+$ respectively. To prevent confusion, however, the two conventions are never mixed.

Properties

Theorized anti-gravitational properties of antimatter are currently being tested at the AEGIS experiment at CERN. When antimatter comes into contact with matter, both annihilate and their rest mass is converted into energy. Research is needed to study the possible gravitational effects between matter and antimatter, and between antimatter and antimatter. However, such research is difficult because matter and antimatter annihilate on contact, and because antimatter remains hard to capture and contain.

There are compelling theoretical reasons to believe that, aside from the fact that antiparticles have different signs on all charges (such as electric and baryon charges), matter and antimatter have exactly the same properties. This means a particle and its corresponding antiparticle must have identical masses and decay lifetimes (if unstable). It also implies that, for example, a star made up of antimatter (an "antistar") will shine just like an ordinary star. This idea was tested experimentally in 2016 by the ALPHA experiment, which measured the transition between the two lowest energy states of antihydrogen. The results, which are identical to those of hydrogen, confirmed the validity of quantum mechanics for antimatter.

Origin and asymmetry

Most matter observable from the Earth seems to be made of matter rather than antimatter. If antimatter-dominated regions of space existed, the gamma rays produced in annihilation reactions along the boundary between matter and antimatter regions would be detectable.

Antiparticles are created everywhere in the universe where high-energy particle collisions take place. High-energy cosmic rays impacting Earth's atmosphere (or any other matter in the Solar System) produce minute quantities of antiparticles in the resulting particle jets, which are immediately annihilated by contact with nearby matter. They may similarly be produced in regions like the center of the Milky Way and other galaxies, where very energetic celestial events occur (principally the interaction of relativistic jets with the interstellar medium). The presence of the resulting antimatter is detectable by the two gamma rays produced every time positrons annihilate with nearby matter. The frequency and wavelength of the gamma rays indicate that each carries 511 keV of energy (that is, the rest mass of an electron multiplied by $c^2$).
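The 511 keV figure can be checked directly from the electron's rest mass. The following is a minimal sketch, assuming CODATA 2018 values for the constants; the function and variable names are illustrative and do not come from the source.

```python
# Assumed constants (CODATA 2018, SI units); not taken from the article itself.
ELECTRON_MASS_KG = 9.1093837015e-31
SPEED_OF_LIGHT_M_S = 299_792_458.0
JOULES_PER_EV = 1.602176634e-19


def rest_energy_kev(mass_kg: float) -> float:
    """Return the rest energy m*c^2 of a particle, expressed in keV."""
    energy_joules = mass_kg * SPEED_OF_LIGHT_M_S ** 2
    return energy_joules / JOULES_PER_EV / 1e3


# Each of the two annihilation photons carries one electron rest energy.
electron_rest_energy_kev = rest_energy_kev(ELECTRON_MASS_KG)
print(f"{electron_rest_energy_kev:.1f} keV")  # prints "511.0 keV"
```

Because the electron and positron have the same mass, an annihilation at rest releases twice this energy in total, shared equally between the two photons.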

Observations by the European Space Agency's INTEGRAL satellite may explain the origin of a giant antimatter cloud surrounding the galactic center. The observations show that the cloud is asymmetrical and matches the pattern of X-ray binaries (binary star systems containing black holes or neutron stars), mostly on one side of the galactic center. While the mechanism is not fully understood, it is likely to involve the production of electron–positron pairs, as ordinary matter gains kinetic energy while falling into a stellar remnant.

Antimatter may exist in relatively large amounts in far-away galaxies due to cosmic inflation in the primordial time of the universe. Antimatter galaxies, if they exist, are expected to have the same chemistry and absorption and emission spectra as normal-matter galaxies, and their astronomical objects would be observationally identical, making them difficult to distinguish. NASA is trying to determine if such galaxies exist by looking for X-ray and gamma-ray signatures of annihilation events in colliding superclusters.

In October 2017, scientists working on the BASE experiment at CERN reported a measurement of the antiproton magnetic moment to a precision of 1.5 parts per billion. It is consistent with the most precise measurement of the proton magnetic moment (also made by BASE in 2014), which supports the hypothesis of CPT symmetry. This measurement represents the first time that a property of antimatter is known more precisely than the equivalent property in matter.

Antimatter quantum interferometry was first demonstrated in the L-NESS Laboratory of R. Ferragut in Como (Italy), by a group led by M. Giammarchi.

Natural production

Positrons are produced naturally in β+ decays of naturally occurring radioactive isotopes (for example, potassium-40) and in interactions of gamma quanta (emitted by radioactive nuclei) with matter. Antineutrinos are another kind of antiparticle created by natural radioactivity (β− decay). Many different kinds of antiparticles are also produced by (and contained in) cosmic rays. In January 2011, research by the American Astronomical Society discovered antimatter (positrons) originating above thunderstorm clouds; positrons are produced in terrestrial gamma-ray flashes created by electrons accelerated by strong electric fields in the clouds. Antiprotons have also been found to exist in the Van Allen Belts around the Earth by the PAMELA module.

Antiparticles are also produced in any environment with a sufficiently high temperature (mean particle energy greater than the pair production threshold). It is hypothesized that during the period of baryogenesis, when the universe was extremely hot and dense, matter and antimatter were continually produced and annihilated. The presence of remaining matter, and absence of detectable remaining antimatter, is called baryon asymmetry. The exact mechanism that produced this asymmetry during baryogenesis remains an unsolved problem. One of the necessary conditions for this asymmetry is the violation of CP symmetry, which has been experimentally observed in the weak interaction.

Recent observations indicate black holes and neutron stars produce vast amounts of positron-electron plasma via the jets.

Observation in cosmic rays

Satellite experiments have found evidence of positrons and a few antiprotons in primary cosmic rays, amounting to less than 1% of the particles in primary cosmic rays. This antimatter cannot all have been created in the Big Bang, but is instead attributed to production by cyclic processes at high energies. For instance, electron-positron pairs may be formed in pulsars, as a magnetized neutron star rotation cycle shears electron-positron pairs from the star surface. Therein the antimatter forms a wind that crashes upon the ejecta of the progenitor supernovae. This weathering takes place as "the cold, magnetized relativistic wind launched by the star hits the non-relativistically expanding ejecta, a shock wave system forms in the impact: the outer one propagates in the ejecta, while a reverse shock propagates back towards the star." The former ejection of matter in the outer shock wave and the latter production of antimatter in the reverse shock wave are steps in a space weather cycle.

Preliminary results from the presently operating Alpha Magnetic Spectrometer (AMS-02) on board the International Space Station show that positrons in the cosmic rays arrive with no directionality, and with energies that range from 10 GeV to 250 GeV. In September 2014, new results with almost twice as much data were presented in a talk at CERN and published in Physical Review Letters. A new measurement of the positron fraction up to 500 GeV was reported, showing that the positron fraction peaks at a maximum of about 16% of total electron+positron events, around an energy of 275 ± 32 GeV. At higher energies, up to 500 GeV, the ratio of positrons to electrons begins to fall again. The absolute flux of positrons also begins to fall before 500 GeV, but peaks at energies far higher than electron energies, which peak at about 10 GeV. One suggested interpretation of these results is positron production in annihilation events of massive dark matter particles.

Cosmic ray antiprotons also have a much higher energy than their normal-matter counterparts (protons). They arrive at Earth with a characteristic energy maximum of 2 GeV, indicating their production in a fundamentally different process from cosmic ray protons, which on average have only one-sixth of the energy.

There is an ongoing search for larger antimatter nuclei, such as antihelium nuclei (that is, anti-alpha particles), in cosmic rays. The detection of natural antihelium could imply the existence of large antimatter structures such as an antistar. A prototype of the AMS-02, designated AMS-01, was flown into space aboard the Space Shuttle Discovery on STS-91 in June 1998. By not detecting any antihelium at all, the AMS-01 established an upper limit of $1.1 \times 10^{-6}$ for the antihelium to helium flux ratio. AMS-02 revealed in December 2016 that it had discovered a few signals consistent with antihelium nuclei amidst several billion helium nuclei. The result remains to be verified, and the team is currently trying to rule out contamination.

Artificial production

Positrons

Positrons were reported in November 2008 to have been generated by Lawrence Livermore National Laboratory in larger numbers than by any previous synthetic process. A laser drove electrons through a gold target's nuclei, which caused the incoming electrons to emit energy quanta that decayed into both matter and antimatter. Positrons were detected at a higher rate and in greater density than ever previously detected in a laboratory. Previous experiments made smaller quantities of positrons using lasers and paper-thin targets; new simulations showed that short bursts of ultra-intense lasers and millimeter-thick gold are a far more effective source.

Antiprotons, antineutrons, and antinuclei

The existence of the antiproton was experimentally confirmed in 1955 by University of California, Berkeley physicists Emilio Segrè and Owen Chamberlain, for which they were awarded the 1959 Nobel Prize in Physics. An antiproton consists of two up antiquarks and one down antiquark ($\bar{u}\bar{u}\bar{d}$). The properties of the antiproton that have been measured all match the corresponding properties of the proton, with the exception of the antiproton having opposite electric charge and magnetic moment from the proton. Shortly afterwards, in 1956, the antineutron was discovered in proton–proton collisions at the Bevatron (Lawrence Berkeley National Laboratory) by Bruce Cork and colleagues.

In addition to antibaryons, anti-nuclei consisting of multiple bound antiprotons and antineutrons have been created. These are typically produced at energies far too high to form antimatter atoms (with bound positrons in place of electrons). In 1965, a group of researchers led by Antonino Zichichi reported production of nuclei of antideuterium at the Proton Synchrotron at CERN. At roughly the same time, observations of antideuterium nuclei were reported by a group of American physicists at the Alternating Gradient Synchrotron at Brookhaven National Laboratory.

Antihydrogen atoms

| Complex | Machine | Role |
|---|---|---|
| Low Energy Antiproton Ring (1982-1996) | Antiproton Accumulator | Antiproton production |
| Low Energy Antiproton Ring (1982-1996) | Antiproton Collector | Decelerated and stored antiprotons |
| Antimatter Factory (2000-Present) | Antiproton Decelerator (AD) | Decelerates antiprotons |
| Antimatter Factory (2000-Present) | Extra Low Energy Antiproton ring (ELENA) | Decelerates antiprotons received from AD |

In 1995, CERN announced that it had successfully brought into existence nine hot antihydrogen atoms by implementing the SLAC/Fermilab concept during the PS210 experiment. The experiment was performed using the Low Energy Antiproton Ring (LEAR), and was led by Walter Oelert and Mario Macri. Fermilab soon confirmed the CERN findings by producing approximately 100 antihydrogen atoms at their facilities. The antihydrogen atoms created during PS210 and subsequent experiments (at both CERN and Fermilab) were extremely energetic and were not well suited to study. To resolve this hurdle, and to gain a better understanding of antihydrogen, two collaborations were formed in the late 1990s, namely, ATHENA and ATRAP.

In 1999, CERN activated the Antiproton Decelerator, a device capable of decelerating antiprotons from 3500 MeV to 5.3 MeV – still too "hot" to produce study-effective antihydrogen, but a huge leap forward. In late 2002 the ATHENA project announced that they had created the world's first "cold" antihydrogen. The ATRAP project released similar results very shortly thereafter. The antiprotons used in these experiments were cooled by decelerating them with the Antiproton Decelerator, passing them through a thin sheet of foil, and finally capturing them in a Penning–Malmberg trap. The overall cooling process is workable, but highly inefficient; approximately 25 million antiprotons leave the Antiproton Decelerator and roughly 25,000 make it to the Penning–Malmberg trap, which is about 1/1000 or 0.1% of the original amount.

The antiprotons are still hot when initially trapped. To cool them further, they are mixed into an electron plasma. The electrons in this plasma cool via cyclotron radiation, and then sympathetically cool the antiprotons via Coulomb collisions. Eventually, the electrons are removed by the application of short-duration electric fields, leaving the antiprotons with energies less than 100 meV. While the antiprotons are being cooled in the first trap, a small cloud of positrons is captured from radioactive sodium in a Surko-style positron accumulator. This cloud is then recaptured in a second trap near the antiprotons. Manipulations of the trap electrodes then tip the antiprotons into the positron plasma, where some combine with positrons to form antihydrogen. This neutral antihydrogen is unaffected by the electric and magnetic fields used to trap the charged positrons and antiprotons, and within a few microseconds the antihydrogen hits the trap walls, where it annihilates. Some hundreds of millions of antihydrogen atoms have been made in this fashion.

In 2005, ATHENA disbanded and some of the former members (along with others) formed the ALPHA Collaboration, which is also based at CERN. The ultimate goal of this endeavour is to test CPT symmetry through comparison of the atomic spectra of hydrogen and antihydrogen (see hydrogen spectral series).

In 2016 a new antiproton decelerator and cooler called ELENA (Extra Low ENergy Antiproton decelerator) was built. It takes the antiprotons from the antiproton decelerator and cools them to 90 keV, which is "cold" enough to study. This machine works by using high energy and accelerating the particles within the chamber. More than one hundred antiprotons can be captured per second, a huge improvement, but it would still take several thousand years to make a nanogram of antimatter.

Most of the sought-after high-precision tests of the properties of antihydrogen could only be performed if the antihydrogen were trapped, that is, held in place for a relatively long time. While antihydrogen atoms are electrically neutral, the spins of their component particles produce a magnetic moment. These magnetic moments can interact with an inhomogeneous magnetic field; some of the antihydrogen atoms can be attracted to a magnetic minimum. Such a minimum can be created by a combination of mirror and multipole fields. Antihydrogen can be trapped in such a magnetic minimum (minimum-B) trap; in November 2010, the ALPHA collaboration announced that they had so trapped 38 antihydrogen atoms for about a sixth of a second. This was the first time that neutral antimatter had been trapped.

On 26 April 2011, ALPHA announced that they had trapped 309 antihydrogen atoms, some for as long as 1,000 seconds (about 17 minutes). This was longer than neutral antimatter had ever been trapped before. ALPHA has used these trapped atoms to initiate research into the spectral properties of the antihydrogen.

The biggest limiting factor in the large-scale production of antimatter is the availability of antiprotons. Recent data released by CERN states that, when fully operational, their facilities are capable of producing ten million antiprotons per minute. Assuming a 100% conversion of antiprotons to antihydrogen, it would take 100 billion years to produce 1 gram or 1 mole of antihydrogen (approximately $6.02 \times 10^{23}$ atoms of anti-hydrogen).
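The order of magnitude of that estimate can be reproduced with a few lines of arithmetic. This is a rough sketch using the production rate quoted above; the Avogadro constant, the 365.25-day year, and all names are assumptions introduced for illustration.

```python
# Assumed inputs: the rate comes from the text, the constants are standard values.
AVOGADRO = 6.02214076e23          # atoms in one mole (about 1 g of antihydrogen)
ANTIPROTONS_PER_MINUTE = 1.0e7    # production rate quoted in the text
MINUTES_PER_YEAR = 60 * 24 * 365.25


def years_to_produce(atoms: float, rate_per_minute: float,
                     conversion_efficiency: float = 1.0) -> float:
    """Years needed to make `atoms` anti-atoms at a steady antiproton rate."""
    if rate_per_minute <= 0 or not 0 < conversion_efficiency <= 1:
        raise ValueError("rate must be positive and efficiency in (0, 1]")
    minutes = atoms / (rate_per_minute * conversion_efficiency)
    return minutes / MINUTES_PER_YEAR


# 100% conversion of antiprotons to antihydrogen, as the text assumes.
years_for_one_mole = years_to_produce(AVOGADRO, ANTIPROTONS_PER_MINUTE)
print(f"{years_for_one_mole:.2e} years")  # on the order of 1e11 years
```

The result is a little over 100 billion years, consistent with the rounded figure in the text. Any realistic conversion efficiency below 100% lengthens the time in inverse proportion.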

Antihelium

Antihelium-3 nuclei ($^3\overline{\mathrm{He}}$) were first observed in the 1970s in proton–nucleus collision experiments at the Institute for High Energy Physics by Y. Prockoshkin's group (Protvino near Moscow, USSR) and later created in nucleus–nucleus collision experiments. Nucleus–nucleus collisions produce antinuclei through the coalescence of antiprotons and antineutrons created in these reactions. In 2011, the STAR detector reported the observation of artificially created antihelium-4 nuclei (anti-alpha particles) ($^4\overline{\mathrm{He}}$) from such collisions.

The Alpha Magnetic Spectrometer on the International Space Station has, as of 2021, recorded eight events that seem to indicate the detection of antihelium-3.

Preservation

Antimatter cannot be stored in a container made of ordinary matter because antimatter reacts with any matter it touches, annihilating itself and an equal amount of the container. Antimatter in the form of charged particles can be contained by a combination of electric and magnetic fields, in a device called a Penning trap. This device cannot, however, contain antimatter that consists of uncharged particles, for which atomic traps are used. In particular, such a trap may use the dipole moment (electric or magnetic) of the trapped particles. At high vacuum, the matter or antimatter particles can be trapped and cooled with slightly off-resonant laser radiation using a magneto-optical trap or magnetic trap. Small particles can also be suspended with optical tweezers, using a highly focused laser beam.

In 2011, CERN scientists were able to preserve antihydrogen for approximately 17 minutes. The record for storing antiparticles is currently held by the TRAP experiment at CERN: antiprotons were kept in a Penning trap for 405 days. In 2018, a proposal was made to develop containment technology advanced enough to hold a billion antiprotons in a portable device that could be driven to another lab for further experimentation.

Cost

Scientists claim that antimatter is the costliest material to make. In 2006, Gerald Smith estimated $250 million could produce 10 milligrams of positrons (equivalent to $25 billion per gram); in 1999, NASA gave a figure of $62.5 trillion per gram of antihydrogen. This is because production is difficult (only very few antiprotons are produced in reactions in particle accelerators) and because there is higher demand for other uses of particle accelerators. According to CERN, it has cost a few hundred million Swiss francs to produce about 1 billionth of a gram (the amount used so far for particle/antiparticle collisions). In comparison, the cost of the Manhattan Project, which produced the first atomic weapon, was estimated at $23 billion when adjusted for inflation to 2007.

Several studies funded by the NASA Institute for Advanced Concepts are exploring whether it might be possible to use magnetic scoops to collect the antimatter that occurs naturally in the Van Allen belt of the Earth, and ultimately, the belts of gas giants, like Jupiter, hopefully at a lower cost per gram.

Uses

Medical

Matter–antimatter reactions have practical applications in medical imaging, such as positron emission tomography (PET). In positive beta decay, a nuclide loses surplus positive charge by emitting a positron (in the same event, a proton becomes a neutron, and a neutrino is also emitted). Nuclides with surplus positive charge are easily made in a cyclotron and are widely generated for medical use. Antiprotons have also been shown within laboratory experiments to have the potential to treat certain cancers, in a similar method currently used for ion (proton) therapy.

Fuel

Isolated and stored anti-matter could be used as a fuel for interplanetary or interstellar travel as part of an antimatter-catalyzed nuclear pulse propulsion or another antimatter rocket. Since the energy density of antimatter is higher than that of conventional fuels, an antimatter-fueled spacecraft would have a higher thrust-to-weight ratio than a conventional spacecraft.

If matter–antimatter collisions resulted only in photon emission, the entire rest mass of the particles would be converted to kinetic energy. The energy per unit mass ($9 \times 10^{16}$ J/kg) is about 10 orders of magnitude greater than chemical energies, and about 3 orders of magnitude greater than the nuclear potential energy that can be liberated, today, using nuclear fission (about 200 MeV per fission reaction or $8 \times 10^{13}$ J/kg), and about 2 orders of magnitude greater than the best possible results expected from fusion (about $6.3 \times 10^{14}$ J/kg for the proton–proton chain). The reaction of 1 kg of antimatter with 1 kg of matter would produce $1.8 \times 10^{17}$ J (180 petajoules) of energy (by the mass–energy equivalence formula, $E = mc^2$), or the rough equivalent of 43 megatons of TNT – slightly less than the yield of the 27,000 kg Tsar Bomba, the largest thermonuclear weapon ever detonated.
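The energy figures in this paragraph follow from mass–energy equivalence. The sketch below reproduces them under stated assumptions: the conventional definition of a megaton of TNT as 4.184×10^15 J is assumed, and the function and constant names are hypothetical.

```python
# Assumed constants; the TNT conversion is the conventional definition.
SPEED_OF_LIGHT_M_S = 299_792_458.0
JOULES_PER_MEGATON_TNT = 4.184e15


def annihilation_energy_joules(antimatter_kg: float) -> float:
    """Energy released when `antimatter_kg` annihilates with an equal mass of matter.

    Assumes the idealized case from the text: the full rest mass of both
    the antimatter and the matter is converted to energy.
    """
    total_mass_kg = 2.0 * antimatter_kg
    return total_mass_kg * SPEED_OF_LIGHT_M_S ** 2


energy_joules = annihilation_energy_joules(1.0)
energy_megatons = energy_joules / JOULES_PER_MEGATON_TNT
energy_per_kg = energy_joules / 2.0  # per kilogram of total reacting mass

print(f"{energy_joules:.2e} J, about {energy_megatons:.0f} Mt of TNT")
```

Dividing `energy_per_kg` by the fission and fusion figures quoted in the text gives ratios of roughly 1,100 and 140, which is where the "3 orders" and "2 orders of magnitude" comparisons come from.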

Not all of that energy can be utilized by any realistic propulsion technology because of the nature of the annihilation products. While electron–positron reactions result in gamma ray photons, these are difficult to direct and use for thrust. In reactions between protons and antiprotons, their energy is converted largely into relativistic neutral and charged pions. The neutral pions decay almost immediately (with a lifetime of 85 attoseconds) into high-energy photons, but the charged pions decay more slowly (with a lifetime of 26 nanoseconds) and can be deflected magnetically to produce thrust.

Charged pions ultimately decay into a combination of neutrinos (carrying about 22% of the energy of the charged pions) and unstable charged muons (carrying about 78% of the charged pion energy), with the muons then decaying into a combination of electrons, positrons and neutrinos (cf. muon decay; the neutrinos from this decay carry about 2/3 of the energy of the muons, meaning that from the original charged pions, the total fraction of their energy converted to neutrinos by one route or another would be about 0.22 + (2/3)⋅0.78 = 0.74).
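The neutrino energy budget described above can be tallied step by step. This is a minimal sketch using only the approximate fractions quoted in the text; the names are illustrative.

```python
# Approximate energy fractions quoted in the text.
PION_ENERGY_TO_NEUTRINOS = 0.22   # carried off directly at charged-pion decay
PION_ENERGY_TO_MUONS = 0.78       # carried by the muon from the same decay
MUON_ENERGY_TO_NEUTRINOS = 2 / 3  # share of muon energy lost to neutrinos


def total_neutrino_fraction(direct: float, to_muons: float,
                            muon_to_neutrinos: float) -> float:
    """Fraction of charged-pion energy that ends up in neutrinos by either route."""
    return direct + to_muons * muon_to_neutrinos


neutrino_fraction = total_neutrino_fraction(
    PION_ENERGY_TO_NEUTRINOS, PION_ENERGY_TO_MUONS, MUON_ENERGY_TO_NEUTRINOS
)
usable_fraction = 1.0 - neutrino_fraction  # left in electrons and positrons

print(f"neutrinos: {neutrino_fraction:.2f}, remaining: {usable_fraction:.2f}")
```

Under these figures only about a quarter of the charged-pion energy remains in charged particles once all decays have completed, which is why a propulsion scheme would need to extract thrust from the pions before they decay.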

Weapons

Antimatter has been considered as a trigger mechanism for nuclear weapons. A major obstacle is the difficulty of producing antimatter in large enough quantities, and there is no evidence that it will ever be feasible. Nonetheless, the U.S. Air Force funded studies of the physics of antimatter in the Cold War, and began considering its possible use in weapons, not just as a trigger, but as the explosive itself.