In physics, canonical quantization is a procedure for quantizing a classical theory, while attempting to preserve the formal structure, such as symmetries, of the classical theory, to the greatest extent possible.

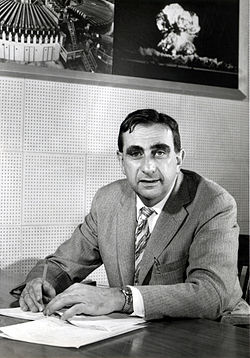

Historically, this was not quite Werner Heisenberg's route to obtaining quantum mechanics, but Paul Dirac introduced it in his 1926 doctoral thesis as the "method of classical analogy" for quantization, and detailed it in his classic text. The word canonical arises from the Hamiltonian approach to classical mechanics, in which a system's dynamics is generated via canonical Poisson brackets, a structure which is only partially preserved in canonical quantization.

This method was further used in the context of quantum field theory by Paul Dirac, in his construction of quantum electrodynamics. In the field theory context, it is also called the second quantization of fields, in contrast to the semi-classical first quantization of single particles.

History

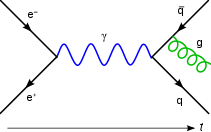

When it was first developed, quantum physics dealt only with the quantization of the motion of particles, leaving the electromagnetic field classical, hence the name quantum mechanics.

Later the electromagnetic field was also quantized, and even the particles themselves became represented through quantized fields, resulting in the development of quantum electrodynamics (QED) and quantum field theory in general. Thus, by convention, the original form of particle quantum mechanics is denoted first quantization, while quantum field theory is formulated in the language of second quantization.

First quantization

Single particle systems

The following exposition is based on Dirac's treatise on quantum mechanics. In the classical mechanics of a particle, there are dynamic variables which are called coordinates (x) and momenta (p). These specify the state of a classical system. The canonical structure (also known as the symplectic structure) of classical mechanics consists of Poisson brackets enclosing these variables, such as {x,p} = 1. All transformations of variables which preserve these brackets are allowed as canonical transformations in classical mechanics. Motion itself is such a canonical transformation.
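The bracket-preserving property can be checked mechanically for polynomial observables. The following is a minimal sketch, assuming one degree of freedom and the convention {f, g} = ∂f/∂x ∂g/∂p − ∂f/∂p ∂g/∂x; the dictionary representation and the particular linear change of variables are illustrative choices, not part of Dirac's exposition.

```python
# A polynomial in (x, p) is a dict {(i, j): coeff} standing for coeff * x**i * p**j.

def add(f, g, sign=1):
    """Return f + sign * g, dropping zero coefficients."""
    out = dict(f)
    for key, val in g.items():
        out[key] = out.get(key, 0) + sign * val
    return {k: v for k, v in out.items() if v != 0}

def mul(f, g):
    """Ordinary (commutative) product of two polynomials."""
    out = {}
    for (a, b), u in f.items():
        for (c, d), v in g.items():
            out[(a + c, b + d)] = out.get((a + c, b + d), 0) + u * v
    return {k: v for k, v in out.items() if v != 0}

def d_dx(f):
    return {(i - 1, j): i * c for (i, j), c in f.items() if i > 0}

def d_dp(f):
    return {(i, j - 1): j * c for (i, j), c in f.items() if j > 0}

def poisson(f, g):
    """{f, g} = df/dx * dg/dp - df/dp * dg/dx."""
    return add(mul(d_dx(f), d_dp(g)), mul(d_dp(f), d_dx(g)), sign=-1)

x = {(1, 0): 1}
p = {(0, 1): 1}
one = {(0, 0): 1}

# Hypothetical linear change of variables with unit determinant (2*2 - 3*1 = 1).
X = {(1, 0): 2, (0, 1): 3}   # X = 2x + 3p
P = {(1, 0): 1, (0, 1): 2}   # P = x + 2p

# A pure rescaling of x alone is not canonical: the bracket becomes 2.
X_scaled = {(1, 0): 2}

print(poisson(x, p) == one)          # fundamental bracket {x, p} = 1
print(poisson(X, P) == one)          # preserved by the unit-determinant map
print(poisson(X_scaled, p) == one)   # not preserved by the rescaling
```

The unit-determinant condition is what makes the linear map canonical in this one-dimensional setting; the rescaled pair fails it and so changes the bracket.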

By contrast, in quantum mechanics, all significant features of a particle are contained in a state $|\psi\rangle$, called a quantum state. Observables are represented by operators acting on a Hilbert space of such quantum states.

The eigenvalue of an operator acting on one of its eigenstates represents the value of a measurement on the particle thus represented. For example, the energy is read off by the Hamiltonian operator $\hat{H}$ acting on a state $|\psi_n\rangle$, yielding

$$\hat{H}|\psi_n\rangle = E_n|\psi_n\rangle,$$

where $E_n$ is the characteristic energy associated to this eigenstate $|\psi_n\rangle$.

Any state could be represented as a linear combination of eigenstates of energy; for example,

$$|\psi\rangle = \sum_{n} a_n |\psi_n\rangle,$$

where $a_n$ are constant coefficients.

As in classical mechanics, all dynamical operators can be represented by functions of the position and momentum ones, $\hat{X}$ and $\hat{P}$, respectively. The connection between this representation and the more usual wavefunction representation is given by the eigenstate of the position operator $\hat{X}$ representing a particle at position $x$, which is denoted by an element $|x\rangle$ in the Hilbert space, and which satisfies $\hat{X}|x\rangle = x|x\rangle$. Then, $\psi(x) = \langle x|\psi\rangle$.

Likewise, the eigenstates $|p\rangle$ of the momentum operator $\hat{P}$ specify the momentum representation: $\psi(p) = \langle p|\psi\rangle$.

The central relation between these operators is a quantum analog of the above Poisson bracket of classical mechanics, the canonical commutation relation,

$$[\hat{X},\hat{P}] = \hat{X}\hat{P}-\hat{P}\hat{X} = i\hbar.$$

This relation encodes (and formally leads to) the uncertainty principle, in the form Δx Δp ≥ ħ/2. This algebraic structure may be thus considered as the quantum analog of the canonical structure of classical mechanics.
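The commutation relation can be probed numerically with finite matrices. The sketch below is an illustration under stated assumptions: it uses the harmonic-oscillator number basis truncated to N states, units with ħ = 1 and unit mass and frequency, and plain nested lists instead of a linear-algebra library. None of these choices comes from the text above.

```python
import math

HBAR = 1.0  # assumption: units with hbar = 1
N = 6       # assumption: number of basis states kept in the truncation

def matmul(A, B):
    """Product of two square matrices stored as nested lists."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

# Annihilation operator in the number basis: <i|a|j> = sqrt(j) if i == j - 1.
a = [[math.sqrt(j) if j == i + 1 else 0.0 for j in range(N)] for i in range(N)]
# Creation operator: the transpose of a (all entries are real).
adag = [[a[j][i] for j in range(N)] for i in range(N)]

# Position and momentum built from a and adag (unit mass and frequency).
s = math.sqrt(HBAR / 2)
X = [[s * (a[i][j] + adag[i][j]) for j in range(N)] for i in range(N)]
P = [[1j * s * (adag[i][j] - a[i][j]) for j in range(N)] for i in range(N)]

XP = matmul(X, P)
PX = matmul(P, X)
comm = [[XP[i][j] - PX[i][j] for j in range(N)] for i in range(N)]

# Diagonal of [X, P]: i*hbar everywhere except the last entry.
for i in range(N):
    print(i, comm[i][i])
```

The last diagonal entry comes out as −iħ(N − 1) rather than iħ. This is a truncation artifact: the trace of any commutator of finite matrices vanishes, so the relation can hold exactly only on an infinite-dimensional Hilbert space.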

Many-particle systems

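When turning to N-particle systems, i.e., systems containing N identical particles (particles characterized by the same quantum numbers such as mass, charge and spin), it is necessary to extend the single-particle state function $\psi(\mathbf{r})$ to the N-particle state function $\psi(\mathbf{r}_1, \mathbf{r}_2, \dots, \mathbf{r}_N)$. A fundamental difference between classical and quantum mechanics concerns the concept of indistinguishability of identical particles. Only two species of particles are thus possible in quantum physics, the so-called bosons and fermions, which obey the rules

$$\psi(\mathbf{r}_1,\dots,\mathbf{r}_j,\dots,\mathbf{r}_k,\dots,\mathbf{r}_N) = +\psi(\mathbf{r}_1,\dots,\mathbf{r}_k,\dots,\mathbf{r}_j,\dots,\mathbf{r}_N) \quad \text{(bosons)},$$

$$\psi(\mathbf{r}_1,\dots,\mathbf{r}_j,\dots,\mathbf{r}_k,\dots,\mathbf{r}_N) = -\psi(\mathbf{r}_1,\dots,\mathbf{r}_k,\dots,\mathbf{r}_j,\dots,\mathbf{r}_N) \quad \text{(fermions)},$$

where we have interchanged two coordinates $(\mathbf{r}_j, \mathbf{r}_k)$ of the state function. For fermions, the usual wave function is obtained using the Slater determinant and the theory of identical particles. Using this basis, it is possible to solve various many-particle problems.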
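For two particles, the exchange rules can be illustrated directly. This is a minimal sketch, assuming one spatial dimension and two made-up single-particle orbitals (`orbital_a` and `orbital_b` are hypothetical and not normalized); the antisymmetric combination is the 2×2 case of a Slater determinant.

```python
import math

def orbital_a(r):
    # Hypothetical single-particle orbital (unnormalized Gaussian).
    return math.exp(-r * r)

def orbital_b(r):
    # Hypothetical second orbital (unnormalized, odd in r).
    return r * math.exp(-r * r)

def two_particle(phi1, phi2, sign):
    """Build psi(r1, r2) = [phi1(r1) phi2(r2) + sign * phi1(r2) phi2(r1)] / sqrt(2).

    sign = +1 gives the symmetric (bosonic) combination and sign = -1 the
    antisymmetric (fermionic) one. The 1/sqrt(2) factor is the usual choice
    for orthonormal orbitals; the toy orbitals here are not normalized.
    """
    norm = 1 / math.sqrt(2)

    def psi(r1, r2):
        return norm * (phi1(r1) * phi2(r2) + sign * phi1(r2) * phi2(r1))

    return psi

boson = two_particle(orbital_a, orbital_b, +1)
fermion = two_particle(orbital_a, orbital_b, -1)

r1, r2 = 0.3, 1.1
print(boson(r1, r2), boson(r2, r1))      # equal under exchange
print(fermion(r1, r2), fermion(r2, r1))  # opposite sign under exchange
print(fermion(0.7, 0.7))                 # vanishes at coincident coordinates
```

Putting both fermions in the same orbital, `two_particle(orbital_a, orbital_a, -1)`, gives a function that vanishes identically, which is the exclusion principle in this toy setting.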

Issues and limitations

Classical and quantum brackets

Dirac's book details his popular rule of supplanting Poisson brackets by commutators:

$$\{A,B\} \longmapsto \tfrac{1}{i\hbar}[\hat{A},\hat{B}].$$

One might interpret this proposal as saying that we should seek a "quantization map" $Q$ mapping a function $f$ on the classical phase space to an operator $Q_f$ on the quantum Hilbert space such that

$$Q_{\{f,g\}} = \frac{1}{i\hbar}[Q_f,Q_g].$$

It is now known that there is no reasonable such quantization map satisfying the above identity exactly for all functions $f$ and $g$.

Groenewold's theorem

One concrete version of the above impossibility claim is Groenewold's theorem (after Dutch theoretical physicist Hilbrand J. Groenewold), which we describe for a system with one degree of freedom for simplicity. Let us accept the following "ground rules" for the map $Q$. First, $Q$ should send the constant function 1 to the identity operator. Second, $Q$ should take $x$ and $p$ to the usual position and momentum operators $X$ and $P$. Third, $Q$ should take a polynomial in $x$ and $p$ to a "polynomial" in $X$ and $P$, that is, a finite linear combination of products of $X$ and $P$, which may be taken in any desired order. In its simplest form, Groenewold's theorem says that there is no map satisfying the above ground rules and also the bracket condition

$$Q_{\{f,g\}} = \frac{1}{i\hbar}[Q_f,Q_g]$$

for all polynomials $f$ and $g$.

Actually, the nonexistence of such a map occurs already by the time we reach polynomials of degree four. Note that the Poisson bracket of two polynomials of degree four has degree six, so it does not exactly make sense to require a map on polynomials of degree four to respect the bracket condition. We can, however, require that the bracket condition holds when $f$ and $g$ have degree three. Groenewold's theorem can be stated as follows:

- Theorem: There is no quantization map $Q$ (following the above ground rules) on polynomials of degree less than or equal to four that satisfies

$$Q_{\{f,g\}} = \frac{1}{i\hbar}[Q_f,Q_g]$$

- whenever $f$ and $g$ have degree less than or equal to three. (Note that in this case, $\{f,g\}$ has degree less than or equal to four.)

The proof can be outlined as follows. Suppose we first try to find a quantization map on polynomials of degree less than or equal to three satisfying the bracket condition whenever $f$ has degree less than or equal to two and $g$ has degree less than or equal to two. Then there is precisely one such map, and it is the Weyl quantization. The impossibility result now is obtained by writing the same polynomial of degree four as a Poisson bracket of polynomials of degree three in two different ways. Specifically, we have

$$x^2p^2 = \frac{1}{9}\{x^3,p^3\} = \frac{1}{3}\{x^2p,xp^2\}.$$

On the other hand, we have already seen that if there is going to be a quantization map on polynomials of degree three, it must be the Weyl quantization; that is, we have already determined the only possible quantization of all the cubic polynomials above.

The argument is finished by computing by brute force that

$$\frac{1}{9}[Q(x^3),Q(p^3)]$$

does not coincide with

$$\frac{1}{3}[Q(x^2p),Q(xp^2)].$$

Thus, we have two incompatible requirements for the value of $Q(x^2p^2)$.
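The brute-force step can be reproduced with a few lines of exact arithmetic. The sketch below is one possible way to do it, not the method of any particular reference: operators are stored in normal-ordered form (all $X$ to the left of all $P$), the bookkeeping symbol h stands for −iħ so that $PX = XP + h$, and the Weyl quantization of $x^m p^n$ is taken in its normal-ordered expansion. The commutators are divided by iħ, as the bracket condition requires, before being compared.

```python
from fractions import Fraction
from math import comb, factorial

# An operator is a dict {(a, b, c): coeff} meaning coeff * X^a P^b h^c,
# normal-ordered with X to the left of P, where h = -i*hbar (so P X = X P + h).

def add(A, B, sign=1):
    """Return A + sign * B, dropping zero coefficients."""
    out = dict(A)
    for key, val in B.items():
        out[key] = out.get(key, 0) + sign * val
    return {k: v for k, v in out.items() if v != 0}

def mul(A, B):
    """Operator product, using
    P^b X^d = sum_k C(b,k) C(d,k) k! h^k X^(d-k) P^(b-k)."""
    out = {}
    for (a, b, c), u in A.items():
        for (d, e, f), v in B.items():
            for k in range(min(b, d) + 1):
                coeff = u * v * comb(b, k) * comb(d, k) * factorial(k)
                key = (a + d - k, b + e - k, c + f + k)
                out[key] = out.get(key, 0) + coeff
    return {k: v for k, v in out.items() if v != 0}

def weyl(m, n):
    """Weyl (symmetric) ordering of x^m p^n, expanded in normal-ordered form."""
    return {(m - k, n - k, k):
            Fraction(comb(m, k) * comb(n, k) * factorial(k), 2 ** k)
            for k in range(min(m, n) + 1)}

def bracket(A, B, scale):
    """scale * [A, B] / (i*hbar). Since i*hbar = -h, this divides by -h."""
    comm = add(mul(A, B), mul(B, A), sign=-1)
    return {(a, b, c - 1): -scale * v for (a, b, c), v in comm.items()}

first = bracket(weyl(3, 0), weyl(0, 3), Fraction(1, 9))    # from {x^3, p^3}/9
second = bracket(weyl(2, 1), weyl(1, 2), Fraction(1, 3))   # from {x^2 p, x p^2}/3

print(first)
print(second)
print(add(first, second, sign=-1))   # nonzero: the two candidates disagree
print(weyl(2, 2))                    # Weyl-ordered x^2 p^2, for comparison
```

Under these conventions the two candidates agree in the $X^2P^2$ and $XP$ terms and differ only in the constant term, by one third of $h^2$, that is, by ħ²/3 in magnitude. The Weyl-ordered $x^2p^2$ has a constant term lying between the two.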

Axioms for quantization

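If $Q$ represents the quantization map that acts on functions $f$ in classical phase space, then the following properties are usually considered desirable:

1. $Q_x \psi = x\psi$ and $Q_p \psi = -i\hbar\,\partial_x \psi$ (elementary position/momentum operators)

2. $f \longmapsto Q_f$ is a linear map

3. $[Q_f,Q_g] = i\hbar\, Q_{\{f,g\}}$ (Poisson bracket)

4. $Q_{g \circ f} = g(Q_f)$ (von Neumann rule).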

However, not only are these four properties mutually inconsistent, any three of them are also inconsistent! As it turns out, the only pairs of these properties that lead to self-consistent, nontrivial solutions are 2 & 3, and possibly 1 & 3 or 1 & 4. Accepting properties 1 & 2, along with a weaker condition that 3 be true only asymptotically in the limit ħ→0 (see Moyal bracket), leads to deformation quantization, and some extraneous information must be provided, as in the standard theories utilized in most of physics. Accepting properties 1 & 2 & 3 but restricting the space of quantizable observables to exclude terms such as the cubic ones in the above example amounts to geometric quantization.

Second quantization: field theory

Quantum mechanics was successful at describing non-relativistic systems with fixed numbers of particles, but a new framework was needed to describe systems in which particles can be created or destroyed, for example, the electromagnetic field, considered as a collection of photons. It was eventually realized that special relativity was inconsistent with single-particle quantum mechanics, so that all particles are now described relativistically by quantum fields.

When the canonical quantization procedure is applied to a field, such as the electromagnetic field, the classical field variables become quantum operators. Thus, the normal modes comprising the amplitude of the field are simple oscillators, each of which is quantized in standard first quantization, above, without ambiguity. The resulting quanta are identified with individual particles or excitations. For example, the quanta of the electromagnetic field are identified with photons. Unlike first quantization, conventional second quantization is completely unambiguous, in effect a functor, since each of its constituent oscillators is quantized unambiguously.

Historically, quantizing the classical theory of a single particle gave rise to a wavefunction. The classical equations of motion of a field are typically identical in form to the (quantum) equations for the wave-function of one of its quanta. For example, the Klein–Gordon equation is the classical equation of motion for a free scalar field, but also the quantum equation for a scalar particle wave-function. This meant that quantizing a field appeared to be similar to quantizing a theory that was already quantized, leading to the fanciful term second quantization in the early literature, which is still used to describe field quantization, even though the modern interpretation detailed above is different.

One drawback to canonical quantization for a relativistic field is that by relying on the Hamiltonian to determine time dependence, relativistic invariance is no longer manifest. Thus it is necessary to check that relativistic invariance is not lost. Alternatively, the Feynman integral approach is available for quantizing relativistic fields, and is manifestly invariant. For non-relativistic field theories, such as those used in condensed matter physics, Lorentz invariance is not an issue.

Field operators

Quantum mechanically, the variables of a field (such as the field's amplitude at a given point) are represented by operators on a Hilbert space. In general, all observables are constructed as operators on the Hilbert space, and the time-evolution of the operators is governed by the Hamiltonian, which must be a positive operator. A state annihilated by the Hamiltonian must be identified as the vacuum state, which is the basis for building all other states. In a non-interacting (free) field theory, the vacuum is normally identified as a state containing zero particles. In a theory with interacting particles, identifying the vacuum is more subtle, due to vacuum polarization, which implies that the physical vacuum in quantum field theory is never really empty. For further elaboration, see the articles on the quantum mechanical vacuum and the vacuum of quantum chromodynamics. The details of the canonical quantization depend on the field being quantized, and whether it is free or interacting.

Real scalar field

A scalar field theory provides a good example of the canonical quantization procedure. Classically, a scalar field is a collection of an infinity of oscillator normal modes. It suffices to consider a 1+1-dimensional space-time in which the spatial direction is compactified to a circle of circumference 2π, rendering the momenta discrete.

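The classical Lagrangian density describes an infinity of coupled harmonic oscillators, labelled by $x$, which is now a label (and not the displacement dynamical variable to be quantized), denoted by the classical field $\phi$,

$$\mathcal{L}(\phi) = \tfrac{1}{2}(\partial_t \phi)^2 - \tfrac{1}{2}(\partial_x \phi)^2 - \tfrac{1}{2} m^2\phi^2 - V(\phi),$$

where $V(\phi)$ is a potential term, often taken to be a polynomial or monomial of degree 3 or higher. The action functional is

$$S(\phi) = \int \mathcal{L}(\phi)\, dx\, dt = \int L(\phi, \partial_t\phi)\, dt.$$

The canonical momentum obtained via the Legendre transformation using the Lagrangian $L$ is $\pi = \partial_t\phi$, and the classical Hamiltonian is found to be

$$H(\phi,\pi) = \int dx \left[\frac{1}{2} \pi^2 + \frac{1}{2} (\partial_x \phi)^2 + \frac{1}{2} m^2 \phi^2 + V(\phi)\right].$$

Canonical quantization treats the variables $\phi$ and $\pi$ as operators with canonical commutation relations at time $t = 0$, given by

$$[\phi(x),\phi(y)] = 0, \quad [\pi(x), \pi(y)] = 0, \quad [\phi(x),\pi(y)] = i\hbar\, \delta(x-y).$$

Operators constructed from $\phi$ and $\pi$ can then formally be defined at other times via the time-evolution generated by the Hamiltonian,

$$\mathcal{O}(t) = e^{itH/\hbar}\, \mathcal{O}\, e^{-itH/\hbar}.$$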

However, since $\phi$ and $\pi$ no longer commute, this expression is ambiguous at the quantum level. The problem is to construct a representation of the relevant operators on a Hilbert space $\mathcal{H}$ and to construct a positive operator $H$ as a quantum operator on this Hilbert space in such a way that it gives this evolution for the operators as given by the preceding equation, and to show that $\mathcal{H}$ contains a vacuum state $|0\rangle$ on which $H$ has zero eigenvalue. In practice, this construction is a difficult problem for interacting field theories, and has been solved completely only in a few simple cases via the methods of constructive quantum field theory. Many of these issues can be sidestepped using the Feynman integral as described for a particular $V(\phi)$ in the article on scalar field theory.

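In the case of a free field, with $V(\phi) = 0$, the quantization procedure is relatively straightforward. It is convenient to Fourier transform the fields, so that (with the normalization chosen so that the mode relations carry no factors of $2\pi$)

$$\phi_k = \frac{1}{\sqrt{2\pi}}\int_0^{2\pi} \phi(x)\, e^{-ikx}\, dx, \qquad \pi_k = \frac{1}{\sqrt{2\pi}}\int_0^{2\pi} \pi(x)\, e^{-ikx}\, dx.$$

The reality of the fields implies that

$$\phi_{-k} = \phi_k^\dagger, \qquad \pi_{-k} = \pi_k^\dagger.$$

The classical Hamiltonian may be expanded in Fourier modes as

$$H=\frac{1}{2}\sum_{k=-\infty}^{\infty}\left[\pi_k \pi_k^\dagger + \omega_k^2\phi_k\phi_k^\dagger\right],$$

where $\omega_k = \sqrt{k^2+m^2}$.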

This Hamiltonian is thus recognizable as an infinite sum of classical normal mode oscillator excitations $\phi_k$, each one of which is quantized in the standard manner, so the free quantum Hamiltonian looks identical. It is the $\phi_k$ that have become operators obeying the standard commutation relations, $[\phi_k, \pi_k^\dagger] = [\phi_k^\dagger, \pi_k] = i\hbar$, with all others vanishing. The collective Hilbert space of all these oscillators is thus constructed using creation and annihilation operators constructed from these modes,

$$a_k = \frac{1}{\sqrt{2\hbar\omega_k}}\left(\omega_k\phi_k + i\pi_k\right), \qquad a_k^\dagger = \frac{1}{\sqrt{2\hbar\omega_k}}\left(\omega_k\phi_k^\dagger - i\pi_k^\dagger\right),$$

for which $[a_k, a_k^\dagger] = 1$ for all $k$, with all other commutators vanishing.

The vacuum $|0\rangle$ is taken to be annihilated by all of the $a_k$, and $\mathcal{H}$ is the Hilbert space constructed by applying any combination of the infinite collection of creation operators $a_k^\dagger$ to $|0\rangle$. This Hilbert space is called Fock space. For each $k$, this construction is identical to a quantum harmonic oscillator. The quantum field is an infinite array of quantum oscillators. The quantum Hamiltonian then amounts to

$$H = \sum_{k=-\infty}^{\infty} \hbar\omega_k\, a_k^\dagger a_k = \sum_{k=-\infty}^{\infty} \hbar\omega_k\, N_k,$$

where $N_k$ may be interpreted as the number operator giving the number of particles in a state with momentum $k$.

This Hamiltonian differs from the previous expression by the subtraction of the zero-point energy $\hbar\omega_k/2$ of each harmonic oscillator. This satisfies the condition that $H$ must annihilate the vacuum, without affecting the time-evolution of operators via the above exponentiation operation. This subtraction of the zero-point energy may be considered to be a resolution of the quantum operator ordering ambiguity, since it is equivalent to requiring that all creation operators appear to the left of annihilation operators in the expansion of the Hamiltonian. This procedure is known as Wick ordering or normal ordering.
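The effect of the subtraction can be seen in a small numerical sketch. It assumes units with ħ = 1, integer momenta k on the circle, the free dispersion relation ω_k = √(k² + m²), and a made-up value for the mass; the function names are illustrative only.

```python
import math

HBAR = 1.0   # assumption: units with hbar = 1
MASS = 0.5   # hypothetical value of the mass parameter m

def omega(k, m=MASS):
    """Mode frequency for integer momentum k: omega_k = sqrt(k^2 + m^2)."""
    return math.sqrt(k * k + m * m)

def normal_ordered_energy(occupation):
    """Energy of a Fock state under H = sum_k hbar * omega_k * N_k.

    occupation: dict {k: N_k} listing only the occupied modes.
    """
    return sum(HBAR * omega(k) * n for k, n in occupation.items())

def zero_point_energy(k_max):
    """Sum of hbar * omega_k / 2 over modes with |k| <= k_max."""
    return sum(0.5 * HBAR * omega(k) for k in range(-k_max, k_max + 1))

# The vacuum has zero energy after normal ordering.
print(normal_ordered_energy({}))

# Two quanta with k = 1 and one quantum with k = -3.
print(normal_ordered_energy({1: 2, -3: 1}))

# The subtracted zero-point sum keeps growing as more modes are included.
for k_max in (10, 100, 1000):
    print(k_max, zero_point_energy(k_max))
```

The growth of the last sum with the cutoff shows why the unsubtracted mode sum cannot assign a finite energy to the vacuum, while the normal-ordered Hamiltonian assigns it zero.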

Other fields

All other fields can be quantized by a generalization of this procedure. Vector or tensor fields simply have more components, and independent creation and destruction operators must be introduced for each independent component. If a field has any internal symmetry, then creation and destruction operators must be introduced for each component of the field related to this symmetry as well. If there is a gauge symmetry, then the number of independent components of the field must be carefully analyzed to avoid over-counting equivalent configurations, and gauge-fixing may be applied if needed.

It turns out that commutation relations are useful only for quantizing bosons, for which the occupancy number of any state is unlimited. To quantize fermions, which satisfy the Pauli exclusion principle, anti-commutators are needed. These are defined by {A,B} = AB+BA.

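When quantizing fermions, the fields are expanded in creation and annihilation operators, $\theta_k^\dagger$, $\theta_k$, which satisfy

$$\{\theta_k,\theta_l^\dagger\} = \delta_{kl}, \quad \{\theta_k,\theta_l\} = 0, \quad \{\theta_k^\dagger,\theta_l^\dagger\} = 0.$$

The states are constructed on a vacuum $|0\rangle$ annihilated by the $\theta_k$, and the Fock space is built by applying all products of creation operators $\theta_k^\dagger$ to $|0\rangle$. Pauli's exclusion principle is satisfied, because $(\theta_k^\dagger)^2|0\rangle = 0$, by virtue of the anti-commutation relations.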
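For a single fermionic mode, the anti-commutation relations can be realized with 2×2 matrices. The sketch below assumes a basis ordered as (empty, occupied) and uses plain nested lists; it only illustrates the one-mode case, since several modes need additional sign factors that are not shown here.

```python
def matmul(A, B):
    """Product of two 2x2 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def anticommutator(A, B):
    """{A, B} = AB + BA."""
    AB, BA = matmul(A, B), matmul(B, A)
    return [[AB[i][j] + BA[i][j] for j in range(2)] for i in range(2)]

# Basis ordering (assumption): index 0 is the empty state, index 1 the occupied one.
theta = [[0, 1],
         [0, 0]]        # annihilation: occupied -> empty, empty -> 0
theta_dag = [[0, 0],
             [1, 0]]    # creation: empty -> occupied, occupied -> 0

print(anticommutator(theta, theta_dag))   # identity matrix
print(anticommutator(theta, theta))       # zero matrix
print(matmul(theta_dag, theta_dag))       # zero: the mode cannot be filled twice
print(matmul(theta_dag, theta))           # number operator, eigenvalues 0 and 1
```

The vanishing square of the creation matrix is the matrix form of the exclusion principle: the occupation number of the mode is limited to 0 or 1.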

Condensates

The construction of the scalar field states above assumed that the potential was minimized at $\phi = 0$, so that the vacuum minimizing the Hamiltonian satisfies $\langle\phi\rangle = 0$, indicating that the vacuum expectation value (VEV) of the field is zero. In cases involving spontaneous symmetry breaking, it is possible to have a non-zero VEV, because the potential is minimized for a value $\phi = v$. This occurs, for example, if $V(\phi) = g\phi^4 - 2m^2\phi^2$ with $g > 0$ and $m^2 > 0$, for which the minimum energy is found at $v = \pm m/\sqrt{g}$. The value of $v$ in one of these vacua may be considered as a condensate of the field $\phi$. Canonical quantization then can be carried out for the shifted field $\phi(x,t) - v$, and particle states with respect to the shifted vacuum are defined by quantizing the shifted field. This construction is utilized in the Higgs mechanism in the standard model of particle physics.
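The location of the minimum quoted above follows from a short calculation, written out here as a worked sketch for the quartic potential with $g > 0$ and $m^2 > 0$:

```latex
\begin{aligned}
V(\phi)   &= g\phi^4 - 2m^2\phi^2, \\
V'(\phi)  &= 4g\phi^3 - 4m^2\phi = 4\phi\,(g\phi^2 - m^2) = 0
           \;\Longrightarrow\; \phi = 0 \ \text{or}\ \phi = \pm\frac{m}{\sqrt{g}}, \\
V''(\phi) &= 12g\phi^2 - 4m^2, \qquad
V''(0) = -4m^2 < 0, \qquad
V''\!\left(\pm\tfrac{m}{\sqrt{g}}\right) = 8m^2 > 0, \\
V\!\left(\pm\tfrac{m}{\sqrt{g}}\right) &= \frac{m^4}{g} - \frac{2m^4}{g} = -\frac{m^4}{g}.
\end{aligned}
```

So $\phi = 0$ is a local maximum, and the two degenerate minima sit at $v = \pm m/\sqrt{g}$ with energy density $-m^4/g$ relative to $\phi = 0$.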

Mathematical quantization

Deformation quantization

The classical theory is described using a spacelike foliation of spacetime with the state at each slice being described by an element of a symplectic manifold with the time evolution given by the symplectomorphism generated by a Hamiltonian function over the symplectic manifold. The quantum algebra of "operators" is an ħ-deformation of the algebra of smooth functions over the symplectic space such that the leading term in the Taylor expansion over ħ of the commutator [A, B] expressed in the phase space formulation is iħ{A, B} . (Here, the curly braces denote the Poisson bracket. The subleading terms are all encoded in the Moyal bracket, the suitable quantum deformation of the Poisson bracket.) In general, for the quantities (observables) involved, and providing the arguments of such brackets, ħ-deformations are highly nonunique—quantization is an "art", and is specified by the physical context. (Two different quantum systems may represent two different, inequivalent, deformations of the same classical limit, ħ → 0.)

Now, one looks for unitary representations of this quantum algebra. With respect to such a unitary representation, a symplectomorphism in the classical theory would now deform to a (metaplectic) unitary transformation. In particular, the time evolution symplectomorphism generated by the classical Hamiltonian deforms to a unitary transformation generated by the corresponding quantum Hamiltonian.

A further generalization is to consider a Poisson manifold instead of a symplectic space for the classical theory and perform an ħ-deformation of the corresponding Poisson algebra or even Poisson supermanifolds.

Geometric quantization

In contrast to the theory of deformation quantization described above, geometric quantization seeks to construct an actual Hilbert space and operators on it. Starting with a symplectic manifold $M$, one first constructs a prequantum Hilbert space consisting of the space of square-integrable sections of an appropriate line bundle over $M$. On this space, one can map all classical observables to operators on the prequantum Hilbert space, with the commutator corresponding exactly to the Poisson bracket. The prequantum Hilbert space, however, is clearly too big to describe the quantization of $M$.

One then proceeds by choosing a polarization, that is (roughly), a choice of $n$ variables on the $2n$-dimensional phase space. The quantum Hilbert space is then the space of sections that depend only on the $n$ chosen variables, in the sense that they are covariantly constant in the other $n$ directions. If the chosen variables are real, we get something like the traditional Schrödinger Hilbert space. If the chosen variables are complex, we get something like the Segal–Bargmann space.
