| ARPANET | |

|---|---|

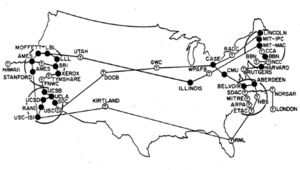

ARPANET logical map, March 1977 | |

| Type | Data |

| Location | United States, United Kingdom, Norway |

| Protocols | 1822 protocol, NCP, TCP/IP |

| Operator | From 1975, Defense Communications Agency |

| Established | 1969 |

| Closed | 1990 |

| Commercial? | No |

| Funding | From 1966, Advanced Research Projects Agency (ARPA) |

The Advanced Research Projects Agency Network (ARPANET) was the first wide-area packet-switched network with distributed control and one of the first networks to implement the TCP/IP protocol suite. Both technologies became the technical foundation of the Internet. The ARPANET was established by the Advanced Research Projects Agency (ARPA) of the United States Department of Defense.

Building on the ideas of J. C. R. Licklider, Bob Taylor initiated the ARPANET project in 1966 to enable access to remote computers. Taylor appointed Larry Roberts as program manager. Roberts made the key decisions about the network design. He incorporated Donald Davies’ concepts and designs for packet switching, and sought input from Paul Baran. ARPA awarded the contract to build the network to Bolt Beranek & Newman (BBN), which developed the first protocol for the network. Roberts engaged Leonard Kleinrock at UCLA to develop mathematical methods for analyzing the packet network technology.

The first computers were connected in 1969 and the Network Control Program was implemented in 1970. The network was declared operational in 1971. Further software development enabled remote login, file transfer and email. The network expanded rapidly and operational control passed to the Defense Communications Agency in 1975.

Internetworking research in the early 1970s, led by Bob Kahn at DARPA and Vint Cerf, first at Stanford University and later at DARPA, formulated the Transmission Control Program, which incorporated concepts from the French CYCLADES project. As this work progressed, a protocol was developed by which multiple separate networks could be joined into a network of networks. Version 4 of TCP/IP was installed in the ARPANET for production use in January 1983, after the Department of Defense made it standard for all military computer networking.

Access to the ARPANET was expanded in 1981, when the National Science Foundation (NSF) funded the Computer Science Network (CSNET). In the early 1980s, the NSF funded the establishment of national supercomputing centers at several universities, and provided network access and network interconnectivity with the NSFNET project in 1986. The ARPANET was formally decommissioned in 1990, after partnerships with the telecommunication and computer industry had assured private sector expansion and future commercialization of an expanded world-wide network, known as the Internet.

History

Inspiration

Historically, voice and data communications were based on methods of circuit switching, as exemplified in the traditional telephone network, wherein each telephone call is allocated a dedicated, end-to-end electronic connection between the two communicating stations. The connection is established by switching systems that connect multiple intermediate call legs between these systems for the duration of the call.

The traditional model of the circuit-switched telecommunication network was challenged in the early 1960s by Paul Baran at the RAND Corporation, who had been researching systems that could sustain operation during partial destruction, such as by nuclear war. He developed the theoretical model of distributed adaptive message block switching. However, the telecommunication establishment rejected the development in favor of existing models. Donald Davies at the United Kingdom's National Physical Laboratory (NPL) independently arrived at a similar concept in 1965.

The earliest ideas for a computer network intended to allow general communications among computer users were formulated by computer scientist J. C. R. Licklider of Bolt Beranek and Newman (BBN), in April 1963, in memoranda discussing the concept of the "Intergalactic Computer Network". Those ideas encompassed many of the features of the contemporary Internet. In October 1963, Licklider was appointed head of the Behavioral Sciences and Command and Control programs at the Defense Department's Advanced Research Projects Agency (ARPA). He convinced Ivan Sutherland and Bob Taylor that this network concept was very important and merited development, although Licklider left ARPA before any contracts were assigned for development.

Sutherland and Taylor continued their interest in creating the network, in part, to allow ARPA-sponsored researchers at various corporate and academic locales to utilize computers provided by ARPA, and, in part, to quickly distribute new software and other computer science results. Taylor had three computer terminals in his office, each connected to separate computers, which ARPA was funding: one for the System Development Corporation (SDC) Q-32 in Santa Monica, one for Project Genie at the University of California, Berkeley, and another for Multics at the Massachusetts Institute of Technology. Taylor recalled the circumstance: "For each of these three terminals, I had three different sets of user commands. So, if I was talking online with someone at S.D.C., and I wanted to talk to someone I knew at Berkeley, or M.I.T., about this, I had to get up from the S.D.C. terminal, go over and log into the other terminal and get in touch with them. I said, 'Oh Man!', it's obvious what to do: If you have these three terminals, there ought to be one terminal that goes anywhere you want to go. That idea is the ARPANET."

Donald Davies' work caught the attention of ARPANET developers at the Symposium on Operating Systems Principles in October 1967. He gave the first public presentation, having coined the term packet switching, in August 1968 and incorporated it into the NPL network in England. The NPL network and ARPANET were the first two networks in the world to use packet switching, and were themselves interconnected in 1973. Roberts said the ARPANET and other packet switching networks built in the 1970s were similar "in nearly all respects" to Davies' original 1965 design.

Creation

In February 1966, Bob Taylor successfully lobbied ARPA's Director Charles M. Herzfeld to fund a network project. Herzfeld redirected funds in the amount of one million dollars from a ballistic missile defense program to Taylor's budget. Taylor hired Larry Roberts as a program manager in the ARPA Information Processing Techniques Office in January 1967 to work on the ARPANET.

Roberts asked Frank Westervelt to explore the initial design questions for a network. In April 1967, ARPA held a design session on technical standards. The initial standards for identification and authentication of users, transmission of characters, and error checking and retransmission procedures were discussed. Roberts' proposal was that all mainframe computers would connect to one another directly. The other investigators were reluctant to dedicate these computing resources to network administration. Wesley Clark proposed that minicomputers be used as an interface to create a message switching network. Roberts modified the ARPANET plan to incorporate Clark's suggestion and named the minicomputers Interface Message Processors (IMPs).

The plan was presented at the inaugural Symposium on Operating Systems Principles in October 1967. Donald Davies' work on packet switching and the NPL network, presented by a colleague (Roger Scantlebury), came to the attention of the ARPA investigators at this conference. Roberts applied Davies' concept of packet switching to the ARPANET and sought input from Paul Baran. The NPL network was using line speeds of 768 kbit/s, and the proposed line speed for the ARPANET was upgraded from 2.4 kbit/s to 50 kbit/s.

By mid-1968, Roberts and Barry Wessler had written a final version of the Interface Message Processor (IMP) specification, based on a report that ARPA had commissioned from the Stanford Research Institute (SRI) to specify the ARPANET communications network in detail. Roberts gave a report to Taylor on 3 June, and Taylor approved it on 21 June. After approval by ARPA, a Request for Quotation (RFQ) was issued to 140 potential bidders. Most computer science companies regarded the ARPA proposal as outlandish, and only twelve submitted bids to build a network; of the twelve, ARPA regarded only four as top-rank contractors. At year's end, ARPA considered only two contractors, and it awarded the contract to build the network to Bolt, Beranek and Newman Inc. (BBN) in January 1969.

The initial seven-person BBN team was much aided by the technical specificity of its response to the ARPA RFQ, and thus quickly produced the first working system. The team was led by Frank Heart and included Robert Kahn and Dave Walden. The BBN-proposed network closely followed Roberts' ARPA plan: a network composed of small computers called Interface Message Processors (IMPs), similar to the later concept of routers, that functioned as gateways interconnecting local resources. At each site, the IMPs performed store-and-forward packet switching functions and were interconnected with leased lines via telecommunication data sets (modems), with initial data rates of 50 kbit/s. The host computers were connected to the IMPs via custom serial communication interfaces. The system, including the hardware and the packet switching software, was designed and installed in nine months. The BBN team continued to interact with the NPL team, with meetings between them taking place in the U.S. and the U.K.

The first-generation IMPs were built by BBN using a ruggedized version of the Honeywell DDP-516 computer, configured with 24 KB of expandable magnetic-core memory and a 16-channel Direct Multiplex Control (DMC) direct memory access unit. The DMC established custom interfaces with each of the host computers and modems. In addition to the front-panel lamps, the DDP-516 also featured a special set of 24 indicator lamps showing the status of the IMP communication channels. Each IMP could support up to four local hosts and could communicate with up to six remote IMPs via early Digital Signal 0 leased telephone lines. The initial network connected one computer in Utah with three in California. Later, the Department of Defense allowed the universities to join the network for sharing hardware and software resources.

Debate on design goals

According to Charles Herzfeld, ARPA Director (1965–1967):

The ARPANET was not started to create a Command and Control System that would survive a nuclear attack, as many now claim. To build such a system was, clearly, a major military need, but it was not ARPA's mission to do this; in fact, we would have been severely criticized had we tried. Rather, the ARPANET came out of our frustration that there were only a limited number of large, powerful research computers in the country, and that many research investigators, who should have access to them, were geographically separated from them.

Nonetheless, according to Stephen J. Lukasik, who as deputy director (1967–1970) and Director of DARPA (1970–1975) was "the person who signed most of the checks for Arpanet's development":

The goal was to exploit new computer technologies to meet the needs of military command and control against nuclear threats, achieve survivable control of US nuclear forces, and improve military tactical and management decision making.

The ARPANET incorporated distributed computation, and frequent recomputation, of routing tables. This increased the survivability of the network in the face of significant interruption. Automatic routing was technically challenging at the time. The ARPANET was designed to survive subordinate-network losses; the principal reason, however, was that the switching nodes and network links were unreliable, even without any nuclear attack.
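Distributed recomputation of routing tables can be illustrated with a distance-vector scheme. The original IMP routing is generally described as a distributed, Bellman-Ford-style algorithm in which each node builds its table only from its neighbors' tables. The sketch below simulates that idea centrally under stated assumptions; it is not BBN's code. The node names, link costs and the `distance_vector` helper are hypothetical, and each run recomputes tables from scratch, which sidesteps the count-to-infinity problem that a truly distributed implementation has to handle.

```python
import math

# Hypothetical topology: node names and link costs are illustrative only.
LINKS = {
    ("UCLA", "SRI"): 1,
    ("UCLA", "UCSB"): 1,
    ("SRI", "UCSB"): 1,
    ("SRI", "UTAH"): 1,
    ("UCSB", "UTAH"): 3,
}


def adjacency(links):
    """Turn an undirected {(a, b): cost} map into {node: {neighbor: cost}}."""
    adj = {}
    for (a, b), cost in links.items():
        adj.setdefault(a, {})[b] = cost
        adj.setdefault(b, {})[a] = cost
    return adj


def distance_vector(links):
    """Synchronous Bellman-Ford rounds: every node rebuilds its table using only
    its neighbors' previous tables. Returns {node: {dest: (cost, next_hop)}}."""
    adj = adjacency(links)
    table = {node: {node: (0, node)} for node in adj}
    for _ in range(len(adj)):  # V - 1 rounds suffice; one extra detects stability
        new_table = {}
        for node, nbrs in adj.items():
            best = {node: (0, node)}
            for nbr, link_cost in nbrs.items():
                for dest, (cost, _) in table[nbr].items():
                    candidate = link_cost + cost
                    if candidate < best.get(dest, (math.inf, None))[0]:
                        best[dest] = (candidate, nbr)
            new_table[node] = best
        if new_table == table:
            break
        table = new_table
    return table


routes = distance_vector(LINKS)
print(routes["UCLA"]["UTAH"])  # (2, 'SRI')

# Simulate a failed SRI-UTAH line and recompute: traffic shifts to UCSB.
degraded = {k: v for k, v in LINKS.items() if k != ("SRI", "UTAH")}
print(distance_vector(degraded)["UCLA"]["UTAH"])  # (4, 'UCSB')
```

The failure case shows the survivability property in miniature: no operator intervenes, yet after recomputation UCLA routes to Utah through UCSB instead of the lost line.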

The Internet Society agrees with Herzfeld in a footnote in their online article, A Brief History of the Internet:

It was from the RAND study that the false rumor started, claiming that the ARPANET was somehow related to building a network resistant to nuclear war. This was never true of the ARPANET, but was an aspect of the earlier RAND study of secure communication. The later work on internetworking did emphasize robustness and survivability, including the capability to withstand losses of large portions of the underlying networks.

Paul Baran, the first to put forward a theoretical model for communication using packet switching, conducted the RAND study referenced above. Though the ARPANET did not exactly share the goal of Baran's project, he said his work did contribute to the development of the ARPANET. Minutes taken by Elmer Shapiro of Stanford Research Institute at the ARPANET design meeting of 9–10 October 1967 indicate that a version of Baran's routing method ("hot potato") might be used, consistent with the NPL team's proposal at the Symposium on Operating Systems Principles in Gatlinburg.

Implementation

The first four nodes were designated as a testbed for developing and debugging the 1822 protocol, which was a major undertaking. Although they were connected electronically in 1969, network applications were not possible until the Network Control Program was implemented in 1970, enabling the first two host-to-host protocols, remote login (Telnet) and file transfer (FTP), which were specified and implemented between 1969 and 1973. The network was declared operational in 1971. Network traffic began to grow once email was established at the majority of sites by around 1973.

Initial four hosts

The first four IMPs were:

- University of California, Los Angeles (UCLA), where Leonard Kleinrock had established a Network Measurement Center, with an SDS Sigma 7 being the first computer attached to it;

- The Augmentation Research Center at Stanford Research Institute (now SRI International), where Douglas Engelbart had created the new NLS system, an early hypertext system, and would run the Network Information Center (NIC), with the SDS 940 that ran NLS, named "Genie", being the first host attached;

- University of California, Santa Barbara (UCSB), with the Culler-Fried Interactive Mathematics Center's IBM 360/75, running OS/MVT, being the machine attached;

- The University of Utah School of Computing, where Ivan Sutherland had moved, with a DEC PDP-10 running TENEX.

The first successful host-to-host connection on the ARPANET was made between Stanford Research Institute (SRI) and UCLA, by SRI programmer Bill Duvall and UCLA student programmer Charley Kline, at 10:30 pm PST on 29 October 1969 (6:30 UTC on 30 October 1969). Kline connected from UCLA's SDS Sigma 7 host computer (in Boelter Hall room 3420) to the Stanford Research Institute's SDS 940 host computer. Kline typed the command "login", but the SDS 940 crashed after he had typed two characters. About an hour later, after Duvall adjusted parameters on the machine, Kline tried again and successfully logged in. Hence, the first two characters successfully transmitted over the ARPANET were "lo". The first permanent ARPANET link was established on 21 November 1969, between the IMP at UCLA and the IMP at the Stanford Research Institute. By 5 December 1969, the initial four-node network was established.

Elizabeth Feinler created the first Resource Handbook for the ARPANET in 1969, which led to the development of the ARPANET directory. The directory, built by Feinler and a team, made it possible to navigate the ARPANET.

Growth and evolution

Roberts engaged Howard Frank to consult on the topological design of the network. Frank made recommendations to increase throughput and reduce costs in a scaled-up network. By March 1970, the ARPANET reached the East Coast of the United States, when an IMP at BBN in Cambridge, Massachusetts was connected to the network. Thereafter, the ARPANET grew: 9 IMPs by June 1970 and 13 IMPs by December 1970, then 18 by September 1971 (when the network included 23 university and government hosts); 29 IMPs by August 1972, and 40 by September 1973. By June 1974, there were 46 IMPs, and in July 1975, the network numbered 57 IMPs. By 1981, the number was 213 host computers, with another host connecting approximately every twenty days.

Support for inter-IMP circuits of up to 230.4 kbit/s was added in 1970, although considerations of cost and IMP processing power meant this capability was not actively used.

Larry Roberts saw the ARPANET and NPL projects as complementary and sought in 1970 to connect them via a satellite link. Peter Kirstein's research group at University College London (UCL) was subsequently chosen in 1971 in place of NPL for the UK connection. In June 1973, a transatlantic satellite link connected ARPANET to the Norwegian Seismic Array (NORSAR), via the Tanum Earth Station in Sweden, and onward via a terrestrial circuit to a TIP at UCL. UCL provided a gateway for an interconnection with the NPL network, the first interconnected network, and subsequently the SRCnet, the forerunner of UK's JANET network.

1971 saw the start of the use of the non-ruggedized (and therefore significantly lighter) Honeywell 316 as an IMP. It could also be configured as a Terminal Interface Processor (TIP), which provided terminal server support for up to 63 ASCII serial terminals through a multi-line controller in place of one of the hosts. The 316 featured a greater degree of integration than the 516, which made it less expensive and easier to maintain. The 316 was configured with 40 KB of core memory for a TIP. In 1973, core memory was increased to 32 KB for IMPs and 56 KB for TIPs.

In 1975, BBN introduced IMP software running on the Pluribus multiprocessor; these systems appeared at a few sites. In 1981, BBN introduced IMP software running on its own C/30 processor product.

Network performance

In 1968, Roberts contracted with Kleinrock to measure the performance of the network and find areas for improvement. Building on his earlier work on queueing theory, Kleinrock specified mathematical models of the performance of packet-switched networks, which underpinned the development of the ARPANET as it expanded rapidly in the early 1970s.
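A widely cited result from this line of work is Kleinrock's mean network delay under his "independence assumption", which treats every link as an independent M/M/1 queue: $T = \sum_i \frac{\lambda_i}{\gamma} \cdot \frac{1}{\mu C_i - \lambda_i}$, where $\lambda_i$ is the packet rate on link $i$, $C_i$ its capacity in bit/s, $1/\mu$ the mean packet length in bits, and $\gamma$ the total external traffic entering the network. The sketch below evaluates that formula; the 50 kbit/s line speed comes from the text, while the 1000-bit mean packet length, the link loads and the helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Link:
    capacity_bps: float  # C_i, line speed in bits per second
    load_pps: float      # lambda_i, packets per second carried on this link


def link_delay(link: Link, mean_packet_bits: float) -> float:
    """Mean queueing-plus-transmission time of an M/M/1 link: 1 / (mu*C_i - lambda_i)."""
    service_pps = link.capacity_bps / mean_packet_bits  # mu * C_i
    if link.load_pps >= service_pps:
        raise ValueError("link is saturated: utilization must be below 1")
    return 1.0 / (service_pps - link.load_pps)


def mean_network_delay(links, external_pps: float, mean_packet_bits: float) -> float:
    """T = sum_i (lambda_i / gamma) * T_i, where gamma is the total external traffic."""
    return sum(
        (link.load_pps / external_pps) * link_delay(link, mean_packet_bits)
        for link in links
    )


# Two 50 kbit/s lines; packet size and loads are hypothetical.
lines = [Link(50_000, 25), Link(50_000, 40)]
print(round(mean_network_delay(lines, external_pps=50, mean_packet_bits=1000), 3))  # 0.1
```

With these numbers each line can serve 50 packets/s, so the second line runs at 80% utilization and contributes most of the delay. The model deliberately ignores propagation and nodal processing delays; a fuller model would add them.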

Operation

The ARPANET was a research project that was communications-oriented, rather than user-oriented, in design. Nonetheless, in the summer of 1975, the ARPANET was declared "operational". The Defense Communications Agency took control, since ARPA's mission was to fund advanced research rather than to run an operational network. At about this time, the first ARPANET encryption devices were deployed to support classified traffic.

The transatlantic connectivity with NORSAR and UCL later evolved into the SATNET. The ARPANET, SATNET and PRNET were interconnected in 1977.

The ARPANET Completion Report, published in 1981 jointly by BBN and ARPA, concludes that:

... it is somewhat fitting to end on the note that the ARPANET program has had a strong and direct feedback into the support and strength of computer science, from which the network, itself, sprang.

CSNET, expansion

Access to the ARPANET was expanded in 1981, when the National Science Foundation (NSF) funded the Computer Science Network (CSNET).

Adoption of TCP/IP

The DoD made TCP/IP standard for all military computer networking in 1980. NORSAR and University College London left the ARPANET and began using TCP/IP over SATNET in early 1982.

On 1 January 1983, known as flag day, TCP/IP protocols became the standard for the ARPANET, replacing the earlier Network Control Program.

MILNET, phasing out

In September 1984, work was completed on restructuring the ARPANET, giving U.S. military sites their own Military Network (MILNET) for unclassified defense department communications. Both networks carried unclassified information, and were connected at a small number of controlled gateways which would allow total separation in the event of an emergency. MILNET was part of the Defense Data Network (DDN).

Separating the civil and military networks reduced the 113-node ARPANET by 68 nodes. After MILNET was split away, the ARPANET continued to be used as an Internet backbone for researchers but was slowly phased out.

Decommissioning

In 1985, the National Science Foundation (NSF) funded the establishment of national supercomputing centers at several universities, and provided network access and network interconnectivity with the NSFNET project in 1986. NSFNET became the Internet backbone for government agencies and universities.

The ARPANET project was formally decommissioned in 1990. The original IMPs and TIPs were phased out as the ARPANET was shut down after the introduction of NSFNET, but some IMPs remained in service as late as July 1990.

In the wake of the decommissioning of the ARPANET on 28 February 1990, Vinton Cerf wrote the following lamentation, entitled "Requiem of the ARPANET":

It was the first, and being first, was best,

but now we lay it down to ever rest.

Now pause with me a moment, shed some tears.

For auld lang syne, for love, for years and years

of faithful service, duty done, I weep.

Lay down thy packet, now, O friend, and sleep.

Legacy

The ARPANET was related to many other research projects, which either influenced the ARPANET design, or which were ancillary projects or spun out of the ARPANET.

Senator Al Gore authored the High Performance Computing and Communication Act of 1991, commonly referred to as "The Gore Bill", after hearing the 1988 concept for a National Research Network submitted to Congress by a group chaired by Leonard Kleinrock. The bill was passed on 9 December 1991 and led to the National Information Infrastructure (NII), which Gore called the "information superhighway".

Inter-networking protocols developed by ARPA and implemented on the ARPANET paved the way for future commercialization of a new world-wide network, known as the Internet.

The ARPANET project was honored with two IEEE Milestones, both dedicated in 2009.

Software and protocols

IMP functionality

Because it was never a goal for the ARPANET to support IMPs from vendors other than BBN, the IMP-to-IMP protocol and message format were not standardized. However, the IMPs did nonetheless communicate amongst themselves to perform link-state routing, to do reliable forwarding of messages, and to provide remote monitoring and management functions to ARPANET's Network Control Center.
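In a link-state scheme, each node floods the state of its own links to the others, so every node holds the same map of the network and computes its routes locally with a shortest-path-first algorithm such as Dijkstra's. Because the IMP-to-IMP format was never standardized, the sketch below shows only that local computation step, as a generic illustration rather than BBN's implementation; the `LINK_STATE` map and the `routing_table` helper are hypothetical.

```python
import heapq
import math

# Hypothetical link-state database: what every node would hold after flooding.
LINK_STATE = {
    "A": {"B": 1, "C": 4},
    "B": {"A": 1, "C": 1, "D": 5},
    "C": {"A": 4, "B": 1, "D": 1},
    "D": {"B": 5, "C": 1},
}


def routing_table(graph, source):
    """Dijkstra's shortest-path-first computation from `source`.
    Returns {dest: (cost, first_hop)}, i.e. the forwarding table."""
    dist = {source: 0}
    first_hop = {source: source}
    heap = [(0, source)]
    done = set()
    while heap:
        cost, node = heapq.heappop(heap)
        if node in done:
            continue  # stale heap entry
        done.add(node)
        for nbr, weight in graph[node].items():
            new_cost = cost + weight
            if new_cost < dist.get(nbr, math.inf):
                dist[nbr] = new_cost
                # Direct neighbors of the source are their own first hop;
                # everything else inherits the first hop of the node it came via.
                first_hop[nbr] = nbr if node == source else first_hop[node]
                heapq.heappush(heap, (new_cost, nbr))
    return {dest: (dist[dest], first_hop[dest]) for dest in done}


print(routing_table(LINK_STATE, "A")["D"])  # (3, 'B')
```

Only the first hop is kept per destination, because that is all a store-and-forward node needs in order to pass a message along.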

Initially, each IMP had a 6-bit identifier and supported up to 4 hosts, which were identified with a 2-bit index. An ARPANET host address, therefore, consisted of both the port index on its IMP and the identifier of the IMP, which was written with either port/IMP notation or as a single byte; for example, the address of MIT-DMG (notable for hosting development of Zork) could be written as either 1/6 or 70. An upgrade in early 1976 extended the host and IMP numbering to 8-bit and 16-bit, respectively.
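The MIT-DMG example implies that the single byte is port × 64 + IMP, with the 2-bit port index in the high bits and the 6-bit IMP number in the low bits (1 × 64 + 6 = 70). The sketch below assumes that layout, which is inferred from the example, and covers only the original pre-1976 format; the function names are hypothetical.

```python
IMP_BITS = 6   # original 6-bit IMP number
PORT_BITS = 2  # original 2-bit host (port) index on that IMP


def encode_host(port: int, imp: int) -> int:
    """Pack port/IMP into the single-byte host number (port in the high 2 bits)."""
    if not (0 <= port < 2**PORT_BITS and 0 <= imp < 2**IMP_BITS):
        raise ValueError("port must fit in 2 bits and IMP in 6 bits")
    return (port << IMP_BITS) | imp


def decode_host(number: int) -> tuple[int, int]:
    """Split a single-byte host number back into (port, IMP)."""
    if not 0 <= number < 2 ** (PORT_BITS + IMP_BITS):
        raise ValueError("host number must fit in one byte")
    return number >> IMP_BITS, number & (2**IMP_BITS - 1)


def port_imp_notation(number: int) -> str:
    """Render a host number in port/IMP notation."""
    port, imp = decode_host(number)
    return f"{port}/{imp}"


print(encode_host(1, 6))       # 70
print(port_imp_notation(70))   # 1/6
```

The same arithmetic shows why the 1976 upgrade was needed: 6 bits cap the network at 64 IMPs, which the growth figures above were approaching.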

In addition to primary routing and forwarding responsibilities, the IMP ran several background programs, titled TTY, DEBUG, PARAMETER-CHANGE, DISCARD, TRACE, and STATISTICS. These were given host numbers in order to be addressed directly and provided functions independently of any connected host. For example, "TTY" allowed an on-site operator to send ARPANET packets manually via the teletype connected directly to the IMP.

1822 protocol

The starting point for host-to-host communication on the ARPANET in 1969 was the 1822 protocol, which defined the transmission of messages to an IMP. The message format was designed to work unambiguously with a broad range of computer architectures. An 1822 message essentially consisted of a message type, a numeric host address, and a data field. To send a data message to another host, the transmitting host formatted a message containing the destination host's address and the data to be sent, and then transmitted it through the 1822 hardware interface. The IMP then delivered the message to its destination address, either by handing it to a locally connected host or by forwarding it to another IMP. When the message was ultimately delivered to the destination host, the receiving IMP transmitted a Ready for Next Message (RFNM) acknowledgement back to the sending host's IMP.
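The message flow just described (type, host address, data, then an RFNM once delivery succeeds) can be modelled as a toy simulation. This is a sketch under loud assumptions, not the 1822 wire format: the `MsgType` labels stand in for the real numeric type codes, the whole IMP subnet is collapsed into one `ToyIMPSubnet` object that delivers instantly, host numbers 1 and 2 are hypothetical, and the rule that a host keeps at most one unacknowledged message per destination is a simplification suggested by the name "Ready for Next Message".

```python
from dataclasses import dataclass, field
from enum import Enum


class MsgType(Enum):
    # Illustrative labels only; the real 1822 numeric type codes are not used here.
    DATA = "regular message"
    RFNM = "ready for next message"


@dataclass
class Message1822:
    msg_type: MsgType
    host: int  # destination when submitted, source when delivered (simplified)
    data: bytes = b""


@dataclass
class Host:
    address: int
    inbox: list = field(default_factory=list)
    awaiting_rfnm: set = field(default_factory=set)


class ToyIMPSubnet:
    """Stand-in for the whole IMP network; delivers each message in one step."""

    def __init__(self):
        self.hosts = {}

    def attach(self, host: Host) -> None:
        self.hosts[host.address] = host

    def submit(self, src: Host, msg: Message1822) -> bool:
        if msg.msg_type is not MsgType.DATA:
            raise ValueError("hosts only submit data messages in this sketch")
        # Simplifying assumption: at most one unacknowledged message per destination.
        if msg.host in src.awaiting_rfnm:
            return False
        src.awaiting_rfnm.add(msg.host)
        # Deliver to the destination host, relabelled with the source address.
        self.hosts[msg.host].inbox.append(Message1822(MsgType.DATA, src.address, msg.data))
        # After delivery, an RFNM travels back toward the sending host.
        src.inbox.append(Message1822(MsgType.RFNM, msg.host))
        return True


def drain(host: Host) -> list:
    """Process a host's inbox: RFNMs clear the wait, data messages are returned."""
    data_messages = []
    for msg in host.inbox:
        if msg.msg_type is MsgType.RFNM:
            host.awaiting_rfnm.discard(msg.host)
        else:
            data_messages.append(msg)
    host.inbox.clear()
    return data_messages


net = ToyIMPSubnet()
sender, receiver = Host(1), Host(2)
net.attach(sender)
net.attach(receiver)
print(net.submit(sender, Message1822(MsgType.DATA, 2, b"LO")))   # True
print(net.submit(sender, Message1822(MsgType.DATA, 2, b"GIN")))  # False: RFNM not processed yet
drain(sender)                                                    # consumes the RFNM
print(net.submit(sender, Message1822(MsgType.DATA, 2, b"GIN")))  # True
print([m.data for m in drain(receiver)])                         # [b'LO', b'GIN']
```

The second `submit` is refused until the sender has processed the RFNM, which illustrates how an end-to-end acknowledgement can double as flow control.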

Network Control Program

Unlike modern Internet datagrams, the ARPANET was designed to reliably transmit 1822 messages and to inform the host computer when it lost a message; today's IP is unreliable, and reliable delivery is left to TCP. Nonetheless, the 1822 protocol proved inadequate for handling multiple connections among different applications residing in a host computer. This problem was addressed with the Network Control Program (NCP), which provided a standard method to establish reliable, flow-controlled, bidirectional communications links among different processes in different host computers. The NCP interface allowed application software to connect across the ARPANET by implementing higher-level communication protocols, an early example of the protocol layering concept later incorporated in the OSI model.

NCP was developed under the leadership of Stephen D. Crocker, then a graduate student at UCLA. Crocker created and led the Network Working Group (NWG), which was made up of a collection of graduate students at universities and research laboratories sponsored by ARPA to carry out the development of the ARPANET and the software for the host computers that supported applications. Application protocols such as TELNET for remote time-sharing access, the File Transfer Protocol (FTP) and rudimentary electronic mail protocols were developed; they were eventually ported to run over the TCP/IP protocol suite or, in the case of email, replaced by the Simple Mail Transfer Protocol.

TCP/IP

Steve Crocker formed the Network Working Group in 1969 with Vint Cerf, who also joined an International Networking Working Group in 1972. These groups considered how to interconnect packet switching networks with different specifications, that is, internetworking. Stephen J. Lukasik directed DARPA to focus on internetworking research in the early 1970s. Research led by Bob Kahn at DARPA and Vint Cerf, first at Stanford University and later at DARPA, resulted in the formulation of the Transmission Control Program, which incorporated concepts from the French CYCLADES project directed by Louis Pouzin. Its specification was written by Cerf with Yogen Dalal and Carl Sunshine in December 1974 (RFC 675). The following year, testing began through concurrent implementations at Stanford, BBN and University College London. Initially a monolithic design, the software was redesigned as a modular protocol stack in version 3 in 1978. Version 4 was installed in the ARPANET for production use in January 1983, replacing NCP. The complete Internet protocol suite, as outlined by 1989 in RFC 1122 and RFC 1123, together with partnerships with the telecommunication and computer industry, laid the foundation for the adoption of TCP/IP as the core protocol suite of the emerging Internet.

Network applications

NCP provided a standard set of network services that could be shared by several applications running on a single host computer. This led to the evolution of application protocols that operated, more or less, independently of the underlying network service, and permitted independent advances in the underlying protocols.

Telnet development began in 1969 with RFC 15; the protocol was later extended in RFC 855.

The original specification for the File Transfer Protocol was written by Abhay Bhushan and published as RFC 114 on 16 April 1971. By 1973, the File Transfer Protocol (FTP) specification had been defined (RFC 354) and implemented, enabling file transfers over the ARPANET.

In 1971, Ray Tomlinson of BBN sent the first network email (RFC 524, RFC 561). Within a few years, email came to represent a very large part of the overall ARPANET traffic.

The Network Voice Protocol (NVP) specifications were defined in 1977 (RFC 741) and implemented, but because of technical shortcomings, conference calls over the ARPANET never worked well; modern Voice over Internet Protocol (packet voice) was decades away.

Password protection

The Purdy Polynomial hash algorithm was developed for the ARPANET in 1971 to protect passwords, at the request of Larry Roberts, then head of ARPA's Information Processing Techniques Office. It computed a polynomial of degree $2^{24} + 17$ modulo the 64-bit prime $p = 2^{64} - 59$. The algorithm was later used by Digital Equipment Corporation (DEC) to hash passwords in the VMS operating system and is still used for this purpose.
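The idea behind a polynomial password hash is that evaluating $f(x) \bmod p$ is cheap even for a huge degree, while finding an $x$ that yields a given stored value is meant to be expensive. The sketch below illustrates only the evaluation side. The degree and modulus come from the text; everything else is hypothetical: the lower-order terms and coefficients are placeholders rather than Purdy's published constants, and the `password_to_int` encoding is not how VMS maps passwords to integers.

```python
P = 2**64 - 59       # 64-bit prime modulus given in the text
DEGREE = 2**24 + 17  # polynomial degree given in the text

# Hypothetical sparse polynomial: only the leading exponent and the modulus come
# from the description; the lower-order terms and coefficients are placeholders.
TERMS = [
    (DEGREE, 1),
    (3, 0x1234567),
    (2, 0x89ABCDE),
    (1, 0xF0F0F0F),
    (0, 0x2468ACE),
]


def password_to_int(password: str) -> int:
    # Hypothetical encoding: UTF-8 bytes read as a big-endian integer, reduced mod P.
    return int.from_bytes(password.encode("utf-8"), "big") % P


def polynomial_hash(password: str) -> int:
    """Evaluate sum(coeff * x**exponent) mod P at x = encoded password."""
    x = password_to_int(password)
    total = 0
    for exponent, coeff in TERMS:
        # Three-argument pow uses square-and-multiply, so even an exponent of
        # about 16.8 million needs only a few dozen modular multiplications.
        total = (total + coeff * pow(x, exponent, P)) % P
    return total


stored = polynomial_hash("correct horse")
print(polynomial_hash("correct horse") == stored)  # True
```

A login check recomputes the hash from the typed password and compares it with the stored value, so the password itself never needs to be kept on the system.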

Rules and etiquette

Because of its government funding, certain forms of traffic were discouraged or prohibited.

Leonard Kleinrock claims to have committed the first illegal act on the Internet, having sent a request for return of his electric razor after a meeting in England in 1973. At the time, use of the ARPANET for personal reasons was unlawful.

In 1978, against the rules of the network, Gary Thuerk of Digital Equipment Corporation (DEC) sent the first mass email, to approximately 400 potential clients, via the ARPANET. He claims that this resulted in $13 million worth of sales of DEC products, and it highlighted the potential of email marketing.

A 1982 handbook on computing at MIT's AI Lab stated regarding network etiquette:

It is considered illegal to use the ARPANet for anything which is not in direct support of Government business ... personal messages to other ARPANet subscribers (for example, to arrange a get-together or check and say a friendly hello) are generally not considered harmful ... Sending electronic mail over the ARPANet for commercial profit or political purposes is both anti-social and illegal. By sending such messages, you can offend many people, and it is possible to get MIT in serious trouble with the Government agencies which manage the ARPANet.

In popular culture

- Computer Networks: The Heralds of Resource Sharing, a 30-minute documentary film featuring Fernando J. Corbató, J. C. R. Licklider, Lawrence G. Roberts, Robert Kahn, Frank Heart, William R. Sutherland, Richard W. Watson, John R. Pasta, Donald W. Davies, and economist George W. Mitchell.

- "Scenario", an episode of the U.S. television sitcom Benson (season 6, episode 20, dated February 1985), was the first instance of a popular TV show directly referencing the Internet or its progenitors. The show includes a scene in which the ARPANET is accessed.

- Gerald Donald, one of the members of Drexciya, records electronic music under the name "Arpanet". His 2002 album Wireless Internet features commentary on the expansion of the internet via wireless communication, with songs such as "NTT DoCoMo", dedicated to the Japanese mobile communications giant.

- Thomas Pynchon mentions the ARPANET in his 2009 novel Inherent Vice, which is set in Los Angeles in 1970, and in his 2013 novel Bleeding Edge.

- The 1993 television series The X-Files featured the ARPANET in a season 5 episode, titled "Unusual Suspects". John Fitzgerald Byers offers to help Susan Modeski (known as Holly ... "just like the sugar") by hacking into the ARPANET to obtain sensitive information.

- In the spy-drama television series The Americans, a Russian scientist defector offers access to ARPANET to the Russians in a plea to not be repatriated (Season 2 Episode 5 "The Deal"). Episode 7 of Season 2 is named 'ARPANET' and features Russian infiltration to bug the network.

- In the television series Person of Interest, main character Harold Finch hacked the ARPANET in 1980 using a homemade computer during his first efforts to build a prototype of the Machine. This corresponds to a real-life incident in October of that year that temporarily halted ARPANET functions. The ARPANET hack was first discussed in the episode "2πR", where a computer science teacher called it the most famous hack in history and one that was never solved. Finch later mentioned it to Caleb Phipps, a person of interest, and his role was first hinted at when he knew that it had been done by "a kid with a homemade computer", a detail that Phipps, who had researched the hack, had never heard before.

- In the third season of the television series Halt and Catch Fire, the character Joe MacMillan explores the potential commercialization of the ARPANET.