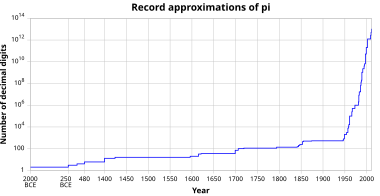

Approximations for the mathematical constant pi (π) in the history of mathematics reached an accuracy within 0.04% of the true value before the beginning of the Common Era. In Chinese mathematics, this was improved to approximations correct to what corresponds to about seven decimal digits by the 5th century.

Further progress was not made until the 15th century (through the efforts of Jamshīd al-Kāshī). Early modern mathematicians reached an accuracy of 35 digits by the beginning of the 17th century (Ludolph van Ceulen), and 126 digits by the 19th century (Jurij Vega), surpassing the accuracy required for any conceivable application outside of pure mathematics.

The record of manual approximation of π is held by William Shanks, who calculated 527 digits correctly in 1853. Since the middle of the 20th century, the approximation of π has been the task of electronic digital computers (for a comprehensive account, see Chronology of computation of π). On June 8, 2022, the current record was established by Emma Haruka Iwao with Alexander Yee's y-cruncher with 100 trillion digits.

Early history

The best known approximations to π dating to before the Common Era were accurate to two decimal places; this was improved upon in Chinese mathematics in particular by the mid-first millennium, to an accuracy of seven decimal places. After this, no further progress was made until the late medieval period.

Some Egyptologists have claimed that the ancient Egyptians used an approximation of π as 22⁄7 = 3.142857 (about 0.04% too high) from as early as the Old Kingdom. This claim has been met with skepticism.

Babylonian mathematics usually approximated π to 3, sufficient for the architectural projects of the time (notably also reflected in the description of Solomon's Temple in the Hebrew Bible). The Babylonians were aware that this was an approximation, and one Old Babylonian mathematical tablet excavated near Susa in 1936 (dated to between the 19th and 17th centuries BCE) gives a better approximation of π as 25⁄8 = 3.125, about 0.528% below the exact value.

At about the same time, the Egyptian Rhind Mathematical Papyrus (dated to the Second Intermediate Period, c. 1600 BCE, although stated to be a copy of an older, Middle Kingdom text) implies an approximation of π as 256⁄81 ≈ 3.16 (accurate to 0.6 percent) by calculating the area of a circle via approximation with the octagon.

Astronomical calculations in the Shatapatha Brahmana (c. 6th century BCE) use a fractional approximation of 339⁄108 ≈ 3.139.

The Mahabharata (500 BCE - 300 CE) offers an approximation of 3, in the ratios offered in Bhishma Parva verses: 6.12.40-45.

...

The Moon is handed down by memory to be eleven thousand yojanas in diameter. Its peripheral circle happens to be thirty three thousand yojanas when calculated.

...

The Sun is eight thousand yojanas and another two thousand yojanas in diameter. From that its peripheral circle comes to be equal to thirty thousand yojanas.

...

— "verses: 6.12.40-45, Bhishma Parva of the Mahabharata"

In the 3rd century BCE, Archimedes proved the sharp inequalities 223⁄71 < π < 22⁄7 by means of regular 96-gons (accuracies of 2·10⁻⁴ and 4·10⁻⁴, respectively).

In the 2nd century CE, Ptolemy used the value 377⁄120, the first known approximation accurate to three decimal places (accuracy 2·10⁻⁵). It is equal to $3;8,30 = 3 + \frac{8}{60} + \frac{30}{60^2}$, which is accurate to two sexagesimal digits.

The Chinese mathematician Liu Hui in 263 CE computed π to between 3.141024 and 3.142708 by inscribing a 96-gon and 192-gon; the average of these two values is 3.141866 (accuracy 9·10⁻⁵). He also suggested that 3.14 was a good enough approximation for practical purposes. He has also frequently been credited with a later and more accurate result, π ≈ 3927⁄1250 = 3.1416 (accuracy 2·10⁻⁶), although some scholars instead believe that this is due to the later (5th-century) Chinese mathematician Zu Chongzhi. Zu Chongzhi is known to have computed π to be between 3.1415926 and 3.1415927, which was correct to seven decimal places. He also gave two other approximations of π: π ≈ 22⁄7 and π ≈ 355⁄113, which are not as accurate as his decimal result. The latter fraction is the best possible rational approximation of π using fewer than five decimal digits in the numerator and denominator. Zu Chongzhi's results surpass the accuracy reached in Hellenistic mathematics, and would remain without improvement for close to a millennium.

In Gupta-era India (6th century), mathematician Aryabhata, in his astronomical treatise Āryabhaṭīya stated:

Add 4 to 100, multiply by 8 and add to 62,000. This is ‘approximately’ the circumference of a circle whose diameter is 20,000.

Since (100 + 4) × 8 + 62,000 = 62,832, this gives π ≈ 62832⁄20000 = 3.1416, correct to four decimal places. Aryabhata stated that his result "approximately" (āsanna, "approaching") gave the circumference of a circle. His 15th-century commentator Nilakantha Somayaji (Kerala school of astronomy and mathematics) argued that the word means not only that this is an approximation, but that the value is incommensurable (irrational).

Middle Ages

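Further progress was not made for nearly a millennium, until the 14th century, when Indian mathematician and astronomer Madhava of Sangamagrama, founder of the Kerala school of astronomy and mathematics, found the Maclaurin series for arctangent, and then two infinite series for π. One of them is now known as the Madhava–Leibniz series, based on $\pi = 4\arctan(1)$:

$$\pi = 4\sum_{k=0}^{\infty}\frac{(-1)^k}{2k+1} = \frac{4}{1} - \frac{4}{3} + \frac{4}{5} - \frac{4}{7} + \cdots$$

The other was based on $\pi = 6\arctan\left(1/\sqrt{3}\right)$:

$$\pi = \sqrt{12}\sum_{k=0}^{\infty}\frac{(-3)^{-k}}{2k+1} = \sqrt{12}\left(1 - \frac{1}{3\cdot 3} + \frac{1}{5\cdot 3^2} - \frac{1}{7\cdot 3^3} + \cdots\right)$$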

He used the first 21 terms to compute an approximation of π correct to 11 decimal places as 3.14159265359.

He also improved the formula based on arctan(1) by including a correction:

$$\frac{\pi}{4} \approx \sum_{k=0}^{n-1}\frac{(-1)^k}{2k+1} + (-1)^n\,\frac{n^2+1}{4n^3+5n}$$

It is not known how he came up with this correction. Using this he found an approximation of π to 13 decimal places of accuracy when n = 75.
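Both of Madhava's results can be checked numerically. The sketch below is our own floating-point illustration, not a reconstruction of his hand computation, and the function names are hypothetical. It sums 21 terms of $\pi = \sqrt{12}\sum_k (-1/3)^k/(2k+1)$, and 75 terms of the arctan(1) series with and without the end correction $(-1)^n (n^2+1)/(4n^3+5n)$.

```python
import math


def madhava_sqrt12(terms: int) -> float:
    """First `terms` terms of pi = sqrt(12) * sum_k (-1/3)**k / (2k + 1)."""
    total = sum((-1.0 / 3.0) ** k / (2 * k + 1) for k in range(terms))
    return math.sqrt(12.0) * total


def leibniz(n: int) -> float:
    """First n terms of pi = 4 * sum_k (-1)**k / (2k + 1)."""
    return 4.0 * sum((-1) ** k / (2 * k + 1) for k in range(n))


def leibniz_corrected(n: int) -> float:
    """Same n terms plus the end correction (-1)**n (n^2 + 1) / (4n^3 + 5n), times 4."""
    correction = (-1) ** n * (n * n + 1) / (4 * n ** 3 + 5 * n)
    return leibniz(n) + 4.0 * correction


for name, value in [
    ("sqrt(12) series, 21 terms", madhava_sqrt12(21)),
    ("arctan(1) series, 75 terms", leibniz(75)),
    ("arctan(1) series, 75 terms + correction", leibniz_corrected(75)),
]:
    print(f"{name:42s} {value:.15f}  error {abs(value - math.pi):.1e}")
```

The uncorrected 75-term sum is still wrong in the second decimal place, which shows how much work the correction term does.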

Jamshīd al-Kāshī (Kāshānī), a Persian astronomer and mathematician, correctly computed the fractional part of 2π to 9 sexagesimal digits in 1424, and translated this into 16 decimal digits after the decimal point:

$$2\pi \approx 6.2831853071795864$$

which gives 16 correct digits for π after the decimal point:

$$\pi \approx 3.1415926535897932$$

He achieved this level of accuracy by calculating the perimeter of a regular polygon with 3 × 2²⁸ sides.

16th to 19th centuries

In the second half of the 16th century, the French mathematician François Viète discovered an infinite product that converged on π known as Viète's formula.

The German-Dutch mathematician Ludolph van Ceulen (circa 1600) computed the first 35 decimal places of π with a 2⁶²-gon. He was so proud of this accomplishment that he had them inscribed on his tombstone.

In Cyclometricus (1621), Willebrord Snellius demonstrated that the perimeter of the inscribed polygon converges on the circumference twice as fast as does the perimeter of the corresponding circumscribed polygon. This was proved by Christiaan Huygens in 1654. Snellius was able to obtain seven digits of π from a 96-sided polygon.

In 1789, the Slovene mathematician Jurij Vega calculated the first 140 decimal places for π, of which the first 126 were correct, and held the world record for 52 years until 1841, when William Rutherford calculated 208 decimal places, of which the first 152 were correct. Vega improved John Machin's formula from 1706 and his method is still mentioned today.

The magnitude of such precision (152 decimal places) can be put into context by the fact that the circumference of the largest known object, the observable universe, can be calculated from its diameter (93 billion light-years) to a precision of less than one Planck length (at 1.6162×10⁻³⁵ meters, the shortest unit of length expected to be directly measurable) using π expressed to just 62 decimal places.
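The 62-digit figure follows from a few lines of arithmetic. This sketch assumes a light-year of 9.4607304725808 × 10¹⁵ m and uses the diameter and Planck length quoted above; the reasoning is that truncating π after k decimal places leaves an error below $10^{-k}$, so the circumference error is below diameter × $10^{-k}$.

```python
import math

LIGHT_YEAR_M = 9.4607304725808e15     # metres per light-year (assumed value)
PLANCK_LENGTH_M = 1.6162e-35          # Planck length quoted in the text
DIAMETER_M = 93e9 * LIGHT_YEAR_M      # diameter of the observable universe

# We need DIAMETER_M * 10**-k < PLANCK_LENGTH_M, i.e. 10**k > DIAMETER_M / PLANCK_LENGTH_M.
ratio = DIAMETER_M / PLANCK_LENGTH_M
decimals_needed = math.floor(math.log10(ratio)) + 1   # smallest k with 10**k > ratio

print(f"diameter / Planck length = {ratio:.2e}")
print(f"decimal places of pi needed: {decimals_needed}")
```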

The English amateur mathematician William Shanks, a man of independent means, calculated π to 530 decimal places in January 1853, of which the first 527 were correct (the last few likely being incorrect due to round-off errors). He subsequently expanded his calculation to 607 decimal places in April 1853, but an error introduced right at the 530th decimal place rendered the rest of his calculation erroneous; due to the nature of Machin's formula, the error propagated back to the 528th decimal place, leaving only the first 527 digits correct once again. Twenty years later, Shanks expanded his calculation to 707 decimal places in April 1873. Because this was an extension of his previous calculation, all of the new digits were incorrect as well. Shanks was said to have calculated new digits all morning and would then spend all afternoon checking his morning's work. This was the longest expansion of π until the advent of the electronic digital computer three-quarters of a century later.

20th and 21st centuries

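In 1910, the Indian mathematician Srinivasa Ramanujan found several rapidly converging infinite series of π, including

$$\frac{1}{\pi} = \frac{2\sqrt{2}}{9801}\sum_{k=0}^{\infty}\frac{(4k)!\,(1103 + 26390k)}{(k!)^4\,396^{4k}}$$

which computes a further eight decimal places of π with each term in the series. His series are now the basis for the fastest algorithms currently used to calculate π. Even using just the first term gives

$$\pi \approx \frac{9801\sqrt{2}}{4412} = 3.14159273001\ldots$$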
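A minimal sketch of summing Ramanujan's series with Python's standard `decimal` module, which here stands in for the dedicated big-number libraries used in record computations. The working precision (requested digits plus 10 guard digits) is our own choice, and `ramanujan_pi` is a hypothetical helper name.

```python
from decimal import Decimal, getcontext
from math import factorial


def ramanujan_pi(terms: int, digits: int = 60) -> Decimal:
    """Approximate pi from the first `terms` terms of
    1/pi = (2*sqrt(2)/9801) * sum_k (4k)! (1103 + 26390k) / ((k!)^4 * 396^(4k))."""
    getcontext().prec = digits + 10              # working precision plus guard digits
    total = Decimal(0)
    for k in range(terms):
        numerator = factorial(4 * k) * (1103 + 26390 * k)
        denominator = factorial(k) ** 4 * 396 ** (4 * k)
        total += Decimal(numerator) / Decimal(denominator)
    inverse_pi = 2 * Decimal(2).sqrt() / 9801 * total
    return 1 / inverse_pi


for n in range(1, 5):
    print(n, ramanujan_pi(n))
```

Each additional term contributes about eight more correct digits, so the printed values agree with π to roughly 7, 15, 23 and 31 decimal places.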

From the mid-20th century onwards, all calculations of π have been done with the help of calculators or computers.

In 1944, D. F. Ferguson, with the aid of a mechanical desk calculator, found that William Shanks had made a mistake in the 528th decimal place, and that all succeeding digits were incorrect.

In the early years of the computer, an expansion of π to 100,000 decimal places was computed by Maryland mathematician Daniel Shanks (no relation to the aforementioned William Shanks) and his team at the United States Naval Research Laboratory in Washington, D.C. In 1961, Shanks and his team used two different power series for calculating the digits of π. For one, it was known that any error would produce a value slightly high, and for the other, it was known that any error would produce a value slightly low. Hence, as long as the two series produced the same digits, there was a very high confidence that they were correct. The first 100,265 digits of π were published in 1962. The authors outlined what would be needed to calculate π to 1 million decimal places and concluded that the task was beyond that day's technology, but would be possible in five to seven years.

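In 1989, the Chudnovsky brothers computed π to over 1 billion decimal places on the supercomputer IBM 3090 using the following variation of Ramanujan's infinite series of π:

$$\frac{1}{\pi} = 12\sum_{k=0}^{\infty}\frac{(-1)^k\,(6k)!\,(13591409 + 545140134k)}{(3k)!\,(k!)^3\,640320^{3k+3/2}}$$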

Records since then have all been accomplished using the Chudnovsky algorithm. In 1999, Yasumasa Kanada and his team at the University of Tokyo computed π to over 200 billion decimal places on the supercomputer HITACHI SR8000/MPP (128 nodes) using another variation of Ramanujan's infinite series of π. In November 2002, Yasumasa Kanada and a team of 9 others used the Hitachi SR8000, a 64-node supercomputer with 1 terabyte of main memory, to calculate π to roughly 1.24 trillion digits in around 600 hours (25 days).

Recent records

- In August 2009, a Japanese supercomputer called the T2K Open Supercomputer more than doubled the previous record by calculating π to roughly 2.6 trillion digits in approximately 73 hours and 36 minutes.

- In December 2009, Fabrice Bellard used a home computer to compute 2.7 trillion decimal digits of π. Calculations were performed in base 2 (binary), then the result was converted to base 10 (decimal). The calculation, conversion, and verification steps took a total of 131 days.

- In August 2010, Shigeru Kondo used Alexander Yee's y-cruncher to calculate 5 trillion digits of π. This was the world record for any type of calculation and, notably, it was performed on a home computer built by Kondo. The calculation was done between 4 May and 3 August, with the primary and secondary verifications taking 64 and 66 hours respectively.

- In October 2011, Shigeru Kondo broke his own record by computing ten trillion (10¹³) and fifty digits using the same method but with better hardware.

- In December 2013, Kondo broke his own record for a second time when he computed 12.1 trillion digits of π.

- In October 2014, Sandon Van Ness, going by the pseudonym "houkouonchi" used y-cruncher to calculate 13.3 trillion digits of π.

- In November 2016, Peter Trueb and his sponsors computed on y-cruncher and fully verified 22.4 trillion digits of π (22,459,157,718,361 digits, i.e. $\lfloor \pi^e \times 10^{12} \rfloor$). The computation took (with three interruptions) 105 days to complete, the limitation of further expansion being primarily storage space.

- In March 2019, Emma Haruka Iwao, an employee at Google, computed 31.4 (approximately 10π) trillion digits of pi using y-cruncher and Google Cloud machines. This took 121 days to complete.

- In January 2020, Timothy Mullican announced the computation of 50 trillion digits over 303 days.

- On August 14, 2021, a team (DAViS) at the University of Applied Sciences of the Grisons announced completion of the computation of π to 62.8 (approximately 20π) trillion digits.

- On June 8, 2022, Emma Haruka Iwao announced on the Google Cloud Blog the computation of 100 trillion (10¹⁴) digits of π over 158 days using Alexander Yee's y-cruncher.

Practical approximations

Depending on the purpose of a calculation, π can be approximated by using fractions for ease of calculation. The most notable such approximations are 22⁄7 (relative error of about 4·10⁻⁴) and 355⁄113 (relative error of about 8·10⁻⁸).
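Both relative errors are easy to confirm with Python's standard `fractions` module. The sketch below (the helper name is ours) also uses `Fraction.limit_denominator`, which returns the closest fraction whose denominator does not exceed a given bound; applied to the double-precision value of π it recovers both classic fractions.

```python
import math
from fractions import Fraction


def relative_error(approx: Fraction) -> float:
    """Relative error of a rational approximation to pi."""
    return abs(float(approx) - math.pi) / math.pi


for frac in (Fraction(22, 7), Fraction(355, 113)):
    print(f"{frac}: relative error {relative_error(frac):.1e}")

print(Fraction(math.pi).limit_denominator(10))    # 22/7
print(Fraction(math.pi).limit_denominator(1000))  # 355/113
```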

Non-mathematical "definitions" of π

Of some notability are legal or historical texts purportedly "defining π" to have some rational value, such as the "Indiana Pi Bill" of 1897, which stated "the ratio of the diameter and circumference is as five-fourths to four" (which would imply "π = 3.2") and a passage in the Hebrew Bible that implies that π = 3.

Indiana bill

The so-called "Indiana Pi Bill" from 1897 has often been characterized as an attempt to "legislate the value of Pi". Rather, the bill dealt with a purported solution to the problem of geometrically "squaring the circle".

The bill was nearly passed by the Indiana General Assembly in the U.S., and has been claimed to imply a number of different values for π, although the closest it comes to explicitly asserting one is the wording "the ratio of the diameter and circumference is as five-fourths to four", which would make π = 16⁄5 = 3.2, a discrepancy of nearly 2 percent. A mathematics professor who happened to be present the day the bill was brought up for consideration in the Senate, after it had passed in the House, helped to stop the passage of the bill on its second reading, after which the assembly thoroughly ridiculed it before postponing it indefinitely.

Imputed biblical value

It is sometimes claimed that the Hebrew Bible implies that "π equals three", based on a passage in 1 Kings 7:23 and 2 Chronicles 4:2 giving measurements for the round basin located in front of the Temple in Jerusalem as having a diameter of 10 cubits and a circumference of 30 cubits.

The issue is discussed in the Talmud and in Rabbinic literature. Among the many explanations and comments are these:

- Rabbi Nehemiah explained this in his Mishnat ha-Middot (the earliest known Hebrew text on geometry, ca. 150 CE) by saying that the diameter was measured from the outside rim while the circumference was measured along the inner rim. This interpretation implies a brim about 0.225 cubit (or, assuming an 18-inch "cubit", some 4 inches), or one and a third "handbreadths", thick (cf. NKJV).

- Maimonides states (ca. 1168 CE) that π can only be known approximately, so the value 3 was given as accurate enough for religious purposes. This is taken by some as the earliest assertion that π is irrational.
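The brim thickness in Rabbi Nehemiah's reading follows from one line of arithmetic: if the inner circumference is 30 cubits, the inner diameter is 30/π cubits, and the brim is half the difference between that and the outer diameter of 10 cubits (the 18-inch cubit is the same assumption as above).

$$t = \frac{1}{2}\left(10 - \frac{30}{\pi}\right) \approx \frac{10 - 9.549}{2} \approx 0.225\ \text{cubit} \approx 0.225 \times 18\ \text{in} \approx 4\ \text{in}$$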

There is still some debate on this passage in biblical scholarship. Many reconstructions of the basin show a wider brim (or flared lip) extending outward from the bowl itself by several inches to match the description given in the NKJV. In the succeeding verses, the rim is described as "a handbreadth thick; and the brim thereof was wrought like the brim of a cup, like the flower of a lily: it received and held three thousand baths" (NKJV), which suggests a shape that can be encompassed with a string shorter than the total length of the brim, e.g., a lily flower or a teacup.

Development of efficient formulae

Polygon approximation to a circle

Archimedes, in his Measurement of a Circle, created the first algorithm for the calculation of π based on the idea that the perimeter of any (convex) polygon inscribed in a circle is less than the circumference of the circle, which, in turn, is less than the perimeter of any circumscribed polygon. He started with inscribed and circumscribed regular hexagons, whose perimeters are readily determined. He then showed how to calculate the perimeters of regular polygons of twice as many sides that are inscribed and circumscribed about the same circle. This is a recursive procedure which would be described today as follows: Let $p_k$ and $P_k$ denote the perimeters of regular polygons of $k$ sides that are inscribed and circumscribed about the same circle, respectively. Then,

$$P_{2k} = \frac{2\,p_k P_k}{p_k + P_k}, \qquad p_{2k} = \sqrt{p_k P_{2k}}.$$

Archimedes uses this to successively compute $P_{12}$, $p_{12}$, $P_{24}$, $p_{24}$, $P_{48}$, $p_{48}$, $P_{96}$ and $p_{96}$. Using these last values he obtains

$$3\tfrac{10}{71} < \pi < 3\tfrac{1}{7}.$$

It is not known why Archimedes stopped at a 96-sided polygon; it only takes patience to extend the computations. Heron reports in his Metrica (about 60 CE) that Archimedes continued the computation in a now lost book, but then attributes an incorrect value to him.
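The doubling recurrence is easy to run by machine. This sketch (function name hypothetical) starts from hexagons around a circle of diameter 1, so each perimeter is directly a bound on π, and it uses floating-point square roots where Archimedes had to use hand-rounded rational bounds; its 96-gon bounds are therefore slightly tighter than 3 10⁄71 and 3 1⁄7.

```python
import math


def archimedes_bounds(doublings: int = 4):
    """Return (sides, inscribed perimeter p, circumscribed perimeter P) for a
    circle of diameter 1, starting from hexagons and doubling the side count."""
    sides = 6
    p = 3.0                    # inscribed hexagon: six sides of length 1/2
    P = 2.0 * math.sqrt(3.0)   # circumscribed hexagon: six sides of length 1/sqrt(3)
    steps = [(sides, p, P)]
    for _ in range(doublings):
        P = 2.0 * p * P / (p + P)   # P_2k: harmonic mean of p_k and P_k
        p = math.sqrt(p * P)        # p_2k: geometric mean of p_k and the new P_2k
        sides *= 2
        steps.append((sides, p, P))
    return steps


for sides, p, P in archimedes_bounds():
    print(f"{sides:3d}-gon: {p:.6f} < pi < {P:.6f}")
```

Each further doubling shrinks the gap between the two bounds by roughly a factor of four.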

Archimedes uses no trigonometry in this computation and the difficulty in applying the method lies in obtaining good approximations for the square roots that are involved. Trigonometry, in the form of a table of chord lengths in a circle, was probably used by Claudius Ptolemy of Alexandria to obtain the value of π given in the Almagest (circa 150 CE).

Advances in the approximation of π (when the methods are known) were made by increasing the number of sides of the polygons used in the computation. A trigonometric improvement by Willebrord Snell (1621) obtains better bounds from a pair of bounds obtained from the polygon method. Thus, more accurate results were obtained from polygons with fewer sides. Viète's formula, published by François Viète in 1593, was derived by Viète using a closely related polygonal method, but with areas rather than perimeters of polygons whose numbers of sides are powers of two.
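Viète's formula itself is not written out above; in its usual form it reads $\frac{2}{\pi} = \frac{\sqrt{2}}{2}\cdot\frac{\sqrt{2+\sqrt{2}}}{2}\cdot\frac{\sqrt{2+\sqrt{2+\sqrt{2}}}}{2}\cdots$. The short sketch below (function name hypothetical) evaluates a finite number of factors. After n factors the result equals $2^{n+1}\sin(\pi/2^{n+1})$, the semi-perimeter of a regular $2^{n+1}$-gon inscribed in a unit circle, which makes the link to the polygon method explicit.

```python
import math


def viete_pi(factors: int) -> float:
    """Approximate pi from the first `factors` factors of Viete's product
    2/pi = (sqrt(2)/2) * (sqrt(2 + sqrt(2))/2) * ..."""
    product = 1.0
    radical = 0.0
    for _ in range(factors):
        radical = math.sqrt(2.0 + radical)   # sqrt(2), sqrt(2 + sqrt(2)), ...
        product *= radical / 2.0
    return 2.0 / product


for n in (2, 5, 10, 20):
    print(n, viete_pi(n), abs(viete_pi(n) - math.pi))
```

Each extra factor reduces the error by about a factor of four, i.e. roughly 0.6 decimal digits per factor.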

The last major attempt to compute π by this method was carried out by Grienberger in 1630 who calculated 39 decimal places of π using Snell's refinement.

Machin-like formula

For fast calculations, one may use formulae such as Machin's:

$$\frac{\pi}{4} = 4\arctan\frac{1}{5} - \arctan\frac{1}{239}$$

together with the Taylor series expansion of the function arctan(x). This formula is most easily verified using polar coordinates of complex numbers, producing:

$$(5+i)^4\,(239-i) = 2^2\cdot 13^4\,(1+i).$$

($\{x, y\} = \{239, 13^2\}$ is a solution to the Pell equation $x^2 - 2y^2 = -1$.)

Formulae of this kind are known as Machin-like formulae. Machin's particular formula was used well into the computer era for calculating record numbers of digits of π, but more recently other similar formulae have been used as well.

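For instance, Shanks and his team used the following Machin-like formula in 1961 to compute the first 100,000 digits of π:

$$\pi = 24\arctan\frac{1}{8} + 8\arctan\frac{1}{57} + 4\arctan\frac{1}{239}$$

and they used another Machin-like formula,

$$\pi = 48\arctan\frac{1}{18} + 32\arctan\frac{1}{57} - 20\arctan\frac{1}{239}$$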

as a check.
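A minimal sketch of how such formulae are evaluated with exact integer arithmetic: each arctan(1/x) is summed as a Taylor series on integers scaled by a power of ten (fixed point), with 10 guard digits, our own choice, to absorb truncation error. Running Machin's formula alongside the two 1961 formulae mirrors the cross-check idea described above; the coefficient table and function names are hypothetical.

```python
def arctan_inv(x: int, unity: int) -> int:
    """Return arctan(1/x) * unity, truncated, via the Taylor series
    arctan(1/x) = 1/x - 1/(3x^3) + 1/(5x^5) - ..."""
    total = 0
    power = unity // x        # unity / x**(2k + 1), starting with k = 0
    x_squared = x * x
    divisor = 1
    sign = 1
    while power:
        total += sign * (power // divisor)
        power //= x_squared
        divisor += 2
        sign = -sign
    return total


# pi written as a sum of coefficient * arctan(1/x)
FORMULAS = {
    "Machin (1706)": [(16, 5), (-4, 239)],
    "Shanks 1961, main": [(24, 8), (8, 57), (4, 239)],      # 24, 8, 4
    "Shanks 1961, check": [(48, 18), (32, 57), (-20, 239)],  # 48, 32, -20
}


def pi_scaled(terms, digits: int, guard: int = 10) -> int:
    """floor(pi * 10**digits), assuming the guard digits absorb truncation error."""
    unity = 10 ** (digits + guard)
    total = sum(coef * arctan_inv(x, unity) for coef, x in terms)
    return total // 10 ** guard


for name, terms in FORMULAS.items():
    print(f"{name:20s} {pi_scaled(terms, 40)}")
```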

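The record as of December 2002 by Yasumasa Kanada of Tokyo University stood at 1,241,100,000,000 digits. The following Machin-like formulae were used for this:

$$\frac{\pi}{4} = 12\arctan\frac{1}{49} + 32\arctan\frac{1}{57} - 5\arctan\frac{1}{239} + 12\arctan\frac{1}{110443}$$

K. Takano (1982).

$$\frac{\pi}{4} = 44\arctan\frac{1}{57} + 7\arctan\frac{1}{239} - 12\arctan\frac{1}{682} + 24\arctan\frac{1}{12943}$$

F. C. M. Størmer (1896).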

Other classical formulae

Other formulae that have been used to compute estimates of π include:

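Liu Hui (see also Viète's formula):

$$\pi \approx 768\sqrt{2 - \sqrt{2 + \sqrt{2 + \sqrt{2 + \sqrt{2 + \sqrt{2 + \sqrt{2 + \sqrt{2 + \sqrt{2 + 1}}}}}}}}} \approx 3.141590463236763$$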

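Newton / Euler Convergence Transformation:

$$\frac{\pi}{2} = \sum_{k=0}^{\infty}\frac{k!}{(2k+1)!!} = \sum_{k=0}^{\infty}\frac{2^k\,(k!)^2}{(2k+1)!} = 1 + \frac{1}{3}\left(1 + \frac{2}{5}\left(1 + \frac{3}{7}\left(1 + \cdots\right)\right)\right)$$

where (2k + 1)!! denotes the product of the odd integers up to 2k + 1.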

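David Chudnovsky and Gregory Chudnovsky:

$$\frac{1}{\pi} = 12\sum_{k=0}^{\infty}\frac{(-1)^k\,(6k)!\,(13591409 + 545140134k)}{(3k)!\,(k!)^3\,640320^{3k+3/2}}$$

Ramanujan's work is the basis for the Chudnovsky algorithm, the fastest algorithm used, as of the turn of the millennium, to calculate π.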

Modern algorithms

Extremely long decimal expansions of π are typically computed with iterative formulae like the Gauss–Legendre algorithm and Borwein's algorithm. The latter, found in 1985 by Jonathan and Peter Borwein, converges extremely quickly:

For $y_0 = \sqrt{2} - 1,\; a_0 = 6 - 4\sqrt{2}$ and

$$y_{k+1} = \frac{1 - f(y_k)}{1 + f(y_k)}, \qquad a_{k+1} = a_k\,(1 + y_{k+1})^4 - 2^{2k+3}\,y_{k+1}\left(1 + y_{k+1} + y_{k+1}^2\right)$$

where $f(y) = (1 - y^4)^{1/4}$, the sequence $1/a_k$ converges quartically to π, giving more than 100 digits in three steps and over a trillion digits after 20 steps. The Gauss–Legendre algorithm (with time complexity $O(n\log^2 n)$, using the Harvey–Hoeven multiplication algorithm) is asymptotically faster than the Chudnovsky algorithm (with time complexity $O(n\log^3 n)$), but which of these algorithms is faster in practice for "small enough" $n$ depends on technological factors such as memory sizes and access times. For breaking world records, the iterative algorithms are used less commonly than the Chudnovsky algorithm since they are memory-intensive.
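A direct transcription of the Borweins' quartic iteration into Python's `decimal` module is sketched below. The 200-digit working precision and the function name are our assumptions; a record computation would instead use a fast big-number library with FFT-based multiplication. The fourth root $f(y) = (1 - y^4)^{1/4}$ is taken as two successive square roots.

```python
from decimal import Decimal, getcontext


def borwein_quartic(iterations: int, digits: int = 200) -> Decimal:
    """Return 1/a_k after `iterations` steps of the quartic iteration
    y0 = sqrt(2) - 1, a0 = 6 - 4 sqrt(2)."""
    getcontext().prec = digits + 10
    sqrt2 = Decimal(2).sqrt()
    y = sqrt2 - 1                      # y_0
    a = 6 - 4 * sqrt2                  # a_0
    for k in range(iterations):
        f = (1 - y ** 4).sqrt().sqrt()     # f(y_k) = (1 - y_k^4)^(1/4)
        y = (1 - f) / (1 + f)              # y_{k+1}
        # a_{k+1} = a_k (1 + y)^4 - 2^(2k+3) y (1 + y + y^2)
        a = a * (1 + y) ** 4 - 2 ** (2 * k + 3) * y * (1 + y + y * y)
    return 1 / a


for k in range(1, 4):
    print(k, str(borwein_quartic(k))[:60])
```

Each iteration roughly quadruples the number of correct digits, so the third iterate already agrees with π well beyond the 58 decimals printed here.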

The first one million digits of π and 1⁄π are available from Project Gutenberg. A former calculation record (December 2002) by Yasumasa Kanada of Tokyo University stood at 1.24 trillion digits, which were computed in September 2002 on a 64-node Hitachi supercomputer with 1 terabyte of main memory, which carries out 2 trillion operations per second, nearly twice as many as the computer used for the previous record (206 billion digits). The following Machin-like formulae were used for this:

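- $\frac{\pi}{4} = 12\arctan\frac{1}{49} + 32\arctan\frac{1}{57} - 5\arctan\frac{1}{239} + 12\arctan\frac{1}{110443}$ (Kikuo Takano (1982))

- $\frac{\pi}{4} = 44\arctan\frac{1}{57} + 7\arctan\frac{1}{239} - 12\arctan\frac{1}{682} + 24\arctan\frac{1}{12943}$ (F. C. M. Størmer (1896)).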

These approximations have so many digits that they are no longer of any practical use, except for testing new supercomputers. Properties like the potential normality of π will always depend on the infinite string of digits on the end, not on any finite computation.

Miscellaneous approximations

Historically, base 60 was used for calculations. In this base, π can be approximated to eight (decimal) significant figures with the number 3;8,29,44₆₀, which is

$$3 + \frac{8}{60} + \frac{29}{60^2} + \frac{44}{60^3} = 3.14159\overline{259}$$

(The next sexagesimal digit is 0, causing truncation here to yield a relatively good approximation.)
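The base-60 digits can be generated by repeatedly multiplying the fractional part by 60. This sketch uses exact `Fraction` arithmetic on a 50-decimal value of π, ample for the first dozen sexagesimal places; the helper name is ours.

```python
from fractions import Fraction

# pi to 50 decimal places, held as an exact rational number
PI = Fraction("3.14159265358979323846264338327950288419716939937510")


def sexagesimal(x: Fraction, places: int) -> list:
    """Integer part of x followed by `places` truncated base-60 fractional digits."""
    whole = int(x)
    digits = [whole]
    frac = x - whole
    for _ in range(places):
        frac *= 60
        digit = int(frac)
        digits.append(digit)
        frac -= digit
    return digits


print(sexagesimal(PI, 6))   # [3, 8, 29, 44, 0, 47, 25]

# exact value of the truncated expansion 3;8,29,44
approx = sum(Fraction(d, 60 ** i) for i, d in enumerate([3, 8, 29, 44]))
print(float(approx))
```

The zero in the fifth position is why stopping at 3;8,29,44 loses so little.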

In addition, the following expressions can be used to estimate π:

- accurate to three digits:

- accurate to three digits: $\sqrt{2} + \sqrt{3} = 3.146\ldots$

- Karl Popper conjectured that Plato knew this expression, that he believed it to be exactly π, and that this is responsible for some of Plato's confidence in the omnicompetence of mathematical geometry—and Plato's repeated discussion of special right triangles that are either isosceles or halves of equilateral triangles.

- accurate to four digits:

- accurate to four digits (or five significant figures):

- an approximation by Ramanujan, accurate to 4 digits (or five significant figures):

- accurate to five digits:

- accurate to six digits:

- accurate to seven digits: $\frac{9801}{2206\sqrt{2}} = 3.14159273\ldots$

  - inverse of first term of Ramanujan series.

- accurate to eight digits:

- accurate to nine digits:

- This is from Ramanujan, who claimed the Goddess of Namagiri appeared to him in a dream and told him the true value of π.

- accurate to ten digits:

- accurate to ten digits:

- accurate to ten digits (or eleven significant figures): $\sqrt[193]{\frac{10^{100}}{11222.11122}}$

- This curious approximation follows the observation that the 193rd power of 1/π yields the sequence 1122211125... Replacing 5 by 2 completes the symmetry without reducing the correct digits of π, while inserting a central decimal point remarkably fixes the accompanying magnitude at 10¹⁰⁰.

- accurate to eleven digits:

- accurate to twelve digits:

- accurate to 16 digits: $\frac{9801}{2\sqrt{2}\left(1103 + \frac{4!\,(1103 + 26390)}{396^4}\right)}$

  - inverse of sum of first two terms of Ramanujan series.

- accurate to 18 digits:

- This is based on the fundamental discriminant d = 3(89) = 267 which has class number h(−d) = 2 explaining the algebraic numbers of degree 2. The core radical is 53 more than the fundamental unit which gives the smallest solution {x, y} = {500, 53} to the Pell equation x² − 89y² = −1.

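- accurate to 24 digits: $\frac{9801}{2\sqrt{2}\left(1103 + \frac{4!\,(1103 + 26390)}{396^4} + \frac{8!\,(1103 + 2\cdot 26390)}{(2!)^4\,396^8}\right)}$

  - inverse of sum of first three terms of Ramanujan series.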

- accurate to 30 decimal places: $\frac{\ln\left(640320^3 + 744\right)}{\sqrt{163}}$

- Derived from the closeness of the Ramanujan constant $e^{\pi\sqrt{163}}$ to the integer 640320³ + 744. This does not admit obvious generalizations in the integers, because there are only finitely many Heegner numbers and negative discriminants d with class number h(−d) = 1, and d = 163 is the largest one in absolute value.

- accurate to 52 decimal places:

- Like the one above, a consequence of the j-invariant. Among negative discriminants with class number 2, this d is the largest in absolute value.

- accurate to 161 decimal places:

- where u is a product of four simple quartic units,

- and,

- Based on one found by Daniel Shanks. Similar to the previous two, but this time it is a quotient of a modular form, namely the Dedekind eta function, and the argument involves $\tau = \sqrt{-3502}$. The discriminant d = 3502 has h(−d) = 16.

- The continued fraction representation of π can be used to generate successive best rational approximations. These approximations are the best possible rational approximations of π relative to the size of their denominators. Here is a list of the first thirteen of these:

  $$\frac{3}{1},\ \frac{22}{7},\ \frac{333}{106},\ \frac{355}{113},\ \frac{103993}{33102},\ \frac{104348}{33215},\ \frac{208341}{66317},\ \frac{312689}{99532},\ \frac{833719}{265381},\ \frac{1146408}{364913},\ \frac{4272943}{1360120},\ \frac{5419351}{1725033},\ \frac{80143857}{25510582}$$

- Of these, 355⁄113 is the only fraction in this sequence that gives more exact digits of π (i.e. 7) than the number of digits needed to approximate it (i.e. 6). The accuracy can be improved by using other fractions with larger numerators and denominators, but, for most such fractions, more digits are required in the approximation than correct significant figures achieved in the result.

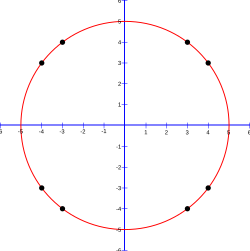

Summing a circle's area

Pi can be obtained from a circle if its radius and area are known using the relationship:

$$A = \pi r^2.$$

If a circle with radius r is drawn with its center at the point (0, 0), any point whose distance from the origin is less than r will fall inside the circle. The Pythagorean theorem gives the distance from any point (x, y) to the center:

$$d = \sqrt{x^2 + y^2}.$$

Mathematical "graph paper" is formed by imagining a 1×1 square centered around each cell (x, y), where x and y are integers between −r and r. Squares whose center resides inside or exactly on the border of the circle can then be counted by testing whether, for each cell (x, y),

$$\sqrt{x^2 + y^2} \le r.$$

The total number of cells satisfying that condition thus approximates the area of the circle, which then can be used to calculate an approximation of π. Closer approximations can be produced by using larger values of r.

Mathematically, this formula can be written:

$$\pi \approx \frac{1}{r^2}\sum_{x=-r}^{r}\sum_{y=-r}^{r}\begin{cases}1 & \text{if } \sqrt{x^2 + y^2} \le r,\\ 0 & \text{otherwise.}\end{cases}$$

In other words, begin by choosing a value for r. Consider all cells (x, y) in which both x and y are integers between −r and r. Starting at 0, add 1 for each cell whose distance to the origin (0,0) is less than or equal to r. When finished, divide the sum, representing the area of a circle of radius r, by r² to find the approximation of π. For example, if r is 5, then the cells considered are:

(−5,5) (−4,5) (−3,5) (−2,5) (−1,5) (0,5) (1,5) (2,5) (3,5) (4,5) (5,5) (−5,4) (−4,4) (−3,4) (−2,4) (−1,4) (0,4) (1,4) (2,4) (3,4) (4,4) (5,4) (−5,3) (−4,3) (−3,3) (−2,3) (−1,3) (0,3) (1,3) (2,3) (3,3) (4,3) (5,3) (−5,2) (−4,2) (−3,2) (−2,2) (−1,2) (0,2) (1,2) (2,2) (3,2) (4,2) (5,2) (−5,1) (−4,1) (−3,1) (−2,1) (−1,1) (0,1) (1,1) (2,1) (3,1) (4,1) (5,1) (−5,0) (−4,0) (−3,0) (−2,0) (−1,0) (0,0) (1,0) (2,0) (3,0) (4,0) (5,0) (−5,−1) (−4,−1) (−3,−1) (−2,−1) (−1,−1) (0,−1) (1,−1) (2,−1) (3,−1) (4,−1) (5,−1) (−5,−2) (−4,−2) (−3,−2) (−2,−2) (−1,−2) (0,−2) (1,−2) (2,−2) (3,−2) (4,−2) (5,−2) (−5,−3) (−4,−3) (−3,−3) (−2,−3) (−1,−3) (0,−3) (1,−3) (2,−3) (3,−3) (4,−3) (5,−3) (−5,−4) (−4,−4) (−3,−4) (−2,−4) (−1,−4) (0,−4) (1,−4) (2,−4) (3,−4) (4,−4) (5,−4) (−5,−5) (−4,−5) (−3,−5) (−2,−5) (−1,−5) (0,−5) (1,−5) (2,−5) (3,−5) (4,−5) (5,−5)

The 12 cells (0, ±5), (±5, 0), (±3, ±4), (±4, ±3) are exactly on the circle, and 69 cells are completely inside, so the approximate area is 81, and π is calculated to be approximately 3.24 because 81⁄5² = 3.24. Results for some values of r are shown in the table below:

| r | area | approximation of π |
|---|---|---|
| 2 | 13 | 3.25 |
| 3 | 29 | 3.22222 |
| 4 | 49 | 3.0625 |
| 5 | 81 | 3.24 |
| 10 | 317 | 3.17 |
| 20 | 1257 | 3.1425 |
| 100 | 31417 | 3.1417 |
| 1000 | 3141549 | 3.141549 |
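The counting procedure takes only a few lines. The sketch below (function name hypothetical) counts column by column rather than testing each of the (2r + 1)² cells: for a fixed x, the cells with x² + y² ≤ r² are exactly those with |y| ≤ ⌊√(r² − x²)⌋, so the count is the same while staying in integer arithmetic (`math.isqrt` needs Python 3.8 or later).

```python
from math import isqrt


def lattice_area(r: int) -> int:
    """Number of integer cells (x, y) with x^2 + y^2 <= r^2."""
    count = 0
    for x in range(-r, r + 1):
        h = isqrt(r * r - x * x)   # largest |y| still inside or on the circle
        count += 2 * h + 1         # y = -h, ..., h
    return count


for r in (2, 3, 4, 5, 10, 20, 100, 1000):
    area = lattice_area(r)
    print(f"{r:5d} {area:9d} {area / r ** 2:.6f}")
```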

For related results see The circle problem: number of points (x,y) in square lattice with x^2 + y^2 <= n.

Similarly, the more complex approximations of π given below involve repeated calculations of some sort, yielding closer and closer approximations with increasing numbers of calculations.

Continued fractions

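Besides its simple continued fraction representation [3; 7, 15, 1, 292, 1, 1, ...], which displays no discernible pattern, π has many generalized continued fraction representations generated by a simple rule, including these two.

$$\pi = 3 + \cfrac{1^2}{6 + \cfrac{3^2}{6 + \cfrac{5^2}{6 + \ddots}}}$$

$$\pi = \cfrac{4}{1 + \cfrac{1^2}{3 + \cfrac{2^2}{5 + \cfrac{3^2}{7 + \ddots}}}}$$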

The well-known values 22⁄7 and 355⁄113 are respectively the second and fourth continued fraction approximations to π. (Other representations are available at The Wolfram Functions Site.)
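The simple continued fraction and its convergents can be computed exactly with rational arithmetic. This sketch (helper names are ours) expands a 50-decimal value of π, far more precise than needed for the first dozen partial quotients, and builds the convergents with the standard recurrence $p_k = a_k p_{k-1} + p_{k-2}$, $q_k = a_k q_{k-1} + q_{k-2}$.

```python
from fractions import Fraction

PI = Fraction("3.14159265358979323846264338327950288419716939937510")


def continued_fraction(x: Fraction, count: int) -> list:
    """First `count` partial quotients [a0; a1, a2, ...] of a positive rational x."""
    quotients = []
    for _ in range(count):
        a = x.numerator // x.denominator   # floor of x
        quotients.append(a)
        x -= a
        if x == 0:
            break
        x = 1 / x
    return quotients


def convergents(quotients: list) -> list:
    """Convergents p_k/q_k via p_k = a_k p_{k-1} + p_{k-2} (likewise for q_k)."""
    p_prev, p = 0, 1    # p_{-2}, p_{-1}
    q_prev, q = 1, 0    # q_{-2}, q_{-1}
    result = []
    for a in quotients:
        p_prev, p = p, a * p + p_prev
        q_prev, q = q, a * q + q_prev
        result.append(Fraction(p, q))
    return result


terms = continued_fraction(PI, 5)
print(terms)                 # [3, 7, 15, 1, 292]
print(convergents(terms))
```

The large partial quotient 292 right after 355⁄113 is what makes that convergent so unusually accurate for its size.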

Trigonometry

Gregory–Leibniz series

$$\pi = 4\sum_{k=0}^{\infty}\frac{(-1)^k}{2k+1} = \frac{4}{1} - \frac{4}{3} + \frac{4}{5} - \frac{4}{7} + \cdots$$

This is the power series for arctan(x) specialized to x = 1. It converges too slowly to be of practical interest. However, the power series converges much faster for smaller values of $x$, which leads to formulae where π arises as the sum of small angles with rational tangents, known as Machin-like formulae.

Arctangent

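Knowing that 4 arctan 1 = π, the formula can be simplified to get:

$$\pi = 2\left(1 + \frac{1}{3} + \frac{1\cdot 2}{3\cdot 5} + \frac{1\cdot 2\cdot 3}{3\cdot 5\cdot 7} + \cdots\right) = 2\sum_{k=0}^{\infty}\frac{k!}{(2k+1)!!}$$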

with a convergence such that each additional 10 terms yields at least three more digits.
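The stated rate can be checked directly: consecutive terms of $2\sum k!/(2k+1)!!$ have ratio $(k+1)/(2k+3)$, a little under 1/2, so ten more terms shrink the remainder by roughly $2^{10} \approx 1000$. A floating-point sketch (function name hypothetical):

```python
import math


def euler_series_pi(terms: int) -> float:
    """pi = 2 * sum_{k>=0} k! / (2k+1)!!, summing the first `terms` terms."""
    total = 0.0
    term = 1.0                              # k = 0: 0! / 1!! = 1
    for k in range(terms):
        total += term
        term *= (k + 1) / (2 * k + 3)       # t_{k+1} / t_k = (k+1) / (2k+3)
    return 2.0 * total


for n in (10, 20, 30):
    print(n, f"{abs(euler_series_pi(n) - math.pi):.1e}")
```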

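Another formula for π involving the arctangent function is given by

$$\frac{\pi}{2^{k+1}} = \arctan\frac{\sqrt{2 - a_{k-1}}}{a_k}, \qquad k \ge 2,$$

where $a_k = \sqrt{2 + a_{k-1}}$ such that $a_1 = \sqrt{2}$. Approximations can be made by using, for example, the rapidly convergent Euler formula

$$\arctan(x) = \sum_{n=0}^{\infty}\frac{2^{2n}\,(n!)^2}{(2n+1)!}\,\frac{x^{2n+1}}{(1 + x^2)^{n+1}}.$$

Alternatively, the following simple expansion series of the arctangent function can be used

where

to approximate π with even more rapid convergence. Convergence in this arctangent formula for π improves as the integer k increases.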

The constant can also be expressed by infinite sum of arctangent functions as

and

where $F_n$ is the n-th Fibonacci number. However, these two formulae for π converge much more slowly because of the set of arctangent functions involved in the computation.

Arcsine

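Observing an equilateral triangle and noting that

$$\sin\frac{\pi}{6} = \frac{1}{2}$$

yields

$$\pi = 6\arcsin\frac{1}{2} = 6\left(\frac{1}{2} + \frac{1}{2\cdot 3\cdot 2^3} + \frac{1\cdot 3}{2\cdot 4\cdot 5\cdot 2^5} + \frac{1\cdot 3\cdot 5}{2\cdot 4\cdot 6\cdot 7\cdot 2^7} + \cdots\right) = \sum_{n=0}^{\infty}\frac{3\binom{2n}{n}}{16^n\,(2n+1)}$$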

with a convergence such that each additional five terms yields at least three more digits.
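A sketch of the arcsine series (function name hypothetical; `math.comb` needs Python 3.8 or later). Each term is less than a quarter of the one before, so every five extra terms shrink the remainder by more than 4⁵ = 1024, matching the rate stated above.

```python
import math


def arcsine_pi(terms: int) -> float:
    """pi = sum_{n>=0} 3 * C(2n, n) / (16**n * (2n + 1)), first `terms` terms."""
    return sum(3 * math.comb(2 * n, n) / (16 ** n * (2 * n + 1)) for n in range(terms))


for n in (5, 10, 15):
    print(n, f"{abs(arcsine_pi(n) - math.pi):.1e}")
```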

Digit extraction methods

The Bailey–Borwein–Plouffe formula (BBP) for calculating π was discovered in 1995 by Simon Plouffe. Using base 16 math, the formula can compute any particular digit of π—returning the hexadecimal value of the digit—without having to compute the intervening digits (digit extraction).

In 1996, Simon Plouffe derived an algorithm to extract the nth decimal digit of π (using base 10 math to extract a base 10 digit), which can do so with an improved speed of O(n³(log n)³) time. The algorithm requires virtually no memory for the storage of an array or matrix, so the one-millionth digit of π can be computed using a pocket calculator. However, it would be quite tedious and impractical to do so.

The calculation speed of Plouffe's formula was improved to O(n²) by Fabrice Bellard, who derived an alternative formula (albeit only in base 2 math) for computing π.

Efficient methods

Many other expressions for π were developed and published by Indian mathematician Srinivasa Ramanujan. He worked with mathematician Godfrey Harold Hardy in England for a number of years.

Extremely long decimal expansions of π are typically computed with the Gauss–Legendre algorithm and Borwein's algorithm; the Salamin–Brent algorithm, which was invented in 1976, has also been used.

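In 1997, David H. Bailey, Peter Borwein and Simon Plouffe published a paper (Bailey, 1997) on a new formula for π as an infinite series:

$$\pi = \sum_{k=0}^{\infty}\frac{1}{16^k}\left(\frac{4}{8k+1} - \frac{2}{8k+4} - \frac{1}{8k+5} - \frac{1}{8k+6}\right)$$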

This formula permits one to fairly readily compute the kth binary or hexadecimal digit of π, without having to compute the preceding k − 1 digits. Bailey's website contains the derivation as well as implementations in various programming languages. The PiHex project computed 64 bits around the quadrillionth bit of π (which turns out to be 0).
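The digit-extraction idea can be sketched in a few lines. To get hexadecimal digits starting at position n + 1 after the point, the BBP series is multiplied by 16ⁿ and only fractional parts are kept. For k ≤ n, the integer part of 16ⁿ⁻ᵏ/(8k + j) is discarded with modular exponentiation (Python's three-argument `pow`), and the few remaining terms with k > n are small. This is a floating-point sketch with hypothetical function names, reliable only for about eight digits per call at moderate n; serious implementations track rounding error more carefully.

```python
def _bbp_partial(j: int, n: int) -> float:
    """Fractional part of sum_{k>=0} 16**(n - k) / (8k + j)."""
    s = 0.0
    # k <= n: only the fractional part of 16**(n-k) / (8k+j) matters
    for k in range(n + 1):
        denom = 8 * k + j
        s = (s + pow(16, n - k, denom) / denom) % 1.0
    # k > n: terms shrink by a factor of 16 each, so a few suffice
    k = n + 1
    while True:
        term = 16.0 ** (n - k) / (8 * k + j)
        if term < 1e-17:
            break
        s = (s + term) % 1.0
        k += 1
    return s


def pi_hex_digits(n: int, count: int = 8) -> str:
    """Hex digits of pi starting at position n + 1 after the point, from
    pi = sum_k 16**-k (4/(8k+1) - 2/(8k+4) - 1/(8k+5) - 1/(8k+6))."""
    x = (4 * _bbp_partial(1, n) - 2 * _bbp_partial(4, n)
         - _bbp_partial(5, n) - _bbp_partial(6, n)) % 1.0
    out = []
    for _ in range(count):
        x *= 16
        digit = int(x)
        out.append("0123456789abcdef"[digit])
        x -= digit
    return "".join(out)


print(pi_hex_digits(0))   # 243f6a88
```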

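Fabrice Bellard further improved on BBP with his formula:

$$\pi = \frac{1}{2^6}\sum_{n=0}^{\infty}\frac{(-1)^n}{2^{10n}}\left(-\frac{2^5}{4n+1} - \frac{1}{4n+3} + \frac{2^8}{10n+1} - \frac{2^6}{10n+3} - \frac{2^2}{10n+5} - \frac{2^2}{10n+7} + \frac{1}{10n+9}\right)$$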

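Other formulae that have been used to compute estimates of π include:

$$\frac{1}{\pi} = \frac{2\sqrt{2}}{9801}\sum_{k=0}^{\infty}\frac{(4k)!\,(1103 + 26390k)}{(k!)^4\,396^{4k}}$$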

This converges extraordinarily rapidly. Ramanujan's work is the basis for the fastest algorithms used, as of the turn of the millennium, to calculate π.

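In 1988, David Chudnovsky and Gregory Chudnovsky found an even faster-converging series (the Chudnovsky algorithm):

$$\frac{1}{\pi} = 12 \sum_{k=0}^{\infty} \frac{(-1)^{k}\,(6k)!\,(13591409 + 545140134k)}{(3k)!\,(k!)^{3}\,640320^{3k+3/2}}$$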
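A compact way to see the Chudnovsky series at work is to sum it directly with Python's `decimal` module, using the rearrangement π = 426880·√10005 / Σₖ (−1)ᵏ(6k)!(13591409 + 545140134k) / ((3k)!(k!)³·640320³ᵏ), which follows from 640320^{3/2}/12 = 426880·√10005. Each term adds roughly 14 correct digits. This is a plain term-by-term sketch with assumed guard digits, not the binary-splitting implementation that record programs use; the name `chudnovsky_pi` is hypothetical.

```python
from decimal import Decimal, localcontext
from math import factorial


def chudnovsky_pi(digits: int) -> Decimal:
    """Approximate pi to `digits` significant digits with the Chudnovsky series."""
    with localcontext() as ctx:
        ctx.prec = digits + 10          # assumed guard digits
        terms = digits // 14 + 2        # about 14 digits gained per term
        total = Decimal(0)
        for k in range(terms):
            num = (-1) ** k * factorial(6 * k) * (13591409 + 545140134 * k)
            den = factorial(3 * k) * factorial(k) ** 3 * 640320 ** (3 * k)
            total += Decimal(num) / Decimal(den)
        pi = 426880 * Decimal(10005).sqrt() / total
        ctx.prec = digits
        return +pi  # round to the requested precision
```

Recomputing the factorials from scratch each term keeps the sketch short; a faster version would update each term from the previous one or use binary splitting.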

The speed of various algorithms for computing π to n correct digits is shown below in order of increasing asymptotic complexity (fastest first). M(n) is the complexity of the multiplication algorithm employed.

| Algorithm | Year | Time complexity or speed |
|---|---|---|
| Gauss–Legendre algorithm | 1975 | O(M(n) log n) |
| Chudnovsky algorithm | 1988 | O(n (log n)³) |
| Binary splitting of the arctan series in Machin's formula | | O(M(n) (log n)²) |
| Leibniz formula for π | 1300s | Sublinear convergence. Five billion terms for 10 correct decimal places |
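To make the Machin's-formula row concrete, here is a minimal Python sketch of Machin's formula π/4 = 4 arctan(1/5) − arctan(1/239), evaluating each arctangent series in scaled-integer (fixed-point) arithmetic. This is plain term-by-term summation, not the binary-splitting method listed in the table; the helper names and the ten guard digits are illustrative assumptions.

```python
def arctan_inv(x: int, scale: int) -> int:
    """Return approximately scale * arctan(1/x) using the Taylor series in integers."""
    term = scale // x          # scale / x**1
    total = term
    x_squared = x * x
    n = 1
    sign = -1
    while term:
        term //= x_squared     # now scale / x**(2n+1)
        total += sign * (term // (2 * n + 1))
        sign = -sign
        n += 1
    return total


def machin_pi(digits: int) -> str:
    """Return pi truncated to `digits` decimal places as a string."""
    guard = 10                 # extra digits absorb truncation error
    scale = 10 ** (digits + guard)
    pi = 4 * (4 * arctan_inv(5, scale) - arctan_inv(239, scale))
    pi //= 10 ** guard
    s = str(pi)
    return s[0] + "." + s[1:]
```

Each term of the arctan(1/5) series gains about 1.4 decimal digits, so the cost grows linearly in terms with the number of digits; binary splitting reorganizes the same sum to take advantage of fast multiplication.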

Projects

Pi Hex

Pi Hex was a project to compute three specific binary digits of π using a distributed network of several hundred computers. In 2000, after two years, the project finished computing the five-trillionth (5×10¹²), the forty-trillionth (4×10¹³), and the quadrillionth (10¹⁵) bits. All three of them turned out to be 0.

Software for calculating π

Over the years, several programs have been written for calculating π to many digits on personal computers.

General purpose

Most computer algebra systems can calculate π and other common mathematical constants to any desired precision.

Functions for calculating π are also included in many general libraries for arbitrary-precision arithmetic, for instance Class Library for Numbers, MPFR and SymPy.

Special purpose

Programs designed for calculating π may have better performance than general-purpose mathematical software. They typically implement checkpointing and efficient disk swapping to facilitate extremely long-running and memory-expensive computations.

- TachusPi by Fabrice Bellard is the program he used to compute a world-record number of digits of π in 2009.

- y-cruncher by Alexander Yee is the program which every world record holder since Shigeru Kondo in 2010 has used to compute world record numbers of digits. y-cruncher can also be used to calculate other constants and holds world records for several of them.

- PiFast by Xavier Gourdon was the fastest program for Microsoft Windows in 2003. According to its author, it can compute one million digits in 3.5 seconds on a 2.4 GHz Pentium 4. PiFast can also compute other irrational numbers such as e and √2. It can also work at lower efficiency with very little memory (down to a few tens of megabytes to compute well over a billion (10⁹) digits). This tool is a popular benchmark in the overclocking community. PiFast 4.4 is available from Stu's Pi page. PiFast 4.3 is available from Gourdon's page.

- QuickPi by Steve Pagliarulo for Windows is faster than PiFast for runs of under 400 million digits. Like PiFast, QuickPi can also compute other irrational numbers such as e, √2, and √3. Version 4.5 may be obtained from the Pi-Hacks Yahoo! forum or from Stu's Pi page.

- Super PI by Kanada Laboratory in the University of Tokyo is a program for Microsoft Windows for runs of 16,000 to 33,550,000 digits. It can compute one million digits in 40 minutes, two million digits in 90 minutes and four million digits in 220 minutes on a Pentium 90 MHz. Super PI version 1.9 is available from the Super PI 1.9 page.

![{\sqrt[{3}]{31}}=3.1413^{+}](https://wikimedia.org/api/rest_v1/media/math/render/svg/1543ecdacf674dd8556ae74ce3f2a01ab5a5e9b0)

![{\displaystyle {\sqrt[{5}]{306}}=3.14155^{+}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/619f58ab2ef68f9a3293310d8e224b55d9311e7c)

![{\displaystyle {\frac {16}{5{\sqrt[{38}]{2}}{\sqrt[{3846}]{2}}}}=3.14159\ 260^{+}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/71cfe2ca9824483b4651951335c252e1557c6547)

![{\displaystyle {\sqrt[{4}]{3^{4}+2^{4}+{\frac {1}{2+({\frac {2}{3}})^{2}}}}}={\sqrt[{4}]{\frac {2143}{22}}}=3.14159\ 2652^{+}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/e25fb9c5d2e9c0ce5b4d226d7e77d32098dc4949)

![{\displaystyle {\sqrt[{15}]{28658146}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/97ef7274bb01ac1b24b35cdada717c95d1dcf360)

![{\sqrt[{193}]{\frac {10^{100}}{11222.11122}}}=3.14159\ 26536^{+}](https://wikimedia.org/api/rest_v1/media/math/render/svg/a2515a12f53607ccf92daf1b59b8929e6be3d15c)

![{\displaystyle {\sqrt[{18}]{888582403}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f09091d68335195915fe2ea287fd0e995dfde8d9)

![{\displaystyle {\sqrt[{20}]{8769956796}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/bcc25b0b5b0bf79d1970ed227609bb17cf4b9294)