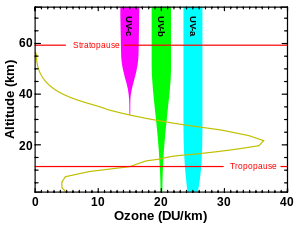

The ozone layer or ozone shield is a region of Earth's stratosphere that absorbs most of the Sun's ultraviolet radiation. It contains a high concentration of ozone (O3) in relation to other parts of the atmosphere, although still small in relation to other gases in the stratosphere. The ozone layer contains less than 10 parts per million of ozone, while the average ozone concentration in Earth's atmosphere as a whole is about 0.3 parts per million. The ozone layer is mainly found in the lower portion of the stratosphere, from approximately 15 to 35 kilometers (9 to 22 mi) above Earth, although its thickness varies seasonally and geographically.

The ozone layer was discovered in 1913 by the French physicists Charles Fabry and Henri Buisson. Measurements of the Sun showed that the radiation sent out from its surface and reaching the ground on Earth is usually consistent with the spectrum of a black body with a temperature in the range of 5,500–6,000 K (5,230–5,730 °C), except that there was no radiation below a wavelength of about 310 nm at the ultraviolet end of the spectrum. It was deduced that the missing radiation was being absorbed by something in the atmosphere. Eventually the spectrum of the missing radiation was matched to only one known chemical, ozone. Its properties were explored in detail by the British meteorologist G. M. B. Dobson, who developed a simple spectrophotometer (the Dobsonmeter) that could be used to measure stratospheric ozone from the ground. Between 1928 and 1958, Dobson established a worldwide network of ozone monitoring stations, which continue to operate to this day. The "Dobson unit", a convenient measure of the amount of ozone overhead, is named in his honor.

The ozone layer absorbs 97 to 99 percent of the Sun's medium-frequency ultraviolet light (from about 200 nm to 315 nm wavelength), which otherwise would potentially damage exposed life forms near the surface.

In 1976, atmospheric research revealed that the ozone layer was being depleted by chemicals released by industry, mainly chlorofluorocarbons (CFCs). Concerns that increased UV radiation due to ozone depletion threatened life on Earth, including increased skin cancer in humans and other ecological problems, led to bans on the chemicals, and the latest evidence is that ozone depletion has slowed or stopped. The United Nations General Assembly has designated September 16 as the International Day for the Preservation of the Ozone Layer.

Venus also has a thin ozone layer at an altitude of 100 kilometers above the planet's surface.

Sources

The photochemical mechanisms that give rise to the ozone layer were discovered by the British physicist Sydney Chapman in 1930. Ozone in the Earth's stratosphere is created by ultraviolet light striking ordinary oxygen molecules containing two oxygen atoms (O2), splitting them into individual oxygen atoms (atomic oxygen); the atomic oxygen then combines with unbroken O2 to create ozone, O3. The ozone molecule is unstable (although, in the stratosphere, long-lived) and when ultraviolet light hits ozone it splits into a molecule of O2 and an individual atom of oxygen, a continuing process called the ozone-oxygen cycle. Chemically, with hν denoting an ultraviolet photon, this can be described as:

O2 + hν → 2 O

O + O2 → O3

O3 + hν → O2 + O

About 90 percent of the ozone in the atmosphere is contained in the stratosphere. Ozone concentrations are greatest between about 20 and 40 kilometres (66,000 and 131,000 ft), where they range from about 2 to 8 parts per million. If all of the ozone were compressed to the pressure of the air at sea level, it would be only 3 millimetres (1⁄8 inch) thick.
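The compressed-thickness figure above is the idea behind the Dobson unit. As a minimal sketch of the arithmetic, assuming the standard definition that 1 DU corresponds to a 0.01 mm layer of pure ozone at 0 °C and 1 atmosphere (a definition not spelled out in this article), the conversion can be written as follows. The function and constant names are illustrative only.

```python
# Assumed standard definition: 1 Dobson unit (DU) = 0.01 mm of pure ozone
# at 0 degrees Celsius and 1 atmosphere of pressure.
MM_PER_DOBSON_UNIT = 0.01


def dobson_units_to_mm(column_du: float) -> float:
    """Thickness in millimetres of an ozone column compressed to standard conditions."""
    return column_du * MM_PER_DOBSON_UNIT


def mm_to_dobson_units(thickness_mm: float) -> float:
    """Ozone column in Dobson units for a given compressed thickness in millimetres."""
    return thickness_mm / MM_PER_DOBSON_UNIT


if __name__ == "__main__":
    # The 3 mm layer quoted in the text, expressed in Dobson units.
    print(round(mm_to_dobson_units(3.0)))
    # A 450 DU column, expressed as a compressed thickness in millimetres.
    print(round(dobson_units_to_mm(450.0), 2))
```

Under that assumed definition, the 3 mm layer quoted above corresponds to a column of about 300 DU.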

Ultraviolet light

Although the concentration of the ozone in the ozone layer is very small, it is vitally important to life because it absorbs biologically harmful ultraviolet (UV) radiation coming from the Sun. Extremely short or vacuum UV (10–100 nm) is screened out by nitrogen. UV radiation capable of penetrating nitrogen is divided into three categories, based on its wavelength; these are referred to as UV-A (400–315 nm), UV-B (315–280 nm), and UV-C (280–100 nm).
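The wavelength bands above can be expressed as a small lookup. This is only a sketch: the function name is hypothetical, and because the quoted ranges share their endpoints (315 nm, 280 nm, 100 nm), assigning each boundary value to the longer-wavelength band is an assumption made here, not something the text specifies.

```python
def classify_uv(wavelength_nm: float) -> str:
    """Return the UV band for a wavelength in nanometres, using the ranges in the text.

    Shared boundary values are assigned to the longer-wavelength band;
    that tie-breaking rule is an assumption of this sketch.
    """
    if 315 <= wavelength_nm <= 400:
        return "UV-A"
    if 280 <= wavelength_nm < 315:
        return "UV-B"
    if 100 <= wavelength_nm < 280:
        return "UV-C"
    if 10 <= wavelength_nm < 100:
        return "vacuum UV"
    return "outside UV range"


if __name__ == "__main__":
    for wavelength in (350, 290, 250, 50, 500):
        print(wavelength, classify_uv(wavelength))
```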

UV-C, which is very harmful to all living things, is entirely screened out by a combination of dioxygen (< 200 nm) and ozone (> about 200 nm) by around 35 kilometres (115,000 ft) altitude. UV-B radiation can be harmful to the skin and is the main cause of sunburn; excessive exposure can also cause cataracts, immune system suppression, and genetic damage, resulting in problems such as skin cancer. The ozone layer (which absorbs from about 200 nm to 310 nm with a maximal absorption at about 250 nm) is very effective at screening out UV-B; for radiation with a wavelength of 290 nm, the intensity at the top of the atmosphere is 350 million times stronger than at the Earth's surface. Nevertheless, some UV-B, particularly at its longest wavelengths, reaches the surface, and is important for the skin's production of vitamin D in mammals.
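To give a feel for the 350-million-fold reduction at 290 nm, the factor can be restated as an optical depth. The sketch below assumes simple exponential (Beer-Lambert) attenuation along the path through the atmosphere, which is a simplification not stated in the text. The function names are illustrative.

```python
import math


def optical_depth(attenuation_factor: float) -> float:
    """Optical depth tau such that intensity drops by attenuation_factor = exp(tau).

    Assumes simple exponential (Beer-Lambert) attenuation.
    """
    return math.log(attenuation_factor)


def transmitted_fraction(tau: float) -> float:
    """Fraction of the incoming intensity that survives an optical depth tau."""
    return math.exp(-tau)


# Attenuation factor quoted in the text for 290 nm radiation.
tau_290nm = optical_depth(350e6)

if __name__ == "__main__":
    print(f"optical depth at 290 nm: {tau_290nm:.2f}")
    print(f"transmitted fraction: {transmitted_fraction(tau_290nm):.2e}")
```

Under this assumption the quoted factor corresponds to an optical depth of a little under 20, so the surviving fraction is of the order of a few billionths.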

Ozone is transparent to most UV-A, so most of this longer-wavelength UV radiation reaches the surface, and it constitutes most of the UV reaching the Earth. This type of UV radiation is significantly less harmful to DNA, although it may still cause physical damage, premature aging of the skin, indirect genetic damage, and skin cancer.

Distribution in the stratosphere

The thickness of the ozone layer varies worldwide and is generally thinner near the equator and thicker near the poles. Thickness refers to how much ozone is in a column over a given area and varies from season to season. These variations are due to atmospheric circulation patterns and solar intensity.

The majority of ozone is produced over the tropics and is transported towards the poles by stratospheric wind patterns. In the northern hemisphere these patterns, known as the Brewer-Dobson circulation, make the ozone layer thickest in the spring and thinnest in the fall. In the tropics, this circulation lifts ozone-poor air out of the troposphere and into the stratosphere, where solar UV radiation photolyzes oxygen molecules and turns them into ozone. The ozone-rich air is then carried to higher latitudes, where it descends into lower layers of the atmosphere.

Research has found that ozone levels in the United States are highest in the spring months of April and May and lowest in October. While the total amount of ozone increases moving from the tropics to higher latitudes, concentrations are greater in high northern latitudes than in high southern latitudes: spring ozone columns in high northern latitudes occasionally exceed 600 DU and average 450 DU, whereas 400 DU was a usual maximum in the Antarctic before anthropogenic ozone depletion. This difference occurs naturally because of the weaker polar vortex and stronger Brewer-Dobson circulation in the northern hemisphere, owing to that hemisphere's large mountain ranges and greater contrasts between land and ocean temperatures. The difference between high northern and southern latitudes has increased since the 1970s due to the ozone hole phenomenon. The highest amounts of ozone are found over the Arctic during the spring months of March and April, while the Antarctic has its lowest amounts of ozone during September and October, the southern-hemisphere spring.

Depletion

The ozone layer can be depleted by free radical catalysts, including nitric oxide (NO), nitrous oxide (N2O), hydroxyl (OH), atomic chlorine (Cl), and atomic bromine (Br). While there are natural sources for all of these species, the concentrations of chlorine and bromine increased markedly in recent decades because of the release of large quantities of man-made organohalogen compounds, especially chlorofluorocarbons (CFCs) and bromofluorocarbons. These highly stable compounds are capable of surviving the rise to the stratosphere, where Cl and Br radicals are liberated by the action of ultraviolet light. Each radical is then free to initiate and catalyze a chain reaction capable of breaking down over 100,000 ozone molecules. By 2009, nitrous oxide was the largest ozone-depleting substance (ODS) emitted through human activities.

The breakdown of ozone in the stratosphere results in reduced absorption of ultraviolet radiation. Consequently, more of this dangerous radiation reaches the Earth's surface. Ozone levels have dropped by a worldwide average of about 4 percent since the late 1970s. For approximately 5 percent of the Earth's surface, around the north and south poles, much larger seasonal declines have been seen, and are described as "ozone holes". These "ozone holes" are not literal holes but regions in which the ozone layer is markedly thinner, and the depletion is most severe over the poles. The discovery of the annual depletion of ozone above the Antarctic was first announced by Joe Farman, Brian Gardiner and Jonathan Shanklin, in a paper which appeared in Nature on May 16, 1985.

Regulation attempts have included, but have not been limited to, the Clean Air Act implemented by the United States Environmental Protection Agency (EPA). The Clean Air Act introduced the requirement of National Ambient Air Quality Standards (NAAQS), with ozone pollution being one of six criteria pollutants. This regulation has proven effective because counties, cities and tribal regions must abide by these standards, and the EPA also provides assistance to each region in regulating contaminants. Effective presentation of information has also proven important in educating the general population about ozone depletion, contaminants, and their regulation. In a scientific paper, Sheldon Ungar examines how information about ozone depletion, climate change and related topics was communicated to the public. The ozone case was communicated to lay persons "with easy-to-understand bridging metaphors derived from the popular culture" and related to "immediate risks with everyday relevance". The specific metaphors used in the discussion (ozone shield, ozone hole) proved quite useful and, compared to global climate change, the ozone case was much more seen as a "hot issue" and imminent risk. Lay people were cautious about depletion of the ozone layer and the risks of skin cancer.

"Bad" (ground-level) ozone can cause adverse respiratory effects, such as difficulty breathing, and is known to aggravate respiratory illnesses such as asthma, COPD and emphysema. That is why many countries have put regulations in place to protect "good" (stratospheric) ozone and prevent the increase of "bad" ozone in urban or residential areas. In terms of ozone protection (the preservation of "good" ozone), the European Union has strict guidelines on which products may be bought, distributed or used in specific areas. With effective regulation, the ozone layer is expected to heal over time.

In 1978, the United States, Canada and Norway enacted bans on CFC-containing aerosol sprays that damage the ozone layer. The European Community rejected an analogous proposal. In the U.S., chlorofluorocarbons continued to be used in other applications, such as refrigeration and industrial cleaning, until after the discovery of the Antarctic ozone hole in 1985. After negotiation of an international treaty (the Montreal Protocol), CFC production was capped at 1986 levels with commitments to long-term reductions. The treaty allowed a ten-year phase-in for developing countries (identified in Article 5 of the protocol). It has since been amended to ban CFC production after 1995 in developed countries, and later in developing countries. Today, all of the world's 197 countries have signed the treaty. Beginning January 1, 1996, only recycled and stockpiled CFCs were available for use in developed countries such as the US. This production phaseout was possible because of efforts to ensure that there would be substitute chemicals and technologies for all ODS uses.

On August 2, 2003, scientists announced that the global depletion of the ozone layer may be slowing down because of the international regulation of ozone-depleting substances. In a study organized by the American Geophysical Union, three satellites and three ground stations confirmed that the upper-atmosphere ozone-depletion rate slowed significantly during the previous decade. Some breakdown can be expected to continue because of ODSs used by nations which have not banned them, and because of gases which are already in the stratosphere. Some ODSs, including CFCs, have very long atmospheric lifetimes, ranging from 50 to over 100 years. It has been estimated that the ozone layer will recover to 1980 levels near the middle of the 21st century. A gradual trend toward "healing" was reported in 2016.
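The long lifetimes quoted above help explain why recovery is slow. As a rough sketch, assuming that an atmospheric lifetime can be treated as an e-folding time for simple exponential decay and that no new emissions occur (both simplifications not stated in the text), the fraction of a gas remaining after a given number of years can be estimated as follows. The function name is illustrative.

```python
import math


def fraction_remaining(years_elapsed: float, lifetime_years: float) -> float:
    """Fraction of an initial amount still present after years_elapsed.

    Assumes simple exponential decay with e-folding time lifetime_years
    and no further emissions.
    """
    return math.exp(-years_elapsed / lifetime_years)


if __name__ == "__main__":
    # Lifetimes at the two ends of the range quoted in the text.
    for lifetime in (50, 100):
        remaining = fraction_remaining(50, lifetime)
        print(f"lifetime {lifetime} years: {remaining:.3f} remaining after 50 years")
```

Under these assumptions, roughly 37 percent of a gas with a 50-year lifetime, and roughly 61 percent of one with a 100-year lifetime, would still be present 50 years after emissions stopped.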

Compounds containing C–H bonds (such as hydrochlorofluorocarbons, or HCFCs) have been designed to replace CFCs in certain applications. These replacement compounds are more reactive and less likely to survive long enough in the atmosphere to reach the stratosphere where they could affect the ozone layer. While being less damaging than CFCs, HCFCs can have a negative impact on the ozone layer, so they are also being phased out. These in turn are being replaced by hydrofluorocarbons (HFCs) and other compounds that do not destroy stratospheric ozone at all.

The residual CFCs accumulating in the atmosphere create a concentration gradient between the atmosphere and the ocean. These organohalogen compounds dissolve into the ocean's surface waters and act as time-dependent tracers, which help scientists study ocean circulation by tracing biological, physical and chemical pathways.

Implications for astronomy

As ozone in the atmosphere prevents most energetic ultraviolet radiation from reaching the surface of the Earth, astronomical data in these wavelengths have to be gathered from satellites orbiting above the atmosphere and ozone layer. Most of the light from young hot stars is in the ultraviolet, so the study of these wavelengths is important for studying the origins of galaxies. The Galaxy Evolution Explorer, GALEX, is an orbiting ultraviolet space telescope launched on April 28, 2003, which operated until early 2012.

This GALEX image of the Cygnus Loop nebula could not have been taken from the surface of the Earth because the ozone layer blocks the ultraviolet radiation emitted by the nebula.