| n | n! |
|---|---|
| 0 | 1 |
| 1 | 1 |
| 2 | 2 |
| 3 | 6 |
| 4 | 24 |
| 5 | 120 |
| 6 | 720 |
| 7 | 5040 |
| 8 | 40320 |
| 9 | 362880 |
| 10 | 3628800 |
| 11 | 39916800 |
| 12 | 479001600 |
| 13 | 6227020800 |
| 14 | 87178291200 |
| 15 | 1307674368000 |
| 16 | 20922789888000 |
| 17 | 355687428096000 |
| 18 | 6402373705728000 |
| 19 | 121645100408832000 |
| 20 | 2432902008176640000 |
| 25 | $1.551121004 \times 10^{25}$ |
| 50 | $3.041409320 \times 10^{64}$ |
| 70 | $1.197857167 \times 10^{100}$ |
| 100 | $9.332621544 \times 10^{157}$ |
| 450 | $1.733368733 \times 10^{1000}$ |
| 1000 | $4.023872601 \times 10^{2567}$ |
| 3249 | $6.412337688 \times 10^{10000}$ |
| 10000 | $2.846259681 \times 10^{35659}$ |
| 25206 | $1.205703438 \times 10^{100000}$ |
| 100000 | $2.824229408 \times 10^{456573}$ |
| 205023 | $2.503898932 \times 10^{1000004}$ |
| 1000000 | $8.263931688 \times 10^{5565708}$ |
| $10^{100}$ | $10^{10^{101.9981097754820}}$ |
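The exact values in the table can be reproduced with Python's standard-library `math.factorial`, which returns an arbitrary-precision integer. The rows in scientific notation can be approximated without building the full integer by working with logarithms, through the identity $n! = \Gamma(n+1)$ and `math.lgamma`. The sketch below assumes double-precision floating point, so only the first few significant digits of the mantissa should be trusted; the helper name `factorial_sci` is hypothetical.

```python
import math

def factorial_sci(n: int, digits: int = 4) -> str:
    """Approximate n! in scientific notation without forming the huge integer.

    Uses log10(n!) = lgamma(n + 1) / ln(10); double precision keeps only a few
    significant digits of the mantissa reliable for large n.
    """
    log10_fact = math.lgamma(n + 1) / math.log(10)
    exponent = math.floor(log10_fact)
    mantissa = 10 ** (log10_fact - exponent)
    return f"{mantissa:.{digits}f}e{exponent}"

# Exact small rows straight from the standard library.
for n in (10, 20):
    print(n, math.factorial(n))

# Approximate large rows from the log-gamma function.
for n in (25, 100, 1000, 1_000_000):
    print(n, factorial_sci(n))
```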

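In mathematics, the factorial of a non-negative integer $n$, denoted by $n!$, is the product of all positive integers less than or equal to $n$. The factorial of $n$ also equals the product of $n$ with the next smaller factorial:

$$n! = n \times (n-1) \times (n-2) \times (n-3) \times \cdots \times 3 \times 2 \times 1 = n \times (n-1)!$$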

Factorials have been discovered in several ancient cultures, notably in Indian mathematics in the canonical works of Jain literature, and by Jewish mystics in the Talmudic book Sefer Yetzirah. The factorial operation is encountered in many areas of mathematics, notably in combinatorics, where its most basic use counts the possible distinct sequences, the permutations, of $n$ distinct objects: there are $n!$ of them. In mathematical analysis, factorials are used in power series for the exponential function and other functions, and they also have applications in algebra, number theory, probability theory, and computer science.

Much of the mathematics of the factorial function was developed beginning in the late 18th and early 19th centuries. Stirling's approximation provides an accurate approximation to the factorial of large numbers, showing that it grows more quickly than exponential growth. Legendre's formula describes the exponents of the prime numbers in a prime factorization of the factorials, and can be used to count the trailing zeros of the factorials. Daniel Bernoulli and Leonhard Euler interpolated the factorial function to a continuous function of complex numbers, except at the negative integers: the (offset) gamma function.

Many other notable functions and number sequences are closely related to the factorials, including the binomial coefficients, double factorials, falling factorials, primorials, and subfactorials. Implementations of the factorial function are commonly used as an example of different computer programming styles, and are included in scientific calculators and scientific computing software libraries. Although directly computing large factorials using the product formula or recurrence is not efficient, faster algorithms are known, matching to within a constant factor the time for fast multiplication algorithms for numbers with the same number of digits.

History

The concept of factorials has arisen independently in many cultures:

- In Indian mathematics, one of the earliest known descriptions of factorials comes from the Anuyogadvāra-sūtra, one of the canonical works of Jain literature, which has been assigned dates varying from 300 BCE to 400 CE. It separates out the sorted and reversed order of a set of items from the other ("mixed") orders, evaluating the number of mixed orders by subtracting two from the usual product formula for the factorial. The product rule for permutations was also described by 6th-century CE Jain monk Jinabhadra. Hindu scholars have been using factorial formulas since at least 1150, when Bhāskara II mentioned factorials in his work Līlāvatī, in connection with a problem of how many ways Vishnu could hold his four characteristic objects (a conch shell, discus, mace, and lotus flower) in his four hands, and a similar problem for a ten-handed god.

- In the mathematics of the Middle East, the Hebrew mystic book of creation Sefer Yetzirah, from the Talmudic period (200 to 500 CE), lists factorials up to 7! as part of an investigation into the number of words that can be formed from the Hebrew alphabet. Factorials were also studied for similar reasons by 8th-century Arab grammarian Al-Khalil ibn Ahmad al-Farahidi. Arab mathematician Ibn al-Haytham (also known as Alhazen, c. 965 – c. 1040) was the first to formulate Wilson's theorem connecting the factorials with the prime numbers.

- In Europe, although Greek mathematics included some combinatorics, and Plato famously used 5040 (a factorial) as the population of an ideal community, in part because of its divisibility properties, there is no direct evidence of ancient Greek study of factorials. Instead, the first work on factorials in Europe was by Jewish scholars such as Shabbethai Donnolo, explicating the Sefer Yetzirah passage. In 1677, British author Fabian Stedman described the application of factorials to change ringing, a musical art involving the ringing of several tuned bells.

From the late 15th century onward, factorials became the subject of study by western mathematicians. In a 1494 treatise, Italian mathematician Luca Pacioli calculated factorials up to 11!, in connection with a problem of dining table arrangements. Christopher Clavius discussed factorials in a 1603 commentary on the work of Johannes de Sacrobosco, and in the 1640s, French polymath Marin Mersenne published large (but not entirely correct) tables of factorials, up to 64!, based on the work of Clavius. The power series for the exponential function, with the reciprocals of factorials for its coefficients, was first formulated in 1676 by Isaac Newton in a letter to Gottfried Wilhelm Leibniz. Other important works of early European mathematics on factorials include extensive coverage in a 1685 treatise by John Wallis, a study of their approximate values for large values of $n$ by Abraham de Moivre in 1721, a 1729 letter from James Stirling to de Moivre stating what became known as Stirling's approximation, and work at the same time by Daniel Bernoulli and Leonhard Euler formulating the continuous extension of the factorial function to the gamma function. Adrien-Marie Legendre included Legendre's formula, describing the exponents in the factorization of factorials into prime powers, in an 1808 text on number theory.

The notation $n!$ for factorials was introduced by the French mathematician Christian Kramp in 1808. Many other notations have also been used. Another later notation, in which the argument of the factorial was half-enclosed by the left and bottom sides of a box, was popular for some time in Britain and America but fell out of use, perhaps because it is difficult to typeset. The word "factorial" (originally French: factorielle) was first used in 1800 by Louis François Antoine Arbogast, in the first work on Faà di Bruno's formula, but referring to a more general concept of products of arithmetic progressions. The "factors" that this name refers to are the terms of the product formula for the factorial.

Definition

The factorial function of a positive integer $n$ is defined by the product of all positive integers not greater than $n$:

$$n! = 1 \cdot 2 \cdot 3 \cdots (n-2) \cdot (n-1) \cdot n = \prod_{i=1}^{n} i.$$

If this product formula is changed to keep all but the last term, it would define a product of the same form, for a smaller factorial. This leads to a recurrence relation, according to which each value of the factorial function can be obtained by multiplying the previous value by $n$:

$$n! = n \cdot (n-1)!.$$

For example, $5! = 5 \cdot 4! = 5 \cdot 24 = 120$.
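As a small illustration (a Python sketch with hypothetical function names), the product formula and the recurrence give the same values. `math.prod` returns 1 for an empty range, which matches the convention for $0!$ discussed below.

```python
import math

def factorial_product(n: int) -> int:
    """n! as the product 1 * 2 * ... * n (an empty product, equal to 1, when n = 0)."""
    return math.prod(range(1, n + 1))

def factorial_table(limit: int) -> list[int]:
    """Return [0!, 1!, ..., limit!] using the recurrence n! = n * (n - 1)!."""
    table = [1]  # base case 0! = 1
    for n in range(1, limit + 1):
        table.append(n * table[-1])
    return table

print(factorial_table(6))    # [1, 1, 2, 6, 24, 120, 720]
print(factorial_product(5))  # 120
```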

Factorial of zero

The factorial of $0$ is $1$, or in symbols, $0! = 1$. There are several motivations for this definition:

- For $n = 0$, the definition of $n!$ as a product involves the product of no numbers at all, and so is an example of the broader convention that the empty product, a product of no factors, is equal to the multiplicative identity, $1$.

- There is exactly one permutation of zero objects: with nothing to permute, the only rearrangement is to do nothing.

- This convention makes many identities in combinatorics hold for all valid choices of their parameters. For instance, the number of ways to choose all $n$ elements from a set of $n$ is $\binom{n}{n} = \frac{n!}{n!\,0!} = 1$, a binomial coefficient identity that would only be valid with $0! = 1$.

- With $0! = 1$, the recurrence relation for the factorial remains valid at $n = 1$. Therefore, with this convention, a recursive computation of the factorial needs only the value for zero as a base case, simplifying the computation and avoiding the need for additional special cases.

- Setting $0! = 1$ allows for the compact expression of many formulae, such as the exponential function, as a power series: $e^x = \sum_{n=0}^{\infty} \frac{x^n}{n!}$.

- This choice matches the gamma function, $0! = \Gamma(0 + 1) = 1$, and the gamma function must have this value to be a continuous function.

Applications

The earliest uses of the factorial function involve counting permutations: there are $n!$ different ways of arranging $n$ distinct objects into a sequence. Factorials appear more broadly in many formulas in combinatorics, to account for different orderings of objects. For instance, the binomial coefficients $\binom{n}{k}$ count the $k$-element combinations (subsets of $k$ elements) from a set with $n$ elements, and can be computed from factorials using the formula

$$\binom{n}{k} = \frac{n!}{k!\,(n-k)!}.$$
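The formula can be evaluated directly with exact integer arithmetic, as in the Python sketch below (the name `binomial` is illustrative). Python's standard library also provides `math.comb`, which computes the same quantity without forming the large intermediate factorials.

```python
import math

def binomial(n: int, k: int) -> int:
    """Number of k-element subsets of an n-element set, via n! / (k! (n - k)!)."""
    if not 0 <= k <= n:
        return 0
    # Exact integer division: k! (n - k)! always divides n!.
    return math.factorial(n) // (math.factorial(k) * math.factorial(n - k))

print(binomial(5, 2))   # 10
print(binomial(52, 5))  # 2598960
```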

In algebra, the factorials arise through the binomial theorem, which uses binomial coefficients to expand powers of sums. They also occur in the coefficients used to relate certain families of polynomials to each other, for instance in Newton's identities for symmetric polynomials. Their use in counting permutations can also be restated algebraically: the factorials are the orders of finite symmetric groups. In calculus, factorials occur in Faà di Bruno's formula for chaining higher derivatives. In mathematical analysis, factorials frequently appear in the denominators of power series, most notably in the series for the exponential function,

$$e^x = 1 + \frac{x}{1} + \frac{x^2}{2} + \frac{x^3}{6} + \cdots = \sum_{i=0}^{\infty} \frac{x^i}{i!}.$$
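A short Python sketch of the exponential series: each term $x^i/i!$ is obtained from the previous one by multiplying by $x/(i+1)$, so no factorial has to be computed separately. The function name and the fixed number of terms are assumptions for illustration.

```python
import math

def exp_series(x: float, terms: int = 30) -> float:
    """Partial sum of e^x = sum_{i >= 0} x^i / i!, built term by term."""
    total, term = 0.0, 1.0  # term holds x^i / i!, starting at x^0 / 0! = 1
    for i in range(terms):
        total += term
        term *= x / (i + 1)  # x^(i+1) / (i+1)! = (x^i / i!) * x / (i + 1)
    return total

print(exp_series(1.0), math.e)
```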

In number theory, the most salient property of factorials is the divisibility of $n!$ by all positive integers up to $n$, described more precisely for prime factors by Legendre's formula. It follows that arbitrarily large prime numbers can be found as the prime factors of the numbers $n! \pm 1$, leading to a proof of Euclid's theorem that the number of primes is infinite. When $n! \pm 1$ is itself prime it is called a factorial prime; relatedly, Brocard's problem, also posed by Srinivasa Ramanujan, concerns the existence of square numbers of the form $n! + 1$. In contrast, the numbers $n! + 2, n! + 3, \ldots, n! + n$ must all be composite (each $n! + k$ with $2 \le k \le n$ is divisible by $k$), proving the existence of arbitrarily large prime gaps. An elementary proof of Bertrand's postulate on the existence of a prime in any interval of the form $[n, 2n]$, one of the first results of Paul Erdős, was based on the divisibility properties of factorials. The factorial number system is a mixed radix notation for numbers in which the place values of each digit are factorials.
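The factorial number system can be sketched in Python as repeated division by the radices $1, 2, 3, \ldots$; the digit in the $i$th place from the right (counting from $0$) has place value $i!$ and lies between $0$ and $i$, so the rightmost digit is always $0$. The function names below are hypothetical.

```python
import math

def to_factorial_base(n: int) -> list[int]:
    """Digits of n >= 0 in the factorial number system, most significant first."""
    if n == 0:
        return [0]
    digits = []
    radix = 1
    while n > 0:
        n, d = divmod(n, radix)  # digit for the place with value (radix - 1)!
        digits.append(d)
        radix += 1
    return digits[::-1]

def from_factorial_base(digits: list[int]) -> int:
    """Inverse of to_factorial_base: sum of digit * place! over the places."""
    value = 0
    for place, d in enumerate(reversed(digits)):
        value += d * math.factorial(place)
    return value

print(to_factorial_base(463))  # [3, 4, 1, 0, 1, 0], i.e. 3*5! + 4*4! + 1*3! + 1*1!
```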

Factorials are used extensively in probability theory, for instance in the Poisson distribution and in the probabilities of random permutations. In computer science, beyond appearing in the analysis of brute-force searches over permutations, factorials arise in the lower bound of $\log_2 n! = n \log_2 n - O(n)$ on the number of comparisons needed to comparison sort a set of $n$ items, and in the analysis of chained hash tables, where the distribution of keys per cell can be accurately approximated by a Poisson distribution. Moreover, factorials naturally appear in formulae from quantum and statistical physics, where one often considers all the possible permutations of a set of particles. In statistical mechanics, calculations of entropy such as Boltzmann's entropy formula or the Sackur–Tetrode equation must correct the count of microstates by dividing by the factorials of the numbers of each type of indistinguishable particle to avoid the Gibbs paradox. Quantum physics provides the underlying reason why these corrections are necessary.
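The sorting lower bound can be evaluated exactly: a comparison sort must distinguish all $n!$ orders of its input, and $k$ two-way comparisons can distinguish at most $2^k$ of them, so at least $\lceil \log_2 n! \rceil$ comparisons are needed in the worst case. A Python sketch (function name hypothetical) computes this ceiling with integer bit lengths, avoiding floating-point rounding.

```python
import math

def comparison_lower_bound(n: int) -> int:
    """Smallest k with 2**k >= n!, i.e. ceil(log2(n!)).

    For an integer m >= 1, (m - 1).bit_length() equals ceil(log2(m)).
    """
    return (math.factorial(n) - 1).bit_length()

for n in (3, 4, 5, 12):
    print(n, comparison_lower_bound(n))
```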

Properties

Growth and approximation

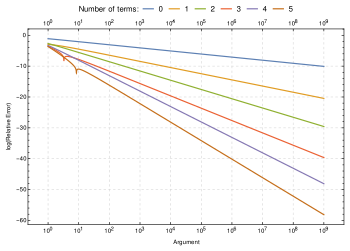

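As a function of $n$, the factorial has faster than exponential growth, but grows more slowly than a double exponential function. Its growth rate is similar to $n^n$, but slower by an exponential factor. One way of approaching this result is by taking the natural logarithm of the factorial, which turns its product formula into a sum, and then estimating the sum by an integral:

$$\ln n! = \sum_{x=1}^{n} \ln x \approx \int_1^n \ln x \, dx = n \ln n - n + 1.$$

Exponentiating this estimate (and ignoring the $+1$ term) approximates $n!$ as $(n/e)^n$. Bounding the sum more carefully shows that this estimate needs a correction factor proportional to $\sqrt{n}$; the result is Stirling's approximation,

$$n! \sim \sqrt{2\pi n}\left(\frac{n}{e}\right)^n.$$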
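The quality of these estimates can be checked numerically. This Python sketch (hypothetical function names) compares $\ln n!$, obtained from `math.lgamma`, with the integral estimate, and compares $n!$ with Stirling's approximation $\sqrt{2\pi n}\,(n/e)^n$. The gap in the first comparison grows only logarithmically, while the ratio in the second approaches 1 from above.

```python
import math

def ln_factorial(n: int) -> float:
    """ln(n!) computed as lgamma(n + 1)."""
    return math.lgamma(n + 1)

def integral_estimate(n: int) -> float:
    """Integral of ln x from 1 to n, which equals n ln n - n + 1."""
    return n * math.log(n) - n + 1

def stirling(n: int) -> float:
    """Stirling's approximation sqrt(2 pi n) (n / e)^n (overflows a float past n ~ 170)."""
    return math.sqrt(2 * math.pi * n) * (n / math.e) ** n

for n in (10, 50, 100):
    gap = ln_factorial(n) - integral_estimate(n)
    ratio = math.factorial(n) / stirling(n)
    print(n, gap, ratio)
```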

The binary logarithm of the factorial, used to analyze comparison sorting, can be very accurately estimated using Stirling's approximation. In the formula below, the $O(1)$ term invokes big O notation:

$$\log_2 n! = n \log_2 n - (\log_2 e)\, n + \frac{1}{2} \log_2 n + O(1).$$
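Stirling's approximation also identifies the bounded term in this formula: it tends to $\tfrac{1}{2}\log_2(2\pi) \approx 1.33$ as $n$ grows. The Python sketch below (hypothetical names) subtracts the explicit terms from $\log_2 n!$, computed via `math.lgamma`, so the residual can be compared with that constant.

```python
import math

def log2_factorial(n: int) -> float:
    """log2(n!) computed from the log-gamma function."""
    return math.lgamma(n + 1) / math.log(2)

def residual(n: int) -> float:
    """log2(n!) minus n log2 n - n log2 e + (1/2) log2 n, the part hidden in O(1)."""
    explicit = n * math.log2(n) - n * math.log2(math.e) + 0.5 * math.log2(n)
    return log2_factorial(n) - explicit

print(residual(10), residual(1000), 0.5 * math.log2(2 * math.pi))
```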

Divisibility and digits

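The product formula for the factorial implies that $n!$ is divisible by all prime numbers that are at most $n$, and by no larger prime numbers. More precise information about its divisibility is given by Legendre's formula, which gives the exponent $\nu_p(n!)$ of each prime $p$ in the prime factorization of $n!$ as

$$\nu_p(n!) = \sum_{i=1}^{\infty} \left\lfloor \frac{n}{p^i} \right\rfloor = \frac{n - s_p(n)}{p - 1},$$

where $s_p(n)$ denotes the sum of the base-$p$ digits of $n$.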
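Legendre's formula translates directly into code. The Python sketch below (hypothetical function names) computes the exponent both as the sum of the terms $\lfloor n/p^i \rfloor$, stopping once $p^i > n$ because every later term is zero, and through the base-$p$ digit sum.

```python
import math

def legendre_exponent(n: int, p: int) -> int:
    """Exponent of the prime p in n!: the sum of floor(n / p**i) for i >= 1."""
    total, power = 0, p
    while power <= n:
        total += n // power
        power *= p
    return total

def digit_sum(n: int, base: int) -> int:
    """Sum of the digits of n written in the given base."""
    s = 0
    while n:
        n, d = divmod(n, base)
        s += d
    return s

def legendre_exponent_digits(n: int, p: int) -> int:
    """Equivalent closed form (n - s_p(n)) / (p - 1)."""
    return (n - digit_sum(n, p)) // (p - 1)

# 10! = 3628800 = 2^8 * 3^4 * 5^2 * 7
print([legendre_exponent(10, p) for p in (2, 3, 5, 7)])  # [8, 4, 2, 1]
```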

The special case of Legendre's formula for $p = 5$ gives the number of trailing zeros in the decimal representation of the factorials. According to this formula, the number of zeros can be obtained by subtracting the sum of the base-5 digits of $n$ from $n$, and dividing the result by four. Legendre's formula implies that the exponent of the prime $p = 2$ is always at least as large as the exponent for $p = 5$, so each factor of five can be paired with a factor of two to produce one of these trailing zeros. The leading digits of the factorials are distributed according to Benford's law. Every sequence of digits, in any base, is the sequence of initial digits of some factorial number in that base.
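The trailing-zero rule just described can be sketched in Python and checked against the decimal expansion of $n!$. The function name is hypothetical; the string-based check relies on `math.factorial` and is only practical for moderate $n$.

```python
import math

def trailing_zeros(n: int) -> int:
    """Trailing decimal zeros of n!, computed as (n - s_5(n)) / 4.

    s_5(n) is the sum of the base-5 digits of n.
    """
    s, m = 0, n
    while m:
        m, d = divmod(m, 5)
        s += d
    return (n - s) // 4

n = 100
digits = str(math.factorial(n))
print(trailing_zeros(n), len(digits) - len(digits.rstrip("0")))  # 24 24
```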

Another result on divisibility of factorials, Wilson's theorem, states that for an integer $n > 1$, $(n-1)! + 1$ is divisible by $n$ if and only if $n$ is a prime number. For any given integer $x$, the Kempner function of $x$ is given by the smallest $n$ for which $x$ divides $n!$. For almost all numbers (all but a subset of exceptions with asymptotic density zero), it coincides with the largest prime factor of $x$.
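Both statements can be tried on small numbers with the Python sketch below (hypothetical function names). Wilson's theorem gives a correct primality test, but computing $(n-1)!$ makes it far slower than ordinary methods, so it is shown only as an illustration. The Kempner function is found by building successive factorials until one is divisible by $x$.

```python
import math

def is_prime_wilson(n: int) -> bool:
    """Wilson's theorem: for n > 1, n is prime iff (n - 1)! + 1 is divisible by n."""
    return n > 1 and (math.factorial(n - 1) + 1) % n == 0

def kempner(x: int) -> int:
    """Smallest n such that x divides n! (for x >= 1)."""
    n, f = 1, 1  # f holds n!
    while f % x != 0:
        n += 1
        f *= n
    return n

print([p for p in range(2, 30) if is_prime_wilson(p)])  # [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]
print([kempner(x) for x in range(1, 11)])               # [1, 2, 3, 4, 5, 3, 7, 4, 6, 5]
```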

The product of two factorials, $m! \cdot n!$, always evenly divides $(m+n)!$; the quotient is the binomial coefficient $\binom{m+n}{m}$. There are infinitely many factorials that equal the product of other factorials: if $n$ is itself any product of factorials, then $n!$ equals that same product multiplied by one more factorial, $(n-1)!$. The only known examples of factorials that are products of other factorials but are not of this "trivial" form are $9! = 7! \cdot 3! \cdot 3! \cdot 2!$, $10! = 7! \cdot 6! = 7! \cdot 5! \cdot 3!$, and $16! = 14! \cdot 5! \cdot 2!$. It would follow from the abc conjecture that there are only finitely many nontrivial examples.
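These divisibility facts and identities can be confirmed with exact integer arithmetic. The Python check below is only a finite verification over a small range, not a proof; `itertools.product` is used simply to range over pairs $(m, n)$.

```python
import math
from itertools import product

f = math.factorial

# m! * n! divides (m + n)! for every pair checked; the quotient is C(m + n, m).
assert all(f(m + n) % (f(m) * f(n)) == 0 for m, n in product(range(30), repeat=2))
assert all(f(m + n) // (f(m) * f(n)) == math.comb(m + n, m) for m, n in product(range(30), repeat=2))

# A "trivial" example: 6 = 3!, so 6! = 3! * 5!.
print(f(6) == f(3) * f(5))                           # True

# The three known nontrivial examples.
print(f(9) == f(7) * f(3) * f(3) * f(2))             # True
print(f(10) == f(7) * f(6) == f(7) * f(5) * f(3))    # True
print(f(16) == f(14) * f(5) * f(2))                  # True
```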

The greatest common divisor of the values of a primitive polynomial of degree $d$ over the integers evenly divides $d!$.

Continuous interpolation and non-integer generalization

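There are infinitely many ways to extend the factorials to a continuous function. The most widely used of these uses the gamma function, which can be defined for positive real numbers as the integral

$$\Gamma(z) = \int_0^\infty x^{z-1} e^{-x} \, dx.$$

The resulting function is related to the factorial of a non-negative integer $n$ by $n! = \Gamma(n+1)$.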

The same integral converges more generally for any complex number $z$ whose real part is positive. It can be extended to the non-integer points in the rest of the complex plane by solving for Euler's reflection formula

$$\Gamma(z)\,\Gamma(1-z) = \frac{\pi}{\sin \pi z}.$$
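Python's standard library exposes the gamma function as `math.gamma`, which accepts real arguments other than zero and the negative integers (there it raises `ValueError`). The snippet below checks $n! = \Gamma(n+1)$, the value $\Gamma(\tfrac12) = \sqrt{\pi}$, and the reflection formula at two sample points; it is a numerical illustration with floating-point tolerances, not a derivation.

```python
import math

# n! = Gamma(n + 1) at the non-negative integers (up to floating-point rounding).
for n in range(15):
    assert math.isclose(math.gamma(n + 1), math.factorial(n))

# A value off the integers: Gamma(1/2) = sqrt(pi).
print(math.gamma(0.5), math.sqrt(math.pi))

# Euler's reflection formula Gamma(z) Gamma(1 - z) = pi / sin(pi z),
# checked at a point in (0, 1) and at a negative non-integer.
for z in (0.3, -0.5):
    print(z, math.gamma(z) * math.gamma(1 - z), math.pi / math.sin(math.pi * z))
```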

Other complex functions that interpolate the factorial values include Hadamard's gamma function, which is an entire function over all the complex numbers, including the non-positive integers. In the p-adic numbers, it is not possible to continuously interpolate the factorial function directly, because the factorials of large integers (a dense subset of the p-adics) converge to zero according to Legendre's formula, forcing any continuous function that is close to their values to be zero everywhere. Instead, the p-adic gamma function provides a continuous interpolation of a modified form of the factorial, omitting the factors in the factorial that are divisible by p.

The digamma function is the logarithmic derivative of the gamma function. Just as the gamma function provides a continuous interpolation of the factorials, offset by one, the digamma function provides a continuous interpolation of the harmonic numbers, offset by the Euler–Mascheroni constant.

Computation

The factorial function is a common feature in scientific calculators. It is also included in scientific programming libraries such as the Python mathematical functions module and the Boost C++ library. If efficiency is not a concern, computing factorials is trivial: just successively multiply a variable initialized to $1$ by the integers up to $n$. The simplicity of this computation makes it a common example in the use of different computer programming styles and methods.

The computation of $n!$ can be expressed in pseudocode using iteration as

```
define factorial(n):
    f := 1
    for i := 1, 2, 3, ..., n:
        f := f × i
    return f
```

or using recursion based on its recurrence relation as

```
define factorial(n):
    if (n = 0) return 1
    return n × factorial(n − 1)
```
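The two pseudocode versions translate directly into Python, sketched below with hypothetical function names. CPython does not eliminate tail calls and limits recursion depth (1000 frames by default), so the recursive version is illustrative only; the standard library's `math.factorial` is the practical choice.

```python
def factorial_iterative(n: int) -> int:
    """Multiply an accumulator initialized to 1 by 1, 2, ..., n."""
    if n < 0:
        raise ValueError("factorial is defined only for non-negative integers")
    f = 1
    for i in range(1, n + 1):
        f *= i
    return f

def factorial_recursive(n: int) -> int:
    """Direct transcription of n! = n * (n - 1)!, with 0! = 1 as the only base case."""
    if n < 0:
        raise ValueError("factorial is defined only for non-negative integers")
    if n == 0:
        return 1
    return n * factorial_recursive(n - 1)

print(factorial_iterative(10), factorial_recursive(10))  # 3628800 3628800
```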

Other methods suitable for its computation include memoization, dynamic programming, and functional programming. The computational complexity of these algorithms may be analyzed using the unit-cost random-access machine model of computation, in which each arithmetic operation takes constant time and each number uses a constant amount of storage space. In this model, these methods can compute $n!$ in time $O(n)$, and the iterative version uses space $O(1)$. Unless optimized for tail recursion, the recursive version takes linear space to store its call stack. However, this model of computation is only suitable when $n$ is small enough to allow $n!$ to fit into a machine word. The values 12! and 20! are the largest factorials that can be stored in, respectively, 32-bit and 64-bit integers. Floating point can represent larger factorials, but approximately rather than exactly, and will still overflow for factorials larger than $170! \approx 7.3 \times 10^{306}$.
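The word-size limits can be confirmed with exact integers. This Python sketch (hypothetical helper name) finds the largest $n$ whose factorial fits under the maximum signed 32-bit and 64-bit values, and shows that converting $171!$ to a double-precision float overflows.

```python
import math

INT32_MAX = 2**31 - 1  # largest signed 32-bit integer
INT64_MAX = 2**63 - 1  # largest signed 64-bit integer

def largest_factorial_fitting(limit: int) -> int:
    """Largest n such that n! <= limit (assumes limit >= 1)."""
    n = 0
    while math.factorial(n + 1) <= limit:
        n += 1
    return n

print(largest_factorial_fitting(INT32_MAX), largest_factorial_fitting(INT64_MAX))  # 12 20

# Double-precision floats: 170! is finite (about 7.3e306), but 171! is out of range.
print(float(math.factorial(170)))
try:
    float(math.factorial(171))
except OverflowError:
    print("171! overflows a double")
```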

The exact computation of larger factorials involves arbitrary-precision arithmetic, because of fast growth and integer overflow. Time of computation can be analyzed as a function of the number of digits or bits in the result. By Stirling's formula, $n!$ has $b = O(n \log n)$ bits. The Schönhage–Strassen algorithm can produce a $b$-bit product in time $O(b \log b \log \log b)$, and faster multiplication algorithms taking time $O(b \log b)$ are known. However, computing the factorial involves repeated products, rather than a single multiplication, so these time bounds do not apply directly. In this setting, computing $n!$ by multiplying the numbers from 1 to $n$ in sequence is inefficient, because it involves $n$ multiplications, a constant fraction of which take time $O(n \log^2 n)$ each, giving total time $O(n^2 \log^2 n)$. A better approach is to perform the multiplications as a divide-and-conquer algorithm that multiplies a sequence of $i$ numbers by splitting it into two subsequences of $i/2$ numbers, multiplies each subsequence, and combines the results with one last multiplication. This approach to the factorial takes total time $O(n \log^3 n)$: one logarithm comes from the number of bits in the factorial, a second comes from the multiplication algorithm, and a third comes from the divide and conquer.
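A Python sketch of the divide-and-conquer product (hypothetical function names) splits the range $1, \ldots, n$ in half recursively, so that the large multiplications combine operands of similar size. Small ranges are multiplied directly; the cutoff of 8 is an arbitrary choice. How much this helps in practice depends on the big-integer multiplication algorithm used by the runtime.

```python
import math

def range_product(lo: int, hi: int) -> int:
    """Product of lo, lo + 1, ..., hi (1 if lo > hi), splitting the range in half."""
    if lo > hi:
        return 1
    if hi - lo < 8:  # small ranges: plain sequential multiplication
        result = 1
        for k in range(lo, hi + 1):
            result *= k
        return result
    mid = (lo + hi) // 2
    # Two halves of similar size, combined with one final multiplication.
    return range_product(lo, mid) * range_product(mid + 1, hi)

def factorial_split(n: int) -> int:
    """n! as a balanced product tree over 1..n."""
    return range_product(1, n)

assert factorial_split(500) == math.factorial(500)
```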

Even better efficiency is obtained by computing $n!$ from its prime factorization, based on the principle that exponentiation by squaring is faster than expanding an exponent into a product. An algorithm for this by Arnold Schönhage begins by finding the list of the primes up to $n$, for instance using the sieve of Eratosthenes, and uses Legendre's formula to compute the exponent for each prime. Then it computes the product of the prime powers with these exponents, using a recursive algorithm, as follows:

- Use divide and conquer to compute the product of the primes whose exponents are odd

- Divide all of the exponents by two (rounding down to an integer), recursively compute the product of the prime powers with these smaller exponents, and square the result

- Multiply together the results of the two previous steps

The product of all primes up to $n$ is an $O(n)$-bit number, by the prime number theorem, so the time for the first step is $O(n \log^2 n)$, with one logarithm coming from the divide and conquer and another coming from the multiplication algorithm. In the recursive calls to the algorithm, the prime number theorem can again be invoked to prove that the numbers of bits in the corresponding products decrease by a constant factor at each level of recursion, so the total time for these steps at all levels of recursion adds in a geometric series to $O(n \log^2 n)$. The time for the squaring in the second step and the multiplication in the third step are again $O(n \log^2 n)$, because each is a single multiplication of a number with $O(n \log n)$ bits. Again, at each level of recursion the numbers involved have a constant fraction as many bits (because otherwise repeatedly squaring them would produce too large a final result), so again the amounts of time for these steps in the recursive calls add in a geometric series to $O(n \log^2 n)$. Consequently, the whole algorithm takes time $O(n \log^2 n)$, proportional to a single multiplication with the same number of bits in its result.
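The structure of Schönhage's algorithm can be sketched in Python as below. This is an illustration under stated assumptions, not a tuned implementation: it uses a plain sieve of Eratosthenes, Legendre's formula for the exponents, and Python's built-in integer multiplication, so it demonstrates the recursion (odd exponents first, then halving and squaring) rather than the asymptotic running time. All function names are hypothetical.

```python
import math

def primes_up_to(n: int) -> list[int]:
    """Sieve of Eratosthenes."""
    if n < 2:
        return []
    sieve = bytearray([1]) * (n + 1)
    sieve[0] = sieve[1] = 0
    for i in range(2, math.isqrt(n) + 1):
        if sieve[i]:
            sieve[i * i :: i] = bytes(len(range(i * i, n + 1, i)))
    return [i for i, flag in enumerate(sieve) if flag]

def legendre(n: int, p: int) -> int:
    """Exponent of the prime p in n! (Legendre's formula)."""
    e, q = 0, p
    while q <= n:
        e += n // q
        q *= p
    return e

def balanced_product(xs: list[int]) -> int:
    """Product of a list by divide and conquer."""
    if not xs:
        return 1
    if len(xs) == 1:
        return xs[0]
    mid = len(xs) // 2
    return balanced_product(xs[:mid]) * balanced_product(xs[mid:])

def prime_power_product(pairs: list[tuple[int, int]]) -> int:
    """Product of p**e over (p, e) pairs via the odd-exponent / square recursion."""
    if not pairs:
        return 1
    # Step 1: product of the primes whose exponents are odd.
    odd_part = balanced_product([p for p, e in pairs if e % 2 == 1])
    # Step 2: halve the exponents (rounding down), recurse, and square.
    halved = [(p, e // 2) for p, e in pairs if e // 2 > 0]
    # Step 3: multiply the two results.
    return odd_part * prime_power_product(halved) ** 2

def factorial_schonhage(n: int) -> int:
    pairs = [(p, legendre(n, p)) for p in primes_up_to(n)]
    return prime_power_product(pairs)

assert factorial_schonhage(1000) == math.factorial(1000)
```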

Related sequences and functions

Several other integer sequences are similar to or related to the factorials:

- Alternating factorial

- The alternating factorial is the absolute value of the alternating sum of the first $n$ factorials, $\sum_{i=1}^{n} (-1)^{n-i}\, i!$. These have mainly been studied in connection with their primality; only finitely many of them can be prime, but a complete list of primes of this form is not known.

- Bhargava factorial

- The Bhargava factorials are a family of integer sequences defined by Manjul Bhargava with similar number-theoretic properties to the factorials, including the factorials themselves as a special case.

- Double factorial

- The product of all the odd integers up to some odd positive integer $n$ is called the double factorial of $n$, and denoted by $n!!$. That is, $(2k-1)!! = \prod_{i=1}^{k} (2i-1) = \frac{(2k)!}{2^k\, k!}$. For example, 9!! = 1 × 3 × 5 × 7 × 9 = 945. Double factorials are used in trigonometric integrals, in expressions for the gamma function at half-integers and the volumes of hyperspheres, and in counting binary trees and perfect matchings.

- Exponential factorial

- Just as triangular numbers sum the numbers from $1$ to $n$, and factorials take their product, the exponential factorial exponentiates. The exponential factorial of $n$, denoted as $a_n$, is defined recursively as $a_n = n^{a_{n-1}}$, with the base case $a_0 = 1$. For example, $a_4 = 4^{3^{2^{1}}} = 262144$. These numbers grow much more quickly than regular factorials.

- Falling factorial

- The notations $(x)_n$ or $x^{\underline{n}}$ are sometimes used to represent the product of the $n$ integers counting up to and including $x$, equal to $x!/(x-n)!$. This is also known as a falling factorial or backward factorial, and the $(x)_n$ notation is a Pochhammer symbol. Falling factorials count the number of different sequences of $n$ distinct items that can be drawn from a universe of $x$ items. They occur as coefficients in the higher derivatives of polynomials, and in the factorial moments of random variables.

- Hyperfactorials

- The hyperfactorial of $n$ is the product $1^1 \cdot 2^2 \cdots n^n$. These numbers form the discriminants of Hermite polynomials. They can be continuously interpolated by the K-function, and obey analogues to Stirling's formula and Wilson's theorem.

- Jordan–Pólya numbers

- The Jordan–Pólya numbers are the products of factorials, allowing repetitions. Every tree has a symmetry group whose number of symmetries is a Jordan–Pólya number, and every Jordan–Pólya number counts the symmetries of some tree.

- Primorial

- The primorial $n\#$ is the product of prime numbers less than or equal to $n$; this construction gives them some similar divisibility properties to factorials, but unlike factorials they are squarefree. As with the factorial primes $n! \pm 1$, researchers have studied primorial primes $n\# \pm 1$.

- Subfactorial

- The subfactorial yields the number of derangements of a set of $n$ objects. It is sometimes denoted $!n$, and for $n \ge 1$ equals the closest integer to $n!/e$.

- Superfactorial

- The superfactorial of $n$ is the product of the first $n$ factorials. The superfactorials are continuously interpolated by the Barnes G-function.
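Several of these sequences are easy to compute directly. The Python helpers below are minimal sketches with hypothetical names, written for clarity rather than speed (for example, the primorial uses trial division). The subfactorial uses the standard derangement recurrence $!n = (n-1)\,\bigl(!(n-1) + !(n-2)\bigr)$ instead of rounding $n!/e$, which avoids floating-point error for large $n$.

```python
import math

def alternating_factorial(n: int) -> int:
    """|sum_{i=1..n} (-1)^(n-i) i!|."""
    return abs(sum((-1) ** (n - i) * math.factorial(i) for i in range(1, n + 1)))

def double_factorial(n: int) -> int:
    """n!! = n (n-2) (n-4) ...; for even n this multiplies the even numbers instead."""
    return math.prod(range(n, 0, -2))

def exponential_factorial(n: int) -> int:
    """a_0 = 1, a_n = n ** a_(n-1)."""
    a = 1
    for k in range(1, n + 1):
        a = k ** a
    return a

def falling_factorial(x: int, n: int) -> int:
    """(x)_n = x (x-1) ... (x-n+1) = x! / (x-n)! for 0 <= n <= x."""
    return math.prod(range(x - n + 1, x + 1))

def hyperfactorial(n: int) -> int:
    """1^1 * 2^2 * ... * n^n."""
    return math.prod(k ** k for k in range(1, n + 1))

def primorial(n: int) -> int:
    """Product of the primes <= n (trial division, fine for small n)."""
    return math.prod(p for p in range(2, n + 1)
                     if all(p % d for d in range(2, math.isqrt(p) + 1)))

def subfactorial(n: int) -> int:
    """Number of derangements: !0 = 1, !1 = 0, !k = (k-1)(!(k-1) + !(k-2))."""
    if n == 0:
        return 1
    a, b = 1, 0  # !0, !1
    for k in range(2, n + 1):
        a, b = b, (k - 1) * (a + b)
    return b

def superfactorial(n: int) -> int:
    """Product of the first n factorials, 1! * 2! * ... * n!."""
    return math.prod(math.factorial(k) for k in range(1, n + 1))

print(double_factorial(9), exponential_factorial(4), subfactorial(4))  # 945 262144 9
```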
