From Wikipedia, the free encyclopedia

https://en.wikipedia.org/wiki/Methods_of_detecting_exoplanets

Any planet is an extremely faint light source compared to its parent star. For example, a star like the Sun is about a billion times as bright as the reflected light from any of the planets orbiting it. In addition to the intrinsic difficulty of detecting such a faint light source, the light from the parent star causes a glare that washes it out. For those reasons, very few of the exoplanets reported as of April 2014 have been observed directly, with even fewer being resolved from their host star.

Instead, astronomers have generally had to resort to indirect methods to detect extrasolar planets. As of 2016, several different indirect methods have yielded success.

Established detection methods

The following methods have at least once proved successful for discovering a new planet or detecting an already discovered planet:

Radial velocity

A star with a planet will move in its own small orbit in response to the planet's gravity. This leads to variations in the speed with which the star moves toward or away from Earth, i.e. the variations are in the radial velocity of the star with respect to Earth. The radial velocity can be deduced from the displacement in the parent star's spectral lines due to the Doppler effect. The radial-velocity method measures these variations in order to confirm the presence of the planet using the binary mass function.

The speed of the star around the system's center of mass is much smaller than that of the planet, because the radius of its orbit around the center of mass is so small. (For example, the Sun moves by about 13 m/s due to Jupiter, but only about 9 cm/s due to Earth). However, velocity variations down to 3 m/s or even somewhat less can be detected with modern spectrometers, such as the HARPS (High Accuracy Radial Velocity Planet Searcher) spectrometer at the ESO 3.6 meter telescope in La Silla Observatory, Chile, the HIRES spectrometer at the Keck telescopes or EXPRES at the Lowell Discovery Telescope. An especially simple and inexpensive method for measuring radial velocity is "externally dispersed interferometry".
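The figures above can be checked with the standard expression for the stellar reflex semi-amplitude K of a Keplerian orbit. This is a minimal Python sketch. The rounded constants and the helper name `rv_semi_amplitude` are illustrative choices, not part of any particular package.

```python
import math

# Rounded physical constants (SI units); illustrative values.
G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30         # kg
M_JUP = 1.898e27         # kg
M_EARTH = 5.972e24       # kg
YEAR = 365.25 * 86400.0  # s


def rv_semi_amplitude(m_planet, m_star, period_s, inc_rad=math.pi / 2, ecc=0.0):
    """Stellar radial-velocity semi-amplitude K in m/s for a Keplerian orbit.

    K = (2 pi G / P)^(1/3) * m_p sin(i) / (m_star + m_p)^(2/3) / sqrt(1 - e^2)
    """
    return ((2 * math.pi * G / period_s) ** (1 / 3)
            * m_planet * math.sin(inc_rad)
            / (m_star + m_planet) ** (2 / 3)
            / math.sqrt(1 - ecc ** 2))


# Edge-on (i = 90 deg), circular orbits around a Sun-mass star.
k_jup = rv_semi_amplitude(M_JUP, M_SUN, 11.86 * YEAR)
k_earth = rv_semi_amplitude(M_EARTH, M_SUN, 1.0 * YEAR)
print(f"Jupiter analogue: {k_jup:.1f} m/s")
print(f"Earth analogue:   {k_earth * 100:.1f} cm/s")
```

This gives roughly 12.5 m/s and 9 cm/s, in line with the values quoted above, and shows why an Earth analogue sits far below a precision floor of a few m/s.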

Until around 2012, the radial-velocity method (also known as Doppler spectroscopy) was by far the most productive technique used by planet hunters. (After 2012, the transit method from the Kepler spacecraft overtook it in number.) The radial velocity signal is distance independent, but requires high signal-to-noise ratio spectra to achieve high precision, and so is generally used only for relatively nearby stars, out to about 160 light-years from Earth, to find lower-mass planets. It is also not possible to observe many target stars at once with a single telescope. Planets of Jovian mass can be detected around stars up to a few thousand light-years away. This method easily finds massive planets that are close to their stars. Modern spectrographs can also easily detect Jupiter-mass planets orbiting 10 astronomical units from the parent star, but detecting those planets requires many years of observation. Earth-mass planets are currently detectable only in very small orbits around low-mass stars, e.g. Proxima b.

It is easier to detect planets around low-mass stars, for two reasons. First, a planet of a given mass induces a larger reflex motion in a lower-mass star. Second, low-mass main-sequence stars generally rotate relatively slowly. Fast rotation makes spectral lines less clear, because one half of the star rapidly rotates away from the observer while the other half approaches. Detecting planets around more massive stars is easier if the star has left the main sequence, because leaving the main sequence slows down the star's rotation.

Sometimes Doppler spectrography produces false signals, especially in multi-planet and multi-star systems. Magnetic fields and certain types of stellar activity can also give false signals. When the host star has multiple planets, false signals can also arise from insufficient data: because stars are generally not observed continuously, several different solutions may fit the same data. Some of the false signals can be eliminated by analyzing the stability of the planetary system, conducting photometric analysis of the host star, and knowing its rotation period and stellar activity cycle periods.

Planets with orbits highly inclined to the line of sight from Earth produce smaller visible wobbles, and are thus more difficult to detect. One of the advantages of the radial velocity method is that the eccentricity of the planet's orbit can be measured directly. One of the main disadvantages of the radial-velocity method is that it can only estimate a planet's minimum mass (M sin i, where M is the true mass and i is the orbital inclination). The posterior distribution of the inclination angle i depends on the true mass distribution of the planets. However, when there are multiple planets in the system that orbit relatively close to each other and have sufficient mass, orbital stability analysis allows one to constrain the maximum mass of these planets. The radial-velocity method can be used to confirm findings made by the transit method. When both methods are used in combination, the planet's true mass can be estimated.
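The minimum-mass limitation can be made concrete by inverting the semi-amplitude relation. The sketch below assumes a circular orbit and the usual approximation that the planet's mass is negligible next to the star's; the input values K = 12.5 m/s and P = 11.86 yr are hypothetical, and `minimum_mass` is an illustrative helper name.

```python
import math

G = 6.674e-11            # m^3 kg^-1 s^-2
M_SUN = 1.989e30         # kg
M_JUP = 1.898e27         # kg
YEAR = 365.25 * 86400.0  # s


def minimum_mass(k_ms, period_s, m_star, ecc=0.0):
    """Return M sin i in kg from the RV semi-amplitude K.

    Assumes m_planet << m_star, so the planet's own mass is neglected
    in the (m_star + m_planet)^(2/3) term.
    """
    return (k_ms * math.sqrt(1 - ecc ** 2)
            * m_star ** (2 / 3)
            * (period_s / (2 * math.pi * G)) ** (1 / 3))


# Hypothetical measurement around a Sun-mass host.
msini = minimum_mass(12.5, 11.86 * YEAR, M_SUN)
print(f"M sin i = {msini / M_JUP:.2f} M_Jup")

# RV alone cannot give i. An independent inclination (e.g. from a transit,
# where i is close to 90 deg) converts the minimum mass into a true mass.
for inc_deg in (90.0, 60.0, 30.0):
    true_mass = msini / math.sin(math.radians(inc_deg))
    print(f"i = {inc_deg:4.0f} deg -> M = {true_mass / M_JUP:.2f} M_Jup")
```

The same signal is compatible with a Jupiter-mass planet seen edge-on or a planet twice as massive seen at 30 degrees, which is why a transit (fixing i near 90 degrees) pins down the true mass.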

Although radial velocity of the star only gives a planet's minimum mass, if the planet's spectral lines can be distinguished from the star's spectral lines then the radial velocity of the planet itself can be found, and this gives the inclination of the planet's orbit. This enables measurement of the planet's actual mass. This also rules out false positives, and also provides data about the composition of the planet. The main issue is that such detection is possible only if the planet orbits around a relatively bright star and if the planet reflects or emits a lot of light.

Transit photometry

Technique, advantages, and disadvantages

While the radial velocity method provides information about a planet's mass, the photometric method can determine the planet's radius. If a planet crosses (transits) in front of its parent star's disk, then the observed visual brightness of the star drops by a small amount, depending on the relative sizes of the star and the planet. For example, in the case of HD 209458, the star dims by 1.7%. However, most transit signals are considerably smaller; for example, an Earth-size planet transiting a Sun-like star produces a dimming of only 80 parts per million (0.008 percent).
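For a uniform (non-limb-darkened) stellar disk, the fractional dip is simply the ratio of the projected areas, (Rp/Rs)^2. A minimal sketch with rounded radii follows; the helper name is illustrative.

```python
R_SUN = 6.957e8      # m
R_JUP = 7.1492e7     # m, equatorial radius
R_EARTH = 6.371e6    # m, mean radius


def transit_depth(r_planet, r_star):
    """Fractional flux dip (Rp/Rs)^2 for a uniform stellar disk."""
    return (r_planet / r_star) ** 2


depth_earth = transit_depth(R_EARTH, R_SUN)
depth_jup = transit_depth(R_JUP, R_SUN)
print(f"Earth-size:   {depth_earth * 1e6:.0f} ppm")
print(f"Jupiter-size: {depth_jup * 100:.2f} %")
```

The Earth-size case comes out at about 84 ppm, consistent with the roughly 80 ppm figure above, while a Jupiter-size planet dims a Sun-like star by about 1%.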

A theoretical light-curve model of a transiting exoplanet predicts the following observable characteristics of a planetary system: transit depth (δ), transit duration (T), ingress/egress duration (τ), and orbital period (P). These observed quantities rest on several assumptions: for convenience in the calculations, the planet and star are taken to be spherical, the stellar disk uniform, and the orbit circular. The observed parameters of the light curve change depending on the path the planet takes across the stellar disk. The transit depth (δ) is the decrease in the star's normalized flux during a transit; it is set by the ratio of the planet's radius to the star's radius. For example, for a star of one solar radius, a larger planet produces a deeper transit and a smaller planet a shallower one. The transit duration (T) is the length of time the planet spends crossing the star; it depends on how fast the planet moves along its orbit during the transit. The ingress/egress duration (τ) is the time the planet takes to move fully onto the stellar disk (ingress) and fully off it (egress). If the planet crosses along a diameter of the star, ingress and egress are shortest, because the planet's path crosses the stellar limb at right angles. If the planet crosses along any other chord, ingress and egress lengthen the farther the chord lies from the diameter, because the planet spends longer partially covering the star.
From these observable parameters, a number of different physical parameters (semi-major axis, star mass, star radius, planet radius, eccentricity, and inclination) are determined through calculations. With the combination of radial velocity measurements of the star, the mass of the planet is also determined.
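Under the same simplifying assumptions (circular orbit, spherical bodies, uniform disk), T and τ follow from the geometry of the chord the planet traces across the star. The sketch below uses the commonly quoted circular-orbit expressions; the function name and the Earth-analogue inputs (a/Rs ≈ 215, Rp/Rs ≈ 0.00916) are illustrative assumptions.

```python
import math


def transit_durations(period, a_over_rs, k, b):
    """Return (T_total, T_flat, tau) for one transit.

    Assumes a circular orbit, spherical bodies and a uniform stellar disk.
    period    : orbital period (outputs are in the same time unit)
    a_over_rs : semi-major axis in units of the stellar radius
    k         : radius ratio Rp / Rs
    b         : impact parameter in stellar radii (0 = crossing along a diameter)
    """
    if b >= 1 + k:
        raise ValueError("no transit: impact parameter too large")
    sin_i = math.sqrt(1 - (b / a_over_rs) ** 2)  # cos i = b / (a / Rs)

    def crossing_time(half_chord_sq):
        # Time for the planet's centre to traverse a chord of half-length
        # sqrt(half_chord_sq), in stellar radii, across the projected disk.
        x = math.sqrt(max(half_chord_sq, 0.0)) / (a_over_rs * sin_i)
        return (period / math.pi) * math.asin(min(x, 1.0))

    t_total = crossing_time((1 + k) ** 2 - b ** 2)  # first to fourth contact
    t_flat = crossing_time((1 - k) ** 2 - b ** 2)   # second to third contact; 0 if grazing
    tau = 0.5 * (t_total - t_flat)                  # ingress (= egress for a circular orbit)
    return t_total, t_flat, tau


# Roughly an Earth analogue: P = 365.25 d.
for b in (0.0, 0.5, 0.8):
    t, t_flat, tau = transit_durations(365.25, 215.0, 0.00916, b)
    print(f"b = {b:.1f}: T = {t * 24:.1f} h, ingress/egress = {tau * 24 * 60:.1f} min")
```

As the chord moves away from the diameter (larger b), the total duration shrinks while ingress and egress lengthen, matching the qualitative description above.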

This method has two major disadvantages. First, planetary transits are observable only when the planet's orbit happens to be almost exactly edge-on from the astronomers' vantage point. The probability of a planetary orbital plane lying directly along the line of sight to a star is the ratio of the diameter of the star to the diameter of the orbit (for small stars, the radius of the planet is also an important factor). About 10% of planets with small orbits have such an alignment, and the fraction decreases for planets with larger orbits. For a planet orbiting a Sun-sized star at 1 AU, the probability of a random alignment producing a transit is 0.47%. Therefore, the method cannot guarantee that any particular star is not a host to planets. However, by scanning large areas of the sky containing thousands or even hundreds of thousands of stars at once, transit surveys can find more extrasolar planets than the radial-velocity method. Several surveys have taken that approach, such as the ground-based MEarth Project, SuperWASP, KELT, and HATNet, as well as the space-based CoRoT, Kepler and TESS missions. The transit method also has the advantage of detecting planets around stars located a few thousand light-years away. The most distant planets detected by the Sagittarius Window Eclipsing Extrasolar Planet Search are located near the galactic center. However, reliable follow-up observations of these stars are nearly impossible with current technology.
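The alignment probability quoted above is the geometric ratio (Rs + Rp)/a for a randomly oriented circular orbit. A minimal sketch, with rounded constants and an illustrative helper name:

```python
R_SUN = 6.957e8   # m
AU = 1.496e11     # m


def transit_probability(a_m, r_star_m, r_planet_m=0.0):
    """Geometric transit probability (Rs + Rp) / a for a circular orbit
    with a randomly oriented orbital plane."""
    return (r_star_m + r_planet_m) / a_m


for a_au in (0.05, 0.1, 1.0, 5.2):
    p = transit_probability(a_au * AU, R_SUN)
    print(f"a = {a_au:4.2f} AU: P(transit) = {p:.2%}, "
          f"about 1 in {1 / p:.0f} such systems")
```

A Sun-like star gives roughly 9% at 0.05 AU and about 0.47% at 1 AU, which is why transit surveys must monitor very large numbers of stars.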

The second disadvantage of this method is a high rate of false detections. A 2012 study found that the rate of false positives for transits observed by the Kepler mission could be as high as 40% in single-planet systems. For this reason, a star with a single transit detection requires additional confirmation, typically from the radial-velocity method or orbital brightness modulation method. The radial velocity method is especially necessary for Jupiter-sized or larger planets, as objects of that size encompass not only planets, but also brown dwarfs and even small stars. As the false positive rate is very low in stars with two or more planet candidates, such detections often can be validated without extensive follow-up observations. Some can also be confirmed through the transit timing variation method.

Many points of light in the sky have brightness variations that may appear as transiting planets in flux measurements. False positives in the transit photometry method arise in three common forms: blended eclipsing binary systems, grazing eclipsing binary systems, and transits by planet-sized stars. Eclipsing binary systems usually produce deep eclipses that distinguish them from exoplanet transits, since planets are usually smaller than about 2 RJ (Jupiter radii), but eclipses are shallower for blended or grazing eclipsing binary systems.

Blended eclipsing binary systems consist of a normal eclipsing binary blended with a third (usually brighter) star along the same line of sight, usually at a different distance. The constant light of the third star dilutes the measured eclipse depth, so the light-curve may resemble that for a transiting exoplanet. In these cases, the target most often contains a large main sequence primary with a small main sequence secondary or a giant star with a main sequence secondary.

Grazing eclipsing binary systems are systems in which one object just barely grazes the limb of the other. In these cases, the maximum transit depth of the light curve is not proportional to the ratio of the squares of the radii of the two stars, but depends solely on the small fraction of the primary that is blocked by the secondary. The small measured dip in flux can mimic that of an exoplanet transit. Some false positives of this category can be easily identified if the eclipsing binary system has a circular orbit and the two companions have different masses. Because the orbit is cyclic, there are two eclipsing events, one with the primary occulting the secondary and one with the secondary occulting the primary. If the two stars have significantly different masses, and thus different radii and luminosities, these two eclipses have different depths. This repetition of a shallow and a deep transit event can easily be detected, allowing the system to be recognized as a grazing eclipsing binary. However, if the two stellar companions have approximately the same mass, the two eclipses are indistinguishable, making it impossible to demonstrate from transit photometry alone that a grazing eclipsing binary system is being observed.
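The alternating-depth check described above can be sketched as a comparison of odd-numbered and even-numbered eclipses. This is a minimal illustration, not a survey pipeline: the depth values are hypothetical, and the 3-sigma threshold and the helper name `odd_even_depth_test` are assumptions.

```python
import math
import statistics


def odd_even_depth_test(depths, n_sigma=3.0):
    """Compare alternating eclipse depths (events 1, 3, 5, ... vs 2, 4, 6, ...).

    A planet produces (nearly) equal consecutive dips; a binary whose two stars
    differ in size and luminosity alternates between deep and shallow dips.
    Returns (flagged, depth difference, standard error of the difference).
    """
    odd, even = depths[0::2], depths[1::2]
    if len(odd) < 2 or len(even) < 2:
        raise ValueError("need at least two odd and two even events")
    diff = statistics.mean(odd) - statistics.mean(even)
    se = math.sqrt(statistics.variance(odd) / len(odd)
                   + statistics.variance(even) / len(even))
    return abs(diff) > n_sigma * se, diff, se


# Hypothetical depths in ppm, in time order.
planet_like = [1000, 1010, 995, 1005, 1002, 998]
binary_like = [1000, 600, 1005, 595, 998, 602]
print(odd_even_depth_test(planet_like)[0])  # -> False
print(odd_even_depth_test(binary_like)[0])  # -> True
```

As the text notes, a binary with two nearly identical stars would pass this test, so it can only rule candidates out, never confirm them.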

Finally, there are two types of stars that are approximately the same size as gas giant planets: white dwarfs and brown dwarfs. This is because gas giant planets, white dwarfs, and brown dwarfs are all supported by electron degeneracy pressure. The light curve does not discriminate between masses, as it depends only on the size of the transiting object. When possible, radial velocity measurements are used to verify that the transiting or eclipsing body is of planetary mass, meaning less than 13 MJ (Jupiter masses). Transit timing variations can also determine the planet's mass (MP). Doppler tomography with a known radial velocity orbit can obtain the minimum MP and the projected spin-orbit alignment.

Red giant branch stars pose another problem for planet detection: although planets around these stars are much more likely to transit because of the larger stellar size, the transit signals are hard to separate from the star's own brightness variations, as red giants pulsate frequently, with periods of a few hours to days. This is especially notable with subgiants. In addition, these stars are much more luminous, and transiting planets block a much smaller percentage of the light coming from them. In contrast, planets can completely occult a very small star such as a neutron star or white dwarf, an event which would be easily detectable from Earth. However, due to the small star sizes, the chance of a planet aligning with such a stellar remnant is extremely small.

The main advantage of the transit method is that the size of the planet can be determined from the light curve. When combined with the radial-velocity method (which determines the planet's mass), one can determine the density of the planet, and hence learn something about the planet's physical structure. The planets that have been studied by both methods are by far the best-characterized of all known exoplanets.
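Combining the two measurements reduces to the mean density of a sphere. The sketch below uses the Solar System's Jupiter and Earth as reference points; the constants are rounded, and the helper name is illustrative.

```python
import math

M_JUP = 1.898e27    # kg
R_JUP = 6.9911e7    # m, mean radius
M_EARTH = 5.972e24  # kg
R_EARTH = 6.371e6   # m, mean radius


def bulk_density(mass_kg, radius_m):
    """Mean density in kg/m^3 of a sphere of the given mass and radius."""
    return mass_kg / (4 / 3 * math.pi * radius_m ** 3)


rho_jup = bulk_density(M_JUP, R_JUP)
rho_earth = bulk_density(M_EARTH, R_EARTH)
print(f"Jupiter: {rho_jup / 1000:.2f} g/cm^3, Earth: {rho_earth / 1000:.2f} g/cm^3")
```

A density near 1.3 g/cm^3 points to a gas-dominated giant, while one near 5.5 g/cm^3 suggests a rocky, iron-bearing body, which is the kind of structural inference the paragraph above refers to.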

The transit method also makes it possible to study the atmosphere of the transiting planet. When the planet transits the star, light from the star passes through the upper atmosphere of the planet. By studying the high-resolution stellar spectrum carefully, one can detect elements present in the planet's atmosphere. A planetary atmosphere, and the planet itself, could also be detected by measuring the polarization of the starlight as it passes through or is reflected off the planet's atmosphere.

Additionally, the secondary eclipse (when the planet is blocked by its star) allows direct measurement of the planet's radiation and helps to constrain the planet's orbital eccentricity without needing the presence of other planets. If the star's photometric intensity during the secondary eclipse is subtracted from its intensity before or after, only the signal caused by the planet remains. It is then possible to measure the planet's temperature and even to detect possible signs of cloud formations on it. In March 2005, two groups of scientists carried out measurements using this technique with the Spitzer Space Telescope. The two teams, from the Harvard-Smithsonian Center for Astrophysics, led by David Charbonneau, and the Goddard Space Flight Center, led by L. D. Deming, studied the planets TrES-1 and HD 209458b respectively. The measurements revealed the planets' temperatures: 1,060 K (790 °C) for TrES-1 and about 1,130 K (860 °C) for HD 209458b. In addition, the hot Neptune Gliese 436 b is known to enter secondary eclipse. However, some transiting planets orbit such that they do not enter secondary eclipse relative to Earth; HD 17156 b is over 90% likely to be one of the latter.
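The subtraction described above, followed by conversion to a temperature, can be sketched as below. This assumes both the star and the planet radiate as blackbodies in the observed band, which is a simplification; all numbers (Rp/Rs = 0.1, a 5800 K star, an 8 micron band, a 1100 K planet) are hypothetical, and the helper names are illustrative.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck(wavelength_m, temp_k):
    """Blackbody spectral radiance B_lambda(T) in W m^-3 sr^-1."""
    x = H * C / (wavelength_m * KB * temp_k)
    return 2 * H * C ** 2 / wavelength_m ** 5 / math.expm1(x)


def planet_star_contrast(flux_out, flux_in):
    """(out-of-eclipse - in-eclipse) / in-eclipse = F_planet / F_star."""
    return (flux_out - flux_in) / flux_in


def brightness_temperature(contrast, k, t_star, wavelength_m):
    """Invert contrast = k^2 * B(T_p) / B(T_star) for T_p (blackbody assumption)."""
    b_planet = contrast / k ** 2 * planck(wavelength_m, t_star)
    return H * C / (wavelength_m * KB
                    * math.log1p(2 * H * C ** 2 / (wavelength_m ** 5 * b_planet)))


# Hypothetical round trip: simulate normalised photometry, then recover T_p.
lam = 8e-6
true_contrast = 0.1 ** 2 * planck(lam, 1100.0) / planck(lam, 5800.0)
flux_in, flux_out = 1.0, 1.0 + true_contrast
contrast = planet_star_contrast(flux_out, flux_in)
t_p = brightness_temperature(contrast, 0.1, 5800.0, lam)
print(f"contrast = {contrast * 1e6:.0f} ppm, T_b = {t_p:.0f} K")
```

The recovered value is a brightness temperature in one band; real analyses combine several bands and atmospheric models rather than a single blackbody inversion.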

History

The first exoplanet for which transits were observed was HD 209458 b, which had been discovered using the radial velocity technique. These transits were observed in 1999 by two teams led by David Charbonneau and Gregory W. Henry. The first exoplanet to be discovered with the transit method was OGLE-TR-56b, found in 2002 by the OGLE project.

A French Space Agency mission, CoRoT, began in 2006 to search for planetary transits from orbit, where the absence of atmospheric scintillation allows improved accuracy. This mission was designed to be able to detect planets "a few times to several times larger than Earth" and performed "better than expected", with two exoplanet discoveries (both of the "hot Jupiter" type) as of early 2008. In June 2013, CoRoT's exoplanet count was 32 with several still to be confirmed. The satellite unexpectedly stopped transmitting data in November 2012 (after its mission had twice been extended), and was retired in June 2013.

In March 2009, NASA's Kepler mission was launched to scan a large number of stars in the constellation Cygnus with a measurement precision expected to detect and characterize Earth-sized planets. The Kepler mission used the transit method to scan a hundred thousand stars for planets. It was hoped that by the end of its 3.5-year mission, the satellite would have collected enough data to reveal planets even smaller than Earth. By scanning a hundred thousand stars simultaneously, it was able not only to detect Earth-sized planets but also to collect statistics on the numbers of such planets around Sun-like stars.

On 2 February 2011, the Kepler team released a list of 1,235 extrasolar planet candidates, including 54 that may be in the habitable zone. On 5 December 2011, the Kepler team announced that they had discovered 2,326 planetary candidates, of which 207 are similar in size to Earth, 680 are super-Earth-size, 1,181 are Neptune-size, 203 are Jupiter-size and 55 are larger than Jupiter. Compared to the February 2011 figures, the number of Earth-size and super-Earth-size planets increased by 200% and 140% respectively. Moreover, 48 planet candidates were found in the habitable zones of surveyed stars, marking a decrease from the February figure; this was due to the more stringent criteria in use in the December data. By June 2013, the number of planet candidates had increased to 3,278 and some confirmed planets were smaller than Earth, some even Mars-sized (such as Kepler-62c) and one even smaller than Mercury (Kepler-37b).

The Transiting Exoplanet Survey Satellite launched in April 2018.

Reflection and emission modulations

Short-period planets in close orbits around their stars will undergo reflected light variations because, like the Moon, they go through phases from full to new and back again. In addition, as these planets receive a lot of starlight, it heats them, making thermal emissions potentially detectable. Since telescopes cannot resolve the planet from the star, they see only the combined light, and the brightness of the host star seems to change periodically over each orbit. Although the effect is small (the photometric precision required is about the same as that needed to detect an Earth-sized planet transiting a solar-type star), such Jupiter-sized planets with an orbital period of a few days are detectable by space telescopes such as the Kepler Space Observatory. As with the transit method, it is easier to detect large planets orbiting close to their parent star than other planets, as these planets catch more light from their parent star. When a planet has a high albedo and is situated around a relatively luminous star, its light variations are easier to detect in visible light, while darker planets or planets around low-temperature stars are more easily detectable in infrared light with this method. In the long run, this method may find the most planets that will be discovered by Kepler, because the reflected light variation with orbital phase is largely independent of orbital inclination and does not require the planet to pass in front of the disk of the star. It still cannot detect planets with circular face-on orbits from Earth's viewpoint, as the amount of reflected light does not change during the orbit.
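The phase variation can be sketched by treating the planet as a Lambert sphere, a common idealization; real planets need not scatter this way. The geometric albedo of 0.3 and the 0.05 AU orbit are hypothetical choices, and the helper names are illustrative.

```python
import math

AU = 1.496e11       # m
R_JUP = 7.1492e7    # m


def lambert_phase(alpha):
    """Lambert-sphere phase function: 1 at full phase (alpha = 0), 0 at new phase."""
    return (math.sin(alpha) + (math.pi - alpha) * math.cos(alpha)) / math.pi


def reflected_contrast(orbital_phase, geometric_albedo, r_planet, a, inc_rad):
    """Reflected-light planet/star flux ratio for a circular orbit.

    orbital_phase = 0 at inferior conjunction (planet between star and observer),
    0.5 at superior conjunction (full phase for an edge-on orbit).
    """
    cos_alpha = -math.sin(inc_rad) * math.cos(2 * math.pi * orbital_phase)
    alpha = math.acos(max(-1.0, min(1.0, cos_alpha)))
    return geometric_albedo * (r_planet / a) ** 2 * lambert_phase(alpha)


phases = [i / 100 for i in range(100)]
for inc_deg in (90.0, 45.0, 0.0):
    curve = [reflected_contrast(ph, 0.3, R_JUP, 0.05 * AU, math.radians(inc_deg))
             for ph in phases]
    print(f"i = {inc_deg:4.0f} deg: peak-to-peak = {(max(curve) - min(curve)) * 1e6:.1f} ppm")
```

For this hypothetical planet the edge-on signal is a few tens of ppm; it shrinks at intermediate inclinations but does not require a transit, and it vanishes entirely for a face-on orbit, as stated above.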

The phase function of the giant planet is also a function of its thermal properties and atmosphere, if any. Therefore, the phase curve may constrain other planet properties, such as the size distribution of atmospheric particles. When a planet is found transiting and its size is known, the phase variations curve helps calculate or constrain the planet's albedo. It is more difficult with very hot planets as the glow of the planet can interfere when trying to calculate albedo. In theory, albedo can also be found in non-transiting planets when observing the light variations with multiple wavelengths. This allows scientists to find the size of the planet even if the planet is not transiting the star.

The first-ever direct detection of the spectrum of visible light reflected from an exoplanet was made in 2015 by an international team of astronomers. The astronomers studied light from 51 Pegasi b, the first exoplanet discovered orbiting a main-sequence (Sun-like) star, using the High Accuracy Radial velocity Planet Searcher (HARPS) instrument at the European Southern Observatory's La Silla Observatory in Chile.

Both CoRoT and Kepler have measured the reflected light from planets. However, these planets were already known since they transit their host star. The first planets discovered by this method are Kepler-70b and Kepler-70c, found by Kepler.

Relativistic beaming

A separate novel method to detect exoplanets from light variations uses relativistic beaming of the observed flux from the star due to its motion. It is also known as Doppler beaming or Doppler boosting. The method was first proposed by Abraham Loeb and Scott Gaudi in 2003. As the planet tugs the star with its gravitation, the density of photons and therefore the apparent brightness of the star changes from observer's viewpoint. Like the radial velocity method, it can be used to determine the orbital eccentricity and the minimum mass of the planet. With this method, it is easier to detect massive planets close to their stars as these factors increase the star's motion. Unlike the radial velocity method, it does not require an accurate spectrum of a star, and therefore can be used more easily to find planets around fast-rotating stars and more distant stars.

One of the biggest disadvantages of this method is that the light variation effect is very small. A Jovian-mass planet orbiting 0.025 AU away from a Sun-like star is barely detectable even when the orbit is edge-on. This is not an ideal method for discovering new planets, as the amount of emitted and reflected starlight from the planet is usually much larger than light variations due to relativistic beaming. This method is still useful, however, as it allows for measurement of the planet's mass without the need for follow-up data collection from radial velocity observations.
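To first order, the fractional beaming signal is proportional to the star's radial velocity semi-amplitude divided by the speed of light. The sketch below estimates it for the Jovian-mass planet at 0.025 AU mentioned above. The prefactor depends on the slope of the stellar spectrum in the observed band; the value 4 used here is an assumed, illustrative number, and the helper names are hypothetical.

```python
import math

G = 6.674e-11         # m^3 kg^-1 s^-2
C = 2.998e8           # m/s
M_SUN = 1.989e30      # kg
M_JUP = 1.898e27      # kg
AU = 1.496e11         # m


def orbital_period(a_m, m_total):
    """Kepler's third law for a circular orbit, in seconds."""
    return 2 * math.pi * math.sqrt(a_m ** 3 / (G * m_total))


def rv_semi_amplitude(m_planet, m_star, period_s, inc_rad=math.pi / 2):
    """Stellar reflex semi-amplitude K in m/s, circular orbit."""
    return ((2 * math.pi * G / period_s) ** (1 / 3) * m_planet * math.sin(inc_rad)
            / (m_star + m_planet) ** (2 / 3))


def beaming_amplitude(k_ms, prefactor=4.0):
    """Fractional flux semi-amplitude ~ prefactor * K / c.

    ASSUMPTION: prefactor = 4; in reality it depends on the star and the bandpass.
    """
    return prefactor * k_ms / C


a = 0.025 * AU
p = orbital_period(a, M_SUN + M_JUP)
k = rv_semi_amplitude(M_JUP, M_SUN, p)
amp = beaming_amplitude(k)
print(f"P = {p / 86400:.2f} d, K = {k:.0f} m/s, beaming ~ {amp * 1e6:.1f} ppm")
```

A signal of only a few ppm, even edge-on, illustrates why the effect is easily swamped by reflected and emitted light from the planet.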

The first discovery of a planet using this method (Kepler-76b) was announced in 2013.

Ellipsoidal variations

Massive planets can cause slight tidal distortions to their host stars. When a star has a slightly ellipsoidal shape, its apparent brightness varies depending on whether the elongated side of the star faces the observer. As with the relativistic beaming method, it helps to determine the minimum mass of the planet, and its sensitivity depends on the planet's orbital inclination. The effect on a star's apparent brightness can be much larger than with the relativistic beaming method, but the brightness cycle repeats twice per orbit. In addition, the planet distorts the shape of the star more if it has a low ratio of semi-major axis to stellar radius and the density of the star is low. This makes this method suitable for finding planets around stars that have left the main sequence.
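A widely used approximation scales the ellipsoidal amplitude as (Mp/Ms)(Rs/a)^3 sin^2 i, with a signal that repeats twice per orbit. In the sketch below the order-unity prefactor is an ASSUMED placeholder of 1.0 (it depends on limb and gravity darkening), the doubled stellar radius stands in for an evolved star, and the helper names are hypothetical.

```python
import math

M_SUN = 1.989e30    # kg
M_JUP = 1.898e27    # kg
R_SUN = 6.957e8     # m
AU = 1.496e11       # m


def ellipsoidal_amplitude(m_planet, m_star, r_star, a, inc_rad, alpha_ellip=1.0):
    """Approximate semi-amplitude alpha * (Mp/Ms) * (Rs/a)^3 * sin^2 i.

    ASSUMPTION: alpha_ellip = 1.0 is a placeholder for an order-unity factor.
    """
    return alpha_ellip * (m_planet / m_star) * (r_star / a) ** 3 * math.sin(inc_rad) ** 2


def ellipsoidal_signal(orbital_phase, amplitude):
    """Relative flux change: minima at conjunctions, maxima at quadratures,
    so the pattern repeats twice per orbit."""
    return -amplitude * math.cos(4 * math.pi * orbital_phase)


a = 0.025 * AU
for r_star in (R_SUN, 2 * R_SUN):
    amp = ellipsoidal_amplitude(M_JUP, M_SUN, r_star, a, math.pi / 2)
    print(f"Rs = {r_star / R_SUN:.0f} Rsun: ~{amp * 1e6:.0f} ppm")
```

Because of the (Rs/a)^3 dependence, doubling the stellar radius at a fixed orbit multiplies the signal by eight, which is why larger, lower-density stars that have left the main sequence are favourable targets.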

Pulsar timing

A pulsar is a neutron star: the small, ultradense remnant of a star that has exploded as a supernova. Pulsars emit radio waves extremely regularly as they rotate. Because the intrinsic rotation of a pulsar is so regular, slight anomalies in the timing of its observed radio pulses can be used to track the pulsar's motion. Like an ordinary star, a pulsar will move in its own small orbit if it has a planet. Calculations based on pulse-timing observations can then reveal the parameters of that orbit.

This method was not originally designed for the detection of planets, but is so sensitive that it is capable of detecting planets far smaller than any other method can, down to less than a tenth the mass of Earth. It is also capable of detecting mutual gravitational perturbations between the various members of a planetary system, thereby revealing further information about those planets and their orbital parameters. In addition, it can easily detect planets which are relatively far away from the pulsar.
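The sensitivity follows from light-travel time: for a circular orbit, the pulsar circles the barycentre at a distance a·Mp/(Mpsr + Mp), and pulse arrival times shift by the time light takes to cross that orbit's projection along the line of sight. The same light-travel-time argument underlies variable-star and eclipsing-binary timing. The pulsar mass of 1.4 solar masses and the 0.36 AU orbit below are hypothetical inputs, and the helper name is illustrative.

```python
C = 2.998e8         # m/s
AU = 1.496e11       # m
M_SUN = 1.989e30    # kg
M_EARTH = 5.972e24  # kg


def timing_amplitude(m_planet, m_pulsar, a_planet_m, sin_i=1.0):
    """Semi-amplitude (s) of pulse arrival-time variations, circular orbit."""
    a_pulsar = a_planet_m * m_planet / (m_pulsar + m_planet)  # pulsar's orbit about barycentre
    return a_pulsar * sin_i / C


for mass_earths in (1.0, 0.1, 0.01):
    tau = timing_amplitude(mass_earths * M_EARTH, 1.4 * M_SUN, 0.36 * AU)
    print(f"{mass_earths:5.2f} M_earth -> {tau * 1e6:.1f} microseconds")
```

An Earth-mass planet shifts arrival times by a few hundred microseconds and a tenth of an Earth mass by tens of microseconds, which gives a sense of why very small planets are within reach of precise pulse timing.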

There are two main drawbacks to the pulsar timing method: pulsars are relatively rare, and special circumstances are required for a planet to form around a pulsar. Therefore, it is unlikely that a large number of planets will be found this way. Additionally, life would likely not survive on planets orbiting pulsars due to the high intensity of ambient radiation.

In 1992, Aleksander Wolszczan and Dale Frail used this method to discover planets around the pulsar PSR 1257+12. Their discovery was quickly confirmed, making it the first confirmation of planets outside the Solar System.

Variable star timing

Like pulsars, some other types of pulsating variable stars are regular enough that radial velocity could be determined purely photometrically from the Doppler shift of the pulsation frequency, without needing spectroscopy. This method is not as sensitive as the pulsar timing variation method, due to the periodic activity being longer and less regular. The ease of detecting planets around a variable star depends on the pulsation period of the star, the regularity of pulsations, the mass of the planet, and its distance from the host star.

The first success with this method came in 2007, when V391 Pegasi b was discovered around a pulsating subdwarf star.

Transit timing

The transit timing variation method considers whether transits occur with strict periodicity, or if there is a variation. When multiple transiting planets are detected, they can often be confirmed with the transit timing variation method. This is useful in planetary systems far from the Sun, where radial velocity methods cannot detect them due to the low signal-to-noise ratio. If a planet has been detected by the transit method, then variations in the timing of the transit provide an extremely sensitive method of detecting additional non-transiting planets in the system with masses comparable to Earth's. It is easier to detect transit-timing variations if planets have relatively close orbits, and when at least one of the planets is more massive, causing the orbital period of a less massive planet to be more perturbed.

The main drawback of the transit timing method is that usually not much can be learnt about the planet itself. Transit timing variation can help to determine the maximum mass of a planet. In most cases, it can confirm if an object has a planetary mass, but it does not put narrow constraints on its mass. There are exceptions though, as planets in the Kepler-36 and Kepler-88 systems orbit close enough to accurately determine their masses.

The first significant detection of a non-transiting planet using TTV was carried out with NASA's Kepler spacecraft. The transiting planet Kepler-19b shows TTV with an amplitude of five minutes and a period of about 300 days, indicating the presence of a second planet, Kepler-19c, which has a period which is a near-rational multiple of the period of the transiting planet.
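In practice, a TTV analysis starts by fitting a strictly periodic (linear) ephemeris to the measured mid-transit times and examining the "observed minus calculated" (O-C) residuals. The sketch below does this with ordinary least squares on synthetic data loosely modelled on the Kepler-19b numbers quoted above (a hypothetical 10-day period plus a 5-minute sinusoid with a 300-day super-period); it is not real data, and the helper name is illustrative.

```python
import math


def linear_ephemeris_residuals(epochs, times):
    """Least-squares fit of T_n = T0 + n * P; return (T0, P, O - C residuals)."""
    n = len(epochs)
    mean_e = sum(epochs) / n
    mean_t = sum(times) / n
    s_ee = sum((e - mean_e) ** 2 for e in epochs)
    s_et = sum((e - mean_e) * (t - mean_t) for e, t in zip(epochs, times))
    period = s_et / s_ee
    t0 = mean_t - period * mean_e
    residuals = [t - (t0 + period * e) for e, t in zip(epochs, times)]
    return t0, period, residuals


# Synthetic transit times in days (hypothetical).
epochs = list(range(60))
amp_days = 5.0 / 1440.0
perturbed = [10.0 * e + amp_days * math.sin(2 * math.pi * 10.0 * e / 300.0) for e in epochs]
strict = [10.0 * e for e in epochs]

_, p_fit, oc = linear_ephemeris_residuals(epochs, perturbed)
_, _, oc_strict = linear_ephemeris_residuals(epochs, strict)
print(f"fitted P = {p_fit:.5f} d")
print(f"max |O-C| perturbed: {max(abs(r) for r in oc) * 1440:.1f} min")
print(f"max |O-C| strict:    {max(abs(r) for r in oc_strict) * 1440:.1e} min")
```

Strictly periodic transits leave residuals at the level of floating-point noise, while the perturbed series leaves a clear periodic O-C pattern of several minutes; inferring the perturber's mass and period from that pattern requires dynamical modelling beyond this sketch.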

In circumbinary planets, variations of transit timing are mainly caused by the orbital motion of the stars, instead of gravitational perturbations by other planets. These variations make it harder to detect these planets through automated methods. However, they make these planets easy to confirm once they are detected.

Transit duration variation

"Duration variation" refers to changes in how long the transit takes. Duration variations may be caused by an exomoon, apsidal precession for eccentric planets due to another planet in the same system, or general relativity.

When a circumbinary planet is found through the transit method, it can be easily confirmed with the transit duration variation method. In close binary systems, the stars significantly alter the motion of the companion, meaning that any transiting planet has significant variation in transit duration. The first such confirmation came from Kepler-16b.

Eclipsing binary minima timing

When a binary star system is aligned such that – from the Earth's point of view – the stars pass in front of each other in their orbits, the system is called an "eclipsing binary" star system. The time of minimum light, when the star with the brighter surface is at least partially obscured by the disc of the other star, is called the primary eclipse, and approximately half an orbit later, the secondary eclipse occurs when the brighter surface area star obscures some portion of the other star. These times of minimum light, or central eclipses, constitute a time stamp on the system, much like the pulses from a pulsar (except that rather than a flash, they are a dip in brightness). If there is a planet in circumbinary orbit around the binary stars, the stars will be offset around a binary-planet center of mass. As the stars in the binary are displaced back and forth by the planet, the times of the eclipse minima will vary. The periodicity of this offset may be the most reliable way to detect extrasolar planets around close binary systems. With this method, planets are more easily detectable if they are more massive, orbit relatively closely around the system, and if the stars have low masses.

The eclipse timing method allows the detection of planets farther from the host stars than the transit method. However, signals around cataclysmic variable stars hinting at planets tend to match unstable orbits. In 2011, Kepler-16b became the first planet to be definitively characterized via eclipsing binary timing variations.

Gravitational microlensing

Gravitational microlensing occurs when the gravitational field of a star acts like a lens, magnifying the light of a distant background star. This effect occurs only when the two stars are almost exactly aligned. Lensing events are brief, lasting for weeks or days, as the two stars and Earth are all moving relative to each other. More than a thousand such events have been observed over the past ten years.
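For a single point-like lens and source, the magnification depends only on their angular separation u in units of the Einstein radius, and uniform relative motion gives the symmetric light curve of a lensing event. The sketch below implements that standard single-lens case; the event parameters (a 20-day Einstein crossing time and a minimum separation of 0.1) are hypothetical, and a planetary companion, which would add a brief extra deviation, is not modelled.

```python
import math


def point_lens_magnification(u):
    """Magnification of a point source by a point lens at separation u
    (in units of the angular Einstein radius)."""
    return (u * u + 2) / (u * math.sqrt(u * u + 4))


def paczynski_light_curve(t, t0, t_e, u0):
    """Magnification versus time for uniform lens-source relative motion.

    t0: time of closest approach, t_e: Einstein-radius crossing time,
    u0: minimum separation in Einstein radii.
    """
    u = math.hypot(u0, (t - t0) / t_e)
    return point_lens_magnification(u)


for day in (-40, -20, -5, 0, 5, 20, 40):
    print(f"day {day:+3d}: A = {paczynski_light_curve(day, 0.0, 20.0, 0.1):.2f}")
```

The event brightens by roughly a factor of ten at peak and fades back over a few weeks, consistent with the brief, non-repeating events described above.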

If the foreground lensing star has a planet, then that planet's own gravitational field can make a detectable contribution to the lensing effect. Since that requires a highly improbable alignment, a very large number of distant stars must be continuously monitored in order to detect planetary microlensing contributions at a reasonable rate. This method is most fruitful for planets between Earth and the center of the galaxy, as the galactic center provides a large number of background stars.

In 1991, astronomers Shude Mao and Bohdan Paczyński proposed using gravitational microlensing to look for binary companions to stars, and their proposal was refined by Andy Gould and Abraham Loeb in 1992 as a method to detect exoplanets. Successes with the method date back to 2002, when a group of Polish astronomers (Andrzej Udalski, Marcin Kubiak and Michał Szymański from Warsaw, and Bohdan Paczyński) during project OGLE (the Optical Gravitational Lensing Experiment) developed a workable technique. During one month, they found several possible planets, though limitations in the observations prevented clear confirmation. Since then, several confirmed extrasolar planets have been detected using microlensing. This was the first method capable of detecting planets of Earth-like mass around ordinary main-sequence stars.

Unlike most other methods, which have detection bias towards planets with small (or for resolved imaging, large) orbits, the microlensing method is most sensitive to detecting planets around 1-10 astronomical units away from Sun-like stars.
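
One way to see why the sensitivity peaks at a few astronomical units is to compute the Einstein radius in the lens plane. Planets lying near this radius perturb the lensed images most strongly. The sketch below assumes a point lens. The lens mass and distances are hypothetical values chosen to resemble an event toward the galactic bulge.

```python
import math

G = 6.674e-11            # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8         # speed of light, m/s
M_SUN = 1.989e30         # solar mass, kg
PC = 3.0857e16           # parsec, m
AU = 1.495978707e11      # astronomical unit, m


def einstein_radius_au(m_lens_msun, d_lens_pc, d_source_pc):
    """Physical Einstein radius in the lens plane.

    R_E = sqrt(4 G M / c^2 * D_L (D_S - D_L) / D_S), returned in AU.
    """
    m = m_lens_msun * M_SUN
    d_l = d_lens_pc * PC
    d_s = d_source_pc * PC
    r_e = math.sqrt(4.0 * G * m / C**2 * d_l * (d_s - d_l) / d_s)
    return r_e / AU


# Hypothetical event: a 0.5 solar-mass lens halfway to a source 8 kpc away.
print(round(einstein_radius_au(0.5, 4000, 8000), 2))
```

For these inputs the Einstein radius comes out at roughly 3 AU, and it grows only as the square root of the lens mass, so it stays at a few AU for typical stellar lenses.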

A notable disadvantage of the method is that the lensing cannot be repeated, because the chance alignment never occurs again. Also, the detected planets will tend to be several kiloparsecs away, so follow-up observations with other methods are usually impossible. In addition, the only physical characteristic that can be determined by microlensing is the mass of the planet, within loose constraints. Orbital properties also tend to be unclear, as the only orbital characteristic that can be directly determined is the planet's current projected separation from the parent star, which can be a misleading guide to its semi-major axis if the planet follows an eccentric orbit. When the planet is far away from its star, it spends only a tiny portion of its orbit in a state where it is detectable with this method, so the orbital period of the planet cannot be easily determined. It is also easier to detect planets around low-mass stars, as the gravitational microlensing effect increases with the planet-to-star mass ratio.

The main advantages of the gravitational microlensing method are that it can detect low-mass planets (in principle down to Mars mass with future space projects such as WFIRST); it can detect planets in wide orbits comparable to Saturn and Uranus, which have orbital periods too long for the radial velocity or transit methods; and it can detect planets around very distant stars. When enough background stars can be observed with enough accuracy, the method should eventually reveal how common Earth-like planets are in the galaxy.

Observations are usually performed using networks of robotic telescopes. In addition to the European Research Council-funded OGLE, the Microlensing Observations in Astrophysics (MOA) group is working to perfect this approach.

The PLANET (Probing Lensing Anomalies NETwork)/RoboNet project is even more ambitious. It allows nearly continuous round-the-clock coverage by a world-spanning telescope network, providing the opportunity to pick up microlensing contributions from planets with masses as low as Earth's. This strategy was successful in detecting the first low-mass planet on a wide orbit, designated OGLE-2005-BLG-390Lb.

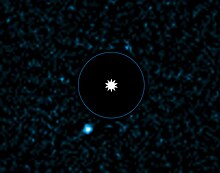

Direct imaging

Planets are extremely faint light sources compared to stars, and what little light comes from them tends to be lost in the glare from their parent star. So in general, it is very difficult to detect and resolve them directly from their host star. Planets orbiting far enough from stars to be resolved reflect very little starlight, so planets are detected through their thermal emission instead. It is easier to obtain images when the star system is relatively near to the Sun, and when the planet is especially large (considerably larger than Jupiter), widely separated from its parent star, and hot so that it emits intense infrared radiation; images have then been made in the infrared, where the planet is brighter than it is at visible wavelengths. Coronagraphs are used to block light from the star, while leaving the planet visible. Direct imaging of an Earth-like exoplanet requires extreme optothermal stability. During the accretion phase of planetary formation, the star-planet contrast may be even better in H alpha than it is in infrared – an H alpha survey is currently underway.
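
The advantage of infrared imaging can be illustrated by treating both bodies as blackbodies and comparing their thermal emission. This sketch ignores reflected light (small for widely separated planets, as noted above) and uses hypothetical temperatures and radii. It is not a model of any particular system.

```python
import math

H = 6.62607015e-34    # Planck constant, J s
C = 2.99792458e8      # speed of light, m/s
K_B = 1.380649e-23    # Boltzmann constant, J/K
R_SUN = 6.957e8       # nominal solar radius, m
R_JUP = 7.1492e7      # Jupiter equatorial radius, m


def planck_lambda(wavelength_m, temp_k):
    """Blackbody spectral radiance B_lambda(T) in W sr^-1 m^-3."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2.0 * H * C**2 / wavelength_m**5 / math.expm1(x)


def thermal_contrast(wavelength_m, t_planet, r_planet, t_star, r_star):
    """Planet-to-star flux ratio from thermal emission only (no reflected light)."""
    area_ratio = (r_planet / r_star) ** 2
    return area_ratio * planck_lambda(wavelength_m, t_planet) / planck_lambda(wavelength_m, t_star)


# Hypothetical young, 1000 K, Jupiter-sized planet around a 6000 K, Sun-sized star.
for wl_um in (0.55, 1.6, 4.0):
    contrast = thermal_contrast(wl_um * 1e-6, 1000.0, R_JUP, 6000.0, R_SUN)
    print(f"{wl_um:4.2f} um: {contrast:.1e}")
```

The thermal contrast improves by many orders of magnitude between visible and infrared wavelengths, which is why such planets are imaged in the infrared.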

Direct imaging can give only loose constraints on the planet's mass, which is derived from the age of the star and the temperature of the planet. The mass estimate can vary considerably, as planets can form several million years after the star has formed. For a given age, the cooler the planet is, the lower its inferred mass. In some cases it is possible to give reasonable constraints on the radius of a planet based on the planet's temperature, its apparent brightness, and its distance from Earth. Because the planet is imaged separately from its star, its emitted spectrum does not have to be disentangled from the starlight, which makes it easier to determine the planet's chemical composition.
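
The radius constraint follows from the Stefan-Boltzmann law if the planet is assumed to radiate as a blackbody: the bolometric flux received at distance d is F = σT⁴(R/d)², so R = d·sqrt(F / (σT⁴)). The sketch below inverts this relation. The function name and example numbers are illustrative, and real estimates rely on atmosphere models rather than a pure blackbody.

```python
import math

SIGMA_SB = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4
PC = 3.0857e16              # parsec in metres
R_JUP = 7.1492e7            # Jupiter radius in metres


def radius_from_flux(bolometric_flux, temp_k, distance_m):
    """Blackbody radius from F = sigma T^4 (R / d)^2, i.e. R = d * sqrt(F / (sigma T^4))."""
    return distance_m * math.sqrt(bolometric_flux / (SIGMA_SB * temp_k**4))


# Round trip with made-up numbers: a 1.2 R_Jup, 1000 K planet seen from 30 pc.
d = 30 * PC
flux = SIGMA_SB * 1000.0**4 * (1.2 * R_JUP / d) ** 2
print(radius_from_flux(flux, 1000.0, d) / R_JUP)  # ~1.2
```

Because R scales as the inverse square of the assumed temperature, errors in the temperature dominate the uncertainty of such an estimate.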

Sometimes observations at multiple wavelengths are needed to rule out the planet being a brown dwarf. Direct imaging can be used to accurately measure the planet's orbit around the star. Unlike the majority of other methods, direct imaging works better with planets with face-on orbits rather than edge-on orbits, as a planet in a face-on orbit is observable during the entirety of the planet's orbit, while planets with edge-on orbits are most easily observable during their period of largest apparent separation from the parent star.

The planets detected through direct imaging currently fall into two categories. First, planets are found around stars more massive than the Sun which are young enough to have protoplanetary disks. The second category consists of possible sub-brown dwarfs found around very dim stars, or brown dwarfs which are at least 100 AU away from their parent stars.

Planetary-mass objects not gravitationally bound to a star are found through direct imaging as well.

Early discoveries

In 2004, a group of astronomers used the European Southern Observatory's Very Large Telescope array in Chile to produce an image of 2M1207b, a companion to the brown dwarf 2M1207. In the following year, the planetary status of the companion was confirmed. The planet is estimated to be several times more massive than Jupiter, and to have an orbital radius greater than 40 AU.

In September 2008, an object was imaged at a separation of 330 AU from the star 1RXS J160929.1−210524, but it was not until 2010 that it was confirmed to be a companion planet to the star and not just a chance alignment.

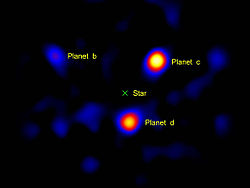

The first multiplanet system, announced on 13 November 2008, was imaged in 2007, using telescopes at both the Keck Observatory and Gemini Observatory. Three planets were directly observed orbiting HR 8799, whose masses are approximately ten, ten, and seven times that of Jupiter. On the same day, 13 November 2008, it was announced that the Hubble Space Telescope directly observed an exoplanet orbiting Fomalhaut, with a mass no more than 3 MJ. Both systems are surrounded by disks not unlike the Kuiper belt.

In 2009, it was announced that analysis of images dating back to 2003 revealed a planet orbiting Beta Pictoris.

In 2012, it was announced that a "Super-Jupiter" planet with a mass of about 12.8 MJ orbiting Kappa Andromedae was directly imaged using the Subaru Telescope in Hawaii. It orbits its parent star at a distance of about 55 AU, or nearly twice the distance of Neptune from the Sun.

An additional system, GJ 758, was imaged in November 2009 by a team using the HiCIAO instrument of the Subaru Telescope, but the companion turned out to be a brown dwarf.

Other possible exoplanets to have been directly imaged include GQ Lupi b, AB Pictoris b, and SCR 1845 b. As of March 2006, none have been confirmed as planets; instead, they might themselves be small brown dwarfs.

Imaging instruments

Some projects to equip telescopes with planet-imaging-capable instruments include the ground-based instruments Gemini Planet Imager, VLT-SPHERE, the Subaru Coronagraphic Extreme Adaptive Optics (SCExAO) instrument and Palomar Project 1640, as well as the space telescope WFIRST. The New Worlds Mission proposes a large occulter in space designed to block the light of nearby stars in order to observe their orbiting planets. This could be used with existing, already planned, or new purpose-built telescopes.

In 2010, a team from NASA's Jet Propulsion Laboratory demonstrated that a vortex coronagraph could enable small telescopes to directly image planets. They did this by imaging the previously imaged HR 8799 planets, using just a 1.5-meter-wide portion of the Hale Telescope.

Another promising approach is nulling interferometry.

It has also been proposed that space telescopes that focus light using zone plates instead of mirrors would provide higher-contrast imaging and be cheaper to launch into space, because the lightweight foil zone plate can be folded up.

Data reduction techniques

Post-processing of observational data to enhance the signal strength of off-axis bodies (i.e. exoplanets) can be accomplished in a variety of ways. Likely the earliest and most popular is the technique of Angular Differential Imaging (ADI): the exposures are averaged, the average is subtracted from each exposure, and the exposures are then (de-)rotated so that the faint planetary signal stacks in one place.
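
The ADI steps can be sketched with a toy data cube. To keep the example exact and dependency-light, the sky is assumed to rotate in 90-degree steps, so `numpy.rot90` stands in for the interpolated rotation by arbitrary parallactic angles that real pipelines use. The median is used as the reference image, a common choice where the text describes an average. All names and data here are hypothetical.

```python
import numpy as np


def adi_reduce(cube, rot_steps):
    """Toy angular differential imaging.

    cube: array of shape (n_frames, ny, nx) taken with the stellar speckles fixed
    on the detector while the sky rotates. rot_steps[i] is the number of
    90-degree counterclockwise turns of the sky in frame i (a simplification).
    """
    reference = np.median(cube, axis=0)              # estimate of the static speckle pattern
    residuals = cube - reference                     # subtract it from every frame
    derotated = [np.rot90(res, -k) for res, k in zip(residuals, rot_steps)]  # align the sky
    return np.mean(derotated, axis=0)                # stack the planet signal


# Synthetic data: bright static speckles plus a faint planet at sky pixel (2, 6).
rng = np.random.default_rng(0)
speckles = 10.0 * rng.random((9, 9))
planet_sky = np.zeros((9, 9))
planet_sky[2, 6] = 1.0
steps = [0, 1, 2, 3]
cube = np.array([speckles + np.rot90(planet_sky, k) for k in steps])

result = adi_reduce(cube, steps)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(result), result.shape))
print(peak)  # (2, 6)
```

In each raw frame the planet is fainter than many speckles. Because it lands on a different pixel in every frame, the median ignores it, and after subtraction and derotation it is the only surviving signal.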

Spectral Differential Imaging (SDI) performs an analogous procedure, but for radial changes in brightness as a function of wavelength instead of angular changes: the stellar speckle pattern scales radially with wavelength, whereas the position of a planet does not.
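
A one-dimensional toy version of SDI shows the idea. The sketch assumes a radial profile observed in several wavelength channels, in which a bright speckle's radius and width scale with wavelength while the planet stays at a fixed radius. The grid, channel wavelengths and amplitudes are all hypothetical. Rescaling each channel aligns the speckles, a median over channels removes them, and undoing the rescaling brings the planet signal back into register.

```python
import numpy as np

# Radial grid (arbitrary angular units) and hypothetical wavelength channels.
r = np.linspace(0.0, 40.0, 801)
lams = np.array([1.0, 1.1, 1.2, 1.3, 1.4])   # relative to a reference wavelength of 1.0


def gaussian(x, centre, width):
    return np.exp(-0.5 * ((x - centre) / width) ** 2)


# Each channel: a bright speckle whose radius and width scale with wavelength, plus a
# faint planet at the fixed radius 15 (its PSF width still scales with wavelength).
profiles = np.array([5.0 * gaussian(r, 10.0 * lam, 0.3 * lam) + gaussian(r, 15.0, 0.3 * lam)
                     for lam in lams])

# 1. Rescale each channel by (reference wavelength / lambda) so the speckles line up.
scaled = np.array([np.interp(r * lam, r, p) for lam, p in zip(lams, profiles)])
# 2. Subtract the median over channels; the planet moves between channels, so it survives.
resid = scaled - np.median(scaled, axis=0)
# 3. Undo the rescaling and average the channels.
final = np.mean([np.interp(r / lam, r, d) for lam, d in zip(lams, resid)], axis=0)

print(round(float(r[np.argmax(final)]), 2))
```

The residual peak in `final` sits near the planet's true radius of 15, while the speckle at radius 10 to 14 is suppressed to the level of the interpolation error.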

Combinations of the two are possible (ASDI, SADI, or Combined Differential Imaging "CODI").

Polarimetry

Light given off by a star is unpolarized, i.e. the direction of oscillation of the light wave is random. However, when the light is reflected off the atmosphere of a planet, the light waves interact with the molecules in the atmosphere and become polarized.

The polarized signal in the combined light of the planet and star is only about one part in a million, but it can in principle be measured with very high sensitivity, as polarimetry is not limited by the stability of the Earth's atmosphere. Another main advantage is that polarimetry allows for determination of the composition of the planet's atmosphere. The main disadvantage is that it will not be able to detect planets without atmospheres. Larger planets and planets with higher albedo are easier to detect through polarimetry, as they reflect more light.
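
The "one part in a million" figure can be reproduced with Stokes parameters for unresolved light. The sketch assumes an unpolarized star and adds the planet's polarized flux to the total intensity. The planet-to-star flux ratio and the planet's polarization fraction are purely illustrative values.

```python
import math


def combined_light_polarization(contrast, p_planet, angle_rad=0.0):
    """Degree of linear polarization of unresolved star + planet light.

    Assumes the star is unpolarized and the planet's light has fractional
    polarization p_planet at position angle angle_rad. Fluxes are normalized
    so the star contributes 1 and the planet contributes `contrast`.
    """
    i_total = 1.0 + contrast                                # Stokes I (star + planet)
    q = p_planet * contrast * math.cos(2.0 * angle_rad)    # Stokes Q (planet only)
    u = p_planet * contrast * math.sin(2.0 * angle_rad)    # Stokes U (planet only)
    return math.hypot(q, u) / i_total


# Hypothetical inputs: planet/star flux ratio 1e-5, planet light 10% polarized.
print(f"{combined_light_polarization(1e-5, 0.10):.2e}")
```

The result is of order 10⁻⁶ regardless of the polarization angle, which is why polarimeters need to reject the overwhelming unpolarized starlight with great precision.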

Astronomical devices used for polarimetry, called polarimeters, are capable of detecting polarized light and rejecting unpolarized beams. Instruments such as ZIMPOL/CHEOPS and PlanetPol are currently being used to search for extrasolar planets. The first successful detection of an extrasolar planet using this method came in 2008, when HD 189733 b, a planet discovered three years earlier, was detected using polarimetry. However, no new planets have yet been discovered using this method.

Astrometry

This method consists of precisely measuring a star's position in the sky, and observing how that position changes over time. Originally, this was done visually, with hand-written records. By the end of the 19th century, this method used photographic plates, greatly improving the accuracy of the measurements as well as creating a data archive. If a star has a planet, then the gravitational influence of the planet will cause the star itself to move in a tiny circular or elliptical orbit. Effectively, star and planet each orbit around their mutual centre of mass (barycenter), as explained by solutions to the two-body problem. Since the star is much more massive, its orbit will be much smaller. Frequently, the mutual centre of mass will lie within the radius of the larger body. Consequently, it is easier to find planets around low-mass stars, especially brown dwarfs.
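
How far the star sits from the barycentre follows from the two-body problem: the star's orbital radius is a·m_p / (M_* + m_p). The sketch uses approximate Solar System values plus a hypothetical low-mass host star. It shows that the Sun-Earth barycentre lies deep inside the Sun, the Sun-Jupiter barycentre lies just outside it, and a lower-mass star is displaced roughly in inverse proportion to its mass.

```python
AU_M = 1.495978707e11   # astronomical unit in metres
R_SUN_M = 6.957e8       # nominal solar radius in metres


def star_orbit_radius_m(a_au, m_planet_msun, m_star_msun):
    """Distance from the star's centre to the star-planet barycentre (circular two-body orbit)."""
    return a_au * AU_M * m_planet_msun / (m_star_msun + m_planet_msun)


# Approximate Solar System values (masses in solar masses).
jupiter = star_orbit_radius_m(5.2, 9.546e-4, 1.0)
earth = star_orbit_radius_m(1.0, 3.003e-6, 1.0)
print(f"Jupiter: {jupiter / R_SUN_M:.2f} R_sun, Earth: {earth / 1e3:.0f} km")

# The same Jupiter around a hypothetical 0.1 solar-mass star moves the star ~10x farther.
print(f"{star_orbit_radius_m(5.2, 9.546e-4, 0.1) / jupiter:.1f}x")
```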

Astrometry is the oldest search method for extrasolar planets, and was originally popular because of its success in characterizing astrometric binary star systems. It dates back at least to statements made by William Herschel in the late 18th century, when he claimed that an unseen companion was affecting the position of the star he cataloged as 70 Ophiuchi. The first known formal astrometric calculation for an extrasolar planet was made by William Stephen Jacob in 1855 for this star. Similar calculations were repeated by others for another half-century until finally refuted in the early 20th century. For two centuries, claims circulated of the discovery of unseen companions in orbit around nearby star systems, all reportedly found using this method, culminating in the prominent 1996 announcement by George Gatewood of multiple planets orbiting the nearby star Lalande 21185. None of these claims survived scrutiny by other astronomers, and the technique fell into disrepute. Unfortunately, changes in stellar position are so small, and atmospheric and systematic distortions so large, that even the best ground-based telescopes cannot produce precise enough measurements. All claims made before 1996 using this method of a planetary companion with a mass below 0.1 solar mass are likely spurious. In 2002, the Hubble Space Telescope did succeed in using astrometry to characterize a previously discovered planet around the star Gliese 876.

The space-based observatory Gaia, launched in 2013, is expected to find thousands of planets via astrometry, but prior to the launch of Gaia, no planet detected by astrometry had been confirmed. SIM PlanetQuest was a US project (cancelled in 2010) that would have had similar exoplanet finding capabilities to Gaia.

One potential advantage of the astrometric method is that it is most sensitive to planets with large orbits. This makes it complementary to other methods that are most sensitive to planets with small orbits. However, very long observation times will be required — years, and possibly decades, as planets far enough from their star to allow detection via astrometry also take a long time to complete an orbit. Planets orbiting around one of the stars in binary systems are more easily detectable, as they cause perturbations in the orbits of stars themselves. However, with this method, follow-up observations are needed to determine which star the planet orbits around.
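
The trade-off between signal size and observing time can be made concrete. This sketch assumes circular orbits and uses illustrative values for a Jupiter-mass planet around a Sun-like star 10 pc away. The angular wobble grows linearly with orbital distance, while the period grows as its 3/2 power (Kepler's third law).

```python
import math

M_JUP_MSUN = 9.546e-4   # Jupiter mass in solar masses (approximate)


def astrometric_signature_mas(m_planet_msun, m_star_msun, a_au, d_pc):
    """Angular semi-major axis of the star's reflex orbit in milliarcseconds.

    Uses the definition of the parsec: 1 AU seen from 1 pc subtends 1 arcsec.
    """
    a_star_au = a_au * m_planet_msun / (m_star_msun + m_planet_msun)
    return 1000.0 * a_star_au / d_pc


def orbital_period_yr(a_au, m_total_msun):
    """Kepler's third law in solar units: P[yr]^2 = a[AU]^3 / M[M_sun]."""
    return math.sqrt(a_au**3 / m_total_msun)


# A Jupiter-mass planet around a Sun-like star 10 pc away (illustrative values).
for a in (1.0, 5.2, 30.0):
    sig = astrometric_signature_mas(M_JUP_MSUN, 1.0, a, 10.0)
    per = orbital_period_yr(a, 1.0 + M_JUP_MSUN)
    print(f"a = {a:4.1f} AU: signal {sig:.2f} mas, period {per:.0f} yr")
```

At Jupiter's distance the wobble is about half a milliarcsecond, but a full orbit takes roughly twelve years.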

In 2009, the discovery of VB 10b by astrometry was announced. This planetary object, orbiting the low-mass red dwarf star VB 10, was reported to have a mass seven times that of Jupiter. If confirmed, this would be the first exoplanet discovered by astrometry, of the many that have been claimed through the years. However, independent radial-velocity studies have since ruled out the existence of the claimed planet.

In 2010, six binary stars were astrometrically measured. One of the star systems, called HD 176051, was found with "high confidence" to have a planet.

In 2018, a study comparing observations from the Gaia spacecraft to Hipparcos data for the Beta Pictoris system was able to measure the mass of Beta Pictoris b, constraining it to 11±2 Jupiter masses. This is in good agreement with previous mass estimates of roughly 13 Jupiter masses.

In 2019, data from the Gaia spacecraft and its predecessor Hipparcos were complemented with HARPS data, enabling a better description of ε Indi Ab as the second-closest Jupiter-like exoplanet, with a mass of 3 Jupiter masses on a slightly eccentric orbit with an orbital period of 45 years.

As of 2022, especially thanks to Gaia, the combination of radial velocity and astrometry has been used to detect and characterize numerous Jovian planets, including the nearest Jupiter analogues ε Eridani b and ε Indi Ab. In addition, radio astrometry using the VLBA has been used to discover planets in orbit around TVLM 513-46546 and EQ Pegasi A.

X-ray eclipse

In September 2020, the detection of a candidate planet orbiting the high-mass X-ray binary M51-ULS-1 in the Whirlpool Galaxy was announced. The planet was detected by eclipses of the X-ray source, which consists of a stellar remnant (either a neutron star or a black hole) and a massive star, likely a B-type supergiant. This is the only method capable of detecting a planet in another galaxy.

Disc kinematics

Planets can be detected by the gaps they produce in protoplanetary discs.

Other possible methods

Flare and variability echo detection

Non-periodic variability events, such as flares, can produce extremely faint echoes in the light curve if they reflect off an exoplanet or other scattering medium in the star system. More recently, motivated by advances in instrumentation and signal processing technologies, researchers have predicted that echoes from exoplanets could be recovered from high-cadence photometric and spectroscopic measurements of active star systems, such as M dwarfs. These echoes are theoretically observable at all orbital inclinations.
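
The delay of an echo relative to the flare itself is set by the extra path length via the planet. The sketch assumes a circular orbit and measures orbital phase from inferior conjunction. The planet's distance is a hypothetical value. For a face-on orbit the delay is constant at a/c, consistent with echoes being observable at any inclination.

```python
import math

AU_M = 1.495978707e11   # astronomical unit in metres
C_M_S = 2.99792458e8    # speed of light in m/s


def echo_delay_s(a_au, inclination_deg, phase_deg):
    """Extra light-travel time of a flare echo off a planet on a circular orbit.

    phase_deg = 0 puts the planet at inferior conjunction (between star and
    observer when the orbit is edge-on). The delay is (a/c)(1 - sin i cos phase),
    ranging from 0 to 2a/c.
    """
    i = math.radians(inclination_deg)
    phi = math.radians(phase_deg)
    return a_au * AU_M / C_M_S * (1.0 - math.sin(i) * math.cos(phi))


# Hypothetical close-in planet at 0.05 AU.
a = 0.05
print(round(echo_delay_s(a, 90, 180), 1))   # edge-on, planet behind the star: 2a/c
print(round(echo_delay_s(a, 0, 0), 1))      # face-on: constant delay a/c
```

Delays of tens of seconds for close-in planets are why high-cadence measurements are needed.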

Transit imaging

An optical/infrared interferometer array does not collect as much light as a single telescope of equivalent size, but has the resolution of a single telescope the size of the array. For bright stars, this resolving power could be used to image a star's surface during a transit event and see the shadow of the planet transiting. This could provide a direct measurement of the planet's angular radius and, via parallax, its actual radius. This is more accurate than radius estimates based on transit photometry, which depend on estimates of the stellar radius that in turn rely on models of stellar characteristics. Imaging also provides a more accurate determination of the inclination than photometry does.
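
Because the distance is 1 AU divided by the parallax (in radians), the physical radius reduces to the ratio of the two angles, expressed in astronomical units. The sketch below assumes small angles and both angles in the same unit. The example numbers are hypothetical and correspond to a Jupiter-sized shadow on a star 10 pc away.

```python
AU_KM = 1.495978707e8   # astronomical unit in km


def radius_from_parallax_km(angular_radius, parallax):
    """Physical radius from angular radius and parallax, both in the same angular unit.

    Small-angle geometry: d = 1 AU / parallax and R = angular_radius * d,
    so R = (angular_radius / parallax) AU.
    """
    return angular_radius / parallax * AU_KM


# Hypothetical example: a 0.048 mas shadow on a star with a 100 mas parallax (10 pc).
print(round(radius_from_parallax_km(0.048, 100.0)))   # roughly one Jupiter radius, in km
```

Such a shadow spans only tens of microarcseconds, which is why the resolution of a large interferometer array would be required.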

Magnetospheric radio emissions

Radio emissions from magnetospheres could be detected with future radio telescopes. This could enable determination of the rotation rate of a planet, which is difficult to detect otherwise.

Auroral radio emissions

Auroral radio emissions from giant planets with plasma sources, such as Jupiter's volcanic moon Io, could be detected with radio telescopes such as LOFAR.

Optical interferometry

In March 2019, ESO astronomers, employing the GRAVITY instrument on their Very Large Telescope Interferometer (VLTI), announced the first direct detection of an exoplanet, HR 8799 e, using optical interferometry.

Modified interferometry

By looking at the wiggles of an interferogram using a Fourier-Transform-Spectrometer, enhanced sensitivity could be obtained in order to detect faint signals from Earth-like planets.

Detection of dust trapping around Lagrangian points

Dust clumps identified along a protoplanetary disk can trace the accumulation of material around Lagrangian points. From the detection of this dust, it can be inferred that a planet exists whose orbit creates those Lagrangian points.

Detection of extrasolar asteroids and debris disks

Circumstellar disks

Disks of space dust (debris disks) surround many stars. The dust can be detected because it absorbs ordinary starlight and re-emits it as infrared radiation. Even if the dust particles have a total mass much less than that of Earth, they can still have a large enough total surface area that they outshine their parent star at infrared wavelengths.
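
The point about surface area can be quantified: for identical spherical grains of radius s and density ρ, the total cross-section of a mass M of dust is 3M/(4sρ). The sketch below uses hypothetical grain properties and compares the result with the projected disk of a Sun-sized star. It ignores the grains' lower temperature, which reduces their emission per unit area, and the fact that only part of the starlight is intercepted.

```python
import math

M_EARTH_KG = 5.972e24   # Earth mass in kg
R_SUN_M = 6.957e8       # nominal solar radius in m


def dust_cross_section_m2(total_mass_kg, grain_radius_m, density_kg_m3):
    """Total geometric cross-section of identical spherical grains.

    N = M / ((4/3) pi s^3 rho) grains, each presenting pi s^2, so the total is 3 M / (4 s rho).
    """
    return 3.0 * total_mass_kg / (4.0 * grain_radius_m * density_kg_m3)


# Hypothetical disk: 1% of an Earth mass in 1-micron grains of density 2500 kg/m^3.
area = dust_cross_section_m2(0.01 * M_EARTH_KG, 1e-6, 2500.0)
star_disk = math.pi * R_SUN_M**2        # projected disk of a Sun-sized star
print(f"dust cross-section / stellar disk = {area / star_disk:.1e}")
```

Even this small mass of fine dust presents millions of times the area of the stellar disk.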

The Hubble Space Telescope is capable of observing dust disks with its NICMOS (Near Infrared Camera and Multi-Object Spectrometer) instrument. Even better images have now been taken by its sister observatory, the Spitzer Space Telescope, and by the European Space Agency's Herschel Space Observatory, which can see far deeper into infrared wavelengths than the Hubble can. Dust disks have now been found around more than 15% of nearby sunlike stars.

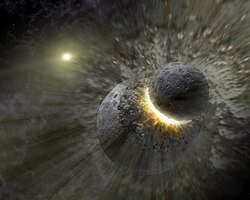

The dust is thought to be generated by collisions among comets and asteroids. Radiation pressure from the star will push the dust particles away into interstellar space over a relatively short timescale. Therefore, the detection of dust indicates continual replenishment by new collisions, and provides strong indirect evidence of the presence of small bodies like comets and asteroids that orbit the parent star.[127] For example, the dust disk around the star Tau Ceti indicates that that star has a population of objects analogous to our own Solar System's Kuiper Belt, but at least ten times thicker.
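
For the smallest grains, the ratio β of radiation pressure to gravity does not depend on distance from the star, and grains with β above one half become unbound as soon as they are released from bodies on circular orbits. This sketch assumes spherical grains, a radiation pressure efficiency of 1 and a hypothetical grain density. It estimates this "blowout" size around a Sun-like star.

```python
import math

G = 6.674e-11          # gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8       # speed of light, m/s
L_SUN = 3.828e26       # solar luminosity, W
M_SUN = 1.989e30       # solar mass, kg


def beta(grain_radius_m, density_kg_m3, lum_w=L_SUN, mass_kg=M_SUN, q_pr=1.0):
    """Ratio of radiation-pressure force to gravity for a spherical grain.

    beta = 3 L Q_pr / (16 pi G M c rho s); both forces scale as 1/r^2,
    so beta does not depend on distance from the star.
    """
    return 3.0 * lum_w * q_pr / (16.0 * math.pi * G * mass_kg * C * density_kg_m3 * grain_radius_m)


def blowout_radius_m(density_kg_m3, lum_w=L_SUN, mass_kg=M_SUN, q_pr=1.0):
    """Grain radius at which beta = 1/2; smaller grains released from circular
    orbits are unbound and leave the system."""
    return 3.0 * lum_w * q_pr / (8.0 * math.pi * G * mass_kg * C * density_kg_m3)


s_blow = blowout_radius_m(2500.0)   # hypothetical silicate-like density
print(f"blowout size around the Sun: {s_blow * 1e6:.2f} micron, beta = {beta(s_blow, 2500.0):.2f}")
```

Grains smaller than about half a micron are expelled this way within roughly an orbital period; larger grains are removed more slowly by processes this sketch does not model.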

More speculatively, features in dust disks sometimes suggest the presence of full-sized planets. Some disks have a central cavity, meaning that they are really ring-shaped. The central cavity may be caused by a planet "clearing out" the dust inside its orbit. Other disks contain clumps that may be caused by the gravitational influence of a planet. Both these kinds of features are present in the dust disk around Epsilon Eridani, hinting at the presence of a planet with an orbital radius of around 40 AU (in addition to the inner planet detected through the radial-velocity method). These kinds of planet-disk interactions can be modeled numerically using collisional grooming techniques.

Contamination of stellar atmospheres

Spectral analysis of white dwarfs' atmospheres often finds contamination by heavier elements like magnesium and calcium. These elements cannot originate from the stars' cores, and it is probable that the contamination comes from asteroids that were perturbed by gravitational interactions with larger planets onto orbits that brought them too close to these stars (within the Roche limit), where they were torn apart by the stars' tidal forces. Up to 50% of young white dwarfs may be contaminated in this manner.
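
The Roche limit can be estimated with the classical rigid-body formula d = 1.26 R_M (ρ_M/ρ_m)^(1/3). For a white dwarf it is convenient to replace the radius and density of the primary by its mass, since R_M³ρ_M = 3M/(4π). The white-dwarf mass and asteroid density below are hypothetical illustrative values. The rigid-body coefficient gives the smaller of the two classical estimates; a fluid body would be disrupted farther out.

```python
import math

M_SUN = 1.989e30       # solar mass, kg
R_SUN = 6.957e8        # nominal solar radius, m


def roche_limit_rigid_m(primary_mass_kg, satellite_density_kg_m3):
    """Rigid-body Roche limit d = 1.26 R_M (rho_M / rho_m)^(1/3).

    Since R_M^3 rho_M = 3 M / (4 pi), this equals 1.26 (3 M / (4 pi rho_m))^(1/3),
    independent of the white dwarf's radius.
    """
    return 1.26 * (3.0 * primary_mass_kg / (4.0 * math.pi * satellite_density_kg_m3)) ** (1.0 / 3.0)


# Hypothetical 0.6 solar-mass white dwarf and a rocky asteroid of density 3000 kg/m^3.
d = roche_limit_rigid_m(0.6 * M_SUN, 3000.0)
print(f"{d / R_SUN:.2f} R_sun")
```

For these inputs the limit is a little under one solar radius, so an asteroid must be scattered onto a very eccentric orbit to reach it.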

Additionally, the dust responsible for the atmospheric pollution may be detected through its infrared emission if it exists in sufficient quantity, similar to the detection of debris discs around main-sequence stars. Data from the Spitzer Space Telescope suggest that 1–3% of white dwarfs possess detectable circumstellar dust.

In 2015, minor planets were discovered transiting the white dwarf WD 1145+017. This material orbits with a period of around 4.5 hours, and the shapes of the transit light curves suggest that the larger bodies are disintegrating, contributing to the contamination in the white dwarf's atmosphere.
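
The 4.5-hour period translates into an orbital distance through Kepler's third law. The sketch assumes a circular orbit and a white-dwarf mass of 0.6 solar masses, a typical value that is not given in the text.

```python
import math

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30   # solar mass, kg
R_SUN = 6.957e8    # nominal solar radius, m


def semi_major_axis_m(period_s, central_mass_kg):
    """Kepler's third law for a body of negligible mass: a = (G M P^2 / 4 pi^2)^(1/3)."""
    return (G * central_mass_kg * period_s**2 / (4.0 * math.pi**2)) ** (1.0 / 3.0)


# 4.5-hour period from the text; the 0.6 solar-mass white-dwarf mass is an assumption.
a = semi_major_axis_m(4.5 * 3600.0, 0.6 * M_SUN)
print(f"{a / R_SUN:.2f} R_sun")
```

The result is about 1.2 solar radii, so the disintegrating material orbits extremely close to the white dwarf.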

Space telescopes

Most confirmed extrasolar planets have been found using space-based telescopes (as of January 2015). Many of the detection methods can work more effectively with space-based telescopes that avoid atmospheric haze and turbulence. COROT (2007–2012) and Kepler were space missions dedicated to searching for extrasolar planets using transits. COROT discovered about 30 new exoplanets. Kepler (2009–2013) and K2 (2013– ) have discovered over 2000 verified exoplanets. The Hubble Space Telescope and MOST have also found or confirmed a few planets. The infrared Spitzer Space Telescope has been used to detect transits of extrasolar planets, as well as occultations of the planets by their host star and phase curves.

The Gaia mission, launched in December 2013, will use astrometry to determine the true masses of 1000 nearby exoplanets. TESS, launched in 2018, and CHEOPS, launched in 2019, use the transit method, as will PLATO, planned for launch in 2026.

Primary and secondary detection

| Method | Primary | Secondary |
|---|---|---|
| Transit | Primary eclipse. Planet passes in front of star. | Secondary eclipse. Star passes in front of planet. |
| Radial velocity | Radial velocity of star | Radial velocity of planet. This has been done for Tau Boötis b. |
| Astrometry | Astrometry of star. Position of star moves more for large planets with large orbits. | Astrometry of planet. Color-differential astrometry. Position of planet moves quicker for planets with small orbits. Theoretical method; has been proposed for use for the SPICA spacecraft. |

Verification and falsification methods

- Verification by multiplicity

- Transit color signature

- Doppler tomography

- Dynamical stability testing

- Distinguishing between planets and stellar activity

- Transit offset

Characterization methods

- Transmission spectroscopy

- Emission spectroscopy, phase-resolved

- Speckle imaging / Lucky imaging to detect companion stars that the planets could be orbiting instead of the primary star, which would alter planet parameters that are derived from stellar parameters.

- Photoeccentric Effect

- Rossiter–McLaughlin effect