A maser (/ˈmeɪzər/; acronym of microwave amplification by stimulated emission of radiation) is a device that produces coherent electromagnetic waves, typically microwaves, through amplification by stimulated emission. The first maser was built by Charles H. Townes, James P. Gordon, and Herbert J. Zeiger at Columbia University in 1953. Townes, Nikolay Basov and Alexander Prokhorov were awarded the 1964 Nobel Prize in Physics for theoretical work leading to the maser. Masers are used as the timekeeping device in atomic clocks and as extremely low-noise microwave amplifiers in radio telescopes and deep-space communication ground stations.

Modern masers can be designed to generate electromagnetic waves at not only microwave frequencies but also radio and infrared frequencies. For this reason, Townes suggested replacing "microwave" with "molecular" as the first word in the acronym "maser".

The laser works by the same principle as the maser but produces higher-frequency coherent radiation at visible wavelengths. The maser was the forerunner of the laser, inspiring theoretical work by Townes and Arthur Leonard Schawlow that led to the invention of the laser in 1960 by Theodore Maiman. When the coherent optical oscillator was first conceived in 1957, it was called the "optical maser". The name was ultimately changed to laser, for "light amplification by stimulated emission of radiation". Gordon Gould is credited with coining this acronym in 1957.

History

The theoretical principles governing the operation of a maser were first described by Joseph Weber of the University of Maryland, College Park, at the Electron Tube Research Conference in Ottawa in June 1952; a summary was published in the June 1953 Transactions of the Institute of Radio Engineers Professional Group on Electron Devices. Nikolay Basov and Alexander Prokhorov of the Lebedev Institute of Physics described them at about the same time, at an All-Union Conference on Radio-Spectroscopy held by the USSR Academy of Sciences in May 1952; their work was published in October 1954.

Independently, Charles Hard Townes, James P. Gordon, and H. J. Zeiger built the first ammonia maser at Columbia University in 1953. This device used stimulated emission in a stream of energized ammonia molecules to produce amplification of microwaves at a frequency of about 24.0 gigahertz. Townes later worked with Arthur L. Schawlow to describe the principle of the optical maser, or laser, of which Theodore H. Maiman created the first working model in 1960.

For their research in the field of stimulated emission, Townes, Basov and Prokhorov were awarded the Nobel Prize in Physics in 1964.

Technology

The maser is based on the principle of stimulated emission proposed by Albert Einstein in 1917. When atoms have been induced into an excited energy state, they can amplify radiation at a frequency particular to the element or molecule used as the masing medium (similar to what occurs in the lasing medium in a laser).

Placing such an amplifying medium in a resonant cavity creates feedback that can produce coherent radiation.
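Why the atoms must first be "induced into an excited energy state" can be seen from the Boltzmann distribution: in thermal equilibrium the upper level of a microwave transition is always slightly less populated than the lower one, so the medium absorbs on net rather than amplifies. The sketch below assumes an idealized two-level system with equal level degeneracies and uses the roughly 24 GHz ammonia transition from the History section; the function name is illustrative only.

```python
import math

PLANCK = 6.62607015e-34      # Planck constant, J*s (exact SI value)
BOLTZMANN = 1.380649e-23     # Boltzmann constant, J/K (exact SI value)


def thermal_population_ratio(frequency_hz, temperature_k):
    """Return N_upper / N_lower for a two-level system in thermal equilibrium.

    Assumes both levels have the same degeneracy, so the ratio is just
    the Boltzmann factor exp(-h*f / (k*T)).
    """
    return math.exp(-PLANCK * frequency_hz / (BOLTZMANN * temperature_k))


if __name__ == "__main__":
    f_ammonia = 24.0e9  # approximate ammonia maser frequency, Hz
    for temperature in (300.0, 4.0):
        ratio = thermal_population_ratio(f_ammonia, temperature)
        # A ratio below 1 means more atoms in the lower level (net absorption);
        # amplification needs a ratio above 1, i.e. a population inversion.
        print(f"T = {temperature:5.1f} K: N_upper/N_lower = {ratio:.4f}")
```

At room temperature the ratio is about 0.996: the two levels are nearly equally populated, but never inverted. Cooling alone makes the ratio smaller, not larger, which is why masers rely on state selection or pumping to create the inversion.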

Some common types

- Atomic beam masers

  - Ammonia maser

  - Free electron maser

  - Hydrogen maser

- Gas masers

  - Rubidium maser

  - Liquid-dye and chemical laser

- Solid-state masers

  - Ruby maser

  - Whispering-gallery-mode iron-sapphire maser

- Dual noble gas maser (the masing medium, a mixture of two noble gases, is nonpolar)

21st-century developments

In 2012, a research team from the National Physical Laboratory and Imperial College London developed a solid-state maser that operated at room temperature by using optically pumped, pentacene-doped p-terphenyl as the amplifier medium. It produced pulses of maser emission lasting a few hundred microseconds.

In 2018, a research team from Imperial College London and University College London demonstrated continuous-wave maser oscillation using synthetic diamonds containing nitrogen-vacancy defects.

Uses

Masers serve as high-precision frequency references. These "atomic frequency standards" are one of the many forms of atomic clocks. Masers were also used as low-noise microwave amplifiers in radio telescopes, though they have largely been replaced by amplifiers based on field-effect transistors (FETs).

During the early 1960s, the Jet Propulsion Laboratory developed a maser to provide ultra-low-noise amplification of S-band microwave signals received from deep-space probes. This maser used deeply refrigerated helium to chill the amplifier to a temperature of 4 kelvin. Amplification was achieved by pumping a ruby comb with a 12.0 gigahertz klystron. In the early years, it took days to chill the system and remove the impurities from the hydrogen lines. Refrigeration was a two-stage process, with a large Linde unit on the ground and a crosshead compressor within the antenna. The final injection was at 21 MPa (3,000 psi) through a 150 μm (0.006 in) micrometer-adjustable entry to the chamber. The whole-system noise temperature looking at cold sky (2.7 kelvin in the microwave band) was 17 kelvin. This gave such a low noise figure that the Mariner IV space probe could send still pictures from Mars back to Earth even though its radio transmitter's output power was only 15 watts and the total signal power received was only −169 decibels with respect to a milliwatt (dBm).
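The link-budget arithmetic behind this paragraph can be sketched with the thermal noise formula P = k·T·B. The received power (−169 dBm) and system noise temperature (17 K) come from the text; the 10 Hz detection bandwidth and the 290 K comparison receiver are hypothetical values chosen only to illustrate the effect of cooling.

```python
import math

BOLTZMANN = 1.380649e-23  # Boltzmann constant, J/K


def dbm_to_watts(power_dbm):
    """Convert a power level in dBm (decibels relative to 1 mW) to watts."""
    return 1e-3 * 10 ** (power_dbm / 10)


def watts_to_dbm(power_w):
    """Convert a power in watts to dBm."""
    return 10 * math.log10(power_w / 1e-3)


def thermal_noise_dbm(noise_temperature_k, bandwidth_hz):
    """Thermal noise power k*T*B of a receiver, expressed in dBm."""
    return watts_to_dbm(BOLTZMANN * noise_temperature_k * bandwidth_hz)


if __name__ == "__main__":
    received_dbm = -169.0   # received signal power quoted in the text
    bandwidth_hz = 10.0     # HYPOTHETICAL detection bandwidth, illustration only
    print(f"received signal: {dbm_to_watts(received_dbm):.3e} W")
    print(f"transmitter output: {watts_to_dbm(15.0):.1f} dBm")
    # 17 K is the quoted maser system temperature; 290 K is a hypothetical
    # uncooled receiver used for comparison.
    for system_temp_k in (17.0, 290.0):
        noise_dbm = thermal_noise_dbm(system_temp_k, bandwidth_hz)
        snr_db = received_dbm - noise_dbm
        print(f"T_sys = {system_temp_k:5.1f} K: noise {noise_dbm:.1f} dBm, "
              f"SNR {snr_db:.1f} dB")
```

Whatever bandwidth is assumed, lowering the system noise temperature from 290 K to 17 K improves the signal-to-noise ratio by 10·log10(290/17), roughly 12 dB, which is the margin a cooled maser front end buys for a signal this weak.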

Hydrogen maser

The hydrogen maser is used as an atomic frequency standard. Together with other kinds of atomic clocks, these help make up the International Atomic Time standard ("Temps Atomique International" or "TAI" in French). This is the international time scale coordinated by the International Bureau of Weights and Measures. Norman Ramsey and his colleagues first conceived of the maser as a timing standard. More recent masers are practically identical to their original design. Maser oscillations rely on the stimulated emission between two hyperfine energy levels of atomic hydrogen.

Here is a brief description of how they work:

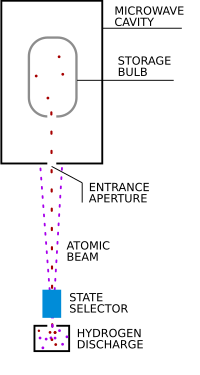

- First, a beam of atomic hydrogen is produced. This is done by subjecting the gas at low pressure to a high-frequency radio wave discharge (see the picture on this page).

- The next step is "state selection": to obtain stimulated emission, it is necessary to create a population inversion of the atoms. This is done in a way that is very similar to the Stern–Gerlach experiment. After passing through an aperture and a magnetic field, many of the atoms in the beam are left in the upper energy level of the maser transition. From this state, the atoms can decay to the lower state and emit microwave radiation.

- A microwave cavity with a high Q factor (quality factor) confines the microwaves and reinjects them repeatedly into the atom beam. The stimulated emission amplifies the microwaves on each pass through the beam. This combination of amplification and feedback is what defines all oscillators. The resonant frequency of the microwave cavity is tuned to the frequency of the hyperfine energy transition of hydrogen: 1,420,405,752 hertz.

- A small fraction of the signal in the microwave cavity is coupled into a coaxial cable and then sent to a coherent radio receiver.

- The microwave signal coming out of the maser is very weak, a few picowatts. The frequency of the signal is fixed and extremely stable. The coherent receiver is used to amplify the signal and change the frequency. This is done using a series of phase-locked loops and a high performance quartz oscillator.
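The receiver's job of locking a quartz oscillator to the maser signal can be illustrated with a toy phase-domain model of a second-order (proportional-integral) phase-locked loop. This is a minimal sketch, not the design of any real maser receiver: the function names, the 1 kHz frequencies, the update rate and the loop gains `kp` and `ki` are hypothetical values chosen so the loop is stable, and each oscillator is modeled only by its phase.

```python
import math


def wrap(phase):
    """Wrap a phase in radians into the interval [-pi, pi)."""
    return (phase + math.pi) % (2 * math.pi) - math.pi


def simulate_pll(f_ref, f_free, fs, n_steps, kp=0.1, ki=0.005):
    """Toy phase-domain model of a second-order (proportional-integral) PLL.

    f_ref   -- frequency of the reference signal being tracked, in Hz
    f_free  -- free-running frequency of the local oscillator, in Hz
    fs      -- loop update rate, in Hz
    n_steps -- number of loop updates to simulate
    kp, ki  -- hypothetical proportional and integral loop gains
    Returns the local oscillator frequency after the last update, in Hz.
    """
    phi_ref = 0.0      # reference phase, radians
    phi_osc = 0.0      # local oscillator phase, radians
    integrator = 0.0   # accumulated phase error (integral path)
    freq = f_free
    for _ in range(n_steps):
        phi_ref += 2 * math.pi * f_ref / fs
        # Phase detector: wrapped difference between reference and oscillator.
        error = wrap(phi_ref - phi_osc)
        # Loop filter: proportional plus integral correction, radians per update.
        integrator += ki * error
        correction = kp * error + integrator
        # Steer the oscillator: convert the per-update phase correction to Hz.
        freq = f_free + correction * fs / (2 * math.pi)
        phi_osc += 2 * math.pi * freq / fs
    return freq


if __name__ == "__main__":
    # Hypothetical example: an oscillator that free-runs 0.5 Hz above the reference.
    locked = simulate_pll(f_ref=1000.0, f_free=1000.5, fs=1e5, n_steps=5000)
    print(f"locked oscillator frequency: {locked:.6f} Hz")
```

The integral term is what lets the loop cancel a constant frequency offset with zero steady-state phase error; a proportional-only loop would still track the frequency but settle with a residual phase offset of about Δφ/kp per update.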

Astrophysical masers

Maser-like stimulated emission has also been observed in nature from interstellar space, and it is frequently called "superradiant emission" to distinguish it from laboratory masers. Such emission is observed from molecules such as water (H2O), hydroxyl radicals (•OH), methanol (CH3OH), formaldehyde (HCHO), silicon monoxide (SiO), and carbodiimide (HNCNH). Water molecules in star-forming regions can undergo a population inversion and emit radiation at about 22.0 GHz, creating the brightest spectral line in the radio universe. Some water masers also emit radiation from a rotational transition at a frequency of 96 GHz.

Extremely powerful masers, associated with active galactic nuclei, are known as megamasers and are up to a million times more powerful than stellar masers.

Terminology

The meaning of the term maser has changed slightly since its introduction. Initially the acronym was universally given as "microwave amplification by stimulated emission of radiation", which described devices that emitted in the microwave region of the electromagnetic spectrum.

The principle of stimulated emission has since been extended to more devices and frequencies. Thus, the original acronym is sometimes modified, as suggested by Charles H. Townes, to "molecular amplification by stimulated emission of radiation." Some have asserted that Townes's efforts to extend the acronym in this way were primarily motivated by a desire to increase the importance of his invention and his reputation in the scientific community.

When the laser was developed, Townes, Schawlow, and their colleagues at Bell Labs promoted the term optical maser, but it was largely abandoned in favor of laser, coined by their rival Gordon Gould. In modern usage, devices that emit in the X-ray through infrared portions of the spectrum are typically called lasers, and devices that emit in the microwave region and below are commonly called masers, whether they emit microwaves or lower radio frequencies.

Gould originally proposed distinct names for devices that emit in each portion of the spectrum, including grasers (gamma-ray lasers), xasers (X-ray lasers), uvasers (ultraviolet lasers), lasers (visible lasers), irasers (infrared lasers), masers (microwave masers), and rasers (RF masers). Most of these terms never caught on, and apart from their use in science fiction, all except maser and laser are now obsolete.