BIOS implementations provide interrupts that operating systems and application programs can invoke to use the facilities of the firmware on IBM PC compatible computers. Traditionally, BIOS calls are mainly used by DOS programs and some other software such as boot loaders (including, mostly historically, relatively simple application software that boots directly and runs without an operating system, especially game software). BIOS runs in the real address mode (real mode) of the x86 CPU, so programs that call BIOS must either run in real mode themselves or switch from protected mode to real mode before calling BIOS and then switch back again. For this reason, modern operating systems that use the CPU in protected mode or long mode generally do not use the BIOS interrupt calls to support system functions, although they use the BIOS interrupt calls to probe and initialize hardware during booting. Because real mode can address only about 1 MB of memory, modern boot loaders (e.g. GRUB2, Windows Boot Manager) use unreal mode or protected mode to access up to 4 GB of memory (executing the BIOS interrupt calls in virtual 8086 mode, but only for OS booting).

In all computers, software instructions control the physical hardware (screen, disk, keyboard, etc.) from the moment the power is switched on. In a PC, the BIOS, pre-loaded in ROM on the motherboard, takes control immediately after the CPU is reset, including during power-up, when a hardware reset button is pressed, or when a critical software failure (a triple fault) causes the mainboard circuitry to automatically trigger a hardware reset. The BIOS tests the hardware and initializes its state; finds, loads, and runs the boot program (usually an OS boot loader, or historically ROM BASIC); and provides basic hardware control to the software running on the machine, which is usually an operating system (with application programs) but may be a directly booting single software application.

For its part, IBM provided all the information needed either to use its BIOS fully or to use the hardware directly and avoid the BIOS completely when programming the early IBM PC models (prior to the PS/2). From the beginning, programmers had the choice of using BIOS or not, on a per-hardware-peripheral basis. IBM did strongly encourage the authorship of "well-behaved" programs that accessed hardware only through BIOS INT calls (and DOS service calls), to support compatibility of software with current and future PC models having dissimilar peripheral hardware, but IBM understood that for some software developers and hardware customers, a capability for user software to directly control the hardware was a requirement. In part, this was because a significant subset of all the hardware features and functions was not exposed by the BIOS services. To give two examples (among many), the MDA and CGA adapters are capable of hardware scrolling, and the PC serial adapter is capable of interrupt-driven data transfer, but the IBM BIOS supports neither of these useful technical features.

Today, the BIOS in a new PC still supports most, if not all, of the BIOS interrupt function calls defined by IBM for the IBM AT (introduced in 1984), along with many newer ones, plus extensions to some of the originals (e.g. expanded parameter ranges) promulgated by various other organizations and collaborative industry groups. This, combined with a similar degree of hardware compatibility, means that most programs written for an IBM AT can still run correctly on a new PC today, assuming that the faster speed of execution is acceptable (which it typically is for all but games that use CPU-based timing). Despite the considerable limitations of the services accessed through the BIOS interrupts, they have proven extremely useful and resilient to technological change.

Purpose of BIOS calls

BIOS interrupt calls perform hardware control or I/O functions requested by a program, return system information to the program, or do both. A key element of the purpose of BIOS calls is abstraction: the BIOS calls perform generally defined functions, and the specific details of how those functions are executed on the particular hardware of the system are encapsulated in the BIOS and hidden from the program. So, for example, a program that wants to read from a hard disk does not need to know whether the hard disk is an ATA, SCSI, or SATA drive (or in earlier days, an ESDI drive, or an MFM or RLL drive with perhaps a Seagate ST-506 controller, perhaps one of the several Western Digital controller types, or with a different proprietary controller of another brand). The program only needs to identify the BIOS-defined number of the drive it wishes to access and the address of the sector it needs to read or write, and the BIOS will take care of translating this general request into the specific sequence of elementary operations required to complete the task through the particular disk controller hardware that is connected to that drive. The program is freed from needing to know how to control at a low level every type of hard disk (or display adapter, or port interface, or real-time clock peripheral) that it may need to access. This both makes programming operating systems and applications easier and makes the programs smaller, reducing the duplication of program code, as the functionality that is included in the BIOS does not need to be included in every program that needs it; relatively short calls to the BIOS are included in the programs instead. (In operating systems where the BIOS is not used, service calls provided by the operating system itself generally fulfill the same function and purpose.)
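
As a rough illustration of how little the caller has to specify, the following Python sketch builds the register values for the classic cylinder/head/sector form of the INT 13h read call (AH = 02h). The function name `pack_int13_read` is hypothetical and the sketch only models the register layout; it does not perform any disk access, and the buffer address that a real caller passes in ES:BX is left out.

```python
def pack_int13_read(drive, cylinder, head, sector, count):
    """Model the register values for INT 13h, AH=02h (read sectors, CHS form)."""
    if not (0 <= cylinder <= 1023 and 0 <= head <= 255 and 1 <= sector <= 63):
        raise ValueError("CHS address out of range for this call")
    if not (0 <= drive <= 255 and 1 <= count <= 255):
        raise ValueError("drive number or sector count out of range")
    return {
        "AH": 0x02,                 # function number: read sectors
        "AL": count,                # number of sectors to transfer
        "CH": cylinder & 0xFF,      # low 8 bits of the cylinder number
        # CL holds the sector in bits 0-5 and cylinder bits 8-9 in bits 6-7
        "CL": sector | ((cylinder >> 8) << 6),
        "DH": head,                 # head number
        "DL": drive,                # BIOS drive number (80h = first hard disk)
    }


# Illustrative request: one sector from the first hard disk.
regs = pack_int13_read(drive=0x80, cylinder=0x123, head=2, sector=5, count=1)
print({name: f"{value:02X}" for name, value in regs.items()})
```

Nothing in these values depends on the type of disk controller installed, which is the point of the abstraction described above.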

The BIOS also frees computer hardware designers (to the extent that programs are written to use the BIOS exclusively) from being constrained to maintain exact hardware compatibility with old systems when designing new systems, in order to maintain compatibility with existing software. For example, the keyboard hardware on the IBM PCjr works very differently than the keyboard hardware on earlier IBM PC models, but to programs that use the keyboard only through the BIOS, this difference is nearly invisible. (As a good example of the other side of this issue, a significant share of the PC programs in use at the time the PCjr was introduced did not use the keyboard through BIOS exclusively, so IBM also included hardware features in the PCjr to emulate the way the original IBM PC and IBM PC XT keyboard hardware works. The hardware emulation is not exact, so not all programs that try to use the keyboard hardware directly will work correctly on the PCjr, but all programs that use only the BIOS keyboard services will.)

In addition to giving access to hardware facilities, BIOS provides added facilities that are implemented in the BIOS software. For example, the BIOS maintains separate cursor positions for up to eight text display pages and provides for TTY-like output with automatic line wrap and interpretation of basic control characters such as carriage return and line feed, whereas the CGA-compatible text display hardware has only one global display cursor and cannot automatically advance the cursor, use the cursor position to address the display memory (so as to determine which character cell will be changed or examined), or interpret control characters. For another example, the BIOS keyboard interface interprets many keystrokes and key combinations to keep track of the various shift states (left and right Shift, Ctrl, and Alt), to call the print-screen service when Shift+PrtScrn is pressed, to reboot the system when Ctrl+Alt+Del is pressed, to keep track of the lock states (Caps Lock, Num Lock, and Scroll Lock) and, in AT-class machines, control the corresponding lock-state indicator lights on the keyboard, and to perform other similar interpretive and management functions for the keyboard. In contrast, the ordinary capabilities of the standard PC and PC-AT keyboard hardware are limited to reporting to the system each primitive event of an individual key being pressed or released (i.e. making a transition from the "released" state to the "depressed" state or vice versa), performing a commanded reset and self-test of the keyboard unit, and, for AT-class keyboards, executing a command from the host system to set the absolute states of the lock-state indicators (LEDs).
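
The shift-state and lock-state bookkeeping described above can be sketched in a few lines of Python. This is a simplified model, not the BIOS code: it assumes the conventional PC scancodes (a release is the press code with bit 7 set) and the conventional layout of the BIOS keyboard flags byte, and it ignores details such as keyboard auto-repeat. The function name `update_flags` is hypothetical.

```python
# Press ("make") scancodes of the modifier and lock keys.
RIGHT_SHIFT, LEFT_SHIFT, CTRL, ALT = 0x36, 0x2A, 0x1D, 0x38
SCROLL_LOCK, NUM_LOCK, CAPS_LOCK = 0x46, 0x45, 0x3A

# Bits that are set only while the key is held down.
HELD_BITS = {RIGHT_SHIFT: 0x01, LEFT_SHIFT: 0x02, CTRL: 0x04, ALT: 0x08}
# Bits that flip each time the key is pressed.
TOGGLE_BITS = {SCROLL_LOCK: 0x10, NUM_LOCK: 0x20, CAPS_LOCK: 0x40}


def update_flags(flags, scancode):
    """Return the new flags byte after one scancode from the keyboard."""
    released = bool(scancode & 0x80)   # bit 7 set marks a key release
    key = scancode & 0x7F
    if key in HELD_BITS:
        if released:
            flags &= ~HELD_BITS[key]
        else:
            flags |= HELD_BITS[key]
    elif key in TOGGLE_BITS and not released:
        flags ^= TOGGLE_BITS[key]
    return flags & 0xFF


# Press Left Shift, press and release Caps Lock, release Left Shift.
flags = 0
for code in (0x2A, 0x3A, 0xBA, 0xAA):
    flags = update_flags(flags, code)
print(f"{flags:02X}")  # prints 40: only the Caps Lock state remains set
```

The keyboard hardware only delivers the individual scancodes; the accumulated state exists purely in software, as the paragraph above explains.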

Calling BIOS: BIOS software interrupts

Operating systems and other software communicate with the BIOS software, in order to control the installed hardware, via software interrupts. A software interrupt is a specific variety of the general concept of an interrupt. An interrupt is a mechanism by which the CPU can be directed to stop executing the main-line program and immediately execute a special program, called an Interrupt Service Routine (ISR), instead. Once the ISR finishes, the CPU continues with the main program. On x86 CPUs, when an interrupt occurs, the ISR to call is found by looking it up in a table of ISR starting-point addresses (called "interrupt vectors") in memory: the Interrupt Vector Table (IVT). An interrupt is invoked by its type number, from 0 to 255. The type number is used as an index into the IVT, and the entry at that index holds the address of the ISR that will be run in response to the interrupt. A software interrupt is simply an interrupt that is triggered by a software command; therefore, software interrupts function like subroutines, with the main difference that the program that makes a software interrupt call does not need to know the address of the ISR, only its interrupt number. This has advantages for modularity, compatibility, and flexibility in system configuration.
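
The table lookup can be modelled with a short Python sketch. It relies on a detail not spelled out above: in real mode each vector occupies four bytes, a 16-bit offset followed by a 16-bit segment, so vector n starts at byte n × 4 of the table. The helper names and the address stored for INT 10h are illustrative only.

```python
import struct

IVT_ENTRY_SIZE = 4  # 16-bit offset followed by 16-bit segment, little-endian


def set_vector(memory, number, segment, offset):
    """Store the ISR address for an interrupt number in the table."""
    struct.pack_into("<HH", memory, number * IVT_ENTRY_SIZE, offset, segment)


def get_vector(memory, number):
    """Look up the ISR address (segment, offset) for an interrupt number."""
    offset, segment = struct.unpack_from("<HH", memory, number * IVT_ENTRY_SIZE)
    return segment, offset


# 256 vectors of 4 bytes each: a 1 KiB table.
memory = bytearray(256 * IVT_ENTRY_SIZE)

# Illustrative handler address for the video service interrupt.
set_vector(memory, 0x10, segment=0xF000, offset=0xF065)

segment, offset = get_vector(memory, 0x10)
print(f"INT 10h -> {segment:04X}:{offset:04X}")  # prints INT 10h -> F000:F065
```

Because callers name only the interrupt number, a different handler can be installed simply by rewriting the four bytes of the table entry.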

BIOS interrupt calls can be thought of as a mechanism for passing messages between BIOS and BIOS client software such as an operating system. The messages request data or action from BIOS and return the requested data, status information, and/or the product of the requested action to the caller. The messages are broken into categories, each with its own interrupt number, and most categories contain sub-categories, called "functions" and identified by "function numbers". A BIOS client passes most information to BIOS in CPU registers, and receives most information back the same way, but data too large to fit in registers, such as tables of control parameters or disk sector data for disk transfers, is passed by allocating a buffer (i.e. some space) in memory and passing the address of the buffer in registers. (Sometimes multiple addresses of data items in memory may be passed in a data structure in memory, with the address of that structure passed to BIOS in registers.) The interrupt number is specified as the parameter of the software interrupt instruction (in Intel assembly language, an "INT" instruction), and the function number is specified in the AH register; that is, the caller sets the AH register to the number of the desired function. In general, the BIOS services corresponding to each interrupt number operate independently of each other, but the functions within one interrupt service are handled by the same BIOS program and are not independent. (This last point is relevant to reentrancy.)
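
The two-level selection described above (interrupt number first, then function number in AH) can be pictured with the following toy Python model. It is a sketch of the calling convention only: the `Registers` class, the `SERVICES` table, and the `software_interrupt` function are hypothetical names, and the "screen" is just a list.

```python
class Registers:
    """A minimal stand-in for the 16-bit registers used to pass parameters."""

    def __init__(self, ax=0, bx=0, cx=0, dx=0):
        self.ax, self.bx, self.cx, self.dx = ax, bx, cx, dx

    @property
    def ah(self):
        return (self.ax >> 8) & 0xFF   # high byte of AX: the function number

    @property
    def al(self):
        return self.ax & 0xFF          # low byte of AX: often a data byte


screen = []


def video_teletype(regs):
    """Toy version of INT 10h function 0Eh: output the character in AL."""
    screen.append(chr(regs.al))


# One entry per interrupt number; each maps function numbers to handlers.
SERVICES = {0x10: {0x0E: video_teletype}}


def software_interrupt(number, regs):
    """Dispatch on the interrupt number, then on the function number in AH."""
    handler = SERVICES[number].get(regs.ah)
    if handler is not None:            # unknown functions are ignored here
        handler(regs)
    return regs


software_interrupt(0x10, Registers(ax=0x0E21))  # AH = 0Eh, AL = '!'
print(screen)  # prints ['!']
```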

The BIOS software usually returns to the caller with an error code if not successful, or with a status code and/or requested data if successful. The data itself can be as small as one bit or as large as 65,536 bytes of whole raw disk sectors (the maximum that will fit into one real-mode memory segment). BIOS has been expanded and enhanced over the years many times by many different corporate entities, and unfortunately the result of this evolution is that not all the BIOS functions that can be called use consistent conventions for formatting and communicating data or for reporting results. Some BIOS functions report detailed status information, while others may not even report success or failure but just return silently, leaving the caller to assume success (or to test the outcome some other way). Sometimes it can also be difficult to determine whether or not a certain BIOS function call is supported by the BIOS on a certain computer, or what the limits of a call's parameters are on that computer. (For some invalid function numbers, or valid function numbers with invalid values of key parameters—particularly with an early IBM BIOS version—the BIOS may do nothing and return with no error code; then it is the [inconvenient but inevitable] responsibility of the caller either to avoid this case by not making such calls, or to positively test for an expected effect of the call rather than assuming that the call was effective. Because BIOS has evolved extensively in many steps over its history, a function that is valid in one BIOS version from some certain vendor may not be valid in an earlier or divergent BIOS version from the same vendor or in a BIOS version—of any relative age—from a different vendor.)

Because BIOS interrupt calls use CPU register-based parameter passing, the calls are oriented to being made from assembly language and cannot be directly made from most high-level languages (HLLs). However, a high-level language may provide a library of wrapper routines which translate parameters from the form (usually stack-based) used by the high-level language to the register-based form required by BIOS, then back to the HLL calling convention after the BIOS returns. In some variants of C, BIOS calls can be made using inline assembly language within a C module. (Support for inline assembly language is not part of the ANSI C standard but is a language extension; therefore, C modules that use inline assembly language are less portable than pure ANSI standard C modules.)

Invoking an interrupt

Invoking an interrupt can be done using the INT x86 assembly language instruction. For example, to print a character to the screen using BIOS interrupt 0x10, the following x86 assembly language instructions could be executed:

    mov ah, 0x0e    ; function number = 0Eh : Display Character
    mov al, '!'     ; AL = code of character to display
    mov bh, 0       ; BH = display page number (page 0)
    int 0x10        ; call INT 10h, BIOS video service

Interrupt table

A list of common BIOS interrupt classes can be found below. Note that some BIOSes (particularly old ones) do not implement all of these interrupt classes.

The BIOS also uses some interrupts to relay hardware event interrupts to programs which choose to receive them or to route messages for its own use. The table below includes only those BIOS interrupts which are intended to be called by programs (using the "INT" assembly-language software interrupt instruction) to request services or information.

| Interrupt vector | Description |
|---|---|
| 05h | Executed when Shift-Print screen is pressed, as well as when the BOUND instruction detects a bound failure. |
| 08h | This is the system timer interrupt (IRQ 0). It fires 18.2 times/second. The BIOS increments the time-of-day counter during this interrupt. |
| 09h | This is the keyboard interrupt. It is generally triggered when a key on a keyboard is pressed. |
| 10h | Video services |
| 11h | Returns equipment list |
| 12h | Returns conventional memory size |
| 13h | Low-level disk services |
| 14h | Serial port services |
| 15h | Miscellaneous system services |
| 16h | Keyboard services |
| 17h | Printer services |
| 18h | Execute Cassette BASIC: On IBM machines up to the early PS/2 line, this interrupt would start the ROM Cassette BASIC. Clones did not have this feature and different machines/BIOSes would perform a variety of different actions if INT 18h was executed, most commonly an error message stating that no bootable disk was present. Modern machines would attempt to boot from a network through this interrupt. On modern machines this interrupt will be treated by the BIOS as a signal from the bootloader that it failed to complete its task. The BIOS can then take appropriate next steps. |
| 19h | After POST this interrupt is used by the BIOS to load the operating system. A program can call this interrupt to reboot the computer (but must ensure that hardware interrupts or DMA operations will not cause the system to hang or crash during either the reinitialization of the system by BIOS or the boot process). |
| 1Ah | Real-time clock services |
| 1Ah | PCI services, implemented by BIOSes supporting PCI 2.0 or later |
| 1Bh | Ctrl-Break handler, called by INT 09h when Ctrl-Break has been pressed |
| 1Ch | Timer tick handler, called by INT 08h |
| 1Dh | Not to be called; simply a pointer to the VPT (Video Parameter Table), which contains data on video modes |
| 1Eh | Not to be called; simply a pointer to the DPT (Diskette Parameter Table), containing a variety of information concerning the diskette drives |
| 1Fh | Not to be called; simply a pointer to the VGCT (Video Graphics Character Table), which contains the data for ASCII characters 80h to FFh |
| 41h | Address pointer: FDPT = Fixed Disk Parameter Table (1st hard drive) |
| 46h | Address pointer: FDPT = Fixed Disk Parameter Table (2nd hard drive) |
| 4Ah | Called by RTC for alarm |
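
The rate of 18.2 times per second listed for INT 08h can be reproduced with a little arithmetic. The sketch below relies on background facts not stated in the table: the PC's interval timer is clocked at roughly 1.193182 MHz, and the BIOS lets channel 0 count through its full 16-bit range, which divides that clock by 65,536. The variable names are illustrative.

```python
PIT_INPUT_HZ = 1_193_182   # approximate input clock of the interval timer
DIVISOR = 65_536           # full 16-bit count used for the timer tick

tick_rate = PIT_INPUT_HZ / DIVISOR
ticks_per_day = round(tick_rate * 24 * 60 * 60)

print(f"{tick_rate:.4f} ticks per second")   # about 18.2065
print(f"{ticks_per_day} ticks per day")      # roughly 1.57 million
```

A program that counts these ticks to measure time therefore has a resolution of about 55 milliseconds.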

INT 18h: execute BASIC

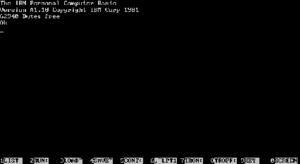

INT 18h traditionally jumped to an implementation of Cassette BASIC (provided by Microsoft) stored in Option ROMs. This call would typically be invoked if the BIOS was unable to identify any bootable disk volumes on startup.

At the time the original IBM PC (IBM machine type 5150) was released in 1981, the BASIC in ROM was a key feature. Contemporary popular personal computers such as the Commodore 64 and the Apple II line also had Microsoft Cassette BASIC in ROM (though Commodore renamed their licensed version Commodore BASIC), so in a substantial portion of its intended market, the IBM PC needed BASIC to compete. As on those other systems, the IBM PC's ROM BASIC served as a primitive diskless operating system, allowing the user to load, save, and run programs, as well as to write and refine them. (The original IBM PC was also the only PC model from IBM that, like its aforementioned two competitors, included cassette interface hardware. A base model IBM PC had only 16 KiB of RAM and no disk drives [of any kind], so the cassette interface and BASIC in ROM were essential to make the base model usable. An IBM PC with less than 32 KiB of RAM is incapable of booting from disk. Of the five 8 KiB ROM chips in an original IBM PC, totaling 40 KiB, four contain BASIC and only one contains the BIOS; when only 16 KiB of RAM are installed, the ROM BASIC accounts for over half of the total system memory [4/7ths, to be precise].)

As time went on and BASIC was no longer shipped on all PCs, this interrupt would simply display an error message indicating that no bootable volume was found (famously, "No ROM BASIC", or more explanatory messages in later BIOS versions); in other BIOS versions it would prompt the user to insert a bootable volume and press a key, and then after the user pressed a key it would loop back to the bootstrap loader (INT 19h) to try booting again.

Digital's Rainbow 100B used INT 18h to call its BIOS, which was incompatible with the IBM BIOS. Turbo Pascal, Turbo C and Turbo C++ repurposed INT 18h for memory allocation and paging. Other programs also reused this vector for their own purposes.

BIOS hooks

DOS

On DOS systems, IO.SYS or IBMBIO.COM hooks INT 13h for floppy disk change detection, tracking formatting calls, correcting DMA boundary errors, and working around problems in IBM's ROM BIOS dated "01/10/84" with model code 0xFC before the first call.

Bypassing BIOS

Many modern operating systems (such as Linux and Windows) do not use any BIOS interrupt calls at all after startup, instead choosing to directly interface with the hardware. To do this, they rely upon drivers that are part of the OS kernel itself, ship along with the OS, or are provided by hardware vendors.

There are several reasons for this practice. Most significant is that modern operating systems run with the processor in protected (or long) mode, whereas the BIOS code will only execute in real mode. This means that if an OS running in protected mode wanted to make a BIOS call, it would have to first switch into real mode, then execute the call and wait for it to return, and finally switch back to protected mode. This would be terribly slow and inefficient. Code that runs in real mode (including the BIOS) is limited to accessing just over 1 MiB of memory, due to using 16-bit segmented memory addressing. Additionally, the BIOS is generally not the fastest way to carry out any particular task. In fact, the speed limitations of the BIOS made it common even in the DOS era for programs to circumvent it in order to avoid its performance limitations, especially for video graphics display and fast serial communication.
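
The "just over 1 MiB" figure follows directly from how real mode forms addresses: the 16-bit segment value is shifted left by four bits and added to the 16-bit offset. A minimal Python sketch of that arithmetic (the function name `linear_address` is illustrative):

```python
def linear_address(segment, offset):
    """Real-mode address calculation: segment * 16 + offset."""
    return ((segment & 0xFFFF) << 4) + (offset & 0xFFFF)


ONE_MIB = 1 << 20

# The highest address that any segment:offset pair can express.
highest = linear_address(0xFFFF, 0xFFFF)
print(f"{highest:#x}")          # prints 0x10ffef
print(highest + 1 - ONE_MIB)    # prints 65520 (bytes reachable beyond 1 MiB)

# A familiar example: the colour text-mode display buffer at B800:0000.
print(f"{linear_address(0xB800, 0x0000):#x}")  # prints 0xb8000
```

Whether the bytes above the 1 MiB mark are actually reachable depends on the CPU and on the state of the A20 address line; on the original 8086 such addresses simply wrap around to the bottom of memory.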

Beyond the above factors, problems with BIOS functionality include limitations in the range of functions defined, inconsistency in the subsets of those functions supported on different computers, and variations in the quality of BIOSes (i.e. some BIOSes are complete and reliable, others are abridged and buggy). By taking matters into their own hands and avoiding reliance on BIOS, operating system developers can eliminate some of the risks and complications they face in writing and supporting system software. On the other hand, by doing so those developers become responsible for providing "bare-metal" driver software for every different system or peripheral device they intend for their operating system to work with (or for inducing the hardware producers to provide those drivers).

Thus, compact operating systems developed on small budgets would tend to use BIOS heavily, while large operating systems built by huge groups of software engineers with large budgets would more often opt to write their own drivers instead of using BIOS, even without considering the compatibility problems of BIOS and protected mode.