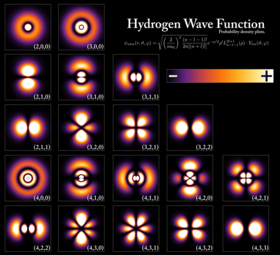

Hydrogen atomic orbitals at different energy levels. The more opaque areas are where one is most likely to find an electron at any given time.

| Property | Value |
|---|---|
| Composition | elementary particle |
| Statistics | fermionic |
| Family | lepton |
| Generation | first |
| Interactions | weak, electromagnetic, gravity |
| Symbol | e⁻, β⁻ |
| Antiparticle | positron |
| Theorized | Richard Laming (1838–1851), G. Johnstone Stoney (1874) and others. |
| Discovered | J. J. Thomson (1897) |
| Mass | 9.1093837015(28)×10⁻³¹ kg; 5.48579909065(16)×10⁻⁴ Da; [1822.888486209(53)]⁻¹ Da; 0.51099895000(15) MeV/c² |
| Mean lifetime | > 6.6×10²⁸ years (stable) |
| Electric charge | −1 e; −1.602176634×10⁻¹⁹ C |
| Magnetic moment | −9.2847647043(28)×10⁻²⁴ J⋅T⁻¹; −1.00115965218128(18) µB |
| Spin | 1/2 ħ |
| Weak isospin | LH: −1/2, RH: 0 |
| Weak hypercharge | LH: −1, RH: −2 |

The electron (e⁻ or β⁻) is a subatomic particle with a negative one elementary electric charge. Electrons belong to the first generation of the lepton particle family, and are generally thought to be elementary particles because they have no known components or substructure. The electron's mass is approximately 1/1836 that of the proton. Quantum mechanical properties of the electron include an intrinsic angular momentum (spin) of a half-integer value, expressed in units of the reduced Planck constant, ħ. Being fermions, no two electrons can occupy the same quantum state, per the Pauli exclusion principle. Like all elementary particles, electrons exhibit properties of both particles and waves: they can collide with other particles and can be diffracted like light. The wave properties of electrons are easier to observe with experiments than those of other particles like neutrons and protons because electrons have a lower mass and hence a longer de Broglie wavelength for a given energy.

Electrons play an essential role in numerous physical phenomena, such as electricity, magnetism, chemistry, and thermal conductivity; they also participate in gravitational, electromagnetic, and weak interactions. Since an electron has charge, it has a surrounding electric field; if that electron is moving relative to an observer, the observer will observe it to generate a magnetic field. Electromagnetic fields produced from other sources will affect the motion of an electron according to the Lorentz force law. Electrons radiate or absorb energy in the form of photons when they are accelerated.

Laboratory instruments are capable of trapping individual electrons as well as electron plasma by the use of electromagnetic fields. Special telescopes can detect electron plasma in outer space. Electrons are involved in many applications, such as tribology or frictional charging, electrolysis, electrochemistry, battery technologies, electronics, welding, cathode-ray tubes, photoelectricity, photovoltaic solar panels, electron microscopes, radiation therapy, lasers, gaseous ionization detectors, and particle accelerators.

Interactions involving electrons with other subatomic particles are of interest in fields such as chemistry and nuclear physics. The Coulomb force between the positive protons within atomic nuclei and the negative electrons outside them binds the two together into the composites known as atoms. Ionization or differences in the proportions of negative electrons versus positive nuclei change the binding energy of an atomic system. The exchange or sharing of the electrons between two or more atoms is the main cause of chemical bonding.

In 1838, British natural philosopher Richard Laming first hypothesized the concept of an indivisible quantity of electric charge to explain the chemical properties of atoms. Irish physicist George Johnstone Stoney named this charge 'electron' in 1891, and J. J. Thomson and his team of British physicists identified it as a particle in 1897 during the cathode-ray tube experiment.

Electrons participate in nuclear reactions, such as nucleosynthesis in stars, where they are known as beta particles. Electrons can be created through beta decay of radioactive isotopes and in high-energy collisions, for instance, when cosmic rays enter the atmosphere. The antiparticle of the electron is called the positron; it is identical to the electron, except that it carries electrical charge of the opposite sign. When an electron collides with a positron, both particles can be annihilated, producing gamma ray photons.

History

Discovery of effect of electric force

The ancient Greeks noticed that amber attracted small objects when rubbed with fur. Along with lightning, this phenomenon is one of humanity's earliest recorded experiences with electricity. In his 1600 treatise De Magnete, the English scientist William Gilbert coined the Neo-Latin term electrica to refer to those substances with a property similar to that of amber, which attract small objects after being rubbed. Both electric and electricity are derived from the Latin ēlectrum (also the root of the alloy of the same name), which came from the Greek word for amber, ἤλεκτρον (ēlektron).

Discovery of two kinds of charges

In the early 1700s, French chemist Charles François du Fay found that if a charged gold-leaf is repulsed by glass rubbed with silk, then the same charged gold-leaf is attracted by amber rubbed with wool. From this and other results of similar types of experiments, du Fay concluded that electricity consists of two electrical fluids, vitreous fluid from glass rubbed with silk and resinous fluid from amber rubbed with wool. These two fluids can neutralize each other when combined. American scientist Ebenezer Kinnersley later also independently reached the same conclusion. A decade later Benjamin Franklin proposed that electricity was not from different types of electrical fluid, but a single electrical fluid showing an excess (+) or deficit (−). He gave them the modern charge nomenclature of positive and negative respectively. Franklin thought of the charge carrier as being positive, but he did not correctly identify which situation was a surplus of the charge carrier, and which situation was a deficit.

Between 1838 and 1851, British natural philosopher Richard Laming developed the idea that an atom is composed of a core of matter surrounded by subatomic particles that had unit electric charges. Beginning in 1846, German physicist Wilhelm Eduard Weber theorized that electricity was composed of positively and negatively charged fluids, and their interaction was governed by the inverse square law. After studying the phenomenon of electrolysis in 1874, Irish physicist George Johnstone Stoney suggested that there existed a "single definite quantity of electricity", the charge of a monovalent ion. He was able to estimate the value of this elementary charge e by means of Faraday's laws of electrolysis. However, Stoney believed these charges were permanently attached to atoms and could not be removed. In 1881, German physicist Hermann von Helmholtz argued that both positive and negative charges were divided into elementary parts, each of which "behaves like atoms of electricity".

Stoney initially coined the term electrolion in 1881. Ten years later, he switched to electron to describe these elementary charges, writing in 1894: "... an estimate was made of the actual amount of this most remarkable fundamental unit of electricity, for which I have since ventured to suggest the name electron". A 1906 proposal to change to electrion failed because Hendrik Lorentz preferred to keep electron. The word electron is a combination of the words electric and ion. The suffix -on which is now used to designate other subatomic particles, such as a proton or neutron, is in turn derived from electron.

Discovery of free electrons outside matter

While studying electrical conductivity in rarefied gases in 1859, the German physicist Julius Plücker observed the radiation emitted from the cathode caused phosphorescent light to appear on the tube wall near the cathode; and the region of the phosphorescent light could be moved by application of a magnetic field. In 1869, Plücker's student Johann Wilhelm Hittorf found that a solid body placed in between the cathode and the phosphorescence would cast a shadow upon the phosphorescent region of the tube. Hittorf inferred that there are straight rays emitted from the cathode and that the phosphorescence was caused by the rays striking the tube walls. In 1876, the German physicist Eugen Goldstein showed that the rays were emitted perpendicular to the cathode surface, which distinguished between the rays that were emitted from the cathode and the incandescent light. Goldstein dubbed the rays cathode rays. Decades of experimental and theoretical research involving cathode rays were important in J. J. Thomson's eventual discovery of electrons.

During the 1870s, the English chemist and physicist Sir William Crookes developed the first cathode-ray tube to have a high vacuum inside. He then showed in 1874 that the cathode rays can turn a small paddle wheel when placed in their path. Therefore, he concluded that the rays carried momentum. Furthermore, by applying a magnetic field, he was able to deflect the rays, thereby demonstrating that the beam behaved as though it were negatively charged. In 1879, he proposed that these properties could be explained by regarding cathode rays as composed of negatively charged gaseous molecules in a fourth state of matter in which the mean free path of the particles is so long that collisions may be ignored.

The German-born British physicist Arthur Schuster expanded upon Crookes's experiments by placing metal plates parallel to the cathode rays and applying an electric potential between the plates. The field deflected the rays toward the positively charged plate, providing further evidence that the rays carried negative charge. By measuring the amount of deflection for a given electric and magnetic field, in 1890 Schuster was able to estimate the charge-to-mass ratio of the ray components. However, this produced a value that was more than a thousand times greater than what was expected, so little credence was given to his calculations at the time. This is because it was assumed that the charge carriers were much heavier hydrogen or nitrogen atoms. Schuster's estimates would subsequently turn out to be largely correct.

In 1892 Hendrik Lorentz suggested that the mass of these particles (electrons) could be a consequence of their electric charge.

While studying naturally fluorescing minerals in 1896, the French physicist Henri Becquerel discovered that they emitted radiation without any exposure to an external energy source. These radioactive materials became the subject of much interest by scientists, including the New Zealand physicist Ernest Rutherford who discovered they emitted particles. He designated these particles alpha and beta, on the basis of their ability to penetrate matter. In 1900, Becquerel showed that the beta rays emitted by radium could be deflected by an electric field, and that their mass-to-charge ratio was the same as for cathode rays. This evidence strengthened the view that electrons existed as components of atoms.

In 1897, the British physicist J. J. Thomson, with his colleagues John S. Townsend and H. A. Wilson, performed experiments indicating that cathode rays really were unique particles, rather than waves, atoms or molecules as was believed earlier. Thomson made good estimates of both the charge e and the mass m, finding that cathode ray particles, which he called "corpuscles", had perhaps one thousandth of the mass of the least massive ion known: hydrogen. He showed that their charge-to-mass ratio, e/m, was independent of cathode material. He further showed that the negatively charged particles produced by radioactive materials, by heated materials and by illuminated materials were universal. The name electron was adopted for these particles by the scientific community, mainly due to the advocacy of G. F. FitzGerald, J. Larmor, and H. A. Lorentz. In the same year, Emil Wiechert and Walter Kaufmann also calculated the e/m ratio, but they fell short of interpreting their results, while J. J. Thomson subsequently, in 1899, gave estimates for the electron charge and mass as well: e ~ 6.8×10⁻¹⁰ esu and m ~ 3×10⁻²⁶ g.

The electron's charge was more carefully measured by the American physicists Robert Millikan and Harvey Fletcher in their oil-drop experiment of 1909, the results of which were published in 1911. This experiment used an electric field to prevent a charged droplet of oil from falling as a result of gravity. This device could measure the electric charge from as few as 1–150 ions with an error margin of less than 0.3%. Comparable experiments had been done earlier by Thomson's team, using clouds of charged water droplets generated by electrolysis, and in 1911 by Abram Ioffe, who independently obtained the same result as Millikan using charged microparticles of metals, then published his results in 1913. However, oil drops were more stable than water drops because of their slower evaporation rate, and thus more suited to precise experimentation over longer periods of time.

Around the beginning of the twentieth century, it was found that under certain conditions a fast-moving charged particle caused a condensation of supersaturated water vapor along its path. In 1911, Charles Wilson used this principle to devise his cloud chamber so he could photograph the tracks of charged particles, such as fast-moving electrons.

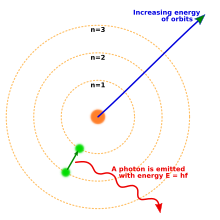

Atomic theory

By 1914, experiments by physicists Ernest Rutherford, Henry Moseley, James Franck and Gustav Hertz had largely established the structure of an atom as a dense nucleus of positive charge surrounded by lower-mass electrons. In 1913, Danish physicist Niels Bohr postulated that electrons resided in quantized energy states, with their energies determined by the angular momentum of the electron's orbit about the nucleus. The electrons could move between those states, or orbits, by the emission or absorption of photons of specific frequencies. By means of these quantized orbits, he accurately explained the spectral lines of the hydrogen atom. However, Bohr's model failed to account for the relative intensities of the spectral lines and it was unsuccessful in explaining the spectra of more complex atoms.

Chemical bonds between atoms were explained by Gilbert Newton Lewis, who in 1916 proposed that a covalent bond between two atoms is maintained by a pair of electrons shared between them. Later, in 1927, Walter Heitler and Fritz London gave the full explanation of the electron-pair formation and chemical bonding in terms of quantum mechanics. In 1919, the American chemist Irving Langmuir elaborated on Lewis's static model of the atom and suggested that all electrons were distributed in successive "concentric (nearly) spherical shells, all of equal thickness". In turn, he divided the shells into a number of cells, each of which contained one pair of electrons. With this model Langmuir was able to qualitatively explain the chemical properties of all elements in the periodic table, which were known to largely repeat themselves according to the periodic law.

In 1924, Austrian physicist Wolfgang Pauli observed that the shell-like structure of the atom could be explained by a set of four parameters that defined every quantum energy state, as long as each state was occupied by no more than a single electron. This prohibition against more than one electron occupying the same quantum energy state became known as the Pauli exclusion principle. The physical mechanism to explain the fourth parameter, which had two distinct possible values, was provided by the Dutch physicists Samuel Goudsmit and George Uhlenbeck. In 1925, they suggested that an electron, in addition to the angular momentum of its orbit, possesses an intrinsic angular momentum and magnetic dipole moment. This is analogous to the rotation of the Earth on its axis as it orbits the Sun. The intrinsic angular momentum became known as spin, and explained the previously mysterious splitting of spectral lines observed with a high-resolution spectrograph; this phenomenon is known as fine structure splitting.

Quantum mechanics

In his 1924 dissertation Recherches sur la théorie des quanta (Research on Quantum Theory), French physicist Louis de Broglie hypothesized that all matter can be represented as a de Broglie wave in the manner of light. That is, under the appropriate conditions, electrons and other matter would show properties of either particles or waves. The corpuscular properties of a particle are demonstrated when it is shown to have a localized position in space along its trajectory at any given moment. The wave-like nature of light is displayed, for example, when a beam of light is passed through parallel slits, thereby creating interference patterns. In 1927, George Paget Thomson and Alexander Reid discovered that an interference effect was produced when a beam of electrons was passed through thin celluloid foils and later metal films; American physicists Clinton Davisson and Lester Germer found the same effect in the reflection of electrons from a crystal of nickel. Alexander Reid, who was Thomson's graduate student, performed the first experiments, but he died soon after in a motorcycle accident and is rarely mentioned.

De Broglie's prediction of a wave nature for electrons led Erwin Schrödinger to postulate a wave equation for electrons moving under the influence of the nucleus in the atom. In 1926, this equation, the Schrödinger equation, successfully described how electron waves propagated. Rather than yielding a solution that determined the location of an electron over time, this wave equation could be used to predict the probability of finding an electron near a position, especially a position near where the electron was bound in space, for which the electron wave equations did not change in time. This approach led to a second formulation of quantum mechanics (the first by Heisenberg in 1925), and solutions of Schrödinger's equation, like Heisenberg's, provided derivations of the energy states of an electron in a hydrogen atom that were equivalent to those that had been derived first by Bohr in 1913, and that were known to reproduce the hydrogen spectrum. Once spin and the interaction between multiple electrons were describable, quantum mechanics made it possible to predict the configuration of electrons in atoms with atomic numbers greater than that of hydrogen.

In 1928, building on Wolfgang Pauli's work, Paul Dirac produced a model of the electron, the Dirac equation, consistent with relativity theory, by applying relativistic and symmetry considerations to the Hamiltonian formulation of the quantum mechanics of the electromagnetic field. In order to resolve some problems within his relativistic equation, Dirac developed in 1930 a model of the vacuum as an infinite sea of particles with negative energy, later dubbed the Dirac sea. This led him to predict the existence of a positron, the antimatter counterpart of the electron. This particle was discovered in 1932 by Carl Anderson, who proposed calling standard electrons negatrons and using electron as a generic term to describe both the positively and negatively charged variants.

In 1947, Willis Lamb, working in collaboration with graduate student Robert Retherford, found that certain quantum states of the hydrogen atom, which should have the same energy, were shifted in relation to each other; the difference came to be called the Lamb shift. About the same time, Polykarp Kusch, working with Henry M. Foley, discovered that the magnetic moment of the electron is slightly larger than predicted by Dirac's theory. This small difference was later called the anomalous magnetic dipole moment of the electron. This difference was later explained by the theory of quantum electrodynamics, developed by Sin-Itiro Tomonaga, Julian Schwinger and Richard Feynman in the late 1940s.

Particle accelerators

With the development of the particle accelerator during the first half of the twentieth century, physicists began to delve deeper into the properties of subatomic particles. The first successful attempt to accelerate electrons using electromagnetic induction was made in 1942 by Donald Kerst. His initial betatron reached energies of 2.3 MeV, while subsequent betatrons achieved 300 MeV. In 1947, synchrotron radiation was discovered with a 70 MeV electron synchrotron at General Electric. This radiation was caused by the acceleration of electrons through a magnetic field as they moved near the speed of light.

With a beam energy of 1.5 GeV, the first high-energy particle collider was ADONE, which began operations in 1968. This device accelerated electrons and positrons in opposite directions, effectively doubling the energy of their collision when compared to striking a static target with an electron. The Large Electron–Positron Collider (LEP) at CERN, which was operational from 1989 to 2000, achieved collision energies of 209 GeV and made important measurements for the Standard Model of particle physics.

Confinement of individual electrons

Individual electrons can now be easily confined in ultra small (L = 20 nm, W = 20 nm) CMOS transistors operated at cryogenic temperature over a range of −269 °C (4 K) to about −258 °C (15 K). The electron wavefunction spreads in a semiconductor lattice and negligibly interacts with the valence band electrons, so it can be treated in the single particle formalism, by replacing its mass with the effective mass tensor.

Characteristics

Classification

In the Standard Model of particle physics, electrons belong to the group of subatomic particles called leptons, which are believed to be fundamental or elementary particles. Electrons have the lowest mass of any charged lepton (or electrically charged particle of any type) and belong to the first-generation of fundamental particles. The second and third generation contain charged leptons, the muon and the tau, which are identical to the electron in charge, spin and interactions, but are more massive. Leptons differ from the other basic constituent of matter, the quarks, by their lack of strong interaction. All members of the lepton group are fermions because they all have half-odd integer spin; the electron has spin 1/2.

Fundamental properties

The invariant mass of an electron is approximately 9.109×10⁻³¹ kilograms, or 5.486×10⁻⁴ atomic mass units. Due to mass–energy equivalence, this corresponds to a rest energy of 0.511 MeV (8.19×10⁻¹⁴ J). The ratio between the mass of a proton and that of an electron is about 1836. Astronomical measurements show that the proton-to-electron mass ratio has held the same value, as is predicted by the Standard Model, for at least half the age of the universe.
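The quantities in this paragraph are linked by mass–energy equivalence, E = mc². The short Python sketch below reproduces them from hand-entered constants; the proton mass and the dalton are not given in the text and are assumed values used only for illustration.

```python
# Rest energy and mass ratios of the electron (illustrative sketch).
M_E = 9.1093837015e-31      # electron mass in kg
M_P = 1.67262192369e-27     # proton mass in kg (assumed value, not from the text)
DALTON = 1.66053906660e-27  # atomic mass unit in kg (assumed value)
C = 299_792_458.0           # speed of light in m/s
E_CHARGE = 1.602176634e-19  # elementary charge in C, i.e. joules per electronvolt

rest_energy_j = M_E * C**2                     # E = m c^2 in joules
rest_energy_mev = rest_energy_j / E_CHARGE / 1e6
mass_da = M_E / DALTON                         # electron mass in atomic mass units
proton_electron_ratio = M_P / M_E

print(f"rest energy: {rest_energy_j:.3e} J = {rest_energy_mev:.3f} MeV")
print(f"mass: {mass_da:.4e} Da, m_p/m_e = {proton_electron_ratio:.1f}")
```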

Electrons have an electric charge of −1.602176634×10⁻¹⁹ coulombs, which is used as a standard unit of charge for subatomic particles, and is also called the elementary charge. Within the limits of experimental accuracy, the electron charge is identical to the charge of a proton, but with the opposite sign. The electron is commonly symbolized by e⁻, and the positron is symbolized by e⁺.

The electron has an intrinsic angular momentum or spin of ħ/2. This property is usually stated by referring to the electron as a spin-1/2 particle. For such particles the spin quantum number is s = 1/2, so the magnitude of the spin vector is √(s(s+1)) ħ = (√3/2)ħ, while the result of the measurement of a projection of the spin on any axis can only be ±ħ/2. In addition to spin, the electron has an intrinsic magnetic moment along its spin axis. It is approximately equal to one Bohr magneton, which is a physical constant equal to 9.27400915(23)×10⁻²⁴ joules per tesla. The orientation of the spin with respect to the momentum of the electron defines the property of elementary particles known as helicity.
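The Bohr magneton is conventionally defined as μB = eħ/(2mₑ). As a rough check, the sketch below evaluates that expression and expresses the electron's magnetic moment (the value listed in the property table at the top of the article) in units of it. The constants are entered by hand and the variable names are illustrative.

```python
import math

E_CHARGE = 1.602176634e-19       # elementary charge in C
H = 6.62607015e-34               # Planck constant in J s
HBAR = H / (2 * math.pi)         # reduced Planck constant in J s
M_E = 9.1093837015e-31           # electron mass in kg
MU_ELECTRON = -9.2847647043e-24  # electron magnetic moment in J/T (from the table)

# Bohr magneton: mu_B = e * hbar / (2 * m_e)
bohr_magneton = E_CHARGE * HBAR / (2 * M_E)

# The electron moment is close to, but not exactly, one Bohr magneton.
moment_in_magnetons = MU_ELECTRON / bohr_magneton

print(f"Bohr magneton: {bohr_magneton:.6e} J/T")
print(f"electron moment: {moment_in_magnetons:.6f} Bohr magnetons")
```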

The electron has no known substructure. Nevertheless, in condensed matter physics, spin–charge separation can occur in some materials. In such cases, electrons 'split' into three independent particles, the spinon, the orbiton and the holon (or chargon). The electron can always be theoretically considered as a bound state of the three, with the spinon carrying the spin of the electron, the orbiton carrying the orbital degree of freedom and the chargon carrying the charge, but in certain conditions they can behave as independent quasiparticles.

The issue of the radius of the electron is a challenging problem of modern theoretical physics. The admission of the hypothesis of a finite radius of the electron is incompatible with the premises of the theory of relativity. On the other hand, a point-like electron (zero radius) generates serious mathematical difficulties due to the self-energy of the electron tending to infinity. Observation of a single electron in a Penning trap suggests the upper limit of the particle's radius to be 10⁻²² meters. The upper bound of the electron radius of 10⁻¹⁸ meters can be derived using the uncertainty relation in energy. There is also a physical constant called the "classical electron radius", with the much larger value of 2.8179×10⁻¹⁵ m, greater than the radius of the proton. However, the terminology comes from a simplistic calculation that ignores the effects of quantum mechanics; in reality, the so-called classical electron radius has little to do with the true fundamental structure of the electron.
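The classical electron radius is conventionally defined as the radius at which the electrostatic energy scale e²/(4πε₀r) equals the rest energy mₑc². The sketch below evaluates it and shows how the same energy scale grows without bound as the assumed radius shrinks, which is the self-energy difficulty mentioned above. Numerical factors of order one that depend on the assumed charge distribution are deliberately ignored, and the function name is illustrative.

```python
import math

E_CHARGE = 1.602176634e-19  # elementary charge in C
EPS0 = 8.8541878128e-12     # vacuum permittivity in F/m
M_E = 9.1093837015e-31      # electron mass in kg
C = 299_792_458.0           # speed of light in m/s

def coulomb_energy(radius_m):
    """Electrostatic energy scale e^2 / (4 pi eps0 r), in joules."""
    return E_CHARGE**2 / (4 * math.pi * EPS0 * radius_m)

rest_energy = M_E * C**2
# Radius at which the energy scale above equals the rest energy.
classical_radius = E_CHARGE**2 / (4 * math.pi * EPS0 * rest_energy)

for r in (classical_radius, 1e-18, 1e-22):
    ratio = coulomb_energy(r) / rest_energy
    print(f"r = {r:.3e} m -> energy / (m_e c^2) = {ratio:.3e}")
```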

There are elementary particles that spontaneously decay into less massive particles. An example is the muon, with a mean lifetime of 2.2×10⁻⁶ seconds, which decays into an electron, a muon neutrino and an electron antineutrino. The electron, on the other hand, is thought to be stable on theoretical grounds: the electron is the least massive particle with non-zero electric charge, so its decay would violate charge conservation. The experimental lower bound for the electron's mean lifetime is 6.6×10²⁸ years, at a 90% confidence level.

Quantum properties

As with all particles, electrons can act as waves. This is called the wave–particle duality and can be demonstrated using the double-slit experiment.

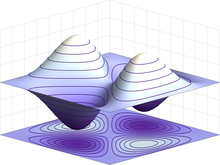

The wave-like nature of the electron allows it to pass through two parallel slits simultaneously, rather than just one slit as would be the case for a classical particle. In quantum mechanics, the wave-like property of one particle can be described mathematically as a complex-valued function, the wave function, commonly denoted by the Greek letter psi (ψ). When the absolute value of this function is squared, it gives the probability that a particle will be observed near a location—a probability density.
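The wavelength associated with this wave-like behaviour is given by the de Broglie relation λ = h/p. The sketch below compares electrons, protons and neutrons at the same kinetic energy, using the non-relativistic momentum p = √(2mE). The chosen energy of 100 eV and the proton and neutron masses are assumptions made for the example.

```python
import math

H = 6.62607015e-34       # Planck constant in J s
EV = 1.602176634e-19     # one electronvolt in joules
M_E = 9.1093837015e-31   # electron mass in kg
M_P = 1.67262192369e-27  # proton mass in kg (assumed value)
M_N = 1.67492749804e-27  # neutron mass in kg (assumed value)

def de_broglie_wavelength(mass_kg, kinetic_energy_ev):
    """Non-relativistic de Broglie wavelength, lambda = h / sqrt(2 m E)."""
    momentum = math.sqrt(2 * mass_kg * kinetic_energy_ev * EV)
    return H / momentum

energy_ev = 100.0  # hypothetical kinetic energy, well below relativistic energies
lam_electron = de_broglie_wavelength(M_E, energy_ev)
lam_proton = de_broglie_wavelength(M_P, energy_ev)
lam_neutron = de_broglie_wavelength(M_N, energy_ev)

print(f"electron: {lam_electron:.3e} m")
print(f"proton:   {lam_proton:.3e} m")
print(f"neutron:  {lam_neutron:.3e} m")
```

At equal kinetic energy the wavelength scales as 1/√m, so the electron's wavelength is longer than the proton's by a factor of about √1836 ≈ 43.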

Electrons are identical particles because they cannot be distinguished from each other by their intrinsic physical properties. In quantum mechanics, this means that a pair of interacting electrons must be able to swap positions without an observable change to the state of the system. The wave function of fermions, including electrons, is antisymmetric, meaning that it changes sign when two electrons are swapped; that is, ψ(r1, r2) = −ψ(r2, r1), where the variables r1 and r2 correspond to the first and second electrons, respectively. Since the absolute value is not changed by a sign swap, this corresponds to equal probabilities. Bosons, such as the photon, have symmetric wave functions instead.

In the case of antisymmetry, solutions of the wave equation for interacting electrons result in a zero probability that each pair will occupy the same location or state. This is responsible for the Pauli exclusion principle, which precludes any two electrons from occupying the same quantum state. This principle explains many of the properties of electrons. For example, it causes groups of bound electrons to occupy different orbitals in an atom, rather than all overlapping each other in the same orbit.
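A minimal numerical sketch of this antisymmetry, under simplifying assumptions: two electrons in one dimension, spin ignored, and particle-in-a-box states standing in for orbitals (a hypothetical choice for illustration). The two-particle function is built as ψ(x1, x2) = [φa(x1)φb(x2) − φb(x1)φa(x2)]/√2.

```python
import math

def box_orbital(n, length=1.0):
    """n-th stationary state of a particle in a 1D box (stand-in orbital)."""
    def phi(x):
        return math.sqrt(2.0 / length) * math.sin(n * math.pi * x / length)
    return phi

def antisymmetric_pair(phi_a, phi_b):
    """Antisymmetrized two-particle wave function built from two orbitals."""
    def psi(x1, x2):
        return (phi_a(x1) * phi_b(x2) - phi_b(x1) * phi_a(x2)) / math.sqrt(2.0)
    return psi

phi_1, phi_2 = box_orbital(1), box_orbital(2)
psi = antisymmetric_pair(phi_1, phi_2)

x1, x2 = 0.3, 0.7
print("psi(x1, x2)        =", psi(x1, x2))
print("psi(x2, x1)        =", psi(x2, x1))   # same magnitude, opposite sign
print("same location      =", psi(0.4, 0.4))  # vanishes
print("same orbital twice =", antisymmetric_pair(phi_1, phi_1)(x1, x2))  # vanishes
```

Swapping the arguments flips the sign but leaves the squared magnitude unchanged, and the function vanishes when both particles sit at the same point or when both are assigned the same orbital.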

Virtual particles

In a simplified picture, which often tends to give the wrong idea but may serve to illustrate some aspects, every photon spends some time as a combination of a virtual electron plus its antiparticle, the virtual positron, which rapidly annihilate each other shortly thereafter. The combination of the energy variation needed to create these particles, and the time during which they exist, falls under the threshold of detectability expressed by the Heisenberg uncertainty relation, ΔE · Δt ≥ ħ. In effect, the energy needed to create these virtual particles, ΔE, can be "borrowed" from the vacuum for a period of time, Δt, so that their product is no more than the reduced Planck constant, ħ ≈ 6.6×10⁻¹⁶ eV·s. Thus, for a virtual electron, Δt is at most 1.3×10⁻²¹ s.
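The time estimate follows from Δt ≈ ħ/ΔE with ΔE set to the electron rest energy of about 0.511 MeV. A short sketch of the arithmetic, with an illustrative function name:

```python
HBAR_EV_S = 6.582119569e-16             # reduced Planck constant in eV s
ELECTRON_REST_ENERGY_EV = 0.51099895e6  # electron rest energy in eV

def max_borrow_time(delta_e_ev):
    """Largest time for which an energy delta_e can be 'borrowed': hbar / delta_e."""
    return HBAR_EV_S / delta_e_ev

dt_electron = max_borrow_time(ELECTRON_REST_ENERGY_EV)
# For a full electron-positron pair the energy doubles and the time halves.
dt_pair = max_borrow_time(2 * ELECTRON_REST_ENERGY_EV)

print(f"single virtual electron: {dt_electron:.2e} s")
print(f"electron-positron pair:  {dt_pair:.2e} s")
```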

While an electron–positron virtual pair is in existence, the Coulomb force from the ambient electric field surrounding an electron causes a created positron to be attracted to the original electron, while a created electron experiences a repulsion. This causes what is called vacuum polarization. In effect, the vacuum behaves like a medium having a dielectric permittivity more than unity. Thus the effective charge of an electron is actually smaller than its true value, and the charge decreases with increasing distance from the electron. This polarization was confirmed experimentally in 1997 using the Japanese TRISTAN particle accelerator. Virtual particles cause a comparable shielding effect for the mass of the electron.

The interaction with virtual particles also explains the small (about 0.1%) deviation of the intrinsic magnetic moment of the electron from the Bohr magneton (the anomalous magnetic moment). The extraordinarily precise agreement of this predicted difference with the experimentally determined value is viewed as one of the great achievements of quantum electrodynamics.
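The leading-order quantum electrodynamics correction to the magnetic moment, first obtained by Schwinger, is α/(2π), where α ≈ 1/137 is the fine-structure constant. The sketch below compares that single term with the measured moment listed in the property table at the top of the article. It is only a first-order check; the full prediction includes higher-order terms that are not shown here.

```python
import math

ALPHA = 7.297353e-3                 # fine-structure constant
MEASURED_MOMENT = 1.00115965218128  # |magnetic moment| in Bohr magnetons (from the table)

# Leading-order anomaly: a = alpha / (2 pi)
schwinger_term = ALPHA / (2 * math.pi)

# Measured anomaly: deviation of the moment from exactly one Bohr magneton.
measured_anomaly = MEASURED_MOMENT - 1.0

relative_gap = abs(schwinger_term - measured_anomaly) / measured_anomaly

print(f"alpha / (2 pi)   = {schwinger_term:.7f}")
print(f"measured anomaly = {measured_anomaly:.7f}")
print(f"relative gap     = {relative_gap:.2%}")
```

The first-order term alone already accounts for the roughly 0.1% deviation to within about two parts in a thousand.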

The apparent paradox in classical physics of a point particle electron having intrinsic angular momentum and magnetic moment can be explained by the formation of virtual photons in the electric field generated by the electron. These photons can heuristically be thought of as causing the electron to shift about in a jittery fashion (known as zitterbewegung), which results in a net circular motion with precession. This motion produces both the spin and the magnetic moment of the electron. In atoms, this creation of virtual photons explains the Lamb shift observed in spectral lines. The Compton wavelength shows that near elementary particles such as the electron, the uncertainty of the energy allows for the creation of virtual particles near the electron. This wavelength explains the "static" of virtual particles around elementary particles at a close distance.

Interaction

An electron generates an electric field that exerts an attractive force on a particle with a positive charge, such as the proton, and a repulsive force on a particle with a negative charge. The strength of this force in nonrelativistic approximation is determined by Coulomb's inverse square law. When an electron is in motion, it generates a magnetic field. The Ampère–Maxwell law relates the magnetic field to the mass motion of electrons (the current) with respect to an observer. This property of induction supplies the magnetic field that drives an electric motor. The electromagnetic field of an arbitrary moving charged particle is expressed by the Liénard–Wiechert potentials, which are valid even when the particle's speed is close to that of light (relativistic).

When an electron is moving through a magnetic field, it is subject to the Lorentz force that acts perpendicularly to the plane defined by the magnetic field and the electron velocity. This centripetal force causes the electron to follow a helical trajectory through the field at a radius called the gyroradius. The acceleration from this curving motion induces the electron to radiate energy in the form of synchrotron radiation. The energy emission in turn causes a recoil of the electron, known as the Abraham–Lorentz–Dirac force, which creates a friction that slows the electron. This force is caused by a back-reaction of the electron's own field upon itself.
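The perpendicular direction of the force and the size of the gyroradius can be sketched numerically from F = q(E + v × B) and r = mv⊥/(|q|B). The example is non-relativistic and ignores radiation losses; the speed and field strength are hypothetical values chosen for illustration.

```python
Q_ELECTRON = -1.602176634e-19  # electron charge in C
M_E = 9.1093837015e-31         # electron mass in kg

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    """Dot product of two 3-vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))

def lorentz_force(q, e_field, velocity, b_field):
    """Lorentz force F = q (E + v x B), component by component."""
    v_cross_b = cross(velocity, b_field)
    return tuple(q * (e + vxb) for e, vxb in zip(e_field, v_cross_b))

def gyroradius(mass, speed_perp, q, b_magnitude):
    """Non-relativistic gyroradius r = m v_perp / (|q| B)."""
    return mass * speed_perp / (abs(q) * b_magnitude)

velocity = (1.0e6, 0.0, 0.0)   # m/s, hypothetical and much slower than light
b_field = (0.0, 0.0, 1.0e-3)   # tesla, hypothetical uniform field along z
no_e_field = (0.0, 0.0, 0.0)

force = lorentz_force(Q_ELECTRON, no_e_field, velocity, b_field)
radius = gyroradius(M_E, 1.0e6, Q_ELECTRON, 1.0e-3)

print("force (N):", force)
print("F . v =", dot(force, velocity), " F . B =", dot(force, b_field))
print(f"gyroradius: {radius * 1e3:.3f} mm")
```

Because the force is perpendicular to the velocity, a purely magnetic field changes the electron's direction but does no work on it.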

Photons mediate electromagnetic interactions between particles in quantum electrodynamics. An isolated electron at a constant velocity cannot emit or absorb a real photon; doing so would violate conservation of energy and momentum. Instead, virtual photons can transfer momentum between two charged particles. This exchange of virtual photons, for example, generates the Coulomb force. Energy emission can occur when a moving electron is deflected by a charged particle, such as a proton. The deceleration of the electron results in the emission of Bremsstrahlung radiation.

An inelastic collision between a photon (light) and a solitary (free) electron is called Compton scattering. This collision results in a transfer of momentum and energy between the particles, which modifies the wavelength of the photon by an amount called the Compton shift. The size of this shift depends on the scattering angle and is set by the quantity h/(mₑc), which is known as the Compton wavelength; the shift reaches its maximum of twice this value when the photon is scattered straight back. For an electron, the Compton wavelength has a value of 2.43×10⁻¹² m. When the wavelength of the light is long (for instance, the wavelength of visible light is 0.4–0.7 μm) the wavelength shift becomes negligible. Such interaction between the light and free electrons is called Thomson scattering or linear Thomson scattering.
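To make the scale of the Compton shift concrete, the sketch below uses the standard Compton formula Δλ = (h/mₑc)(1 − cos θ), which is not written out in the text and is brought in here as an assumption. The 550 nm wavelength is an arbitrary representative of visible light.

```python
import math

H = 6.62607015e-34                # Planck constant, J*s
C = 299792458.0                   # speed of light, m/s
ELECTRON_MASS = 9.1093837015e-31  # kg

COMPTON_WAVELENGTH = H / (ELECTRON_MASS * C)  # metres


def compton_shift(theta):
    """Wavelength shift in metres for a photon scattered through angle theta (radians)."""
    return COMPTON_WAVELENGTH * (1.0 - math.cos(theta))


# Shift for 90-degree scattering, compared with green light at 550 nm (assumed).
shift_90 = compton_shift(math.pi / 2)
relative_shift = shift_90 / 550e-9

print(f"Compton wavelength: {COMPTON_WAVELENGTH:.3e} m")
print(f"relative shift for 550 nm light at 90 degrees: {relative_shift:.1e}")
```

The relative shift for visible light comes out at a few parts per million, which is why the scattering is effectively elastic in that regime.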

The relative strength of the electromagnetic interaction between two charged particles, such as an electron and a proton, is given by the fine-structure constant. This value is a dimensionless quantity formed by the ratio of two energies: the electrostatic energy of attraction (or repulsion) at a separation of one reduced Compton wavelength, ħ/(mₑc), and the rest energy of the charge. It is given by α ≈ 7.297353×10⁻³, which is approximately equal to 1/137.
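The ratio of energies described above can be checked numerically. This sketch assumes the separation is the reduced Compton wavelength ħ/(mₑc), the convention under which the ratio reproduces α; the constant values are CODATA 2018 figures supplied for the example.

```python
import math

E_CHARGE = 1.602176634e-19        # C
EPSILON_0 = 8.8541878128e-12      # F/m
HBAR = 1.054571817e-34            # reduced Planck constant, J*s
C = 299792458.0                   # m/s
ELECTRON_MASS = 9.1093837015e-31  # kg

# Assumed separation: the reduced Compton wavelength of the electron.
reduced_compton = HBAR / (ELECTRON_MASS * C)

# Electrostatic energy of two elementary charges at that separation.
electrostatic_energy = E_CHARGE ** 2 / (4 * math.pi * EPSILON_0 * reduced_compton)

# Rest energy of the electron.
rest_energy = ELECTRON_MASS * C ** 2

alpha = electrostatic_energy / rest_energy
print(f"alpha = {alpha:.6e}, 1/alpha = {1 / alpha:.3f}")
```

The electron mass cancels in the ratio, so the same value follows for any charged particle carrying one elementary charge.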

When electrons and positrons collide, they annihilate each other, giving rise to two or more gamma ray photons. If the electron and positron have negligible momentum, a positronium atom can form before annihilation results in two or three gamma ray photons totalling 1.022 MeV. On the other hand, a high-energy photon can transform into an electron and a positron by a process called pair production, but only in the presence of a nearby charged particle, such as a nucleus.
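The 1.022 MeV figure is simply twice the electron rest energy, as the short calculation below shows. It assumes the pair is at rest, so that kinetic energy can be neglected, and the variable names are illustrative.

```python
# Electron rest energy in MeV, taken from the mass value 0.51099895 MeV/c^2.
ELECTRON_REST_ENERGY_MEV = 0.51099895

# An electron and a positron at rest release twice the rest energy.
total_energy_mev = 2 * ELECTRON_REST_ENERGY_MEV

# Two-photon decay: momentum conservation gives each photon half the total.
energy_per_photon_two = total_energy_mev / 2

# Three-photon decay: energies vary, but they average to a third of the total.
mean_energy_per_photon_three = total_energy_mev / 3

print(f"total: {total_energy_mev:.3f} MeV")
print(f"two-photon decay: {energy_per_photon_two:.3f} MeV each")
```

The same total is the minimum photon energy required for pair production, the reverse process.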

In the theory of electroweak interaction, the left-handed component of the electron's wavefunction forms a weak isospin doublet with the electron neutrino. This means that during weak interactions, electron neutrinos behave like electrons. Either member of this doublet can undergo a charged current interaction by emitting or absorbing a W boson and be converted into the other member. Charge is conserved during this reaction because the W boson also carries a charge, canceling out any net change during the transmutation. Charged current interactions are responsible for the phenomenon of beta decay in a radioactive atom. Both the electron and electron neutrino can undergo a neutral current interaction via a Z⁰ exchange, and this is responsible for neutrino-electron elastic scattering.

Atoms and molecules

An electron can be bound to the nucleus of an atom by the attractive Coulomb force. A system of one or more electrons bound to a nucleus is called an atom. If the number of electrons is different from the nucleus's electrical charge, such an atom is called an ion. The wave-like behavior of a bound electron is described by a function called an atomic orbital. Each orbital has its own set of quantum numbers such as energy, angular momentum and projection of angular momentum, and only a discrete set of these orbitals exist around the nucleus. According to the Pauli exclusion principle each orbital can be occupied by up to two electrons, which must differ in their spin quantum number.

Electrons can transfer between different orbitals by the emission or absorption of photons with an energy that matches the difference in potential. Other methods of orbital transfer include collisions with particles, such as electrons, and the Auger effect. To escape the atom, the energy of the electron must be increased above its binding energy to the atom. This occurs, for example, with the photoelectric effect, where an incident photon exceeding the atom's ionization energy is absorbed by the electron.

The orbital angular momentum of electrons is quantized. Because the electron is charged, it produces an orbital magnetic moment that is proportional to the angular momentum. The net magnetic moment of an atom is equal to the vector sum of orbital and spin magnetic moments of all electrons and the nucleus. The magnetic moment of the nucleus is negligible compared with that of the electrons. The magnetic moments of the electrons that occupy the same orbital (so-called paired electrons) cancel each other out.

The chemical bond between atoms occurs as a result of electromagnetic interactions, as described by the laws of quantum mechanics. The strongest bonds are formed by the sharing or transfer of electrons between atoms, allowing the formation of molecules. Within a molecule, electrons move under the influence of several nuclei and occupy molecular orbitals, much as they can occupy atomic orbitals in isolated atoms. A fundamental factor in these molecular structures is the existence of electron pairs. These are electrons with opposed spins, allowing them to occupy the same molecular orbital without violating the Pauli exclusion principle (much like in atoms). Different molecular orbitals have different spatial distributions of the electron density. For instance, in bonded pairs (i.e. in the pairs that actually bind atoms together) electrons can be found with the maximal probability in a relatively small volume between the nuclei. By contrast, in non-bonded pairs electrons are distributed in a large volume around nuclei.

Conductivity

If a body has more or fewer electrons than are required to balance the positive charge of the nuclei, then that object has a net electric charge. When there is an excess of electrons, the object is said to be negatively charged. When there are fewer electrons than the number of protons in nuclei, the object is said to be positively charged. When the number of electrons and the number of protons are equal, their charges cancel each other and the object is said to be electrically neutral. A macroscopic body can develop an electric charge through rubbing, by the triboelectric effect.

Independent electrons moving in vacuum are termed free electrons. Electrons in metals also behave as if they were free. In reality the particles that are commonly termed electrons in metals and other solids are quasi-electrons—quasiparticles, which have the same electrical charge, spin, and magnetic moment as real electrons but might have a different mass. When free electrons—both in vacuum and metals—move, they produce a net flow of charge called an electric current, which generates a magnetic field. Likewise a current can be created by a changing magnetic field. These interactions are described mathematically by Maxwell's equations.

At a given temperature, each material has an electrical conductivity that determines the value of electric current when an electric potential is applied. Examples of good conductors include metals such as copper and gold, whereas glass and Teflon are poor conductors. In any dielectric material, the electrons remain bound to their respective atoms and the material behaves as an insulator. Most semiconductors have a variable level of conductivity that lies between the extremes of conduction and insulation. On the other hand, metals have an electronic band structure containing partially filled electronic bands. The presence of such bands allows electrons in metals to behave as if they were free or delocalized electrons. These electrons are not associated with specific atoms, so when an electric field is applied, they are free to move like a gas (called Fermi gas) through the material much like free electrons.

Because of collisions between electrons and atoms, the drift velocity of electrons in a conductor is on the order of millimeters per second. However, the speed at which a change of current at one point in the material causes changes in currents in other parts of the material, the velocity of propagation, is typically about 75% of light speed. This occurs because electrical signals propagate as a wave, with the velocity dependent on the dielectric constant of the material.
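The order of magnitude of the drift velocity can be estimated from the standard relation v = I/(n·e·A), which is not given in the text and is used here as an assumption. The copper electron density, current and wire cross-section below are illustrative inputs.

```python
E_CHARGE = 1.602176634e-19  # C


def drift_velocity(current, electron_density, cross_section):
    """Mean drift speed in m/s from v = I / (n * e * A).

    current in amperes, electron_density in m^-3, cross_section in m^2.
    """
    return current / (electron_density * E_CHARGE * cross_section)


# Assumed inputs: copper with about 8.5e28 conduction electrons per cubic metre,
# carrying 10 A through a wire of 1 mm^2 cross-section.
COPPER_ELECTRON_DENSITY = 8.5e28  # m^-3 (approximate)
velocity = drift_velocity(current=10.0,
                          electron_density=COPPER_ELECTRON_DENSITY,
                          cross_section=1.0e-6)

print(f"drift velocity: {velocity * 1e3:.2f} mm/s")
```

With these inputs the drift speed is a little under one millimetre per second, many orders of magnitude below the signal propagation speed.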

Metals make relatively good conductors of heat, primarily because the delocalized electrons are free to transport thermal energy between atoms. However, unlike electrical conductivity, the thermal conductivity of a metal is nearly independent of temperature. This is expressed mathematically by the Wiedemann–Franz law, which states that the ratio of thermal conductivity to the electrical conductivity is proportional to the temperature. The thermal disorder in the metallic lattice increases the electrical resistivity of the material, producing a temperature dependence for electric current.
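The Wiedemann–Franz law can be written as κ/σ = L·T. The sketch below assumes the free-electron (Sommerfeld) value of the proportionality constant, L = (π²/3)(k_B/e)², and an approximate room-temperature conductivity for copper; neither figure comes from the text.

```python
import math

BOLTZMANN = 1.380649e-23    # J/K
E_CHARGE = 1.602176634e-19  # C

# Free-electron (Sommerfeld) Lorenz number, in W*ohm/K^2.
LORENZ_NUMBER = (math.pi ** 2 / 3) * (BOLTZMANN / E_CHARGE) ** 2


def thermal_conductivity(electrical_conductivity, temperature):
    """Estimate thermal conductivity in W/(m*K) from kappa = L * sigma * T."""
    return LORENZ_NUMBER * electrical_conductivity * temperature


# Assumed inputs: copper, sigma of roughly 5.96e7 S/m at 293 K.
kappa_copper = thermal_conductivity(5.96e7, 293.0)

print(f"Lorenz number: {LORENZ_NUMBER:.3e} W*ohm/K^2")
print(f"estimated thermal conductivity of copper: {kappa_copper:.0f} W/(m*K)")
```

The estimate lands within roughly ten percent of the commonly quoted value of about 400 W/(m·K) for copper, which is the level of agreement the law typically gives for good metals.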

When cooled below a point called the critical temperature, materials can undergo a phase transition in which they lose all resistivity to electric current, in a process known as superconductivity. In BCS theory, pairs of electrons called Cooper pairs have their motion coupled to nearby matter via lattice vibrations called phonons, thereby avoiding the collisions with atoms that normally create electrical resistance. (Cooper pairs have a radius of roughly 100 nm, so they can overlap each other.) However, the mechanism by which higher temperature superconductors operate remains uncertain.

Electrons inside conducting solids, which are quasi-particles themselves, when tightly confined at temperatures close to absolute zero, behave as though they had split into three other quasiparticles: spinons, orbitons and holons. The spinon carries the spin and magnetic moment, the orbiton carries the orbital location, and the holon carries the electrical charge.

Motion and energy

According to Einstein's theory of special relativity, as an electron's speed approaches the speed of light, from an observer's point of view its relativistic mass increases, thereby making it more and more difficult to accelerate it from within the observer's frame of reference. The speed of an electron can approach, but never reach, the speed of light in vacuum, c. However, when relativistic electrons—that is, electrons moving at a speed close to c—are injected into a dielectric medium such as water, where the local speed of light is significantly less than c, the electrons temporarily travel faster than light in the medium. As they interact with the medium, they generate a faint light called Cherenkov radiation.

The effects of special relativity are based on a quantity known as the Lorentz factor, defined as $\gamma = 1/\sqrt{1 - v^2/c^2}$, where v is the speed of the particle. The kinetic energy $K_e$ of an electron moving with velocity v is:

$$K_e = (\gamma - 1)\, m_e c^2,$$

where $m_e$ is the mass of the electron. For example, the Stanford linear accelerator can accelerate an electron to roughly 51 GeV. Since an electron behaves as a wave, at a given velocity it has a characteristic de Broglie wavelength. This is given by λe = h/p where h is the Planck constant and p is the momentum. For the 51 GeV electron above, the wavelength is about 2.4×10⁻¹⁷ m, small enough to explore structures well below the size of an atomic nucleus.
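The quoted wavelength can be reproduced with a few lines of arithmetic. This is a sketch that assumes the 51 GeV figure is the electron's kinetic energy and uses the relativistic relation (pc)² = E² − (mₑc²)²; the function names are illustrative.

```python
import math

C = 299792458.0                         # speed of light, m/s
H_EV_S = 4.135667696e-15                # Planck constant in eV*s
ELECTRON_REST_ENERGY_EV = 0.51099895e6  # electron rest energy in eV


def lorentz_factor(v):
    """Lorentz factor for a speed v in m/s (v must be below C)."""
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)


def kinetic_energy_ev(v):
    """Relativistic kinetic energy (gamma - 1) * m_e * c^2, in eV."""
    return (lorentz_factor(v) - 1.0) * ELECTRON_REST_ENERGY_EV


def de_broglie_wavelength(kinetic_ev):
    """de Broglie wavelength h/p in metres for a given kinetic energy in eV."""
    total = kinetic_ev + ELECTRON_REST_ENERGY_EV
    pc = math.sqrt(total ** 2 - ELECTRON_REST_ENERGY_EV ** 2)  # momentum times c, in eV
    return H_EV_S * C / pc


kinetic = 51e9  # assumed to be the kinetic energy, in eV
gamma = kinetic / ELECTRON_REST_ENERGY_EV + 1.0
wavelength = de_broglie_wavelength(kinetic)

print(f"Lorentz factor: {gamma:.3e}")
print(f"de Broglie wavelength: {wavelength:.2e} m")
```

At this energy the rest energy is negligible, so the result is almost identical whether 51 GeV is read as the kinetic or the total energy.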

Formation

The Big Bang theory is the most widely accepted scientific theory to explain the early stages in the evolution of the Universe. For the first millisecond of the Big Bang, the temperatures were over 10 billion kelvins and photons had mean energies over a million electronvolts. These photons were sufficiently energetic that they could react with each other to form pairs of electrons and positrons. Likewise, positron-electron pairs annihilated each other and emitted energetic photons:

$$\gamma + \gamma \leftrightarrow e^+ + e^-$$

An equilibrium between electrons, positrons and photons was maintained during this phase of the evolution of the Universe. After 15 seconds had passed, however, the temperature of the universe dropped below the threshold where electron-positron formation could occur. Most of the surviving electrons and positrons annihilated each other, releasing gamma radiation that briefly reheated the universe.
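The link between temperature and photon energy in this epoch can be sketched numerically. The calculation assumes a blackbody photon gas, for which the mean photon energy is about 2.70 k_B·T (the factor π⁴/(30·ζ(3)) is a standard result, not a figure from the text), and compares it with the electron rest energy.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5     # Boltzmann constant in eV/K
ELECTRON_REST_ENERGY_EV = 0.51099895e6  # electron rest energy in eV
ZETA_3 = 1.2020569032                   # Riemann zeta(3), Apery's constant

# Mean photon energy of a blackbody in units of k_B*T (assumed spectrum).
MEAN_PHOTON_FACTOR = math.pi ** 4 / (30 * ZETA_3)


def thermal_energy_ev(temperature):
    """Characteristic thermal energy k_B * T in eV."""
    return BOLTZMANN_EV_PER_K * temperature


temperature = 1.0e10  # kelvins, the figure quoted for the first millisecond
kt = thermal_energy_ev(temperature)
mean_photon_energy = MEAN_PHOTON_FACTOR * kt

# Temperature at which k_B*T equals the electron rest energy.
threshold_temperature = ELECTRON_REST_ENERGY_EV / BOLTZMANN_EV_PER_K

print(f"k_B*T at 1e10 K: {kt / 1e6:.2f} MeV")
print(f"mean photon energy: {mean_photon_energy / 1e6:.2f} MeV")
print(f"k_B*T = m_e*c^2 at about {threshold_temperature:.2e} K")
```

Pairs can still form somewhat below this threshold temperature because the high-energy tail of the photon distribution exceeds the rest energy, so the value is only a rough marker for when pair creation switches off.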

For reasons that remain uncertain, during the annihilation process there was an excess in the number of particles over antiparticles. Hence, about one electron for every billion electron-positron pairs survived. This excess matched the excess of protons over antiprotons, in a condition known as baryon asymmetry, resulting in a net charge of zero for the universe. The surviving protons and neutrons began to participate in reactions with each other in the process known as nucleosynthesis, forming isotopes of hydrogen and helium, with trace amounts of lithium. This process peaked after about five minutes. Any leftover neutrons underwent negative beta decay with a half-life of about a thousand seconds, releasing a proton and electron in the process:

$$n \rightarrow p + e^- + \bar{\nu}_e$$

For about the next 300,000–400,000 years, the excess electrons remained too energetic to bind with atomic nuclei. What followed is a period known as recombination, when neutral atoms were formed and the expanding universe became transparent to radiation.

Roughly one million years after the Big Bang, the first generation of stars began to form. Within a star, stellar nucleosynthesis results in the production of positrons from the fusion of atomic nuclei. These antimatter particles immediately annihilate with electrons, releasing gamma rays. The net result is a steady reduction in the number of electrons, and a matching increase in the number of neutrons. However, the process of stellar evolution can result in the synthesis of radioactive isotopes. Selected isotopes can subsequently undergo negative beta decay, emitting an electron and antineutrino from the nucleus. An example is the cobalt-60 (⁶⁰Co) isotope, which decays to form nickel-60 (⁶⁰Ni).

At the end of its lifetime, a star with more than about 20 solar masses can undergo gravitational collapse to form a black hole. According to classical physics, these massive stellar objects exert a gravitational attraction that is strong enough to prevent anything, even electromagnetic radiation, from escaping past the Schwarzschild radius. However, quantum mechanical effects are believed to potentially allow the emission of Hawking radiation at this distance. Electrons (and positrons) are thought to be created at the event horizon of these stellar remnants.

When a pair of virtual particles (such as an electron and positron) is created in the vicinity of the event horizon, random spatial positioning might result in one of them appearing on the exterior; this process is called quantum tunnelling. The gravitational potential of the black hole can then supply the energy that transforms this virtual particle into a real particle, allowing it to radiate away into space. In exchange, the other member of the pair is given negative energy, which results in a net loss of mass-energy by the black hole. The rate of Hawking radiation increases with decreasing mass, eventually causing the black hole to evaporate away until, finally, it explodes.

Cosmic rays are particles traveling through space with high energies. Energy events as high as 3.0×10²⁰ eV have been recorded. When these particles collide with nucleons in the Earth's atmosphere, a shower of particles is generated, including pions. More than half of the cosmic radiation observed from the Earth's surface consists of muons. The particle called a muon is a lepton produced in the upper atmosphere by the decay of a pion.

A muon, in turn, can decay to form an electron or positron.

Observation

Remote observation of electrons requires detection of their radiated energy. For example, in high-energy environments such as the corona of a star, free electrons form a plasma that radiates energy due to Bremsstrahlung radiation. Electron gas can undergo plasma oscillation, which consists of waves caused by synchronized variations in electron density, and these produce energy emissions that can be detected by using radio telescopes.

The frequency of a photon is proportional to its energy. As a bound electron transitions between different energy levels of an atom, it absorbs or emits photons at characteristic frequencies. For instance, when atoms are irradiated by a source with a broad spectrum, distinct dark lines appear in the spectrum of transmitted radiation in places where the corresponding frequency is absorbed by the atom's electrons. Each element or molecule displays a characteristic set of spectral lines, such as the hydrogen spectral series. When detected, spectroscopic measurements of the strength and width of these lines allow the composition and physical properties of a substance to be determined.
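As an illustration of characteristic spectral lines, the sketch below computes the first few lines of the hydrogen Balmer series. It assumes the simple Bohr-model level energies Eₙ = −13.6 eV/n² with an infinitely heavy nucleus, which is an approximation brought in for the example and not a formula from the text.

```python
RYDBERG_ENERGY_EV = 13.605693123  # hydrogen ground-state binding energy (infinite nuclear mass)
HC_EV_NM = 1239.841984            # Planck constant times speed of light, in eV*nm


def level_energy(n):
    """Bohr-model energy of level n in eV (negative for bound states)."""
    return -RYDBERG_ENERGY_EV / n ** 2


def transition_wavelength_nm(n_upper, n_lower):
    """Vacuum wavelength in nm of the photon emitted when dropping from n_upper to n_lower."""
    photon_energy = level_energy(n_upper) - level_energy(n_lower)
    return HC_EV_NM / photon_energy


# Balmer series: transitions ending on n = 2.
balmer_lines = {n: transition_wavelength_nm(n, 2) for n in range(3, 7)}

for n, wavelength in balmer_lines.items():
    print(f"{n} -> 2: {wavelength:.1f} nm")
```

The computed lines fall in the visible range; measured values differ slightly because of the finite proton mass and finer corrections that this model ignores.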

In laboratory conditions, the interactions of individual electrons can be observed by means of particle detectors, which allow measurement of specific properties such as energy, spin and charge. The development of the Paul trap and Penning trap allows charged particles to be contained within a small region for long durations. This enables precise measurements of the particle properties. For example, in one instance a Penning trap was used to contain a single electron for a period of 10 months. The magnetic moment of the electron was measured to a precision of eleven digits, which, in 1980, was a greater accuracy than for any other physical constant.

The first video images of an electron's energy distribution were captured by a team at Lund University in Sweden in February 2008. The scientists used extremely short flashes of light, called attosecond pulses, which allowed an electron's motion to be observed for the first time.

The distribution of the electrons in solid materials can be visualized by angle-resolved photoemission spectroscopy (ARPES). This technique employs the photoelectric effect to measure the reciprocal space—a mathematical representation of periodic structures that is used to infer the original structure. ARPES can be used to determine the direction, speed and scattering of electrons within the material.

Plasma applications

Particle beams

Electron beams are used in welding. They allow energy densities up to 10⁷ W·cm⁻² across a narrow focus diameter of 0.1–1.3 mm and usually require no filler material. This welding technique must be performed in a vacuum to prevent the electrons from interacting with the gas before reaching their target, and it can be used to join conductive materials that would otherwise be considered unsuitable for welding.

Electron-beam lithography (EBL) is a method of etching semiconductors at resolutions smaller than a micrometer. This technique is limited by high costs, slow performance, the need to operate the beam in the vacuum and the tendency of the electrons to scatter in solids. The last problem limits the resolution to about 10 nm. For this reason, EBL is primarily used for the production of small numbers of specialized integrated circuits.

Electron beam processing is used to irradiate materials in order to change their physical properties or sterilize medical and food products. Under intensive irradiation, electron beams fluidise or quasi-melt glasses without a significant increase of temperature: for example, intensive electron radiation decreases the viscosity by many orders of magnitude and lowers its activation energy stepwise.

Linear particle accelerators generate electron beams for treatment of superficial tumors in radiation therapy. Electron therapy can treat such skin lesions as basal-cell carcinomas because an electron beam only penetrates to a limited depth before being absorbed, typically up to 5 cm for electron energies in the range 5–20 MeV. An electron beam can be used to supplement the treatment of areas that have been irradiated by X-rays.

Particle accelerators use electric fields to propel electrons and their antiparticles to high energies. These particles emit synchrotron radiation as they pass through magnetic fields. The dependency of the intensity of this radiation upon spin polarizes the electron beam, a process known as the Sokolov–Ternov effect. Polarized electron beams can be useful for various experiments. Synchrotron radiation can also cool the electron beams to reduce the momentum spread of the particles. Once the electron and positron beams have been accelerated to the required energies, they are collided; particle detectors observe the resulting energy emissions, which are studied in particle physics.

Imaging

Low-energy electron diffraction (LEED) is a method of bombarding a crystalline material with a collimated beam of electrons and then observing the resulting diffraction patterns to determine the structure of the material. The required energy of the electrons is typically in the range 20–200 eV. The reflection high-energy electron diffraction (RHEED) technique uses the reflection of a beam of electrons fired at various low angles to characterize the surface of crystalline materials. The beam energy is typically in the range 8–20 keV and the angle of incidence is 1–4°.

The electron microscope directs a focused beam of electrons at a specimen. Some electrons change their properties, such as movement direction, angle, and relative phase and energy as the beam interacts with the material. Microscopists can record these changes in the electron beam to produce atomically resolved images of the material. In blue light, conventional optical microscopes have a diffraction-limited resolution of about 200 nm. By comparison, electron microscopes are limited by the de Broglie wavelength of the electron. This wavelength, for example, is equal to 0.0037 nm for electrons accelerated across a 100,000-volt potential. The Transmission Electron Aberration-Corrected Microscope is capable of sub-0.05 nm resolution, which is more than enough to resolve individual atoms. This capability makes the electron microscope a useful laboratory instrument for high resolution imaging. However, electron microscopes are expensive instruments that are costly to maintain.
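The 0.0037 nm figure can be checked with the relativistic de Broglie relation. This sketch assumes the electron starts at rest and gains kinetic energy e·V from the accelerating potential; the function name is illustrative.

```python
import math

H = 6.62607015e-34                # Planck constant, J*s
C = 299792458.0                   # speed of light, m/s
ELECTRON_MASS = 9.1093837015e-31  # kg
E_CHARGE = 1.602176634e-19        # C


def electron_wavelength(voltage):
    """de Broglie wavelength in metres of an electron accelerated from rest through `voltage` volts."""
    kinetic = E_CHARGE * voltage        # kinetic energy in joules
    rest = ELECTRON_MASS * C ** 2       # rest energy in joules
    # Relativistic momentum from (pc)^2 = K^2 + 2*K*m*c^2.
    momentum = math.sqrt(kinetic ** 2 + 2 * kinetic * rest) / C
    return H / momentum


wavelength_nm = electron_wavelength(100_000) * 1e9
print(f"wavelength at 100 kV: {wavelength_nm:.4f} nm")
```

Dropping the relativistic term would give roughly 0.0039 nm instead, so the correction is already noticeable at typical microscope voltages.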

Two main types of electron microscopes exist: transmission and scanning. Transmission electron microscopes function like overhead projectors, with a beam of electrons passing through a slice of material then being projected by lenses on a photographic slide or a charge-coupled device. Scanning electron microscopes raster a finely focused electron beam, as in a TV set, across the studied sample to produce the image. Magnifications range from 100× to 1,000,000× or higher for both microscope types. The scanning tunneling microscope uses quantum tunneling of electrons from a sharp metal tip into the studied material and can produce atomically resolved images of its surface.

Other applications

In the free-electron laser (FEL), a relativistic electron beam passes through a pair of undulators that contain arrays of dipole magnets whose fields point in alternating directions. The electrons emit synchrotron radiation that coherently interacts with the same electrons to strongly amplify the radiation field at the resonance frequency. An FEL can emit coherent high-brilliance electromagnetic radiation with a wide range of frequencies, from microwaves to soft X-rays. These devices are used in manufacturing, communication, and in medical applications, such as soft tissue surgery.

Electrons are important in cathode-ray tubes, which have been extensively used as display devices in laboratory instruments, computer monitors and television sets. In a photomultiplier tube, every photon striking the photocathode initiates an avalanche of electrons that produces a detectable current pulse. Vacuum tubes use the flow of electrons to manipulate electrical signals, and they played a critical role in the development of electronics technology. However, they have been largely supplanted by solid-state devices such as the transistor.