The term interdisciplinary is applied within education and training pedagogies to describe studies that use the methods and insights of several established disciplines or traditional fields of study. Interdisciplinarity involves researchers, students, and teachers in the goals of connecting and integrating several academic schools of thought, professions, or technologies, along with their specific perspectives, in the pursuit of a common task. The epidemiology of HIV/AIDS and the study of global warming, for example, require an understanding of diverse disciplines to solve complex problems. The term may also be applied where the subject is felt to have been neglected or even misrepresented in the traditional disciplinary structure of research institutions, for example, women's studies or ethnic area studies. Interdisciplinarity can likewise be applied to complex subjects that can only be understood by combining the perspectives of two or more fields.

The adjective interdisciplinary is most often used in educational circles when researchers from two or more disciplines pool their approaches and modify them so that they are better suited to the problem at hand, including the case of the team-taught course where students are required to understand a given subject in terms of multiple traditional disciplines. For example, the subject of land use may appear differently when examined by different disciplines, such as biology, chemistry, economics, geography, and politics.

Development

Although "interdisciplinary" and "interdisciplinarity" are frequently viewed as twentieth-century terms, the concept has historical antecedents, most notably in Greek philosophy. Julie Thompson Klein attests that "the roots of the concepts lie in a number of ideas that resonate through modern discourse—the ideas of a unified science, general knowledge, synthesis and the integration of knowledge", while Giles Gunn says that Greek historians and dramatists took elements from other realms of knowledge (such as medicine or philosophy) to further understand their own material. The building of Roman roads required men who understood surveying, material science, logistics and several other disciplines. Any broadminded humanist project involves interdisciplinarity, and history offers many such cases, for example Leibniz's seventeenth-century project to create a system of universal justice, which required linguistics, economics, management, ethics, law philosophy, politics, and even sinology.

Interdisciplinary programs sometimes arise from a shared conviction that the traditional disciplines are unable or unwilling to address an important problem. For example, social science disciplines such as anthropology and sociology paid little attention to the social analysis of technology throughout most of the twentieth century. As a result, many social scientists with interests in technology have joined science, technology and society programs, which are typically staffed by scholars drawn from numerous disciplines. Interdisciplinary programs may also arise from new research developments, such as nanotechnology, which cannot be addressed without combining the approaches of two or more disciplines. Examples include quantum information processing, an amalgamation of quantum physics and computer science, and bioinformatics, which combines molecular biology with computer science. Sustainable development as a research area deals with problems requiring analysis and synthesis across economic, social and environmental spheres, often through an integration of multiple social and natural science disciplines. Interdisciplinary research is also key to the study of health sciences, for example in studying optimal solutions to diseases. Some institutions of higher education offer accredited degree programs in Interdisciplinary Studies.

At another level, interdisciplinarity is seen as a remedy to the harmful effects of excessive specialization and isolation in information silos. On some views, however, interdisciplinarity is entirely indebted to those who specialize in one field of study—that is, without specialists, interdisciplinarians would have no information and no leading experts to consult. Others place the focus of interdisciplinarity on the need to transcend disciplines, viewing excessive specialization as problematic both epistemologically and politically. When interdisciplinary collaboration or research results in new solutions to problems, much information is given back to the various disciplines involved. Therefore, both disciplinarians and interdisciplinarians may be seen in complementary relation to one another.

Barriers

Because most participants in interdisciplinary ventures were trained in traditional disciplines, they must learn to appreciate differences of perspectives and methods. For example, a discipline that places more emphasis on quantitative rigor may produce practitioners who regard their training as more scientific than that of others; in turn, colleagues in "softer" disciplines may associate quantitative approaches with difficulty in grasping the broader dimensions of a problem and with lower rigor in theoretical and qualitative argumentation. An interdisciplinary program may not succeed if its members remain stuck in their disciplines (and in disciplinary attitudes). Those who lack experience in interdisciplinary collaborations may also not fully appreciate the intellectual contribution of colleagues from other disciplines. From the disciplinary perspective, however, much interdisciplinary work may be seen as "soft", lacking in rigor, or ideologically motivated; these beliefs place barriers in the career paths of those who choose interdisciplinary work. For example, interdisciplinary grant applications are often refereed by peer reviewers drawn from established disciplines, so interdisciplinary researchers may experience difficulty getting funding for their research. In addition, untenured researchers know that, when they seek promotion and tenure, it is likely that some of the evaluators will lack commitment to interdisciplinarity. They may fear that making a commitment to interdisciplinary research will increase the risk of being denied tenure.

Interdisciplinary programs may also fail if they are not given sufficient autonomy. For example, interdisciplinary faculty are usually recruited to a joint appointment, with responsibilities in both an interdisciplinary program (such as women's studies) and a traditional discipline (such as history). If the traditional discipline makes the tenure decisions, new interdisciplinary faculty will be hesitant to commit themselves fully to interdisciplinary work. Other barriers include the generally disciplinary orientation of most scholarly journals, leading to the perception, if not the fact, that interdisciplinary research is hard to publish. In addition, since traditional budgetary practices at most universities channel resources through the disciplines, it becomes difficult to account for a given scholar or teacher's salary and time. During periods of budgetary contraction, the natural tendency to serve the primary constituency (i.e., students majoring in the traditional discipline) makes resources scarce for teaching and research comparatively far from the center of the discipline as traditionally understood. For these same reasons, the introduction of new interdisciplinary programs is often resisted because it is perceived as a competition for diminishing funds.

Due to these and other barriers, interdisciplinary research areas are strongly motivated to become disciplines themselves. If they succeed, they can establish their own research funding programs and make their own tenure and promotion decisions. In so doing, they lower the risk of entry. Examples of former interdisciplinary research areas that have become disciplines, many of them named for their parent disciplines, include neuroscience, cybernetics, biochemistry and biomedical engineering. These new fields are occasionally referred to as "interdisciplines". On the other hand, even though interdisciplinary activities are now a focus of attention for institutions promoting learning and teaching, as well as for organizational and social entities concerned with education, in practice they face complex barriers, serious challenges and criticism. The most important obstacles and challenges faced by interdisciplinary activities in the past two decades can be divided into "professional", "organizational", and "cultural" obstacles.

Interdisciplinary studies and studies of interdisciplinarity

An initial distinction should be made between interdisciplinary studies, which can be found spread across the academy today, and the study of interdisciplinarity, which involves a much smaller group of researchers. The former is instantiated in thousands of research centers across the US and the world. The latter has one US organization, the Association for Interdisciplinary Studies (founded in 1979), and two international organizations, the International Network of Inter- and Transdisciplinarity (founded in 2010) and the Philosophy of/as Interdisciplinarity Network (founded in 2009). The US research institute devoted to the theory and practice of interdisciplinarity, the Center for the Study of Interdisciplinarity at the University of North Texas, was founded in 2008 but has been closed since 1 September 2014 as a result of administrative decisions at the University of North Texas.

An interdisciplinary study is an academic program or process seeking to synthesize broad perspectives, knowledge, skills, interconnections, and epistemology in an educational setting. Interdisciplinary programs may be founded in order to facilitate the study of subjects which have some coherence, but which cannot be adequately understood from a single disciplinary perspective (for example, women's studies or medieval studies). More rarely, and at a more advanced level, interdisciplinarity may itself become the focus of study, in a critique of institutionalized disciplines' ways of segmenting knowledge.

In contrast, studies of interdisciplinarity raise to self-consciousness questions about how interdisciplinarity works, the nature and history of disciplinarity, and the future of knowledge in post-industrial society. Researchers at the Center for the Study of Interdisciplinarity have made the distinction between philosophy 'of' and 'as' interdisciplinarity, the former identifying a new, discrete area within philosophy that raises epistemological and metaphysical questions about the status of interdisciplinary thinking, with the latter pointing toward a philosophical practice that is sometimes called 'field philosophy'.

Perhaps the most common complaint regarding interdisciplinary programs, by supporters and detractors alike, is the lack of synthesis—that is, students are provided with multiple disciplinary perspectives but are not given effective guidance in resolving the conflicts and achieving a coherent view of the subject. Others have argued that the very idea of synthesis or integration of disciplines presupposes questionable politico-epistemic commitments. Critics of interdisciplinary programs feel that the ambition is simply unrealistic, given the knowledge and intellectual maturity of all but the exceptional undergraduate; some defenders concede the difficulty, but insist that cultivating interdisciplinarity as a habit of mind, even at that level, is both possible and essential to the education of informed and engaged citizens and leaders capable of analyzing, evaluating, and synthesizing information from multiple sources in order to render reasoned decisions.

While much has been written on the philosophy and promise of interdisciplinarity in academic programs and professional practice, social scientists are increasingly interrogating academic discourses on interdisciplinarity, as well as how interdisciplinarity actually works, and fails to work, in practice. Some have shown, for example, that certain interdisciplinary enterprises that aim to serve society can produce deleterious outcomes for which no one can be held to account.

Politics of interdisciplinary studies

Since 1998, there has been an ascendancy in the value of interdisciplinary research and teaching and a growth in the number of bachelor's degrees awarded at U.S. universities classified as multi- or interdisciplinary studies. The number of interdisciplinary bachelor's degrees awarded annually rose from 7,000 in 1973 to 30,000 a year by 2005, according to data from the National Center for Education Statistics (NCES). In addition, educational leaders from the Boyer Commission to Carnegie's President Vartan Gregorian to Alan I. Leshner, CEO of the American Association for the Advancement of Science, have advocated for interdisciplinary rather than disciplinary approaches to problem-solving in the 21st century. This has been echoed by federal funding agencies, particularly the National Institutes of Health under the direction of Elias Zerhouni, who has advocated that grant proposals be framed more as interdisciplinary collaborative projects than single-researcher, single-discipline ones.

At the same time, many thriving, longstanding bachelor's programs in interdisciplinary studies, some in existence for 30 or more years, have been closed down in spite of healthy enrollment. Examples include Arizona International (formerly part of the University of Arizona), the School of Interdisciplinary Studies at Miami University, and the Department of Interdisciplinary Studies at Wayne State University; others, such as the Department of Interdisciplinary Studies at Appalachian State University and George Mason University's New Century College, have been cut back. Stuart Henry has seen this trend as part of the hegemony of the disciplines in their attempt to recolonize the experimental knowledge production of otherwise marginalized fields of inquiry. This appears to stem from the perception that the ascendancy of interdisciplinary studies threatens traditional academia.

Examples

- Communication science: Communication studies takes up theories, models, concepts, etc. of other, independent disciplines such as sociology, political science and economics and thus decisively develops them.

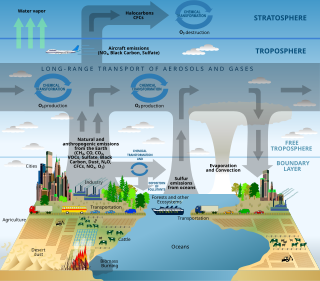

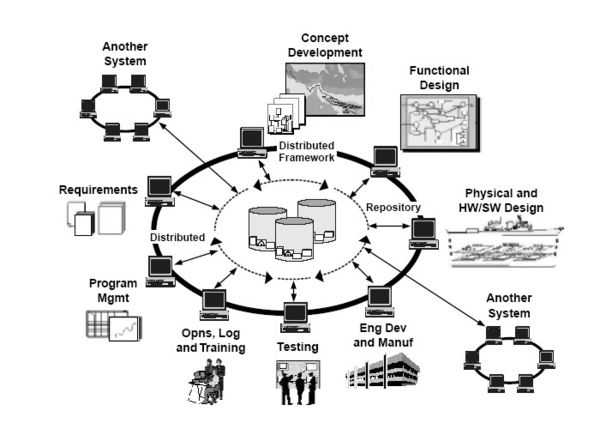

- Environmental science: Environmental science is an interdisciplinary earth science aimed at addressing environmental issues such as global warming and pollution, and involves the use of a wide range of scientific disciplines including geology, chemistry, physics, ecology, and oceanography. Faculty members of environmental programs often collaborate in interdisciplinary teams to solve complex global environmental problems. Those who study areas of environmental policy such as environmental law, sustainability, and environmental justice, may also seek knowledge in the environmental sciences to better develop their expertise and understanding in their fields.

- Knowledge management: The knowledge management discipline exists as a cluster of divergent schools of thought under an overarching knowledge management umbrella, building on works in computer science, economics, human resource management, information systems, organizational behavior, philosophy, psychology, and strategic management.

- Materials science: Materials science is a field that combines the scientific and engineering aspects of materials, particularly solids. It covers the design, discovery and application of new materials by incorporating elements of physics, chemistry, and engineering.

- Provenance research: Interdisciplinary research comes into play when clarifying the path of artworks into public and private art collections and also in relation to human remains in natural history collections.

- Sports science: Sport science is an interdisciplinary science that researches the problems and manifestations in the field of sport and movement in cooperation with a number of other sciences, such as sociology, ethics, biology, medicine, biomechanics or pedagogy.

- Transport sciences: Transport science is a field that deals with the problems and events of the world of transport and cooperates with the specialised legal, ecological, technical, psychological and pedagogical disciplines in analysing the movements of people, goods, and messages that characterise it.

- Venture research: Venture research is an interdisciplinary research area located in the human sciences that deals with the conscious entering into and experiencing of borderline situations. For this purpose, the findings of evolutionary theory, cultural anthropology, social sciences, behavioural research, differential psychology, ethics or pedagogy are cooperatively processed and evaluated.

Historical examples

There are many examples of a particular idea arising in different disciplines at almost the same time. One case is the shift from the approach of focusing on "specialized segments of attention" (adopting one particular perspective), to the idea of "instant sensory awareness of the whole", an attention to the "total field", a "sense of the whole pattern, of form and function as a unity", an "integral idea of structure and configuration". This has happened in painting (with cubism), physics, poetry, communication and educational theory. According to Marshall McLuhan, this paradigm shift was due to the passage from an era shaped by mechanization, which brought sequentiality, to the era shaped by the instant speed of electricity, which brought simultaneity.

Efforts to simplify and defend the concept

An article in the Social Science Journal attempts to provide a simple, common-sense definition of interdisciplinarity, bypassing the difficulties of defining that concept and obviating the need for such related concepts as transdisciplinarity, pluridisciplinarity, and multidisciplinarity:

To begin with, a discipline can be conveniently defined as any comparatively self-contained and isolated domain of human experience which possesses its own community of experts. Interdisciplinarity is best seen as bringing together distinctive components of two or more disciplines. In academic discourse, interdisciplinarity typically applies to four realms: knowledge, research, education, and theory. Interdisciplinary knowledge involves familiarity with components of two or more disciplines. Interdisciplinary research combines components of two or more disciplines in the search or creation of new knowledge, operations, or artistic expressions. Interdisciplinary education merges components of two or more disciplines in a single program of instruction. Interdisciplinary theory takes interdisciplinary knowledge, research, or education as its main objects of study.

In turn, interdisciplinary richness of any two instances of knowledge, research, or education can be ranked by weighing four variables: number of disciplines involved, the "distance" between them, the novelty of any particular combination, and their extent of integration.

Interdisciplinary knowledge and research are important because:

- "Creativity often requires interdisciplinary knowledge.

- Immigrants often make important contributions to their new field.

- Disciplinarians often commit errors which can be best detected by people familiar with two or more disciplines.

- Some worthwhile topics of research fall in the interstices among the traditional disciplines.

- Many intellectual, social, and practical problems require interdisciplinary approaches.

- Interdisciplinary knowledge and research serve to remind us of the unity-of-knowledge ideal.

- Interdisciplinarians enjoy greater flexibility in their research.

- More so than narrow disciplinarians, interdisciplinarians often treat themselves to the intellectual equivalent of traveling in new lands.

- Interdisciplinarians may help breach communication gaps in the modern academy, thereby helping to mobilize its enormous intellectual resources in the cause of greater social rationality and justice.

- By bridging fragmented disciplines, interdisciplinarians might play a role in the defense of academic freedom."

Quotations

"The modern mind divides, specializes, thinks in categories: the Greek instinct was the opposite, to take the widest view, to see things as an organic whole [...]. The Olympic games were designed to test the arete of the whole man, not a merely specialized skill [...]. The great event was the pentathlon, if you won this, you were a man. Needless to say, the Marathon race was never heard of until modern times: the Greeks would have regarded it as a monstrosity."

"Previously, men could be divided simply into the learned and the ignorant, those more or less the one, and those more or less the other. But your specialist cannot be brought in under either of these two categories. He is not learned, for he is formally ignorant of all that does not enter into his specialty; but neither is he ignorant, because he is 'a scientist,' and 'knows' very well his own tiny portion of the universe. We shall have to say that he is a learned ignoramus, which is a very serious matter, as it implies that he is a person who is ignorant, not in the fashion of the ignorant man, but with all the petulance of one who is learned in his own special line."

"It is the custom among those who are called 'practical' men to condemn any man capable of a wide survey as a visionary: no man is thought worthy of a voice in politics unless he ignores or does not know nine-tenths of the most important relevant facts."

![Star formation[13]](https://upload.wikimedia.org/wikipedia/commons/thumb/c/c7/Star_formation.jpg/120px-Star_formation.jpg)

![Gravitational waves[14]](https://upload.wikimedia.org/wikipedia/commons/thumb/a/ac/Gravitywaves.JPG/120px-Gravitywaves.JPG)

![Climate visualization[15]](https://upload.wikimedia.org/wikipedia/commons/thumb/2/23/Climate_visualization.jpg/120px-Climate_visualization.jpg)