https://en.wikipedia.org/wiki/Encoding_(memory)

Memory has the ability to encode, store and recall information. Memories give an organism the capability to learn and adapt from previous experiences as well as to build relationships. Encoding allows a perceived item of use or interest to be converted into a construct that can be stored within the brain and recalled later from long-term memory. Working memory stores information for immediate use or manipulation, a process aided by linking the new information to items already stored in an individual's long-term memory.

History

The study of encoding is still relatively new and unexplored, but its origins date back to ancient philosophers such as Aristotle and Plato. A major figure in the history of encoding is Hermann Ebbinghaus (1850–1909), a pioneer in the field of memory research. Using himself as a subject, he studied how we learn and forget information by repeating lists of nonsense syllables to the rhythm of a metronome until they were committed to memory. These experiments led him to propose the learning curve. He used relatively meaningless syllables so that prior associations with meaningful words would not influence learning. He found that lists that allowed associations to be made, and whose meaning was apparent, were easier to recall. Ebbinghaus' results paved the way for experimental research on memory and other mental processes.

In the early 20th century, further progress in memory research was made. Ivan Pavlov began research on classical conditioning, demonstrating that an association can be created between two unrelated stimuli. In 1932, Frederic Bartlett proposed the idea of mental schemas. This model proposed that whether new information would be encoded depended on its consistency with prior knowledge (mental schemas). It also suggested that information not present at the time of encoding would be added to memory if it was based on schematic knowledge of the world. In this way, encoding was found to be influenced by prior knowledge. With the advance of Gestalt theory came the realization that memory for encoded information was often perceived as different from the stimuli that triggered it, and that it was also influenced by the context in which the stimuli were embedded.

With advances in technology, the field of neuropsychology emerged and with it a biological basis for theories of encoding. In 1949, Donald Hebb looked at the neuroscience of encoding and proposed the principle often summarized as "neurons that fire together wire together," implying that encoding occurs as connections between neurons are established through repeated use. The 1950s and 1960s saw a shift to the information-processing approach to memory, inspired by the invention of computers, followed by the initial suggestion that encoding was the process by which information is entered into memory. In 1956, George Armitage Miller published "The Magical Number Seven, Plus or Minus Two," arguing that short-term memory is limited to about seven items, plus or minus two. This number was later amended when studies on chunking revealed that seven, plus or minus two, could also refer to seven "packets of information." In 1974, Alan Baddeley and Graham Hitch proposed their model of working memory, which consists of the central executive, the visuo-spatial sketchpad, and the phonological loop as a method of encoding. In 2000, Baddeley added the episodic buffer. Separately, Endel Tulving (1983) proposed the idea of encoding specificity, again identifying context as an influence on encoding.

Types

There are two main approaches to coding information: the physiological approach, and the mental approach. The physiological approach looks at how a stimulus is represented by neurons firing in the brain, while the mental approach looks at how the stimulus is represented in the mind.

There are many types of mental encoding, such as visual, elaborative, organizational, acoustic, and semantic encoding. However, this list is not exhaustive.

Visual encoding

Visual encoding is the process of converting images and visual sensory information into memory stored in the brain. This means that people can convert new information that they have stored into mental pictures (Harrison, C. & Semin, A., 2009, Psychology, New York, p. 222). Visual sensory information is temporarily stored within our iconic memory and working memory before being encoded into permanent long-term storage. Baddeley's model of working memory suggests that visual information is stored in the visuo-spatial sketchpad, which is connected to the central executive, a key area of working memory. The amygdala is another complex structure that has an important role in visual encoding. It accepts visual input in addition to input from other systems, and encodes the positive or negative values of conditioned stimuli.

Elaborative encoding

Elaborative encoding is the process of actively relating new information to knowledge that is already in memory. Memories are a combination of old and new information, so the nature of any particular memory depends as much on the old information already in our memories as it does on the new information coming in through our senses. In other words, how we remember something depends on how we think about it at the time. Many studies have shown that long-term retention is greatly enhanced by elaborative encoding.

Semantic encoding

Semantic encoding is the processing and encoding of sensory input that has particular meaning or can be applied to a context. Various strategies, such as chunking and mnemonics, can be applied to aid encoding and, in some cases, to allow deep processing and optimize retrieval.

Words studied under semantic (deep) encoding conditions are better recalled than words studied under either easy or hard nonsemantic (shallow) encoding conditions, with response time being the deciding variable. Brodmann areas 45, 46, and 47 (the left inferior prefrontal cortex, or LIPC) showed significantly more activation during semantic encoding conditions than during nonsemantic encoding conditions, regardless of the difficulty of the nonsemantic encoding task. The same area that shows increased activation during initial semantic encoding also displays decreasing activation with repeated semantic encoding of the same words. This suggests that the decrease in activation with repetition is process-specific, occurring when words are semantically reprocessed but not when they are nonsemantically reprocessed. Lesion and neuroimaging studies suggest that the orbitofrontal cortex is responsible for initial encoding and that activity in the left lateral prefrontal cortex correlates with the semantic organization of encoded information.

Acoustic encoding

Acoustic encoding is the encoding of auditory impulses. According to Baddeley, processing of auditory information is aided by the phonological loop, which allows input within our echoic memory to be subvocally rehearsed in order to facilitate remembering. When we hear a word, we do so by hearing individual sounds, one at a time. Hence the memory of the beginning of a new word is stored in our echoic memory until the whole sound has been perceived and recognized as a word. Studies indicate that lexical, semantic and phonological factors interact in verbal working memory. The phonological similarity effect (PSE) is modified by word concreteness. This emphasizes that verbal working memory performance cannot be attributed exclusively to phonological or acoustic representation but also involves an interaction with linguistic representation. What remains to be seen is whether linguistic representation is expressed at the time of recall, or whether the representational methods used (such as recordings, videos, symbols, etc.) play a more fundamental role in the encoding and preservation of information in memory. The brain relies primarily on acoustic (also called phonological) encoding for short-term storage and primarily on semantic encoding for long-term storage.

Other senses

Tactile encoding is the processing and encoding of how something feels, normally through touch. Neurons in the primary somatosensory cortex (S1) react to vibrotactile stimuli by activating in synchronization with each series of vibrations. Odors and tastes may also be encoded.

Organizational encoding is the process of classifying information according to the associations among a sequence of terms.

Long-Term Potentiation

Encoding is a biological event that begins with perception. Perceived, striking sensations travel to the brain's thalamus, where they are combined into a single experience. The hippocampus is responsible for analyzing these inputs and ultimately deciding whether they will be committed to long-term memory; these various threads of information are stored in various parts of the brain. However, the exact way in which these pieces are identified and recalled later remains unknown.

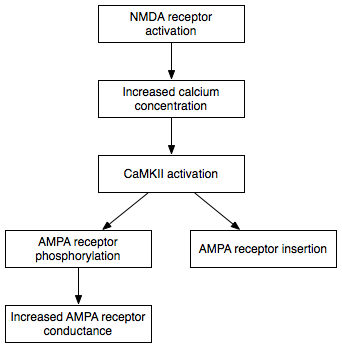

Encoding is achieved using a combination of chemicals and electricity. Neurotransmitters are released when an electrical pulse reaches the synapse, which serves as a connection from nerve cells to other cells. The dendrites receive these impulses with their feathery extensions. A phenomenon called long-term potentiation allows a synapse to increase in strength with increasing numbers of transmitted signals between the two neurons. For that to happen, NMDA receptors, which influence the flow of information between neurons by controlling the initiation of long-term potentiation in most hippocampal pathways, must be activated. Two conditions are required for NMDA receptors to be activated. First, glutamate has to be released and bound to the NMDA receptor site on the postsynaptic neuron. Second, the postsynaptic neuron has to be excited. These cells also organize themselves into groups specializing in different kinds of information processing. Thus, with new experiences the brain creates more connections and may 'rewire'. The brain organizes and reorganizes itself in response to one's experiences, creating new memories prompted by experience, education, or training. Therefore, the way a brain is used is reflected in how it is organized. This ability to reorganize is especially important if part of the brain becomes damaged. Scientists are unsure whether stimuli that we do not recall are filtered out at the sensory phase or after the brain examines their significance.
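The two conditions for NMDA receptor activation can be illustrated with a deliberately simplified toy model. This is a sketch under stated assumptions, not a biophysical simulation: the `Synapse` class, its unitless `weight`, and the fixed `potentiation_step` are hypothetical. It only shows the logic described above, namely that the connection strengthens when glutamate binding and postsynaptic excitation coincide, and not when either occurs alone.

```python
from dataclasses import dataclass


@dataclass
class Synapse:
    """Toy synapse (hypothetical). `weight` is an arbitrary, unitless connection strength."""
    weight: float = 1.0
    potentiation_step: float = 0.1  # hypothetical increase per successful pairing

    def nmda_active(self, glutamate_bound: bool, postsynaptic_excited: bool) -> bool:
        # The receptor acts as a coincidence detector: both conditions are required.
        return glutamate_bound and postsynaptic_excited

    def transmit(self, glutamate_bound: bool, postsynaptic_excited: bool) -> float:
        # Strengthen the connection only when both conditions hold at the same time.
        if self.nmda_active(glutamate_bound, postsynaptic_excited):
            self.weight += self.potentiation_step
        return self.weight


s = Synapse()
s.transmit(True, False)   # glutamate alone: no change
s.transmit(False, True)   # postsynaptic excitation alone: no change
for _ in range(3):
    s.transmit(True, True)  # repeated paired activity: strength grows
print(round(s.weight, 2))  # 1.3
```

Neither condition alone changes the weight; only their coincidence, repeated over several signals, increases it, which mirrors the idea that long-term potentiation strengthens a synapse with increasing numbers of transmitted signals.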

Mapping Activity

Positron emission tomography (PET) demonstrates a consistent functional anatomical blueprint of hippocampal activation during episodic encoding and retrieval. Activation in the hippocampal region associated with episodic memory encoding has been shown to occur in the rostral portion of the region whereas activation associated with episodic memory retrieval occurs in the caudal portions. This is referred to as the Hippocampal memory encoding and retrieval model or HIPER model.

One study used PET to measure cerebral blood flow during encoding and recognition of faces in both young and older participants. Young people displayed increased cerebral blood flow in the right hippocampus and the left prefrontal and temporal cortices during encoding, and in the right prefrontal and parietal cortex during recognition. Elderly people showed no significant activation during encoding in the areas activated in young people; however, they did show right prefrontal activation during recognition. This suggests that, as we grow old, failing memories may be the consequence of a failure to adequately encode stimuli, as reflected in the lack of cortical and hippocampal activation during the encoding process.

Recent findings in studies of patients with post-traumatic stress disorder demonstrate that the amino acid transmitters glutamate and GABA are intimately implicated in the process of factual memory registration, and suggest that the amine neurotransmitters norepinephrine-epinephrine and serotonin are involved in encoding emotional memory.

Molecular Perspective

The process of encoding is not yet well understood; however, key advances have shed light on the nature of these mechanisms. Encoding begins with any novel situation, as the brain will interact with it and draw conclusions from the results of this interaction. These learning experiences are known to trigger a cascade of molecular events leading to the formation of memories. These changes include the modification of neural synapses, modification of proteins, creation of new synapses, activation of gene expression, and new protein synthesis. One study found that high central nervous system levels of acetylcholine during wakefulness aided new memory encoding, while low levels of acetylcholine during slow-wave sleep aided the consolidation of memories. Encoding also occurs on different levels: the first step is short-term memory formation, followed by conversion to a long-term memory, and then a long-term memory consolidation process.

Synaptic Plasticity

Synaptic plasticity is the ability of the brain to strengthen, weaken, destroy and create neural synapses, and it is the basis for learning. These molecular changes determine and indicate the strength of each neural connection. The effect of a learning experience depends on the content of that experience. Reactions that are favored will be reinforced and those that are deemed unfavorable will be weakened. This shows that synaptic modifications can operate in either direction, allowing changes over time depending on the current situation of the organism. In the short term, synaptic changes may include the strengthening or weakening of a connection by modifying preexisting proteins, leading to a change in synapse connection strength. In the long term, entirely new connections may form, or the number of synapses at a connection may be increased or reduced.

The Encoding Process

A significant short-term biochemical change is the covalent modification of pre-existing proteins in order to modify synaptic connections that are already active. This allows data to be conveyed in the short term without consolidating anything for permanent storage. From here, a memory or an association may be chosen to become a long-term memory, or it may be forgotten as the synaptic connections eventually weaken. The switch from short-term to long-term memory is the same for both implicit and explicit memory. This process is regulated by a number of inhibitory constraints, primarily the balance between protein phosphorylation and dephosphorylation. Finally, long-term changes occur that allow consolidation of the target memory. These changes include new protein synthesis, the formation of new synaptic connections, and finally the activation of gene expression in accordance with the new neural configuration. The encoding process has been found to be partially mediated by serotonergic interneurons, specifically with regard to sensitization, as blocking these interneurons prevented sensitization entirely. However, the ultimate consequences of these discoveries have yet to be identified. Furthermore, the learning process is known to recruit a variety of modulatory transmitters in order to create and consolidate memories. These transmitters cause the nucleus to initiate processes required for neuronal growth and long-term memory, mark specific synapses for the capture of long-term processes, regulate local protein synthesis, and even appear to mediate attentional processes required for the formation and recall of memories.

Encoding and Genetics

Human memory, including the process of encoding, is known to be a heritable trait that is controlled by more than one gene. Twin studies suggest that genetic differences are responsible for as much as 50% of the variance seen in memory tasks. Proteins identified in animal studies have been linked directly to a molecular cascade of reactions leading to memory formation, and a sizable number of these proteins are encoded by genes that are also expressed in humans. Variations within these genes, identified in recent human genetic studies, appear to be associated with memory capacity.

Complementary Processes

The idea that the brain is separated into two complementary processing networks (task-positive and task-negative) has recently become an area of increasing interest. The task-positive network deals with externally oriented processing, whereas the task-negative network deals with internally oriented processing. Research indicates that these networks are not exclusive and that some tasks overlap in their activation. A 2009 study showed that encoding-success and novelty-detection activity within the task-positive network overlap significantly, which was taken to reflect a common association with externally oriented processing. It also showed that encoding failure and retrieval success share significant overlap within the task-negative network, indicating a common association with internally oriented processing. Finally, the low level of overlap between encoding-success and retrieval-success activity, and between encoding-failure and novelty-detection activity, indicates opposing modes of processing. In sum, task-positive and task-negative networks can have common associations during the performance of different tasks.

Depth of Processing

Different levels of processing influence how well information is remembered. This idea was first introduced by Craik and Lockhart (1972). They claimed that how well information is remembered depends on the depth at which it is processed, distinguishing mainly between shallow processing and deep processing. According to Craik and Lockhart, the encoding of sensory information would be considered shallow processing, as it is highly automatic and requires very little focus. Deeper-level processing requires more attention to the stimulus and engages more cognitive systems to encode the information. An exception to deep processing is when the individual has been exposed to the stimulus frequently and it has become common in the individual’s life, such as the person’s name. These levels of processing can be illustrated by maintenance and elaborative rehearsal.

Maintenance and Elaborative Rehearsal

Maintenance rehearsal is a shallow form of processing information that involves focusing on an object without thought to its meaning or its association with other objects. For example, repeating a series of numbers is a form of maintenance rehearsal. In contrast, elaborative or relational rehearsal is a process in which one relates new material to information already stored in long-term memory. It is a deep form of processing information and involves thinking about the object's meaning, as well as making connections between the object, past experiences and the other objects of focus. Using the example of numbers, one might associate them with personally significant dates, such as one's parents' birthdays (past experiences), or notice a pattern in the numbers that helps one remember them.

Due to the deeper level of processing that occurs with elaborative rehearsal, it is more effective than maintenance rehearsal in creating new memories. This has been demonstrated by people's lack of knowledge of the details of everyday objects. For example, in one study in which Americans were asked about the orientation of the face on their country's penny, few recalled it with any degree of certainty. Although it is a detail that is often seen, it is not remembered because there is no need to: the penny's color distinguishes it from other coins. The ineffectiveness of maintenance rehearsal (simply being repeatedly exposed to an item) in creating memories has also been found in people's lack of memory for the layout of the digits 0-9 on calculators and telephones.

Maintenance rehearsal has been demonstrated to be important in learning, but its effects can only be demonstrated using indirect methods, such as lexical decision tasks and word stem completion, which are used to assess implicit learning. In general, however, previous learning by maintenance rehearsal is not apparent when memory is tested directly or explicitly with questions like "Is this the word you were shown earlier?"

Intention to Learn

Studies have shown that the intention to learn has no direct effect on memory encoding. Instead, memory encoding is dependent on how deeply each item is encoded, which could be affected by intention to learn, but not exclusively. That is, intention to learn can lead to more effective learning strategies, and consequently, better memory encoding, but if you learn something incidentally (i.e. without intention to learn) but still process and learn the information effectively, it will get encoded just as well as something learnt with intention.

The effects of elaborative rehearsal or deep processing can be attributed to the number of connections made while encoding that increase the number of pathways available for retrieval.

Optimal Encoding

Organization

Organization is key to memory encoding. Researchers have discovered that our minds naturally organize information if the information received is not organized. One natural way information can be organized is through hierarchies. For example, grouping animals into mammals, reptiles, and amphibians creates a hierarchy within the animal kingdom.

Depth of processing is also related to the organization of information. For example, the connections made between the to-be-remembered item, other to-be-remembered items, previous experiences, and context generate retrieval paths for the to-be-remembered item and can act as retrieval cues. These connections impose organization on the to-be-remembered item, making it more memorable.

Visual Images

Another method used to enhance encoding is to associate images with words. Gordon Bower and David Winzenz (1970) demonstrated the use of imagery in encoding in their research using paired-associate learning. Researchers gave participants a list of 15 word pairs, showing each pair for 5 seconds. One group was told to create a mental image in which the two items in each pair were interacting. The other group was told to use maintenance rehearsal to remember the information. When participants were later tested and asked to recall the second word in each pair, researchers found that those who had created visual images of the items interacting remembered over twice as many word pairs as those who used maintenance rehearsal.

Mnemonics

When memorizing simple material such as lists of words, mnemonics may be the best strategy, while "material already in long-term store [will be] unaffected". Mnemonic strategies are an example of how finding organization within a set of items helps these items to be remembered. In the absence of any apparent organization within a group, organization can be imposed with the same memory-enhancing results. An example of a mnemonic strategy that imposes organization is the peg-word system, which associates the to-be-remembered items with a list of easily remembered items. Another commonly used mnemonic device is the first-letter system, or acronym. When learning the colors of the rainbow, most students learn the first letter of each color and impose their own meaning by associating the letters with a name such as Roy G. Biv, which stands for red, orange, yellow, green, blue, indigo, violet. In this way, mnemonic devices help the encoding not only of specific items but also of their sequence. For more complex concepts, understanding is the key to remembering. In a 1974 study by Wiseman and Neisser, participants were presented with a picture of a Dalmatian drawn in the style of pointillism, which made the image difficult to see. Memory for the picture was better if the participants understood what was depicted.
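The first-letter system can be shown as a simple procedure: take the initial letter of each to-be-remembered item, in order, so the resulting acronym carries both the items' cues and their sequence. The function name `first_letter_mnemonic` is hypothetical and used only for illustration.

```python
def first_letter_mnemonic(items: list[str]) -> str:
    """Hypothetical helper: build an acronym from the first letter of each item.

    Order is preserved, so the acronym also encodes the sequence of the items.
    """
    return "".join(item[0].upper() for item in items)


rainbow = ["red", "orange", "yellow", "green", "blue", "indigo", "violet"]
acronym = first_letter_mnemonic(rainbow)
print(acronym)  # ROYGBIV
```

The acronym itself is only a retrieval cue: each letter prompts recall of one item, and the learner still has to supply the meaning (here, a name such as Roy G. Biv) that makes the string memorable.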

Chunking

Chunking is a memory strategy used to maximize the amount of information stored in short-term memory by combining it into small, meaningful sections. When objects are organized into meaningful sections, these sections are then remembered as a unit rather than as separate objects. As larger sections are analyzed and connections are made, information is woven into meaningful associations and combined into fewer, but larger and more significant, pieces of information. By doing so, the ability to hold more information in short-term memory increases. More specifically, chunking can increase recall from between 5 and 8 items to 20 items or more as associations are made between these items.

Words are an example of chunking: instead of simply perceiving letters, we perceive and remember their meaningful wholes, words. The use of chunking increases the number of items we are able to remember by creating meaningful "packets" in which many related items are stored as one. Chunking is also seen with numbers. One of the most common forms of chunking seen on a daily basis is that of phone numbers, which are generally separated into sections. An example is 909 200 5890, in which the ten digits are grouped into three units. Grouping numbers in this manner allows them to be recalled more easily because the groups are familiar and meaningful.
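The phone-number example can be expressed as a small grouping procedure. This is a minimal sketch: the helper `chunk_digits` is hypothetical, and its default 3-3-4 pattern is an assumption chosen to match the example number above. It shows how ten separate digits become three units to hold in short-term memory.

```python
def chunk_digits(digits: str, sizes: tuple[int, ...] = (3, 3, 4)) -> list[str]:
    """Hypothetical helper: split a digit string into consecutive groups.

    The default 3-3-4 pattern is an assumption matching the example number.
    """
    if sum(sizes) != len(digits):
        raise ValueError("group sizes must add up to the number of digits")
    chunks, start = [], 0
    for size in sizes:
        chunks.append(digits[start:start + size])
        start += size
    return chunks


number = "9092005890"
chunks = chunk_digits(number)
print(len(number), "separate digits ->", len(chunks), "chunks:", " ".join(chunks))
# 10 separate digits -> 3 chunks: 909 200 5890
```

The information content is unchanged; what drops is the number of units that must be held at once, which is why chunking lets more information fit within the limited capacity of short-term memory.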

State-Dependent Learning

For optimal encoding, connections are formed not only between the items themselves and past experiences, but also between the items and the internal state or mood of the encoder and the situation they are in. The connections formed between the encoder's internal state or situation and the items to be remembered are state-dependent. The effects of state-dependent learning were shown in a 1975 study by Godden and Baddeley. They asked deep-sea divers to learn various materials either under water or on the side of the pool. They found that those who were tested in the same condition in which they had learned the information were better able to recall it; that is, those who learned the material under water did better when tested on that material under water than when tested on land. Context had become associated with the material they were trying to recall and was therefore serving as a retrieval cue. Similar results have also been found when certain smells are present at encoding.

However, although the external environment at the time of encoding is important in creating multiple pathways for retrieval, other studies have shown that simply recreating the internal state present at the time of encoding is sufficient to serve as a retrieval cue. Therefore, being in the same mindset as at the time of encoding helps recall in the same way that being in the same situation does. This effect, called context reinstatement, was demonstrated by Fisher and Craik (1977) when they matched retrieval cues with the way information was memorized.

Transfer-Appropriate Processing

Transfer-appropriate processing is an encoding strategy that leads to successful retrieval. An experiment conducted by Morris and coworkers in 1977 showed that successful retrieval depends on matching the type of processing used during encoding. Their main finding was that an individual's ability to retrieve information was heavily influenced by whether the task at encoding matched the task at retrieval. In the first task, performed by the rhyming group, subjects were given a target word and then asked to review a different set of words, judging whether each new word rhymed with the target word. They focused solely on rhyme rather than on the meaning of the words. In the second task, individuals were also given a target word followed by a series of new words, but rather than identifying the ones that rhymed, they focused on meaning. When later tested on which words rhymed with the studied words, the rhyming group performed better than the meaning group. This suggests that encoding is most effective when it matches the demands of the later retrieval task. Transfer-appropriate processing can thus be described in two stages: in the first stage, stimuli are processed in a particular way during encoding; in the second stage, retrieval draws heavily on what occurred in the first stage, so performance is best when the retrieval task matches the processing used during encoding.

Encoding Specificity

The context of learning shapes how information is encoded. For instance, Kanizsa in 1979 showed a picture that could be interpreted either as a white vase on a black background or as two black faces facing each other on a white background. The participants were primed to see the vase. Later they were shown the picture again, but this time they were primed to see the black faces on the white background. Although this was the same picture they had seen before, when asked whether they had seen it before, they said no. The reason was that they had been primed to see the vase the first time the picture was presented, so it was unrecognizable the second time as two faces. This demonstrates that a stimulus is understood within the context in which it is learned, as well as the general rule that the best tests of learning are those that test what has been learned in the same way that it was learned. Therefore, to remember information efficiently, one must consider the demands that future recall will place on this information and study in a way that will match those demands.

Generation Effect

Another principle that may aid encoding is the generation effect. The generation effect implies that learning is enhanced when individuals generate information or items themselves rather than reading the content. The key to properly applying the generation effect is to generate information, rather than passively select from information already available, as in selecting an answer to a multiple-choice question. In 1978, researchers Slamecka and Graf conducted an experiment to better understand this effect. Participants were assigned to one of two groups, the read group or the generate group. Participants in the read group were asked simply to read a list of related word pairs, for example, horse-saddle. Participants in the generate group were asked to fill in the missing letters of one of the words in each related pair. In other words, if the participant was given the word horse, they would need to fill in the last four letters of the word saddle. The researchers discovered that the group asked to fill in the blanks had better recall for these word pairs than the group that simply read them.

Self-Reference Effect

Research illustrates that the self-reference effect aids encoding. The self-reference effect is the idea that individuals will encode information more effectively if they can personally relate to the information. For example, some people may claim that the birth dates of some family members and friends are easier to remember than others. Some researchers claim this may be due to the self-reference effect: a birth date may be easier to recall if it is close to the individual's own birth date or to other dates they deem important, such as anniversaries.

Research has shown that, once information has been encoded, the self-reference effect supports later recall better than semantic encoding does. Researchers have also found that the self-reference effect goes hand in hand with elaborative rehearsal, which is usually found to be positively correlated with improved retrieval of information from memory. Studies have further concluded that the self-reference effect can be used to encode information at all ages, although older adults are more limited in their use of it than younger adults.

Salience

When an item or idea is considered "salient", it noticeably stands out. Salient information may be encoded in memory more efficiently than information that does not stand out to the learner. In terms of encoding, any event involving survival may be considered salient. Research suggests that survival processing may be related to the self-reference effect through evolutionary mechanisms. Researchers have found that even individual words high in survival value are encoded better than words ranked lower in survival value. Some research supports an evolutionary explanation, claiming that humans preferentially remember content associated with survival. To check whether these findings held up, some researchers replicated an experiment whose results supported the idea that survival content is encoded better than other content. The findings of the replication further suggested that survival content has an encoding advantage over other content.

Retrieval Practice

Studies have shown that creating and taking practice tests is an effective tool for improving encoding during learning. Using retrieval to enhance performance is called the testing effect; it actively involves creating and recreating the material one intends to learn and increases one's exposure to it. It is also a useful tool for connecting new information to information already stored in memory, because encoding and retrieval are closely associated. Thus, creating practice tests lets the individual process the information at a deeper level than simply rereading the material or using a pre-made test. The benefits of retrieval practice were demonstrated in a study in which college students read a passage for seven minutes and were then given a two-minute break, during which they completed math problems. One group of participants was then given seven minutes to write down as much of the passage as they could remember, while the other group was given another seven minutes to reread the material. All participants were later given a recall test at various delays (five minutes, two days, and one week) after the initial learning. At the longer delays, participants who had practiced recalling the passage in the first session retained more information than those who had simply reread it. This demonstrates that retrieval practice is a useful tool for encoding information into long-term memory.

Computational Models of Memory Encoding

Computational models of memory encoding have been developed to better understand and simulate the behavior of human memory, which is mostly predictable yet sometimes surprising. Different models have been developed for different memory tasks, including item recognition, cued recall, free recall, and sequence memory, in an attempt to explain experimentally observed behavior.

Item recognition

In item recognition, one is asked whether or not a given probe item has been seen before. Importantly, recognition of an item can include context: one can be asked whether an item appeared in a particular study list. So even though one may have seen the word "apple" at some point in life, if it was not on the study list, it should not be recognized.

Item recognition can be modeled using multiple trace theory and the attribute-similarity model. In brief, every item that one sees can be represented as a vector of the item's attributes, extended by a vector representing the context at the time of encoding, and stored in a memory matrix of all items ever seen. When a probe item is presented, the sum of its similarities to each item in the matrix is computed, where similarity decreases as the distance between the probe vector and a stored vector increases. If this summed similarity is above a threshold value, one would respond, "Yes, I recognize that item." Because context continually drifts in a random walk, more recently seen items, whose context vectors are more similar to the context vector at the time of the recognition task, are more likely to be recognized than items seen longer ago.
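The recognition procedure above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not a reference implementation: the per-trace similarity exp(-distance / tau), the unit-length context vector, the drift size `drift_sd`, the threshold of 0.1, and all function names are hypothetical choices made for the example.

```python
import numpy as np

def study_list(items, n_context=8, drift_sd=0.1, seed=0):
    """Store each item's attributes concatenated with the current context vector."""
    rng = np.random.default_rng(seed)
    context = rng.standard_normal(n_context)
    context /= np.linalg.norm(context)
    traces = []
    for item in items:
        traces.append(np.concatenate([item, context]))
        # assumed drift rule: a random-walk step, then renormalize to unit length
        context = context + drift_sd * rng.standard_normal(n_context)
        context /= np.linalg.norm(context)
    return np.array(traces), context

def summed_similarity(item, context, memory, tau=1.0):
    """Sum of exp(-distance / tau) between the probe and every stored trace."""
    probe = np.concatenate([item, context])
    distances = np.linalg.norm(memory - probe, axis=1)
    return float(np.exp(-distances / tau).sum())

def recognize(item, context, memory, threshold=0.1):
    """Answer "yes" when summed similarity exceeds a (hypothetical) threshold."""
    return summed_similarity(item, context, memory) > threshold

rng = np.random.default_rng(1)
study_items = rng.standard_normal((30, 50))  # 30 hypothetical items, 50 attributes each
novel_item = rng.standard_normal(50)
memory, test_context = study_list(study_items)
print(recognize(study_items[-1], test_context, memory))  # True
print(recognize(novel_item, test_context, memory))       # False
```

The most recent trace was stored with a context close to the test context, so its summed similarity clears the threshold, while the novel item is far from every stored trace in the attribute dimensions.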

Cued Recall

In cued recall, an individual is presented with stimuli, such as a list of words, and then asked to remember as many of them as possible. They are then given cues, such as categories, to help them remember the stimuli. For example, a subject could be given words such as meteor, star, spaceship, and alien to memorize, and then be provided with the cue "outer space" to remind them of the list. Giving the subject cues, even cues that were never originally mentioned, helped them recall the stimuli much better, guiding them to items they could not remember on their own before the cue was given. Cues can be essentially anything that helps a memory that seemed forgotten to resurface. An experiment conducted by Tulving suggests that when subjects were given cues, they were able to recall the previously presented stimuli.

Cued recall can be explained by extending the attribute-similarity model used for item recognition. Because a wrong response can be given to a probe item in cued recall, the model has to be extended to account for that. This can be achieved by adding noise to the item vectors when they are stored in the memory matrix. Furthermore, cued recall can be modeled probabilistically, such that the more similar an item stored in the memory matrix is to the probe item, the more likely it is to be recalled. Because the items in the memory matrix contain noise, this model can account for incorrect recalls, such as mistakenly calling a person by the wrong name.
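A sketch of this noisy, probabilistic extension, assuming each study trace is a cue vector concatenated with a target vector, and that retrieval samples a trace with probability proportional to exp(-distance / tau) between the probe cue and the stored cue. The noise level, `tau`, and the function names are illustrative assumptions rather than parameters from any specific published model.

```python
import numpy as np

def store_pairs(cues, targets, noise_sd=0.3, seed=0):
    """Store each cue-target pair as one trace, with noise added at encoding."""
    rng = np.random.default_rng(seed)
    traces = np.hstack([cues, targets])
    return traces + noise_sd * rng.standard_normal(traces.shape)

def recall_probabilities(cue, memory, tau=1.0):
    """Closer stored cues get exponentially more weight; weights sum to 1."""
    n = len(cue)
    distances = np.linalg.norm(memory[:, :n] - cue, axis=1)
    weights = np.exp(-distances / tau)
    return weights / weights.sum()

def cued_recall(cue, memory, rng, tau=1.0):
    """Sample one trace in proportion to its similarity and return its index."""
    p = recall_probabilities(cue, memory, tau)
    return int(rng.choice(len(memory), p=p))

rng = np.random.default_rng(2)
cues = rng.standard_normal((5, 20))     # 5 hypothetical cue vectors
targets = rng.standard_normal((5, 20))  # their paired target vectors
memory = store_pairs(cues, targets)
p = recall_probabilities(cues[0], memory)
draws = [cued_recall(cues[0], memory, rng) for _ in range(1000)]
errors = sum(d != 0 for d in draws)  # recalls of the wrong pair ("wrong name")
print(p.round(3), errors)
```

The correct pair receives most of the probability mass, but because every trace keeps a nonzero weight, occasional wrong-pair recalls occur; raising `noise_sd` should tend to make them more frequent.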

Free Recall

In free recall, one is allowed to recall learned items in any order. For example, you could be asked to name as many countries in Europe as you can. Free recall can be modeled using SAM (Search of Associative Memory), which is based on the dual-store model first proposed by Atkinson and Shiffrin in 1968. SAM consists of two main components: a short-term store (STS) and a long-term store (LTS). In brief, when an item is seen, it enters STS, where it resides with the other items in STS until it is displaced and put into LTS. The longer an item has been in STS, the more likely it is to be displaced by a new item. When items co-reside in STS, the links between them are strengthened. Furthermore, SAM assumes that items in STS are always available for immediate recall.

SAM explains both primacy and recency effects. Items at the beginning of the list enter STS while it is still filling up, so they tend to stay there longer and have more opportunities to strengthen their links to other items. As a result, they are more likely to be recalled in a free-recall task (primacy effect). Because items in STS are assumed to be always available for immediate recall, items at the end of the list can be recalled very well, provided that there were no significant distractors between learning and recall (recency effect).
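The STS buffer dynamics can be simulated with a small Python sketch. The buffer capacity, the rule that displacement is weighted by time spent in STS (following the description above), the one-unit strength increment per shared step, and the function name are all assumptions for illustration; SAM's retrieval stage is not modeled here, and the items are assumed to be distinct.

```python
import random
from collections import defaultdict

def sam_study(items, capacity=4, seed=0):
    """Simulate a SAM-style short-term buffer over a list of distinct items."""
    rng = random.Random(seed)
    buffer = []                    # items currently in STS
    age = {}                       # steps each buffered item has spent in STS
    residence = defaultdict(int)   # total steps each item spent in STS
    strength = defaultdict(float)  # LTS link strength for each pair of items
    for item in items:
        if len(buffer) == capacity:
            # STS is full: displace one item, weighting older items more heavily
            victim = rng.choices(buffer, weights=[age[b] for b in buffer])[0]
            buffer.remove(victim)
        buffer.append(item)
        age[item] = 0
        for b in buffer:
            age[b] += 1
            residence[b] += 1
        # every pair co-residing in STS during this step gets a stronger link
        for i, a in enumerate(buffer):
            for c in buffer[i + 1:]:
                strength[frozenset((a, c))] += 1.0
    return buffer, dict(residence), dict(strength)

words = ["w%d" % i for i in range(10)]  # hypothetical 10-item study list
final_sts, residence, strength = sam_study(words)
print(final_sts, residence["w0"], residence["w9"])
```

Nothing is displaced until the buffer fills, so the first item is guaranteed at least `capacity` steps in STS and accumulates extra link strength (primacy), while the last item is always still in STS when the list ends (recency).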

Studies have shown that, compared with item recognition and cued recall, free recall is one of the most effective methods for studying and for transferring information from short-term to long-term memory, because it involves greater relational processing.

Incidentally, the idea of STS and LTS was motivated by the architecture of computers, which contain short-term and long-term storage.

Sequence Memory

Sequence memory is responsible for how we remember lists of things in which order matters. For example, telephone numbers are an ordered list of one-digit numbers. There are currently two main computational memory models that can be applied to sequence encoding: associative chaining and positional coding.

Associative chaining theory states that every item in a list is linked to its forward and backward neighbors, with forward links stronger than backward links and links to closer neighbors stronger than links to farther ones. For example, associative chaining accounts for the tendency of transposition errors to occur most often between items in nearby positions. An example of a transposition error would be recalling the sequence "apple, orange, banana" instead of "apple, banana, orange."
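A sketch of associative chaining as a matrix of link strengths, where `W[i, j]` is the link from item i to item j. The specific values (forward strength 1.0, backward strength 0.5, and a fall-off of 0.5 per extra step of distance) are hypothetical; the theory as described only requires forward links to beat backward links and near links to beat far ones.

```python
import numpy as np

def chaining_weights(n, forward=1.0, backward=0.5, decay=0.5):
    """Link strength from item i to item j in an n-item list (assumed values)."""
    W = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            if i != j:
                base = forward if j > i else backward
                W[i, j] = base * decay ** (abs(j - i) - 1)
    return W

def next_item_probs(W, current, recalled):
    """Chance of each not-yet-recalled item being output after `current`."""
    w = W[current].copy()
    w[list(recalled)] = 0.0  # already-recalled items cannot be output again
    return w / w.sum()

items = ["apple", "banana", "orange", "mango"]
W = chaining_weights(len(items))
p = next_item_probs(W, current=0, recalled={0})
for name, prob in zip(items, p):
    print(name, round(float(prob), 3))
```

After "apple", "banana" gets the largest share, but "orange" is more likely than "mango", so the errors this sketch produces are mostly transpositions with nearby items.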

Positional coding theory suggests that every item in a list is associated with its position in the list. For example, if the list is "apple, banana, orange, mango", apple will be associated with list position 1, banana with 2, orange with 3, and mango with 4. Furthermore, each item is also associated, albeit more weakly, with positions +/- 1 from its own, even more weakly with positions +/- 2, and so forth. So banana is associated not only with its actual position 2, but also with positions 1, 3, and 4, with varying degrees of strength. Positional coding can also explain the primacy and recency effects: because items at the beginning and end of a list have fewer close neighbors than items in the middle, they face less competition for correct recall.
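Positional coding can be sketched as a matrix `S` in which the link between item i and position k falls off geometrically with |i - k| (positions are 0-based in the code). The decay value of 0.5 and the function names are assumptions for illustration. Normalizing the column for a cued position gives the chance of producing the correct item there.

```python
import numpy as np

def positional_strengths(n, decay=0.5):
    """S[i, k]: strength of the link between item i and list position k."""
    idx = np.arange(n)
    return decay ** np.abs(idx[:, None] - idx[None, :])

def output_probs(S, position):
    """Probability of each item being produced when `position` is cued."""
    col = S[:, position]
    return col / col.sum()

items = ["apple", "banana", "orange", "mango"]
S = positional_strengths(len(items))
p_correct = [output_probs(S, k)[k] for k in range(len(items))]
print([round(float(p), 3) for p in p_correct])  # [0.533, 0.444, 0.444, 0.533]
```

The correct item wins a larger share at the first and last positions than in the middle, because end positions have competitors on only one side, which is the sketch's version of primacy and recency.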

Although the models of associative chaining and positional coding are able to explain a great deal of the behavior seen in sequence memory, they are far from perfect. For example, neither chaining nor positional coding properly accounts for the details of the Ranschburg effect, which reports that sequences containing repeated items are harder to reproduce than sequences of unrepeated items. Associative chaining predicts that recall of lists containing repeated items is impaired because recall of any repeated item would cue not only its true successor but also the successors of all other instances of the item. However, experimental data have shown that spaced repetition of items impaired recall of the second occurrence of the repeated item. Furthermore, it had no measurable effect on recall of the items that followed the repeated items, contradicting the prediction of associative chaining. Positional coding predicts that repeated items will have no effect on recall, since the position of each item in the list acts as an independent cue for that item, including the repeated items. That is, a repeated item is treated no differently from any other item in the list. This, again, is not consistent with the data.
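The chaining prediction for repeated items can be made concrete with forward links only, assuming (hypothetically) that both occurrences of a repeated item share one representation, so their outgoing links are pooled under the same key. The decay value and names are illustrative. This reproduces the prediction described above, which the data do not support.

```python
from collections import defaultdict

def successor_cues(sequence, forward=1.0, decay=0.5):
    """Forward-link strength from each distinct item to each later position.

    Repeated items share one representation (an assumption), so the links
    from every occurrence accumulate under the same item.
    """
    links = defaultdict(lambda: defaultdict(float))
    for i, item in enumerate(sequence):
        for j in range(i + 1, len(sequence)):
            links[item][j] += forward * decay ** (j - i - 1)
    return links

seq = ["A", "B", "C", "A", "D", "E"]
links = successor_cues(seq)
print(dict(links["A"]))  # {1: 1.0, 2: 0.5, 3: 0.25, 4: 1.125, 5: 0.5625}
```

Recalling the first A cues position 4 (D, the successor of the second A) more strongly than its true successor B at position 1, so chaining predicts errors after the repeated item.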

Because no comprehensive model of sequence memory has yet been defined, it remains an interesting area of research.