The causes of the Great Depression in the early 20th century in the United States have been extensively discussed by economists and remain a matter of active debate. They are part of the larger debate about economic crises and recessions. The specific economic events that took place during the Great Depression are well established.

There was an initial stock market crash that triggered a "panic sell-off" of assets. This was followed by a deflation in asset and commodity prices, dramatic drops in demand and the total quantity of money in the economy, and disruption of trade, ultimately resulting in widespread unemployment (over 13 million people were unemployed by 1932) and impoverishment. However, economists and historians have not reached a consensus on the causal relationships between various events and government economic policies in causing or ameliorating the Depression.

Current mainstream theories may be broadly classified into two main points of view. The first comprises the demand-driven theories of Keynesian and institutional economists, who argue that the depression was caused by a widespread loss of confidence that led to drastically lower investment and persistent underconsumption. In this view, the financial crisis following the 1929 crash led to a sudden and persistent reduction in consumption and investment spending, causing the depression that followed. Once panic and deflation set in, many people believed they could avoid further losses by keeping clear of the markets. Holding money became profitable because, as prices dropped, a given amount of money bought ever more goods, which further depressed demand.

Second, there are the monetarists, who believe that the Great Depression started as an ordinary recession, but that significant policy mistakes by monetary authorities (especially the Federal Reserve) caused a shrinking of the money supply that greatly exacerbated the economic situation and turned the recession into the Great Depression. Related to this explanation is the debt-deflation view, which holds that falling prices caused borrowers to owe ever more in real terms.

There are also several heterodox theories that reject the explanations of the Keynesians and monetarists. Some new classical macroeconomists have argued that various labor market policies imposed at the start of the Depression caused its length and severity.

General theoretical reasoning

The two classic competing theories of the Great Depression are the Keynesian (demand-driven) and the monetarist explanations. There are also various heterodox theories that downplay or reject the explanations of the Keynesians and monetarists.

Economists and economic historians are almost evenly split between the traditional monetary explanation, which holds that monetary forces were the primary cause of the Great Depression, and the traditional Keynesian explanation, which holds that a fall in autonomous spending, particularly investment, was the primary cause of its onset. Today the controversy is of lesser importance, since there is mainstream support for the debt deflation theory and the expectations hypothesis, which build on the monetary explanation of Milton Friedman and Anna Schwartz but add non-monetary explanations.

There is consensus that the Federal Reserve System should have cut short the process of monetary deflation and banking collapse. If the Federal Reserve System had done that, the economic downturn would have been far less severe and much shorter.

Mainstream theories

Keynesian

In his book The General Theory of Employment, Interest and Money (1936), British economist John Maynard Keynes introduced concepts that were intended to help explain the Great Depression. He argued that the self-correcting mechanisms that many economists claimed should operate during a downturn might fail to do so.

One argument for a non-interventionist policy during a recession was that if consumption fell because people saved more, the additional savings would cause the rate of interest to fall. According to the classical economists, lower interest rates would lead to increased investment spending, and demand would remain constant. However, Keynes argued that there are good reasons why investment does not necessarily increase in response to a fall in the interest rate. Businesses make investments based on expectations of profit. If a fall in consumption appears to be long-term, businesses analyzing trends will lower their expectations of future sales. The last thing they are then interested in doing is investing to increase future production, even if lower interest rates make capital inexpensive. In that case, the economy can be thrown into a general slump due to a decline in consumption. According to Keynes, this self-reinforcing dynamic is what occurred to an extreme degree during the Depression, when bankruptcies were common and investment, which requires a degree of optimism, was very unlikely to occur. Economists often characterize this view as being in opposition to Say's Law.

The idea that reduced capital investment was a cause of the depression is a central theme in secular stagnation theory.

Keynes argued that if the national government spent more money to replace the spending normally done by consumers and business firms, unemployment rates would fall. The solution was for the Federal Reserve System to "create new money for the national government to borrow and spend" and to cut taxes rather than raise them, so that consumers would spend more. Hoover chose to do the opposite of what Keynes thought to be the solution and allowed the federal government to raise taxes sharply to reduce the budget deficit brought on by the depression. Keynes argued that more workers could be employed if interest rates were lowered, encouraging firms to borrow more money and produce more. Higher employment would in turn raise consumer spending, so the government would not need to keep spending more. Keynes' theory was later seen as confirmed by the length of the Great Depression within the United States and its persistently high unemployment. Employment began to rise as government spending increased in preparation for World War II. "In light of these developments, the Keynesian explanation of the Great Depression was increasingly accepted by economists, historians, and politicians".

Monetarist

In their 1963 book A Monetary History of the United States, 1867–1960, Milton Friedman and Anna Schwartz laid out their case for a different explanation of the Great Depression. Essentially, the Great Depression, in their view, was caused by the fall of the money supply. Friedman and Schwartz write: "From the cyclical peak in August 1929 to a cyclical trough in March 1933, the stock of money fell by over a third." The result was what Friedman and Schwartz called "The Great Contraction" — a period of falling income, prices, and employment caused by the choking effects of a restricted money supply. Friedman and Schwartz argue that people wanted to hold more money than the Federal Reserve was supplying. As a result, people hoarded money by consuming less. This caused a contraction in employment and production since prices were not flexible enough to immediately fall. The Fed's failure was in not realizing what was happening and not taking corrective action. In a speech honoring Friedman and Schwartz, Ben Bernanke stated:

"Let me end my talk by abusing slightly my status as an official representative of the Federal Reserve. I would like to say to Milton and Anna: Regarding the Great Depression, you're right. We did it. We're very sorry. But thanks to you, we won't do it again."

— Ben S. Bernanke

After the Depression, the primary explanations of it tended to ignore the importance of the money supply. However, in the monetarist view, the Depression was "in fact a tragic testimonial to the importance of monetary forces". In their view, the failure of the Federal Reserve to deal with the Depression was not a sign that monetary policy was impotent, but that the Federal Reserve implemented the wrong policies. They did not claim the Fed caused the depression, only that it failed to use policies that might have stopped a recession from turning into a depression.

Before the Great Depression, the U.S. economy had already experienced a number of depressions. These depressions were often set off by banking crises, the most significant occurring in 1873, 1893, 1901, and 1907. Before the 1913 establishment of the Federal Reserve, the banking system had dealt with these crises in the U.S. (such as in the Panic of 1907) by suspending the convertibility of deposits into currency. Starting in 1893, there were growing efforts by financial institutions and businessmen to intervene during these crises, providing liquidity to banks that were suffering runs. During the banking panic of 1907, an ad hoc coalition assembled by J. P. Morgan successfully intervened in this way, thereby cutting off the panic, which was likely the reason why the depression that would normally have followed a banking panic did not happen this time. A call by some for a government version of this solution resulted in the establishment of the Federal Reserve.

But in 1929–32, the Federal Reserve did not act to provide liquidity to banks suffering bank runs. In fact, its policy contributed to the banking crisis by permitting a sudden contraction of the money supply. During the Roaring Twenties, the central bank had set as its primary goal "price stability", in part because the governor of the New York Federal Reserve, Benjamin Strong, was a disciple of Irving Fisher, a tremendously popular economist who championed stable prices as a monetary goal. It had managed the quantity of dollars so that the prices of goods appeared stable. In 1928, Strong died, and with his death this policy ended, to be replaced by a real bills doctrine requiring that all currency or securities be backed by material goods. This policy permitted the U.S. money supply to fall by over a third from 1929 to 1933.

When this money shortage caused runs on banks, the Fed maintained its real bills policy, refusing to lend money to the banks in the way that had cut short the 1907 panic and instead allowing each to suffer a catastrophic run and fail entirely. This policy resulted in a series of bank failures in which one-third of all banks vanished. According to Ben Bernanke, the subsequent credit crunches led to waves of bankruptcies. Friedman said that if a policy similar to that of 1907 had been followed during the banking panic at the end of 1930, it might have stopped the vicious circle of forced liquidation of assets at depressed prices. Consequently, the banking panics of 1931, 1932, and 1933 might not have happened, just as suspension of convertibility in 1893 and 1907 had quickly ended the liquidity crises of those years.

Paul Samuelson had rejected monetarist explanations in his textbook Economics, writing: "Today few economists regard Federal Reserve monetary policy as a panacea for controlling the business cycle. Purely monetary factors are considered to be as much symptoms as causes, albeit symptoms with aggravating effects that should not be completely neglected." According to Keynesian economist Paul Krugman, the work of Friedman and Schwartz became dominant among mainstream economists by the 1980s but should be reconsidered in light of Japan's Lost Decade of the 1990s. The role of monetary policy in financial crises is in active debate regarding the financial crisis of 2007–2008; see Causes of the Great Recession.

Additional modern nonmonetary explanations

The monetary explanation has two weaknesses. First, it is not able to explain why the demand for money was falling more rapidly than the supply during the initial downturn in 1930–31. Second, it is not able to explain why in March 1933 a recovery took place although short term interest rates remained close to zero and the money supply was still falling. These questions are addressed by modern explanations that build on the monetary explanation of Milton Friedman and Anna Schwartz but add non-monetary explanations.

Debt deflation

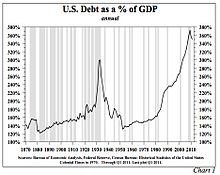

Total debt to GDP levels in the U.S. reached a high of just under 300 per cent by the time of the Depression. This level of debt was not exceeded again until near the end of the 20th century.

Jerome (1934) gives an unattributed quote about the financial conditions that allowed the great industrial expansion of the post-World War I period:

Probably never before in this country had such a volume of funds been available at such low rates for such a long period.

Furthermore, Jerome says that the volume of new capital issues grew at a 7.7% compounded annual rate from 1922 to 1929, at a time when the yield on the Standard Statistics Co. index of 60 high-grade bonds went from 4.98% in 1923 to 4.47% in 1927.
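
The 7.7% compounded rate is easier to grasp as a cumulative figure. The short Python sketch below converts it, assuming (our assumption, not Jerome's) that 1922 to 1929 counts as seven annual compounding periods; on that basis the volume of new capital issues ends up roughly 1.68 times its 1922 level.

```python
# Cumulative growth implied by a constant compound annual rate.
# The 7.7% rate and the 1922-1929 span come from Jerome (1934) as quoted
# in the text; treating the span as seven annual compounding periods is
# an assumption of this sketch.

def cumulative_growth(annual_rate: float, years: int) -> float:
    """Return the end-of-period volume as a multiple of the starting volume."""
    return (1.0 + annual_rate) ** years


factor = cumulative_growth(0.077, 1929 - 1922)
print(f"Growth factor: {factor:.2f}x ({(factor - 1) * 100:.0f}% increase)")
```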

There was also a real estate and housing bubble in the 1920s, especially in Florida, where the bubble burst in 1925. Alvin Hansen stated that housing construction during the 1920s exceeded population growth by 25 per cent. Statistics kept by Cook County, Illinois, show over 1 million vacant home plots in the Chicago area, compared with only 950,000 occupied plots, the result of Chicago's explosive population growth combined with a real estate bubble.

Irving Fisher argued the predominant factor leading to the Great Depression was over-indebtedness and deflation. Fisher tied loose credit to over-indebtedness, which fueled speculation and asset bubbles. He then outlined nine factors interacting with one another under conditions of debt and deflation to create the mechanics of boom to bust. The chain of events proceeded as follows:

- Debt liquidation and distress selling

- Contraction of the money supply as bank loans are paid off

- A fall in the level of asset prices

- A still greater fall in the net worths of business, precipitating bankruptcies

- A fall in profits

- A reduction in output, in trade and in employment

- Pessimism and loss of confidence

- Hoarding of money

- A fall in nominal interest rates and a rise in deflation-adjusted interest rates

During the Wall Street Crash of 1929 preceding the Great Depression, margin requirements were only 10%. Brokerage firms, in other words, would lend $90 for every $10 an investor had deposited. When the market fell, brokers called in these loans, which could not be paid back. Banks began to fail as debtors defaulted on debt and depositors attempted to withdraw their deposits en masse, triggering multiple bank runs. Government guarantees and Federal Reserve banking regulations to prevent such panics were ineffective or not used. Bank failures led to the loss of billions of dollars in assets.
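
The leverage implied by a 10% margin requirement explains why modest price declines forced brokers to call in loans. A minimal sketch, using a hypothetical $100 position (the $10/$90 split is from the text; the declines tested are illustrative): because the broker's $90 loan is fixed in dollars, a 10% fall in the stock wipes out the investor's stake entirely, and a 20% fall leaves the position $10 short of covering the loan.

```python
# Margin leverage under a 10% margin requirement: the investor puts up
# $10 and the broker lends $90 to buy $100 of stock. The $100 position
# and the price declines tested below are illustrative assumptions.

def equity_after_decline(price_decline: float, margin: float = 0.10,
                         position: float = 100.0) -> float:
    """Return the investor's remaining equity after a fractional price fall.

    The broker loan is fixed in dollars, so every dollar lost on the
    position comes out of the investor's own stake first. A negative
    result means the shares no longer cover the loan.
    """
    loan = position * (1.0 - margin)
    value = position * (1.0 - price_decline)
    return value - loan


for decline in (0.05, 0.10, 0.20):
    print(f"{decline:.0%} fall -> equity ${equity_after_decline(decline):.2f}")
```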

Outstanding debts became heavier, because prices and incomes fell by 20–50% but the debts remained at the same dollar amount. After the panic of 1929, and during the first 10 months of 1930, 744 U.S. banks failed. (In all, 9,000 banks failed during the 1930s.) By April 1933, around $7 billion in deposits had been frozen in failed banks or those left unlicensed after the March Bank Holiday.
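
The arithmetic behind heavier debts is simple: a debt fixed in dollars, divided by a lower price level, represents more real purchasing power. A sketch with a hypothetical $1,000 debt, evaluated at the 20% and 50% ends of the range given above: a 20% fall raises the real burden by a quarter, to the equivalent of $1,250, and a 50% fall doubles it.

```python
# Real burden of a fixed nominal debt when prices and incomes fall.
# The 20-50% range comes from the text; the $1,000 debt is hypothetical.
# If a debtor's income falls in step with prices, the debt-to-income
# ratio rises by the same factor.

def real_debt_burden(nominal_debt: float, price_fall: float) -> float:
    """Debt expressed in pre-deflation dollars of purchasing power."""
    return nominal_debt / (1.0 - price_fall)


for fall in (0.20, 0.50):
    burden = real_debt_burden(1_000.0, fall)
    print(f"Prices down {fall:.0%}: $1,000 owed now weighs like ${burden:,.0f}")
```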

Bank failures snowballed as desperate bankers called in loans, which the borrowers did not have time or money to repay. With future profits looking poor, capital investment and construction slowed or completely ceased. In the face of bad loans and worsening future prospects, the surviving banks became even more conservative in their lending. Banks built up their capital reserves and made fewer loans, which intensified deflationary pressures. A vicious cycle developed and the downward spiral accelerated.

The liquidation of debt could not keep up with the fall of prices it caused. The mass effect of the stampede to liquidate increased the value of each dollar owed, relative to the value of declining asset holdings. The very effort of individuals to lessen their burden of debt effectively increased it. Paradoxically, the more the debtors paid, the more they owed. This self-aggravating process turned a 1930 recession into a 1933 depression.

Fisher's debt-deflation theory initially lacked mainstream influence because of the counter-argument that debt-deflation represented no more than a redistribution from one group (debtors) to another (creditors). Pure redistributions should have no significant macroeconomic effects.

Building on both the monetary hypothesis of Milton Friedman and Anna Schwartz and the debt deflation hypothesis of Irving Fisher, Ben Bernanke developed an alternative account of how the financial crisis affected output. He builds on Fisher's argument that dramatic declines in the price level and nominal incomes increase real debt burdens, which leads to debtor insolvency and lower aggregate demand; the resulting further decline in the price level produces a debt-deflationary spiral. According to Bernanke, a small decline in the price level simply reallocates wealth from debtors to creditors without doing damage to the economy. But when deflation is severe, falling asset prices along with debtor bankruptcies lead to a decline in the nominal value of assets on bank balance sheets. Banks react by tightening their credit conditions, which in turn leads to a credit crunch that does serious harm to the economy. A credit crunch lowers investment and consumption and results in declining aggregate demand, which further contributes to the deflationary spiral.

Economist Steve Keen revived the debt-reset theory after he accurately predicted the 2008 recession based on his analysis of the Great Depression, and he has more recently advised Congress to engage in debt forgiveness or direct payments to citizens in order to avoid future financial crises. The debt-reset theory has some supporters.

Expectations hypothesis

Expectations have been a central element of macroeconomic models since the economic mainstream accepted the new neoclassical synthesis. While not rejecting that it was inadequate demand that sustained the depression, according to Peter Temin, Barry Wigmore, Gauti B. Eggertsson and Christina Romer the key to recovery and the end of the Great Depression was the successful management of public expectations. This thesis is based on the observation that after years of deflation and a very severe recession, important economic indicators turned positive in March 1933, just as Franklin D. Roosevelt took office. Consumer prices turned from deflation to a mild inflation, industrial production bottomed out in March 1933, investment doubled in 1933 with a turnaround in March 1933. There were no monetary forces to explain that turnaround. Money supply was still falling and short term interest rates remained close to zero. Before March 1933, people expected a further deflation and recession so that even interest rates at zero did not stimulate investment. But when Roosevelt announced major regime changes people began to expect inflation and an economic expansion. With those expectations, interest rates at zero began to stimulate investment as planned. Roosevelt's fiscal and monetary policy regime change helped to make his policy objectives credible. The expectation of higher future income and higher future inflation stimulated demand and investments. The analysis suggests that the elimination of the policy dogmas of the gold standard, a balanced budget in times of crises and small government led to a large shift in expectation that accounts for about 70–80 percent of the recovery of output and prices from 1933 to 1937. If the regime change had not happened and the Hoover policy had continued, the economy would have continued its free fall in 1933, and output would have been 30 percent lower in 1937 than in 1933.
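
The expectations argument turns on the real interest rate. The sketch below uses the standard approximate Fisher relation (real rate roughly equals nominal rate minus expected inflation), which the text does not state explicitly, with hypothetical expected-inflation figures. With nominal rates stuck near zero, expected deflation keeps the real cost of borrowing positive; once people expect inflation, the same zero nominal rate becomes a negative real rate, which is how interest rates at zero could begin to stimulate investment.

```python
# Ex ante real interest rate via the approximate Fisher relation:
#     real rate ~= nominal rate - expected inflation
# The expected-inflation figures are hypothetical; the text only says
# short-term rates were near zero and that expectations shifted from
# deflation to inflation in March 1933.

def real_rate(nominal: float, expected_inflation: float) -> float:
    """Approximate ex ante real interest rate (all values as fractions)."""
    return nominal - expected_inflation


NOMINAL = 0.0  # short-term rates "close to zero"
before = real_rate(NOMINAL, -0.05)  # hypothetical: 5% deflation expected
after = real_rate(NOMINAL, 0.02)    # hypothetical: 2% inflation expected
print(f"before March 1933: {before:+.0%}, after: {after:+.0%}")
```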

The recession of 1937–38, which slowed the economic recovery from the Great Depression, is explained by public fears that the moderate tightening of monetary and fiscal policy in 1937 would be the first step toward restoring the pre-March 1933 policy regime.

Heterodox theories

Austrian School

Austrian economists argue that the Great Depression was the inevitable outcome of the monetary policies of the Federal Reserve during the 1920s. The central bank's policy was an "easy credit policy" which led to an unsustainable credit-driven boom. The inflation of the money supply during this period led to an unsustainable boom in both asset prices (stocks and bonds) and capital goods. By the time the Federal Reserve belatedly tightened monetary policy in 1928, it was too late to avoid a significant economic contraction. Austrians argue that government intervention after the crash of 1929 delayed the market's adjustment and made the road to complete recovery more difficult.

Acceptance of the Austrian explanation of what primarily caused the Great Depression is compatible with either acceptance or denial of the monetarist explanation. Austrian economist Murray Rothbard, who wrote America's Great Depression (1963), rejected the monetarist explanation. He criticized Milton Friedman's assertion that the central bank failed to sufficiently increase the supply of money, claiming instead that the Federal Reserve did pursue an inflationary policy when, in 1932, it purchased $1.1 billion of government securities, which raised its total holding to $1.8 billion. Rothbard says that despite the central bank's policies, "total bank reserves only rose by $212 million, while the total money supply fell by $3 billion". The reason for this, he argues, is that the American populace lost faith in the banking system and began hoarding more cash, a factor very much beyond the control of the central bank. The potential for a run on the banks caused local bankers to be more conservative in lending out their reserves, which, according to Rothbard's argument, was the cause of the Federal Reserve's inability to inflate.
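
Rothbard's point, that reserves rose while the money supply fell, can be illustrated with the textbook money multiplier. This is a hedged sketch layered on his argument, not a model he proposed: it assumes the standard formula m = (1 + c) / (c + r), where c is the public's currency-to-deposit ratio and r is the banks' reserve-to-deposit ratio, and all numbers are hypothetical. Hoarding cash raises c and cautious lending raises r; both shrink the multiplier enough that the money supply can fall even while the monetary base grows.

```python
# Textbook money-multiplier sketch (an illustrative assumption, not
# Rothbard's own model): money supply M = m * B, where B is the monetary
# base and
#     m = (1 + c) / (c + r)
# c = currency held by the public / deposits  (rises when people hoard cash)
# r = reserves held by banks / deposits       (rises when banks lend cautiously)
# All ratios and base values below are hypothetical.

def money_multiplier(c: float, r: float) -> float:
    """Money supply per unit of monetary base."""
    return (1.0 + c) / (c + r)


def money_supply(base: float, c: float, r: float) -> float:
    """Money supply implied by a given base and behavioral ratios."""
    return money_multiplier(c, r) * base


calm = money_supply(base=100.0, c=0.10, r=0.10)   # multiplier 5.5
panic = money_supply(base=110.0, c=0.25, r=0.15)  # base up 10%
print(f"calm: {calm:.1f}, panic: {panic:.1f}")
```

With these illustrative ratios, a 10% larger base supports a money supply of about 344 instead of 550.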

Friedrich Hayek had criticised the Federal Reserve and the Bank of England in the 1930s for not taking a more contractionary stance. However, in 1975, Hayek admitted that he had made a mistake in the 1930s in not opposing the central bank's deflationary policy and explained why he had been ambivalent: "At that time I believed that a process of deflation of some short duration might break the rigidity of wages which I thought was incompatible with a functioning economy." In 1978, he made it clear that he agreed with the point of view of the monetarists, saying, "I agree with Milton Friedman that once the Crash had occurred, the Federal Reserve System pursued a silly deflationary policy", and that he was as opposed to deflation as he was to inflation. Consistent with this, economist Lawrence White argues that the business cycle theory of Hayek is inconsistent with a monetary policy which permits a severe contraction of the money supply.

Marxian

Marxists generally argue that the Great Depression was the result of the inherent instability of the capitalist mode of production. According to Forbes, "The idea that capitalism caused the Great Depression was widely held among intellectuals and the general public for many decades."

Specific theories of cause

Non-debt deflation

In addition to the debt deflation, there was a component of productivity deflation that had been occurring since the Great Deflation of the last quarter of the 19th century. There may also have been a continuing correction of the sharp inflation caused by World War I.

Oil prices reached their all-time low in the early 1930s as production began from the East Texas Oil Field, the largest field ever found in the lower 48 states. With the oil market oversupplied, local prices fell below ten cents per barrel.

Productivity or technology shock

In the first three decades of the 20th century productivity and economic output surged due in part to electrification, mass production and the increasing motorization of transportation and farm machinery. Electrification and mass production techniques such as Fordism permanently lowered the demand for labor relative to economic output. By the late 1920s the resultant rapid growth in productivity and investment in manufacturing meant there was a considerable excess production capacity.

Sometime after the business-cycle peak of 1923, productivity improvements displaced more workers than growth in employment could absorb, causing unemployment to rise slowly after 1925. The work week also fell slightly in the decade before the depression. Wages did not keep up with productivity growth, which led to the problem of underconsumption.

Henry Ford and Edward A. Filene were among prominent businessmen who were concerned with overproduction and underconsumption. Ford doubled wages of his workers in 1914. The over-production problem was also discussed in Congress, with Senator Reed Smoot proposing an import tariff, which became the Smoot–Hawley Tariff Act. The Smoot–Hawley Tariff was enacted in June 1930. The tariff was misguided because the U.S. had been running a trade account surplus during the 1920s.

Another effect of rapid technological change was that after 1910 the rate of capital investment slowed, primarily due to reduced investment in business structures.

The depression led to a large number of additional plant closings.

It cannot be emphasized too strongly that the [productivity, output and employment] trends we are describing are long-time trends and were thoroughly evident prior to 1929. These trends are in nowise the result of the present depression, nor are they the result of the World War. On the contrary, the present depression is a collapse resulting from these long-term trends. — M. King Hubbert

In the book Mechanization in Industry, whose publication was sponsored by the National Bureau of Economic Research, Jerome (1934) noted that whether mechanization tends to increase output or displace labor depends on the elasticity of demand for the product. In addition, reduced costs of production were not always passed on to consumers. It was further noted that agriculture was adversely affected by the reduced need for animal feed as horses and mules were displaced by inanimate sources of power following World War I. As a related point, Jerome also notes that the term "technological unemployment" was being used to describe the labor situation during the depression.

Some portion of the increased unemployment which characterized the post-War years in the United States may be attributed to the mechanization of industries producing commodities of inelastic demand. — Fredrick C. Wells, 1934

The dramatic rise in productivity of major U.S. industries and its effects on output, wages and the work week are discussed in a book sponsored by the Brookings Institution.

Corporations laid off workers and reduced the amount of raw materials they purchased to manufacture their products. They cut production because large quantities of goods were going unsold.

Joseph Stiglitz and Bruce Greenwald suggested that it was a productivity-shock in agriculture, through fertilizers, mechanization and improved seed, that caused the drop in agricultural product prices. Farmers were forced off the land, further adding to the excess labor supply.

The prices of agricultural products began to decline after World War I, and eventually many farmers were forced out of business, causing the failure of hundreds of small rural banks. Agricultural productivity resulting from tractors, fertilizers, and hybrid corn was only part of the problem; the other was the changeover from horses and mules to internal combustion transportation. The horse and mule population began declining after World War I, freeing up enormous quantities of land previously used for animal feed.

The rise of the internal combustion engine and increasing numbers of motorcars and buses also halted the growth of electric street railways.

The years 1929 to 1941 had the highest total factor productivity growth in the history of the U.S., largely due to the productivity increases in public utilities, transportation and trade.

Disparities in wealth and income

Economists such as Waddill Catchings, William Trufant Foster, Rexford Tugwell and Adolf Berle (and later John Kenneth Galbraith) popularized a theory that had some influence on Franklin D. Roosevelt. This theory held that the economy produced more goods than consumers could purchase, because consumers did not have enough income. According to this view, in the 1920s wages had increased at a lower rate than productivity growth, which had been high. Most of the benefit of the increased productivity went into profits, which went into the stock market bubble rather than into consumer purchases. Thus workers did not have enough income to absorb the large amount of capacity that had been added.

According to this view, the root cause of the Great Depression was a global overinvestment while the level of wages and earnings from independent businesses fell short of creating enough purchasing power. It was argued that government should intervene through increased taxation of the rich to make incomes more equal. With the increased revenue the government could create public works to increase employment and 'kick start' the economy. In the U.S., economic policy had been quite the opposite until 1932. The Revenue Act of 1932 and the public works programs introduced in Hoover's last year as president and taken up by Roosevelt created some redistribution of purchasing power.

The stock market crash made it evident that the banking systems Americans relied on were not dependable. Many Americans had looked to small, insubstantial banking units for their liquidity. As the economy began to fail, these banks could no longer support those who depended on them, since they lacked the resources of the larger banks. During the depression, "three waves of bank failures shook the economy." The first wave came at the end of 1930 and the beginning of 1931, just when the economy was heading toward recovery. The second wave occurred "after the Federal Reserve System raised the rediscount rate to stanch an outflow of gold" around the end of 1931. The last wave, which began in the middle of 1932, was the worst and most devastating, continuing "almost to the point of a total breakdown of the banking system in the winter of 1932–1933". The reserve banks led the United States into an even deeper depression between 1931 and 1933 because they failed to appreciate and use their power to create money, and because of the "inappropriate monetary policies pursued by them during these years".

Gold Standard system

According to the gold standard theory of the Depression, the Depression was largely caused by the decision of most western nations after World War I to return to the gold standard at the pre-war gold price. Monetary policy, according to this view, was thereby put into a deflationary setting that would over the next decade slowly grind away at the health of many European economies.

This post-war policy was preceded by an inflationary policy during World War I, when many European nations abandoned the gold standard, forced by the enormous costs of the war. This resulted in inflation because the supply of new money that was created was spent on war, not on investments in productivity to increase aggregate supply that would have neutralized inflation. The view is that the quantity of new money introduced largely determines the inflation rate, and therefore, the cure to inflation is to reduce the amount of new currency created for purposes that are destructive or wasteful, and do not lead to economic growth.

After the war, when the United States and the nations of Europe went back on gold, most nations decided to do so at the pre-war price. When the United Kingdom, for example, passed the Gold Standard Act of 1925, returning Britain to the gold standard, the critical decision was made to set the new price of the pound sterling at parity with the pre-war price, even though the pound was then trading on the foreign exchange market at a much lower price. At the time, this action was criticized by John Maynard Keynes and others, who argued that the government was thereby forcing a revaluation of wages without any tendency to equilibrium. Keynes' criticism of Chancellor of the Exchequer Winston Churchill's form of the return to the gold standard implicitly compared it to the consequences of the Treaty of Versailles.

One of the reasons for setting the currencies at parity with the pre-war price was the prevailing opinion at that time that deflation was not a danger, while inflation, particularly the inflation in the Weimar Republic, was an unbearable danger. Another reason was that those who had loaned in nominal amounts hoped to recover the same value in gold that they had lent. Because of the World War I reparations that Germany had to pay France, Germany began a credit-fueled period of growth in order to export and sell enough goods abroad to gain gold to pay the reparations. The U.S., as the world's gold sink, loaned money to Germany to stabilize its currency, which allowed it to access additional credit to spur the growth needed to pay back France, and France to pay back loans to the U.K. and the U.S. The loan and a reparations schedule were codified in the Dawes Plan.

In some cases, deflation can be hard on sectors of the economy such as agriculture, if they are deeply in debt at high interest rates and unable to refinance, or if they depend on loans to finance capital goods when low interest rates are not available. Deflation lowers the prices of commodities while increasing the real burden of debt. Deflation benefits those who hold assets in cash and those who wish to invest, purchase assets, or lend money.
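The debt mechanism described above can be illustrated with a simple worked example. The numbers are hypothetical and chosen only for clarity: a borrower owes a fixed nominal debt $D$, and the general price level falls 20 percent, from $P_0$ to $P_1 = 0.8\,P_0$. Measured in goods, the debt is worth $D/P$, so

$$
\frac{D / P_1}{D / P_0} = \frac{P_0}{P_1} = \frac{1}{0.8} = 1.25
$$

The real burden of the same nominal debt rises by 25 percent, even though the amount owed in dollars has not changed. For a farmer whose crop prices fall along with the general price level, each dollar of debt takes correspondingly more output to repay.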

More recent research, by economists such as Peter Temin, Ben Bernanke, and Barry Eichengreen, has focused on the constraints policy makers were under at the time of the Depression. In this view, the constraints of the inter-war gold standard magnified the initial economic shock and were a significant obstacle to any actions that would ameliorate the growing Depression. According to them, the initial destabilizing shock may have originated with the Wall Street Crash of 1929 in the U.S., but it was the gold standard system that transmitted the problem to the rest of the world.

According to their conclusions, during a time of crisis policy makers may have wanted to loosen monetary and fiscal policy, but such action would have threatened their countries' ability to honor the obligation to exchange gold at its contractual rate. The gold standard required countries to maintain high interest rates to attract international investors who bought foreign assets with gold. Governments therefore had their hands tied as their economies collapsed, unless they abandoned their currency's link to gold. Because the exchange rates of all countries on the gold standard were fixed, the foreign exchange market could only equilibrate through interest rates. As the Depression worsened, many countries started to abandon the gold standard, and those that abandoned it earlier suffered less from deflation and tended to recover more quickly.

Richard Timberlake, an economist of the free banking school and a protégé of Milton Friedman, specifically addressed this stance in his paper Gold Standards and the Real Bills Doctrine in U.S. Monetary Policy. He argued that the Federal Reserve actually had plenty of leeway under the gold standard, as the price stability policy of New York Fed governor Benjamin Strong had demonstrated between 1923 and 1928. But when Strong died in late 1928, the faction that took control of the Fed advocated a real bills doctrine, under which all money had to be represented by physical goods. Timberlake describes this policy, which forced a 30% deflation of the dollar that inevitably damaged the U.S. economy, as arbitrary and avoidable, since the existing gold standard could have continued without it:

- This shift in control was decisive. In accordance with the precedent Strong had set in promoting a stable price level policy without heed to any golden fetters, real bills proponents could proceed equally unconstrained in implementing their policy ideal. System policy in 1928–29 consequently shifted from price level stabilization to passive real bills. "The" gold standard remained where it had been—nothing but formal window dressing waiting for an opportune time to reappear.

Financial institution structures

Economic historians (especially Friedman and Schwartz) emphasize the importance of numerous bank failures. The failures were mostly in rural America. Structural weaknesses in the rural economy made local banks highly vulnerable. Farmers, already deeply in debt, saw farm prices plummet in the late 1920s and their implicit real interest rates on loans skyrocket.

Their land was already over-mortgaged (as a result of the 1919 bubble in land prices), and crop prices were too low to allow them to pay off what they owed. Small banks, especially those tied to the agricultural economy, were in constant crisis in the 1920s with their customers defaulting on loans because of the sudden rise in real interest rates; there was a steady stream of failures among these smaller banks throughout the decade.

The city banks also suffered from structural weaknesses that made them vulnerable to a shock. Some of the nation's largest banks were failing to maintain adequate reserves and were investing heavily in the stock market or making risky loans. Loans to Germany and Latin America by New York City banks were especially risky. In other words, the banking system was not well prepared to absorb the shock of a major recession.

Economists have argued that a liquidity trap might have contributed to bank failures.

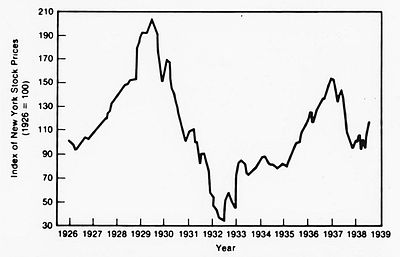

Economists and historians debate how much responsibility to assign to the Wall Street Crash of 1929. The timing was right, and the magnitude of the shock to expectations of future prosperity was high. Most analysts believe the market in 1928–29 was a "bubble", with prices far higher than justified by fundamentals. Economists agree that the crash bore some of the blame, but no one has estimated how much. Milton Friedman concluded, "I don't doubt for a moment that the collapse of the stock market in 1929 played a role in the initial recession".

Owning government bonds first became attractive to American investors when the Liberty Loan drives of World War I encouraged it, and this enthusiasm for investment persisted into the 1920s. After World War I, the United States became the world's creditor and was depended upon by many foreign nations: "Governments from around the globe looked to Wall Street for loans". Investors then started to depend on these loans for further investments. Ferdinand Pecora, chief counsel of the Senate Banking Committee, disclosed that National City executives had also relied on loans from a special bank fund as a safety net for their stock losses, while American banker Albert Wiggin "made millions selling short his own bank shares".

Economist David Hume stated that the economy became imbalanced as the recession spread on an international scale. The cost of goods remained too high for too long at a time when there was less international trade. Policies adopted in selected countries to "maintain the value of their currency" resulted in bank failures. Governments that continued to follow the gold standard were led into bank failures, meaning that governments and central bankers themselves helped push the economy into the depression.

The debate has three sides: one group says the crash caused the depression by drastically lowering expectations about the future and by removing large sums of investment capital; a second group says the economy had been slipping since summer 1929 and the crash ratified it; the third group says that in either scenario the crash could not have caused more than a recession. The market recovered briefly into April 1930, but prices then fell steadily again, not reaching a final bottom until July 1932. This was the largest long-term U.S. market decline by any measure. To move from a recession in 1930 to a deep depression in 1931–32, entirely different factors had to be in play.

Protectionism

Protectionism, such as the American Smoot–Hawley Tariff Act, is often cited as a cause of the Great Depression, with countries' protectionist policies yielding a beggar-thy-neighbor result. The Smoot–Hawley Tariff Act was especially harmful to agriculture because it caused farmers to default on their loans. This may have worsened or even caused the ensuing bank runs in the Midwest and West that led to the collapse of the banking system. A petition signed by over 1,000 economists was presented to the U.S. government warning that the Smoot–Hawley Tariff Act would bring disastrous economic repercussions; however, this did not stop the act from being signed into law.

Governments around the world took various steps to spend less money on foreign goods, such as "imposing tariffs, import quotas, and exchange controls". These restrictions created considerable tension between trading nations and caused a major contraction of trade during the depression. Not all countries enforced the same protectionist measures. Some countries raised tariffs drastically and enforced severe restrictions on foreign exchange transactions, while other countries tightened "trade and exchange restrictions only marginally":

- "Countries that remained on the gold standard, keeping currencies fixed, were more likely to restrict foreign trade." These countries "resorted to protectionist policies to strengthen the balance of payments and limit gold losses". They hoped that these restrictions would halt the economic decline.

- Countries that abandoned the gold standard allowed their currencies to depreciate, which strengthened their balance of payments. Leaving gold also freed up monetary policy, so that central banks could lower interest rates and act as lenders of last resort. These countries possessed the best policy instruments to fight the Depression and did not need protectionism.

- "The length and depth of a country's economic downturn and the timing and vigor of its recovery is related to how long it remained on the gold standard. Countries abandoning the gold standard relatively early experienced relatively mild recessions and early recoveries. In contrast, countries remaining on the gold standard experienced prolonged slumps."

In a 1995 survey of American economic historians, two-thirds agreed that the Smoot–Hawley Tariff Act at least worsened the Great Depression. However, many economists believe that the act was not a major contributor to the Great Depression. Economist Paul Krugman holds that "Where protectionism really mattered was in preventing a recovery in trade when production recovered". He cites a report by Barry Eichengreen and Douglas Irwin: Figure 1 in that report shows trade and production dropping together from 1929 to 1932, but production increasing faster than trade from 1932 to 1937. The authors argue that adherence to the gold standard forced many countries to resort to tariffs when they should instead have devalued their currencies. Peter Temin argues that, contrary to the popular argument, the contractionary effect of the tariff was small. He notes that exports were 7 percent of GNP in 1929 and fell by 1.5 percent of 1929 GNP over the next two years, and that this fall was offset by the increase in domestic demand created by the tariff.
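As a rough check on the scale of Temin's figures (a back-of-the-envelope calculation, not one taken from Temin): if exports were 7 percent of 1929 GNP and fell by an amount equal to 1.5 percent of 1929 GNP, then

$$
\frac{1.5\%\ \text{of 1929 GNP}}{7\%\ \text{of 1929 GNP}} = \frac{1.5}{7} \approx 0.21
$$

Exports therefore fell by roughly a fifth of their 1929 level, yet the direct loss to total spending was only about 1.5 percent of GNP, which is the basis for Temin's argument that higher domestic demand could offset it.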

International debt structure

When the war came to an end in 1918, all European nations that had been allied with the U.S. owed large sums of money to American banks, sums much too large to be repaid out of their shattered treasuries. This is one reason why the Allies had insisted (to the consternation of Woodrow Wilson) on reparation payments from Germany and Austria-Hungary. Reparations, they believed, would provide them with a way to pay off their own debts. However, the German Empire and Austria-Hungary were themselves in deep economic trouble after the war; they were no more able to pay the reparations than the Allies to pay their debts.

The debtor nations put strong pressure on the U.S. in the 1920s to forgive the debts, or at least reduce them. The American government refused. Instead, U.S. banks began making large loans to the nations of Europe. Thus, debts (and reparations) were being paid only by augmenting old debts and piling up new ones. In the late 1920s, and particularly after the American economy began to weaken after 1929, the European nations found it much more difficult to borrow money from the U.S. At the same time, high U.S. tariffs were making it much more difficult for them to sell their goods in U.S. markets. Without any source of revenue from foreign exchange to repay their loans, they began to default.

Beginning late in the 1920s, European demand for U.S. goods began to decline. That was partly because European industry and agriculture were becoming more productive, and partly because some European nations (most notably Weimar Germany) were suffering serious financial crises and could not afford to buy goods overseas. However, the central issue causing the destabilization of the European economy in the late 1920s was the international debt structure that had emerged in the aftermath of World War I.

The high tariff walls such as the Smoot–Hawley Tariff Act critically impeded the payment of war debts. As a result of high U.S. tariffs, only a sort of cycle kept the reparations and war-debt payments going. During the 1920s, the former allies paid the war-debt installments to the U.S. chiefly with funds obtained from German reparations payments, and Germany was able to make those payments only because of large private loans from the U.S. and Britain. Similarly, U.S. investments abroad provided the dollars, which alone made it possible for foreign nations to buy U.S. exports.

The Smoot–Hawley Tariff Act, sponsored by Senator Reed Smoot and Representative Willis C. Hawley and signed into law by President Hoover in June 1930, raised taxes on American imports by about 20 percent. Coming on top of already shrinking incomes and overproduction in the U.S., the tariff benefited Americans only in that they spent less on foreign goods. European trading nations, by contrast, frowned upon the increase, particularly since the "United States was an international creditor and exports to the U.S. market were already declining". In response to the Smoot–Hawley Tariff Act, some of America's primary producers, as well as its largest trading partner, Canada, retaliated by raising tariffs on American goods.

In the scramble for liquidity that followed the 1929 stock market crash, funds flowed back from Europe to America, and Europe's fragile economies crumbled.

By 1931, the world was reeling from the worst depression of recent memory, and the entire structure of reparations and war debts collapsed.

Population dynamics

In 1939, prominent economist Alvin Hansen discussed the decline in population growth in relation to the Depression. The same idea was discussed in a 1978 journal article by Clarence Barber, an economist at the University of Manitoba. Using "a form of the Harrod model" to analyze the Depression, Barber states:

In such a model, one would look for the origins of a serious depression in conditions which produced a decline in Harrod's natural rate of growth, more specifically, in a decline in the rate of population and labour force growth and in the rate of growth of productivity or technical progress, to a level below the warranted rate of growth.

Barber says that while there is "no clear evidence" of a decline in "the rate of growth of productivity" during the 1920s, there is "clear evidence" that the population growth rate began to decline during that same period. He argues that the decline in the population growth rate may have caused a decline in "the natural rate of growth" large enough to cause a serious depression.
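For readers unfamiliar with the Harrod model, its two growth rates can be written in a standard textbook form. This notation is assumed here for illustration and is not taken from Barber's article: the natural rate $G_n$ is the sum of the labour force growth rate $n$ and the rate of productivity growth $g$, while the warranted rate $G_w$ is the saving ratio $s$ divided by the capital-output ratio $v$.

$$
G_n = n + g, \qquad G_w = \frac{s}{v}
$$

In these terms, Barber's argument is that a fall in $n$ lowers $G_n$ and, if productivity growth $g$ does not rise to compensate, can push it below $G_w$, which is the condition for a serious depression in the passage quoted above. As a purely hypothetical illustration, with $g = 2\%$ and $G_w = 3.5\%$, a drop in $n$ from 2 percent to 1 percent takes $G_n$ from 4 percent (above $G_w$) to 3 percent (below it).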

Barber says a decline in the population growth rate is likely to affect the demand for housing, and claims this is apparently what happened during the 1920s. He concludes:

the rapid and very large decline in the rate of growth of non-farm households was clearly the major reason for the decline that occurred in residential construction in the United States from 1926 on. And this decline, as Bolch and Pilgrim have claimed, may well have been the most important single factor in turning the 1929 downturn into a major depression.

The decline in housing construction that can be attributed to demographics has been estimated to range from 28% in 1933 to 38% in 1940.

Among the causes of the decline in the population growth rate during the 1920s were a declining birth rate after 1910 and reduced immigration. The decline in immigration was largely the result of legislation in the 1920s placing greater restrictions on immigration. In 1921, Congress passed the Emergency Quota Act, followed by the Immigration Act of 1924.

A major factor in the economy's decline from 1925 onward was a decrease in the construction of both residential and non-residential buildings. War debt, the formation of fewer new families, and an imbalance between mortgage payments and loans in 1928–29 were the main contributors to the decline in the number of houses being built. This caused the population growth rate to decelerate. Although non-residential units continued to be built "at a high rate throughout the decade", demand for such units was actually very low.

Role of economic policy

Calvin Coolidge (1923–29)

There is an ongoing debate among historians over the extent to which President Calvin Coolidge's laissez-faire, hands-off attitude contributed to the Great Depression. Despite a growing rate of bank failures, he ignored warnings that the lack of banking regulation was potentially dangerous. He did not listen to members of Congress warning that stock speculation had gone too far, and he ignored criticisms that workers did not participate sufficiently in the prosperity of the Roaring Twenties.

Leave-it-alone liquidationism (1929–33)

Overview

From the point of view of today's mainstream schools of economic thought, government should strive to keep some broad nominal aggregate on a stable growth path (for proponents of new classical macroeconomics and monetarism, the measure is the nominal money supply; for Keynesian economists it is the nominal aggregate demand itself). During a depression the central bank should pour liquidity into the banking system and the government should cut taxes and accelerate spending in order to keep the nominal money stock and total nominal demand from collapsing.

The United States government and the Federal Reserve did not do that during the 1929–32 slide into the Great Depression. The existence of "liquidationism" played a key part in motivating public policy decisions not to fight the gathering Great Depression. An increasingly common view among economic historians is that the adherence of some Federal Reserve policymakers to the liquidationist thesis led to disastrous consequences. Regarding the policies of President Hoover, economists Barry Eichengreen and J. Bradford DeLong point out that the Hoover administration's fiscal policy was guided by liquidationist economists and policy makers, as Hoover tried to keep the federal budget balanced until 1932, when he lost confidence in his Secretary of the Treasury Andrew Mellon and replaced him. Hoover wrote in his memoirs that he did not side with the liquidationists, but took the side of those in his cabinet with "economic responsibility", his Secretary of Commerce Robert P. Lamont and Secretary of Agriculture Arthur M. Hyde, who advised the President to "use the powers of government to cushion the situation". But at the same time he kept Andrew Mellon as Secretary of the Treasury until February 1932. It was during 1932 that Hoover began to support more aggressive measures to combat the Depression. In his memoirs, President Hoover wrote bitterly about members of his Cabinet who had advised inaction during the downslide into the Great Depression:

The leave-it-alone liquidationists headed by Secretary of the Treasury Mellon ... felt that government must keep its hands off and let the slump liquidate itself. Mr. Mellon had only one formula: "Liquidate labor, liquidate stocks, liquidate the farmers, liquidate real estate ... It will purge the rottenness out of the system. High costs of living and high living will come down. People will work harder, live a more moral life. Values will be adjusted, and enterprising people will pick up the wrecks from less competent people."

Before the Keynesian Revolution, such a liquidationist theory was a common position for economists to take and was held and advanced by economists like Friedrich Hayek, Lionel Robbins, Joseph Schumpeter, Seymour Harris and others. According to the liquidationists, a depression is good medicine. The function of a depression is to liquidate failed investments and businesses that have been made obsolete by technological development in order to release factors of production (capital and labor) from unproductive uses. These can then be redeployed in other sectors of the technologically dynamic economy. They asserted that deflationary policy had minimized the duration of the Depression of 1920–21 by tolerating liquidation, which in turn produced economic growth later in the decade. They pushed for deflationary policies (which had already been carried out in 1921) that, in their opinion, would assist the release of capital and labor from unproductive activities and lay the groundwork for a new economic boom. The liquidationists argued that even if self-adjustment of the economy took mass bankruptcies, then so be it. Postponing the liquidation process would only magnify the social costs. Schumpeter wrote that it

... leads us to believe that recovery is sound only if it does come of itself. For any revival which is merely due to artificial stimulus leaves part of the work of depressions undone and adds, to an undigested remnant of maladjustment, new maladjustment of its own which has to be liquidated in turn, thus threatening business with another (worse) crisis ahead.

Contrary to liquidationist expectations, a large proportion of the capital stock was not redeployed but instead vanished during the first years of the Great Depression. According to a study by Olivier Blanchard and Lawrence Summers, the recession had caused net capital accumulation to drop to pre-1924 levels by 1933.

Criticism

Economists such as John Maynard Keynes and Milton Friedman suggested that the do-nothing policy prescription which resulted from the liquidationist theory contributed to deepening the Great Depression. With the rhetoric of ridicule, Keynes tried to discredit the liquidationist view in presenting Hayek, Robbins and Schumpeter as

...austere and puritanical souls [who] regard [the Great Depression] ... as an inevitable and a desirable nemesis on so much "overexpansion" as they call it ... It would, they feel, be a victory for the mammon of unrighteousness if so much prosperity was not subsequently balanced by universal bankruptcy. We need, they say, what they politely call a 'prolonged liquidation' to put us right. The liquidation, they tell us, is not yet complete. But in time it will be. And when sufficient time has elapsed for the completion of the liquidation, all will be well with us again...

Milton Friedman stated that such "dangerous nonsense" was never taught at the University of Chicago, and that he understood why at Harvard University, where such nonsense was taught, bright young economists rejected their teachers' macroeconomics and became Keynesians. He wrote:

I think the Austrian business-cycle theory has done the world a great deal of harm. If you go back to the 1930s, which is a key point, here you had the Austrians sitting in London, Hayek and Lionel Robbins, and saying you just have to let the bottom drop out of the world. You've just got to let it cure itself. You can't do anything about it. You will only make it worse [...] I think by encouraging that kind of do-nothing policy both in Britain and in the United States, they did harm.

Economist Lawrence White, while acknowledging that Hayek and Robbins did not actively oppose the deflationary policy of the early 1930s, nevertheless challenges the argument of Milton Friedman, J. Bradford DeLong and others that Hayek was a proponent of liquidationism. White argues that the business cycle theory of Hayek and Robbins (which later developed into Austrian business cycle theory in its present-day form) was actually not consistent with a monetary policy that permitted a severe contraction of the money supply. Nevertheless, White says that at the time of the Great Depression Hayek "expressed ambivalence about the shrinking nominal income and sharp deflation in 1929–32". In a talk in 1975, Hayek admitted the mistake he had made over forty years earlier in not opposing the central bank's deflationary policy, and explained why he had been "ambivalent": "At that time I believed that a process of deflation of some short duration might break the rigidity of wages which I thought was incompatible with a functioning economy." In 1979, Hayek strongly criticized the Fed's contractionary monetary policy early in the Depression and its failure to offer banks liquidity:

I agree with Milton Friedman that once the Crash had occurred, the Federal Reserve System pursued a silly deflationary policy. I am not only against inflation but I am also against deflation. So, once again, a badly programmed monetary policy prolonged the depression.

Economic policy

Historians gave Hoover credit for working tirelessly to combat the depression and noted that he left government prematurely aged. But his policies are rated as simply not far-reaching enough to address the Great Depression. He was prepared to do something, but nowhere near enough. Hoover was no exponent of laissez-faire. But his principal philosophies were voluntarism, self-help, and rugged individualism. He refused direct federal intervention. He believed that government should do more than his immediate predecessors (Warren G. Harding, Calvin Coolidge) believed. But he was not willing to go as far as Franklin D. Roosevelt later did. Therefore, he is described as the "first of the new presidents" and "the last of the old".

Hoover's first measures relied on businesses voluntarily agreeing not to reduce their workforces or cut wages. But businesses had little choice: wages were reduced, workers were laid off, and investments were postponed. The Hoover administration extended over $100 million in emergency farm loans and some $915 million in public works projects between 1930 and 1932. Hoover urged bankers to set up the National Credit Corporation so that big banks could help failing banks survive. But bankers were reluctant to invest in failing banks, and the National Credit Corporation did almost nothing to address the problem. In 1932 Hoover reluctantly established the Reconstruction Finance Corporation, a Federal agency with the authority to lend up to $2 billion to rescue banks and restore confidence in financial institutions. But $2 billion was not enough to save all the banks, and bank runs and bank failures continued.

Federal spending

J. Bradford DeLong explained that Hoover would have been a budget cutter in normal times and continually wanted to balance the budget. For fully two and a half years after the Depression began, Hoover held the line against powerful political forces that sought to increase government spending. During the first two years of the Depression (1929 and 1930), Hoover actually achieved budget surpluses of about 0.8% of gross domestic product (GDP). In 1931, when the recession significantly worsened and GDP declined by 15%, the federal budget ran only a small deficit of 0.6% of GDP. It was not until 1932 (when GDP was 27% below its 1929 level) that Hoover pushed for measures that increased spending (the Reconstruction Finance Corporation, the Federal Home Loan Bank Act, and direct loans to fund state Depression relief programs). At the same time, however, he pushed for the Revenue Act of 1932, which massively increased taxes in order to balance the budget again.

Several economists argue that uncertainty was a major factor in the worsening and length of the depression. Economists Paul R. Flacco and Randall E. Parker also hold it responsible "for the initial decline in consumption that marks the" beginning of the Great Depression. Economist Ludwig Lachmann argues that pessimism prevented recovery and worsened the depression. President Hoover is said to have been blind to what was right in front of him.

Economist James Duesenberry argues that economic imbalance was a result not only of World War I but also of the structural changes made during the first quarter of the twentieth century. He also states that the branches of the nation's economy became smaller, that there was little demand for housing, and that the stock market crash "had a more direct impact on consumption than any previous financial panic".

Economist William A. Lewis describes the conflict between America and its primary producers:

Misfortunes [of the 1930s] were due principally to the fact that the production of primary commodities after the war was somewhat in excess of demand. It was this which, by keeping the terms of trade unfavourable to primary producers, kept the trade in manufactures so low, to the detriment of some countries as the United Kingdom, even in the twenties, and it was this which pulled the world economy down in the early thirties....If primary commodity markets had not been so insecure the crisis of 1929 would not have become a great depression....It was the violent fall of prices that was deflationary.

The stock market crash was not the first sign of the Great Depression. "Long before the crash, community banks were failing at the rate of one per day". The development of the Federal Reserve System misled investors in the 1920s into relying on federal banks as a safety net, encouraging them to keep buying stocks and to overlook any fluctuations. Economist Roger Babson tried to warn investors of the coming downturn, but was ridiculed even as the economy began to deteriorate during the summer of 1929. While England and Germany struggled under the strain on gold-backed currencies after the war, economists were blinded by an unsustainable 'new economy' that they believed to be stable and successful.

Once the United States stopped adhering to the gold standard, "the value of the dollar could change freely from day to day". Although this imbalance on an international scale led to crisis, the economy within the nation remained stable.

The depression then affected all nations on an international scale. "The German mark collapsed when the chancellor put domestic politics ahead of sensible finance; the bank of England abandoned the gold standard after a subsequent speculative attack; and the U.S. Federal Reserve raised its discount rate dramatically in October 1931 to preserve the value of the dollar". The Federal Reserve drove the American economy into an even deeper depression.

Tax policy

In 1929 the Hoover administration responded to the economic crisis by temporarily lowering the income tax rates and the corporate tax rate. At the beginning of 1931, tax returns showed a tremendous decline in income due to the economic downturn: income tax receipts were 40% lower than in 1930. At the same time, government spending proved to be considerably greater than estimated. As a result, the budget deficit increased tremendously. While Secretary of the Treasury Andrew Mellon urged a tax increase, Hoover had no desire to raise taxes because 1932 was an election year. In December 1931, hopes that the economic downturn would come to an end vanished, since all economic indicators pointed to a continuing downward trend. On January 7, 1932, Andrew Mellon announced that the Hoover administration would halt further growth in the public debt by raising taxes. On June 6, 1932, the Revenue Act of 1932 was signed into law.

Franklin D. Roosevelt (1933–45)

Roosevelt won the 1932 presidential election on a promise to promote recovery. He enacted a series of programs, including Social Security, banking reform, and suspension of the gold standard, collectively known as the New Deal.

The majority of historians and economists argue that the New Deal was beneficial to recovery. In a survey of economic historians conducted by Robert Whaples, professor of economics at Wake Forest University, anonymous questionnaires were sent to members of the Economic History Association. Members were asked whether they disagreed, agreed, or agreed with provisos with the following statement: "Taken as a whole, government policies of the New Deal served to lengthen and deepen the Great Depression." While only 6% of economic historians who worked in the history departments of their universities agreed with the statement, 27% of those who worked in economics departments agreed. Nearly identical shares of the two groups (21% and 22%) agreed with the statement "with provisos", while 74% of those who worked in history departments, and 51% of those in economics departments, disagreed with the statement outright.

Arguments that the New Deal was key to recovery

According to Peter Temin, Barry Wigmore, Gauti B. Eggertsson and Christina Romer, the primary impact of the New Deal on the economy, and the key to recovery and to ending the Great Depression, was the successful management of public expectations. Before the first New Deal measures, people expected a contractionary economic situation (recession, deflation) to persist. Roosevelt's fiscal and monetary policy regime change helped to make his policy objectives credible, and expectations shifted towards an expansionary development (economic growth, inflation). The expectation of higher future income and higher future inflation stimulated demand and investment. The analysis suggests that the elimination of the policy dogmas of the gold standard, a balanced budget and small government led to a large shift in expectations that accounts for about 70–80 percent of the recovery of output and prices from 1933 to 1937. Had the regime change not happened and Hoover's policies continued, the economy would have continued its free fall in 1933, and output would have been 30 percent lower in 1937 than in 1933.

Arguments for prolongation of the Great Depression

In the new classical macroeconomics view of the Great Depression, large negative shocks, including monetary, productivity, and banking shocks, caused the 1929–33 downturn, but these shocks turned positive after 1933 because of monetary and banking reform policies. According to the model Cole and Ohanian use, the main culprits for the prolonged depression were labor frictions and, perhaps to a lesser extent, productivity/efficiency frictions. Financial frictions are unlikely to have caused the prolonged slump.

The Cole-Ohanian model produces a slower-than-normal recovery, which they attribute to New Deal policies that they evaluated as tending towards monopoly and the redistribution of wealth. The key economic paper examining these diagnostic sources in relation to the Great Depression is Cole and Ohanian's work. Cole and Ohanian point to two New Deal policies: the National Industrial Recovery Act (NIRA) and the National Labor Relations Act (NLRA), the latter strengthening the NIRA's labor provisions. According to Cole and Ohanian, New Deal policies created cartelization, high wages, and high prices in at least manufacturing and some energy and mining industries. Roosevelt's policies against the severity of the Depression, such as the NIRA with its "code of fair competition" for each industry, were aimed at reducing cutthroat competition in a period of severe deflation, which was seen as the cause of lowered demand and employment. The NIRA suspended antitrust laws and permitted collusion in some sectors, provided that industry raised wages above the market-clearing level and accepted collective bargaining with labor unions. The effects of cartelization are the basic effects of monopoly: the cartelized firm produces too little, charges too high a price, and under-employs labor. Likewise, an increase in the power of unions creates a situation similar to monopoly: wages are pushed too high for union members, so the firm employs fewer people and produces less output. Cole and Ohanian estimate that 60% of the gap between trend and realized output is due to cartelization and unions. Chari, Kehoe, and McGrattan also present an exposition consistent with Cole and Ohanian's findings.
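The monopoly effect described above can be illustrated with a standard textbook calculation. This is only a sketch, and it is not Cole and Ohanian's model. It assumes a linear industry demand curve P = a − bQ with a > c ≥ 0 and b > 0, plus a constant marginal cost c. All of these symbols are hypothetical and introduced only for illustration. Under these assumptions, a cartel acting as a single monopolist produces half the competitive output and charges a higher price:

```latex
% Assumed (hypothetical) setup: inverse demand P(Q) = a - bQ, constant marginal cost c, with a > c >= 0, b > 0
\begin{align*}
\text{Competition } (P = c):\quad & Q_c = \frac{a - c}{b}, \qquad P_c = c \\
\text{Cartel } (MR = a - 2bQ = c):\quad & Q_m = \frac{a - c}{2b} = \tfrac{1}{2}\,Q_c, \qquad P_m = \frac{a + c}{2} > c
\end{align*}
```

Suppose, as a further simplifying assumption, that each unit of output requires a fixed amount of labor. Then employment under the cartel is also half the competitive level. This matches the qualitative claim that the cartelized firm produces too little, charges too much, and under-employs labor.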

This type of analysis faces numerous counterarguments, including doubts about whether equilibrium business cycle models apply to the Great Depression.