Rogue waves (also known as freak waves or killer waves) are large and unpredictable surface waves that can be extremely dangerous to ships and isolated structures such as lighthouses. They are distinct from tsunamis, which are long-wavelength waves caused by the displacement of water due to other phenomena (such as earthquakes) and are often almost unnoticeable in deep water. A rogue wave at the shore is sometimes called a sneaker wave.

In oceanography, rogue waves are more precisely defined as waves whose height is more than twice the significant wave height (Hs or SWH), which is itself defined as the mean of the largest third of waves in a wave record. Rogue waves do not appear to have a single distinct cause but occur where physical factors such as high winds and strong currents cause waves to merge to create a single large wave. Recent research suggests sea state crest-trough correlation leading to linear superposition may be a dominant factor in predicting the frequency of rogue waves.

Among other causes, studies of nonlinear waves such as the Peregrine soliton, and waves modeled by the nonlinear Schrödinger equation (NLS), suggest that modulational instability can create an unusual sea state where a "normal" wave begins to draw energy from other nearby waves, and briefly becomes very large. Such phenomena are not limited to water and are also studied in liquid helium, nonlinear optics, and microwave cavities. A 2012 study reported that in addition to the Peregrine soliton reaching up to about three times the height of the surrounding sea, a hierarchy of higher order wave solutions could also exist having progressively larger sizes and demonstrated the creation of a "super rogue wave" (a breather around five times higher than surrounding waves) in a water-wave tank.

A 2012 study supported the existence of oceanic rogue holes, the inverse of rogue waves, where the depth of the hole can reach more than twice the significant wave height. Although it is often claimed that rogue holes have never been observed in nature despite replication in wave tank experiments, there is a rogue hole recording from an oil platform in the North Sea, revealed in Kharif et al. The same source also reveals a recording of what is known as the 'Three Sisters'.

Background

Rogue waves are waves in open water that are much larger than surrounding waves. More precisely, rogue waves have a height which is more than twice the significant wave height (Hs or SWH). They can be caused when currents or winds cause waves to travel at different speeds, and the waves merge to create a single large wave; or when nonlinear effects cause energy to move between waves to create a single extremely large wave.
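The definition above can be illustrated with a minimal Python sketch. The function names and the twelve-wave record are hypothetical and chosen only for illustration; real wave records contain far more waves.

```python
def significant_wave_height(heights):
    """Mean of the largest third of the wave heights in a record (Hs)."""
    if not heights:
        raise ValueError("wave record is empty")
    ordered = sorted(heights, reverse=True)
    n_top = max(1, len(ordered) // 3)  # number of waves in the largest third
    return sum(ordered[:n_top]) / n_top


def rogue_waves(heights, factor=2.0):
    """Return Hs and every wave whose height exceeds factor * Hs."""
    hs = significant_wave_height(heights)
    return hs, [h for h in heights if h > factor * hs]


# Hypothetical record of crest-to-trough heights in metres:
# eleven ordinary waves and one outlier.
record = [2.0, 3.0, 2.0, 4.0, 3.0, 2.0, 3.0, 4.0, 2.0, 3.0, 2.0, 12.0]
hs, rogues = rogue_waves(record)
print(f"Hs = {hs:.2f} m, rogue waves: {rogues}")
```

In a record this short the outlier itself inflates Hs noticeably; in a record of hundreds or thousands of waves, a single extreme wave has much less influence on the average of the largest third.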

Once considered mythical and lacking hard evidence, rogue waves are now proven to exist and are known to be natural ocean phenomena. Eyewitness accounts from mariners and damage inflicted on ships have long suggested they occur. Still, the first scientific evidence of their existence came with the recording of a rogue wave by the Gorm platform in the central North Sea in 1984. A stand-out wave was detected with a wave height of 11 m (36 ft) in a relatively low sea state. However, what caught the attention of the scientific community was the digital measurement of a rogue wave at the Draupner platform in the North Sea on January 1, 1995; called the "Draupner wave", it had a recorded maximum wave height of 25.6 m (84 ft) and peak elevation of 18.5 m (61 ft). During that event, minor damage was inflicted on the platform far above sea level, confirming the accuracy of the wave-height reading made by a downwards pointing laser sensor.

The existence of rogue waves has since been confirmed by video and photographs, satellite imagery, radar of the ocean surface, stereo wave imaging systems, pressure transducers on the sea-floor, and oceanographic research vessels. In February 2000, a British oceanographic research vessel, the RRS Discovery, sailing in the Rockall Trough west of Scotland, encountered the largest waves ever recorded by any scientific instruments in the open ocean, with an SWH of 18.5 metres (61 ft) and individual waves up to 29.1 metres (95 ft). In 2004, scientists using three weeks of radar images from European Space Agency satellites found ten rogue waves, each 25 metres (82 ft) or higher.

A rogue wave is a natural ocean phenomenon that is not caused by land movement, only lasts briefly, occurs in a limited location, and most often happens far out at sea. Rogue waves are considered rare, but potentially very dangerous, since they can involve the spontaneous formation of massive waves far beyond the usual expectations of ship designers, and can overwhelm the usual capabilities of ocean-going vessels which are not designed for such encounters. Rogue waves are, therefore, distinct from tsunamis. Tsunamis are caused by a massive displacement of water, often resulting from sudden movements of the ocean floor, after which they propagate at high speed over a wide area. They are nearly unnoticeable in deep water and only become dangerous as they approach the shoreline and the ocean floor becomes shallower; therefore, tsunamis do not present a threat to shipping at sea (e.g., the only ships lost in the 2004 Asian tsunami were in port). Rogue waves are also different from the wave known as a "hundred-year wave", which is a purely statistical description of a particularly high wave with a 1% chance to occur in any given year in a particular body of water.
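The statistical meaning of a "hundred-year wave" can be made concrete with a short calculation. The sketch below assumes, purely for illustration, that each year is independent and has the same 1% chance; the function name is hypothetical.

```python
def prob_at_least_one(annual_probability, years):
    """Chance of at least one occurrence in `years` years.

    Assumes independent years with a constant annual probability,
    which is a simplification made for this illustration.
    """
    return 1.0 - (1.0 - annual_probability) ** years


p30 = prob_at_least_one(0.01, 30)    # e.g. a 30-year service life
p100 = prob_at_least_one(0.01, 100)  # a full century
print(f"30 years: {p30:.1%}, 100 years: {p100:.1%}")
```

Under these assumptions a "hundred-year wave" is far from certain to occur within any given century, and has a substantial chance of occurring within a few decades.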

Rogue waves have now been proven to cause the sudden loss of some ocean-going vessels. Well-documented instances include the freighter MS München, lost in 1978. Rogue waves have been implicated in the loss of other vessels, including the Ocean Ranger, a semisubmersible mobile offshore drilling unit that sank in Canadian waters on 15 February 1982. In 2007, the United States' National Oceanic and Atmospheric Administration (NOAA) compiled a catalogue of more than 50 historical incidents probably associated with rogue waves.

History of rogue wave knowledge

Early reports

In 1826, French scientist and naval officer Jules Dumont d'Urville reported waves as high as 33 m (108 ft) in the Indian Ocean with three colleagues as witnesses, yet he was publicly ridiculed by fellow scientist François Arago. In that era, the thought was widely held that no wave could exceed 9 m (30 ft). Author Susan Casey wrote that much of that disbelief came because there were very few people who had seen a rogue wave and survived; until the advent of steel double-hulled ships of the 20th century, "people who encountered 100-foot [30 m] rogue waves generally weren't coming back to tell people about it."

Pre-1995 research

Unusual waves have been studied scientifically for many years (for example, John Scott Russell's wave of translation, an 1834 study of a soliton wave). Still, these were not linked conceptually to sailors' stories of encounters with giant rogue ocean waves, as the latter were believed to be scientifically implausible.

Since the 19th century, oceanographers, meteorologists, engineers, and ship designers have used a statistical model known as the Gaussian function (or Gaussian Sea or standard linear model) to predict wave height, on the assumption that wave heights in any given sea are tightly grouped around a central value equal to the average of the largest third, known as the significant wave height (SWH). In a storm sea with an SWH of 12 m (39 ft), the model suggests that a wave higher than 15 m (49 ft) would hardly ever occur. It suggests that one of 30 m (98 ft) could indeed happen, but only once in 10,000 years. This basic assumption was well accepted, though acknowledged to be an approximation. Using a Gaussian form to model waves has been the sole basis of virtually every text on that topic for the past 100 years.

The first known scientific article on "freak waves" was written by Professor Laurence Draper in 1964. In that paper, he documented the efforts of the National Institute of Oceanography in the early 1960s to record wave height, and the highest wave recorded at that time, which was about 20 metres (67 ft). Draper also described freak wave holes.

Research on cross-swell waves and their contribution to rogue wave studies

Before the Draupner wave was recorded in 1995, early research had already made significant strides in understanding extreme wave interactions. In 1979, Dik Ludikhuize and Henk Jan Verhagen at TU Delft successfully generated cross-swell waves in a wave basin. Although only monochromatic waves could be produced at the time, their findings, reported in 1981, showed that individual wave heights could be added together even when exceeding breaker criteria. This phenomenon provided early evidence that waves could grow significantly larger than anticipated by conventional theories of wave breaking.

This work highlighted that in cases of crossing waves, wave steepness could increase beyond usual limits. Although the waves studied were not as extreme as rogue waves, the research provided an understanding of how multidirectional wave interactions could lead to extreme wave heights - a key concept in the formation of rogue waves. The crossing wave phenomenon studied in the Delft Laboratory therefore had direct relevance to the unpredictable rogue waves encountered at sea.

Research published in 2024 by TU Delft and other institutions has subsequently demonstrated that waves coming from multiple directions can grow up to four times steeper than previously imagined.

The 1995 Draupner wave

The Draupner wave (or New Year's wave) was the first rogue wave to be detected by a measuring instrument. The wave was recorded in 1995 at Unit E of the Draupner platform, a gas pipeline support complex located in the North Sea about 160 km (100 miles) southwest of the southern tip of Norway.

The rig was built to withstand a calculated 1-in-10,000-years wave with a predicted height of 20 m (64 ft) and was fitted with state-of-the-art sensors, including a laser rangefinder wave recorder on the platform's underside. At 3 pm on 1 January 1995, the device recorded a rogue wave with a maximum wave height of 25.6 m (84 ft). Peak elevation above still water level was 18.5 m (61 ft). The reading was confirmed by the other sensors. The platform sustained minor damage in the event.

In the area, the SWH at the time was about 12 m (39 ft), so the Draupner wave was more than twice as tall and steep as its neighbors, with characteristics that fell outside any known wave model. The wave caused enormous interest in the scientific community.

Subsequent research

Following the evidence of the Draupner wave, research in the area became widespread.

The first scientific study to comprehensively prove the existence of freak waves that lie clearly outside the range of Gaussian waves was published in 1997. Some research confirms that the observed wave-height distribution generally follows the Rayleigh distribution well; in shallow waters during high-energy events, however, extremely high waves are rarer than this particular model predicts. From about 1997, most leading authors acknowledged the existence of rogue waves with the caveat that wave models could not replicate rogue waves.

Statoil researchers presented a paper in 2000, collating evidence that freak waves were not the rare realizations of a typical or slightly non-Gaussian sea surface population (classical extreme waves) but were the typical realizations of a rare and strongly non-Gaussian sea surface population of waves (freak extreme waves). Leading researchers from around the world attended the first Rogue Waves 2000 workshop, held in Brest in November 2000.

In 2000, British oceanographic vessel RRS Discovery recorded a 29 m (95 ft) wave off the coast of Scotland near Rockall. This was a scientific research vessel fitted with high-quality instruments. Subsequent analysis determined that under severe gale-force conditions with wind speeds averaging 21 metres per second (41 kn), a ship-borne wave recorder measured individual waves up to 29.1 m (95.5 ft) from crest to trough, and a maximum SWH of 18.5 m (60.7 ft). These were some of the largest waves recorded by scientific instruments up to that time. The authors noted that modern wave prediction models are known to significantly under-predict extreme sea states for waves with a significant height (Hs) above 12 m (39.4 ft). The analysis of this event took a number of years and noted that "none of the state-of-the-art weather forecasts and wave models— the information upon which all ships, oil rigs, fisheries, and passenger boats rely— had predicted these behemoths." In simple terms, a scientific model (and also ship design method) to describe the waves encountered did not exist. This finding was widely reported in the press, which reported that "according to all of the theoretical models at the time under this particular set of weather conditions, waves of this size should not have existed".

In 2004, the ESA MaxWave project identified more than 10 individual giant waves above 25 m (82 ft) in height during a short survey period of three weeks in a limited area of the South Atlantic. By 2007, it was further proven via satellite radar studies that waves with crest-to-trough heights of 20 to 30 m (66 to 98 ft) occur far more frequently than previously thought. Rogue waves are now known to occur in all of the world's oceans many times each day.

Rogue waves are now accepted as a common phenomenon. Professor Akhmediev of the Australian National University has stated that 10 rogue waves exist in the world's oceans at any moment. Some researchers have speculated that roughly three of every 10,000 waves on the oceans achieve rogue status, yet in certain spots, such as coastal inlets and river mouths, these extreme waves can make up three of every 1,000 waves, because wave energy can be focused.
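For comparison with the figure of roughly three in every 10,000 waves, the Rayleigh distribution mentioned earlier gives a simple closed-form estimate. The sketch below assumes the standard narrow-band linear result P(H > h) = exp(−2h²/Hs²), which treats Hs as four times the standard deviation of the surface elevation; this is an idealization, and it is not claimed to be the basis of the estimates quoted above.

```python
import math


def rayleigh_exceedance(ratio):
    """P(H > ratio * Hs) for Rayleigh-distributed wave heights.

    Assumes a narrow-band linear sea with Hs = 4 * (std. dev. of elevation).
    """
    return math.exp(-2.0 * ratio ** 2)


p_rogue = rayleigh_exceedance(2.0)  # probability that a wave exceeds 2 * Hs
print(f"Waves exceeding 2 Hs: {p_rogue * 10_000:.1f} per 10,000")

for ratio in (2.0, 2.5, 3.0):
    p = rayleigh_exceedance(ratio)
    print(f"H > {ratio} Hs: about 1 wave in {1 / p:,.0f}")
```

Under this assumption, waves exceeding twice Hs make up a little more than three in every 10,000, the same order of magnitude as the open-ocean figure, while waves approaching three times Hs become extremely rare.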

Rogue waves may also occur in lakes. A phenomenon known as the "Three Sisters" is said to occur in Lake Superior when a series of three large waves forms. The second wave hits the ship's deck before the first wave clears. The third incoming wave adds to the two accumulated backwashes and suddenly overloads the ship deck with large amounts of water. The phenomenon is one of various theorized causes of the sinking of the SS Edmund Fitzgerald on Lake Superior in November 1975.

A 2012 study reported that in addition to the Peregrine soliton reaching up to about 3 times the height of the surrounding sea, a hierarchy of higher order wave solutions could also exist having progressively larger sizes, and demonstrated the creation of a "super rogue wave" (a breather around 5 times higher than surrounding waves) in a water tank. Also in 2012, researchers at the Australian National University proved the existence of "rogue wave holes", an inverted profile of a rogue wave. Their research created rogue wave holes on the water surface in a water-wave tank. In maritime folklore, stories of rogue holes are as common as stories of rogue waves. They had followed from theoretical analysis but had never been proven experimentally.

"Rogue wave" has become a near-universal term used by scientists to describe isolated, large-amplitude waves that occur more frequently than expected for normal, Gaussian-distributed, statistical events. Rogue waves appear ubiquitous and are not limited to the oceans. They appear in other contexts and have recently been reported in liquid helium, nonlinear optics, and microwave cavities. Marine researchers now universally accept that these waves belong to a specific kind of sea wave, not considered by conventional models for sea wind waves. A 2015 paper studied the wave behavior around rogue waves, including optical rogue waves and the Draupner wave, and concluded, "rogue events do not necessarily appear without warning but are often preceded by a short phase of relative order".

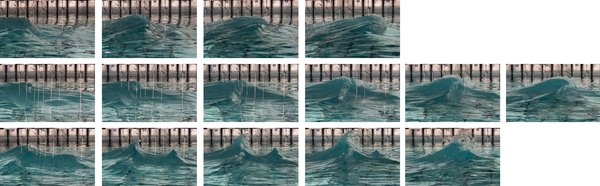

In 2019, researchers succeeded in producing a wave with similar characteristics to the Draupner wave (steepness and breaking), and proportionately greater height, using multiple wavetrains meeting at an angle of 120°. Previous research had strongly suggested that the wave resulted from an interaction between waves from different directions ("crossing seas"). Their research also highlighted that wave-breaking behavior was not necessarily as expected. If waves met at an angle less than about 60°, then the top of the wave "broke" sideways and downwards (a "plunging breaker"). Still, from about 60° and greater, the wave began to break vertically upwards, creating a peak that did not reduce the wave height as usual but instead increased it (a "vertical jet"). They also showed that the steepness of rogue waves could be reproduced in this manner. Lastly, they observed that optical instruments such as the laser used for the Draupner wave might be somewhat confused by the spray at the top of the wave if it broke, and this could lead to uncertainties of around 1.0 to 1.5 m (3 to 5 ft) in the wave height. They concluded, "... the onset and type of wave breaking play a significant role and differ significantly for crossing and noncrossing waves. Crucially, breaking becomes less crest-amplitude limiting for sufficiently large crossing angles and involves the formation of near-vertical jets".

- In the first row (0°), the crest breaks horizontally and plunges, limiting the wave size.

- In the middle row (60°), somewhat upward-lifted breaking behavior occurs.

- In the third row (120°), described as the most accurate simulation achieved of the Draupner wave, the wave breaks upward, as a vertical jet, and the wave crest height is not limited by breaking.

Extreme rogue wave events

On 17 November 2020, a buoy moored in 45 metres (148 ft) of water on Amphitrite Bank in the Pacific Ocean 7 kilometres (4.3 mi; 3.8 nmi) off Ucluelet, Vancouver Island, British Columbia, Canada, at 48.9°N 125.6°W recorded a lone 17.6-metre (58 ft) tall wave among surrounding waves about 6 metres (20 ft) in height. The wave exceeded the surrounding significant wave heights by a factor of 2.93. When the wave's detection was revealed to the public in February 2022, one scientific paper and many news outlets christened the event as "the most extreme rogue wave event ever recorded" and a "once-in-a-millennium" event, claiming that at about three times the height of the waves around it, the Ucluelet wave set a record as the most extreme rogue wave ever recorded at the time in terms of its height in proportion to surrounding waves, and that a wave three times the height of those around it was estimated to occur on average only once every 1,300 years worldwide.

The Ucluelet event generated controversy. Analysis of scientific papers dealing with rogue wave events since 2005 revealed the claims for the record-setting nature and rarity of the wave to be incorrect. The paper Oceanic rogue waves by Dysthe, Krogstad and Muller reports on an event in the Black Sea in 2004 which was far more extreme than the Ucluelet wave, where the Datawell Waverider buoy reported a wave whose height was 10.32 metres (33.86 ft), or 3.91 times the significant wave height, as detailed in the paper. Thorough inspection of the buoy after the recording revealed no malfunction. The authors of the paper that reported the Black Sea event assessed the wave as "anomalous" and suggested several theories on how such an extreme wave may have arisen. The Black Sea event differs in the fact that it, unlike the Ucluelet wave, was recorded with a high-precision instrument. The Oceanic rogue waves paper also reports even more extreme waves from a different source, but these were possibly overestimated, as assessed by the data's own authors. The Black Sea wave occurred in relatively calm weather.

Furthermore, a paper by I. Nikolkina and I. Didenkulova also reveals waves more extreme than the Ucluelet wave. In the paper, they infer that in 2006 a 21-metre (69 ft) wave appeared in the Pacific Ocean off the Port of Coos Bay, Oregon, with a significant wave height of 3.9 metres (13 ft). The ratio is 5.38, almost twice that of the Ucluelet wave. The paper also reveals the MV Pont-Aven incident as marginally more extreme than the Ucluelet event. The paper also assesses a report of an 11-metre (36 ft) wave in a significant wave height of 1.9 metres (6 ft 3 in), but the authors cast doubt on that claim. A paper written by Craig B. Smith in 2007 reported on an incident in the North Atlantic, in which the submarine 'Grouper' was hit by a 30-meter wave in calm seas.
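The ratios discussed in this section follow directly from the reported heights. The short sketch below recomputes them from the figures quoted in this article (the Draupner and Ucluelet background values are approximate, as stated in the text); the function and variable names are hypothetical.

```python
def height_ratio(wave_height_m, significant_height_m):
    """Ratio of an individual wave height to the significant wave height."""
    if significant_height_m <= 0:
        raise ValueError("significant wave height must be positive")
    return wave_height_m / significant_height_m


# (wave height in m, significant or surrounding wave height in m), as quoted in the text.
reported = {
    "Draupner wave (1995)": (25.6, 12.0),
    "Ucluelet wave (2020)": (17.6, 6.0),
    "Coos Bay wave (2006)": (21.0, 3.9),
}

ratios = {name: height_ratio(h, hs) for name, (h, hs) in reported.items()}
for name, ratio in ratios.items():
    status = "exceeds" if ratio > 2.0 else "does not exceed"
    print(f"{name}: H/Hs = {ratio:.2f} ({status} the 2 x Hs threshold)")
```

The computed values agree with the factors of 2.93 and 5.38 given above for the Ucluelet and Coos Bay waves.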

Causes

Because the phenomenon of rogue waves is still a matter of active research, clearly stating what the most common causes are or whether they vary from place to place is premature. The areas of highest predictable risk appear to be where a strong current runs counter to the primary direction of travel of the waves; the area near Cape Agulhas off the southern tip of Africa is one such area, where the warm Agulhas Current runs to the southwest while the dominant winds are westerlies. Since this thesis does not explain the existence of all waves that have been detected, however, several different mechanisms are likely, with localized variation. Suggested mechanisms for freak waves include:

- Diffractive focusing

- According to this hypothesis, coast shape or seabed shape directs several small waves to meet in phase. Their crest heights combine to create a freak wave.

- Focusing by currents

- Waves from one current are driven into an opposing current. This results in shortening of wavelength, causing shoaling (i.e., increase in wave height), and oncoming wave trains to compress together into a rogue wave. This happens off the South African coast, where the Agulhas Current is countered by westerlies.

- Nonlinear effects (modulational instability)

- A rogue wave may occur by natural, nonlinear processes from a random background of smaller waves. In such a case, it is hypothesized, an unusual, unstable wave type may form, which "sucks" energy from other waves, growing to a near-vertical monster itself, before becoming too unstable and collapsing shortly thereafter. One simple model for this is a wave equation known as the nonlinear Schrödinger equation (NLS), in which a normal and perfectly accountable (by the standard linear model) wave begins to "soak" energy from the waves immediately fore and aft, reducing them to minor ripples compared to other waves. The NLS can be used in deep-water conditions. In shallow water, waves are described by the Korteweg–de Vries equation or the Boussinesq equation. These equations also have nonlinear contributions and show solitary-wave solutions. The terms soliton (a type of self-reinforcing wave) and breather (a wave where energy concentrates in a localized and oscillatory fashion) are used for some of these waves, including the well-studied Peregrine soliton. Studies show that nonlinear effects could arise in bodies of water. A small-scale rogue wave consistent with the NLS (the Peregrine soliton) was produced in a laboratory water-wave tank in 2011.

- Normal part of the wave spectrum

- Some studies argue that many waves classified as rogue waves (with the sole condition that they exceed twice the SWH) are not freaks but just rare, random samples of the wave height distribution, and are, as such, statistically expected to occur at a rate of about one rogue wave every 28 hours. This is commonly discussed as the question "Freak Waves: Rare Realizations of a Typical Population Or Typical Realizations of a Rare Population?" According to this hypothesis, most real-world encounters with huge waves can be explained by linear wave theory (or weakly nonlinear modifications thereof), without the need for special mechanisms like the modulational instability. Recent studies analyzing billions of wave measurements by wave buoys demonstrate that rogue wave occurrence rates in the ocean can be explained with linear theory when the finite spectral bandwidth of the wave spectrum is taken into account. However, whether weakly nonlinear dynamics can explain even the largest rogue waves (such as those exceeding three times the significant wave height, which would be exceedingly rare in linear theory) is not yet known. This has also led to criticism questioning whether defining rogue waves using only their relative height is meaningful in practice.

- Constructive interference of elementary waves

- Rogue waves can result from the constructive interference (dispersive and directional focusing) of elementary three-dimensional waves enhanced by nonlinear effects.

- Wind wave interactions

- While wind alone is unlikely to generate a rogue wave, its effect combined with other mechanisms may provide a fuller explanation of freak wave phenomena. As the wind blows over the ocean, energy is transferred to the sea surface. When strong winds from a storm blow in the ocean current's opposing direction, the forces might be strong enough to generate rogue waves randomly. Theories of instability mechanisms for the generation and growth of wind waves – although not on the causes of rogue waves – are provided by Phillips and Miles.
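The Peregrine soliton mentioned under nonlinear effects has a closed-form expression, which makes its threefold amplification easy to check numerically. The sketch below assumes the dimensionless focusing NLS in the form iψ_t + ½ψ_xx + |ψ|²ψ = 0 with a background of unit amplitude; the grid spacing and extent are arbitrary choices made for illustration.

```python
import cmath


def peregrine(x, t):
    """Peregrine soliton of i*psi_t + 0.5*psi_xx + |psi|^2 * psi = 0.

    Dimensionless form with a plane-wave background of amplitude 1.
    """
    rational = 1.0 - 4.0 * (1.0 + 2.0j * t) / (1.0 + 4.0 * x * x + 4.0 * t * t)
    return rational * cmath.exp(1j * t)


# Scan a grid in space and time for the largest envelope amplitude.
xs = [i * 0.05 for i in range(-200, 201)]  # x from -10 to 10
ts = [j * 0.05 for j in range(-100, 101)]  # t from -5 to 5
peak, x_peak, t_peak = max((abs(peregrine(x, t)), x, t) for t in ts for x in xs)

background = abs(peregrine(1000.0, 0.0))   # far from the peak
print(f"peak |psi| = {peak:.3f} at x = {x_peak}, t = {t_peak}")
print(f"background |psi| = {background:.6f}")
```

The envelope reaches three times the background amplitude at a single point in space and time and relaxes back to the background elsewhere, which is why this solution is used as a prototype of a wave that appears and then disappears.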

The spatiotemporal focusing seen in the NLS equation can also occur when the non-linearity is removed. In this case, focusing is primarily due to different waves coming into phase rather than any energy-transfer processes. Further analysis of rogue waves using a fully nonlinear model by R. H. Gibbs (2005) brings this mode into question, as it is shown that a typical wave group focuses in such a way as to produce a significant wall of water at the cost of a reduced height.
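Purely linear focusing of this kind can be sketched as a sum of sinusoidal components that obey the deep-water dispersion relation ω² = gk and whose phases are chosen so that all crests coincide at one point and time. The wavelengths, amplitudes, and times below are hypothetical values chosen for illustration.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def elevation(x, t, components):
    """Linear superposition of deep-water waves (omega^2 = g * k)."""
    return sum(
        a * math.cos(k * x - math.sqrt(G * k) * t + phase)
        for a, k, phase in components
    )


# Hypothetical wave group: eight components of 1 m amplitude with
# wavelengths from 80 m to 150 m, all in phase at x = 0, t = 0.
wavelengths = [80.0 + 10.0 * i for i in range(8)]
focused = [(1.0, 2.0 * math.pi / wavelength, 0.0) for wavelength in wavelengths]

crest_at_focus = elevation(0.0, 0.0, focused)   # all crests coincide
crest_earlier = elevation(0.0, -60.0, focused)  # same point, 60 s before focus
print(f"elevation at focus: {crest_at_focus:.2f} m")
print(f"elevation 60 s earlier: {crest_earlier:.2f} m")
```

At the focus the elevation equals the sum of the component amplitudes; because the components travel at different speeds, they drift out of phase away from that instant, and no energy transfer between components is involved.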

A rogue wave, and the deep trough commonly seen before and after it, may last only for some minutes before either breaking or reducing in size again. Rather than occurring singly, a rogue wave may be part of a wave packet consisting of a few rogue waves. Such rogue wave groups have been observed in nature.

Research efforts

A number of research programmes focused on rogue waves are currently underway or have concluded, including:

- In the course of Project MaxWave, researchers from the GKSS Research Centre, using data collected by ESA satellites, identified a large number of radar signatures that have been portrayed as evidence for rogue waves. Further research is underway to develop better methods of translating the radar echoes into sea surface elevation, but at present this technique is not proven.

- The Australian National University, working in collaboration with Hamburg University of Technology and the University of Turin, has been conducting experiments in nonlinear dynamics to try to explain rogue or killer waves. The "Lego Pirate" video has been widely used and quoted to describe what they call "super rogue waves", which their research suggests can be up to five times bigger than the other waves around them.

- The European Space Agency continues to do research into rogue waves by radar satellite.

- The United States Naval Research Laboratory, the science arm of the Navy and Marine Corps, published results of its modelling work in 2015.

- Research in this field at the Massachusetts Institute of Technology (MIT) is ongoing. Two researchers there, partially supported by the Naval Engineering Education Consortium (NEEC), considered the problem of short-term prediction of rare, extreme water waves and developed and published their research on a predictive tool of about 25 wave periods. This tool can give ships and their crews a two- to three-minute warning of a potentially catastrophic impact, allowing crew some time to shut down essential operations on a ship (or offshore platform). The authors cite landing on an aircraft carrier as a prime example.

- The University of Colorado and the University of Stellenbosch

- Kyoto University

- Swinburne University of Technology in Australia recently published work on the probabilities of rogue waves.

- The University of Oxford Department of Engineering Science published a comprehensive review of the science of rogue waves in 2014. In 2019, a team from the Universities of Oxford and Edinburgh recreated the Draupner wave in a lab.

- University of Western Australia

- Tallinn University of Technology in Estonia

- Extreme Seas Project funded by the EU.

- At Umeå University in Sweden, a research group in August 2006 showed that normal stochastic wind-driven waves can suddenly give rise to monster waves. The nonlinear evolution of the instabilities was investigated by means of direct simulations of the time-dependent system of nonlinear equations.

- The Great Lakes Environmental Research Laboratory did research in 2002, which dispelled the long-held contentions that rogue waves were of rare occurrence.

- The University of Oslo has conducted research into crossing sea state and rogue wave probability during the Prestige accident; nonlinear wind-waves, their modification by tidal currents, and application to Norwegian coastal waters; general analysis of realistic ocean waves; modelling of currents and waves for sea structures and extreme wave events; rapid computations of steep surface waves in three dimensions, and comparison with experiments; and very large internal waves in the ocean.

- The National Oceanography Centre in the United Kingdom

- Scripps Institution of Oceanography in the United States

- Ritmare project in Italy.

- University of Copenhagen and University of Victoria

Other media

Researchers at UCLA observed rogue-wave phenomena in microstructured optical fibers near the threshold of soliton supercontinuum generation and characterized the initial conditions for generating rogue waves in any medium. Research in optics has pointed out the role played by a Peregrine soliton that may explain those waves that appear and disappear without leaving a trace.

Rogue waves in other media appear to be ubiquitous and have also been reported in liquid helium, in quantum mechanics, in nonlinear optics, in microwave cavities, in Bose–Einstein condensate, in heat and diffusion, and in finance.

Reported encounters

Many of these encounters are reported only in the media, and are not examples of open-ocean rogue waves. Often, in popular culture, an endangering huge wave is loosely denoted as a "rogue wave", while the case has not been established that the reported event is a rogue wave in the scientific sense – i.e. of a very different nature in characteristics from the surrounding waves in that sea state and with a very low probability of occurrence.

This section lists a limited selection of notable incidents.

19th century

- Eagle Island lighthouse (1861) – Water broke the glass of the structure's east tower and flooded it, implying a wave that surmounted the 40 m (130 ft) cliff and overwhelmed the 26 m (85 ft) tower.

- Flannan Isles Lighthouse (1900) – Three lighthouse keepers vanished after a storm that resulted in wave-damaged equipment being found 34 m (112 ft) above sea level.

20th century

- SS Kronprinz Wilhelm, September 18, 1901 – The most modern German ocean liner of its time (winner of the Blue Riband) was damaged on its maiden voyage from Cherbourg to New York by a huge wave. The wave struck the ship head-on.

- RMS Lusitania (1910) – On the night of 10 January 1910, a 23 m (75 ft) wave struck the ship over the bow, damaging the forecastle deck and smashing the bridge windows.

- Voyage of the James Caird (1916) – Sir Ernest Shackleton encountered a wave he termed "gigantic" while piloting a lifeboat from Elephant Island to South Georgia.

- USS Memphis, August 29, 1916 – An armored cruiser, formerly known as the USS Tennessee, wrecked while stationed in the harbor of Santo Domingo, with 43 men killed or lost, by a succession of three waves, the largest estimated at 70 feet.

- RMS Homeric (1924) – Hit by a 24 m (80 ft) wave while sailing through a hurricane off the East Coast of the United States, injuring seven people, smashing numerous windows and portholes, carrying away one of the lifeboats, and snapping chairs and other fittings from their fastenings.

- USS Ramapo (1933) – Triangulated at 34 m (112 ft).

- RMS Queen Mary (1942) – Broadsided by a 28 m (92 ft) wave and listed briefly about 52° before slowly righting.

- SS Michelangelo (1966) – A wave tore a hole in the superstructure and smashed heavy glass 24 m (80 ft) above the waterline; three people died.

- SS Edmund Fitzgerald (1975) – Lost on Lake Superior. A Coast Guard report blamed water entry through the hatches, which gradually filled the hold, or errors in navigation or charting that caused damage from running onto shoals. However, another nearby ship, the SS Arthur M. Anderson, was hit at a similar time by two rogue waves and possibly a third, and this appeared to coincide with the sinking around 10 minutes later.

- MS München (1978) – Lost at sea, leaving only scattered wreckage and signs of sudden damage, including evidence of extreme forces 20 m (66 ft) above the waterline. Although more than one wave was probably involved, this remains the sinking most likely to have been caused by a freak wave.

- Esso Languedoc (1980) – A 25-to-30 m (80-to-100 ft) wave washed across the deck from the stern of the French supertanker near Durban, South Africa.

- Fastnet Lighthouse (1985) – Struck by a 48 m (157 ft) wave.

- Draupner wave (North Sea, 1995) – The first rogue wave confirmed with scientific evidence; it had a maximum height of 26 m (85 ft).

- Queen Elizabeth 2 (1995) – Encountered a 29 m (95 ft) wave in the North Atlantic, during Hurricane Luis. The master said it "came out of the darkness" and "looked like the White Cliffs of Dover." Newspaper reports at the time described the cruise liner as attempting to "surf" the near-vertical wave in order not to be sunk.

21st century

- U.S. Naval Research Laboratory ocean-floor pressure sensors detected a freak wave caused by Hurricane Ivan in the Gulf of Mexico, 2004. The wave was around 27.7 m (91 ft) high from peak to trough, and around 200 m (660 ft) long. Their computer models also indicated that waves may have exceeded 40 metres (130 ft) in the eyewall.

- Aleutian Ballad (Bering Sea, 2005) – Footage of what is identified as an 18 m (60 ft) wave appears in an episode of Deadliest Catch. The wave strikes the ship at night and cripples the vessel, causing the boat to tip for a short period onto its side. This is one of the few video recordings of what might be a rogue wave.

- In 2006, researchers from the U.S. Naval Institute theorized that rogue waves may be responsible for the unexplained loss of low-flying aircraft, such as U.S. Coast Guard helicopters during search-and-rescue missions.

- MS Louis Majesty (Mediterranean Sea, March 2010) – Struck by three successive 8 m (26 ft) waves while crossing the Gulf of Lion on a Mediterranean cruise between Cartagena and Marseille. Two passengers were killed by flying glass when the second and third waves shattered a lounge window. The waves, which struck without warning, were all abnormally high relative to the sea swell at the time of the incident.

- On 28 December 2011, the Sea Shepherd vessel MV Brigitte Bardot was damaged by a rogue wave of 11 m (36.1 ft) while pursuing the Japanese whaling fleet off the western coast of Australia. The main hull was cracked, and the port side pontoon had to be held together by straps. The MV Brigitte Bardot was escorted back to Fremantle by the SSCS flagship, MV Steve Irwin, and arrived at Fremantle Harbor on 5 January 2012. Both ships were followed by the ICR security vessel MV Shōnan Maru 2 at a distance of 5 nautical miles (9 km).

- In 2019, Hurricane Dorian's extratropical remnant generated a 30 m (100 ft) rogue wave off the coast of Newfoundland.

- In 2022, the Viking cruise ship Viking Polaris was hit by a rogue wave on its way to Ushuaia, Argentina. One person died, four more were injured, and the ship's scheduled route to Antarctica was canceled.

Quantifying the impact of rogue waves on ships

The loss of the MS München in 1978 provided some of the first physical evidence of the existence of rogue waves. München was a state-of-the-art cargo ship with multiple water-tight compartments and an expert crew. She was lost with all crew, and the wreck has never been found. The only evidence found was the starboard lifeboat, recovered from floating wreckage sometime later. The lifeboats hung from forward and aft blocks 20 m (66 ft) above the waterline. The pins had been bent back from forward to aft, indicating that the lifeboat hanging below them had been struck by a wave that had run from fore to aft of the ship and had torn the lifeboat from the ship. To exert such force, the wave must have been considerably higher than 20 m (66 ft). At the time of the inquiry, the existence of rogue waves was considered so statistically unlikely as to be near impossible. Consequently, the Maritime Court investigation concluded that the severe weather had somehow created an "unusual event" that had led to the sinking of the München.

In 1980, the MV Derbyshire was lost during Typhoon Orchid south of Japan, along with all of her crew. The Derbyshire was an ore-bulk-oil combination carrier built in 1976. At 91,655 gross register tons, she was, and remains, the largest British ship ever lost at sea. The wreck was found in June 1994. The survey team deployed a remotely operated vehicle to photograph the wreck. A private report published in 1998 prompted the British government to reopen a formal investigation into the sinking. The investigation included a comprehensive survey by the Woods Hole Oceanographic Institution, which took 135,774 pictures of the wreck during two surveys. The formal forensic investigation concluded that the ship sank because of structural failure and absolved the crew of any responsibility. Most notably, the report determined the detailed sequence of events that led to the structural failure of the vessel. A third comprehensive analysis was subsequently done by Douglas Faulkner, professor of marine architecture and ocean engineering at the University of Glasgow. His 2001 report linked the loss of the Derbyshire with the emerging science on freak waves, concluding that the Derbyshire was almost certainly destroyed by a rogue wave.

Work by sailor and author Craig B. Smith in 2007 confirmed prior forensic work by Faulkner in 1998 and determined that the Derbyshire was exposed to a hydrostatic pressure of a "static head" of water of about 20 m (66 ft) with a resultant static pressure of 201 kilopascals (2.01 bar; 29.2 psi). This is in effect 20 m (66 ft) of seawater (possibly a super rogue wave) flowing over the vessel. The deck cargo hatches on the Derbyshire were determined to be the key point of failure when the rogue wave washed over the ship. The design of the hatches only allowed for a static pressure less than 2 m (6.6 ft) of water or 17.1 kPa (0.171 bar; 2.48 psi), meaning that the typhoon load on the hatches was more than 10 times the design load. The forensic structural analysis of the wreck of the Derbyshire is now widely regarded as irrefutable.
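The static-head figures above follow from the hydrostatic relation p = ρgh. The short sketch below reproduces them; the seawater density of 1,025 kg/m3 and gravity of 9.81 m/s2 are assumed typical values, not figures taken from Smith's analysis, and the function names are illustrative only.

```python
# Hydrostatic pressure of a static head of seawater: p = rho * g * h.
# RHO_SEAWATER and G are assumed typical values, not taken from the source.
RHO_SEAWATER = 1025.0  # kg/m^3
G = 9.81               # m/s^2


def static_pressure_kpa(head_m: float) -> float:
    """Pressure in kPa exerted by a static column of seawater head_m metres deep."""
    return RHO_SEAWATER * G * head_m / 1000.0


def head_for_pressure_m(pressure_kpa: float) -> float:
    """Depth of seawater in metres that produces the given static pressure."""
    return pressure_kpa * 1000.0 / (RHO_SEAWATER * G)


# 20 m static head quoted for the Derbyshire.
wave_load_kpa = static_pressure_kpa(20.0)

# Hatch design pressure quoted in the text.
design_load_kpa = 17.1
design_head_m = head_for_pressure_m(design_load_kpa)

# Ratio of the estimated load to the hatch design load.
load_ratio = wave_load_kpa / design_load_kpa

print(f"20 m head: {wave_load_kpa:.1f} kPa")
print(f"17.1 kPa corresponds to {design_head_m:.2f} m of seawater")
print(f"load / design load: {load_ratio:.1f}")
```

Under these assumptions, a 20 m head gives about 201 kPa, the 17.1 kPa design pressure corresponds to a head of roughly 1.7 m (consistent with "less than 2 m"), and the load is close to 12 times the design value, in line with the "more than 10 times" stated above.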

In addition, fast-moving waves are now known to also exert extremely high dynamic pressure. Plunging or breaking waves are known to cause short-lived impulse pressure spikes called Gifle peaks. These can reach pressures of 200 kPa (2.0 bar; 29 psi) (or more) for milliseconds, which is sufficient pressure to lead to brittle fracture of mild steel. Evidence of failure by this mechanism was also found on the Derbyshire. Smith documented scenarios where hydrodynamic pressure up to 5,650 kPa (56.5 bar; 819 psi) or over 500 metric tonnes/m2 could occur.

In 2004, an extreme wave was recorded impacting the Alderney Breakwater, Alderney, in the Channel Islands. This breakwater is exposed to the Atlantic Ocean. The peak pressure recorded by a shore-mounted transducer was 745 kPa (7.45 bar; 108.1 psi). This pressure far exceeds almost any design criteria for modern ships, and this wave would have destroyed almost any merchant vessel.

Design standards

In November 1997, the International Maritime Organization (IMO) adopted new rules covering survivability and structural requirements for bulk carriers of 150 m (490 ft) and upwards. The bulkhead and double bottom must be strong enough to allow the ship to survive flooding in hold one unless loading is restricted.

Rogue waves present considerable danger for several reasons: they are rare, unpredictable, may appear suddenly or without warning, and can impact with tremendous force. A 12 m (39 ft) wave in the usual "linear" model would have a breaking force of 6 metric tons per square metre [t/m2] (8.5 psi). Although modern ships are typically designed to tolerate a breaking wave of 15 t/m2, a rogue wave can dwarf both of these figures with a breaking force far exceeding 100 t/m2. Smith presented calculations using the International Association of Classification Societies (IACS) Common Structural Rules for a typical bulk carrier.
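To compare these breaking-force figures with the pressures quoted elsewhere in kPa and psi, the values in t/m2 can be converted. The sketch below assumes that "t/m2" means tonnes-force per square metre (the weight of one metric tonne under standard gravity spread over one square metre); that interpretation and the function names are assumptions made for illustration.

```python
# Convert a load in tonnes-force per square metre to kPa and psi.
# Assumption: "t/m2" is read as tonne-force per square metre.
G_STANDARD = 9.80665   # m/s^2, standard gravity
PA_PER_PSI = 6894.757  # pascals in one pound-force per square inch


def tonnes_per_m2_to_kpa(load_t_m2: float) -> float:
    """1 t/m2 = 1000 kg * g per m^2 = 9806.65 Pa = 9.80665 kPa."""
    return load_t_m2 * 1000.0 * G_STANDARD / 1000.0


def kpa_to_psi(pressure_kpa: float) -> float:
    """Convert kilopascals to pounds-force per square inch."""
    return pressure_kpa * 1000.0 / PA_PER_PSI


# Figures quoted in the text: 12 m linear-model wave, typical design
# tolerance, and the lower bound given for a rogue wave.
for label, load in [("12 m wave", 6.0), ("design tolerance", 15.0), ("rogue wave", 100.0)]:
    kpa = tonnes_per_m2_to_kpa(load)
    print(f"{label}: {load:g} t/m2 = {kpa:.1f} kPa = {kpa_to_psi(kpa):.1f} psi")
```

With this reading, 6 t/m2 is about 59 kPa (8.5 psi, matching the figure above), 15 t/m2 is about 147 kPa, and 100 t/m2 is about 981 kPa.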

Peter Challenor, a scientist from the National Oceanography Centre in the United Kingdom, was quoted in Casey's book in 2010 as saying: "We don’t have that random messy theory for nonlinear waves. At all." He added, "People have been working actively on this for the past 50 years at least. We don’t even have the start of a theory."

In 2006, Smith proposed that the IACS recommendation 34 pertaining to standard wave data be modified so that the minimum design wave height be increased to 19.8 m (65 ft). He presented analysis that sufficient evidence exists to conclude that 20.1 m (66 ft) high waves can be experienced in the 25-year lifetime of oceangoing vessels, and that 29.9 m (98 ft) high waves are less likely, but not out of the question. Therefore, a design criterion based on 11.0 m (36 ft) high waves seems inadequate when the risk of losing crew and cargo is considered. Smith also proposed that the dynamic force of wave impacts should be included in the structural analysis. The Norwegian offshore standards now consider extreme severe wave conditions and require that a 10,000-year wave does not endanger the ships' integrity. W. Rosenthal noted that as of 2005, rogue waves were not explicitly accounted for in classification societies' rules for ship design. As an example, DNV GL, one of the world's largest international certification bodies and classification societies, whose main expertise is in technical assessment, advisory, and risk management, publishes its Structure Design Load Principles, which remain largely based on the significant wave height and, as of January 2016, still had not included any allowance for rogue waves.

The U.S. Navy historically took the design position that the largest wave likely to be encountered was 21.4 m (70 ft). Smith observed in 2007 that the navy now believes that larger waves can occur and the possibility of extreme waves that are steeper (i.e. do not have longer wavelengths) is now recognized. The navy has not had to make any fundamental changes in ship design due to new knowledge of waves greater than 21.4 m because the ships are built to higher standards than required.

The more than 50 classification societies worldwide each have different rules. However, most new ships are built to the standards of the 12 members of the International Association of Classification Societies, which in 2006 implemented two sets of common structural rules, one for oil tankers and one for bulk carriers. These were later harmonised into a single set of rules.