Solubility equilibrium is a type of dynamic equilibrium that exists when a chemical compound in the solid state is in chemical equilibrium with a solution of that compound. The solid may dissolve unchanged, with dissociation, or with chemical reaction with another constituent of the solution, such as acid or alkali. Each solubility equilibrium is characterized by a temperature-dependent solubility product which functions like an equilibrium constant. Solubility equilibria are important in pharmaceutical, environmental and many other scenarios.

Definitions

A solubility equilibrium exists when a chemical compound in the solid state is in chemical equilibrium with a solution containing the compound. This type of equilibrium is an example of dynamic equilibrium in that some individual molecules migrate between the solid and solution phases such that the rates of dissolution and precipitation are equal to one another. When equilibrium is established and the solid has not all dissolved, the solution is said to be saturated. The concentration of the solute in a saturated solution is known as the solubility. Units of solubility may be molar (mol dm−3) or expressed as mass per unit volume, such as μg mL−1. Solubility is temperature dependent. A solution containing a higher concentration of solute than the solubility is said to be supersaturated. A supersaturated solution may be induced to come to equilibrium by the addition of a "seed" which may be a tiny crystal of the solute, or a tiny solid particle, which initiates precipitation.

There are three main types of solubility equilibria.

- Simple dissolution.

- Dissolution with dissociation reaction. This is characteristic of salts. The equilibrium constant is known in this case as a solubility product.

- Dissolution with ionization reaction. This is characteristic of the dissolution of weak acids or weak bases in aqueous media of varying pH.

In each case an equilibrium constant can be specified as a quotient of activities. This equilibrium constant is dimensionless as activity is a dimensionless quantity. However, use of activities is very inconvenient, so the equilibrium constant is usually divided by the quotient of activity coefficients, to become a quotient of concentrations. See Equilibrium chemistry § Equilibrium constant for details. Moreover, the activity of a solid is, by definition, equal to 1 so it is omitted from the defining expression.

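For the chemical equilibrium

$$\mathrm{A}_p\mathrm{B}_q(\mathrm{s}) \rightleftharpoons p\,\mathrm{A} + q\,\mathrm{B}$$

the solubility product, Ksp, for the compound ApBq is defined as follows:

$$K_\mathrm{sp} = [\mathrm{A}]^p[\mathrm{B}]^q$$

where [A] and [B] are the concentrations of A and B in a saturated solution. A solubility product has a similar functionality to an equilibrium constant, though formally Ksp has the dimension of $(\text{concentration})^{p+q}$.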

Effects of conditions

Temperature effect

Solubility is sensitive to changes in temperature.

For example, sugar is more soluble in hot water than in cold water. This occurs because solubility products, like other types of equilibrium constants, are functions of temperature. In accordance with Le Chatelier's principle, when the dissolution process is endothermic (heat is absorbed), solubility increases with rising temperature. This effect is the basis for the process of recrystallization, which can be used to purify a chemical compound. When dissolution is exothermic (heat is released), solubility decreases with rising temperature.

Sodium sulfate shows increasing solubility with temperature below about 32.4 °C, but decreasing solubility at higher temperature. This is because the solid phase is the decahydrate (Na2SO4·10H2O) below the transition temperature, but a different solid phase, anhydrous Na2SO4, above that temperature.

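The dependence on temperature of solubility for an ideal solution (achieved for low solubility substances) is given by the following expression containing the enthalpy of melting, ΔmH, and the mole fraction $x_i$ of the solute at saturation:

$$\left(\frac{\partial \ln x_i}{\partial T}\right)_P = \frac{\bar{H}_{i,\mathrm{aq}} - H_{i,\mathrm{cr}}}{RT^2}$$

where $\bar{H}_{i,\mathrm{aq}}$ is the partial molar enthalpy of the solute at infinite dilution and $H_{i,\mathrm{cr}}$ the enthalpy per mole of the pure crystal. For an ideal solution the numerator equals the molar enthalpy of melting of the solute, $\Delta_\mathrm{m}H_i$.

This differential expression for a non-electrolyte can be integrated over a temperature interval to give:

$$\ln x_i = \frac{\Delta_\mathrm{m}H_i}{R}\left(\frac{1}{T_\mathrm{f}} - \frac{1}{T}\right)$$

where $T_\mathrm{f}$ is the melting temperature of the pure solute (at which $x_i = 1$) and $\Delta_\mathrm{m}H_i$ is taken as independent of temperature.

For nonideal solutions, the activity $a_i$ of the solute at saturation appears instead of the mole fraction solubility in the derivative with respect to temperature:

$$\left(\frac{\partial \ln a_i}{\partial T}\right)_P = \frac{\bar{H}_{i,\mathrm{aq}} - H_{i,\mathrm{cr}}}{RT^2}$$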
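As a rough numerical illustration of the integrated ideal-solution expression, the minimal Python sketch below evaluates $x_i$ at a few temperatures. It assumes that ΔmH does not vary with temperature; the solute parameters (ΔmH = 19 kJ/mol, melting temperature 353 K) and the function name are hypothetical, chosen only for illustration.

```python
import math

R = 8.314  # J mol^-1 K^-1, universal gas constant

def ideal_mole_fraction_solubility(dh_melt, t_melt, t):
    """Ideal-solution mole-fraction solubility of a non-electrolyte.

    Integrated form: ln x = (dh_melt / R) * (1/t_melt - 1/t), assuming the
    enthalpy of melting dh_melt (J/mol) is independent of temperature.
    """
    return math.exp(dh_melt / R * (1.0 / t_melt - 1.0 / t))

# Hypothetical solute: enthalpy of melting 19 kJ/mol, melting point 353 K
for t in (288.15, 298.15, 308.15):
    x = ideal_mole_fraction_solubility(19_000, 353.0, t)
    print(f"T = {t:.2f} K  x = {x:.3f}")
```

At $T = T_\mathrm{f}$ the function returns 1, the pure liquid solute, which is the boundary condition used in the integration.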

Common-ion effect

The common-ion effect is the effect of decreased solubility of one salt when another salt that has an ion in common with it is also present. For example, the solubility of silver chloride, AgCl, is lowered when sodium chloride, a source of the common ion chloride, is added to a suspension of AgCl in water. The solubility, S, in the absence of a common ion can be calculated as follows. The concentrations [Ag+] and [Cl−] are equal because one mole of AgCl dissociates into one mole of Ag+ and one mole of Cl−. Let [Ag+(aq)] be denoted by x. Then

$$K_\mathrm{sp} = [\mathrm{Ag^+}][\mathrm{Cl^-}] = x^2$$

$$\text{Solubility} = [\mathrm{Ag^+}] = [\mathrm{Cl^-}] = x = \sqrt{K_\mathrm{sp}}$$

Ksp for AgCl is equal to 1.77×10−10 mol2 dm−6 at 25 °C, so the solubility is 1.33×10−5 mol dm−3.

Now suppose that sodium chloride is also present, at a concentration of 0.01 mol dm−3 = 0.01 M. The solubility, ignoring any possible effect of the sodium ions, is now calculated by

$$K_\mathrm{sp} = [\mathrm{Ag^+}][\mathrm{Cl^-}] = x(0.01\,\text{M} + x)$$

This is a quadratic equation in x, which is also equal to the solubility. In the case of silver chloride, x² is very much smaller than 0.01 M × x, so the x² term can be ignored. Therefore

$$\text{Solubility} = [\mathrm{Ag^+}] = x = \frac{K_\mathrm{sp}}{0.01\,\text{M}} = 1.77\times10^{-8}\,\mathrm{mol\,dm^{-3}}$$

a considerable reduction from 1.33×10−5 mol dm−3. In gravimetric analysis for silver, the reduction in solubility due to the common-ion effect is used to ensure "complete" precipitation of AgCl.
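The common-ion calculation can be checked numerically. This minimal Python sketch (the function name is illustrative) solves the full quadratic $x^2 + c\,x - K_\mathrm{sp} = 0$ for a 1:1 salt, with c the concentration of the common ion, and compares the result with the approximation that drops the x² term. With c = 0 it reduces to $x = \sqrt{K_\mathrm{sp}}$. As in the text, activity coefficients are taken as one.

```python
import math

def solubility_with_common_ion(ksp, c_common):
    """Solubility x (mol dm^-3) of a 1:1 salt when c_common mol dm^-3 of one
    of its ions is already present.

    Solves Ksp = x * (c_common + x), i.e. x**2 + c_common*x - Ksp = 0, and
    returns the positive root in a cancellation-free form.
    """
    return 2.0 * ksp / (c_common + math.sqrt(c_common**2 + 4.0 * ksp))

KSP_AGCL = 1.77e-10  # mol^2 dm^-6 at 25 °C (value from the text)

s_pure = solubility_with_common_ion(KSP_AGCL, 0.0)    # no common ion: sqrt(Ksp)
s_nacl = solubility_with_common_ion(KSP_AGCL, 0.01)   # 0.01 M NaCl present
s_approx = KSP_AGCL / 0.01                            # x**2 term neglected

print(f"pure water: {s_pure:.3g} mol dm^-3")
print(f"0.01 M NaCl, exact: {s_nacl:.4g} mol dm^-3")
print(f"0.01 M NaCl, approximate: {s_approx:.4g} mol dm^-3")
```

The root is written as $2K_\mathrm{sp}/(c + \sqrt{c^2 + 4K_\mathrm{sp}})$ rather than $(-c + \sqrt{c^2 + 4K_\mathrm{sp}})/2$ because the latter subtracts two nearly equal numbers when c is much larger than x, which loses precision in floating-point arithmetic.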

Particle size effect

The thermodynamic solubility constant is defined for large monocrystals. Solubility will increase with decreasing size of solute particle (or droplet) because of the additional surface energy. This effect is generally small unless particles become very small, typically smaller than 1 μm. The effect of the particle size on the solubility constant can be quantified as follows:

$$\ln\left({}^{*}K_{A}\right) = \ln\left({}^{*}K_{A\to 0}\right) + \frac{2\gamma A_m}{3RT}$$

where ${}^{*}K_{A}$ is the solubility constant for solute particles with molar surface area $A_m$ (in m2/mol), ${}^{*}K_{A\to 0}$ is the solubility constant for the substance with molar surface area tending to zero (i.e., when the particles are large), γ is the surface tension of the solute particle in the solvent, R is the universal gas constant, and T is the absolute temperature.
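To give a feel for when the particle-size effect matters, the sketch below evaluates the ratio ${}^{*}K_A/{}^{*}K_{A\to 0} = \exp(2\gamma A_m/3RT)$. It additionally assumes spherical particles, for which the molar surface area is $A_m = 3V_m/r$ (molar volume $V_m$, radius r). The surface tension (0.1 J m−2), molar volume (50 cm3 mol−1) and function name are hypothetical illustrative choices, not data for any particular substance.

```python
import math

R = 8.314  # J mol^-1 K^-1, universal gas constant

def size_enhancement(gamma, molar_volume, radius, t=298.15):
    """Ratio *K_A / *K_(A->0) for spherical particles of the given radius.

    Uses ln(*K_A) = ln(*K_(A->0)) + 2*gamma*A_m / (3*R*T) with the
    spherical-particle assumption A_m = 3 * molar_volume / radius.
    Units: gamma in J m^-2, molar_volume in m^3 mol^-1, radius in m.
    """
    a_m = 3.0 * molar_volume / radius  # molar surface area, m^2 mol^-1
    return math.exp(2.0 * gamma * a_m / (3.0 * R * t))

# Hypothetical solid: gamma = 0.1 J m^-2, molar volume 50 cm^3 mol^-1
for r in (1e-6, 1e-7, 1e-8):
    print(f"r = {r:.0e} m  ratio = {size_enhancement(0.1, 50e-6, r):.3f}")
```

With these assumed values the enhancement is well under 1 % for micrometre-sized particles but grows to roughly 50 % at a radius of 10 nm, in line with the statement that the effect is usually small above about 1 μm.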

Salt effects

The salt effects (salting in and salting out) refer to the fact that the presence of a salt that has no ion in common with the solute changes the ionic strength of the solution and hence the activity coefficients, so that the equilibrium constant, expressed as a concentration quotient, changes.

Phase effect

Equilibria are defined for specific crystal phases. Therefore, the solubility product is expected to be different depending on the phase of the solid. For example, aragonite and calcite have different solubility products even though both have the same chemical identity (calcium carbonate). Under any given conditions one phase will be thermodynamically more stable than the other; therefore, this phase will form when thermodynamic equilibrium is established. However, kinetic factors may favor the formation of the less stable precipitate (e.g. aragonite), which is then said to be in a metastable state.

In pharmacology, the metastable state is sometimes referred to as the amorphous state. Amorphous drugs have higher solubility than their crystalline counterparts because they lack the long-range interactions inherent in a crystal lattice, so less energy is needed to solvate the molecules in the amorphous phase. This effect is widely used to make drugs more soluble.

Pressure effect

For condensed phases (solids and liquids), the pressure dependence of solubility is typically weak and usually neglected in practice. Assuming an ideal solution, the dependence can be quantified as:

$$\left(\frac{\partial \ln x_i}{\partial P}\right)_T = -\frac{\bar{V}_{i,\mathrm{aq}} - V_{i,\mathrm{cr}}}{RT}$$

where $x_i$ is the mole fraction of the i-th component in the solution, P is the pressure, T is the absolute temperature, $\bar{V}_{i,\mathrm{aq}}$ is the partial molar volume of the i-th component in the solution, $V_{i,\mathrm{cr}}$ is the partial molar volume of the i-th component in the dissolving solid, and R is the universal gas constant.
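For a sense of scale, the pressure relation can be integrated, assuming the volume change $\Delta V = \bar{V}_{i,\mathrm{aq}} - V_{i,\mathrm{cr}}$ does not depend on pressure, to give $x_i(P)/x_i(P_0) = \exp[-\Delta V (P - P_0)/RT]$. The Python sketch below evaluates this for a hypothetical ΔV of −10 cm3 mol−1; the value and the function name are illustrative only.

```python
import math

R = 8.314  # J mol^-1 K^-1, universal gas constant

def pressure_solubility_ratio(delta_v, p, p0, t=298.15):
    """Ratio x_i(p) / x_i(p0) of mole-fraction solubilities in an ideal solution.

    Integrates (d ln x_i / dP)_T = -delta_v / (R*T) from p0 to p, assuming
    delta_v = V_i,aq - V_i,cr (m^3 mol^-1) does not change with pressure.
    Pressures are in Pa.
    """
    return math.exp(-delta_v * (p - p0) / (R * t))

# Hypothetical solute whose volume shrinks by 10 cm^3 mol^-1 on dissolving
ratio = pressure_solubility_ratio(-10e-6, 100e5, 1e5)  # 100 bar vs 1 bar
print(f"x(100 bar) / x(1 bar) = {ratio:.3f}")
```

Under these assumptions, a hundredfold increase in pressure raises the solubility by only about 4 %, which is why the effect is usually neglected for condensed phases.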

The pressure dependence of solubility does occasionally have practical significance. For example, precipitation fouling of oil fields and wells by calcium sulfate (whose solubility decreases with decreasing pressure) can result in decreased productivity with time.

Quantitative aspects

Simple dissolution

Dissolution of an organic solid can be described as an equilibrium between the substance in its solid and dissolved forms. For example, when sucrose (table sugar) forms a saturated solution:

$$\mathrm{C_{12}H_{22}O_{11}(s) \rightleftharpoons C_{12}H_{22}O_{11}(aq)}$$

An equilibrium expression for this reaction can be written, as for any chemical reaction (products over reactants):

$$K^\circ = \frac{\{\mathrm{C_{12}H_{22}O_{11}(aq)}\}}{\{\mathrm{C_{12}H_{22}O_{11}(s)}\}}$$

where K° is called the thermodynamic solubility constant. The braces indicate activity. The activity of a pure solid is, by definition, unity. Therefore

$$K^\circ = \{\mathrm{C_{12}H_{22}O_{11}(aq)}\}$$

The activity of a substance, A, in solution can be expressed as the product of the concentration, [A], and an activity coefficient, γ. When K° is divided by γ, the solubility constant, Ks,

$$K_\mathrm{s} = \left[\mathrm{C_{12}H_{22}O_{11}(aq)}\right]$$

is obtained. This is equivalent to defining the standard state as the saturated solution so that the activity coefficient is equal to one. The solubility constant is a true constant only if the activity coefficient is not affected by the presence of any other solutes that may be present. The unit of the solubility constant is the same as the unit of the concentration of the solute. For sucrose Ks = 1.971 mol dm−3 at 25 °C. This shows that the solubility of sucrose at 25 °C is nearly 2 mol dm−3 (about 675 g/L, using a molar mass of 342.3 g/mol). Sucrose is unusual in that it does not easily form a supersaturated solution at higher concentrations, as do most other carbohydrates.

Dissolution with dissociation

Ionic compounds normally dissociate into their constituent ions when they dissolve in water. For example, for silver chloride:

$$\mathrm{AgCl(s) \rightleftharpoons Ag^+(aq) + Cl^-(aq)}$$

The expression for the equilibrium constant for this reaction is:

$$K^\circ = \frac{\{\mathrm{Ag^+(aq)}\}\{\mathrm{Cl^-(aq)}\}}{\{\mathrm{AgCl(s)}\}}$$

where K° is the thermodynamic equilibrium constant and braces indicate activity. The activity of a pure solid is, by definition, equal to one.

When the solubility of the salt is very low, the activity coefficients of the ions in solution are nearly equal to one. By setting them to be actually equal to one, this expression reduces to the solubility product expression:

$$K_\mathrm{sp} = [\mathrm{Ag^+}][\mathrm{Cl^-}] = [\mathrm{Ag^+}]^2 = [\mathrm{Cl^-}]^2$$

For 2:2 and 3:3 salts, such as CaSO4 and FePO4, the general expression for the solubility product is the same as for a 1:1 electrolyte (electrical charges are omitted in general expressions, for simplicity of notation):

$$K_\mathrm{sp} = [\mathrm{A}][\mathrm{B}] = [\mathrm{A}]^2 = [\mathrm{B}]^2$$

With an unsymmetrical salt like Ca(OH)2, the solubility expression is given by

$$K_\mathrm{sp} = [\mathrm{Ca}][\mathrm{OH}]^2$$

Since the concentration of hydroxide ions is twice the concentration of calcium ions, this reduces to

$$K_\mathrm{sp} = 4[\mathrm{Ca}]^3$$

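In general, with the chemical equilibrium

$$\mathrm{A}_p\mathrm{B}_q \rightleftharpoons p\,\mathrm{A} + q\,\mathrm{B}$$

and

$$[\mathrm{B}] = \frac{q}{p}[\mathrm{A}]$$

the following table, showing the relationship between the solubility of a compound and the value of its solubility product, can be derived.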

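| Salt | p | q | Solubility, S |
|---|---|---|---|
| AgCl, Ca(SO4), Fe(PO4) | 1 | 1 | $\sqrt{K_\mathrm{sp}}$ |
| Na2(SO4) | 2 | 1 | $\sqrt[3]{K_\mathrm{sp}/4}$ |
| Ca(OH)2 | 1 | 2 | $\sqrt[3]{K_\mathrm{sp}/4}$ |
| Na3(PO4) | 3 | 1 | $\sqrt[4]{K_\mathrm{sp}/27}$ |
| FeCl3 | 1 | 3 | $\sqrt[4]{K_\mathrm{sp}/27}$ |
| Al2(SO4)3 | 2 | 3 | $\sqrt[5]{K_\mathrm{sp}/108}$ |
| Ca3(PO4)2 | 3 | 2 | $\sqrt[5]{K_\mathrm{sp}/108}$ |
| Mp(An)q | p | q | $\sqrt[p+q]{K_\mathrm{sp}/(p^p q^q)}$ |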
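The general row of the table follows from $[\mathrm{A}] = pS$ and $[\mathrm{B}] = qS$, so that $K_\mathrm{sp} = p^p q^q S^{p+q}$. A minimal Python sketch of this rearrangement is shown below (function names illustrative); like the table, it takes activity coefficients as one and ignores ion pairing, hydrolysis and other side reactions.

```python
def solubility_from_ksp(ksp, p, q):
    """Molar solubility S of A_pB_q from its solubility product.

    Uses Ksp = (p*S)**p * (q*S)**q = p**p * q**q * S**(p + q),
    so S = (Ksp / (p**p * q**q)) ** (1 / (p + q)).
    """
    return (ksp / (p**p * q**q)) ** (1.0 / (p + q))

def ksp_from_solubility(s, p, q):
    """Inverse relation, useful for checking a computed solubility."""
    return (p * s) ** p * (q * s) ** q

# Ksp values quoted in this article
for name, ksp, p, q in [("AgCl", 1.77e-10, 1, 1), ("CaSO4", 4.93e-5, 1, 1)]:
    s = solubility_from_ksp(ksp, p, q)
    print(f"{name}: S = {s:.3g} mol dm^-3")
```

The denominators 4, 27 and 108 in the table are simply $p^p q^q$ for (p, q) = (2, 1), (3, 1) and (2, 3) or their mirror images.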

Solubility products are often expressed in logarithmic form. Thus, for calcium sulfate, with Ksp = 4.93×10−5 mol2 dm−6, log Ksp = −4.31. The smaller the value of Ksp, or the more negative the log value, the lower the solubility.

Some salts are not fully dissociated in solution. Examples include MgSO4, famously discovered by Manfred Eigen to be present in seawater as both an inner sphere complex and an outer sphere complex. The solubility of such salts is calculated by the method outlined under Dissolution with reaction.

Hydroxides

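The solubility product for the hydroxide of a metal ion, Mn+, is usually defined as follows:

$$K_\mathrm{sp} = [\mathrm{M^{n+}}][\mathrm{OH^-}]^n$$

However, general-purpose computer programs are designed to use hydrogen ion concentrations with the alternative definition:

$$K^{*}_\mathrm{sp} = [\mathrm{M^{n+}}][\mathrm{H^+}]^{-n}$$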

For hydroxides, solubility products are often given in a modified form, K*sp, using hydrogen ion concentration in place of hydroxide ion concentration. The two values are related by the self-ionization constant for water, Kw:

$$K_\mathrm{w} = [\mathrm{H^+}][\mathrm{OH^-}], \qquad K^{*}_\mathrm{sp} = \frac{K_\mathrm{sp}}{K_\mathrm{w}^{\,n}}$$

For example, at ambient temperature, for calcium hydroxide, Ca(OH)2, lg Ksp is ca. −5 and lg K*sp ≈ −5 + 2 × 14 ≈ 23.
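Taking logarithms of the relation between the two constants gives lg K*sp = lg Ksp + n pKw, which is the arithmetic used in the calcium hydroxide example. A one-line Python sketch follows; it assumes pKw = 14, the usual ambient-temperature value, and the function name is illustrative.

```python
def lg_kstar_sp(lg_ksp, n, pkw=14.0):
    """Convert lg Ksp = lg([M][OH]^n) into lg K*sp = lg([M][H]^-n).

    Because [OH-] = Kw / [H+], K*sp = Ksp / Kw**n, so
    lg K*sp = lg Ksp + n * pKw. The default pKw = 14 is an
    ambient-temperature approximation.
    """
    return lg_ksp + n * pkw

print(lg_kstar_sp(-5.0, 2))  # Ca(OH)2 with lg Ksp ca. -5 -> 23.0
```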

Dissolution with reaction

A typical reaction with dissolution involves a weak base, B, dissolving in an acidic aqueous solution:

$$\mathrm{B(s) + H^+(aq) \rightleftharpoons BH^+(aq)}$$

This reaction is very important for pharmaceutical products. Dissolution of weak acids in alkaline media is similarly important. The uncharged molecule usually has lower solubility than the ionic form, so solubility depends on pH and the acid dissociation constant of the solute. The term "intrinsic solubility" is used to describe the solubility of the un-ionized form in the absence of acid or alkali.
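The pH dependence can be made quantitative under a simple assumption: only the un-ionized form is present at saturation, with its concentration fixed at the intrinsic solubility S0, while the ionized form stays in solution. For a weak base B whose conjugate acid BH+ has acid dissociation constant Ka, the total solubility is then $S = S_0(1 + [\mathrm{H^+}]/K_\mathrm{a}) = S_0(1 + 10^{\mathrm{p}K_\mathrm{a} - \mathrm{pH}})$; for a weak acid HA the exponent becomes pH − pKa. The Python sketch below uses hypothetical values (S0 = 1×10−4 mol dm−3, pKa = 8) and illustrative function names, and it ignores precipitation of any salt of the ionized form.

```python
def weak_base_solubility(s0, pka, ph):
    """Total solubility of a weak base B at a given pH.

    s0 is the intrinsic solubility of neutral B; pka refers to BH+.
    Assumes [B] = s0 at saturation and [BH+] = [B][H+]/Ka, so
    S = s0 * (1 + 10**(pKa - pH)). Salt precipitation is ignored.
    """
    return s0 * (1.0 + 10.0 ** (pka - ph))

def weak_acid_solubility(s0, pka, ph):
    """Same idea for a weak acid HA: S = s0 * (1 + 10**(pH - pKa))."""
    return s0 * (1.0 + 10.0 ** (ph - pka))

# Hypothetical base: intrinsic solubility 1e-4 mol dm^-3, pKa(BH+) = 8
for ph in (4.0, 6.0, 8.0, 10.0):
    print(f"pH {ph:4.1f}: S = {weak_base_solubility(1e-4, 8.0, ph):.3g} mol dm^-3")
```

At pH = pKa the ionized and un-ionized concentrations are equal, so the total solubility is twice the intrinsic solubility; far on the alkaline side the base's solubility approaches S0.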

Leaching of aluminium salts from rocks and soil by acid rain is another example of dissolution with reaction: alumino-silicates are bases which react with the acid to form soluble species, such as Al3+(aq).

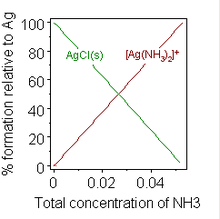

Formation of a chemical complex may also change solubility. A well-known example is the addition of a concentrated solution of ammonia to a suspension of silver chloride, in which dissolution is favoured by the formation of an ammine complex:

$$\mathrm{AgCl(s) + 2NH_3(aq) \rightleftharpoons [Ag(NH_3)_2]^+(aq) + Cl^-(aq)}$$

When sufficient ammonia is added to a suspension of silver chloride, the solid dissolves. The addition of water softeners to washing powders to inhibit the formation of soap scum provides an example of practical importance.
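As a rough sketch of why ammonia dissolves AgCl, the equilibrium constant of the overall reaction AgCl(s) + 2NH3(aq) ⇌ [Ag(NH3)2]+(aq) + Cl−(aq) can be written as K = Ksp·β2, where β2 is the overall formation constant of [Ag(NH3)2]+. The Python example below assumes log β2 ≈ 7.2 (an approximate literature value, used here only for illustration), treats [Ag(NH3)2]+ as the only significant silver species in solution, and sets activity coefficients to one; the function name is hypothetical.

```python
import math

KSP_AGCL = 1.77e-10  # mol^2 dm^-6 at 25 °C, from the text
LOG_BETA2 = 7.2      # ASSUMED approximate log formation constant of [Ag(NH3)2]+

def agcl_solubility_in_ammonia(c_nh3_total, ksp=KSP_AGCL, log_beta2=LOG_BETA2):
    """Approximate AgCl solubility (mol dm^-3) in aqueous ammonia.

    K = Ksp * beta2 for AgCl(s) + 2 NH3 <=> [Ag(NH3)2]+ + Cl-.
    With [complex] = [Cl-] = S and free NH3 = c_total - 2S,
    K = S**2 / (c_total - 2S)**2, so S = sqrt(K) * c_total / (1 + 2*sqrt(K)).
    """
    root_k = math.sqrt(ksp * 10.0 ** log_beta2)
    return root_k * c_nh3_total / (1.0 + 2.0 * root_k)

s_water = math.sqrt(KSP_AGCL)
s_nh3 = agcl_solubility_in_ammonia(1.0)  # 1 mol dm^-3 total NH3
print(f"in water: {s_water:.2e} mol dm^-3, in 1 M NH3: {s_nh3:.2e} mol dm^-3")
```

Under these assumptions, 1 mol dm−3 ammonia raises the solubility of AgCl by a factor of a few thousand relative to pure water.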

Experimental determination

The determination of solubility is fraught with difficulties. First and foremost is the difficulty in establishing that the system is in equilibrium at the chosen temperature. This is because both precipitation and dissolution reactions may be extremely slow. If the process is very slow, solvent evaporation may be an issue. Supersaturation may occur. With very insoluble substances, the concentrations in solution are very low and difficult to determine. The methods used fall broadly into two categories, static and dynamic.

Static methods

In static methods, a mixture is brought to equilibrium and the concentration of a species in the solution phase is determined by chemical analysis. This usually requires separation of the solid and solution phases. Because solubility depends on temperature, both the equilibration and the separation should be performed in a thermostatted room. Very low concentrations can be measured if a radioactive tracer is incorporated in the solid phase.

A variation of the static method is to add a solution of the substance in a non-aqueous solvent, such as dimethyl sulfoxide, to an aqueous buffer mixture. Immediate precipitation may occur, giving a cloudy mixture. The solubility measured for such a mixture is known as "kinetic solubility". The cloudiness is due to the fact that the precipitate particles are very small, resulting in Tyndall scattering. In fact, the particles are so small that the particle size effect comes into play and kinetic solubility is often greater than equilibrium solubility. Over time the cloudiness will disappear as the size of the crystallites increases, and eventually equilibrium will be reached in a process known as precipitate ageing.
