| WorldWide Telescope | |

|---|---|

| |

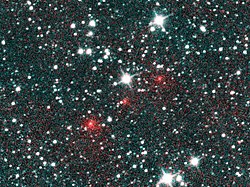

WorldWide Telescope viewing a Hubble image of the Whirlpool Galaxy (M51) | |

| Original author(s) | Jonathan Fay, Curtis Wong |

| Developer(s) | Microsoft Research, .NET Foundation, American Astronomical Society |

| Initial release | February 27, 2008 |

| Stable release | 6.1.2.0 / July 12, 2022 |

| Repository | |

| Written in | C# |

| Operating system | Microsoft Windows; web app version available |

| Platform | .NET Framework, Web platform |

| Available in | English, Chinese, Spanish, German, Russian, Hindi |

| Type | Visualization software |

| License | MIT License |

| Website | worldwidetelescope |

WorldWide Telescope (WWT) is an open-source set of applications, data and cloud services, originally created by Microsoft Research and now hosted as a project on GitHub. The .NET Foundation holds the copyright; the project is managed by the American Astronomical Society and has been supported by grants from the Moore Foundation and the National Science Foundation. WWT displays astronomical, Earth and planetary data, allowing visual navigation through the three-dimensional (3D) universe. Users can navigate the sky by panning and zooming, or explore the 3D universe from the surface of Earth to beyond the cosmic microwave background (CMB), viewing both visual imagery and scientific data (academic papers, etc.) about that area and the objects in it. Data is curated from hundreds of different data sources, but its open data nature allows users to explore any third-party data that conforms to a WWT-supported format. With the rich source of multi-spectral all-sky images it is possible to view the sky in many wavelengths of light. The software uses Microsoft's Visual Experience Engine technologies. WWT can also be used to visualize arbitrary or abstract data sets and time series data.

WWT is completely free and currently comes in two versions: a native application that runs under Microsoft Windows (this version can use the specialized capabilities of a computer graphics card to render up to a half million data points), and a web client based on HTML5 and WebGL. The web client uses a responsive design which allows people to use it on smartphones and on desktops. The Windows desktop application is a high-performance system which scales from a desktop to large multi-channel full dome digital planetariums.

The WWT project began in 2002 at Microsoft Research and Johns Hopkins University. Database researcher Jim Gray had developed a satellite Earth-images database (Terraserver) and wanted to apply a similar technique to organizing the many disparate astronomical databases of sky images. WWT was announced at the TED Conference in Monterey, California in February 2008. As of 2016, WWT had been downloaded by at least 10 million active users.

As of February 2012, the earth science applications of WWT are showcased and supported by the Layerscape community collaboration website, also created by Microsoft Research. Since WWT became open source, Layerscape communities have been brought into the WWT application and rebranded simply as "communities".

Features

Modes

WorldWide Telescope has six main modes. These are Sky, Earth, Planets, Panoramas, Solar System and Sandbox.

Earth

Earth mode allows users to view a 3D model of the Earth, similar to NASA World Wind, Microsoft Virtual Earth and Google Earth. The Earth mode has a default data set with near-global coverage and resolution down to sub-meter in high-population centers. Unlike most Earth viewers, WorldWide Telescope supports many different map projections including Mercator, Equirectangular and Tessellated Octahedral Adaptive Subdivision Transform (TOAST). There are also map layers for seasonal, night, streets, hybrid and science-oriented Moderate-Resolution Imaging Spectroradiometer (MODIS) imagery. The new layer manager can be used to add data visualization on the Earth or other planets.
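To illustrate how two of the projections named above differ, the following minimal Python sketch maps a longitude and latitude to normalized map coordinates. It is not taken from WorldWide Telescope; the function names, the [0, 1] coordinate convention and the latitude cut-off (the value commonly used for square spherical Mercator web maps) are assumptions made for the example.

```python
import math

# Assumed cut-off latitude: beyond it, a square spherical Mercator map is clipped.
MAX_MERCATOR_LAT = 85.05112878


def equirectangular(lon_deg, lat_deg):
    """Map degrees to (x, y) in [0, 1]; latitude is spaced linearly."""
    x = (lon_deg + 180.0) / 360.0
    y = (90.0 - lat_deg) / 180.0
    return x, y


def spherical_mercator(lon_deg, lat_deg):
    """Map degrees to (x, y) in [0, 1]; latitude is stretched toward the poles."""
    lat = max(-MAX_MERCATOR_LAT, min(MAX_MERCATOR_LAT, lat_deg))
    x = (lon_deg + 180.0) / 360.0
    lat_rad = math.radians(lat)
    y = 0.5 - math.log(math.tan(math.pi / 4 + lat_rad / 2)) / (2 * math.pi)
    return x, y


if __name__ == "__main__":
    for lat in (0.0, 30.0, 60.0, 80.0):
        print(lat, equirectangular(0.0, lat), spherical_mercator(0.0, lat))
```

Both functions agree at the equator and diverge at higher latitudes, which is why imagery prepared for one projection cannot simply be displayed in the other without resampling.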

Planets

Planets mode currently allows users to view 3D models of eight celestial bodies: Venus, Mars, Jupiter, the Galilean moons of Jupiter, and Earth's Moon. It also allows users to view a Mandelbrot set.

Sky

Sky mode is the main feature of the software. It allows users to view high-quality images of outer space from many space and Earth-based telescopes. Each image is shown at its actual position in the sky. There are over 200 full-sky images in spectral bands ranging from radio to gamma rays. There are also thousands of individual study images of various astronomical objects from space telescopes such as the Hubble Space Telescope, the Spitzer Space Telescope in infrared, the Chandra X-ray Observatory, COBE, WMAP, ROSAT, IRAS and GALEX, as well as many other space and ground-based telescopes. Sky mode also shows the Sun, Moon, planets, and their moons in their current positions.

Users can add their own image data from FITS files or can convert them to standard image formats such as JPEG, PNG or TIFF. These images can be tagged with Astronomy Visualization Metadata (AVM).

Panoramas

The Panorama mode allows users to view several panoramas, from remote robotic rovers: the Curiosity rover, Mars Exploration Rovers, as well as from the Apollo program astronauts.

Users can include their own panoramas, created either as gigapixel panoramas such as the ones available for HDView, or with single-shot spherical cameras such as the Ricoh Theta.

Solar System

This mode displays the major Solar System objects from the Sun to Pluto, Jupiter's moons, the orbits of all Solar System moons, and all 550,000+ minor planets, shown with their correct scale, position and phase. The user can move forward and backward in time at various rates, or type in a time and date for which to view the positions of the planets, and can select the viewing location. The program can show the Solar System the way it would look from any location at any time between 1 AD and 4000 AD. Using this tool a user can watch an eclipse (e.g., the 2017 total solar eclipse), occultation, or astronomical alignment, and preview where the best spot might be to observe a future event. In this mode it is possible to zoom away from the Solar System, through the Milky Way, and out into the cosmos to see a hypothetical view of the entire known universe. Other bodies, spacecraft and orbital reference frames can be added and visualized in Solar System mode using the layer manager.

Users can query the Minor Planet Center for the orbits of minor bodies in the Solar System.

Sandbox

The Sandbox mode allows users to view arbitrary 3D models (OBJ or 3DS formats) in an empty universe. This is useful, for instance, for exploring 3D objects such as molecular data.

Local user content

WorldWide Telescope was designed as a professional research environment and as such it facilitates viewing of user data. Virtually all of the data types and visualizations in WorldWide Telescope can be run using supplied user data either locally or over the network. Any of the above viewing modes allow the user to browse and load equirectangular, fisheye, or dome master images to be viewed as planet surfaces, sky images or panoramas. Images with Astronomy Visualization Metadata (AVM) can be loaded and registered to their location in the sky. Images without AVM can be shown on the sky but the user must align the images in the sky by moving, scaling and rotating the images until star patterns align. Once the images are aligned they can be saved to collections for later viewing and sharing. The layer manager can be used to add vector or image data to planet surfaces or in orbit.

Layer Manager

Introduced in the Aphelion release, the Layer Manager allows management of relative reference frames, allowing data and images to be placed on Earth, the planets, moons, the sky or anywhere else in the universe. Data can be loaded from files, linked live with Microsoft Excel, or pasted in from other applications. Layers support 3D points, well-known text (WKT) representations of geometry, shape files, 3D models, orbital elements, image layers and more. Time series data can be viewed to see smoothly animated events over time. Reference frames can contain orbital information, allowing 3D models or other data to be plotted at their correct location over time.
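As a rough illustration of how orbital information can place an object at the right location for a given time, the sketch below solves Kepler's equation for an elliptical orbit and returns a position in the orbital plane. It is a generic textbook calculation, not WorldWide Telescope's implementation; the function names and parameters are hypothetical, and the rotation into a 3D reference frame (inclination, node and periapsis angles) is deliberately left out.

```python
import math


def solve_kepler(mean_anomaly, eccentricity, tol=1e-12, max_iter=50):
    """Solve M = E - e*sin(E) for the eccentric anomaly E (requires 0 <= e < 1)."""
    # Starting guess: M works well for low eccentricity, pi is safer for high.
    E = mean_anomaly if eccentricity < 0.8 else math.pi
    for _ in range(max_iter):
        residual = E - eccentricity * math.sin(E) - mean_anomaly
        delta = residual / (1.0 - eccentricity * math.cos(E))  # Newton step
        E -= delta
        if abs(delta) < tol:
            break
    return E


def position_in_orbital_plane(semi_major_axis, eccentricity, period, t_since_periapsis):
    """Return (x, y) with the focus at the origin and periapsis on the +x axis."""
    mean_anomaly = 2.0 * math.pi * ((t_since_periapsis / period) % 1.0)
    E = solve_kepler(mean_anomaly, eccentricity)
    x = semi_major_axis * (math.cos(E) - eccentricity)
    y = semi_major_axis * math.sqrt(1.0 - eccentricity ** 2) * math.sin(E)
    return x, y


if __name__ == "__main__":
    # Hypothetical orbit: a = 1 unit, e = 0.5, period = 1 time unit.
    for t in (0.0, 0.1, 0.25, 0.5):
        print(t, position_in_orbital_plane(1.0, 0.5, 1.0, t))
```

Evaluating the function at successive times gives the sequence of positions that a time-series animation would step through.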

Use for amateur astronomy

The program allows the selection of a telescope and camera and can preview the field of view against the sky. Using ASCOM the user can connect a computer-controlled telescope or an astronomical pointing device such as Meade's MySky, and then either control or follow it. The large selection of catalog objects and 1 arc-second-per-pixel imagery allow an astrophotographer to select and plan a photograph and find a suitable guide star using the multi-chip FOV indicator.
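The field-of-view preview depends on standard optics relations between sensor size, pixel size and focal length. The sketch below shows those relations in Python; it is an illustrative calculation rather than the program's own code, and the function names and example equipment values are assumptions.

```python
import math

# Arcseconds per radian, scaled for pixel size in micrometres and focal length in millimetres.
ARCSEC_FACTOR = 206.265


def field_of_view_deg(sensor_mm, focal_length_mm):
    """Angular extent covered by one sensor dimension, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_mm / (2.0 * focal_length_mm)))


def pixel_scale_arcsec(pixel_um, focal_length_mm):
    """Sky angle covered by one pixel, in arcseconds (small-angle approximation)."""
    return ARCSEC_FACTOR * pixel_um / focal_length_mm


if __name__ == "__main__":
    # Hypothetical setup: 1000 mm focal length, 36 x 24 mm sensor, 5 micrometre pixels.
    print("FOV width  (deg):", field_of_view_deg(36.0, 1000.0))
    print("FOV height (deg):", field_of_view_deg(24.0, 1000.0))
    print("Pixel scale (arcsec/pixel):", pixel_scale_arcsec(5.0, 1000.0))
```

With these assumed values the camera samples the sky at roughly one arcsecond per pixel, comparable to the resolution of the imagery mentioned above.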

Tours

WorldWide Telescope contains a multimedia authoring environment that allows users or educators to create tours with a simple slide-based paradigm. Each slide can have a begin and an end camera position, allowing for easy Ken Burns effects. Pictures, objects, and text can be added to the slides, and tours can have both background music and voice-overs with separate volume control. The layer manager can be used in conjunction with a tour to publish user data visualizations with annotations and animations. One of the featured tours was made by a six-year-old boy, while other tours were made by astrophysicists such as Dr. Alyssa A. Goodman of the Center for Astrophysics | Harvard & Smithsonian and Dr. Robert L. Hurt of Caltech/JPL.
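The idea of a slide with a begin and an end camera position can be sketched as a simple interpolation between two views. The example below is only an illustration of that idea: the `Camera` fields, the shortest-path handling of right ascension and the geometric interpolation of the field of view are assumptions, not a description of how WorldWide Telescope animates its slides.

```python
from dataclasses import dataclass


@dataclass
class Camera:
    ra_deg: float   # right ascension of the view centre
    dec_deg: float  # declination of the view centre
    fov_deg: float  # field of view; smaller means more zoomed in


def interpolate(start, end, t):
    """Blend two camera positions; t=0 gives start, t=1 gives end."""
    t = max(0.0, min(1.0, t))
    # Take the shorter way around the 0/360 degree wrap in right ascension.
    d_ra = (end.ra_deg - start.ra_deg + 180.0) % 360.0 - 180.0
    ra = (start.ra_deg + t * d_ra) % 360.0
    dec = start.dec_deg + t * (end.dec_deg - start.dec_deg)
    # Interpolate zoom geometrically so the zoom rate looks constant.
    fov = start.fov_deg * (end.fov_deg / start.fov_deg) ** t
    return Camera(ra, dec, fov)


if __name__ == "__main__":
    begin = Camera(ra_deg=350.0, dec_deg=0.0, fov_deg=60.0)
    finish = Camera(ra_deg=10.0, dec_deg=20.0, fov_deg=15.0)
    for step in range(5):
        print(interpolate(begin, finish, step / 4))
```

Interpolating the field of view on a logarithmic scale is a common choice for pan-and-zoom effects because a linear blend appears to speed up as the view narrows.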

Communities

Communities are a way of allowing organizations and communities to add their own images, tours, catalogs and research materials to the WorldWide Telescope interface. The concept is similar to subscribing to an RSS feed, except that the contents are astronomical metadata.

Virtual observatory

The WorldWide Telescope was designed to be the embodiment of a rich virtual observatory client envisioned by Turing Award winner Jim Gray and Alex Szalay, JHU astrophysicist and co-principal investigator for the US National Virtual Observatory, in their paper titled "The WorldWide Telescope". The WorldWide Telescope program makes use of IVOA standards for interoperating with data providers to provide its image, search and catalog data. Rather than concentrate all data into one database, the WorldWide Telescope sources its data from all over the web, and the available content grows as more VO-compliant data sources are placed on the web.

Full dome planetarium support

The WorldWide Telescope Windows client application supports both single and multichannel full-dome video projection, allowing it to power full-dome digital planetarium systems. It is currently installed in several world-class planetariums, where it runs on turnkey planetarium systems. It can also be used to create a stand-alone planetarium by using the included tools for calibration, alignment, and blending. This allows consumer DLP projectors to be used to create a projection system with resolution, performance and functionality comparable to high-end turnkey solutions, at a fraction of the cost. The University of Washington pioneered this approach with the UW Planetarium. WorldWide Telescope can also be used in single-channel mode from a laptop, using a mirror dome or fisheye projector to display on inflatable domes, or even on user-constructed low-cost planetariums, for which plans are available on the project website.

Reception

WorldWide Telescope was praised before its announcement in a post by blogger Robert Scoble, who said the demo had made him cry. He later called it "the most fabulous thing I’ve seen Microsoft do in years."

Dr. Roy Gould of the Center for Astrophysics | Harvard & Smithsonian said:

- "The WorldWide Telescope takes the best images from the greatest telescopes on Earth ... and in space ... and assembles them into a seamless, holistic view of the universe. This new resource will change the way we do astronomy ... the way we teach astronomy ... and, most importantly, I think it's going to change the way we see ourselves in the universe,"..."The creators of the WorldWide Telescope have now given us a way to have a dialogue with our universe."

A PC World review of the original beta concluded that WorldWide Telescope "has a few shortcomings" but "is a phenomenal resource for enthusiasts, students, and teachers." It also believed the product to be "far beyond Google's current offerings."

Prior to the cross-platform web client release, at least one reviewer regretted the lack of support for non-Windows operating systems, the slow speed at which imagery loads, and the lack of KML support.