From Wikipedia, the free encyclopedia

Quantum gravity (QG) is a field of theoretical physics that seeks to describe the force of gravity according to the principles of quantum mechanics.

The current understanding of gravity is based on Albert Einstein's general theory of relativity, which is formulated within the framework of classical physics. On the other hand, the nongravitational forces are described within the framework of quantum mechanics, a radically different formalism for describing physical phenomena based on probability.[1] The necessity of a quantum mechanical description of gravity follows from the fact that one cannot consistently couple a classical system to a quantum one.[2]

Although a quantum theory of gravity is needed in order to reconcile general relativity with the principles of quantum mechanics, difficulties arise when one attempts to apply the usual prescriptions of quantum field theory to the force of gravity.[3] From a technical point of view, the problem is that the theory one gets in this way is not renormalizable and therefore cannot be used to make meaningful physical predictions. As a result, theorists have taken up more radical approaches to the problem of quantum gravity, the most popular approaches being string theory and loop quantum gravity.[4] A recent development is the theory of causal fermion systems which gives quantum mechanics, general relativity and quantum field theory as limiting cases.[5][6][7][8][9][10]

Strictly speaking, the aim of quantum gravity is only to describe the quantum behavior of the gravitational field and should not be confused with the objective of unifying all fundamental interactions into a single mathematical framework. Although some quantum gravity theories such as string theory try to unify gravity with the other fundamental forces, others such as loop quantum gravity make no such attempt; instead, they make an effort to quantize the gravitational field while it is kept separate from the other forces. A theory of quantum gravity that is also a grand unification of all known interactions is sometimes referred to as a theory of everything (TOE).

One of the difficulties of quantum gravity is that quantum gravitational effects are only expected to become apparent near the Planck scale, a scale far smaller in distance (equivalently, far larger in energy) than what is currently accessible at high energy particle accelerators. As a result, quantum gravity is a mainly theoretical enterprise, although there are speculations about how quantum gravity effects might be observed in existing experiments.[11]
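To give a sense of the gap described above, the following minimal sketch computes the Planck length and Planck energy from the fundamental constants and compares the latter with a collider energy. The constant values are rounded reference values, and the collider figure of about 13.6 TeV is an assumption introduced here for illustration; neither appears in the text.

```python
import math

# Rounded reference values in SI units (approximate; assumptions of this sketch).
HBAR = 1.054571817e-34   # reduced Planck constant, J*s
G = 6.67430e-11          # Newtonian gravitational constant, m^3 kg^-1 s^-2
C = 2.99792458e8         # speed of light, m/s
EV = 1.602176634e-19     # joules per electronvolt

# Assumed collider energy for comparison (about 13.6 TeV), in GeV.
COLLIDER_ENERGY_GEV = 1.36e4


def planck_length():
    """Planck length l_P = sqrt(hbar * G / c^3), in metres."""
    return math.sqrt(HBAR * G / C**3)


def planck_energy_gev():
    """Planck energy E_P = sqrt(hbar * c^5 / G), converted to GeV."""
    return math.sqrt(HBAR * C**5 / G) / EV / 1e9


if __name__ == "__main__":
    print(f"Planck length: {planck_length():.3e} m")
    print(f"Planck energy: {planck_energy_gev():.3e} GeV")
    ratio = planck_energy_gev() / COLLIDER_ENERGY_GEV
    print(f"Planck energy / collider energy: {ratio:.1e}")
```

The ratio printed at the end is the number of orders of magnitude separating accessible energies from the scale at which quantum gravitational effects are expected to matter.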

One suggested starting point is ordinary quantum field theories which, after all, are successful in describing the other three basic fundamental forces in the context of the standard model of elementary particle physics. However, while this leads to an acceptable effective (quantum) field theory of gravity at low energies,[30] gravity turns out to be much more problematic at higher energies. Where, for ordinary field theories such as quantum electrodynamics, a technique known as renormalization is an integral part of deriving predictions which take into account higher-energy contributions,[31] gravity turns out to be nonrenormalizable: at high energies, applying the recipes of ordinary quantum field theory yields models that are devoid of all predictive power.[32]

One attempt to overcome these limitations is to replace ordinary quantum field theory, which is based on the classical concept of a point particle, with a quantum theory of one-dimensional extended objects: string theory.[33] At the energies reached in current experiments, these strings are indistinguishable from point-like particles, but, crucially, different modes of oscillation of one and the same type of fundamental string appear as particles with different (electric and other) charges. In this way, string theory promises to be a unified description of all particles and interactions.[34] The theory is successful in that one mode will always correspond to a graviton, the messenger particle of gravity; however, the price to pay is a set of unusual features, such as six extra dimensions of space in addition to the usual three for space and one for time.[35]

In what is called the second superstring revolution, it was conjectured that both string theory and a unification of general relativity and supersymmetry known as supergravity[36] form part of a hypothesized eleven-dimensional model known as M-theory, which would constitute a uniquely defined and consistent theory of quantum gravity.[37][38] As presently understood, however, string theory admits a very large number (10^500 by some estimates) of consistent vacua, comprising the so-called "string landscape". Sorting through this large family of solutions remains a major challenge.

Loop quantum gravity is based, first of all, on taking seriously the insight of general relativity that spacetime is a dynamical field and is therefore a quantum object. The second idea is that the quantum discreteness that determines the particle-like behavior of other field theories (for instance, the photons of the electromagnetic field) also affects the structure of space.

The main result of loop quantum gravity is the derivation of a granular structure of space at the Planck length. This is derived as follows. In the case of electromagnetism, the quantum operator representing the energy of each frequency of the field has a discrete spectrum. Therefore the energy of each frequency is quantized, and the quanta are the photons. In the case of gravity, the operators representing the area and the volume of each surface or space region have discrete spectra. Therefore the area and volume of any portion of space are quantized, and the quanta are elementary quanta of space. It follows that spacetime has an elementary quantum granular structure at the Planck scale, which cuts off the ultraviolet infinities of quantum field theory.
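The discreteness of area can be illustrated with the area spectrum commonly quoted in the loop quantum gravity literature, A = 8πγ l_P² Σ √(j(j+1)), where the sum runs over the half-integer spins j of the spin-network links that puncture the surface. This formula, the Barbero–Immirzi parameter γ, and the numerical value used for γ below are assumptions of this sketch and are not stated in the text; γ is a free parameter of the theory, and 0.24 is purely illustrative.

```python
import math

PLANCK_LENGTH = 1.616255e-35  # metres (rounded reference value)


def area_eigenvalue(spins, gamma):
    """Area eigenvalue for a surface punctured by links with the given spins.

    spins: iterable of half-integer spins (0.5, 1.0, 1.5, ...), one per puncture.
    gamma: Barbero-Immirzi parameter (free parameter; caller must choose it).
    Returns the area in square metres.
    """
    total = sum(math.sqrt(j * (j + 1.0)) for j in spins)
    return 8.0 * math.pi * gamma * PLANCK_LENGTH**2 * total


if __name__ == "__main__":
    gamma = 0.24  # illustrative value only, not a prediction
    for spins in ([0.5], [1.0], [0.5, 0.5], [0.5, 1.0]):
        print(spins, f"{area_eigenvalue(spins, gamma):.3e} m^2")
```

In this sketch the smallest nonzero area comes from a single puncture with j = 1/2, and every other value is a sum of such discrete contributions, which is the sense in which area is "quantized".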

The quantum state of spacetime is described in the theory by means of a mathematical structure called spin networks. Spin networks were initially introduced by Roger Penrose in abstract form, and later shown by Carlo Rovelli and Lee Smolin to derive naturally from a non-perturbative quantization of general relativity. Spin networks do not represent quantum states of a field in spacetime: they directly represent quantum states of spacetime.

The theory is based on the reformulation of general relativity known as Ashtekar variables, which represent geometric gravity using mathematical analogues of electric and magnetic fields.[39][40] In the quantum theory space is represented by a network structure called a spin network, evolving over time in discrete steps.[41][42][43][44]

The dynamics of the theory is today constructed in several versions. One version starts with the canonical quantization of general relativity. The analogue of the Schrödinger equation is a Wheeler–DeWitt equation, which can be defined in the theory.[45] In the covariant, or spinfoam formulation of the theory, the quantum dynamics is obtained via a sum over discrete versions of spacetime, called spinfoams. These represent histories of spin networks.

The most widely pursued possibilities for quantum gravity phenomenology include violations of Lorentz invariance, imprints of quantum gravitational effects in the cosmic microwave background (in particular its polarization), and decoherence induced by fluctuations in the space-time foam.

The BICEP2 experiment detected what was initially thought to be primordial B-mode polarization caused by gravitational waves in the early universe. If truly primordial, these waves were born as quantum fluctuations in gravity itself. Cosmologist Ken Olum (Tufts University) stated: "I think this is the only observational evidence that we have that actually shows that gravity is quantized....It's probably the only evidence of this that we will ever have."[56]

Overview

Much of the difficulty in meshing these theories at all energy scales comes from the different assumptions that these theories make on how the universe works. Quantum field theory depends on particle fields embedded in the flat space-time of special relativity. General relativity models gravity as a curvature within space-time that changes as a gravitational mass moves. Historically, the most obvious way of combining the two (such as treating gravity as simply another particle field) ran quickly into what is known as the renormalization problem. In the old-fashioned understanding of renormalization, gravity particles would attract each other and adding together all of the interactions results in many infinite values which cannot easily be cancelled out mathematically to yield sensible, finite results. This is in contrast with quantum electrodynamics where, given that the series still do not converge, the interactions sometimes evaluate to infinite results, but those are few enough in number to be removable via renormalization.

Effective field theories

Quantum gravity can be treated as an effective field theory. Effective quantum field theories come with some high-energy cutoff, beyond which we do not expect that the theory provides a good description of nature. The "infinities" then become large but finite quantities depending on this finite cutoff scale, and correspond to processes that involve very high energies near the fundamental cutoff. These quantities can then be absorbed into an infinite collection of coupling constants, and at energies well below the fundamental cutoff of the theory, only a finite number of these coupling constants need to be measured in order to make legitimate quantum-mechanical predictions to any desired precision. This same logic works just as well for the highly successful theory of low-energy pions as for quantum gravity. Indeed, the first quantum-mechanical corrections to graviton-scattering and Newton's law of gravitation have been explicitly computed[12] (although they are so astronomically small that we may never be able to measure them). In fact, gravity is in many ways a much better quantum field theory than the Standard Model, since it appears to be valid all the way up to its cutoff at the Planck scale.

While this confirms that quantum mechanics and gravity are indeed consistent at reasonable energies, it is clear that near or above the fundamental cutoff of our effective quantum theory of gravity (generally assumed to be of the order of the Planck scale), a new model of nature will be needed. Specifically, the problem of combining quantum mechanics and gravity becomes an issue only at very high energies, and may well require a totally new kind of model.

Quantum gravity theory for the highest energy scales

The general approach to deriving a quantum gravity theory that is valid at even the highest energy scales is to assume that such a theory will be simple and elegant and, accordingly, to study symmetries and other clues offered by current theories that might suggest ways to combine them into a comprehensive, unified theory. One problem with this approach is that it is unknown whether quantum gravity will actually conform to a simple and elegant theory, as it should resolve the dual conundrums of special relativity with regard to the uniformity of acceleration and gravity, and general relativity with regard to spacetime curvature.

Such a theory is required in order to understand problems involving the combination of very high energy and very small dimensions of space, such as the behavior of black holes, and the origin of the universe.

Quantum mechanics and general relativity

Gravity Probe B (GP-B) has measured spacetime curvature near Earth to test related models in application of Einstein's general theory of relativity.

The graviton

At present, one of the deepest problems in theoretical physics is harmonizing the theory of general relativity, which describes gravitation, and applications to large-scale structures (stars, planets, galaxies), with quantum mechanics, which describes the other three fundamental forces acting on the atomic scale. This problem must be put in the proper context, however. In particular, contrary to the popular claim that quantum mechanics and general relativity are fundamentally incompatible, one can demonstrate that the structure of general relativity essentially follows inevitably from the quantum mechanics of interacting theoretical spin-2 massless particles (called gravitons).[13][14][15][16][17]

While there is no concrete proof of the existence of gravitons, quantized theories of matter may necessitate their existence.[citation needed] Supporting this theory is the observation that all fundamental forces except gravity have one or more known messenger particles, leading researchers to believe that at least one most likely does exist; they have dubbed this hypothetical particle the graviton. The predicted find would result in the classification of the graviton as a "force particle" similar to the photon of the electromagnetic field. Many of the accepted notions of a unified theory of physics since the 1970s assume, and to some degree depend upon, the existence of the graviton. These include string theory, superstring theory, M-theory, and loop quantum gravity. Detection of gravitons is thus vital to the validation of various lines of research to unify quantum mechanics and relativity theory.

The dilaton

The dilaton made its first appearance in Kaluza–Klein theory, a five-dimensional theory that combined gravitation and electromagnetism. Generally, it appears in string theory. More recently, it has appeared in the lower-dimensional many-bodied gravity problem[18] based on the field theoretic approach of Roman Jackiw. The impetus arose from the fact that complete analytical solutions for the metric of a covariant N-body system have proven elusive in general relativity. To simplify the problem, the number of dimensions was lowered to (1+1), i.e. one spatial dimension and one temporal dimension. This model problem, known as R=T theory[19] (as opposed to the general G=T theory) was amenable to exact solutions in terms of a generalization of the Lambert W function. It was also found that the field equation governing the dilaton (derived from differential geometry) was the Schrödinger equation and consequently amenable to quantization.[20]

Thus, one had a theory which combined gravity, quantization and even the electromagnetic interaction, promising ingredients of a fundamental physical theory. It is worth noting that the outcome revealed a previously unknown and already existing natural link between general relativity and quantum mechanics. However, this theory needs to be generalized in (2+1) or (3+1) dimensions although, in principle, the field equations are amenable to such generalization as shown with the inclusion of a one-graviton process[21] and yielding the correct Newtonian limit in d dimensions if a dilaton is included. However, it is not yet clear what the fully generalized field equation governing the dilaton in (3+1) dimensions should be. This is further complicated by the fact that gravitons can propagate in (3+1) dimensions and consequently that would imply gravitons and dilatons exist in the real world. Moreover, detection of the dilaton is expected to be even more elusive than the graviton. However, since this approach allows for the combination of gravitational, electromagnetic and quantum effects, their coupling could potentially lead to a means of vindicating the theory, through cosmology and perhaps even experimentally.

Nonrenormalizability of gravity

General relativity, like electromagnetism, is a classical field theory. One might expect that, as with electromagnetism, there should be a corresponding quantum field theory.

However, gravity is perturbatively nonrenormalizable.[22][23] For a quantum field theory to be well-defined according to this understanding of the subject, it must be asymptotically free or asymptotically safe. The theory must be characterized by a choice of finitely many parameters, which could, in principle, be set by experiment. For example, in quantum electrodynamics, these parameters are the charge and mass of the electron, as measured at a particular energy scale.

On the other hand, in quantizing gravity, there are infinitely many independent parameters (counterterm coefficients) needed to define the theory. For a given choice of those parameters, one could make sense of the theory, but since we can never do infinitely many experiments to fix the values of every parameter, we do not have a meaningful physical theory:

- At low energies, the logic of the renormalization group tells us that, despite the unknown choices of these infinitely many parameters, quantum gravity will reduce to the usual Einstein theory of general relativity.

- On the other hand, if we could probe very high energies where quantum effects take over, then every one of the infinitely many unknown parameters would begin to matter, and we could make no predictions at all.
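The appearance of infinitely many parameters can be made plausible with a standard power-counting sketch. It is not part of the original text and rests on stated assumptions: four spacetime dimensions, natural units (ħ = c = 1), and pure Einstein gravity in which every vertex carries two powers of momentum and every propagator falls off as 1/k². The symbols L (loops), I (internal lines), V (vertices) and D (superficial degree of divergence) are introduced here for illustration.

```latex
\begin{align}
  [G] &= -2
      \quad\Longrightarrow\quad G = M_{\mathrm{P}}^{-2},
      \qquad \text{expansion parameter } G E^{2} = \left(E / M_{\mathrm{P}}\right)^{2}, \\
  D &= 4L - 2I + 2V, \qquad L = I - V + 1, \\
  \Longrightarrow\quad D &= 2L + 2 .
\end{align}
```

Under these assumptions D grows with the number of loops, so each order of perturbation theory can require counterterms with more derivatives than the last, each with its own undetermined coefficient. In quantum electrodynamics, by contrast, the corresponding count does not grow with L, which is why finitely many parameters suffice there. The first line also shows why all of these terms are negligible when E is far below the Planck mass.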

Any meaningful theory of quantum gravity that makes sense and is predictive at all energy scales must have some deep principle that reduces the infinitely many unknown parameters to a finite number that can then be measured.

- One possibility is that normal perturbation theory is not a reliable guide to the renormalizability of the theory, and that there really is a UV fixed point for gravity. Since this is a question of non-perturbative quantum field theory, it is difficult to find a reliable answer, but some people still pursue this option.

- Another possibility is that there are new symmetry principles that constrain the parameters and reduce them to a finite set. This is the route taken by string theory, where all of the excitations of the string essentially manifest themselves as new symmetries.

QG as an effective field theory

In an effective field theory, all but the first few of the infinite set of parameters in a non-renormalizable theory are suppressed by huge energy scales and hence can be neglected when computing low-energy effects. Thus, at least in the low-energy regime, the model is indeed a predictive quantum field theory.[12] (A very similar situation occurs for the very similar effective field theory of low-energy pions.) Furthermore, many theorists agree that even the Standard Model should really be regarded as an effective field theory as well, with "nonrenormalizable" interactions suppressed by large energy scales and whose effects have consequently not been observed experimentally.

Recent work[12] has shown that by treating general relativity as an effective field theory, one can actually make legitimate predictions for quantum gravity, at least for low-energy phenomena. An example is the well-known calculation of the tiny first-order quantum-mechanical correction to the classical Newtonian gravitational potential between two masses.
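How tiny the correction to the Newtonian potential is can be estimated with a short sketch. It assumes the form commonly quoted in the effective-field-theory literature, V(r) = −(G m₁ m₂ / r) [1 + 3G(m₁ + m₂)/(r c²) + (41/10π) Għ/(r² c³)]; the coefficients 3 and 41/(10π) are taken on that basis and are not stated in the text, so they should be treated as assumptions. The function name is hypothetical.

```python
import math

# Rounded reference values in SI units (approximate).
HBAR = 1.054571817e-34   # J*s
G = 6.67430e-11          # m^3 kg^-1 s^-2
C = 2.99792458e8         # m/s


def potential_corrections(m1, m2, r):
    """Fractional corrections to the Newtonian potential -G*m1*m2/r.

    Returns (classical, quantum):
      classical: leading general-relativistic term, 3 G (m1 + m2) / (r c^2)
      quantum:   leading quantum term, (41 / (10 pi)) G hbar / (r^2 c^3)
    The numerical coefficients are assumed, not taken from the text.
    """
    classical = 3.0 * G * (m1 + m2) / (r * C**2)
    quantum = (41.0 / (10.0 * math.pi)) * G * HBAR / (r**2 * C**3)
    return classical, quantum


if __name__ == "__main__":
    # Two 1 kg masses separated by 1 m.
    classical, quantum = potential_corrections(1.0, 1.0, 1.0)
    print(f"classical GR correction: {classical:.3e}")
    print(f"quantum correction:      {quantum:.3e}")
```

The quantum term is proportional to the square of the Planck length divided by r², which is why it is far smaller than the already tiny classical relativistic correction at any laboratory separation.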

Spacetime background dependence

A fundamental lesson of general relativity is that there is no fixed spacetime background, as found in Newtonian mechanics and special relativity; the spacetime geometry is dynamic. While easy to state in principle, this is among the hardest ideas in general relativity to understand, and its consequences are profound and not fully explored, even at the classical level. To a certain extent, general relativity can be seen to be a relational theory,[24] in which the only physically relevant information is the relationship between different events in space-time.

On the other hand, quantum mechanics has depended since its inception on a fixed background (non-dynamic) structure. In the case of quantum mechanics, it is time that is given and not dynamic, just as in Newtonian classical mechanics. In relativistic quantum field theory, just as in classical field theory, Minkowski spacetime is the fixed background of the theory.

String theory

Interaction in the subatomic world: world lines of point-like particles in the Standard Model or a world sheet swept up by closed strings in string theory

String theory can be seen as a generalization of quantum field theory where instead of point particles, string-like objects propagate in a fixed spacetime background, although the interactions among closed strings give rise to space-time in a dynamical way. Although string theory had its origins in the study of quark confinement and not of quantum gravity, it was soon discovered that the string spectrum contains the graviton, and that "condensation" of certain vibration modes of strings is equivalent to a modification of the original background. In this sense, string perturbation theory exhibits exactly the features one would expect of a perturbation theory that may exhibit a strong dependence on asymptotics (as seen, for example, in the AdS/CFT correspondence) which is a weak form of background dependence.

Background independent theories

Loop quantum gravity is the fruit of an effort to formulate a background-independent quantum theory.

Topological quantum field theory provided an example of background-independent quantum theory, but with no local degrees of freedom, and only finitely many degrees of freedom globally. This is inadequate to describe gravity in 3+1 dimensions, which has local degrees of freedom according to general relativity. In 2+1 dimensions, however, gravity is a topological field theory, and it has been successfully quantized in several different ways, including spin networks.

Semi-classical quantum gravity

Quantum field theory on curved (non-Minkowskian) backgrounds, while not a full quantum theory of gravity, has shown many promising early results. In an analogous way to the development of quantum electrodynamics in the early part of the 20th century (when physicists considered quantum mechanics in classical electromagnetic fields), the consideration of quantum field theory on a curved background has led to predictions such as black hole radiation.

Phenomena such as the Unruh effect, in which particles exist in certain accelerating frames but not in stationary ones, do not pose any difficulty when considered on a curved background (the Unruh effect occurs even in flat Minkowskian backgrounds). The vacuum state is the state with the least energy (and may or may not contain particles). See Quantum field theory in curved spacetime for a more complete discussion.
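The size of the two effects mentioned above can be illustrated with the standard textbook expressions for the temperature of black hole radiation, T = ħc³ / (8πG M k_B), and for the Unruh temperature seen by a uniformly accelerated observer, T = ħa / (2πc k_B). These formulas and the sample inputs (one solar mass, Earth's surface gravity) are not given in the text and are supplied here as assumptions of the sketch.

```python
import math

# Rounded reference values in SI units (approximate).
HBAR = 1.054571817e-34   # J*s
G = 6.67430e-11          # m^3 kg^-1 s^-2
C = 2.99792458e8         # m/s
K_B = 1.380649e-23       # Boltzmann constant, J/K

SOLAR_MASS = 1.989e30    # kg (approximate, illustrative input)
EARTH_GRAVITY = 9.81     # m/s^2 (illustrative input)


def hawking_temperature(mass_kg):
    """Temperature of radiation from a non-rotating black hole, in kelvin."""
    return HBAR * C**3 / (8.0 * math.pi * G * mass_kg * K_B)


def unruh_temperature(acceleration):
    """Temperature perceived by a uniformly accelerated observer, in kelvin."""
    return HBAR * acceleration / (2.0 * math.pi * C * K_B)


if __name__ == "__main__":
    print(f"Solar-mass black hole: {hawking_temperature(SOLAR_MASS):.3e} K")
    print(f"Observer at 9.81 m/s^2: {unruh_temperature(EARTH_GRAVITY):.3e} K")
```

Both temperatures come out many orders of magnitude below anything currently measurable for these inputs, which is consistent with these predictions remaining theoretical.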

Points of tension

There are other points of tension between quantum mechanics and general relativity.

- First, classical general relativity breaks down at singularities, and quantum mechanics becomes inconsistent with general relativity in the neighborhood of singularities (however, no one is certain that classical general relativity applies near singularities in the first place).

- Second, it is not clear how to determine the gravitational field of a particle, since under the Heisenberg uncertainty principle of quantum mechanics its location and velocity cannot be known with certainty. The resolution of these points may come from a better understanding of general relativity.[25]

- Third, there is the problem of time in quantum gravity. Time has a different meaning in quantum mechanics and general relativity and hence there are subtle issues to resolve when trying to formulate a theory which combines the two.[26]
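The uncertainty relation invoked in the second point can be stated in its standard form. The symbols Δx and Δp (the spreads in position and momentum) are notation introduced here for illustration and do not appear in the text above:

```latex
\Delta x \, \Delta p \;\geq\; \frac{\hbar}{2}
```

Since a particle of mass m has momentum p = mv, position and velocity cannot both be sharply defined, so the source of the particle's gravitational field has no definite location. This is the difficulty that the second point describes.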

Candidate theories

There are a number of proposed quantum gravity theories.[27] Currently, there is still no complete and consistent quantum theory of gravity, and the candidate models still need to overcome major formal and conceptual problems. They also face the common problem that, as yet, there is no way to put quantum gravity predictions to experimental tests, although there is hope for this to change as future data from cosmological observations and particle physics experiments become available.[28][29]

String theory

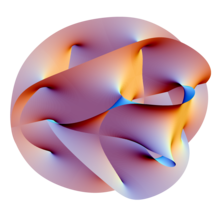

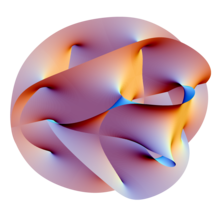

Projection of a Calabi–Yau manifold, one of the ways of compactifying the extra dimensions posited by string theory

One suggested starting point is ordinary quantum field theories which, after all, are successful in describing the other three basic fundamental forces in the context of the standard model of elementary particle physics. However, while this leads to an acceptable effective (quantum) field theory of gravity at low energies,[30] gravity turns out to be much more problematic at higher energies. Where, for ordinary field theories such as quantum electrodynamics, a technique known as renormalization is an integral part of deriving predictions which take into account higher-energy contributions,[31] gravity turns out to be nonrenormalizable: at high energies, applying the recipes of ordinary quantum field theory yields models that are devoid of all predictive power.[32]
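A standard dimensional-analysis argument gives a sense of why this happens. The sketch below uses natural units (ħ = c = 1); the symbols E (the energy of the process), M_Pl (the Planck mass) and α (the fine-structure constant) are notation introduced here for illustration:

```latex
% Newton's constant carries dimensions of inverse mass squared:
G = \frac{1}{M_{\mathrm{Pl}}^{2}}, \qquad M_{\mathrm{Pl}} \approx 1.22 \times 10^{19}\ \mathrm{GeV}

% The dimensionless strength of gravity at energy E therefore grows with E:
G E^{2} = \left( \frac{E}{M_{\mathrm{Pl}}} \right)^{2}

% By contrast, the coupling of quantum electrodynamics is dimensionless:
\alpha \approx \frac{1}{137}
```

Each further order in the perturbative expansion brings additional powers of G E², so the corrections remain small for E much less than M_Pl, consistent with the low-energy effective theory, but become of order one as E approaches M_Pl. At that point an ever-growing number of undetermined parameters would be needed, which is the loss of predictive power described above.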

One attempt to overcome these limitations is to replace ordinary quantum field theory, which is based on the classical concept of a point particle, with a quantum theory of one-dimensional extended objects: string theory.[33] At the energies reached in current experiments, these strings are indistinguishable from point-like particles, but, crucially, different modes of oscillation of one and the same type of fundamental string appear as particles with different (electric and other) charges. In this way, string theory promises to be a unified description of all particles and interactions.[34] The theory is successful in that one mode will always correspond to a graviton, the messenger particle of gravity; however, the price to pay is a set of unusual features, such as six extra dimensions of space in addition to the usual three for space and one for time.[35]

In what is called the second superstring revolution, it was conjectured that both string theory and a unification of general relativity and supersymmetry known as supergravity[36] form part of a hypothesized eleven-dimensional model known as M-theory, which would constitute a uniquely defined and consistent theory of quantum gravity.[37][38] As presently understood, however, string theory admits a very large number (10^500 by some estimates) of consistent vacua, comprising the so-called "string landscape". Sorting through this large family of solutions remains a major challenge.

Loop quantum gravity

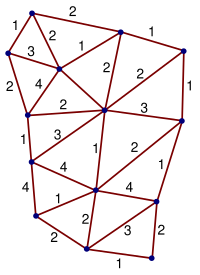

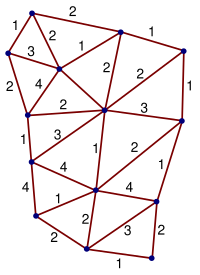

Simple spin network of the type used in loop quantum gravity

Loop quantum gravity is based, first of all, on the idea of taking seriously the insight of general relativity that spacetime is a dynamical field and therefore a quantum object. The second idea is that the quantum discreteness that determines the particle-like behavior of other field theories (for instance, the photons of the electromagnetic field) also affects the structure of space.

The main result of loop quantum gravity is the derivation of a granular structure of space at the Planck length. This is derived as follows. In the case of electromagnetism, the quantum operator representing the energy of each frequency of the field has a discrete spectrum. Therefore the energy of each frequency is quantized, and the quanta are the photons. In the case of gravity, the operators representing the area and the volume of each surface or space region have discrete spectra. Therefore the area and volume of any portion of space are quantized, and the quanta are elementary quanta of space. It follows that spacetime has an elementary quantum granular structure at the Planck scale, which cuts off the ultraviolet infinities of quantum field theory.
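To make the scale of this granularity concrete, the sketch below computes the Planck length, ℓ_P = √(ħG/c³), and evaluates the commonly quoted form of the loop quantum gravity area spectrum, A = 8πγℓ_P² Σ √(j(j+1)), where the sum runs over the spins j of the spin-network links crossing the surface. The Barbero–Immirzi parameter γ is not discussed in the text above; it is a free parameter of the theory, and the default value of 1.0 used here is purely illustrative. Function names are likewise hypothetical.

```python
import math

# SI values of the physical constants; the names are illustrative choices.
HBAR = 1.054571817e-34   # reduced Planck constant, J s
C = 2.99792458e8         # speed of light, m/s
G = 6.67430e-11          # Newton's gravitational constant, m^3 kg^-1 s^-2


def planck_length():
    """Planck length in metres, sqrt(hbar * G / c^3)."""
    return math.sqrt(HBAR * G / C**3)


def area_eigenvalue(spins, immirzi=1.0):
    """Area in m^2 of a surface crossed by links carrying the given spins.

    `spins` is an iterable of half-integers (0.5, 1, 1.5, ...).
    `immirzi` is the Barbero-Immirzi parameter; the default of 1.0 is an
    assumption made for illustration, not a value fixed by the text.
    """
    lp_squared = planck_length() ** 2
    return 8 * math.pi * immirzi * lp_squared * sum(
        math.sqrt(j * (j + 1)) for j in spins
    )


if __name__ == "__main__":
    print(f"Planck length: {planck_length():.4e} m")
    # Smallest non-zero area: a single link with spin 1/2.
    print(f"Area for one spin-1/2 link: {area_eigenvalue([0.5]):.4e} m^2")
```

Because the allowed spins are discrete, the area takes only discrete values, with a smallest non-zero value of order ℓ_P² (about 10^-70 m² up to the factor involving γ).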

The quantum state of spacetime is described in the theory by means of mathematical structures called spin networks. Spin networks were initially introduced by Roger Penrose in abstract form, and later shown by Carlo Rovelli and Lee Smolin to derive naturally from a non-perturbative quantization of general relativity. Spin networks do not represent quantum states of a field in spacetime: they directly represent quantum states of spacetime.

The theory is based on the reformulation of general relativity known as Ashtekar variables, which represent geometric gravity using mathematical analogues of electric and magnetic fields.[39][40] In the quantum theory space is represented by a network structure called a spin network, evolving over time in discrete steps.[41][42][43][44]

The dynamics of the theory is today constructed in several versions. One version starts with the canonical quantization of general relativity. The analogue of the Schrödinger equation is a Wheeler–DeWitt equation, which can be defined in the theory.[45] In the covariant, or spinfoam formulation of the theory, the quantum dynamics is obtained via a sum over discrete versions of spacetime, called spinfoams. These represent histories of spin networks.
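The analogy between the two equations can be written schematically as follows. The notation is an assumption made for illustration: ψ is an ordinary quantum state, Ψ is a wave functional of the spatial metric h_ij, and the calligraphic H denotes the Hamiltonian constraint operator.

```latex
% Schrödinger equation: the state evolves with respect to an external time t
i\hbar \, \frac{\partial}{\partial t} \, |\psi(t)\rangle = \hat{H} \, |\psi(t)\rangle

% Wheeler–DeWitt equation: a constraint on the state, with no external time
\hat{\mathcal{H}} \, \Psi[h_{ij}] = 0
```

The absence of a time derivative in the second equation is one concrete form of the problem of time in quantum gravity.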

Other approaches

There are a number of other approaches to quantum gravity. The approaches differ depending on which features of general relativity and quantum theory are accepted unchanged, and which features are modified.[46][47] Examples include:

- Acoustic metric and other analog models of gravity

- Asymptotic safety in quantum gravity

- Euclidean quantum gravity

- Causal dynamical triangulation[48]

- Causal fermion systems,[5][6][7][8][9][10] giving quantum mechanics, general relativity and quantum field theory as limiting cases.

- Causal sets[49]

- Covariant Feynman path integral approach

- Group field theory[50]

- Wheeler–DeWitt equation

- Geometrodynamics

- Hořava–Lifshitz gravity

- MacDowell–Mansouri action

- Noncommutative geometry

- Path-integral based models of quantum cosmology[51]

- Regge calculus

- String-nets giving rise to gapless helicity ±2 excitations with no other gapless excitations[52]

- Superfluid vacuum theory a.k.a. theory of BEC vacuum

- Supergravity

- Twistor theory[53]

- Canonical quantum gravity

- E8 Theory

Weinberg–Witten theorem

In quantum field theory, the Weinberg–Witten theorem places some constraints on theories of composite gravity/emergent gravity. However, recent developments attempt to show that if locality is only approximate and the holographic principle is correct, the Weinberg–Witten theorem would not be valid.[citation needed]

Experimental tests

As was emphasized above, quantum gravitational effects are extremely weak and therefore difficult to test. For this reason, the possibility of experimentally testing quantum gravity had not received much attention prior to the late 1990s. However, in the past decade, physicists have realized that evidence for quantum gravitational effects can guide the development of the theory. Since theoretical development has been slow, the field of phenomenological quantum gravity, which studies the possibility of experimental tests, has obtained increased attention.[54][55]

The most widely pursued possibilities for quantum gravity phenomenology include violations of Lorentz invariance, imprints of quantum gravitational effects in the cosmic microwave background (in particular its polarization), and decoherence induced by fluctuations in the space-time foam.

The BICEP2 experiment detected what was initially thought to be primordial B-mode polarization caused by gravitational waves in the early universe. If truly primordial, these waves were born as quantum fluctuations in gravity itself. Cosmologist Ken Olum (Tufts University) stated: "I think this is the only observational evidence that we have that actually shows that gravity is quantized....It's probably the only evidence of this that we will ever have."[56]

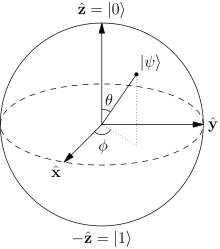

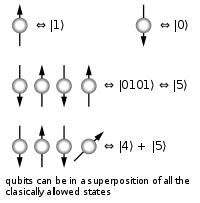
