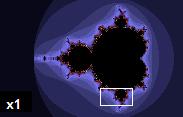

The same fractal as above, magnified 6-fold. Same patterns reappear, making the exact scale being examined difficult to determine.

In mathematics, a fractal is a detailed, recursive, and infinitely self-similar mathematical set whose Hausdorff dimension strictly exceeds its topological dimension and which is encountered ubiquitously in nature. Fractals exhibit similar patterns at increasingly small scales, also known as expanding symmetry or unfolding symmetry. If this replication is exactly the same at every scale, as in the Menger sponge, it is called a self-similar pattern. Fractals can also be nearly the same at different levels, as illustrated here in small magnifications of the Mandelbrot set.

One way that fractals are different from finite geometric figures is the way in which they scale. Doubling the edge lengths of a polygon multiplies its area by four, which is two (the ratio of the new to the old side length) raised to the power of two (the dimension of the space the polygon resides in). Likewise, if the radius of a sphere is doubled, its volume scales by eight, which is two (the ratio of the new to the old radius) to the power of three (the dimension that the sphere resides in). However, if a fractal's one-dimensional lengths are all doubled, the spatial content of the fractal scales by a power that is not necessarily an integer. This power is called the fractal dimension of the fractal, and it usually exceeds the fractal's topological dimension.
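The scaling rule described above can be written as a short worked relation. The symbols $s$ (the factor by which lengths are scaled), $N$ (the factor by which the spatial content grows), and $D$ (the scaling exponent) are notation introduced here for illustration and do not come from the source:

$$
N = s^{D} \quad\Longrightarrow\quad D = \frac{\log N}{\log s}
$$

$$
\text{polygon: } D = \frac{\log 4}{\log 2} = 2, \qquad \text{sphere: } D = \frac{\log 8}{\log 2} = 3
$$

For a fractal, the same ratio $\log N / \log s$ need not be an integer.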

As mathematical equations, fractals are usually nowhere differentiable. An infinite fractal curve can be conceived of as winding through space differently from an ordinary line: although it is still topologically 1-dimensional, its fractal dimension indicates that it also resembles a surface.

Sierpinski carpet (to level 6), a fractal with a topological dimension of 1 and a Hausdorff dimension of 1.893

The mathematical roots of fractals have been traced through a formal path of published works, starting in the 17th century with notions of recursion, then moving through increasingly rigorous mathematical treatment of the concept to the study of continuous but not differentiable functions in the 19th century in the seminal work of Bernard Bolzano, Bernhard Riemann, and Karl Weierstrass, and on to the coining of the word fractal in the 20th century, followed by a burgeoning of interest in fractals and computer-based modelling. The term "fractal" was first used by mathematician Benoit Mandelbrot in 1975. Mandelbrot based it on the Latin frāctus meaning "broken" or "fractured", and used it to extend the concept of theoretical fractional dimensions to geometric patterns in nature.

There is some disagreement amongst authorities about how the concept of a fractal should be formally defined. Mandelbrot himself summarized it as "beautiful, damn hard, increasingly useful. That's fractals." More formally, in 1982 Mandelbrot stated that "A fractal is by definition a set for which the Hausdorff-Besicovitch dimension strictly exceeds the topological dimension." Later, seeing this as too restrictive, he simplified and expanded the definition to: "A fractal is a shape made of parts similar to the whole in some way." Still later, Mandelbrot settled on this use of the language: "...to use fractal without a pedantic definition, to use fractal dimension as a generic term applicable to all the variants."

The consensus is that theoretical fractals are infinitely self-similar, iterated, and detailed mathematical constructs having fractal dimensions, of which many examples have been formulated and studied in great depth. Fractals are not limited to geometric patterns, but can also describe processes in time. Fractal patterns with various degrees of self-similarity have been rendered or studied in images, structures and sounds and found in nature, technology, art, architecture and law. Fractals are of particular relevance in the field of chaos theory, since the graphs of most chaotic processes are fractals.

Introduction

The word "fractal" often has different connotations for laypeople than for mathematicians; the layperson is more likely to be familiar with fractal art than with the mathematical conception. The mathematical concept is difficult to define formally even for mathematicians, but key features can be understood with little mathematical background. The feature of "self-similarity", for instance, is easily understood by analogy to zooming in with a lens or other device that zooms in on digital images to uncover finer, previously invisible, new structure. If this is done on fractals, however, no new detail appears; nothing changes and the same pattern repeats over and over, or for some fractals, nearly the same pattern reappears over and over. Self-similarity itself is not necessarily counter-intuitive (e.g., people have pondered self-similarity informally such as in the infinite regress in parallel mirrors or the homunculus, the little man inside the head of the little man inside the head ...). The difference for fractals is that the pattern reproduced must be detailed.

This idea of being detailed relates to another feature that can be understood without mathematical background: Having a fractional or fractal dimension greater than its topological dimension, for instance, refers to how a fractal scales compared to how geometric shapes are usually perceived. A regular line, for instance, is conventionally understood to be 1-dimensional; if such a curve is divided into pieces each 1/3 the length of the original, there are always 3 equal pieces. In contrast, consider the Koch snowflake. It is also 1-dimensional for the same reason as the ordinary line, but it has, in addition, a fractal dimension greater than 1 because of how its detail can be measured. The fractal curve divided into parts 1/3 the length of the original line becomes 4 pieces rearranged to repeat the original detail, and this unusual relationship is the basis of its fractal dimension.
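The piece counts quoted above can be turned into a number. The following is a minimal sketch, assuming the standard similarity-dimension relation D = log(pieces) / log(scale factor); the function name `similarity_dimension` and its parameter names are hypothetical and chosen only for this illustration.

```python
import math


def similarity_dimension(pieces: int, scale: float) -> float:
    """Return D such that pieces == scale ** D.

    pieces: number of self-similar copies needed to rebuild the shape
    scale:  how many times smaller each copy is than the whole
            (3 means each copy is 1/3 the size)
    """
    if pieces < 1 or scale <= 1:
        raise ValueError("need pieces >= 1 and scale > 1")
    return math.log(pieces) / math.log(scale)


# Ordinary line: 3 pieces, each 1/3 the length of the original.
line = similarity_dimension(3, 3)
# Koch curve: 4 pieces, each 1/3 the length of the original.
koch = similarity_dimension(4, 3)
print(f"line: {line:.4f}, Koch curve: {koch:.4f}")
```

The line comes out at exactly 1, while the Koch curve comes out at about 1.26, a value strictly between 1 and 2.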

This also leads to understanding a third feature, that fractals as mathematical equations are "nowhere differentiable". In a concrete sense, this means fractals cannot be measured in traditional ways. To elaborate, in trying to find the length of a wavy non-fractal curve, one could find straight segments of some measuring tool small enough to lay end to end over the waves, where the pieces could get small enough to be considered to conform to the curve in the normal manner of measuring with a tape measure. But in measuring a wavy fractal curve such as the Koch snowflake, one would never find a small enough straight segment to conform to the curve, because the wavy pattern would always re-appear, albeit at a smaller size, essentially pulling a little more of the tape measure into the total length measured each time one attempted to fit it tighter and tighter to the curve.
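The tape-measure argument can be checked numerically. The sketch below is an illustration under stated assumptions, not a reference implementation: it builds successive approximations of one side of the Koch snowflake as a polyline of complex numbers and measures each one. The helper names `koch_step`, `polyline_length`, and `koch_lengths` are hypothetical.

```python
import cmath
import math


def koch_step(points):
    """Replace the middle third of every segment with an equilateral bump."""
    rot = cmath.exp(1j * math.pi / 3)  # rotation by 60 degrees
    out = [points[0]]
    for a, b in zip(points, points[1:]):
        d = (b - a) / 3          # one third of the segment
        p1 = a + d               # end of the first third
        p3 = a + 2 * d           # start of the last third
        p2 = p1 + d * rot        # tip of the bump
        out.extend([p1, p2, p3, b])
    return out


def polyline_length(points):
    """Total length of the straight segments joining consecutive points."""
    return sum(abs(b - a) for a, b in zip(points, points[1:]))


def koch_lengths(levels):
    """Measured length after 0, 1, ..., levels refinements of a unit segment."""
    points = [0j, 1 + 0j]
    lengths = [polyline_length(points)]
    for _ in range(levels):
        points = koch_step(points)
        lengths.append(polyline_length(points))
    return lengths


if __name__ == "__main__":
    for n, length in enumerate(koch_lengths(6)):
        print(f"ruler = 3^-{n}: measured length = {length:.4f}")
```

Each refinement shrinks the ruler by a factor of 3 and multiplies the measured length by 4/3, so the total grows without bound instead of settling on a value.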

History

A Koch snowflake is a fractal that begins with an equilateral triangle and then replaces the middle third of every line segment with a pair of line segments that form an equilateral bump

The history of fractals traces a path from chiefly theoretical studies to modern applications in computer graphics, with several notable people contributing canonical fractal forms along the way. According to Pickover, the mathematics behind fractals began to take shape in the 17th century when the mathematician and philosopher Gottfried Leibniz pondered recursive self-similarity (although he made the mistake of thinking that only the straight line was self-similar in this sense). In his writings, Leibniz used the term "fractional exponents", but lamented that "Geometry" did not yet know of them. Indeed, according to various historical accounts, after that point few mathematicians tackled the issues, and the work of those who did remained obscured largely because of resistance to such unfamiliar emerging concepts, which were sometimes referred to as mathematical "monsters". Thus, it was not until two centuries had passed that, on July 18, 1872, Karl Weierstrass presented to the Royal Prussian Academy of Sciences the first definition of a function with a graph that would today be considered a fractal, having the non-intuitive property of being everywhere continuous but nowhere differentiable. In addition, the difference quotient becomes arbitrarily large as the summation index increases. Not long after that, in 1883, Georg Cantor, who attended lectures by Weierstrass, published examples of subsets of the real line known as Cantor sets, which had unusual properties and are now recognized as fractals. Also in the last part of that century, Felix Klein and Henri Poincaré introduced a category of fractal that has come to be called "self-inverse" fractals.

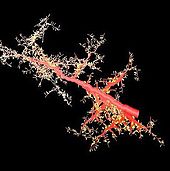

A Julia set, a fractal related to the Mandelbrot set

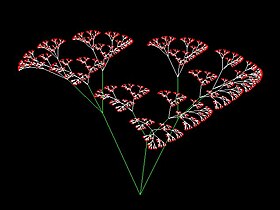

A Sierpinski triangle can be generated by a fractal tree.

One of the next milestones came in 1904, when Helge von Koch, extending ideas of Poincaré and dissatisfied with Weierstrass's abstract and analytic definition, gave a more geometric definition including hand-drawn images of a similar function, which is now called the Koch snowflake. Another milestone came a decade later in 1915, when Wacław Sierpiński constructed his famous triangle and then, one year later, his carpet. By 1918, two French mathematicians, Pierre Fatou and Gaston Julia, though working independently, arrived essentially simultaneously at results describing what is now seen as fractal behaviour associated with mapping complex numbers and iterative functions, leading to further ideas about attractors and repellors (i.e., points that attract or repel other points), which have become very important in the study of fractals. Very shortly after that work was submitted, by March 1918, Felix Hausdorff expanded the definition of "dimension", significantly for the evolution of the definition of fractals, to allow for sets to have noninteger dimensions. The idea of self-similar curves was taken further by Paul Lévy, who, in his 1938 paper Plane or Space Curves and Surfaces Consisting of Parts Similar to the Whole, described a new fractal curve, the Lévy C curve.

A strange attractor that exhibits multifractal scaling

Uniform mass center triangle fractal

2x 120 degrees recursive IFS

Different researchers have postulated that without the aid of modern computer graphics, early investigators were limited to what they could depict in manual drawings, so lacked the means to visualize the beauty and appreciate some of the implications of many of the patterns they had discovered (the Julia set, for instance, could only be visualized through a few iterations as very simple drawings). That changed, however, in the 1960s, when Benoit Mandelbrot started writing about self-similarity in papers such as How Long Is the Coast of Britain? Statistical Self-Similarity and Fractional Dimension, which built on earlier work by Lewis Fry Richardson. In 1975 Mandelbrot solidified hundreds of years of thought and mathematical development in coining the word "fractal" and illustrated his mathematical definition with striking computer-constructed visualizations. These images, such as of his canonical Mandelbrot set, captured the popular imagination; many of them were based on recursion, leading to the popular meaning of the term "fractal".

In 1980, Loren Carpenter gave a presentation at SIGGRAPH where he introduced his software for generating and rendering fractally generated landscapes.

Characteristics

One often-cited description that Mandelbrot published to describe geometric fractals is "a rough or fragmented geometric shape that can be split into parts, each of which is (at least approximately) a reduced-size copy of the whole"; this is generally helpful but limited. Authors disagree on the exact definition of fractal, but most usually elaborate on the basic ideas of self-similarity and an unusual relationship with the space a fractal is embedded in. One point agreed on is that fractal patterns are characterized by fractal dimensions, but whereas these numbers quantify complexity (i.e., changing detail with changing scale), they neither uniquely describe nor specify details of how to construct particular fractal patterns. In 1975 when Mandelbrot coined the word "fractal", he did so to denote an object whose Hausdorff–Besicovitch dimension is greater than its topological dimension. It has been noted that this dimensional requirement is not met by fractal space-filling curves such as the Hilbert curve. According to Falconer, rather than being strictly defined, fractals should, in addition to being nowhere differentiable and able to have a fractal dimension, be generally characterized by a gestalt of the following features:

- Self-similarity, which may be manifested as:

  - Exact self-similarity: identical at all scales; e.g., Koch snowflake

  - Quasi self-similarity: approximates the same pattern at different scales; may contain small copies of the entire fractal in distorted and degenerate forms; e.g., the Mandelbrot set's satellites are approximations of the entire set, but not exact copies.

  - Statistical self-similarity: repeats a pattern stochastically so numerical or statistical measures are preserved across scales; e.g., randomly generated fractals; the well-known example of the coastline of Britain, for which one would not expect to find a segment scaled and repeated as neatly as the repeated unit that defines, for example, the Koch snowflake

  - Qualitative self-similarity: as in a time series

  - Multifractal scaling: characterized by more than one fractal dimension or scaling rule

- Fine or detailed structure at arbitrarily small scales. A consequence of this structure is fractals may have emergent properties (related to the next criterion in this list).

- Irregularity locally and globally that is not easily described in traditional Euclidean geometric language. For images of fractal patterns, this has been expressed by phrases such as "smoothly piling up surfaces" and "swirls upon swirls".

- Simple and "perhaps recursive" definitions.

Common techniques for generating fractals

Images of fractals can be created by fractal generating programs. Because of the butterfly effect, a small change in a single variable can have an unpredictable outcome.

- Iterated function systems (IFS) – use fixed geometric replacement rules; may be stochastic or deterministic; e.g., Koch snowflake, Cantor set, Haferman carpet, Sierpinski carpet, Sierpinski gasket, Peano curve, Harter-Heighway dragon curve, T-Square, Menger sponge

- Strange attractors – use iterations of a map or solutions of a system of initial-value differential or difference equations that exhibit chaos

- L-systems – use string rewriting; may resemble branching patterns, such as in plants, biological cells (e.g., neurons and immune system cells), blood vessels, pulmonary structure, etc. or turtle graphics patterns such as space-filling curves and tilings

- Escape-time fractals – use a formula or recurrence relation at each point in a space (such as the complex plane); usually quasi-self-similar; also known as "orbit" fractals; e.g., the Mandelbrot set, Julia set, Burning Ship fractal, Nova fractal and Lyapunov fractal. The 2D vector fields that are generated by one or two iterations of escape-time formulae also give rise to a fractal form when points (or pixel data) are passed through this field repeatedly.

- Random fractals – use stochastic rules; e.g., Lévy flight, percolation clusters, self-avoiding walks, fractal landscapes, trajectories of Brownian motion and the Brownian tree (i.e., dendritic fractals generated by modeling diffusion-limited aggregation or reaction-limited aggregation clusters).

A fractal generated by a finite subdivision rule for an alternating link

- Finite subdivision rules use a recursive topological algorithm for refining tilings and they are similar to the process of cell division. The iterative processes used in creating the Cantor set and the Sierpinski carpet are examples of finite subdivision rules, as is barycentric subdivision.
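As an illustration of one technique from the list above, the escape-time approach can be sketched for the Mandelbrot set. This is a minimal sketch, not a production renderer: it assumes the usual recurrence z → z² + c starting from z = 0 and a bailout radius of 2. The function names `escape_time` and `render_ascii`, the default iteration limit, and the viewing window are all choices made for this example.

```python
def escape_time(c, max_iter=50, bailout=2.0):
    """Count iterations of z -> z*z + c until |z| exceeds the bailout radius.

    Returns max_iter when the orbit has not escaped, which is treated here
    as "probably inside the set" (an approximation that improves with max_iter).
    """
    z = 0j
    for n in range(max_iter):
        if abs(z) > bailout:
            return n
        z = z * z + c
    return max_iter


def render_ascii(width=60, height=24, max_iter=50):
    """Return text rows: '#' for points that never escaped, '.' otherwise."""
    rows = []
    for j in range(height):
        y = -1.2 + 2.4 * j / (height - 1)      # imaginary part, -1.2 .. 1.2
        row = ""
        for i in range(width):
            x = -2.0 + 2.6 * i / (width - 1)   # real part, -2.0 .. 0.6
            inside = escape_time(complex(x, y), max_iter) == max_iter
            row += "#" if inside else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    print("\n".join(render_ascii()))
```

Colouring each point by its escape count, instead of the two-symbol scheme used here, is what produces the banded images commonly associated with this family of fractals.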

Simulated fractals

Fractal patterns have been modeled extensively, albeit within a range of scales rather than infinitely, owing to the practical limits of physical time and space. Models may simulate theoretical fractals or natural phenomena with fractal features. The outputs of the modelling process may be highly artistic renderings, outputs for investigation, or benchmarks for fractal analysis. Some specific applications of fractals to technology are listed elsewhere. Images and other outputs of modelling are normally referred to as being "fractals" even if they do not have strictly fractal characteristics, such as when it is possible to zoom into a region of the fractal image that does not exhibit any fractal properties. Also, these may include calculation or display artifacts which are not characteristics of true fractals.

Modeled fractals may be sounds, digital images, electrochemical patterns, circadian rhythms, etc. Fractal patterns have been reconstructed in physical 3-dimensional space and virtually, often called "in silico" modeling. Models of fractals are generally created using fractal-generating software that implements techniques such as those outlined above. As one illustration, trees, ferns, cells of the nervous system, blood and lung vasculature, and other branching patterns in nature can be modeled on a computer by using recursive algorithms and L-systems techniques. The recursive nature of some patterns is obvious in certain examples—a branch from a tree or a frond from a fern is a miniature replica of the whole: not identical, but similar in nature. Similarly, random fractals have been used to describe/create many highly irregular real-world objects. A limitation of modeling fractals is that resemblance of a fractal model to a natural phenomenon does not prove that the phenomenon being modeled is formed by a process similar to the modeling algorithms.
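The L-system approach to branching patterns mentioned above can be sketched in a few lines. The rule set below is an illustrative choice made for this example, not one taken from the source, and the names `expand` and `trace` are hypothetical. The sketch rewrites a string repeatedly, then interprets it with a simple turtle: `F` draws a segment, `+` and `-` turn, and `[` and `]` save and restore the turtle's state so that branches can fork.

```python
import math


def expand(axiom, rules, iterations):
    """Apply the rewriting rules to every symbol, `iterations` times."""
    s = axiom
    for _ in range(iterations):
        s = "".join(rules.get(ch, ch) for ch in s)
    return s


def trace(commands, angle_deg=25.0, step=1.0):
    """Interpret a command string and return the drawn line segments."""
    x, y, heading = 0.0, 0.0, 90.0   # start at the origin, pointing up
    stack = []                       # saved (x, y, heading) states
    segments = []
    for ch in commands:
        if ch == "F":                # move forward, drawing a segment
            nx = x + step * math.cos(math.radians(heading))
            ny = y + step * math.sin(math.radians(heading))
            segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif ch == "+":              # turn left
            heading += angle_deg
        elif ch == "-":              # turn right
            heading -= angle_deg
        elif ch == "[":              # start a branch
            stack.append((x, y, heading))
        elif ch == "]":              # return to where the branch started
            x, y, heading = stack.pop()
        # any other symbol (such as X) only guides rewriting and draws nothing
    return segments


# Hypothetical branching rules chosen for illustration.
RULES = {"X": "F[+X][-X]FX", "F": "FF"}

if __name__ == "__main__":
    plant = expand("X", RULES, 4)
    print(len(trace(plant)), "segments")
```

The returned segments can be passed to any plotting tool. Because each `X` is replaced by a smaller copy of the whole rule, every branch repeats the overall shape, similar but not identical to the whole once the branch angles are applied.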

Natural phenomena with fractal features

Approximate fractals found in nature display self-similarity over extended, but finite, scale ranges. The connection between fractals and leaves, for instance, is currently being used to determine how much carbon is contained in trees. Phenomena known to have fractal features include:

- Actin cytoskeleton

- Algae

- Animal coloration patterns

- Blood vessels and pulmonary vessels

- Coastlines

- Craters

- Crystals

- DNA

- Earthquakes

- Fault lines

- Geometrical optics

- Heart rates

- Heart sounds

- Lightning bolts

- Mountain goat horns

- Mountain ranges

- Ocean waves

- Pineapple

- Psychological subjective perception

- Proteins

- Rings of Saturn

- River networks

- Romanesco broccoli

- Snowflakes

- Soil pores

- Surfaces in turbulent flows

- Trees

- Brownian motion (generated by a one-dimensional Wiener process)

- High voltage breakdown within a 4 in (100 mm) block of acrylic creates a fractal Lichtenberg figure

In creative works

Since 1999, more than 10 scientific groups have performed fractal analysis on over 50 of Jackson Pollock's (1912–1956) paintings, which were created by pouring paint directly onto his horizontal canvases. Recently, fractal analysis has been used to achieve a 93% success rate in distinguishing real from imitation Pollocks. Cognitive neuroscientists have shown that Pollock's fractals induce the same stress-reduction in observers as computer-generated fractals and nature's fractals.

Decalcomania, a technique used by artists such as Max Ernst, can produce fractal-like patterns. It involves pressing paint between two surfaces and pulling them apart.

Cyberneticist Ron Eglash has suggested that fractal geometry and mathematics are prevalent in African art, games, divination, trade, and architecture. Circular houses appear in circles of circles, rectangular houses in rectangles of rectangles, and so on. Such scaling patterns can also be found in African textiles, sculpture, and even cornrow hairstyles. Hokky Situngkir has also suggested similar properties in Indonesian traditional art, batik, and ornaments found in traditional houses.

In a 1996 interview with Michael Silverblatt, David Foster Wallace admitted that the structure of the first draft of Infinite Jest he gave to his editor Michael Pietsch was inspired by fractals, specifically the Sierpinski triangle (a.k.a. Sierpinski gasket), but that the edited novel is "more like a lopsided Sierpinsky Gasket".