The word probability has been used in a variety of ways since it was first applied to the mathematical study of games of chance. Does probability measure the real, physical, tendency of something to occur, or is it a measure of how strongly one believes it will occur, or does it draw on both these elements? In answering such questions, mathematicians interpret the probability values of probability theory.

There are two broad categories of probability interpretations which can be called "physical" and "evidential" probabilities. Physical probabilities, which are also called objective or frequency probabilities, are associated with random physical systems such as roulette wheels, rolling dice and radioactive atoms. In such systems, a given type of event (such as a die yielding a six) tends to occur at a persistent rate, or "relative frequency", in a long run of trials. Physical probabilities either explain, or are invoked to explain, these stable frequencies. The two main kinds of theory of physical probability are frequentist accounts (such as those of Venn, Reichenbach and von Mises) and propensity accounts (such as those of Popper, Miller, Giere and Fetzer).

Evidential probability, also called Bayesian probability, can be assigned to any statement whatsoever, even when no random process is involved, as a way to represent its subjective plausibility, or the degree to which the statement is supported by the available evidence. On most accounts, evidential probabilities are considered to be degrees of belief, defined in terms of dispositions to gamble at certain odds. The four main evidential interpretations are the classical (e.g. Laplace's) interpretation, the subjective interpretation (de Finetti and Savage), the epistemic or inductive interpretation (Ramsey, Cox) and the logical interpretation (Keynes and Carnap). There are also evidential interpretations of probability covering groups, which are often labelled as 'intersubjective' (proposed by Gillies and Rowbottom).

Some interpretations of probability are associated with approaches to statistical inference, including theories of estimation and hypothesis testing. The physical interpretation, for example, is taken by followers of "frequentist" statistical methods, such as Ronald Fisher, Jerzy Neyman, and Egon Pearson. Statisticians of the opposing Bayesian school typically accept the frequency interpretation when it makes sense (although not as a definition), but there is less agreement regarding physical probabilities. Bayesians consider the calculation of evidential probabilities to be both valid and necessary in statistics. This article, however, focuses on the interpretations of probability rather than theories of statistical inference.

The terminology of this topic is rather confusing, in part because probabilities are studied within a variety of academic fields. The word "frequentist" is especially tricky. To philosophers it refers to a particular theory of physical probability, one that has more or less been abandoned. To scientists, on the other hand, "frequentist probability" is just another name for physical (or objective) probability. Those who promote Bayesian inference view "frequentist statistics" as an approach to statistical inference that is based on the frequency interpretation of probability, usually relying on the law of large numbers and characterized by what is called 'Null Hypothesis Significance Testing' (NHST). Also the word "objective", as applied to probability, sometimes means exactly what "physical" means here, but is also used of evidential probabilities that are fixed by rational constraints, such as logical and epistemic probabilities.

It is unanimously agreed that statistics depends somehow on probability. But, as to what probability is and how it is connected with statistics, there has seldom been such complete disagreement and breakdown of communication since the Tower of Babel. Doubtless, much of the disagreement is merely terminological and would disappear under sufficiently sharp analysis.

— (Savage, 1954, p 2)

Philosophy

The philosophy of probability presents problems chiefly in matters of epistemology and the uneasy interface between mathematical concepts and ordinary language as it is used by non-mathematicians. Probability theory is an established field of study in mathematics. It has its origins in correspondence discussing the mathematics of games of chance between Blaise Pascal and Pierre de Fermat in the seventeenth century, and was formalized and rendered axiomatic as a distinct branch of mathematics by Andrey Kolmogorov in the twentieth century. In axiomatic form, mathematical statements about probability theory carry the same sort of epistemological confidence within the philosophy of mathematics as is shared by other mathematical statements.

The mathematical analysis originated in observations of the behaviour of game equipment such as playing cards and dice, which are designed specifically to introduce random and equalized elements; in mathematical terms, they are subjects of indifference. This is not the only way probabilistic statements are used in ordinary human language: when people say that "it will probably rain", they typically do not mean that the outcome of rain versus not-rain is a random factor that the odds currently favor; instead, such statements are perhaps better understood as qualifying their expectation of rain with a degree of confidence. Likewise, when it is written that "the most probable explanation" of the name of Ludlow, Massachusetts "is that it was named after Roger Ludlow", what is meant here is not that Roger Ludlow is favored by a random factor, but rather that this is the most plausible explanation of the evidence, which admits other, less likely explanations.

Thomas Bayes attempted to provide a logic that could handle varying degrees of confidence; as such, Bayesian probability is an attempt to recast the representation of probabilistic statements as an expression of the degree of confidence by which the beliefs they express are held.

Though probability initially had somewhat mundane motivations, its modern influence and use are widespread, ranging from evidence-based medicine, through Six Sigma, all the way to probabilistically checkable proofs and the string theory landscape.

| | Classical | Frequentist | Subjective | Propensity |
|---|---|---|---|---|
| Main hypothesis | Principle of indifference | Frequency of occurrence | Degree of belief | Degree of causal connection |
| Conceptual basis | Hypothetical symmetry | Past data and reference class | Knowledge and intuition | Present state of system |
| Conceptual approach | Conjectural | Empirical | Subjective | Metaphysical |
| Single case possible | Yes | No | Yes | Yes |
| Precise | Yes | No | No | Yes |
| Problems | Ambiguity in principle of indifference | Circular definition | Reference class problem | Disputed concept |

Classical definition

The first attempt at mathematical rigour in the field of probability, championed by Pierre-Simon Laplace, is now known as the classical definition. Developed from studies of games of chance (such as rolling dice), it states that probability is shared equally between all the possible outcomes, provided these outcomes can be deemed equally likely. (3.1)

The theory of chance consists in reducing all the events of the same kind to a certain number of cases equally possible, that is to say, to such as we may be equally undecided about in regard to their existence, and in determining the number of cases favorable to the event whose probability is sought. The ratio of this number to that of all the cases possible is the measure of this probability, which is thus simply a fraction whose numerator is the number of favorable cases and whose denominator is the number of all the cases possible.

— Pierre-Simon Laplace, A Philosophical Essay on Probabilities

This can be represented mathematically as follows: if a random experiment can result in $N$ mutually exclusive and equally likely outcomes and if $N_A$ of these outcomes result in the occurrence of the event $A$, the probability of $A$ is defined by

$$P(A) = \frac{N_A}{N}.$$

There are two clear limitations to the classical definition. Firstly, it is applicable only to situations in which there is a finite number of possible outcomes. But some important random experiments, such as tossing a coin until it lands heads, give rise to an infinite set of outcomes. Secondly, it requires determining in advance that all the possible outcomes are equally likely, for instance by symmetry considerations, without relying on the notion of probability, since doing so would make the definition circular.
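As a minimal sketch of the classical ratio (the number of favorable cases over the number of all equally possible cases), the following Python snippet enumerates the outcomes of two six-sided dice and counts those whose faces sum to seven. The function name `classical_probability` and the two-dice example are illustrative assumptions, not taken from Laplace; equal likelihood is simply assumed by giving every outcome one count.

```python
from fractions import Fraction
from itertools import product

def classical_probability(outcomes, is_favorable):
    """Return N_A / N for a finite collection of outcomes assumed equally likely."""
    outcomes = list(outcomes)
    if not outcomes:
        raise ValueError("the classical definition needs at least one outcome")
    # N_A: how many of the N outcomes count as an occurrence of the event.
    favorable = sum(1 for outcome in outcomes if is_favorable(outcome))
    return Fraction(favorable, len(outcomes))

# Sample space: ordered pairs of faces from two six-sided dice (36 outcomes).
two_dice = list(product(range(1, 7), repeat=2))
p_seven = classical_probability(two_dice, lambda roll: sum(roll) == 7)
print(p_seven)  # 1/6
```

The sketch works only because the sample space is finite and the equal weighting is taken for granted, which are exactly the two limitations of the classical definition.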

Frequentism

Frequentists posit that the probability of an event is its relative frequency over time (3.4), i.e., its relative frequency of occurrence after repeating a process a large number of times under similar conditions. This is also known as aleatory probability. The events are assumed to be governed by some random physical phenomena, which are either phenomena that are predictable, in principle, with sufficient information (see determinism), or phenomena which are essentially unpredictable. Examples of the first kind include tossing dice or spinning a roulette wheel; an example of the second kind is radioactive decay. In the case of tossing a fair coin, frequentists say that the probability of getting heads is 1/2, not because there are two equally likely outcomes but because repeated series of large numbers of trials demonstrate that the empirical frequency converges to the limit 1/2 as the number of trials goes to infinity.

If we denote by $n_a$ the number of occurrences of an event $\mathcal{A}$ in $n$ trials, then if $\lim_{n \to +\infty} \frac{n_a}{n} = p$, we say that $P(\mathcal{A}) = p$.

The frequentist view has its own problems. It is of course impossible to actually perform an infinite number of repetitions of a random experiment to determine the probability of an event. But if only a finite number of repetitions of the process are performed, different relative frequencies will appear in different series of trials. If these relative frequencies are to define the probability, the probability will be slightly different every time it is measured. But the real probability should be the same every time. If we acknowledge the fact that we can only measure a probability with some error of measurement attached, we still run into problems, as the error of measurement can only be expressed as a probability, the very concept we are trying to define. This renders even the frequency definition circular; see for example “What is the Chance of an Earthquake?”
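The behaviour of finite series of trials can be illustrated with a small simulation. This is only a sketch under stated assumptions: the function `relative_frequency`, its parameters and the use of a pseudo-random generator are all introduced here for illustration, and the parameter `p_heads` is itself a probability supplied by hand, which echoes the circularity concern raised above.

```python
import random

def relative_frequency(n_trials, p_heads=0.5, seed=None):
    """Simulate n_trials coin tosses and return the fraction that land heads."""
    rng = random.Random(seed)
    heads = sum(1 for _ in range(n_trials) if rng.random() < p_heads)
    return heads / n_trials

# Each finite series gives its own relative frequency; the values typically
# scatter less around 1/2 as the number of trials grows.
for n in (10, 1_000, 100_000):
    runs = [relative_frequency(n, seed=s) for s in range(3)]
    print(n, runs)
```

No finite run delivers the limit itself; the simulation only shows relative frequencies that tend to cluster more tightly as the series get longer.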

Subjectivism

Subjectivists, also known as Bayesians or followers of epistemic probability, give the notion of probability a subjective status by regarding it as a measure of the 'degree of belief' of the individual assessing the uncertainty of a particular situation. Epistemic or subjective probability is sometimes called credence, as opposed to the term chance for a propensity probability. Examples of epistemic probability include assigning a probability to the proposition that a proposed law of physics is true, or determining how probable it is that a suspect committed a crime, based on the evidence presented. The use of Bayesian probability raises the philosophical debate as to whether it can contribute valid justifications of belief. Bayesians point to the work of Ramsey (p 182) and de Finetti (p 103) as proving that subjective beliefs must follow the laws of probability if they are to be coherent. Evidence casts doubt on whether humans actually hold coherent beliefs. The use of Bayesian probability involves specifying a prior probability. This may be obtained from consideration of whether the required prior probability is greater or lesser than a reference probability associated with an urn model or a thought experiment. The issue is that for a given problem, multiple thought experiments could apply, and choosing one is a matter of judgement: different people may assign different prior probabilities, a difficulty known as the reference class problem. The "sunrise problem" provides an example.
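The coherence requirement can be illustrated with a small betting sketch in the spirit of the Dutch book argument usually associated with Ramsey and de Finetti. The setup is an assumption made for illustration: an agent treats its degree of belief in a proposition as the fair price of a ticket that pays 1 unit if the proposition is true, and is willing to buy tickets at those prices. The function name is hypothetical.

```python
from fractions import Fraction

def net_gain_from_buying_both(credence_a, credence_not_a):
    """Agent buys one ticket on A and one on not-A, each paying 1 unit if it wins.

    Exactly one ticket pays out whichever way A turns out, so the net gain is
    the same in both cases: 1 minus the total price paid.
    """
    total_price = credence_a + credence_not_a
    gain_if_a = 1 - total_price       # the ticket on A pays 1
    gain_if_not_a = 1 - total_price   # the ticket on not-A pays 1
    return gain_if_a, gain_if_not_a

# Incoherent credences (sum greater than 1): a sure loss however A turns out.
print(net_gain_from_buying_both(Fraction(3, 5), Fraction(3, 5)))
# Coherent credences (sum equal to 1): this pair of bets produces no sure loss.
print(net_gain_from_buying_both(Fraction(3, 5), Fraction(2, 5)))
```

If the credences summed to less than 1, the same reasoning would apply with the agent selling both tickets instead of buying them.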

Propensity

Propensity theorists think of probability as a physical propensity, or disposition, or tendency of a given type of physical situation to yield an outcome of a certain kind or to yield a long run relative frequency of such an outcome. This kind of objective probability is sometimes called 'chance'.

Propensities, or chances, are not relative frequencies, but purported causes of the observed stable relative frequencies. Propensities are invoked to explain why repeating a certain kind of experiment will generate given outcome types at persistent rates, which are known as propensities or chances. Frequentists are unable to take this approach, since relative frequencies do not exist for single tosses of a coin, but only for large ensembles or collectives (see "single case possible" in the table above). In contrast, a propensitist is able to use the law of large numbers to explain the behaviour of long-run frequencies. This law, which is a consequence of the axioms of probability, says that if (for example) a coin is tossed many times, in such a way that its probability of landing heads is the same on each toss, and the outcomes are probabilistically independent, then the relative frequency of heads will be close to the probability of heads on each single toss. This law allows that stable long-run frequencies are a manifestation of invariant single-case probabilities. In addition to explaining the emergence of stable relative frequencies, the idea of propensity is motivated by the desire to make sense of single-case probability attributions in quantum mechanics, such as the probability of decay of a particular atom at a particular time.

The main challenge facing propensity theories is to say exactly what propensity means. (And then, of course, to show that propensity thus defined has the required properties.) At present, unfortunately, none of the well-recognised accounts of propensity comes close to meeting this challenge.

A propensity theory of probability was given by Charles Sanders Peirce. A later propensity theory was proposed by the philosopher Karl Popper, who had, however, only slight acquaintance with the writings of C. S. Peirce. Popper noted that the outcome of a physical experiment is produced by a certain set of "generating conditions". When we repeat an experiment, as the saying goes, we really perform another experiment with a (more or less) similar set of generating conditions. To say that a set of generating conditions has propensity p of producing the outcome E means that those exact conditions, if repeated indefinitely, would produce an outcome sequence in which E occurred with limiting relative frequency p. For Popper then, a deterministic experiment would have propensity 0 or 1 for each outcome, since those generating conditions would have the same outcome on each trial. In other words, non-trivial propensities (those that differ from 0 and 1) only exist for genuinely nondeterministic experiments.

A number of other philosophers, including David Miller and Donald A. Gillies, have proposed propensity theories somewhat similar to Popper's.

Other propensity theorists (e.g. Ronald Giere) do not explicitly define propensities at all, but rather see propensity as defined by the theoretical role it plays in science. They argued, for example, that physical magnitudes such as electrical charge cannot be explicitly defined either, in terms of more basic things, but only in terms of what they do (such as attracting and repelling other electrical charges). In a similar way, propensity is whatever fills the various roles that physical probability plays in science.

What roles does physical probability play in science? What are its properties? One central property of chance is that, when known, it constrains rational belief to take the same numerical value. David Lewis called this the Principal Principle, (3.3 & 3.5) a term that philosophers have mostly adopted. For example, suppose you are certain that a particular biased coin has propensity 0.32 to land heads every time it is tossed. What is then the correct price for a gamble that pays $1 if the coin lands heads, and nothing otherwise? According to the Principal Principle, the fair price is 32 cents.
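The arithmetic behind the 32-cent answer can be sketched as follows. The sketch assumes, as is usual in betting interpretations, that the fair price of a gamble is its expected payoff computed with a credence equal to the known chance; the function name `fair_price_cents` is hypothetical.

```python
from fractions import Fraction

def fair_price_cents(chance_of_win, payoff_cents):
    """Expected payoff of a gamble that pays payoff_cents with the given chance, else nothing."""
    return chance_of_win * payoff_cents

# Known propensity 0.32 of landing heads; the gamble pays $1 (100 cents) on heads.
chance_heads = Fraction(32, 100)
print(fair_price_cents(chance_heads, 100))  # 32
```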

Logical, epistemic, and inductive probability

It is widely recognized that the term "probability" is sometimes used in contexts where it has nothing to do with physical randomness. Consider, for example, the claim that the extinction of the dinosaurs was probably caused by a large meteorite hitting the earth. Statements such as "Hypothesis H is probably true" have been interpreted to mean that the (presently available) empirical evidence (E, say) supports H to a high degree. This degree of support of H by E has been called the logical probability of H given E, or the epistemic probability of H given E, or the inductive probability of H given E.

The differences between these interpretations are rather small, and may seem inconsequential. One of the main points of disagreement lies in the relation between probability and belief. Logical probabilities are conceived (for example in Keynes' Treatise on Probability) to be objective, logical relations between propositions (or sentences), and hence not to depend in any way upon belief. They are degrees of (partial) entailment, or degrees of logical consequence, not degrees of belief. (They do, nevertheless, dictate proper degrees of belief, as is discussed below.) Frank P. Ramsey, on the other hand, was skeptical about the existence of such objective logical relations and argued that (evidential) probability is "the logic of partial belief". (p 157) In other words, Ramsey held that epistemic probabilities simply are degrees of rational belief, rather than being logical relations that merely constrain degrees of rational belief.

Another point of disagreement concerns the uniqueness of evidential probability, relative to a given state of knowledge. Rudolf Carnap held, for example, that logical principles always determine a unique logical probability for any statement, relative to any body of evidence. Ramsey, by contrast, thought that while degrees of belief are subject to some rational constraints (such as, but not limited to, the axioms of probability) these constraints usually do not determine a unique value. Rational people, in other words, may differ somewhat in their degrees of belief, even if they all have the same information.

Prediction

An alternative account of probability emphasizes the role of prediction: predicting future observations on the basis of past observations rather than on unobservable parameters. In its modern form, it is mainly in the Bayesian vein. Prediction was the main function of probability before the 20th century, but it fell out of favor compared to the parametric approach, which modeled phenomena as a physical system that was observed with error, such as in celestial mechanics.

The modern predictive approach was pioneered by Bruno de Finetti, with the central idea of exchangeability – that future observations should behave like past observations. This view came to the attention of the Anglophone world with the 1974 translation of de Finetti's book, and has since been propounded by such statisticians as Seymour Geisser.
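One way to make exchangeability concrete is a toy predictive model for binary observations. The sketch below is an assumption chosen for illustration, not de Finetti's general construction: it uses a Beta-Bernoulli scheme with hypothetical prior parameters `a` and `b` (uniform by default), in which the probability of the next observation depends only on past counts. The probability of a whole sequence then depends on how many successes it contains, not on their order.

```python
from fractions import Fraction

def predictive(successes, trials, a=1, b=1):
    """Probability that the next observation is a success, given past counts."""
    return Fraction(successes + a, trials + a + b)

def sequence_probability(sequence, a=1, b=1):
    """Probability of a 0/1 sequence, built up one prediction at a time."""
    probability = Fraction(1)
    successes = 0
    for trials, outcome in enumerate(sequence):
        p_success = predictive(successes, trials, a, b)
        probability *= p_success if outcome else 1 - p_success
        successes += outcome
    return probability

# The same counts in a different order give the same probability (exchangeability).
print(sequence_probability([1, 1, 0]), sequence_probability([0, 1, 1]))
# Prediction for a fourth observation after three successes in three trials.
print(predictive(3, 3))
```

Predictions here are stated directly about future observations, with the past entering only through observed counts.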

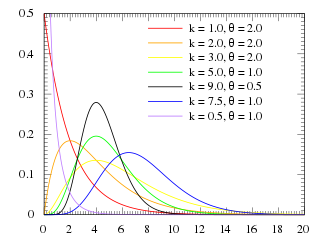

![{\displaystyle k={\frac {E[X]^{2}}{V[X]}}\quad \quad }](https://wikimedia.org/api/rest_v1/media/math/render/svg/79060985aa8683bbf0b380d57ca56522822342ca)

![{\displaystyle \theta ={\frac {V[X]}{E[X]}}\quad \quad }](https://wikimedia.org/api/rest_v1/media/math/render/svg/de7bf8b64325f4129e05929e2385f3ca37bb88bf)

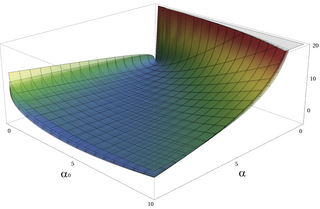

![{\displaystyle \alpha ={\frac {E[X]^{2}}{V[X]}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/87074b8ec525badd064920b64dcff7be1c51ceaa)

![{\displaystyle \beta ={\frac {E[X]}{V[X]}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/187bc571898043026331662ae41bb70d4104d429)

![{\displaystyle {\begin{aligned}f(x;\alpha ,\beta )&={\frac {x^{\alpha -1}e^{-\beta x}\beta ^{\alpha }}{\Gamma (\alpha )}}\quad {\text{ for }}x>0\quad \alpha ,\beta >0,\\[6pt]\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/ebf760a328d5b468fea5f9f1d47cca54b558b6da)

![{\displaystyle \mathrm {E} [X^{n}]=\theta ^{n}{\frac {\Gamma (n+k)}{\Gamma (k)}}.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/83c5249cc28b4f7b7529ebe80d80a4f7ab8e7afc)

![{\displaystyle \operatorname {E} [\ln(X)]=\psi (\alpha )-\ln(\beta )}](https://wikimedia.org/api/rest_v1/media/math/render/svg/6da14ff7ed563c7e86154998ef6fd180e79c9bfa)

![{\displaystyle \operatorname {E} [\ln(X)]=\psi (k)+\ln(\theta )}](https://wikimedia.org/api/rest_v1/media/math/render/svg/186737f3b184bf00519b3a4b1412a560e1216093)

![{\displaystyle \operatorname {var} [\ln(X)]=\psi ^{(1)}(\alpha )=\psi ^{(1)}(k)}](https://wikimedia.org/api/rest_v1/media/math/render/svg/b193ce127d5d0de9a3430b7dc803c092262f7b5c)

![{\displaystyle {\begin{aligned}\operatorname {H} (X)&=\operatorname {E} [-\ln(p(X))]\\[4pt]&=\operatorname {E} [-\alpha \ln(\beta )+\ln(\Gamma (\alpha ))-(\alpha -1)\ln(X)+\beta X]\\[4pt]&=\alpha -\ln(\beta )+\ln(\Gamma (\alpha ))+(1-\alpha )\psi (\alpha ).\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/37a24251136eb110aea24081dcffb2ee9e9648d8)

![{\displaystyle \operatorname {E} [x^{m}]={\frac {\Gamma (Nk-m)}{\Gamma (Nk)}}y^{m}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/61ae01ae77aa6c640cbaa1bb2a8863454827916a)