Artificial gravity is the creation of an inertial force that mimics the effects of a gravitational force, usually by rotation. Artificial gravity, or rotational gravity, is thus the appearance of a centrifugal force in a rotating frame of reference (the transmission of centripetal acceleration via normal force in the non-rotating frame of reference), as opposed to the force experienced in linear acceleration, which by the equivalence principle is indistinguishable from gravity. In a more general sense, "artificial gravity" may also refer to the effect of linear acceleration, e.g. by means of a rocket engine.

Rotational simulated gravity has been used in simulations to help astronauts train for extreme conditions. It has also been proposed as a solution in human spaceflight to the adverse health effects caused by prolonged weightlessness. However, there are currently no practical outer space applications of artificial gravity for humans, owing to concerns about the size and cost of a spacecraft needed to produce a useful centripetal acceleration comparable to the gravitational field strength on Earth (g). Scientists are also concerned that using rotation to create artificial gravity will disturb the inner ear of the occupants, leading to nausea and disorientation, and that these adverse effects may prove intolerable.

Centrifugal force

In the context of a rotating space station, it is the radial force provided by the spacecraft's hull that acts as centripetal force. Thus, the "gravity" force felt by an object is the centrifugal force perceived in the rotating frame of reference as pointing "downwards" towards the hull.

By Newton's third law, the value of little g (the perceived "downward" acceleration) is equal in magnitude and opposite in direction to the centripetal acceleration. Rotational artificial gravity was tested on satellites such as Bion 3 (1975) and Bion 4 (1977), both of which carried onboard centrifuges to place some specimens in an artificial gravity environment.
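The relationship between spin rate, radius, and perceived acceleration can be sketched numerically using the standard centripetal acceleration formula a = ω²r. The function name `centripetal_g` and the 100 m, 3 rpm station below are illustrative assumptions, not figures from any real design.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2


def centripetal_g(radius_m: float, rpm: float) -> float:
    """Perceived acceleration at a given radius, in units of g (a = omega^2 * r)."""
    omega = rpm * 2.0 * math.pi / 60.0  # convert rpm to rad/s
    return omega ** 2 * radius_m / G0


# Hypothetical station (assumed values): 100 m radius spinning at 3 rpm
print(f"{centripetal_g(100.0, 3.0):.2f} g")
```

Under these assumptions the rim acceleration comes out close to 1 g; halving the radius at the same spin rate halves the result.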

Differences from normal gravity

From the perspective of people rotating with the habitat, artificial gravity by rotation behaves similarly to normal gravity but with the following differences, which can be mitigated by increasing the radius of a space station.

- Centrifugal force varies with distance: Unlike real gravity, the apparent force felt by observers in the habitat pushes radially outward from the axis, and the centrifugal force is directly proportional to the distance from the axis of the habitat. With a small radius of rotation, a standing person's head would feel significantly less gravity than their feet. Likewise, passengers who move in a space station experience changes in apparent weight in different parts of the body.

- The Coriolis effect gives an apparent force that acts on objects that are moving relative to a rotating reference frame. This apparent force acts at right angles to the motion and the rotation axis and tends to curve the motion in the opposite sense to the habitat's spin. If an astronaut inside a rotating artificial gravity environment moves towards or away from the axis of rotation, they will feel a force pushing them in or against the direction of spin. These forces act on the semicircular canals of the inner ear and can cause dizziness. Lengthening the period of rotation (lower spin rate) reduces the Coriolis force and its effects. It is generally believed that at 2 rpm or less, no adverse effects from the Coriolis forces will occur, although humans have been shown to adapt to rates as high as 23 rpm.

- Changes in the rotation axis or rate of spin would cause a disturbance in the artificial gravity field and stimulate the semicircular canals (see above). Any movement of mass within the station, including the movement of people, would shift the axis and could potentially cause a dangerous wobble. Thus, the rotation of a space station would need to be adequately stabilized, and any operations to deliberately change the rotation would need to be done slowly enough to be imperceptible. One possible solution to prevent the station from wobbling would be to use its liquid water supply as ballast, which could be pumped between different sections of the station as required.
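The first two differences listed above can be estimated with a short sketch. Because centrifugal acceleration is proportional to distance from the axis, the head-to-feet ratio depends only on geometry, and the peak Coriolis acceleration for motion perpendicular to the spin axis is 2ωv. The 1.8 m body height, the 1 m/s walking speed, and the function names are assumptions chosen for illustration.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2


def head_to_feet_ratio(floor_radius_m: float, height_m: float = 1.8) -> float:
    """Ratio of perceived gravity at the head to that at the feet.

    Centrifugal acceleration scales linearly with radius, so the ratio
    is simply (r - h) / r for a person standing on the outer floor.
    """
    return (floor_radius_m - height_m) / floor_radius_m


def coriolis_g(rpm: float, speed_m_s: float) -> float:
    """Peak Coriolis acceleration in g for motion perpendicular to the spin axis."""
    omega = rpm * 2.0 * math.pi / 60.0  # rad/s
    return 2.0 * omega * speed_m_s / G0


# Assumed scenarios: a small 10 m habitat versus a large 200 m one
for radius in (10.0, 200.0):
    print(f"r = {radius:5.0f} m: head/feet = {head_to_feet_ratio(radius):.3f}")

# Assumed walking speed of 1 m/s at two spin rates
for rpm in (2.0, 10.0):
    print(f"{rpm:4.1f} rpm: Coriolis ~ {coriolis_g(rpm, 1.0):.3f} g")
```

Both effects shrink as the radius grows, since a larger habitat needs a lower spin rate to reach the same rim acceleration.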

Human spaceflight

The Gemini 11 mission attempted in 1966 to produce artificial gravity by rotating the capsule around the Agena Target Vehicle, to which it was attached by a 36-meter tether. The crew was able to generate a small amount of artificial gravity, about 0.00015 g, by firing the capsule's side thrusters to slowly rotate the combined craft like a slow-motion pair of bolas. The resultant force was too small to be felt by either astronaut, but objects were observed moving towards the "floor" of the capsule.

Health benefits

Artificial gravity has been suggested as a solution to various health risks associated with spaceflight. In 1964, the Soviet space program believed that a human could not survive more than 14 days in space for fear that the heart and blood vessels would be unable to adapt to the weightless conditions. This fear was eventually discovered to be unfounded, as spaceflights have now lasted up to 437 consecutive days, with missions aboard the International Space Station commonly lasting 6 months. However, the question of human safety in space did launch an investigation into the physical effects of prolonged exposure to weightlessness. In June 1991, the Spacelab Life Sciences 1 mission on Space Shuttle flight STS-40 performed 18 experiments on two men and two women over nine days. It was concluded that, in an environment without gravity, the response of white blood cells and muscle mass decreased. Additionally, within the first 24 hours spent in a weightless environment, blood volume decreased by 10%. Long periods of weightlessness can cause brain swelling and eyesight problems. Upon return to Earth, the effects of prolonged weightlessness continue to affect the human body as fluids pool back to the lower body, the heart rate rises, a drop in blood pressure occurs, and there is a reduced tolerance for exercise.

Artificial gravity, because it mimics the behavior of gravity on the human body, has been suggested as one of the most comprehensive ways of combating the physical effects inherent in weightless environments. Other measures that have been suggested as symptomatic treatments include exercise, diet, and Pingvin suits. However, these methods have been criticized because they do not fully eliminate health problems and because a variety of solutions is required to address all issues. Artificial gravity, in contrast, would remove the weightlessness inherent in space travel. By implementing artificial gravity, space travelers would never have to experience weightlessness or the associated side effects. Especially for a modern-day six-month journey to Mars, exposure to artificial gravity in either a continuous or an intermittent form has been suggested to prevent extreme debilitation of the astronauts during travel.

Proposals

Several proposals have incorporated artificial gravity into their design:

- Discovery II: a 2005 vehicle proposal capable of delivering a 172-metric-ton crewed payload to Jupiter's orbit in 118 days. A very small portion of the 1,690-metric-ton craft would incorporate a centrifugal crew station.

- Multi-Mission Space Exploration Vehicle (MMSEV): a 2011 NASA proposal for a long-duration crewed space transport vehicle; it included a rotational artificial gravity space habitat intended to promote crew health for a crew of up to six persons on missions of up to two years in duration. The torus-ring centrifuge would utilize both standard metal-frame and inflatable spacecraft structures and would provide 0.11 to 0.69 g if built with the 40 feet (12 m) diameter option.

- ISS Centrifuge Demo: a 2011 NASA proposal for a demonstration project preparatory to the final design of the larger torus centrifuge space habitat for the Multi-Mission Space Exploration Vehicle. The structure would have an outside diameter of 30 feet (9.1 m) with a ring interior cross-section diameter of 30 inches (760 mm). It would provide 0.08 to 0.51 g partial gravity. This test and evaluation centrifuge would have the capability to become a Sleep Module for the ISS crew.

- Mars Direct: A plan for a crewed Mars mission created by NASA engineers Robert Zubrin and David Baker in 1990, later expanded upon in Zubrin's 1996 book The Case for Mars. The "Mars Habitat Unit", which would carry astronauts to Mars to join the previously launched "Earth Return Vehicle", would have had artificial gravity generated during flight by tying the spent upper stage of the booster to the Habitat Unit, and setting them both rotating about a common axis.

- The proposed Tempo3 mission rotates two halves of a spacecraft connected by a tether to test the feasibility of simulating gravity on a crewed mission to Mars.

- The Mars Gravity Biosatellite was a proposed mission meant to study the effect of artificial gravity on mammals. An artificial gravity field of 0.38 g (equivalent to Mars's surface gravity) was to be produced by rotation (32 rpm, radius of ca. 30 cm). Fifteen mice would have orbited Earth in low Earth orbit for five weeks and then landed alive. However, the program was canceled on 24 June 2009, due to a lack of funding and shifting priorities at NASA.

- Vast Space is a private company that proposes to build the world's first artificial gravity space station using the rotating spacecraft concept.
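The spin rates implied by the figures quoted in these proposals can be estimated by inverting a = ω²r. The sketch below is a rough consistency check rather than a reproduction of any proposal's own analysis; the helper name `rpm_for_g` is an assumption, and the radii are simply half the quoted diameters (40 ft and 30 ft converted to meters).

```python
import math

G0 = 9.80665  # standard gravity in m/s^2
FT = 0.3048   # meters per foot


def rpm_for_g(target_g: float, radius_m: float) -> float:
    """Spin rate in rpm needed to produce target_g at radius_m (omega = sqrt(a / r))."""
    omega = math.sqrt(target_g * G0 / radius_m)  # rad/s
    return omega * 60.0 / (2.0 * math.pi)


# Radii taken as half of the diameters quoted in the list above
cases = {
    "MMSEV, 40 ft diameter": (20 * FT, (0.11, 0.69)),
    "ISS Centrifuge Demo, 30 ft diameter": (15 * FT, (0.08, 0.51)),
}
for name, (radius, g_levels) in cases.items():
    low, high = (rpm_for_g(g, radius) for g in g_levels)
    print(f"{name}: about {low:.1f} to {high:.1f} rpm")
```

Both quoted g ranges correspond to roughly 4 to 10 rpm under these assumptions. The same function gives a little under 34 rpm for 0.38 g at a 30 cm radius, close to the approximate 32 rpm quoted for the Mars Gravity Biosatellite.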

Issues with implementation

Some of the reasons that artificial gravity remains unused today in spaceflight trace back to the problems inherent in implementation. One of the realistic methods of creating artificial gravity is the centrifugal effect caused by the centripetal force of the floor of a rotating structure pushing up on the person. In that model, however, issues arise in the size of the spacecraft. As expressed by John Page and Matthew Francis, the smaller a spacecraft (the shorter the radius of rotation), the more rapid the rotation that is required. As such, to simulate gravity, it would be better to utilize a larger spacecraft that rotates slowly.

The size requirements for a rotating spacecraft arise from the differing forces on parts of the body at different distances from the axis of rotation. If parts of the body closer to the rotational axis experience a force that is significantly different from that on parts farther from the axis, this could have adverse effects. Additionally, questions remain as to the best way to initially set the rotating motion in place without disturbing the stability of the whole spacecraft's orbit. At the moment, there is no ship massive enough to meet the rotation requirements, and the costs associated with building, maintaining, and launching such a craft are extensive.
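The trade-off between size and spin rate can be made concrete by solving a = ω²r for the radius. The following is a minimal sketch; the function name `radius_for_g` is an assumption, and the 2 rpm and 23 rpm inputs are the comfort and adaptation figures mentioned earlier in this article.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2


def radius_for_g(target_g: float, rpm: float) -> float:
    """Radius in meters at which spinning at rpm yields target_g (r = a / omega^2)."""
    omega = rpm * 2.0 * math.pi / 60.0  # rad/s
    return target_g * G0 / omega ** 2


# Radius needed for a full 1 g at several spin rates
for rpm in (2.0, 4.0, 10.0, 23.0):
    print(f"{rpm:5.1f} rpm -> radius of about {radius_for_g(1.0, rpm):7.1f} m")
```

At 2 rpm a full 1 g requires a radius of roughly 224 m, while at 23 rpm the radius shrinks to under 2 m, smaller than a standing person, which illustrates why slow rotation implies a very large structure.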

In general, given the small number of negative health effects present in today's typically shorter spaceflights, as well as the very large cost of research for a technology that is not yet really needed, the present-day development of artificial gravity technology has necessarily been stunted and sporadic.

As the length of typical spaceflights increases, the need for artificial gravity for passengers on such lengthy flights will most likely also increase, as will the knowledge and resources available to create it. In summary, it is probably only a question of time before conditions are suitable for completing the development of artificial gravity technology, which will almost certainly be required at some point as the average length of a spaceflight increases.

In science fiction

Several science fiction novels, films, and series have featured artificial gravity production.

- In the movie 2001: A Space Odyssey, a rotating centrifuge in the Discovery spacecraft provides artificial gravity to the astronauts within it. The entirety of Space Station 5 rotates to provide artificial 1g downforce in the shirtsleeve environment of its outer rings; the central docking hub remains closer to zero gravity.

- In the 1999 television series Cowboy Bebop, a rotating ring in the Bebop spacecraft creates artificial gravity throughout the spacecraft.

- In the novel The Martian, the Hermes spacecraft achieves artificial gravity by design; it employs a ringed structure, at whose periphery forces around 40% of Earth's gravity are experienced, similar to Mars' gravity.

- In the novel Project Hail Mary by the same author, weight on the titular ship Hail Mary is provided initially by engine thrust, as the ship is capable of constant acceleration up to 2 ɡ and is also able to separate, turn the crew compartment inwards, and rotate to produce 1 ɡ while in orbit.

- The movie Interstellar features a spacecraft called the Endurance that can rotate on its central axis to create artificial gravity, controlled by retro thrusters on the ship.

- The 2021 film Stowaway features the upper stage of a launch vehicle connected by 450-meter long tethers to the ship's main hull, acting as a counterweight for inertia-based artificial gravity.

- The series The Expanse utilizes both rotational gravity and linear thrust gravity in various space stations and spaceships. Notably, Tycho Station and the Generation ship LDSS Nauvoo use rotational gravity. Linear gravity is provided by a fictitious 'Epstein Drive', which killed its creator Solomon Epstein during its maiden flight due to high gravity injuries.

- In the television series For All Mankind, the space hotel Polaris, later renamed Phoenix after being purchased and converted into a space vessel by Helios Aerospace for their own Mars mission, features a wheel-like structure controlled by thrusters to create artificial gravity, whilst a central axial hub operates in zero gravity as a docking station.

Linear acceleration

Linear acceleration is another method of generating artificial gravity, by using the thrust from a spacecraft's engines to create the illusion of being under a gravitational pull. A spacecraft under constant acceleration in a straight line would have the appearance of a gravitational pull in the direction opposite to that of the acceleration, as the thrust from the engines would cause the spacecraft to "push" itself up into the objects and persons inside of the vessel, thus creating the feeling of weight. This is because of Newton's third law: the weight that one would feel standing in a linearly accelerating spacecraft would not be a true gravitational pull, but simply the reaction of oneself pushing against the craft's hull as it pushes back. Similarly, objects that would otherwise be free-floating within the spacecraft if it were not accelerating would "fall" towards the engines when it started accelerating, as a consequence of Newton's first law: the floating object would remain at rest, while the spacecraft would accelerate towards it, and appear to an observer within that the object was "falling".

To emulate Earth's gravity, a spacecraft using linear acceleration may be built similar to a skyscraper, with its engines as the bottom "floor". If the spacecraft were to accelerate at a rate of 1 g (equal to Earth's gravitational pull), the individuals inside would be pressed into the hull with the same force, and thus be able to walk and behave as if they were on Earth.

This form of artificial gravity is desirable because it could functionally create the illusion of a gravity field that is uniform and unidirectional throughout a spacecraft, without the need for large, spinning rings, whose fields may be neither uniform nor unidirectional with respect to the spacecraft, and which require constant rotation. This would also have the advantage of relatively high speed: a spaceship accelerating at 1 g (9.8 m/s²) for the first half of the journey, and then decelerating for the other half, could reach Mars within a few days. Similarly, hypothetical space travel using a constant acceleration of 1 g for one year would reach relativistic speeds and allow for a round trip to the nearest star, Proxima Centauri. As such, low-impulse but long-term linear acceleration has been proposed for various interplanetary missions. For example, even heavy (100 ton) cargo payloads could be transported to Mars in 27 months and retain approximately 55 percent of the LEO vehicle mass upon arrival into a Mars orbit, providing a low-gravity gradient to the spacecraft during the entire journey.
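The "within a few days" figure for Mars can be checked with a simple kinematic sketch: accelerating over the first half of a straight-line distance d and decelerating over the second half takes t = 2·sqrt(d/a). The calculation below is Newtonian and ignores orbital mechanics and planetary motion; the two distances are rounded assumptions for a near and a far Earth-Mars separation, and the function name is hypothetical.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2
DAY_S = 86_400.0


def flip_and_burn_time_s(distance_m: float, accel_m_s2: float = G0) -> float:
    """Travel time when accelerating for half the distance and decelerating for the rest.

    Each half covers d/2 = (1/2) * a * t_half^2, so t_half = sqrt(d / a).
    """
    return 2.0 * math.sqrt(distance_m / accel_m_s2)


# Assumed straight-line separations (rounded): near and far Earth-Mars distances
for label, distance_m in (("near", 5.5e10), ("far", 4.0e11)):
    days = flip_and_burn_time_s(distance_m) / DAY_S
    print(f"{label}: about {days:.1f} days at 1 g")
```

Under these assumptions the trip takes roughly two to five days, with a peak speed well below relativistic speeds, so the Newtonian approximation is adequate for this case.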

This form of gravity is not without challenges, however. At present, the only practical engines that could accelerate a vessel at a rate comparable to Earth's gravitational pull are chemical reaction rockets, which expel reaction mass to achieve thrust, and thus the acceleration could only last for as long as the vessel had fuel. The vessel would also need to accelerate continuously and at a constant rate to maintain the gravitational effect, and thus would not have gravity while stationary, and could experience significant swings in g-forces if it were to accelerate above or below 1 g. Further, for point-to-point journeys, such as Earth-Mars transits, vessels would need to constantly accelerate for half the journey, turn off their engines, perform a 180° flip, reactivate their engines, and then begin decelerating towards the target destination, requiring everything inside the vessel to experience weightlessness and possibly be secured down for the duration of the flip.

A propulsion system with a very high specific impulse (that is, good efficiency in the use of reaction mass that must be carried along and used for propulsion on the journey) could accelerate more slowly, producing useful levels of artificial gravity for long periods of time. A variety of electric propulsion systems provide examples. Two examples of this long-duration, low-thrust, high-impulse propulsion that have either been used in practice on spacecraft or are planned for near-term in-space use are Hall effect thrusters and Variable Specific Impulse Magnetoplasma Rockets (VASIMR). Both provide very high specific impulse but relatively low thrust compared to the more typical chemical reaction rockets. They are thus ideally suited for long-duration firings, which would provide limited but long-term, milli-g levels of artificial gravity in spacecraft.
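How long a given acceleration can be sustained follows from the Tsiolkovsky rocket equation, Δv = Isp·g0·ln(m0/mf), divided by the acceleration. The sketch below assumes the engine is throttled to hold acceleration constant as propellant is consumed; the specific impulse values, mass ratios, and function name are illustrative assumptions and do not describe any particular engine.

```python
import math

G0 = 9.80665  # standard gravity in m/s^2
DAY_S = 86_400.0


def burn_time_s(isp_s: float, mass_ratio: float, accel_g: float) -> float:
    """Time a constant acceleration can be held before the propellant runs out.

    delta_v = isp * g0 * ln(m0 / mf) (Tsiolkovsky), and at constant
    acceleration a the burn lasts delta_v / a.
    """
    delta_v = isp_s * G0 * math.log(mass_ratio)
    return delta_v / (accel_g * G0)


# Assumed chemical-class stage: Isp 450 s, mass ratio 10, held at 1 g
chemical = burn_time_s(450.0, 10.0, 1.0)
print(f"chemical, 1 g: about {chemical / 60:.0f} minutes")

# Assumed electric-class stage: Isp 2000 s, mass ratio 2, held at 1 milli-g
electric = burn_time_s(2000.0, 2.0, 0.001)
print(f"electric, 0.001 g: about {electric / DAY_S:.0f} days")
```

With these assumed numbers the chemical case sustains 1 g for only minutes, while the high specific impulse case sustains a milli-g level for weeks, which is the contrast the preceding paragraphs describe.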

In a number of science fiction plots, acceleration is used to produce artificial gravity for interstellar spacecraft, propelled by as yet theoretical or hypothetical means.

This effect of linear acceleration is well understood, and is routinely used for 0 g cryogenic fluid management for post-launch (subsequent) in-space firings of upper stage rockets.

Roller coasters, especially launched roller coasters or those that rely on electromagnetic propulsion, can provide linear acceleration "gravity", and so can relatively high acceleration vehicles, such as sports cars. Linear acceleration can be used to provide air-time on roller coasters and other thrill rides.

Simulating lunar gravity

In January 2022, China was reported by the South China Morning Post to have built a small (60 centimetres (24 in) diameter) research facility to simulate low lunar gravity with the help of magnets. The facility was reportedly partly inspired by the work of Andre Geim (who later shared the 2010 Nobel Prize in Physics for his research on graphene) and Michael Berry, who both shared the Ig Nobel Prize in Physics in 2000 for the magnetic levitation of a frog.

Graviton control or generator

Speculative or fictional mechanisms

In science fiction, artificial gravity (or cancellation of gravity) or "paragravity" is sometimes present in spacecraft that are neither rotating nor accelerating. At present, there is no confirmed technique as such that can simulate gravity other than actual rotation or acceleration. There have been many claims over the years of such a device. Eugene Podkletnov, a Russian engineer, has claimed since the early 1990s to have made such a device consisting of a spinning superconductor producing a powerful "gravitomagnetic field." In 2006, a research group funded by ESA claimed to have created a similar device that demonstrated positive results for the production of gravitomagnetism, although it produced only 0.0001 g.

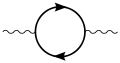

![{\displaystyle S_{\text{QED}}=\int d^{4}x\,\left[-{\frac {1}{4}}F^{\mu \nu }F_{\mu \nu }+{\bar {\psi }}\,(i\gamma ^{\mu }D_{\mu }-m)\,\psi \right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/5c55691a9d87188b6e030994eeb7c7b49c783f11)

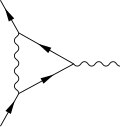

![{\displaystyle U=T\exp \left[-{\frac {i}{\hbar }}\int _{t_{0}}^{t}dt'\,V(t')\right],}](https://wikimedia.org/api/rest_v1/media/math/render/svg/39929208db730144caaaaf58ec4275d40b1a2db3)