From Wikipedia, the free encyclopedia

Jean-Baptiste Joseph Fourier

| Joseph Fourier | |
|---|---|
| Born | 21 March 1768, Auxerre, Burgundy, Kingdom of France (now in Yonne, France) |
| Died | 16 May 1830 (aged 62), Paris, Kingdom of France |
| Residence | France |
| Nationality | French |
| Fields | Mathematician, physicist, historian |
| Institutions | École Normale, École Polytechnique |
| Alma mater | École Normale |
| Academic advisors | Joseph-Louis Lagrange |
| Notable students | Peter Gustav Lejeune Dirichlet, Claude-Louis Navier, Giovanni Plana |
| Known for | Fourier series, Fourier transform, Fourier's law of conduction, Fourier–Motzkin elimination |

Jean-Baptiste Joseph Fourier (/ˈfʊəriˌeɪ, -iər/;[1] French: [fuʁje]; 21 March 1768 – 16 May 1830) was a French mathematician and physicist born in Auxerre. He is best known for initiating the investigation of Fourier series and their applications to problems of heat transfer and vibrations. The Fourier transform and Fourier's law are also named in his honour. Fourier is also generally credited with the discovery of the greenhouse effect.[2]

Biography

Fourier was born at Auxerre (now in the Yonne département of France), the son of a tailor. He was orphaned at age nine. Fourier was recommended to the Bishop of Auxerre and, through this introduction, was educated by the Benedictine Order of the Convent of St. Mark. Commissions in the scientific corps of the army were reserved for those of good birth; being thus ineligible, he accepted a military lectureship in mathematics. He took a prominent part in promoting the French Revolution in his own district, serving on the local Revolutionary Committee. He was imprisoned briefly during the Terror, but in 1795 he was appointed to the École Normale, and he subsequently succeeded Joseph-Louis Lagrange at the École Polytechnique.

Fourier accompanied Napoleon Bonaparte on his Egyptian expedition in 1798 as scientific adviser, and was appointed secretary of the Institut d'Égypte. Cut off from France by the English fleet, he organized the workshops on which the French army had to rely for its munitions of war. He also contributed several mathematical papers to the Egyptian Institute (also called the Cairo Institute), which Napoleon founded at Cairo with a view to weakening English influence in the East. After the British victories and the capitulation of the French under General Menou in 1801, Fourier returned to France.

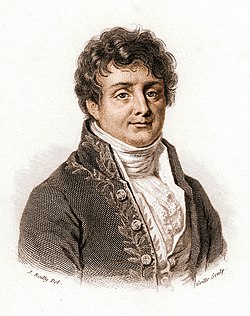

1820 watercolor caricatures of French mathematicians Adrien-Marie Legendre (left) and Joseph Fourier (right) by French artist Julien-Léopold Boilly, watercolor portrait numbers 29 and 30 of Album de 73 Portraits-Charge Aquarellés des Membres de l'Institut.[3]

In 1801,[4] Napoleon appointed Fourier Prefect (Governor) of the Department of Isère in Grenoble, where he oversaw road construction and other projects. Fourier had returned from the Egyptian expedition to resume his academic post as professor at the École Polytechnique, but Napoleon decided otherwise, remarking:

... the Prefect of the Department of Isère having recently died, I would like to express my confidence in citizen Fourier by appointing him to this place.[4]

Out of loyalty to Napoleon, he accepted the office of Prefect.[4] It was while at Grenoble that he began to experiment on the propagation of heat. He presented his paper On the Propagation of Heat in Solid Bodies to the Paris Institute on 21 December 1807. He also contributed to the monumental Description de l'Égypte.[5]

Fourier moved to England in 1816. Later, he returned to France, and in 1822 succeeded Jean Baptiste Joseph Delambre as Permanent Secretary of the French Academy of Sciences. In 1830, he was elected a foreign member of the Royal Swedish Academy of Sciences.

In 1830, his diminished health began to take its toll:

Fourier had already experienced, in Egypt and Grenoble, some attacks of aneurism of the heart. At Paris, it was impossible to be mistaken with respect to the primary cause of the frequent suffocations which he experienced. A fall, however, which he sustained on the 4th of May 1830, while descending a flight of stairs, aggravated the malady to an extent beyond what could have been ever feared.[6]

Shortly after this event, he died in his bed on 16 May 1830.

Fourier was buried in the Père Lachaise Cemetery in Paris, in a tomb decorated with an Egyptian motif to reflect his position as secretary of the Cairo Institute and his collation of Description de l'Égypte. His name is one of the 72 names inscribed on the Eiffel Tower.

A bronze statue was erected in Auxerre in 1849, but it was melted down for armaments during World War II.[7] Joseph Fourier University in Grenoble is named after him.

The Analytic Theory of Heat

Sketch of Fourier, circa 1820.

In 1822 Fourier published his work on heat flow in Théorie analytique de la chaleur (The Analytical Theory of Heat),[8] in which he based his reasoning on Newton's law of cooling, namely, that the flow of heat between two adjacent molecules is proportional to the extremely small difference of their temperatures. This book was translated,[9] with editorial 'corrections',[10] into English 56 years later by Freeman (1878).[11] The book was also edited, with many editorial corrections, by Darboux and republished in French in 1888.[10]
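The idea that heat flows between adjacent elements in proportion to their temperature difference can be illustrated with a modern finite-difference sketch. This is not Fourier's own procedure; it assumes a one-dimensional rod split into equal cells with insulated ends, and the function name `diffuse` and the `rate` parameter (a dimensionless conduction factor, kept at or below 0.5 for stability of this explicit scheme) are hypothetical.

```python
def diffuse(temps, rate, steps):
    """Exchange heat between neighbouring cells of an insulated rod.

    In each step, the heat passing from cell i to cell i+1 is
    rate * (t[i] - t[i+1]), i.e. proportional to their temperature
    difference. No heat leaves through the two ends.
    """
    if not 0 < rate <= 0.5:
        raise ValueError("explicit scheme assumes 0 < rate <= 0.5")
    t = list(temps)
    for _ in range(steps):
        # Compute every flow from the same snapshot before applying any.
        flows = [rate * (t[i] - t[i + 1]) for i in range(len(t) - 1)]
        for i, f in enumerate(flows):
            t[i] -= f       # heat leaves the warmer side...
            t[i + 1] += f   # ...and enters the cooler side
    return t


if __name__ == "__main__":
    # One hot cell at the end of an otherwise cold rod.
    print(diffuse([100.0, 0.0, 0.0, 0.0, 0.0], 0.25, 1))
    print(diffuse([100.0, 0.0, 0.0, 0.0, 0.0], 0.25, 2000))
```

Because every unit of heat that leaves one cell enters its neighbour, the total is conserved, and the temperatures relax towards the uniform mean (here 20 in every cell).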

There were three important contributions in this work, one purely mathematical, two essentially physical. In mathematics, Fourier claimed that any function of a variable, whether continuous or discontinuous, can be expanded in a series of sines of multiples of the variable. Though this result is not correct without additional conditions, Fourier's observation that some discontinuous functions are the sum of infinite series was a breakthrough. The question of determining when a Fourier series converges has been fundamental for centuries. Joseph-Louis Lagrange had given particular cases of this (false) theorem, and had implied that the method was general, but he had not pursued the subject. Peter Gustav Lejeune Dirichlet was the first to give a satisfactory demonstration of it with some restrictive conditions. This work provides the foundation for what is today known as the Fourier transform.
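As an illustration of a discontinuous function written as an infinite series of sines, the square wave equal to +1 on (0, π) and −1 on (−π, 0) has the standard sine series (4/π) Σ sin(kx)/k over odd k. The sketch below, with a hypothetical function name, evaluates its partial sums; it is a modern example rather than one taken from Fourier's book.

```python
import math


def square_wave_partial_sum(x: float, n_terms: int) -> float:
    """Partial sum of (4/pi) * sum over odd k of sin(k x) / k.

    Uses the first n_terms odd harmonics k = 1, 3, 5, ...
    The full series equals +1 on (0, pi), -1 on (-pi, 0),
    and 0 at the jump x = 0.
    """
    total = 0.0
    for i in range(n_terms):
        k = 2 * i + 1  # odd multiples of the variable
        total += math.sin(k * x) / k
    return 4.0 / math.pi * total


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        print(n, square_wave_partial_sum(math.pi / 2, n))
```

At x = π/2 the partial sums reduce to (4/π)(1 − 1/3 + 1/5 − …), which slowly approaches 1, while at the discontinuity x = 0 every partial sum is exactly 0, the midpoint of the jump.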

One important physical contribution in the book was the concept of dimensional homogeneity in equations: an equation can be formally correct only if the dimensions on both sides of the equality match. Fourier made important contributions to dimensional analysis.[12] The other physical contribution was Fourier's proposal of his partial differential equation for conductive diffusion of heat. This equation is now taught to every student of mathematical physics.
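In modern notation (not Fourier's original), and assuming a homogeneous material with constant properties and no internal heat sources, Fourier's law of conduction and the resulting heat equation can be written as follows, where T is temperature, k the thermal conductivity, ρ the density and c_p the specific heat capacity. Checking the units of each side is an instance of the dimensional homogeneity described above.

$$
\mathbf{q} = -k\,\nabla T, \qquad \frac{\partial T}{\partial t} = \alpha\,\nabla^{2} T, \qquad \alpha = \frac{k}{\rho\,c_p}
$$

$$
\left[\frac{\partial T}{\partial t}\right] = \mathrm{K\,s^{-1}}, \qquad [\alpha]\,[\nabla^{2} T] = \mathrm{m^{2}\,s^{-1}} \cdot \mathrm{K\,m^{-2}} = \mathrm{K\,s^{-1}}
$$

Here α has units of m²/s because k is measured in W m⁻¹ K⁻¹ and ρ c_p in J m⁻³ K⁻¹, so both sides of the heat equation carry the same dimensions.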

Determinate equations

Bust of Fourier in Grenoble

Fourier left an unfinished work on determinate equations which was edited by Claude-Louis Navier and published in 1831. This work contains much original matter — in particular, there is a demonstration of Fourier's theorem on the position of the roots of an algebraic equation. Joseph-Louis Lagrange had shown how the roots of an algebraic equation might be separated by means of another equation whose roots were the squares of the differences of the roots of the original equation. François Budan, in 1807 and 1811, had enunciated the theorem generally known by the name of Fourier, but the demonstration was not altogether satisfactory. Fourier's proof[13] is the same as that usually given in textbooks on the theory of equations. The final solution of the problem was given in 1829 by Jacques Charles François Sturm.
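The theorem on the position of roots, now usually called the Budan–Fourier theorem, is commonly stated as follows: if V(x) is the number of sign changes (ignoring zeros) in the sequence p(x), p′(x), …, p⁽ⁿ⁾(x), then the number of real roots of p in (a, b], counted with multiplicity, equals V(a) − V(b) minus a non-negative even integer. The sketch below computes that bound; the helper names are hypothetical, and polynomials are assumed to be given as coefficient lists ordered from the constant term upwards.

```python
def derivative(coeffs):
    """Derivative of a polynomial; coeffs[i] is the coefficient of x**i."""
    return [i * c for i, c in enumerate(coeffs)][1:]


def evaluate(coeffs, x):
    """Evaluate the polynomial at x using Horner's rule."""
    result = 0
    for c in reversed(coeffs):
        result = result * x + c
    return result


def sign_changes(values):
    """Count sign changes in a sequence, skipping zero entries."""
    nonzero = [v for v in values if v != 0]
    return sum(1 for a, b in zip(nonzero, nonzero[1:]) if (a > 0) != (b > 0))


def budan_fourier_bound(coeffs, a, b):
    """Upper bound V(a) - V(b) on the number of real roots in (a, b]."""
    sequence = []
    p = list(coeffs)
    while p:  # p, p', p'', ... down to the constant derivative
        sequence.append(p)
        p = derivative(p)
    v_a = sign_changes([evaluate(q, a) for q in sequence])
    v_b = sign_changes([evaluate(q, b) for q in sequence])
    return v_a - v_b


if __name__ == "__main__":
    cubic = [-6, 11, -6, 1]  # (x - 1)(x - 2)(x - 3)
    print(budan_fourier_bound(cubic, 0, 4))       # three real roots in (0, 4]
    print(budan_fourier_bound([1, 0, 1], -1, 1))  # x**2 + 1: bound 2, no real roots
```

For x² + 1 the bound is 2 although there are no real roots, which shows why the theorem only bounds the count up to an even number. Sturm's later method removes this ambiguity by giving the exact count.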

Discovery of the greenhouse effect

Fourier's grave, Père Lachaise Cemetery

In the 1820s Fourier calculated that an object the size of the Earth, and at its distance from the Sun, should be considerably colder than the planet actually is if warmed by only the effects of incoming solar radiation. He examined various possible sources of the additional observed heat in articles published in 1824[14] and 1827.[15] While he ultimately suggested that interstellar radiation might be responsible for a large portion of the additional warmth, Fourier's consideration of the possibility that the Earth's atmosphere might act as an insulator of some kind is widely recognized as the first proposal of what is now known as the greenhouse effect,[16] although Fourier never called it that.[17][18]

In his articles, Fourier referred to an experiment by de Saussure, who lined a vase with blackened cork. Into the cork, he inserted several panes of transparent glass, separated by intervals of air. Midday sunlight was allowed to enter at the top of the vase through the glass panes. The temperature became more elevated in the more interior compartments of this device. Fourier concluded that gases in the atmosphere could form a stable barrier like the glass panes.[19] This conclusion may have contributed to the later use of the metaphor of the 'greenhouse effect' to refer to the processes that determine atmospheric temperatures.[20] Fourier noted that the actual mechanisms that determine the temperatures of the atmosphere included convection, which was not present in de Saussure's experimental device.

Works

- Fourier, Joseph (1822). Théorie analytique de la chaleur. Paris: Firmin Didot Père et Fils.

- Fourier, Joseph (1824). Annales de chimie et de physique. 27. Paris: Annals of Chemistry and Physics. pp. 236–281.

- Fourier, Joseph (1827). Mémoire sur la température du globe terrestre et des espaces planétaires. 7. Memoirs of the Royal Academy of Sciences of the Institut de France. pp. 569–604.

- Fourier, Joseph (1827). Mémoire sur la distinction des racines imaginaires, et sur l'application des théorèmes d'analyse algébrique aux équations transcendantes qui dépendent de la théorie de la chaleur. 7. Memoirs of the Royal Academy of Sciences of the Institut de France. pp. 605–624.

- Fourier, Joseph (1827). Analyse des équations déterminées. 10. Firmin Didot frères. pp. 119–146.

- Fourier, Joseph (1827). Remarques générales sur l'application du principe de l'analyse algébrique aux équations transcendantes. 10. Paris: Memoirs of the Royal Academy of Sciences of the Institut de France. pp. 119–146.

- Fourier, Joseph (1833). Mémoire d'analyse sur le mouvement de la chaleur dans les fluides. 12. Paris: Memoirs of the Royal Academy of Sciences of the Institut de France. pp. 507–530.

- Fourier, Joseph (1821). Rapport sur les tontines. 5. Paris: Memoirs of the Royal Academy of Sciences of the Institut de France. pp. 26–43.
