From Wikipedia, the free encyclopedia

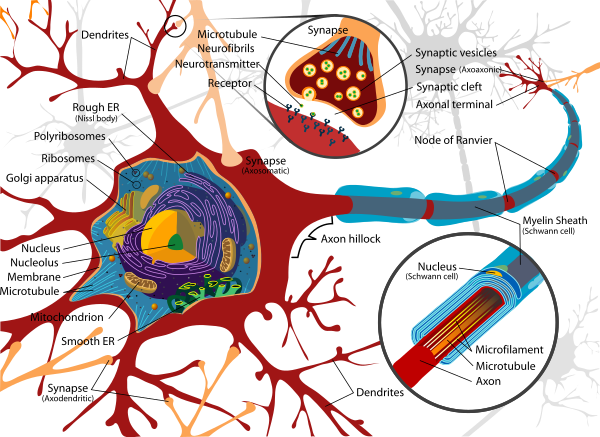

This schematic shows an anatomically accurate single pyramidal neuron, the primary excitatory neuron of the cerebral cortex, with a synaptic connection from an incoming axon onto a dendritic spine.

A neuron (also spelled neurone in British English), or nerve cell, is an electrically excitable cell that receives, processes, and transmits information through electrical and chemical signals. These signals between neurons occur via specialized connections called synapses. Neurons can connect to each other to form neural circuits. Neurons are the primary components of the central nervous system, which includes the brain and spinal cord, and of the peripheral nervous system, which comprises the autonomic nervous system and the somatic nervous system.

There are many types of specialized neurons. Sensory neurons respond to a particular type of stimulus, such as touch, sound, or light, that affects the cells of the sensory organs, and convert it into an electrical signal via transduction; this signal is then sent to the spinal cord or brain. Motor neurons receive signals from the brain and spinal cord and control everything from muscle contractions to glandular output. Interneurons connect neurons to other neurons within the same region of the brain or spinal cord in neural networks.

A typical neuron consists of a cell body (soma), dendrites, and an axon. The term neurite is used to describe either a dendrite or an axon, particularly in its undifferentiated stage. Dendrites are thin structures that arise from the cell body, often extending for hundreds of micrometers and branching multiple times, giving rise to a complex "dendritic tree". An axon (also called a nerve fiber) is a special cellular extension (process) that arises from the cell body at a site called the axon hillock and can extend a long distance, as far as 1 meter in humans or even more in other species. Most neurons receive signals via the dendrites and send out signals down the axon. Numerous axons are often bundled into fascicles that make up the nerves in the peripheral nervous system (like strands of wire make up cables). Bundles of axons in the central nervous system are called tracts.

The cell body of a neuron frequently gives rise to multiple dendrites, but never to more than one axon, although the axon may branch hundreds of times before it terminates. At the majority of synapses, signals are sent from the axon of one neuron to a dendrite of another. There are, however, many exceptions to these rules: for example, neurons can lack dendrites, or have no axon, and synapses can connect an axon to another axon or a dendrite to another dendrite.

All neurons are electrically excitable because they maintain voltage gradients across their membranes. Metabolically driven ion pumps, combined with ion channels embedded in the membrane, generate intracellular-versus-extracellular concentration differences of ions such as sodium, potassium, chloride, and calcium. Changes in the cross-membrane voltage can alter the function of voltage-dependent ion channels. If the voltage changes by a large enough amount, an all-or-none electrochemical pulse called an action potential is generated; this change in cross-membrane potential travels rapidly along the cell's axon and activates synaptic connections with other cells when it arrives.

In most cases, neurons are generated by special types of stem cells during brain development and childhood. Neurons in the adult brain generally do not undergo cell division. Astrocytes, star-shaped glial cells, have also been observed to turn into neurons by virtue of pluripotency, a characteristic of stem cells.

Neurogenesis largely ceases during adulthood in most areas of the brain. However, there is strong evidence for generation of substantial numbers of new neurons in two brain areas, the hippocampus and olfactory bulb.[1][2]

Overview

A neuron is a specialized type of cell found in the bodies of all eumetazoans. Only sponges and a few other simpler animals lack neurons. The features that define a neuron are electrical excitability[3] and the presence of synapses, which are complex membrane junctions that transmit signals to other cells. The body's neurons, plus the glial cells that give them structural and metabolic support, together constitute the nervous system. In vertebrates, the majority of neurons belong to the central nervous system, but some reside in peripheral ganglia, and many sensory neurons are situated in sensory organs such as the retina and cochlea.

A typical neuron is divided into three parts: the soma or cell body, dendrites, and axon. The soma is usually compact; the axon and dendrites are filaments that extrude from it. Dendrites typically branch profusely, getting thinner with each branching, and extending their farthest branches a few hundred micrometers from the soma. The axon leaves the soma at a swelling called the axon hillock, and can extend for great distances, giving rise to hundreds of branches. Unlike dendrites, an axon usually maintains the same diameter as it extends. The soma may give rise to numerous dendrites, but never to more than one axon. Synaptic signals from other neurons are received by the soma and dendrites; signals to other neurons are transmitted by the axon. A typical synapse, then, is a contact between the axon of one neuron and a dendrite or soma of another. Synaptic signals may be excitatory or inhibitory.

If the net excitation received by a neuron over a short period of time is large enough, the neuron generates a brief pulse called an action potential, which originates at the soma (more precisely, at the axon hillock) and propagates rapidly along the axon, activating synapses onto other neurons as it goes.

Many neurons fit the foregoing schema in every respect, but there are also exceptions to most parts of it. There are no neurons that lack a soma, but there are neurons that lack dendrites, and others that lack an axon. Furthermore, in addition to the typical axodendritic and axosomatic synapses, there are axoaxonic (axon-to-axon) and dendrodendritic (dendrite-to-dendrite) synapses.

The key to neural function is the synaptic signaling process, which is partly electrical and partly chemical. The electrical aspect depends on properties of the neuron's membrane. Like all animal cells, the cell body of every neuron is enclosed by a plasma membrane, a bilayer of lipid molecules with many types of protein structures embedded in it. A lipid bilayer is a powerful electrical insulator, but in neurons, many of the protein structures embedded in the membrane are electrically active. These include ion channels that permit electrically charged ions to flow across the membrane and ion pumps that actively transport ions from one side of the membrane to the other.

Most ion channels are permeable only to specific types of ions. Some ion channels are voltage-gated, meaning that they can be switched between open and closed states by altering the voltage difference across the membrane. Others are chemically gated, meaning that they can be switched between open and closed states by interactions with chemicals that diffuse through the extracellular fluid. The interactions between ion channels and ion pumps produce a voltage difference across the membrane, typically a bit less than 1/10 of a volt at baseline. This voltage has two functions: first, it provides a power source for an assortment of voltage-dependent protein machinery that is embedded in the membrane; second, it provides a basis for electrical signal transmission between different parts of the membrane.
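To make the baseline voltage more concrete, the Nernst equation (not stated elsewhere in this article) gives the membrane voltage at which the concentration gradient of a single ion species is exactly balanced: E = (RT / zF) ln([ion]outside / [ion]inside). The sketch below evaluates it at body temperature. The ion concentrations are assumed, textbook-style values for a mammalian neuron chosen only for illustration, and the function name is hypothetical. A real neuron's resting potential lies between these equilibrium potentials, closer to the potassium value, because the resting membrane is much more permeable to K+ than to Na+.

```python
import math

# Physical constants (SI units)
GAS_CONSTANT = 8.314         # J / (mol K)
FARADAY = 96485.0            # C / mol
BODY_TEMPERATURE_K = 310.15  # 37 degrees Celsius


def nernst_potential_mv(conc_out_mm, conc_in_mm, valence,
                        temperature_k=BODY_TEMPERATURE_K):
    """Equilibrium potential (inside relative to outside), in millivolts, for one ion species."""
    if conc_out_mm <= 0 or conc_in_mm <= 0:
        raise ValueError("concentrations must be positive")
    if valence == 0:
        raise ValueError("valence must be non-zero")
    volts = (GAS_CONSTANT * temperature_k) / (valence * FARADAY) * math.log(conc_out_mm / conc_in_mm)
    return volts * 1000.0


# Assumed, illustrative concentrations in mM: (outside, inside, valence).
# These are not figures from the article.
ASSUMED_CONCENTRATIONS = {
    "K+": (5.0, 140.0, +1),
    "Na+": (145.0, 15.0, +1),
}

if __name__ == "__main__":
    for ion, (out_mm, in_mm, z) in ASSUMED_CONCENTRATIONS.items():
        print(f"E({ion}) = {nernst_potential_mv(out_mm, in_mm, z):.1f} mV")
```

With these assumed concentrations the script prints about -89 mV for K+ and about +61 mV for Na+; both magnitudes stay below one tenth of a volt.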

Neurons communicate by chemical and electrical synapses in a process known as neurotransmission, also called synaptic transmission. The fundamental process that triggers the release of neurotransmitters is the action potential, a propagating electrical signal that is generated by exploiting the electrically excitable membrane of the neuron. This is also known as a wave of depolarization.

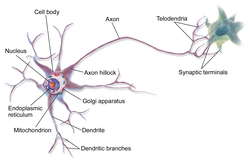

Anatomy and histology

Diagram of a typical myelinated vertebrate motor neuron

Neurons are highly specialized for the processing and transmission of cellular signals. Given the diversity of functions they perform in different parts of the nervous system, neurons vary widely in shape, size, and electrochemical properties. For instance, the soma of a neuron can vary from 4 to 100 micrometers in diameter.[4]

- The soma is the body of the neuron. As it contains the nucleus, most protein synthesis occurs here. The nucleus can range from 3 to 18 micrometers in diameter.[5]

- The dendrites of a neuron are cellular extensions with many branches. This overall shape and structure is referred to metaphorically as a dendritic tree. This is where the majority of input to the neuron occurs, via dendritic spines.

- The axon is a finer, cable-like projection that can extend tens, hundreds, or even tens of thousands of times the diameter of the soma in length. The axon carries nerve signals away from the soma (and also carries some types of information back to it). Many neurons have only one axon, but this axon may, and usually will, undergo extensive branching, enabling communication with many target cells. The part of the axon where it emerges from the soma is called the axon hillock. Besides being an anatomical structure, the axon hillock is also the part of the neuron that has the greatest density of voltage-dependent sodium channels. This makes it the most easily excited part of the neuron and the spike initiation zone for the axon: in electrophysiological terms, it has the most negative action potential threshold. While the axon and axon hillock are generally involved in information outflow, this region can also receive input from other neurons.

- The axon terminal contains synapses, specialized structures where neurotransmitter chemicals are released to communicate with target neurons.

The accepted view of the neuron attributes dedicated functions to its various anatomical components; however, dendrites and axons often act in ways contrary to their so-called main function.

Axons and dendrites in the central nervous system are typically only about one micrometer thick, while some in the peripheral nervous system are much thicker. The soma is usually about 10–25 micrometers in diameter and often is not much larger than the cell nucleus it contains.

The longest axon of a human motor neuron can be over a meter long, reaching from the base of the spine to the toes. Sensory neurons can have axons that run from the toes to the posterior column of the spinal cord, over 1.5 meters in adults. Giraffes have single axons several meters in length running along the entire length of their necks. Much of what is known about axonal function comes from studying the squid giant axon, an ideal experimental preparation because of its relatively immense size (0.5–1 millimeters thick, several centimeters long).

Fully differentiated neurons are permanently postmitotic;[6] however, research starting around 2002 shows that additional neurons throughout the brain can originate from neural stem cells through the process of neurogenesis. Neural stem cells are found throughout the brain but are particularly concentrated in the subventricular zone and subgranular zone.[7]

Histology and internal structure

Golgi-stained neurons in human hippocampal tissue

Actin filaments in a mouse cortical neuron in culture

Numerous microscopic clumps called Nissl substance (or Nissl bodies) are seen when nerve cell bodies are stained with a basophilic ("base-loving") dye. These structures consist of rough endoplasmic reticulum and associated ribosomal RNA. Named after German psychiatrist and neuropathologist Franz Nissl (1860–1919), they are involved in protein synthesis and their prominence can be explained by the fact that nerve cells are very metabolically active. Basophilic dyes such as aniline or (weakly) haematoxylin[8] highlight negatively charged components, and so bind to the phosphate backbone of the ribosomal RNA.

The cell body of a neuron is supported by a complex mesh of structural proteins called neurofilaments, which are assembled into larger neurofibrils. Some neurons also contain pigment granules, such as neuromelanin (a brownish-black pigment that is a byproduct of catecholamine synthesis) and lipofuscin (a yellowish-brown pigment), both of which accumulate with age. Other structural proteins that are important for neuronal function are actin and the tubulin of microtubules.

Actin is predominantly found at the tips of axons and dendrites during neuronal development. There, actin dynamics can be modulated via an interplay with microtubules.[12]

Axons and dendrites differ in their internal structure. Typical axons almost never contain ribosomes, except some in the initial segment. Dendrites contain granular endoplasmic reticulum or ribosomes, in diminishing amounts as the distance from the cell body increases.

Classification

Neurons exist in a number of different shapes and sizes and can be classified by their morphology and function.[14] The anatomist Camillo Golgi grouped neurons into two types: type I, with long axons used to move signals over long distances, and type II, with short axons, which can often be confused with dendrites. Type I cells can be further divided by where the cell body or soma is located. The basic morphology of type I neurons, represented by spinal motor neurons, consists of a cell body called the soma and a long thin axon covered by the myelin sheath. Around the cell body is a branching dendritic tree that receives signals from other neurons. The end of the axon has branching terminals (axon terminals) that release neurotransmitters into a gap called the synaptic cleft between the terminals and the dendrites of the next neuron.

Structural classification

Polarity

Most neurons can be anatomically characterized as:

- Unipolar: only 1 process

- Bipolar: 1 axon and 1 dendrite

- Multipolar: 1 axon and 2 or more dendrites

- Golgi I: neurons with long-projecting axonal processes; examples are pyramidal cells, Purkinje cells, and anterior horn cells.

- Golgi II: neurons whose axonal process projects locally; the best example is the granule cell.

- Anaxonic: where the axon cannot be distinguished from the dendrite(s).

- Pseudounipolar: 1 process that then serves as both an axon and a dendrite
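The count-based part of this polarity scheme can be expressed as a small lookup function. The sketch below is a hypothetical illustration that assumes a neuron is described only by its number of processes and by whether an axon can be told apart from its dendrites. Pseudounipolar cells (a single process that serves as both axon and dendrite) and the Golgi I/II distinction depend on information beyond a simple count, so the function does not attempt them.

```python
def classify_polarity(num_processes, axon_distinguishable=True):
    """Map a neuron's process count onto the count-based polarity categories.

    Hypothetical helper: pseudounipolar and Golgi I/II classes need extra
    information (how the single process branches, how far the axon projects)
    and are deliberately not handled here.
    """
    if num_processes < 1:
        raise ValueError("expected at least one process")
    if num_processes == 1:
        return "unipolar"      # only 1 process
    if not axon_distinguishable:
        return "anaxonic"      # axon cannot be distinguished from the dendrites
    if num_processes == 2:
        return "bipolar"       # 1 axon and 1 dendrite
    return "multipolar"        # 1 axon and 2 or more dendrites


if __name__ == "__main__":
    for n in (1, 2, 5):
        print(n, classify_polarity(n))
    print(4, classify_polarity(4, axon_distinguishable=False))
```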

Other

Furthermore, some unique neuronal types can be identified according to their location in the nervous system and their distinct shape. Some examples are:

- Basket cells, interneurons that form a dense plexus of terminals around the soma of target cells, found in the cortex and cerebellum.

- Betz cells, large motor neurons.

- Lugaro cells, interneurons of the cerebellum.

- Medium spiny neurons, most neurons in the corpus striatum.

- Purkinje cells, huge neurons in the cerebellum, a type of Golgi I multipolar neuron.

- Pyramidal cells, neurons with triangular soma, a type of Golgi I.

- Renshaw cells, neurons with both ends linked to alpha motor neurons.

- Unipolar brush cells, interneurons with a unique dendrite ending in a brush-like tuft.

- Granule cells, a type of Golgi II neuron.

- Anterior horn cells, motoneurons located in the spinal cord.

- Spindle cells, interneurons that connect widely separated areas of the brain.

Functional classification

Direction

The terms afferent and efferent refer generally to neurons that, respectively, bring information to or send information from the brain.

Action on other neurons

A neuron affects other neurons by releasing a neurotransmitter that binds to chemical receptors.

The effect upon the postsynaptic neuron is determined not by the presynaptic neuron or by the neurotransmitter, but by the type of receptor that is activated. A neurotransmitter can be thought of as a key, and a receptor as a lock: the same type of key can here be used to open many different types of locks. Receptors can be classified broadly as excitatory (causing an increase in firing rate), inhibitory (causing a decrease in firing rate), or modulatory (causing long-lasting effects not directly related to firing rate).

The two most common neurotransmitters in the brain, glutamate and GABA, have actions that are largely consistent. Glutamate acts on several different types of receptors and has effects that are excitatory at ionotropic receptors and modulatory at metabotropic receptors. Similarly, GABA acts on several different types of receptors, but all of them have effects (in adult animals, at least) that are inhibitory. Because of this consistency, it is common for neuroscientists to simplify the terminology by referring to cells that release glutamate as "excitatory neurons", and cells that release GABA as "inhibitory neurons". Since over 90% of the neurons in the brain release either glutamate or GABA, these labels encompass the great majority of neurons.

There are also other types of neurons that have consistent effects on their targets, for example, "excitatory" motor neurons in the spinal cord that release acetylcholine, and "inhibitory" spinal neurons that release glycine.

The distinction between excitatory and inhibitory neurotransmitters is not absolute, however. Rather, it depends on the class of chemical receptors present on the postsynaptic neuron. In principle, a single neuron, releasing a single neurotransmitter, can have excitatory effects on some targets, inhibitory effects on others, and modulatory effects on others still. For example, photoreceptor cells in the retina constantly release the neurotransmitter glutamate in the absence of light. So-called OFF bipolar cells are, like most neurons, excited by the released glutamate. However, neighboring target neurons called ON bipolar cells are instead inhibited by glutamate, because they lack the typical ionotropic glutamate receptors and instead express a class of inhibitory metabotropic glutamate receptors.[15] When light is present, the photoreceptors cease releasing glutamate, which relieves the ON bipolar cells from inhibition, activating them; this simultaneously removes the excitation from the OFF bipolar cells, silencing them.
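The point that the receptor, not the transmitter, decides the effect can be illustrated with a small lookup table applied to the retinal example above. In the sketch below the receptor labels, the `Effect` enum, and the `bipolar_activity` function are hypothetical names chosen for illustration, and each cell is reduced to a simple active/inactive state; it assumes an ON bipolar cell is active whenever it is not being inhibited, which is a deliberate simplification.

```python
from enum import Enum


class Effect(Enum):
    EXCITATORY = "excitatory"
    INHIBITORY = "inhibitory"


# The effect is keyed on the receptor class, not on the transmitter (hypothetical labels).
RECEPTOR_EFFECTS = {
    "ionotropic glutamate receptor": Effect.EXCITATORY,
    "inhibitory metabotropic glutamate receptor": Effect.INHIBITORY,
}

# Both bipolar cell types receive the same transmitter (glutamate) from photoreceptors.
BIPOLAR_RECEPTORS = {
    "OFF bipolar cell": "ionotropic glutamate receptor",
    "ON bipolar cell": "inhibitory metabotropic glutamate receptor",
}


def bipolar_activity(light_present):
    """Return whether each bipolar cell is active (True) under the given lighting."""
    glutamate_released = not light_present  # photoreceptors release glutamate in darkness
    activity = {}
    for cell, receptor in BIPOLAR_RECEPTORS.items():
        effect = RECEPTOR_EFFECTS[receptor]
        if glutamate_released:
            # Glutamate excites cells with excitatory receptors and inhibits the others.
            activity[cell] = effect is Effect.EXCITATORY
        else:
            # Without glutamate, excitation is withdrawn and inhibition is relieved.
            activity[cell] = effect is Effect.INHIBITORY
    return activity


if __name__ == "__main__":
    for light in (False, True):
        print("light" if light else "dark", bipolar_activity(light))
```

The same transmitter produces opposite outcomes in the two cell types purely because of the entry looked up in `RECEPTOR_EFFECTS`, mirroring the lock-and-key analogy.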

It is possible to identify the type of inhibitory effect a presynaptic neuron will have on a postsynaptic neuron based on the proteins the presynaptic neuron expresses. In the visual cortex, parvalbumin-expressing neurons typically dampen the output signal of the postsynaptic neuron, whereas somatostatin-expressing neurons typically block dendritic inputs to the postsynaptic neuron.[16]

Discharge patterns

Neurons have intrinsic electroresponsive properties, such as intrinsic oscillatory patterns of transmembrane voltage.[17] Neurons can therefore be classified according to their electrophysiological characteristics:

- Tonic or regular spiking. Some neurons are typically constantly (or tonically) active, for example interneurons in the neostriatum.

- Phasic or bursting. Neurons that fire in bursts are called phasic.

- Fast spiking. Some neurons are notable for their high firing rates, for example some types of cortical inhibitory interneurons, cells in globus pallidus, retinal ganglion cells.[18][19]

Classification by neurotransmitter production

- Cholinergic neurons: acetylcholine. Acetylcholine is released from presynaptic neurons into the synaptic cleft. It acts as a ligand for both ligand-gated ion channels (nicotinic receptors) and metabotropic (GPCR) muscarinic receptors. Nicotinic receptors are pentameric ligand-gated ion channels composed of alpha and beta subunits that bind nicotine. Ligand binding opens the channel, causing an influx of Na+ that depolarizes the membrane and increases the probability of presynaptic neurotransmitter release. Acetylcholine is synthesized from choline and acetyl coenzyme A.

- GABAergic neurons: gamma-aminobutyric acid. GABA is one of the two main inhibitory neurotransmitters in the central nervous system (CNS), the other being glycine. Like ACh at nicotinic receptors, GABA gates ion channels, but these are anion channels that allow Cl− ions to enter the postsynaptic neuron. The Cl− influx hyperpolarizes the neuron, making the membrane voltage more negative and decreasing the probability that an action potential fires (recall that for an action potential to fire, the membrane must be depolarized to a threshold voltage). GABA is synthesized from glutamate by the enzyme glutamate decarboxylase.

- Glutamatergic neurons: glutamate. Glutamate is one of the two primary excitatory amino acid neurotransmitters, the other being aspartate. Glutamate receptors fall into four categories, three of which are ligand-gated ion channels and one of which is a G-protein-coupled receptor (often referred to as a GPCR).
  - AMPA and kainate receptors both function as cation channels permeable to Na+, mediating fast excitatory synaptic transmission.
  - NMDA receptors are another type of cation channel, one that is more permeable to Ca2+. The function of NMDA receptors depends on glycine binding as a co-agonist; NMDA receptors do not function without both ligands present.
  - Metabotropic receptors (GPCRs) modulate synaptic transmission and postsynaptic excitability.

- Glutamate can cause excitotoxicity when blood flow to the brain is interrupted, resulting in brain damage. When blood flow is suppressed, glutamate is released from presynaptic neurons and activates NMDA and AMPA receptors more strongly than under normal conditions, leading to excessive entry of Ca2+ and Na+ into the postsynaptic neuron and to cell damage. Glutamate is synthesized from the amino acid glutamine by the enzyme glutaminase.

- Dopaminergic neurons: dopamine. Dopamine is a neurotransmitter that acts on D1-type (D1 and D5) Gs-coupled receptors, which increase cAMP and PKA activity, and D2-type (D2, D3, and D4) Gi-coupled receptors, which decrease cAMP and PKA activity. Dopamine is connected to mood and behavior and modulates both presynaptic and postsynaptic neurotransmission. Loss of dopamine neurons in the substantia nigra has been linked to Parkinson's disease. Dopamine is synthesized from the amino acid tyrosine: tyrosine hydroxylase converts tyrosine into levodopa (L-DOPA), and aromatic L-amino acid decarboxylase then converts levodopa into dopamine.

- Serotonergic neurons: serotonin. Serotonin (5-hydroxytryptamine, 5-HT) can act as an excitatory or inhibitory neurotransmitter. Of the seven 5-HT receptor families, six are GPCRs and one (5-HT3) is a ligand-gated cation channel. Serotonin is synthesized from tryptophan by tryptophan hydroxylase and then by aromatic L-amino acid decarboxylase. A lack of 5-HT at postsynaptic neurons has been linked to depression. Drugs that block the presynaptic serotonin transporter, such as Prozac and Zoloft, are used for treatment.

Connectivity

A signal propagating down an axon to the cell body and dendrites of the next cell

Neurons communicate with one another via synapses, where the axon terminal or en passant bouton (a type of terminal located along the length of the axon) of one cell contacts another neuron's dendrite, soma or, less commonly, axon. Neurons such as Purkinje cells in the cerebellum can have over 1000 dendritic branches, making connections with tens of thousands of other cells; other neurons, such as the magnocellular neurons of the supraoptic nucleus, have only one or two dendrites, each of which receives thousands of synapses. Synapses can be excitatory or inhibitory and either increase or decrease activity in the target neuron, respectively. Some neurons also communicate via electrical synapses, which are direct, electrically conductive junctions between cells.

In a chemical synapse, the process of synaptic transmission is as follows: when an action potential reaches the axon terminal, it opens voltage-gated calcium channels, allowing calcium ions to enter the terminal. Calcium causes synaptic vesicles filled with neurotransmitter molecules to fuse with the membrane, releasing their contents into the synaptic cleft. The neurotransmitters diffuse across the synaptic cleft and activate receptors on the postsynaptic neuron. High cytosolic calcium in the axon terminal also triggers mitochondrial calcium uptake, which, in turn, activates mitochondrial energy metabolism to produce ATP to support continuous neurotransmission.[20]

An autapse is a synapse in which a neuron's axon connects to its own dendrites.

The human brain has a huge number of synapses. Each of the 10¹¹ (one hundred billion) neurons has on average 7,000 synaptic connections to other neurons. It has been estimated that the brain of a three-year-old child has about 10¹⁵ synapses (1 quadrillion). This number declines with age, stabilizing by adulthood. Estimates vary for an adult, ranging from 10¹⁴ to 5 × 10¹⁴ synapses (100 to 500 trillion).[21]
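As a rough order-of-magnitude check, multiplying the neuron count by the average number of connections per neuron gives the total number of synapses those two figures imply:

$$
10^{11}\ \text{neurons} \times 7 \times 10^{3}\ \frac{\text{synapses}}{\text{neuron}} = 7 \times 10^{14}\ \text{synapses}
$$

This is the same order of magnitude as the adult range of 10¹⁴ to 5 × 10¹⁴ synapses, though somewhat above its upper end; both inputs are rounded approximations, so only the order of magnitude should be read from the product.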

An annotated diagram of the stages of an action potential propagating down an axon, including the role of ion concentration and pump and channel proteins.

Mechanisms for propagating action potentials

In 1937, John Zachary Young suggested that the squid giant axon could be used to study neuronal electrical properties.[22] Because they are larger than, but similar in nature to, human neurons, squid giant axons were easier to study. By inserting electrodes into the giant squid axons, researchers made accurate measurements of the membrane potential.

The cell membranes of the axon and soma contain voltage-gated ion channels that allow the neuron to generate and propagate an electrical signal (an action potential). These signals are generated and propagated by charge-carrying ions, including sodium (Na+), potassium (K+), chloride (Cl−), and calcium (Ca2+).

Several kinds of stimuli can activate a neuron and lead to electrical activity, including pressure, stretch, chemical transmitters, and changes in the electric potential across the cell membrane.[23] Stimuli cause specific ion channels within the cell membrane to open, allowing ions to flow across the membrane and changing the membrane potential.

Thin neurons and axons require less metabolic expense to produce and carry action potentials, but thicker axons convey impulses more rapidly. To minimize metabolic expense while maintaining rapid conduction, many neurons have insulating sheaths of myelin around their axons. The sheaths are formed by glial cells: oligodendrocytes in the central nervous system and Schwann cells in the peripheral nervous system. The sheath enables action potentials to travel faster than in unmyelinated axons of the same diameter, whilst using less energy. The myelin sheath in peripheral nerves normally runs along the axon in sections about 1 mm long, punctuated by unsheathed nodes of Ranvier, which contain a high density of voltage-gated ion channels. Multiple sclerosis is a neurological disorder that results from demyelination of axons in the central nervous system.

Some neurons do not generate action potentials, but instead generate a graded electrical signal, which in turn causes graded neurotransmitter release. Such nonspiking neurons tend to be sensory neurons or interneurons, because they cannot carry signals long distances.

Neural coding

Neural coding is concerned with how sensory and other information is represented in the brain by neurons. The main goal of studying neural coding is to characterize the relationship between the stimulus and the individual or ensemble neuronal responses, and the relationships amongst the electrical activities of the neurons within the ensemble.[24] It is thought that neurons can encode both digital and analog information.[25]
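One common and simple way to summarize a neuron's response when studying rate coding is to count spikes in consecutive time windows and convert each count into a firing rate. The sketch below assumes spike times are already available as a list of times in seconds; the function name and the example spike train are hypothetical. Rate coding is only one candidate scheme (codes based on precise spike timing are another), so this illustrates a single measurement, not a general model of neural coding.

```python
import math


def binned_firing_rate(spike_times_s, window_s, duration_s):
    """Firing rate (spikes per second) in consecutive, non-overlapping time windows."""
    if window_s <= 0 or duration_s <= 0:
        raise ValueError("window and duration must be positive")
    n_bins = math.ceil(duration_s / window_s)
    counts = [0] * n_bins
    for t in spike_times_s:
        if 0.0 <= t < duration_s:
            # Clamp guards against floating-point rounding at the final bin edge.
            index = min(int(t // window_s), n_bins - 1)
            counts[index] += 1
    return [count / window_s for count in counts]


if __name__ == "__main__":
    # Hypothetical spike train (seconds): a cluster of spikes early, then sparser firing.
    spikes = [0.005, 0.012, 0.018, 0.150, 0.160]
    print(binned_firing_rate(spikes, window_s=0.1, duration_s=0.2))
```

Shorter windows track faster changes in the rate at the cost of noisier estimates; the window length is a choice made by the analyst, not a property of the neuron.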

All-or-none principle

The conduction of nerve impulses is an example of an all-or-none response. In other words, if a neuron responds at all, then it must respond completely. Greater intensity of stimulation does not produce a stronger signal but can produce a higher frequency of firing. Receptors respond to stimuli in different ways. Slowly adapting, or tonic, receptors respond to a steady stimulus and produce a steady rate of firing. These tonic receptors most often respond to increased intensity of stimulus by increasing their firing frequency, usually as a power function of stimulus intensity when plotted against impulses per second. This can be likened to an intrinsic property of light: greater intensity at a specific frequency (color) requires more photons, because photons of a given frequency cannot become "stronger".

Other receptor types, called quickly adapting or phasic receptors, decrease or stop firing in response to a steady stimulus. For example, when the skin is touched by an object, its neurons fire, but if the object maintains even pressure against the skin, the neurons stop firing. The neurons of the skin and muscles that are responsive to pressure and vibration have filtering accessory structures that aid their function. The Pacinian corpuscle is one such structure. It has concentric layers like an onion, which form around the axon terminal. When pressure is applied and the corpuscle is deformed, the mechanical stimulus is transferred to the axon, which fires. If the pressure is steady, there is no further stimulus; thus, these neurons typically respond with a transient depolarization during the initial deformation and again when the pressure is removed, which causes the corpuscle to change shape again. Other types of adaptation are important in extending the function of a number of other neurons.[26]
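The all-or-none principle, and the way tonic receptors signal intensity through firing frequency, can be illustrated with a leaky integrate-and-fire model, a standard simplified neuron model that this article does not otherwise discuss. In the sketch below every parameter value (time constant, resting, threshold and reset voltages, membrane resistance) is an assumed, illustrative number rather than a measurement, and the function name is hypothetical. The model treats each spike as an identical event and does not simulate its shape.

```python
def firing_rate_hz(input_current_na, duration_ms=1000.0, dt_ms=0.1,
                   tau_ms=10.0, v_rest_mv=-70.0, v_threshold_mv=-55.0,
                   v_reset_mv=-75.0, resistance_mohm=10.0):
    """Simulate a leaky integrate-and-fire neuron and return its firing rate in Hz.

    Membrane equation, integrated with forward Euler steps:
        tau * dV/dt = -(V - V_rest) + R * I
    (megaohms times nanoamps gives millivolts). Every threshold crossing counts
    as one identical spike, so a stronger input can change only how often the
    neuron fires, not how large each spike is.
    """
    v = v_rest_mv
    spike_count = 0
    for _ in range(round(duration_ms / dt_ms)):
        v += (-(v - v_rest_mv) + resistance_mohm * input_current_na) * dt_ms / tau_ms
        if v >= v_threshold_mv:
            spike_count += 1
            v = v_reset_mv  # reset after the all-or-none event
    return spike_count / (duration_ms / 1000.0)


if __name__ == "__main__":
    for current in (1.0, 2.0, 3.0, 4.0):
        print(f"I = {current:.1f} nA -> {firing_rate_hz(current):.0f} Hz")
```

With these assumed values, a 1 nA input never brings the membrane to threshold, so the model neuron stays silent, while larger inputs produce progressively higher firing rates with no change to the spike itself. The model does not reproduce the power-function relationship or the adaptation of phasic receptors described above; it only shows threshold behavior and frequency coding.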

History

Drawing of a Purkinje cell in the cerebellar cortex done by Santiago Ramón y Cajal, demonstrating the ability of Golgi's staining method to reveal fine detail

The neuron's place as the primary functional unit of the nervous system was first recognized in the late 19th century through the work of the Spanish anatomist Santiago Ramón y Cajal.[27]

To make the structure of individual neurons visible, Ramón y Cajal improved a silver staining process that had been developed by Camillo Golgi.[27] The improved process involves a technique called "double impregnation" and is still in use today.

In 1888 Ramón y Cajal published a paper about the bird cerebellum. In this paper, he stated that he could not find evidence for anastomosis between axons and dendrites and called each nervous element "an absolutely autonomous canton."[27][28] This became known as the neuron doctrine, one of the central tenets of modern neuroscience.[27]

In 1891 the German anatomist Heinrich Wilhelm Waldeyer wrote a highly influential review about the neuron doctrine in which he introduced the term neuron to describe the anatomical and physiological unit of the nervous system.[29][30]

Silver impregnation staining is an extremely useful method for neuroanatomical investigations because, for reasons unknown, it stains only a very small percentage of cells in a tissue, so one is able to see the complete microstructure of individual neurons without much overlap from other cells in the densely packed brain.[31]

Neuron doctrine

The neuron doctrine is the now fundamental idea that neurons are the basic structural and functional units of the nervous system. The theory was put forward by Santiago Ramón y Cajal in the late 19th century. It held that neurons are discrete cells (not connected in a meshwork), acting as metabolically distinct units.

Later discoveries yielded a few refinements to the simplest form of the doctrine. For example, glial cells, which are not considered neurons, play an essential role in information processing.[32] Also, electrical synapses are more common than previously thought,[33] meaning that there are direct, cytoplasmic connections between neurons. In fact, there are examples of neurons forming even tighter coupling: the squid giant axon arises from the fusion of multiple axons.[34]

Ramón y Cajal also postulated the Law of Dynamic Polarization, which states that a neuron receives signals at its dendrites and cell body and transmits them, as action potentials, along the axon in one direction: away from the cell body.[35] The Law of Dynamic Polarization has important exceptions: dendrites can serve as synaptic output sites of neurons[36] and axons can receive synaptic inputs.[37]

Neurons in the brain

The number of neurons in the brain varies dramatically from species to species.[38] The adult human brain contains about 85–86 billion neurons,[38][39] of which 16.3 billion are in the cerebral cortex and 69 billion in the cerebellum.[39] By contrast, the nematode worm Caenorhabditis elegans has just 302 neurons; because scientists have been able to map all of the organism's neurons, it is an ideal experimental subject. The fruit fly Drosophila melanogaster, a common subject in biological experiments, has around 100,000 neurons and exhibits many complex behaviors. Many properties of neurons, from the type of neurotransmitters used to ion channel composition, are maintained across species, allowing scientists to study processes occurring in more complex organisms in much simpler experimental systems.

Neurological disorders

Charcot–Marie–Tooth disease (CMT) is a heterogeneous inherited disorder of nerves (neuropathy) that is characterized by loss of muscle tissue and touch sensation, predominantly in the feet and legs but also in the hands and arms in the advanced stages of disease. Presently incurable, this disease is one of the most common inherited neurological disorders, affecting 36 in 100,000 people.[40]

Alzheimer's disease (AD), also known simply as Alzheimer's, is a neurodegenerative disease characterized by progressive cognitive deterioration, together with declining activities of daily living and neuropsychiatric symptoms or behavioral changes.[41] The most striking early symptom is loss of short-term memory (amnesia), which usually manifests as minor forgetfulness that becomes steadily more pronounced as the illness advances, with relative preservation of older memories. As the disorder progresses, cognitive (intellectual) impairment extends to the domains of language (aphasia), skilled movements (apraxia), and recognition (agnosia), and functions such as decision-making and planning become impaired.[42][43]

Parkinson's disease (PD), also known as Parkinson disease, is a degenerative disorder of the central nervous system that often impairs the sufferer's motor skills and speech.[44] Parkinson's disease belongs to a group of conditions called movement disorders.[45] It is characterized by muscle rigidity, tremor, a slowing of physical movement (bradykinesia), and in extreme cases, a loss of physical movement (akinesia). The primary symptoms result from decreased stimulation of the motor cortex by the basal ganglia, usually caused by the insufficient formation and action of dopamine, which is produced in the dopaminergic neurons of the brain. Secondary symptoms may include high-level cognitive dysfunction and subtle language problems. PD is both chronic and progressive.

Myasthenia gravis is a neuromuscular disease leading to fluctuating muscle weakness and fatigability during simple activities. Weakness is typically caused by circulating antibodies that block acetylcholine receptors at the postsynaptic neuromuscular junction, inhibiting the stimulative effect of the neurotransmitter acetylcholine. Myasthenia is treated with immunosuppressants, cholinesterase inhibitors and, in selected cases, thymectomy.

Demyelination

Guillain–Barré syndrome – demyelination

Demyelination is the loss of the myelin sheath insulating the nerves. When myelin degrades, conduction of signals along the nerve can be impaired or lost, and the nerve eventually withers. This leads to certain neurodegenerative disorders such as multiple sclerosis and chronic inflammatory demyelinating polyneuropathy.

Axonal degeneration

Although most injury responses include a calcium influx signaling to promote resealing of severed parts, axonal injuries initially lead to acute axonal degeneration, which is rapid separation of the proximal and distal ends within 30 minutes of injury. Degeneration continues with swelling of the axolemma and eventually leads to bead-like formations. Granular disintegration of the axonal cytoskeleton and inner organelles occurs after axolemma degradation. Early changes include accumulation of mitochondria in the paranodal regions at the site of injury. The endoplasmic reticulum degrades, and mitochondria swell and eventually disintegrate. The disintegration is dependent on ubiquitin and calpain proteases (triggered by the influx of calcium ions), suggesting that axonal degeneration is an active process. Ultimately, the axon undergoes complete fragmentation. The process takes roughly 24 hours in the peripheral nervous system (PNS), and longer in the CNS. The signaling pathways leading to axolemma degeneration are currently unknown.

Neurogenesis

It has been demonstrated that neurogenesis can sometimes occur in the adult vertebrate brain, a finding that led to controversy in 1999.[46] Later studies of the age of human neurons suggest that this process occurs only for a minority of cells, and that the vast majority of neurons composing the neocortex were formed before birth and persist without replacement.[2]

The body contains a variety of stem cell types that have the capacity to differentiate into neurons. A report in Nature suggested that researchers had found a way to transform human skin cells into working nerve cells using a process called transdifferentiation, in which "cells are forced to adopt new identities".[47]

Nerve regeneration

It is often possible for peripheral axons to regrow if they are severed,[48] but a neuron cannot be functionally replaced by one of another type (Llinás' law).

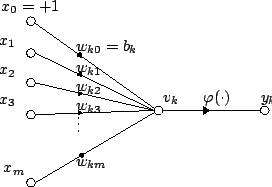

$$W_v(s+1) = W_v(s) + \theta(u, v, s)\,\alpha(s)\,\bigl(D(t) - W_v(s)\bigr),$$

where

$s$ is the current iteration,

$\lambda$ is the iteration limit,

$t$ is the index of the target input data vector in the input data set $\mathbf{D}$,

$D(t)$ is a target input data vector,

$v$ is the index of the node in the map,

$W_v$ is the current weight vector of node $v$,

$u$ is the index of the best matching unit (BMU) in the map,

$\theta(u, v, s)$ is a restraint due to distance from the BMU, usually called the neighborhood function, and

$\alpha(s)$ is a learning restraint due to iteration progress.

Then repeat from step 2 while $s < \lambda$.
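The self-organizing map update described here, in which each node's weight vector is pulled toward the target input vector D(t) by an amount scaled by a neighborhood function θ(u, v, s) and a learning restraint α(s), can be sketched in Python. This is a minimal illustration under stated assumptions, not a reference implementation: it assumes a one-dimensional map in which the distance between nodes u and v is |u − v|, a Gaussian neighborhood function whose width shrinks linearly with the iteration s, and a linearly decaying learning restraint. The function names and the parameters `sigma0` and `alpha0` are hypothetical.

```python
import math
import random


def gaussian_neighborhood(u, v, s, lam, sigma0=2.0):
    """Assumed neighborhood function theta(u, v, s) on a 1-D map.

    The Gaussian width shrinks linearly from sigma0 as s approaches lam
    (a hypothetical schedule), floored to avoid division by zero.
    """
    sigma = max(sigma0 * (1.0 - s / lam), 1e-3)
    dist = abs(u - v)  # grid distance between node indices on a 1-D map
    return math.exp(-(dist ** 2) / (2.0 * sigma ** 2))


def learning_rate(s, lam, alpha0=0.5):
    """Assumed learning restraint alpha(s), decaying linearly from alpha0."""
    return alpha0 * (1.0 - s / lam)


def best_matching_unit(weights, x):
    """Return u, the index of the node whose weight vector is closest to x."""
    return min(
        range(len(weights)),
        key=lambda v: sum((w - xi) ** 2 for w, xi in zip(weights[v], x)),
    )


def train_som(data, n_nodes, lam, seed=0):
    """Train a 1-D SOM on data (a list of equal-length vectors) for lam iterations."""
    rng = random.Random(seed)
    dim = len(data[0])
    # Randomize the initial weight vectors in [0, 1).
    weights = [[rng.random() for _ in range(dim)] for _ in range(n_nodes)]
    for s in range(lam):  # loop while s < lam
        t = rng.randrange(len(data))  # index t of the target input
        x = data[t]  # the target input data vector D(t)
        u = best_matching_unit(weights, x)
        alpha = learning_rate(s, lam)
        for v in range(n_nodes):
            theta = gaussian_neighborhood(u, v, s, lam)
            # W_v(s+1) = W_v(s) + theta(u, v, s) * alpha(s) * (D(t) - W_v(s))
            weights[v] = [w + theta * alpha * (xi - w) for w, xi in zip(weights[v], x)]
    return weights


weights = train_som([[0.0, 0.0], [1.0, 1.0]], n_nodes=5, lam=200)
print(weights)
```

Because θ ≤ 1 and α(s) ≤ 0.5 under these assumptions, each update moves a weight vector only part of the way toward D(t), so it never overshoots the input. Other neighborhood functions and decay schedules are common; a two-dimensional grid would change only how the distance between u and v is computed.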