Returning to designs abandoned in the 1970s, start-ups are developing a new kind of reactor that promises to be much safer and cleaner than current ones.

Troels Schönfeldt can trace his path to becoming a nuclear energy entrepreneur

back to 2009, when he and other young physicists at the Niels Bohr

Institute in Copenhagen started getting together for an occasional “beer

and nuclear” meetup.

The beer was an India pale ale that they brewed themselves in an old,

junk-filled lab space in the institute’s basement. The “nuclear” part

was usually a bull session about their options for fighting two of

humanity’s biggest problems: global poverty and climate change. “If you

want poor countries to become richer,” says Schönfeldt, “you need a

cheap and abundant power source.” But if you want to avoid spewing out

enough extra carbon dioxide to fry the planet, you need to provide that

power without using coal and gas.

It seemed clear to Schönfeldt and the others that the standard

alternatives simply wouldn’t be sufficient. Wind and solar power by

themselves couldn’t offer nearly enough energy, not with billions of

poor people trying to join the global middle class. Yet conventional

nuclear reactors — which could meet the need, in principle —

were massively expensive, potentially dangerous and anathema to much of

the public. And if anyone needed a reminder of why, the catastrophic

meltdown at Japan’s Fukushima Daiichi plant came along to provide it in

March 2011.

On the other hand, says Schönfeldt, the worldwide nuclear engineering

community was beginning to get fired up about unconventional reactor

designs — technologies that had been sidelined 40 or 50 years before,

but that might have a lot fewer problems than existing reactors. And the

beer-and-nuclear group found that one such design, the molten salt

reactor, had a simplicity, elegance and, well, weirdness that especially

appealed.

Molten salt reactors might just turn nuclear power into the greenest energy source on the planet.

The weird bit was that word “molten,” says Schönfeldt: Every other

reactor design in history had used fuel that’s solid, not liquid. This

thing was basically a pot of hot nuclear soup. The recipe called for

taking a mix of salts — compounds whose ions are held together

electrostatically, the way sodium and chloride ions are in table salt —

and heating them up until they melted. This gave you a clear, hot liquid

that was about the consistency of water. Then you stirred in a salt

such as uranium tetrafluoride, which produced a lovely green tint, and

let the uranium undergo nuclear fission right there in the melt — a

reaction that would not only keep the salts nice and hot, but could

power a city or two besides.

A compound combining various salts (including uranium tetrafluoride),

shown as a solid at left and a liquid at right, is one example of a

compound that could be used in a molten salt reactor. CREDIT: ORNL / US DOE

Weird or not, molten salt technology was viable; the Oak Ridge

National Laboratory in Tennessee had successfully operated a

demonstration reactor back in the 1960s. And more to the point, the

beer-and-nuclear group realized, the liquid nature of the fuel meant

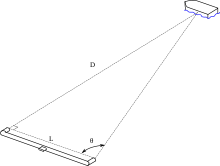

that they could potentially build molten salt reactors that were cheap

enough for poor countries to buy; compact enough to deliver on a flatbed

truck; green enough to burn our existing stockpiles of nuclear waste

instead of generating more — and safe enough to put in cities and

factories. That’s because Fukushima-style meltdowns would be physically

impossible in a mix that’s molten already. Better still, these reactors

would be proliferation resistant, because their hot, liquid contents

would be very hard for rogue states or terrorists to hijack for making

nuclear weapons.

Crazy? “We had to try,” says Schönfeldt. So in 2014 he and his

colleagues launched Seaborg Technologies, a Copenhagen-based start-up

named in honor of the late Glenn Seaborg, a Manhattan Project veteran

who helped pioneer the peaceful uses of nuclear energy. With Schönfeldt

as chief executive officer, they set about turning their vision into an

ultracompact molten salt reactor that could serve the developed and

developing world alike.

They weren’t alone: Efforts to revive older nuclear designs had been

bubbling up elsewhere, and dozens of start-ups were trying to

commercialize them. At least half a dozen of these start-ups were

focused on molten salt reactors specifically, since they were arguably

the cleanest and safest of the lot. Research funding agencies around the

world had begun to pour millions of dollars per year into developing

molten salt technology. Even power companies were starting to make

investments. A prime example was the Southern Company, a utility

conglomerate headquartered in Atlanta, Georgia. In 2016, the company

started an ambitious molten salt development program in collaboration

with Oak Ridge and TerraPower, a nuclear research company in Bellevue,

Washington.

“In the next 20 to 30 years, the energy environment is going to

undergo a major transformation to a low- to no-carbon future,” says Nick

Irvin, Southern’s director of research and development. There will be

far fewer centralized power plants and many more distributed sources

like wind and solar, he says. Molten salt reactors fit ideally into this

future, he adds, because of both their inherent safety and their

ability to consume spent nuclear fuel from traditional nuclear reactors.

A molten salt reactor differs from a conventional nuclear reactor in a number of ways, starting with the fact that it uses nuclear fuel that’s liquid instead of solid. This has profound implications for safety. For example, meltdowns would be a non-issue: The fuel is already molten. And if temperatures in the fuel mix get too high for any reason, a plug of frozen salt below the reactor will melt and allow everything to drain into an underground holding tank for safekeeping. Long-lived nuclear waste would also be a non-issue: A chemical system would continuously extract reaction-slowing fission products from the molten fuel, which would allow plutonium and all the other long-half-life fissile isotopes to be completely consumed.

Getting there won’t be easy — not least because hot molten salts can

be just as corrosive as they sound. Every component that comes into

contact with the brew will have to be made of a specialized, high-tech

alloy that can resist them. “You dissolve the uranium in the salt,” says

Nathan Myhrvold, a venture capitalist who serves as vice chairman of

TerraPower’s board. “What you have to make sure is that you don’t

dissolve your reactor in it!”

Certainly no one expects to have a prototype power plant operating

before the mid-2020s, or to field full-scale commercial reactors until

the 2030s. Still, says Schönfeldt, “it will be exceedingly hard, but

that is significantly better than impossible.”

Rethinking nuclear

To Rachel Slaybaugh, today’s surge of entrepreneurial focus on

nuclear technology is astonishing. “It feels like we’re at the beginning

of a movement, with an explosion of ideas,” says Slaybaugh, a nuclear

engineer at the University of California, Berkeley, who has written

about green energy options in the Annual Review of Environment and Resources.

But, as nuclear engineer Leslie Dewan points out, this explosion is

also something of a throwback to the post–World War II era. “Nuclear

power technology was incredibly new,” says Dewan, who in 2011 cofounded

one of the first of the molten salt start-ups, Transatomic Power in

Cambridge, Massachusetts. It was a time of blue-sky thinking, she says,

“where they were trying many, many different types of technologies,

running experiments, building and prototyping.”

Atoms may be the smallest unit of matter, but they are made up of even smaller subunits, including protons, neutrons and electrons. The energy that binds the protons and neutrons together in the atom’s nucleus is enormous, and can be released and put to use during a nuclear reaction.

The basics had been known since 1938, when German scientists

discovered that firing a neutron into certain heavy atomic nuclei would

cause the nucleus to fission, or split into two pieces. The rupture of

such a “fissile” nucleus would release an enormous amount of energy,

plus at least two new neutrons. These neutrons could then slam into

nearby nuclei and trigger the release of more energy, plus 4 neutrons —

then 8, 16, 32 and so on in an exponentially growing chain reaction.
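
The doubling described above can be sketched in a few lines of Python. This is an idealized illustration only: it assumes that every fission frees exactly two neutrons and that every neutron goes on to split another nucleus, which a real reactor or bomb does not achieve. The function name `neutrons_per_generation` is made up for this example.

```python
def neutrons_per_generation(generations, neutrons_per_fission=2):
    """Return the idealized neutron count after each generation of fissions.

    Assumes every neutron causes one fission and every fission frees
    `neutrons_per_fission` new neutrons (an illustrative simplification).
    """
    return [neutrons_per_fission ** g for g in range(1, generations + 1)]


counts = neutrons_per_generation(6)
print(counts)  # [2, 4, 8, 16, 32, 64]
```

Each generation multiplies the count by the same factor, which is what makes the growth exponential rather than steady.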

This runaway energy release could produce a very powerful bomb, as

the wartime Manhattan Project demonstrated. But taming it, and turning

the chain reaction into a safe, steady-state heat source for power

production, was a lot trickier.

Fission is at the heart of nuclear energy production. Fission happens when a free neutron slams into an unstable atomic nucleus and shatters it into two or more “fission products”: lighter elements such as krypton and barium that cluster around the middle of the periodic table. Thanks to Einstein’s famous equation E = mc², this process also transforms a tiny bit of the original nucleus’s mass into an immense amount of energy.

That’s where all the postwar experimentation

came in. There were reactors fueled by uranium, which comes out of the

ground containing virtually the only fissile isotope found in nature,

uranium-235. There were reactors known as breeders, which could

accomplish the magical-sounding feat of producing more fuel than they

consumed. (Actually, they relied on the fact that uranium is “fertile,”

meaning that its most abundant isotope, uranium-238, almost never

undergoes fission by itself — but it can absorb a neutron and turn into

highly fissile plutonium-239.) And there were reactors fueled with

thorium, a fertile element that sits two slots to the left of uranium in

the periodic table, and is about three times more abundant in the

Earth’s crust. A neutron will turn its dominant isotope, thorium-232,

into fissile uranium-233.

An element, such as carbon or uranium, is defined by the number of protons in its nucleus: Carbon has 6, uranium 92. But atoms may vary in the number of neutrons, producing slightly different flavors, or isotopes, of an element. For example, the most common form of carbon is carbon-12, which has 6 protons and 6 neutrons, and is stable. But other isotopes include carbon-13, which is also stable, and carbon-14, which is unstable and radioactive. Uranium’s most common form is uranium-238, which has 92 protons and 146 neutrons, and decays only after billions of years. Other, less stable isotopes include uranium-235 (92 protons and 143 neutrons) and uranium-233 (92 protons and 141 neutrons), both of which can undergo nuclear fission if they are struck by a neutron.
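
The neutron counts quoted above follow from simple subtraction: the number after an isotope's name is its total of protons plus neutrons. A minimal Python sketch of that bookkeeping, using only the proton counts given in the text (the `PROTONS` table and the `neutron_count` helper are hypothetical names for this example):

```python
# Proton counts as stated in the text: carbon has 6, uranium has 92.
PROTONS = {"carbon": 6, "uranium": 92}


def neutron_count(element, mass_number):
    """Neutrons = mass number (protons + neutrons) minus protons."""
    return mass_number - PROTONS[element]


for element, mass_number in [
    ("carbon", 12),
    ("carbon", 14),
    ("uranium", 238),
    ("uranium", 235),
    ("uranium", 233),
]:
    print(f"{element}-{mass_number}: {neutron_count(element, mass_number)} neutrons")
```

For uranium-238, for instance, this gives 238 minus 92, or 146 neutrons, matching the figure above.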

At the same time, designers were trying out different types of

coolant: the fluid that circulates through the reactor core, absorbs the

heat being produced by the fission reactions, and carries it out to

where the heat can do something useful like running a standard steam

turbine to generate electricity. Some opted for ordinary water: an

abundant, familiar substance that carries a lot of heat per unit of

volume. But others went with high-temperature substances such as liquid

sodium metal, helium gas or even molten lead. “Coolants” like these

could keep a reactor running at 700 degrees Celsius or more, which would

make it substantially more efficient at generating power.

By the 1960s, researchers had tested reactors featuring combinations

of all these options and more. But the approach that won out for

commercial power production — and that is still used in virtually all of

the 454 nuclear plants operating around the world — was the

water-cooled uranium reactor. This wasn’t necessarily the best nuclear

design, but it was one of the first: Water-cooled reactors were

originally developed in the 1940s to power submarines. So in the 1950s,

when the Eisenhower administration launched a high-profile push to

harness nuclear energy for peaceful purposes, the technology was adapted

for civilian use and scaled up enormously. Other designs were left for

later, if ever. By the 1960s and 1970s, second-generation water-cooled

reactors were being deployed globally.

Even then, however, there were many in the field who were uneasy with that choice.

Among the most notable was nuclear physicist Alvin Weinberg, a

Manhattan Project veteran and director of the Oak Ridge National

Laboratory. Weinberg had participated in the development of water-cooled

reactors, and knew that they had some key vulnerabilities — including

water’s low boiling point, just 100°C at normal atmospheric pressure.

Commercial nuclear plants could get that up to 325°C or so by

pressurizing the reactor vessel. But as Weinberg and others knew very

well, that was not enough to rule out the nightmare of nightmares: a

meltdown. All it would take was some freak accident that interrupted the

flow of water through the core and trapped all the heat inside. You

could shut down power production by dropping rods of boron or cadmium

into the reactor core to soak up neutrons and stop the chain reaction.

But nothing could stop the heat produced by the decay of fission

products — the melange of short-lived but fiercely radioactive elements

that inevitably build up inside an active reactor as nuclei split in

two.

When the nuclei of certain “fissile” isotopes such as uranium-235 are struck by a neutron, they don’t just split apart. They also release at least two additional neutrons, which can then go on to split two more nuclei. This produces at least 4 additional neutrons – then 8, 16, 32, 64 and so on. The result is an exponentially growing chain reaction, which can produce a nuclear explosion if it’s allowed to run away — or useful nuclear power if it’s contained and controlled inside a reactor.

Unless the operators managed to restore the coolant flow within a few

hours, that trapped fission-product heat would send temperatures

soaring past the 325°C mark, turn the water into high-pressure steam,

and reduce the solid fuel to a radioactive puddle melting its way

through the reactor vessel floor. Soon after, the vessel would likely

rupture and send a pressurized plume of fission products into the

atmosphere. Included would be radioactive strontium-90, iodine-131 and

cesium-137 — extremely dangerous isotopes that can easily enter the

food chain and end up in the body.

To forestall such a catastrophe, designers had equipped the

commercial water-cooled reactors with all manner of redundancies and

emergency backup cooling systems. But to Weinberg’s mind, that was a bit

like installing fire alarms and sprinkler systems in a house built of

papier-mâché. What you really wanted was the nuclear equivalent of a

house built of fireproof brick — a reactor that based its safety on the

laws of physics, with no need for operators or backup systems to do

anything.

Weinberg and his team at Oak Ridge believed that they could come very

close to that ideal with the molten salt reactor, which they had been

working on since 1954. Such a reactor couldn’t possibly suffer a

meltdown, even in an accident: The molten salt core was liquid already.

The fission-product heat would simply cause the salt mix to expand and

move the fuel nuclei farther apart, which would dampen the chain

reaction.

Pressure would be a non-issue as well: The salts would have a boiling

point far higher than any temperature the fission products could

produce. (One common choice for nuclear applications is FLiBe, a mix of

lithium fluoride and beryllium fluoride that doesn’t boil until

1,400°C, about the temperature of a butane blowtorch.) So the reactor

vessel would never be in danger of rupture from molten salt “steam.” In

fact, the reactor would barely shift from its normal operating pressure

of one atmosphere.

Better still, the molten core would trap fission products far more

securely than in solid-fueled reactors. Cesium, iodine and all the rest

would chemically bind with the salts the instant they were created. And

since the salts could not boil away in even the worst accident, these

fission products would be held in place instead of being free to drift

off and take up radioactive residence in people’s bones and thyroid

glands.

And just in case, the liquid nature of the fuel allowed for a simple

fail-safe known as a freeze plug. This involved connecting the bottom of

the reactor vessel to a drain pipe, which would be plugged with a lump

of solid fuel salt kept frozen by a jet of cool gas. If the power failed

and the gas flow stopped, or if the reactor got too hot, the plug would

melt and gravity would drain the contents into an underground holding

tank. The mix would then cool, solidify and remain in the tank until the

crisis was over — salts, fuel, fission products and all.

Molten salt success, then a detour

Weinberg and his team successfully demonstrated all this in the

Molten Salt Reactor Experiment, an 8-megawatt prototype that ran at Oak

Ridge from 1965 to 1969. The corrosiveness of the salts was a potential

threat to the long-term integrity of pipes, pumps and other parts, but

the researchers had identified a number of corrosion-resistant materials

they thought might solve the problem. By the early 1970s, the group was

well into development of an even more ambitious prototype that would

allow them to test those materials as well as to demonstrate the use of

thorium fuel salts instead of uranium.

Hundreds of nuclear isotopes have been observed in the laboratory, but only three of them seem to be practical sources of fission energy. Uranium-235, which makes up 0.7 percent of natural uranium, can sustain a chain reaction all by itself; it is the primary energy source for nuclear power reactors operating in the world today. Uranium-238, which makes up virtually all the other atoms in mined uranium, is “fertile”: It can’t sustain a chain reaction by itself, but when it’s hit by a neutron it can transform into the highly fissile isotope plutonium-239. Likewise with thorium-232: Slamming it with a neutron can turn it into the fissile isotope uranium-233.

The Oak Ridge physicists were also eager to try out a new system for

dealing with the waste fission products — one that again took advantage

of the fuel’s liquid nature, but had been tested only in the laboratory.

The idea was to siphon off a little of the reactor’s fuel mix each day

and run it through a nearby purification system, which would chemically

extract the fission products in much the same way that the kidneys

remove toxins from the bloodstream. The cleaned-up fuel would then be

circulated back into the reactor, which could continue running at full

power the whole time. This process would not only keep the fission

products from building up until they snuffed out the chain reaction — a

problem for any reactor, since these elements tend to absorb a lot of

neutrons — but it would also enhance the safety of molten salt still

further. Not even the worst accident can contaminate the countryside

with fission products that aren’t there.

But none of it was to be. Officials in the US nuclear program

terminated the Oak Ridge molten salt program in January 1973 — and fired

Weinberg.

The nuclear engineering community was just too heavily committed to

solid fuels, both financially and intellectually. Practitioners already

had decades of experience with experimental and commercial solid-fueled

reactors, versus that one molten salt experiment at Oak Ridge. A huge

infrastructure existed for processing and producing solid fuel. And, not

incidentally, the US research program was committed to a grand vision

for the global nuclear future that would expand this infrastructure

enormously — and that, viewed with 20-20 hindsight, would lead the

nuclear industry into a trap.

Key to that vision was a different way of dealing with the buildup of

fission products. Since Oak Ridge–style continuous purification wasn’t

an option in a solid-fuel reactor, water-cooled or otherwise, standard

procedure called for burning fuel until the fission products rendered it

useless, or spent. In water-cooled power reactors this took roughly

three years, at which point the spent fuel would be switched out for

fresh, then stored at the bottom of a pool of water for a few years

while the worst of its fission-product radioactivity decayed.

Radioactivity arises when an unstable atomic nucleus sheds its excess energy by emitting radiation. This allows the nucleus to “decay,” or settle into a more stable form. The type of radiation emitted depends on the isotope involved, but is primarily an alpha particle, a beta particle or a gamma ray. The rate of decay is set by the isotope’s half-life: the time it takes for half the original sample of nuclei to decay.

From there, the plan was to recycle it. Counting the remaining

uranium, plus the plutonium that had formed from neutrons hitting

uranium-238 nuclei, the fuel still contained most of the potential

fission energy it had started with. So there was to be a new, global

network of reprocessing plants that would chemically extract the fission

products for disposal, and turn the uranium and plutonium into fresh

fuel. That network, in turn, would ultimately support a new generation

of sodium-cooled breeder reactors that would produce plutonium by the

ton — thus solving what was then thought to be an acute shortage of the

uranium needed to power the all-nuclear global economy of the future.

But that plan started to look considerably less visionary in May

1974, when India tested a nuclear bomb made with plutonium extracted

from the spent fuel of a conventional reactor. Governments around the

world suddenly realized that global reprocessing would be an invitation

to rampant nuclear weapons proliferation: In plants handling large

quantities of pure plutonium, it would be entirely too easy for bad

actors to secretly divert a few kilograms at a time for bombs. So in

April 1977, US President Jimmy Carter banned commercial reprocessing in

the United States, and much of the rest of the world followed.

The vision for nuclear energy 50 years ago included a “closed loop” for nuclear fuels. In this scenario, most fuels would be reprocessed and cycled back into reactors to make more energy. The only wastes would be fission products, which are radioactive for a few hundred years at most.

That helped cement the already declining interest in breeder

reactors, which made no sense without reprocessing plants to extract the

new-made plutonium, and left the world with a nasty disposal problem.

Instead of storing spent fuel underwater for a few years, engineers were

now supposed to isolate it for something like 240,000 years, thanks to

the 24,100-year half-life of plutonium-239. (The rule of thumb for

safety is to wait 10 half-lives, which reduces radiation levels more

than a thousand-fold.) No one has yet figured out how to guarantee

isolation for that span of time. Today, there are nearly 300,000 tons of

spent nuclear fuel still piling up at reactors around the world, part

of an as yet unresolved long-term storage problem.
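
The arithmetic behind the 10 half-life rule of thumb can be checked with a short Python sketch. It assumes simple exponential decay of a single isotope and ignores decay products, so it illustrates the rule rather than modeling real spent fuel; the function and constant names are made up for this example.

```python
def fraction_remaining(elapsed_years, half_life_years):
    """Fraction of the original nuclei left after `elapsed_years`,
    assuming simple exponential decay of a single isotope."""
    return 0.5 ** (elapsed_years / half_life_years)


PU239_HALF_LIFE_YEARS = 24_100  # half-life of plutonium-239, as given in the text

wait_years = 10 * PU239_HALF_LIFE_YEARS
reduction_factor = 1 / fraction_remaining(wait_years, PU239_HALF_LIFE_YEARS)

print(wait_years)               # 241000
print(round(reduction_factor))  # 1024
```

Ten halvings in a row cut the amount of material by a factor of 2 to the 10th power, or 1,024, which is where "more than a thousand-fold" comes from.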

In retrospect, those 1970s-era nuclear planners would have done well

to put serious money back into Oak Ridge’s molten salt program: As

developers there tried to point out at the time, the continuous

purification approach could have solved both the spent-fuel and

proliferation problems at a stroke.

The proliferation risk would be minimal because — unlike the kind of

reprocessing plants envisioned for the breeder program — the Oak Ridge

system would never isolate uranium-235, plutonium-239 or any other

fissile material. Instead, these isotopes would stay in the cleaned-up

fuel salts at concentrations far too low to make a bomb. They would be

circulated back into the reactor, where they could continue fissioning

until they were completely consumed.

The reactor’s purification system would likewise offer a solution to

the spent fuel issue. It would strip out the reaction-quenching fission

products from the fuel almost as quickly as they formed, which would

potentially allow the reactor to run for decades at a stretch with only

an occasional injection of fresh fuel to replace what it burned. Some of

that fuel could even come from today’s 300,000-ton backlog of spent

solid fuel.

Admittedly, it would take centuries for even a large network of

molten salt reactors to work through the full backlog. But burning it

would eliminate the need to safely store it for thousands of centuries.

By consuming the long-lived isotopes like plutonium-239, molten salt

reactors could reduce the nuclear waste stream to a comparatively small

volume of fission products having half-lives of 30 years or less. By the

10 half-life rule, this waste would then need to be isolated for just

300 years. That’s not trivial, says Schönfeldt, “but it’s something that

can be handled” — say, by encasing the waste in concrete and steel, or

putting it down a deep borehole.

Unfortunately, the late 1970s was not a good time for reviving any

kind of nuclear program, molten salt or otherwise. Public mistrust of

nuclear energy was escalating rapidly, thanks to rising concerns over

safety, waste and weapons proliferation. The power companies’ patience

was wearing thin, thanks to the skyrocketing, multibillion-dollar cost

of standard water-cooled reactors. And then in March 1979 came a partial

meltdown at Three Mile Island, a conventional nuclear plant near

Harrisburg, Pennsylvania. In April 1986, another catastrophe hit with

the fire and meltdown at the Chernobyl plant in Ukraine.

The resulting backlash against nuclear power was so strong that new

plant construction effectively ceased — which is why most of the nuclear

reactors operating today are at least three to four decades old. And

nuclear power research stagnated, as well, with most of the money and

effort going into ensuring the safety of those aging plants.

“The nuclear industry was not in an innovation frame of mind for 30 years,” says TerraPower’s Myhrvold.

In the 1970s, the threat that reprocessed fuels could be secretly diverted to make nuclear weapons led the United States and many other nations to reject the closed fuel cycle. Instead, they have opted to dispose of spent nuclear fuel after just one pass through the reactor. But because the fuel now contains long-half-life isotopes like plutonium-239, the wastes must be managed and stored for more than 200,000 years before they will be considered safe. No one knows how to guarantee that spent fuel will remain undisturbed for that span of time — one of the many safety concerns that have dogged nuclear energy programs.

Old tech revival

This defensive crouch lasted well into the new century, while the

molten salt concept fell further and further into obscurity. That began

to change only in 2002, when Kirk Sorensen came across a book describing

what the molten salt program had accomplished at Oak Ridge.

“Why didn’t we do it this way in the first place?” he remembers wondering.

Sorensen, then a NASA engineer in Huntsville, Alabama, was so

intrigued that he tracked down the old Oak Ridge technical reports,

which were moldering in file cabinets, and talked NASA into paying to

have them scanned. The files filled up five compact discs, which he

copied and sent around to leaders in the US energy industry. “I received

no response,” he says. So in 2006, in hopes of reaching somebody who

would find the concept as compelling as he did, he uploaded the

documents to energyfromthorium.com, a website he’d created with his own money.

That strategy worked — slowly. “I would give Kirk Sorensen personal

credit,” says Lou Qualls, a nuclear engineer at Oak Ridge who became the

Department of Energy’s first national technical director for molten

salt reactors in 2017. “So Kirk is one of those voices out in the

wilderness, and for a long time people would go, ‘We don’t even know

what you’re talking about.’” But once the old reports became available

online, “people started to look at the technology, to understand it, see

that it had a history,” Qualls says. “It started getting more

credibility.”

It helped that rising concerns about climate change — and the

ever-growing backlog of spent nuclear fuel — had put many nuclear

engineers in the mood for a radical rethink of their field. They could

see that incremental improvements in standard reactor technology weren’t

getting anywhere. Manufacturers had been hyping their “Generation III”

designs for water-cooled reactors with enhanced safety features, but

these were proving to be just as slow and expensive to build as their

second-generation predecessors from the 1970s.

So instead, there was a move to revive the old reactor concepts and

update them into a whole new series of Generation IV reactors: devices

that would be considerably smaller and cheaper than their

1,000-megawatt, multibillion-dollar predecessors, with safety and

proliferation resistance built in from the start. Among the most

prominent symbols of this movement was TerraPower. Launched in 2008 with

major funding from Microsoft cofounder Bill Gates, the company

immediately started development of a liquid sodium-cooled device called

the Traveling Wave Reactor.

The molten salt idea was definitely on the Gen IV list. Schönfeldt

remembers getting excited about it as early as 2008. At MIT, Dewan and

her fellow graduate student Mark Massie first encountered the idea in

2010, and were intrigued by the reactors’ inherent safety. “We both

became nuclear engineers because we’re environmentalists,” says Dewan.

Besides, her classmate had grown up watching his native West Virginia

being devastated by mountaintop removal mining. “So Mark wanted to

design a nuclear reactor that’s good enough to shut down the coal

industry.”

Then in March 2011, the dangers of the nuclear status quo were

underscored yet again. A tsunami knocked out all the cooling systems and

backups at Japan’s Fukushima Daiichi plant and sent its 1970s-vintage

power reactors into the worst meltdown since Chernobyl. That April,

Sorensen launched the first of the molten salt start-up companies,

Huntsville-based Flibe Energy. His goal ever since has been to develop

and commercialize a Liquid-Fluoride Thorium Reactor — pretty much the

same device that was envisioned at Oak Ridge back in the 1960s.

Dewan and Massie founded Transatomic the same month. And other molten

salt start-ups soon followed, each building on the basic concept with a

host of different design strategies and fuel choices. When Seaborg

launched in 2014, for example, Schönfeldt and his colleagues started

designing a molten salt Compact Used fuel BurnEr (called CUBE) that

would not only run on a combination of spent nuclear fuel and thorium,

but also be really, really small by reactor standards. “The fact that

you can transport it to the site on the back of a truck is a major

upside,” says Schönfeldt, “especially in remote regions.”

TerraPower, meanwhile, decided in 2015 to develop a much larger

molten salt device, the Molten Chloride Fast Reactor, as a complement to

the company’s ongoing work on its sodium-cooled Traveling Wave Reactor.

The new system retains the latter’s ability to burn the widest possible

range of fuels — including not just spent nuclear fuel, but also the

ordinarily non-fissile uranium-238. (Both designs take advantage of the

fact that a uranium-238 nucleus hit by a neutron has a tiny, but

non-zero, probability of fissioning.) But unlike in the Traveling Wave

Reactor, explains the company’s chief technical officer, John Gilleland,

the molten salts’ 700°C-plus operating temperature will allow it to

generate the kind of heat needed for industrial processes such as

petroleum cracking and plastics making. Industrial process heat

currently accounts for about one-third of total energy usage within the

US manufacturing sector.

This industrial heat is now produced almost entirely by burning coal,

oil or natural gas, says Gilleland. So if you could replace all that

with carbon-free nuclear heat, he says, “you could hit the carbon

problem in a very striking way.”

Of course, none of this is going to happen tomorrow. The various

molten salt companies are still refining their designs by gathering lab

data on liquid fuel chemistry, and running massive computer simulations

of how the melt behaves when it’s simultaneously flowing and fissioning.

The first prototypes won’t be up and running until the mid-2020s at the

earliest.

And not all the companies will be there. Transatomic became the

molten salt movement’s first casualty in September 2018, when Dewan shut

it down. Her company had simply fallen too far behind in its design

work relative to competitors, she explains. So even though investors

were willing to keep going, she says, “it wouldn’t feel right for us to

continue taking their money when I didn’t see a viable path forward for

the business side.”

Still, most of the molten salt pioneers say they see reason for

cautious optimism. Since at least 2015, the US Department of Energy has

been ramping up its support for advanced reactor research in general,

and molten salt reactors in particular.

Meanwhile, notes Slaybaugh, licensing agencies such as the US Nuclear

Regulatory Commission are gearing up with the computer simulations and

evaluation tools they will need when the advanced-reactor companies

start seeking approval for constructing their prototypes. “People are

looking at these technologies more carefully and more seriously than

they have in a long time,” she says.

Perhaps the biggest and most unpredictable barrier is the public’s

ingrained fear about almost anything labeled “nuclear.” What happens if

people lump in molten salt reactors with older nuclear technologies, and

reject them out of hand?

Based on their experience to date, most proponents are cautiously

optimistic on this front as well. In Copenhagen, Schönfeldt and his

colleagues kept hammering on the “why” of nuclear power, which

was to fight climate change, poverty and pollution. “And we kept telling

people the three big advantages of molten salt reactors — no meltdown,

no proliferation, burning up nuclear waste,” he says. And slowly, people

were willing to listen.

“We’ve moved a long way,” says Schönfeldt. “When we started in 2014,

commercial nuclear power was illegal in Denmark. In 2017, we got public

funding.”