A placebo (/pləˈsiːboʊ/ plə-SEE-boh) is an inert substance or treatment which is designed to have no therapeutic value. Common placebos include inert tablets (like sugar pills), inert injections (like saline), sham surgery, and other procedures.

In general, placebos can affect how patients perceive their condition and encourage the body's chemical processes for relieving pain and a few other symptoms, but have no impact on the disease itself. Improvements that patients experience after being treated with a placebo can also be due to unrelated factors, such as regression to the mean (the statistical tendency for unusually severe symptoms to be followed by milder ones) or natural recovery from the illness.

The use of placebos as treatment in clinical medicine raises ethical concerns, as it introduces dishonesty into the doctor–patient relationship.

In drug testing and medical research, a placebo can be made to resemble an active medication or therapy so that it functions as a control; this is to prevent the recipient or others from knowing (with their consent) whether a treatment is active or inactive, as expectations about efficacy can influence results. In a clinical trial any change in the placebo arm is known as the placebo response, and the difference between this and the result of no treatment is the placebo effect.

The idea of a placebo effect (a therapeutic outcome derived from an inert treatment) was discussed in 18th-century psychology but became more prominent in the 20th century. An influential 1955 study entitled The Powerful Placebo firmly established the idea that placebo effects were clinically important, and were a result of the brain's role in physical health.

A 1997 reassessment found no evidence of any placebo effect in the source data, as the study had not accounted for regression to the mean.

Definitions

The word "placebo", Latin for "I will please", dates back to a Latin translation of the Bible by St Jerome.

The American Society of Pain Management Nursing defines a placebo as "any sham medication or procedure designed to be void of any known therapeutic value".

In a clinical trial, a placebo response is the measured response of subjects to a placebo; the placebo effect is the difference between that response and no treatment. It is also part of the recorded response to any active medical intervention.
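The distinction between placebo response and placebo effect comes down to simple arithmetic, which a minimal Python sketch may make concrete. The scores below are invented purely for illustration (changes in a 0 to 10 pain rating, where negative means less pain), and the function names are hypothetical; nothing here is taken from a real trial.

```python
from statistics import mean

# Invented example data: change in pain score (0-10 scale) per subject.
# Negative values mean the subject reported less pain at follow-up.
placebo_arm_changes = [-3.0, -2.0, -1.0, -2.0]
no_treatment_arm_changes = [-1.0, -2.0, 0.0, -1.0]


def placebo_response(changes):
    """Mean change observed in the placebo arm, from all causes combined."""
    return mean(changes)


def placebo_effect(placebo_changes, untreated_changes):
    """Placebo response minus the change seen with no treatment at all."""
    return mean(placebo_changes) - mean(untreated_changes)


response = placebo_response(placebo_arm_changes)
effect = placebo_effect(placebo_arm_changes, no_treatment_arm_changes)
```

In this made-up case the placebo arm improves by two points on average, but one point of that improvement also appears in the untreated arm, so only the remaining one point would count as placebo effect.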

Any measurable placebo effect is termed either objective (e.g. lowered blood pressure) or subjective (e.g. a lowered perception of pain).

Effects

Placebos can improve patient-reported outcomes such as pain and nausea. This effect is unpredictable and hard to measure, even in the best-conducted trials. For example, if used to treat insomnia, placebos can cause patients to perceive that they are sleeping better, but do not improve objective measurements of sleep onset latency. A 2001 Cochrane Collaboration meta-analysis of the placebo effect looked at trials in 40 different medical conditions, and concluded the only one where it had been shown to have a significant effect was for pain.

By contrast, placebos do not appear to affect the actual diseases, or outcomes that are not dependent on a patient's perception. One exception to the latter is Parkinson's disease, where recent research has linked placebo interventions to improved motor functions.

Measuring the extent of the placebo effect is difficult due to confounding factors. For example, a patient may feel better after taking a placebo due to regression to the mean (symptoms measured at an unusually severe point tend to be milder when measured again) or natural recovery. It is harder still to tell the difference between the placebo effect and the effects of response bias, observer bias and other flaws in trial methodology, as a trial comparing placebo treatment and no treatment will not be a blinded experiment. In their 2010 meta-analysis of the placebo effect, Asbjørn Hróbjartsson and Peter C. Gøtzsche argue that "even if there were no true effect of placebo, one would expect to record differences between placebo and no-treatment groups due to bias associated with lack of blinding."
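Regression to the mean can be illustrated with a small simulation. The following Python sketch is a toy model under stated assumptions, not a description of any real study: each simulated patient has a stable underlying severity plus random measurement-to-measurement fluctuation, only patients whose first measurement is at or above a threshold are enrolled, and nobody receives any treatment. All names and parameter values are hypothetical.

```python
import random
from statistics import mean


def simulate_untreated_cohort(n_patients=10_000, threshold=7.0, seed=0):
    """Return (mean baseline, mean follow-up) for enrolled, untreated patients."""
    rng = random.Random(seed)
    baseline, follow_up = [], []
    for _ in range(n_patients):
        # Stable underlying severity on an arbitrary 0-10 style scale.
        true_severity = rng.gauss(5.0, 1.0)
        # Two noisy measurements of the same unchanged severity.
        first = true_severity + rng.gauss(0.0, 1.5)
        second = true_severity + rng.gauss(0.0, 1.5)
        # Enrol only patients who look severe at the first measurement.
        if first >= threshold:
            baseline.append(first)
            follow_up.append(second)
    return mean(baseline), mean(follow_up)


baseline_mean, follow_up_mean = simulate_untreated_cohort()
print(f"baseline {baseline_mean:.2f}, follow-up {follow_up_mean:.2f}")
```

Because enrolment selects patients on an unusually high reading, the average follow-up score comes out lower than the average baseline even though no treatment was given. An uncontrolled before-and-after comparison would wrongly attribute that drop to whatever the patients received in between.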

Hróbjartsson and Gøtzsche concluded that their study "did not find that placebo interventions have important clinical effects in general." Jeremy Howick has argued that combining so many varied studies to produce a single average might obscure that "some placebos for some things could be quite effective." To demonstrate this, he participated in a systematic review comparing active treatments and placebos using a similar method, which generated a clearly misleading conclusion that there is "no difference between treatment and placebo effects".

Factors influencing the power of the placebo effect

Louis Lasagna helped make placebo-controlled trials a standard practice in the U.S. He also believed "warmth, sympathy, and understanding" had therapeutic benefits.

A review published in JAMA Psychiatry found that, in trials of antipsychotic medications, the change in response to receiving a placebo had increased significantly between 1960 and 2013. The review's authors identified several factors that could be responsible for this change, including inflation of baseline scores and enrollment of fewer severely ill patients. Another analysis published in Pain in 2015 found that placebo responses had increased considerably in neuropathic pain clinical trials conducted in the United States from 1990 to 2013. The researchers suggested that this may be because such trials have "increased in study size and length" during this time period.

Some studies have investigated the use of placebos where the patient is fully aware that the treatment is inert, known as an open-label placebo. A May 2017 meta-analysis found some evidence that open-label placebos have positive effects in comparison to no treatment, but said the result should be treated with "caution" and that further trials were needed.

Symptoms and conditions

A 2010 Cochrane Collaboration review suggests that placebo effects are apparent only in subjective, continuous measures, and in the treatment of pain and related conditions.

Pain

Placebos are believed to be capable of altering a person's perception of pain. "A person might reinterpret a sharp pain as uncomfortable tingling."

One way in which the magnitude of placebo analgesia can be measured is by conducting "open/hidden" studies, in which some patients receive an analgesic and are informed that they will be receiving it (open), while others are administered the same drug without their knowledge (hidden). Such studies have found that analgesics are considerably more effective when the patient knows they are receiving them.

Depression

In 2008, a controversial meta-analysis led by psychologist Irving Kirsch, analyzing data from the FDA, concluded that 82% of the response to antidepressants was accounted for by placebos. However, there are serious doubts about the methods used and the interpretation of the results, especially the use of 0.5 as the cut-off point for the effect size. A complete reanalysis and recalculation based on the same FDA data discovered that the Kirsch study suffered from "important flaws in the calculations". The authors concluded that although a large percentage of the placebo response was due to expectancy, this was not true for the active drug. Besides confirming drug effectiveness, they found that the drug effect was not related to depression severity.

Another meta-analysis found that 79% of depressed patients receiving placebo remained well (for 12 weeks after an initial 6–8 weeks of successful therapy) compared to 93% of those receiving antidepressants. In the continuation phase, however, patients on placebo relapsed significantly more often than patients on antidepressants.

Negative effects

A phenomenon opposite to the placebo effect has also been observed. When an inactive substance or treatment is administered to a recipient who has an expectation of it having a negative impact, this intervention is known as a nocebo (Latin nocebo = "I shall harm"). A nocebo effect occurs when the recipient of an inert substance reports a negative effect or a worsening of symptoms, with the outcome resulting not from the substance itself, but from negative expectations about the treatment.

Another negative consequence is that placebos can cause side-effects associated with real treatment.

Withdrawal symptoms can also occur after placebo treatment. This was found, for example, after the discontinuation of the Women's Health Initiative study of hormone replacement therapy for menopause. Women had been on placebo for an average of 5.7 years. Moderate or severe withdrawal symptoms were reported by 4.8% of those on placebo compared to 21.3% of those on hormone replacement.

Ethics

In research trials

Knowingly giving a person a placebo when there is an effective treatment available is a bioethically complex issue. While placebo-controlled trials might provide information about the effectiveness of a treatment, they deny some patients what could be the best available (if unproven) treatment. Informed consent is usually required for a study to be considered ethical, including the disclosure that some test subjects will receive placebo treatments.

The ethics of placebo-controlled studies have been debated in the revision process of the Declaration of Helsinki. Of particular concern has been the difference between trials comparing inert placebos with experimental treatments, versus comparing the best available treatment with an experimental treatment; and differences between trials in the sponsor's developed countries versus the trial's targeted developing countries.

Some suggest that existing medical treatments should be used instead of placebos, to avoid having some patients not receive medicine during the trial.

In medical practice

The practice of doctors prescribing placebos that are disguised as real medication is controversial. A chief concern is that it is deceptive and could harm the doctor–patient relationship in the long run. While some say that blanket consent, or the general consent to unspecified treatment given by patients beforehand, is ethical, others argue that patients should always obtain specific information about the name of the drug they are receiving, its side effects, and other treatment options. This view is shared by some on the grounds of patient autonomy. There are also concerns that legitimate doctors and pharmacists could open themselves up to charges of fraud or malpractice by using a placebo. Critics have also argued that using placebos can delay the proper diagnosis and treatment of serious medical conditions.

About 25% of physicians in both the Danish and Israeli studies used placebos as a diagnostic tool to determine if a patient's symptoms were real, or if the patient was malingering. Both the critics and the defenders of the medical use of placebos agreed that this was unethical. The British Medical Journal editorial said, "That a patient gets pain relief from a placebo does not imply that the pain is not real or organic in origin ... the use of the placebo for 'diagnosis' of whether or not pain is real is misguided." A survey in the United States of more than 10,000 physicians found that while 24% of physicians would prescribe a treatment that is a placebo simply because the patient wanted treatment, 58% would not, and for the remaining 18%, it would depend on the circumstances.

Referring specifically to homeopathy, the House of Commons of the United Kingdom Science and Technology Committee has stated:

In the Committee's view, homeopathy is a placebo treatment and the Government should have a policy on prescribing placebos. The Government is reluctant to address the appropriateness and ethics of prescribing placebos to patients, which usually relies on some degree of patient deception. Prescribing of placebos is not consistent with informed patient choice—which the Government claims is very important—as it means patients do not have all the information needed to make choice meaningful. A further issue is that the placebo effect is unreliable and unpredictable.

In his 2008 book Bad Science, Ben Goldacre argues that instead of deceiving patients with placebos, doctors should use the placebo effect to enhance effective medicines. Edzard Ernst has argued similarly that "As a good doctor you should be able to transmit a placebo effect through the compassion you show your patients." In an opinion piece about homeopathy, Ernst argued that it is wrong to approve an ineffective treatment on the basis that it can make patients feel better through the placebo effect. His concerns are that it is deceitful and that the placebo effect is unreliable. Goldacre also concludes that the placebo effect does not justify the use of alternative medicine.

Mechanisms

Expectation plays a clear role. A placebo presented as a stimulant may trigger an effect on heart rhythm and blood pressure, but when presented as a depressant, it may have the opposite effect.

Psychology

In psychology, the two main hypotheses of the placebo effect are expectancy theory and classical conditioning.

In 1985, Irving Kirsch hypothesized that placebo effects are produced by the self-fulfilling effects of response expectancies, in which the belief that one will feel different leads a person to actually feel different. According to this theory, the belief that one has received an active treatment can produce the subjective changes thought to be produced by the real treatment. Placebos can act similarly through classical conditioning, wherein a placebo and an actual stimulus are used simultaneously until the placebo is associated with the effect from the actual stimulus. Both conditioning and expectations play a role in the placebo effect, and make different kinds of contribution. Conditioning has a longer-lasting effect, and can affect earlier stages of information processing.

Those who think a treatment will work display a stronger placebo effect than those who do not, as evidenced by a study of acupuncture.

Additionally, motivation may contribute to the placebo effect. The active goals of an individual change their somatic experience by altering the detection and interpretation of expectation-congruent symptoms, and by changing the behavioral strategies a person pursues. Motivation may link to the meaning through which people experience illness and treatment. Such meaning is derived from the culture in which they live and which informs them about the nature of illness and how it responds to treatment.

Placebo analgesia

Functional imaging of placebo analgesia links it to activation of, and increased functional correlation between, the anterior cingulate, prefrontal, orbitofrontal and insular cortices, nucleus accumbens, amygdala, the brainstem periaqueductal gray matter, and the spinal cord.

It has been known since 1978 that placebo analgesia depends on the release of endogenous opioids in the brain. Such analgesic placebo activation changes processing lower down in the brain by enhancing the descending inhibition through the periaqueductal gray on spinal nociceptive reflexes, while the expectations of anti-analgesic nocebos act in the opposite way to block this.

Functional imaging of placebo analgesia has been summarized as showing that the placebo response is "mediated by 'top-down' processes dependent on frontal cortical areas that generate and maintain cognitive expectancies. Dopaminergic reward pathways may underlie these expectancies". "Diseases lacking major 'top-down' or cortically based regulation may be less prone to placebo-related improvement".

Brain and body

In conditioning, a neutral stimulus such as saccharin is paired in a drink with an agent that produces an unconditioned response. For example, that agent might be cyclophosphamide, which causes immunosuppression. After learning this pairing, the taste of saccharin by itself is able to cause immunosuppression, as a new conditioned response via neural top-down control. Such conditioning has been found to affect not just basic physiological processes in the immune system but also others such as serum iron levels, oxidative DNA damage levels, and insulin secretion. Recent reviews have argued that the placebo effect is due to top-down control by the brain for immunity and pain. Pacheco-López and colleagues have raised the possibility of a "neocortical-sympathetic-immune axis providing neuroanatomical substrates that might explain the link between placebo/conditioned and placebo/expectation responses." There has also been research aiming to understand underlying neurobiological mechanisms of action in pain relief, immunosuppression, Parkinson's disease and depression.

Dopaminergic pathways have been implicated in the placebo response in pain and depression.

Confounding factors

Placebo-controlled studies, as well as studies of the placebo effect itself, often fail to adequately identify confounding factors. False impressions of placebo effects are caused by many factors including:

- Regression to the mean (natural recovery or fluctuation of symptoms)
- Additional treatments
- Response bias from subjects, including scaling bias, answers of politeness, experimental subordination, and conditioned answers
- Reporting bias from experimenters, including misjudgment and irrelevant response variables
- Non-inert ingredients of the placebo medication having an unintended physical effect

History

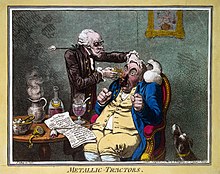

A quack treating a patient with Perkins Patent Tractors by James Gillray, 1801. John Haygarth used this remedy to illustrate the power of the placebo effect.

The word placebo was used in a medicinal context in the late 18th century to describe a "commonplace method or medicine" and in 1811 it was defined as "any medicine adapted more to please than to benefit the patient". Although this definition contained a derogatory implication, it did not necessarily imply that the remedy had no effect.

Placebos featured in medical use until well into the twentieth century. In 1955, Henry K. Beecher published an influential paper entitled The Powerful Placebo, which proposed the idea that placebo effects were clinically important. Subsequent re-analysis of his materials, however, found in them no evidence of any "placebo effect".

Placebo-controlled studies

The placebo effect makes it more difficult to evaluate new treatments. Clinical trials control for this effect by including a group of subjects that receives a sham treatment. The subjects in such trials are blinded as to whether they receive the treatment or a placebo. If a person is given a placebo under one name, and they respond, they will respond in the same way on a later occasion to that placebo under that name but not if under another.

Clinical trials are often double-blinded so that the researchers also do not know which test subjects are receiving the active or placebo treatment. The placebo effect in such clinical trials is weaker than in normal therapy since the subjects are not sure whether the treatment they are receiving is active.
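The idea behind double-blinding can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: it uses simple randomisation into two equal arms, whereas real trials typically rely on block or stratified randomisation run through validated systems. The function name, participant identifiers and kit-number range are all hypothetical.

```python
import random


def allocate_double_blind(participant_ids, seed=None):
    """Randomly assign participants to arms behind opaque kit numbers.

    Returns two mappings:
    - blinded_schedule: participant -> kit number (all that subjects and
      researchers see during the trial)
    - unblinding_key: kit number -> arm (held by an independent party
      until the trial is unblinded)
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    # Balanced arms; an odd participant out goes to placebo in this sketch.
    arms = ["active", "placebo"] * (len(ids) // 2) + ["placebo"] * (len(ids) % 2)
    rng.shuffle(arms)
    # Unique four-digit kit numbers that carry no information about the arm.
    kit_numbers = rng.sample(range(1000, 10000), len(ids))
    blinded_schedule = dict(zip(ids, kit_numbers))
    unblinding_key = dict(zip(kit_numbers, arms))
    return blinded_schedule, unblinding_key


schedule, key = allocate_double_blind(
    ["P01", "P02", "P03", "P04", "P05", "P06"], seed=42
)
```

Keeping the two mappings separate is the point of the design: anyone working only from the blinded schedule cannot tell which kits contain the active treatment.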