In chemical kinetics, the overall rate of a reaction is often approximately determined by the slowest step, known as the rate-determining step (RDS) or rate-limiting step. For a given reaction mechanism, the prediction of the corresponding rate equation (for comparison with the experimental rate law) is often simplified by using this approximation of the rate-determining step.

In principle, the time evolution of the reactant and product concentrations can be determined from the set of simultaneous rate equations for the individual steps of the mechanism, one for each step. However, the analytical solution of these differential equations is not always easy, and in some cases numerical integration may even be required. The hypothesis of a single rate-determining step can greatly simplify the mathematics. In the simplest case the initial step is the slowest, and the overall rate is just the rate of the first step.
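Here is a minimal sketch of that kind of numerical integration. The Python code below integrates a hypothetical two-step consecutive mechanism A → I → P with a hand-written classical fourth-order Runge-Kutta step. It assumes first-order, mass-action steps and uses made-up rate constants with k1 ≪ k2. It illustrates the simplest case above: once the short-lived intermediate I has built up, the rate of product formation, k2[I], is almost equal to the rate of the slow first step, k1[A].

```python
# Minimal sketch: integrate the hypothetical consecutive mechanism A -> I -> P
# (first-order steps, mass-action kinetics, made-up rate constants k1 and k2).


def derivatives(state, k1, k2):
    """Return (d[A]/dt, d[I]/dt, d[P]/dt) for A -(k1)-> I -(k2)-> P."""
    a, i, p = state
    r1 = k1 * a  # rate of step 1
    r2 = k2 * i  # rate of step 2
    return (-r1, r1 - r2, r2)


def rk4_step(state, dt, k1, k2):
    """Advance the state by one classical fourth-order Runge-Kutta step."""

    def shifted(s, d, h):
        return tuple(x + h * dx for x, dx in zip(s, d))

    d1 = derivatives(state, k1, k2)
    d2 = derivatives(shifted(state, d1, dt / 2), k1, k2)
    d3 = derivatives(shifted(state, d2, dt / 2), k1, k2)
    d4 = derivatives(shifted(state, d3, dt), k1, k2)
    return tuple(
        x + dt / 6 * (g1 + 2 * g2 + 2 * g3 + g4)
        for x, g1, g2, g3, g4 in zip(state, d1, d2, d3, d4)
    )


def simulate(k1, k2, a0=1.0, t_end=5.0, dt=1e-3):
    """Integrate from [A] = a0, [I] = [P] = 0 up to t_end; return ([A], [I], [P])."""
    state = (a0, 0.0, 0.0)
    for _ in range(round(t_end / dt)):
        state = rk4_step(state, dt, k1, k2)
    return state


if __name__ == "__main__":
    k1, k2 = 0.1, 100.0  # first step much slower than the second
    a, i, p = simulate(k1, k2)
    print(f"rate of step 1 = {k1 * a:.5f}, rate of product formation = {k2 * i:.5f}")
```

The two printed rates agree to within a fraction of a percent. Making k2 comparable to k1 breaks this agreement, and then no single step controls the rate.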

Also, the rate equations for mechanisms with a single rate-determining step are usually in a simple mathematical form, whose relation to the mechanism and choice of rate-determining step is clear. The correct rate-determining step can be identified by predicting the rate law for each possible choice and comparing the different predictions with the experimental law, as for the example of NO₂ and CO below.

The concept of the rate-determining step is very important to the optimization and understanding of many chemical processes such as catalysis and combustion.

Example reaction: NO₂ + CO

As an example, consider the gas-phase reaction NO₂ + CO → NO + CO₂. If this reaction occurred in a single step, its reaction rate (r) would be proportional to the rate of collisions between NO₂ and CO molecules: r = k[NO₂][CO], where k is the reaction rate constant, and square brackets indicate a molar concentration. Another typical example is the Zel'dovich mechanism.

First step rate-determining

In fact, however, the observed reaction rate is second-order in NO₂ and zero-order in CO, with rate equation r = k[NO₂]². This suggests that the rate is determined by a step in which two NO₂ molecules react, with the CO molecule entering at another, faster, step. A possible mechanism in two elementary steps that explains the rate equation is:

- NO₂ + NO₂ → NO + NO₃ (slow step, rate-determining)
- NO₃ + CO → NO₂ + CO₂ (fast step)
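A proposed mechanism must add up to the overall reaction. The sketch below checks this for the two steps above using only `collections.Counter`. It sums the elementary steps and cancels species that appear on both sides: the intermediate NO₃ and one of the two NO₂ molecules. Encoding each step as a pair of Counters, and the helper name `net_reaction`, are illustrative choices made here.

```python
from collections import Counter


def net_reaction(steps):
    """Sum elementary steps given as (reactants, products) Counters.

    Species appearing on both sides (intermediates, or species that are
    consumed and regenerated) cancel, leaving the overall stoichiometry.
    """
    left, right = Counter(), Counter()
    for reactants, products in steps:
        left.update(reactants)
        right.update(products)
    # Counter subtraction keeps only positive counts, so shared species cancel.
    return left - right, right - left


steps = [
    (Counter({"NO2": 2}), Counter({"NO": 1, "NO3": 1})),            # slow step
    (Counter({"NO3": 1, "CO": 1}), Counter({"NO2": 1, "CO2": 1})),  # fast step
]

if __name__ == "__main__":
    reactants, products = net_reaction(steps)
    print(dict(reactants), "->", dict(products))
```

The printed net reaction is NO2 + CO → NO + CO2, the overall equation given earlier.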

In this mechanism the reactive intermediate species NO₃ is formed in the first step with rate r1 and reacts with CO in the second step with rate r2. However, NO₃ can also react with NO if the first step occurs in the reverse direction (NO + NO₃ → 2 NO₂) with rate r−1, where the minus sign indicates the rate of a reverse reaction.

The concentration of a reactive intermediate such as [NO₃] remains low and almost constant. It may therefore be estimated by the steady-state approximation, which specifies that the rate at which it is formed equals the (total) rate at which it is consumed. In this example NO₃ is formed in one step and consumed in two, so that

$$\frac{d[\mathrm{NO_3}]}{dt} = r_1 - r_2 - r_{-1} \approx 0.$$

The statement that the first step is the slow step actually means that the first step in the reverse direction is slower than the second step in the forward direction, so that almost all NO₃ is consumed by reaction with CO and not with NO. That is, r−1 ≪ r2, so that r1 − r2 ≈ 0. But the overall rate of reaction is the rate of formation of final product (here CO₂), so that r = r2 ≈ r1. That is, the overall rate is determined by the rate of the first step, and (almost) all molecules that react at the first step continue to the fast second step.
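Solving the steady-state condition for the intermediate gives [NO₃] = k1[NO₂]²/(k−1[NO] + k2[CO]). This assumes that each elementary step follows mass-action kinetics; the rate constants k1, k−1 and k2 are symbols introduced here for illustration. The sketch below evaluates the three step rates with made-up constants chosen so that r−1 ≪ r2. The ratio r2/r1 then stays close to 1, and r2 barely changes when [CO] is varied, consistent with the observed zero order in CO.

```python
def steady_state_rates(k1, k_minus1, k2, no2, no, co):
    """Return (r1, r_minus1, r2) with [NO3] held at its steady-state value.

    Assumed mass-action rate expressions for the elementary steps:
        r1  = k1 [NO2]^2      (NO2 + NO2 -> NO + NO3)
        r-1 = k-1 [NO][NO3]   (NO + NO3 -> 2 NO2)
        r2  = k2 [NO3][CO]    (NO3 + CO -> NO2 + CO2)
    Setting d[NO3]/dt = r1 - r2 - r-1 = 0 and solving for [NO3] gives
        [NO3] = k1 [NO2]^2 / (k-1 [NO] + k2 [CO])
    """
    no3 = k1 * no2**2 / (k_minus1 * no + k2 * co)
    r1 = k1 * no2**2
    r_minus1 = k_minus1 * no * no3
    r2 = k2 * no3 * co
    return r1, r_minus1, r2


if __name__ == "__main__":
    # Hypothetical constants with k2[CO] >> k-1[NO]: the first step is rate-determining.
    k1, k_minus1, k2 = 1.0, 1.0, 1.0e4
    for co in (0.5, 1.0, 2.0):
        r1, r_minus1, r2 = steady_state_rates(k1, k_minus1, k2, no2=1.0, no=0.1, co=co)
        print(f"[CO] = {co}: r1 = {r1:.4f}, r-1 = {r_minus1:.2e}, r2 = {r2:.4f}")
```

Reversing the inequality (k−1[NO] ≫ k2[CO]) in the same function leads to the pre-equilibrium case discussed next.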

Pre-equilibrium: if the second step were rate-determining

The other possible case would be that the second step is slow and rate-determining, meaning that it is slower than the first step in the reverse direction: r2 ≪ r−1. In this hypothesis, r1 − r−1 ≈ 0, so that the first step is (almost) at equilibrium. The overall rate is determined by the second step: r = r2 ≪ r1, as very few molecules that react at the first step continue to the second step, which is much slower. Such a situation, in which an intermediate (here NO₃) forms an equilibrium with reactants prior to the rate-determining step, is described as a pre-equilibrium. For the reaction of NO₂ and CO, this hypothesis can be rejected, since it implies a rate equation that disagrees with experiment.

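If the first step were at equilibrium, then its equilibrium constant expression would permit calculation of the concentration of the intermediate NO₃ in terms of more stable (and more easily measured) reactant and product species:

$$K_1 = \frac{[\mathrm{NO}][\mathrm{NO_3}]}{[\mathrm{NO_2}]^2}, \qquad [\mathrm{NO_3}] = K_1\frac{[\mathrm{NO_2}]^2}{[\mathrm{NO}]}.$$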

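The overall reaction rate would then be

$$r = r_2 = k_2[\mathrm{NO_3}][\mathrm{CO}] = k_2 K_1\frac{[\mathrm{NO_2}]^2[\mathrm{CO}]}{[\mathrm{NO}]},$$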

which disagrees with the experimental rate law given above, and so disproves the hypothesis that the second step is rate-determining for this reaction. However, some other reactions are believed to involve rapid pre-equilibria prior to the rate-determining step, as shown below.
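The comparison between the two hypotheses can be made mechanical. For a power-law rate law, doubling one concentration multiplies the rate by 2ⁿ, so n = log(r′/r)/log 2 recovers the order in that species. The Python sketch below applies this to the rate laws predicted by the two hypotheses. The helper names, the unit rate constants and the concentrations are arbitrary assumptions. Only the first-step hypothesis reproduces the experimental orders of 2 in NO₂ and 0 in CO.

```python
import math


def apparent_order(rate_law, conc, species, factor=2.0):
    """Order in `species`: log(rate ratio) / log(factor) when that concentration is scaled.

    Exact for power-law rate expressions such as the two below.
    """
    scaled = dict(conc)
    scaled[species] *= factor
    return math.log(rate_law(scaled) / rate_law(conc)) / math.log(factor)


# Arbitrary rate constants; only the functional forms matter for the orders.
K1 = 1.0
k1, k2 = 1.0, 1.0


def first_step_rds(c):
    """r = k1 [NO2]^2 (first step rate-determining)."""
    return k1 * c["NO2"] ** 2


def pre_equilibrium(c):
    """r = k2 K1 [NO2]^2 [CO] / [NO] (second step rate-determining)."""
    return k2 * K1 * c["NO2"] ** 2 * c["CO"] / c["NO"]


if __name__ == "__main__":
    conc = {"NO2": 0.10, "CO": 0.10, "NO": 0.01}  # arbitrary concentrations
    for name, law in (("first step RDS", first_step_rds), ("pre-equilibrium", pre_equilibrium)):
        orders = {s: round(apparent_order(law, conc, s), 6) for s in conc}
        print(name, orders)
```

The pre-equilibrium law gives orders of +1 in CO and −1 in NO, neither of which is observed.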

Nucleophilic substitution

Another example is the unimolecular nucleophilic substitution (SN1) reaction in organic chemistry, where it is the first, rate-determining step that is unimolecular. A specific case is the basic hydrolysis of tert-butyl bromide (t-C₄H₉Br) by aqueous sodium hydroxide. The mechanism has two steps (where R denotes the tert-butyl group, t-C₄H₉):

- Formation of a carbocation R−Br → R+ + Br−.

- Nucleophilic attack by one hydroxide ion R+ + OH− → ROH.

This reaction is found to be first-order with r = k[R−Br], which indicates that the first step is slow and determines the rate. The second step with OH− is much faster, so the overall rate is independent of the concentration of OH−.

In contrast, the alkaline hydrolysis of methyl bromide (CH₃Br) is a bimolecular nucleophilic substitution (SN2) reaction in a single bimolecular step. Its rate law is second-order: r = k[R−Br][OH−].
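The practical difference between the two rate laws shows up when one concentration is changed. The minimal Python sketch below takes each rate law from the text and doubles [OH−]. The SN1 rate is unchanged, while the SN2 rate doubles. The rate constant and concentrations are hypothetical values; the rate ratios do not depend on them.

```python
def sn1_rate(k, r_br, oh):
    """SN1: r = k[R-Br].

    The oh argument is accepted but unused, because OH- enters only
    after the rate-determining step.
    """
    return k * r_br


def sn2_rate(k, r_br, oh):
    """SN2: r = k[R-Br][OH-], from the single bimolecular step."""
    return k * r_br * oh


if __name__ == "__main__":
    k, r_br = 1.0e-3, 0.05  # hypothetical values, arbitrary units
    for law in (sn1_rate, sn2_rate):
        ratio = law(k, r_br, oh=0.2) / law(k, r_br, oh=0.1)
        print(f"{law.__name__}: rate ratio on doubling [OH-] = {ratio:.2f}")
```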

Composition of the transition state

A useful rule in the determination of mechanism is that the concentration factors in the rate law indicate the composition and charge of the activated complex or transition state. For the NO₂–CO reaction above, the rate depends on [NO₂]², so that the activated complex has composition N₂O₄, with 2 NO₂ entering the reaction before the transition state, and CO reacting after the transition state.

A multistep example is the reaction between oxalic acid and chlorine in aqueous solution: H₂C₂O₄ + Cl₂ → 2 CO₂ + 2 H+ + 2 Cl−. The observed rate law is

$$r = k\frac{[\mathrm{Cl_2}][\mathrm{H_2C_2O_4}]}{[\mathrm{H^+}]^2[\mathrm{Cl^-}]},$$

which implies an activated complex in which the reactants lose 2 H+ + Cl− before the rate-determining step. The formula of the activated complex is Cl₂ + H₂C₂O₄ − 2 H+ − Cl− + x H₂O, or [C₂O₄Cl(H₂O)ₓ]−. An unknown number x of water molecules is included because the possible dependence of the reaction rate on H₂O was not studied: the data were obtained in water as solvent, at a large and essentially unvarying concentration.
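The bookkeeping behind this rule can be sketched in a few lines of Python. Add the formulas of species with positive orders in the rate law, subtract those with negative orders, and track the charge. The species table and the helper name `activated_complex` are illustrative assumptions. The unknown number x of water molecules is left out, because the rate law says nothing about it.

```python
from collections import Counter

# Illustrative species table: name -> (element counts, charge).
SPECIES = {
    "NO2": (Counter({"N": 1, "O": 2}), 0),
    "Cl2": (Counter({"Cl": 2}), 0),
    "H2C2O4": (Counter({"H": 2, "C": 2, "O": 4}), 0),
    "H+": (Counter({"H": 1}), +1),
    "Cl-": (Counter({"Cl": 1}), -1),
}


def activated_complex(orders):
    """Sum species formulas weighted by their rate-law orders.

    Negative orders subtract, i.e. the species is lost before the
    rate-determining step. Returns (element counts, net charge).
    """
    atoms = Counter()
    charge = 0
    for name, n in orders.items():
        counts, z = SPECIES[name]
        for element, m in counts.items():
            atoms[element] += n * m
        charge += n * z
    # Drop elements that cancel completely (here, hydrogen for oxalic acid).
    return {el: m for el, m in atoms.items() if m != 0}, charge


if __name__ == "__main__":
    print(activated_complex({"NO2": 2}))  # NO2-CO reaction: N2O4, neutral
    print(activated_complex({"Cl2": 1, "H2C2O4": 1, "H+": -2, "Cl-": -1}))
```

The second call recovers C₂O₄Cl with charge −1, matching the formula above apart from the water molecules.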

One possible mechanism, in which the preliminary steps are assumed to be rapid pre-equilibria occurring prior to the transition state, is:

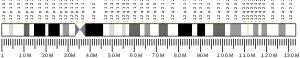

Reaction coordinate diagram

In a multistep reaction, the rate-determining step does not necessarily correspond to the highest Gibbs energy on the reaction coordinate diagram. If there is a reaction intermediate whose energy is lower than the initial reactants, then the activation energy needed to pass through any subsequent transition state depends on the Gibbs energy of that state relative to the lower-energy intermediate. The rate-determining step is then the step with the largest Gibbs energy difference relative either to the starting material or to any previous intermediate on the diagram.

Also, for reaction steps that are not first-order, concentration terms must be considered in choosing the rate-determining step.
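The energy rule above can be expressed as a short scan over a Gibbs energy profile. The Python sketch below is a minimal illustration under stated assumptions:

- The profile lists stationary points in order: reactants, then alternating transition states and intermediates, ending with products.
- The energies are hypothetical values in kJ/mol.
- The concentration terms just mentioned are ignored.

Each barrier is measured from the lowest point reached so far, and the step with the largest barrier is reported.

```python
def rate_determining_step(profile):
    """Return (step_number, barrier) for a Gibbs energy profile.

    `profile` lists Gibbs energies in order:
        reactants, TS1, I1, TS2, I2, ..., products
    so minima sit at even indices and transition states at odd indices.
    Each barrier is measured from the lowest minimum reached so far
    (the starting material or any previous intermediate).
    """
    lowest_so_far = profile[0]
    best_step, best_barrier = None, float("-inf")
    for idx in range(1, len(profile), 2):
        barrier = profile[idx] - lowest_so_far
        step = (idx + 1) // 2  # 1-based step number
        if barrier > best_barrier:
            best_step, best_barrier = step, barrier
        # The minimum after this transition state can anchor later barriers.
        if idx + 1 < len(profile):
            lowest_so_far = min(lowest_so_far, profile[idx + 1])
    return best_step, best_barrier


if __name__ == "__main__":
    # Hypothetical profile: TS1 (60) is the highest point, but the deep
    # intermediate at -40 makes step 2 the larger climb (55 - (-40) = 95).
    print(rate_determining_step([0, 60, -40, 55, -30]))
```

In this made-up profile the highest transition state belongs to step 1. Step 2 is nevertheless identified as rate-determining, as the paragraph above describes.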

Chain reactions

Not all reactions have a single rate-determining step. In particular, the rate of a chain reaction is usually not controlled by any single step.

Diffusion control

In the previous examples the rate-determining step was one of the sequential chemical reactions leading to a product. The rate-determining step can also be the transport of reactants to where they can interact and form the product. This case is referred to as diffusion control and, in general, occurs when the formation of product from the activated complex is very rapid, so that the supply of reactants is rate-determining.

![{\displaystyle {\frac {d{\ce {[NO3]}}}{dt}}=r_{1}-r_{2}-r_{-1}\approx 0.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/7ecf92288f5a82cd3e1109f4bcf95c175b0304d5)

![{\displaystyle K_{1}={\frac {{\ce {[NO][NO3]}}}{{\ce {[NO2]^2}}}},}](https://wikimedia.org/api/rest_v1/media/math/render/svg/80999e5d22d393cfb0b436e351a14dbcc7ea1ffe)

![{\displaystyle [{\ce {NO3}}]=K_{1}{\frac {{\ce {[NO2]^2}}}{{\ce {[NO]}}}}.}](https://wikimedia.org/api/rest_v1/media/math/render/svg/657b6abb8fc1acb20fbcda4fd85dd5d01cc84657)

![{\displaystyle r=r_{2}=k_{2}{\ce {[NO3][CO]}}=k_{2}K_{1}{\frac {{\ce {[NO2]^2 [CO]}}}{{\ce {[NO]}}}},}](https://wikimedia.org/api/rest_v1/media/math/render/svg/469e8efed24cec0fda01438c293b926abf7ea94d)

![{\displaystyle v=k{\frac {{\ce {[Cl2][H2C2O4]}}}{[{\ce {H+}}]^{2}[{\ce {Cl^-}}]}},}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f393df90aea1ad148e41b5c616546f5204a71e28)