Supramolecular chemistry refers to the area of chemistry concerning chemical systems composed of a discrete number of molecules. The forces responsible for the spatial organization of the system range from weak intermolecular forces, electrostatic charge, or hydrogen bonding to strong covalent bonding, provided that the electronic coupling strength remains small relative to the energy parameters of the components. Whereas traditional chemistry concentrates on the covalent bond, supramolecular chemistry examines the weaker and reversible non-covalent interactions between molecules. These forces include hydrogen bonding, metal coordination, hydrophobic forces, van der Waals forces, pi–pi interactions and electrostatic effects.

Important concepts advanced by supramolecular chemistry include molecular self-assembly, molecular folding, molecular recognition, host–guest chemistry, mechanically interlocked molecular architectures, and dynamic covalent chemistry. The study of non-covalent interactions is crucial to understanding many biological processes that rely on these forces for structure and function. Biological systems are often the inspiration for supramolecular research.

Gallery

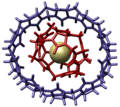

Host–guest complex within another host (cucurbituril)

An example of host–guest chemistry

Host–guest complex with a p-xylylenediammonium bound within a cucurbituril

Intramolecular self-assembly of a foldamer

History

The existence of intermolecular forces was first postulated by Johannes Diderik van der Waals in 1873. However, it was Nobel laureate Hermann Emil Fischer who developed supramolecular chemistry's philosophical roots. In 1894, Fischer suggested that enzyme–substrate interactions take the form of a "lock and key", a concept fundamental to molecular recognition and host–guest chemistry. In the early twentieth century non-covalent bonds came to be understood in progressively greater detail, with the hydrogen bond being described by Latimer and Rodebush in 1920.

The use of these principles led to an increasing understanding of protein structure and other biological processes. For instance, the important breakthrough that allowed the elucidation of the double helical structure of DNA occurred when it was realized that there are two separate strands of nucleotides connected through hydrogen bonds. The use of non-covalent bonds is essential to replication because they allow the strands to be separated and used to template new double stranded DNA. Concomitantly, chemists began to recognize and study synthetic structures based on non-covalent interactions, such as micelles and microemulsions.

Eventually, chemists were able to take these concepts and apply them to synthetic systems. The breakthrough came in the 1960s with the synthesis of the crown ethers by Charles J. Pedersen. Following this work, other researchers such as Donald J. Cram, Jean-Marie Lehn and Fritz Vögtle became active in synthesizing shape- and ion-selective receptors, and throughout the 1980s research in the area gathered pace rapidly, with concepts such as mechanically interlocked molecular architectures emerging.

The importance of supramolecular chemistry was established by the 1987 Nobel Prize in Chemistry, which was awarded to Donald J. Cram, Jean-Marie Lehn, and Charles J. Pedersen in recognition of their work in this area. The development of selective "host–guest" complexes in particular, in which a host molecule recognizes and selectively binds a certain guest, was cited as an important contribution.

In the 1990s, supramolecular chemistry became even more sophisticated, with researchers such as James Fraser Stoddart developing molecular machinery and highly complex self-assembled structures, and Itamar Willner developing sensors and methods of electronic and biological interfacing. During this period, electrochemical and photochemical motifs were integrated into supramolecular systems to increase their functionality, and research began into synthetic self-replicating systems and molecular information processing devices. The emerging science of nanotechnology also had a strong influence on the subject, with building blocks such as fullerenes, nanoparticles, and dendrimers becoming involved in synthetic systems.

Control

Thermodynamics

Supramolecular chemistry deals with subtle interactions, and consequently control over the processes involved can require great precision. In particular, non-covalent bonds have low energies and often no activation energy for formation. As demonstrated by the Arrhenius equation, this means that, unlike in covalent bond-forming chemistry, the rate of bond formation is not increased at higher temperatures. In fact, chemical equilibrium equations show that the low bond energy results in a shift towards the breaking of supramolecular complexes at higher temperatures.
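As a rough numerical illustration of both temperature effects, the Python sketch below compares the Arrhenius rate ratio k(T2)/k(T1) for a barrierless association (Ea = 0) with that of a reaction carrying a covalent-type barrier, and then uses the integrated van 't Hoff equation to show how an exothermic binding constant changes on heating. The activation energy, binding enthalpy, starting constant and temperatures are hypothetical values chosen only for illustration, and the binding enthalpy is assumed not to vary with temperature.

```python
import math

R = 8.314  # gas constant, J mol^-1 K^-1

def arrhenius_ratio(ea_j_per_mol, t1, t2):
    """Ratio k(t2)/k(t1) from the Arrhenius equation k = A * exp(-Ea / (R*T))."""
    return math.exp(-ea_j_per_mol / (R * t2)) / math.exp(-ea_j_per_mol / (R * t1))

def vant_hoff_k(k1, dh_j_per_mol, t1, t2):
    """Equilibrium constant at t2 from K at t1 (integrated van 't Hoff, constant dH)."""
    return k1 * math.exp(-dh_j_per_mol / R * (1.0 / t2 - 1.0 / t1))

# Hypothetical illustration: heating from 25 C to 65 C.
T1, T2 = 298.15, 338.15
print(arrhenius_ratio(0.0, T1, T2))           # barrierless association; prints 1.0
print(arrhenius_ratio(60_000.0, T1, T2))      # assumed 60 kJ/mol covalent-type barrier
print(vant_hoff_k(1.0e4, -40_000.0, T1, T2))  # assumed exothermic binding, K = 1e4 at T1
```

With Ea = 0 the rate ratio is exactly 1, whereas the assumed 60 kJ/mol barrier gives a more than tenfold speed-up over the same 40 K rise; the hypothetical exothermic binding constant falls several-fold, i.e. the complex dissociates on heating.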

However, low temperatures can also be problematic for supramolecular processes. Supramolecular chemistry can require molecules to distort into thermodynamically disfavored conformations (e.g. during the "slipping" synthesis of rotaxanes), and may include covalent steps that accompany the supramolecular ones. In addition, the dynamic nature of supramolecular chemistry is exploited in many systems (e.g. molecular machines), and cooling the system would slow these processes.

Thus, thermodynamics is an important tool to design, control, and study supramolecular chemistry. Perhaps the most striking example is that of warm-blooded biological systems, which entirely cease to operate outside a very narrow temperature range.

Environment

The molecular environment around a supramolecular system is also of prime importance to its operation and stability. Many solvents have strong hydrogen bonding, electrostatic, and charge-transfer capabilities, and are therefore able to become involved in complex equilibria with the system, even breaking complexes completely. For this reason, the choice of solvent can be critical.
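A deliberately simplified way to see how a competing solvent weakens a complex is to treat the solvent S as a second species that occupies the host, H + S ⇌ HS with constant Ks, present in such large excess that [S] is effectively constant. The apparent host–guest constant then becomes Ka / (1 + Ks[S]). The sketch below assumes this single-site competition model and uses hypothetical constants; real solvation also affects the guest and is usually more complex.

```python
def apparent_ka(ka, ks, solvent_conc):
    """Apparent association constant of H + G <=> HG when solvent S also binds H.

    Assumes one competitive equilibrium H + S <=> HS (constant ks, M^-1) with S in
    large excess, so the uncomplexed host totals [H](1 + ks*[S]) and
    Ka_app = ka / (1 + ks*[S]).
    """
    return ka / (1.0 + ks * solvent_conc)

# Hypothetical constants; 55.5 M is the approximate molarity of pure water.
KA = 1.0e5  # intrinsic host-guest constant, M^-1 (illustrative)
for ks in (0.0, 0.1, 1.0):
    print(f"Ks = {ks:.1f} M^-1 -> apparent Ka = {apparent_ka(KA, ks, 55.5):.3g} M^-1")
```

Even weak per-molecule solvent binding matters because the solvent concentration is so high, which is one way to rationalize why the choice of solvent can make or break a complex.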

Concepts

Molecular self-assembly

Molecular self-assembly is the construction of systems without guidance or management from an outside source (other than to provide a suitable environment). The molecules are directed to assemble through non-covalent interactions. Self-assembly may be subdivided into intermolecular self-assembly (to form a supramolecular assembly), and intramolecular self-assembly (or folding as demonstrated by foldamers and polypeptides). Molecular self-assembly also allows the construction of larger structures such as micelles, membranes, vesicles, liquid crystals, and is important to crystal engineering.

Molecular recognition and complexation

Molecular recognition is the specific binding of a guest molecule to a complementary host molecule to form a host–guest complex. Often, the definition of which species is the "host" and which is the "guest" is arbitrary. The molecules are able to identify each other using non-covalent interactions. Key applications of this field are the construction of molecular sensors and catalysis.
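Host–guest recognition is usually quantified by an association constant Ka. For the simplest case, a 1:1 complex H + G ⇌ HG, the sketch below solves the mass-balance quadratic for [HG] and converts Ka into a standard binding free energy with ΔG° = −RT ln Ka. The 1:1 stoichiometry, the 1 M standard state and all numerical values are assumptions for illustration; real systems may also form higher-order complexes.

```python
import math

R = 8.314  # gas constant, J mol^-1 K^-1

def complex_concentration(ka, host_total, guest_total):
    """Equilibrium [HG] (M) for 1:1 binding H + G <=> HG with association constant ka (M^-1).

    Rearranging ka = [HG] / (([H]0 - [HG]) * ([G]0 - [HG])) gives a quadratic in [HG];
    the smaller root is the physically meaningful one.
    """
    s = host_total + guest_total + 1.0 / ka
    return (s - math.sqrt(s * s - 4.0 * host_total * guest_total)) / 2.0

def binding_free_energy(ka, temperature=298.15):
    """Standard binding free energy in J/mol, dG = -RT ln(ka), for a 1 M standard state."""
    return -R * temperature * math.log(ka)

# Hypothetical pair: ka = 1e4 M^-1, 1 mM host, 1 mM guest.
h0 = g0 = 1.0e-3
hg = complex_concentration(1.0e4, h0, g0)
print(f"fraction of host bound: {hg / h0:.2f}")
print(f"dG = {binding_free_energy(1.0e4) / 1000:.1f} kJ/mol")
```

With these hypothetical numbers roughly three quarters of the host is complexed and ΔG° is about −23 kJ/mol, a magnitude far below that of a typical covalent bond.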

Template-directed synthesis

Molecular recognition and self-assembly may be used with reactive species in order to pre-organize a system for a chemical reaction (to form one or more covalent bonds). It may be considered a special case of supramolecular catalysis. Non-covalent bonds between the reactants and a "template" hold the reactive sites of the reactants close together, facilitating the desired chemistry. This technique is particularly useful for situations where the desired reaction conformation is thermodynamically or kinetically unlikely, such as in the preparation of large macrocycles. This pre-organization also serves purposes such as minimizing side reactions, lowering the activation energy of the reaction, and producing desired stereochemistry. After the reaction has taken place, the template may remain in place, be forcibly removed, or may be "automatically" decomplexed on account of the different recognition properties of the reaction product. The template may be as simple as a single metal ion or may be extremely complex.
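One common way to quantify the pre-organization a template provides is the effective molarity, EM = k_intra / k_inter, which compares a ring-closing (intramolecular) reaction with the competing intermolecular reaction that leads to oligomers. At the start of a reaction at substrate concentration c, the fraction of events that are cyclizations is EM / (EM + c). The sketch below assumes this simple two-pathway kinetic model; both EM values are hypothetical, chosen only to contrast a poorly pre-organized large ring with a templated one.

```python
def cyclization_fraction(effective_molarity, concentration):
    """Initial fraction of reactive events that close a ring rather than form oligomers.

    Model: ring closure has rate k_intra*[A]; intermolecular reaction has rate
    k_inter*[A]**2. With EM = k_intra / k_inter (in M), the fraction is EM / (EM + [A]).
    """
    return effective_molarity / (effective_molarity + concentration)

conc = 0.01  # 10 mM substrate (illustrative)
for label, em in (("untemplated (hypothetical EM)", 1.0e-4), ("templated (hypothetical EM)", 1.0)):
    print(f"{label}: {cyclization_fraction(em, conc):.1%} cyclization")
```

The model makes explicit why large macrocycles are hard to make without help: when EM is much smaller than the working concentration, oligomerization wins, and raising EM through pre-organization shifts the outcome toward the ring.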

Mechanically interlocked molecular architectures

Mechanically interlocked molecular architectures consist of molecules that are linked only as a consequence of their topology. Some non-covalent interactions may exist between the different components (often those that were utilized in the construction of the system), but covalent bonds do not. Supramolecular chemistry, and template-directed synthesis in particular, is key to the efficient synthesis of these compounds. Examples of mechanically interlocked molecular architectures include catenanes, rotaxanes, molecular knots, molecular Borromean rings and ravels.

Dynamic covalent chemistry

In dynamic covalent chemistry, covalent bonds are broken and formed in a reversible reaction under thermodynamic control. While covalent bonds are key to the process, the system is directed by non-covalent forces to form the lowest-energy structures.
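Under thermodynamic control, the composition of a dynamic covalent system is set by relative free energies rather than by which product forms fastest. The sketch below computes Boltzmann populations for a hypothetical set of interconverting species, assuming they are isomers of the same composition (so concentration terms cancel) and that the relative free energies shown are known; in real dynamic libraries whose members differ in size, the distribution also depends on total concentration.

```python
import math

R = 8.314  # gas constant, J mol^-1 K^-1

def equilibrium_populations(relative_g_kj, temperature=298.15):
    """Mole fractions of interconverting isomers at equilibrium.

    Each population is proportional to exp(-G_i / RT); energies are shifted by the
    minimum first so the exponentials cannot overflow.
    """
    g_min = min(relative_g_kj.values())
    weights = {
        name: math.exp(-(g - g_min) * 1000.0 / (R * temperature))
        for name, g in relative_g_kj.items()
    }
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}

# Hypothetical isomers with relative free energies in kJ/mol.
library = {"isomer 1": 0.0, "isomer 2": 5.0, "isomer 3": 10.0}
for name, fraction in equilibrium_populations(library).items():
    print(f"{name}: {fraction:.3f}")
```

A free-energy gap of only a few kJ/mol is enough for the lowest-energy structure to dominate, which is why modest non-covalent stabilization can steer a reversible covalent system.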

Biomimetics

Many synthetic supramolecular systems are designed to copy functions of biological systems. These biomimetic architectures can be used to learn about both the biological model and the synthetic implementation. Examples include photoelectrochemical systems, catalytic systems, protein design and self-replication.

Imprinting

Molecular imprinting describes a process by which a host is constructed from small molecules using a suitable molecular species as a template. After construction, the template is removed leaving only the host. The template for host construction may be subtly different from the guest that the finished host binds to. In its simplest form, imprinting utilizes only steric interactions, but more complex systems also incorporate hydrogen bonding and other interactions to improve binding strength and specificity.

Molecular machinery

Molecular machines are molecules or molecular assemblies that can perform functions such as linear or rotational movement, switching, and entrapment. These devices exist at the boundary between supramolecular chemistry and nanotechnology, and prototypes have been demonstrated using supramolecular concepts. Jean-Pierre Sauvage, Sir J. Fraser Stoddart and Bernard L. Feringa shared the 2016 Nobel Prize in Chemistry for the 'design and synthesis of molecular machines'.

Building blocks

Supramolecular systems are rarely designed from first principles. Rather, chemists have a range of well-studied structural and functional building blocks that they are able to use to build up larger functional architectures. Many of these exist as whole families of similar units, from which the analog with the exact desired properties can be chosen.

Synthetic recognition motifs

- The pi-pi charge-transfer interactions of bipyridinium with dioxyarenes or diaminoarenes have been used extensively for the construction of mechanically interlocked systems and in crystal engineering.

- The use of crown ether binding with metal or ammonium cations is ubiquitous in supramolecular chemistry.

- The formation of carboxylic acid dimers and other simple hydrogen bonding interactions.

- The complexation of bipyridines or terpyridines with ruthenium, silver or other metal ions is of great utility in the construction of complex architectures of many individual molecules.

- The complexation of porphyrins or phthalocyanines around metal ions gives access to catalytic, photochemical and electrochemical properties in addition to the complexation itself. These units are used a great deal by nature.

Macrocycles

Macrocycles are very useful in supramolecular chemistry, as they provide whole cavities that can completely surround guest molecules and may be chemically modified to fine-tune their properties.

- Cyclodextrins, calixarenes, cucurbiturils and crown ethers are readily synthesized in large quantities, and are therefore convenient for use in supramolecular systems.

- More complex cyclophanes and cryptands can be synthesized to provide more tailored recognition properties.

- Supramolecular metallocycles are macrocyclic aggregates with metal ions in the ring, often formed from angular and linear modules. Common metallocycle shapes in these types of applications include triangles, squares, and pentagons, each bearing functional groups that connect the pieces via "self-assembly."

- Metallacrowns are metallomacrocycles generated via a similar self-assembly approach from fused chelate-rings.

Structural units

Many supramolecular systems require their components to have suitable spacing and conformations relative to each other, and therefore easily employed structural units are required.

- Commonly used spacers and connecting groups include polyether chains, biphenyls and triphenyls, and simple alkyl chains. The chemistry for creating and connecting these units is very well understood.

- Nanoparticles, nanorods, fullerenes and dendrimers offer nanometer-sized structure and encapsulation units.

- Surfaces can be used as scaffolds for the construction of complex systems and also for interfacing electrochemical systems with electrodes. Regular surfaces can be used for the construction of self-assembled monolayers and multilayers.

- The understanding of intermolecular interactions in solids has undergone a major renaissance via inputs from different experimental and computational methods in the last decade. This includes high-pressure studies in solids and in situ crystallization of compounds which are liquids at room temperature along with the utilization of electron density analysis, crystal structure prediction and DFT calculations in solid state to enable a quantitative understanding of the nature, energetics and topological properties associated with such interactions in crystals.

Photochemically and electrochemically active units

- Porphyrins and phthalocyanines have highly tunable photochemical and electrochemical activity as well as the potential to form complexes.

- Photochromic and photoisomerizable groups can change their shapes and properties, including binding properties, upon exposure to light.

- Tetrathiafulvalene (TTF) and quinones have multiple stable oxidation states, and therefore can be used in redox reactions and electrochemistry.

- Other units, such as benzidine derivatives, viologens, and fullerenes, are useful in supramolecular electrochemical devices.

Biologically derived units

- The extremely strong complexation between avidin and biotin has been used as a recognition motif to construct synthetic systems.

- The binding of enzymes with their cofactors has been used as a route to produce modified enzymes, electrically contacted enzymes, and even photoswitchable enzymes.

- DNA has been used both as a structural and as a functional unit in synthetic supramolecular systems.

Applications

Materials technology

Supramolecular chemistry has found many applications; in particular, molecular self-assembly processes have been applied to the development of new materials. Large structures can be readily accessed by bottom-up synthesis because they are built from small molecules that require fewer steps to synthesize. Thus most bottom-up approaches to nanotechnology are based on supramolecular chemistry. Many smart materials are based on molecular recognition.

Catalysis

A major application of supramolecular chemistry is the design and understanding of catalysts and catalysis. Non-covalent interactions are extremely important in catalysis, binding reactants into conformations suitable for reaction and lowering the transition state energy of reaction. Template-directed synthesis is a special case of supramolecular catalysis. Encapsulation systems such as micelles, dendrimers, and cavitands are also used in catalysis to create microenvironments in which reactions (or steps in reactions) can proceed that would not be possible on a macroscopic scale.
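The link between lowering the transition state energy and faster reaction can be made quantitative with transition-state theory: if binding lowers the activation free energy by ΔΔG‡ while everything else is unchanged, the rate constant rises by a factor of exp(ΔΔG‡/RT). The sketch below assumes exactly that idealization; the barrier reductions are illustrative, not measured values for any particular catalyst.

```python
import math

R = 8.314  # gas constant, J mol^-1 K^-1

def rate_enhancement(ddg_kj_per_mol, temperature=298.15):
    """k_cat / k_uncat for a given lowering of the activation free energy.

    Transition-state theory gives k proportional to exp(-dG_act / RT), so lowering
    dG_act by ddG (with an unchanged prefactor) multiplies k by exp(ddG / RT).
    """
    return math.exp(ddg_kj_per_mol * 1000.0 / (R * temperature))

for ddg in (5.0, 10.0, 20.0):  # illustrative barrier reductions, kJ/mol
    print(f"{ddg:4.1f} kJ/mol lower barrier -> {rate_enhancement(ddg):,.0f}x faster")
```

At 298 K, each roughly 5.7 kJ/mol of barrier lowering (RT ln 10) corresponds to about a tenfold rate increase, so even the modest energies of non-covalent interactions can produce large accelerations.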

Medicine

Design based on supramolecular chemistry has led to numerous applications in the creation of functional biomaterials and therapeutics. Supramolecular biomaterials afford a number of modular and generalizable platforms with tunable mechanical, chemical and biological properties. These include systems based on supramolecular assembly of peptides, host–guest macrocycles, high-affinity hydrogen bonding, and metal–ligand interactions.

A supramolecular approach has been used extensively to create artificial ion channels for the transport of sodium and potassium ions into and out of cells.

Supramolecular chemistry is also important to the development of new pharmaceutical therapies, as it provides an understanding of the interactions at drug binding sites. The area of drug delivery has also made critical advances as a result of supramolecular chemistry providing encapsulation and targeted release mechanisms. In addition, supramolecular systems have been designed to disrupt protein–protein interactions that are important to cellular function.