| Flying Spaghetti Monster | |
|---|---|
| Pastafarianism | |
| Touched by His Noodly Appendage, a parody of Michelangelo's The Creation of Adam, is an iconic image of the Flying Spaghetti Monster by Arne Niklas Jansson. | |
| Abode | spaghettimonster.org |
| Texts | The Gospel of the Flying Spaghetti Monster, The Loose Canon, the Holy Book of the Church of the Flying Spaghetti Monster |
| Festivals | "Holiday" |

The Flying Spaghetti Monster (FSM) is the deity of the Church of the Flying Spaghetti Monster or Pastafarianism, a social movement that promotes a light-hearted view of religion and opposes the teaching of intelligent design and creationism in public schools. According to adherents, Pastafarianism (a portmanteau of pasta and Rastafarianism) is a "real, legitimate religion, as much as any other". It has received some limited recognition as such.

The "Flying Spaghetti Monster" was first described in a satirical open letter written by Bobby Henderson in 2005 to protest the Kansas State Board of Education decision to permit teaching intelligent design as an alternative to evolution in public school science classes. In the letter, Henderson demanded equal time in science classrooms for "Flying Spaghetti Monsterism", alongside intelligent design and evolution. After Henderson published the letter on his website, the Flying Spaghetti Monster rapidly became an Internet phenomenon and a symbol of opposition to the teaching of intelligent design in public schools.

Pastafarian tenets (generally satires of creationism) are presented on Henderson's Church of the Flying Spaghetti Monster website (where he is described as "prophet"), and are also elucidated in The Gospel of the Flying Spaghetti Monster, written by Henderson in 2006, and in The Loose Canon, the Holy Book of the Church of the Flying Spaghetti Monster. The central creation myth is that an invisible and undetectable Flying Spaghetti Monster created the universe after drinking heavily. Pirates are revered as the original Pastafarians. Henderson asserts that a decline in the number of pirates over the years is the cause of global warming. The FSM community congregates at Henderson's website to share ideas about the Flying Spaghetti Monster and crafts representing images of it.

Because of its popularity and exposure, the Flying Spaghetti Monster is often used as a contemporary version of Russell's teapot—an argument that the philosophic burden of proof lies upon those who make unfalsifiable claims, not on those who reject them. Pastafarians have engaged in disputes with creationists, including in Polk County, Florida, where they played a role in dissuading the local school board from adopting new rules on teaching evolution. Pastafarianism has received praise from the scientific community and criticism from proponents of intelligent design.

History

In January 2005, Bobby Henderson, a 24-year-old Oregon State University physics graduate, sent an open letter regarding the Flying Spaghetti Monster to the Kansas State Board of Education. In that letter, Henderson satirized creationism by professing his belief that whenever a scientist carbon-dates an object, a supernatural creator that closely resembles spaghetti and meatballs is there "changing the results with His Noodly Appendage". Henderson argued that his beliefs were just as valid as intelligent design, and called for equal time in science classrooms alongside intelligent design and evolution. The letter was sent prior to the Kansas evolution hearings as an argument against the teaching of intelligent design in biology classes. Henderson, describing himself as a "concerned citizen" representing more than ten million others, argued that intelligent design and his belief that "the universe was created by a Flying Spaghetti Monster" were equally valid. In his letter, he noted,

I think we can all look forward to the time when these three theories are given equal time in our science classrooms across the country, and eventually the world; one third time for Intelligent Design, one third time for Flying Spaghetti Monsterism, and one third time for logical conjecture based on overwhelming observable evidence.

— Bobby Henderson

According to Henderson, since the intelligent design movement uses ambiguous references to a designer, any conceivable entity may fulfill that role, including a Flying Spaghetti Monster. Henderson explained, "I don't have a problem with religion. What I have a problem with is religion posing as science. If there is a god and he's intelligent, then I would guess he has a sense of humor."

In May 2005, having received no reply from the Kansas State Board of Education, Henderson posted the letter on his website, gaining significant public interest. Shortly thereafter, Pastafarianism became an Internet phenomenon. Henderson published the responses he then received from board members. Three board members, all of whom opposed the curriculum amendments, responded positively; a fourth board member responded with the comment "It is a serious offense to mock God". Henderson has also published the significant amount of hate mail, including death threats, that he has received. Within one year of sending the open letter, Henderson received thousands of emails on the Flying Spaghetti Monster, eventually totaling over 60,000, of which he has said that "about 95 percent have been supportive, while the other five percent have said I am going to hell". During that time, his site garnered tens of millions of hits.

Internet phenomenon

As word of Henderson's challenge to the board spread, his website and cause received more attention and support. The satirical nature of Henderson's argument made the Flying Spaghetti Monster popular with bloggers as well as humor and Internet culture websites. The Flying Spaghetti Monster was featured on websites such as Boing Boing, Something Awful, Uncyclopedia, and Fark.com. Moreover, an International Society for Flying Spaghetti Monster Awareness and other fan sites emerged. As public awareness grew, the mainstream media picked up on the phenomenon. The Flying Spaghetti Monster became a symbol for the case against intelligent design in public education. The open letter was printed in several major newspapers, including The New York Times, The Washington Post, and Chicago Sun-Times, and received worldwide press attention. Henderson himself was surprised by its success, stating that he "wrote the letter for my own amusement as much as anything".

In August 2005, in response to a challenge from a reader, Boing Boing announced a $250,000 prize—later raised to $1,000,000—of "Intelligently Designed currency" payable to any individual who could produce empirical evidence proving that Jesus is not the son of the Flying Spaghetti Monster. It was modeled as a parody of a similar challenge issued by young-earth creationist Kent Hovind.

According to Henderson, newspaper articles on the Flying Spaghetti Monster attracted the attention of book publishers; he said that at one point, there were six publishers interested in the Flying Spaghetti Monster. In November 2005, Henderson received an advance from Villard to write The Gospel of the Flying Spaghetti Monster.

In November 2005, the Kansas State Board of Education voted to allow criticisms of evolution, including language about intelligent design, as part of testing standards. On February 13, 2007, the board voted 6–4 to reject the amended science standards enacted in 2005. This was the fifth time in eight years that the board had rewritten the standards on evolution.

Tenets


Although Henderson has stated that "the only dogma allowed in the Church of the Flying Spaghetti Monster is the rejection of dogma", some general beliefs are held by Pastafarians. Henderson proposed many Pastafarian tenets in reaction to common arguments by proponents of intelligent design. These "canonical beliefs" are presented by Henderson in his letter to the Kansas State Board of Education, The Gospel of the Flying Spaghetti Monster, and on Henderson's web site, where he is described as a "prophet". They tend to satirize creationism.

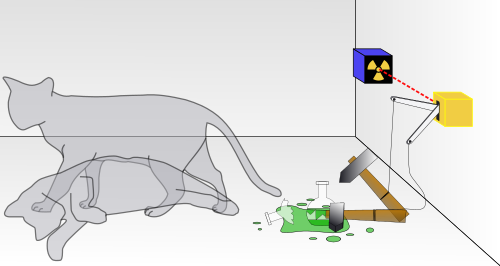

Creation

The central creation myth is that an invisible and undetectable Flying Spaghetti Monster created the universe "after drinking heavily". According to these beliefs, the Monster's intoxication was the cause for a flawed Earth. Furthermore, according to Pastafarianism, all evidence for evolution was planted by the Flying Spaghetti Monster in an effort to test the faith of Pastafarians—parodying certain biblical literalists. When scientific measurements such as radiocarbon dating are taken, the Flying Spaghetti Monster "is there changing the results with His Noodly Appendage".

Afterlife

The Pastafarian conception of Heaven includes a beer volcano and a stripper (or sometimes prostitute) factory. The Pastafarian Hell is similar, except that the beer is stale and the strippers have sexually transmitted diseases.

Pirates and global warming

According to Pastafarian beliefs, pirates are "absolute divine beings" and the original Pastafarians. Furthermore, Pastafarians believe that the concept of pirates as "thieves and outcasts" is misinformation spread by Christian theologians in the Middle Ages and by Hare Krishnas. Instead, Pastafarians believe that they were "peace-loving explorers and spreaders of good will" who distributed candy to small children, adding that modern pirates are in no way similar to "the fun-loving buccaneers from history". In addition, Pastafarians believe that ghost pirates are responsible for all of the mysteriously lost ships and planes of the Bermuda Triangle. Pastafarians are among those who celebrate International Talk Like a Pirate Day on September 19.

The inclusion of pirates in Pastafarianism was part of Henderson's original letter to the Kansas State Board of Education, in an effort to illustrate that correlation does not imply causation. Henderson presented the argument that "global warming, earthquakes, hurricanes, and other natural disasters are a direct effect of the shrinking numbers of pirates since the 1800s". A deliberately misleading graph accompanying the letter (with numbers humorously disordered on the x-axis) shows that as the number of pirates decreased, global temperatures increased. This parodies the suggestion from some religious groups that the high numbers of disasters, famines, and wars in the world are due to a lack of respect and worship toward their deity. In 2008, Henderson interpreted the growing pirate activity in the Gulf of Aden as additional support, pointing out that Somalia has "the highest number of pirates and the lowest carbon emissions of any country".

Holidays

Pastafarian beliefs extend into lighthearted religious ceremony. Pastafarians celebrate every Friday as a holy day. Prayers are concluded with a final declaration of affirmation, "R'amen" (or "rAmen"); the term is a parodic portmanteau of the terms "Amen" and "Ramen", referring to instant noodles and to the "noodly appendages" of their deity. The celebration of "Pastover" requires consuming large amounts of pasta, and during "Ramendan", only Ramen noodles are consumed; International Talk Like a Pirate Day is observed as a holiday.

Around the time of Christmas, Hanukkah, and Kwanzaa, Pastafarians celebrate a vaguely defined holiday named "Holiday". Holiday does not take place on "a specific date so much as it is the Holiday season, itself". According to Henderson, as Pastafarians "reject dogma and formalism", there are no specific requirements for Holiday. Pastafarians celebrate Holiday in any manner they please. Pastafarians interpret the increasing usage of "Happy Holidays", rather than more traditional greetings (such as "Merry Christmas"), as support for Pastafarianism. In December 2005, George W. Bush's White House Christmas greeting cards wished people a happy "holiday season", leading Henderson to write the President a note of thanks, including a "fish" emblem depicting the Flying Spaghetti Monster for his limousine or plane. Henderson also thanked Walmart for its use of the phrase.

Books

The Gospel of the Flying Spaghetti Monster

In December 2005 Bobby Henderson received a reported US$80,000 advance from Villard to write The Gospel of the Flying Spaghetti Monster. Henderson said he planned to use proceeds from the book to build a pirate ship, with which he would spread the Pastafarian religion. The book was released on March 28, 2006, and elaborates on Pastafarian beliefs established in the open letter. Henderson employs satire to present perceived flaws with evolutionary biology and discusses history and lifestyle from a Pastafarian perspective. The gospel urges readers to try Pastafarianism for thirty days, saying, "If you don't like us, your old religion will most likely take you back". Henderson states on his website that more than 100,000 copies of the book have been sold.

Scientific American described the gospel as "an elaborate spoof on Intelligent Design" and "very funny". In 2006, it was nominated for the Quill Award in Humor, but was not selected as the winner. Wayne Allen Brenner of The Austin Chronicle characterized the book as "a necessary bit of comic relief in the overly serious battle between science and superstition". Simon Singh of The Daily Telegraph wrote that the gospel "might be slightly repetitive...but overall it is a brilliant, provocative, witty and important gem of a book".

Casey Luskin of the Discovery Institute, which advocates intelligent design, labeled the gospel "a mockery of the Christian New Testament".

The Loose Canon

In September 2005, before Henderson had received an advance to write The Gospel of the Flying Spaghetti Monster, a Pastafarian member of the Venganza forums known as Solipsy announced the beginning of a project to collect texts from fellow Pastafarians and compile them into The Loose Canon, the Holy Book of the Church of the Flying Spaghetti Monster, essentially analogous to the Bible. The book was completed in 2010 and was made available for download.

Some excerpts from The Loose Canon include:

I am the Flying Spaghetti Monster. Thou shalt have no other monsters before Me (Afterwards is OK; just use protection). The only Monster who deserves capitalization is Me! Other monsters are false monsters, undeserving of capitalization.

— Suggestions 1:1

"Since you have done a half-ass job, you will receive half an ass!" The Great Pirate Solomon grabbed his ceremonial scimitar and struck his remaining donkey, cleaving it in two.

— Slackers 1:51–52

Influence

As a cultural phenomenon

The Church of the Flying Spaghetti Monster now consists of thousands of followers, primarily concentrated on college campuses in North America and Europe. According to the Associated Press, Henderson's website has become "a kind of cyber-watercooler for opponents of intelligent design". On it, visitors track meetings of pirate-clad Pastafarians, sell trinkets and bumper stickers, and sample photographs that show "visions" of the Flying Spaghetti Monster.

In August 2005, the Swedish concept designer Niklas Jansson created an adaptation of Michelangelo's The Creation of Adam, superimposing the Flying Spaghetti Monster over God. This became and remains the Flying Spaghetti Monster's de facto brand image. The Hunger Artists Theatre Company produced a comedy called The Flying Spaghetti Monster Holiday Pageant in December 2006, detailing the history of Pastafarianism. The production has spawned a sequel called Flying Spaghetti Monster Holy Mug of Grog, performed in December 2008. This communal activity attracted the attention of three University of Florida religious scholars, who assembled a panel at the 2007 American Academy of Religion meeting to discuss the Flying Spaghetti Monster.

In November 2007, four talks about the Flying Spaghetti Monster were delivered at the American Academy of Religion's annual meeting in San Diego. The talks, with titles such as Holy Pasta and Authentic Sauce: The Flying Spaghetti Monster's Messy Implications for Theorizing Religion, examined the elements necessary for a group to constitute a religion. Speakers inquired whether "an anti-religion like Flying Spaghetti Monsterism [is] actually a religion". The talks were based on the paper, Evolutionary Controversy and a Side of Pasta: The Flying Spaghetti Monster and the Subversive Function of Religious Parody, published in the GOLEM Journal of Religion and Monsters. The panel garnered an audience of one hundred of the more than 9,000 conference attendees, and conference organizers received critical e-mails from Christians offended by it.

Since October 2008, the local chapter of the Church of the Flying Spaghetti Monster has sponsored an annual convention called Skepticon on the campus of Missouri State University. Atheists and skeptics give speeches on various topics, and a debate with Christian experts is held. Organizers tout the event as the "largest gathering of atheists in the Midwest".

The Moldovan-born poet, fiction writer, and culturologist Igor Ursenco entitled his 2012 poetry book The Flying Spaghetti Monster (thriller poems).

On the nonprofit microfinancing site Kiva, the Flying Spaghetti Monster group is in an ongoing competition to top all other "religious congregations" in the number of loans issued via their team. The group's motto is "Thou shalt share, that none may seek without funding", an allusion to the Loose Canon, which states "Thou shalt share, that none may seek without finding." As of October 2018, the group reported having funded US$4,002,350 in loans.

Bathyphysa conifera, a siphonophore, has been called "Flying Spaghetti Monster" in reference to the FSM.

The 2020 documentary called I, Pastafari details the Church of the Flying Spaghetti Monster and its fight for legal recognition.

In September 2019, the Pastafarian pastor Barrett Fletcher offered an opening prayer on behalf of the Church of the Flying Spaghetti Monster to open a Kenai Peninsula Borough Assembly government meeting in Alaska.

Use in religious disputes

Owing to its popularity and media exposure, the Flying Spaghetti Monster is often used as a modern version of Russell's teapot. Proponents argue that, since the existence of the invisible and undetectable Flying Spaghetti Monster—similar to other proposed supernatural beings—cannot be falsified, it demonstrates that the burden of proof rests on those who affirm the existence of such beings. Richard Dawkins explains, "The onus is on somebody who says, I want to believe in God, Flying Spaghetti Monster, fairies, or whatever it is. It is not up to us to disprove it." Furthermore, according to Lance Gharavi, an editor of The Journal of Religion and Theater, the Flying Spaghetti Monster is "ultimately...an argument about the arbitrariness of holding any one view of creation", since any one view is equally as plausible as the Flying Spaghetti Monster. A similar argument was discussed in the books The God Delusion and The Atheist Delusion.

In December 2007 the Church of the Flying Spaghetti Monster was credited with spearheading successful efforts in Polk County, Florida, to dissuade the Polk County School Board from adopting new science standards on evolution. The issue was raised after five of the seven board members declared a personal belief in intelligent design. Opponents describing themselves as Pastafarians e-mailed members of the Polk County School Board demanding equal instruction time for the Flying Spaghetti Monster. Board member Margaret Lofton, who supported intelligent design, dismissed the e-mail as ridiculous and insulting, stating, "they've made us the laughing stock of the world". Lofton later stated that she had no interest in engaging with the Pastafarians or anyone else seeking to discredit intelligent design. As the controversy developed, scientists expressed opposition to intelligent design. In response to hopes for a new "applied science" campus at the University of South Florida in Lakeland, university vice president Marshall Goodman expressed surprise, stating, "[intelligent design is] not science. You can't even call it pseudo-science." While unhappy with the outcome, Lofton chose not to resign over the issue. She and the other board members expressed a desire to return to the day-to-day work of running the school district.

Legal status

National branches of the Church of the Flying Spaghetti Monster have been striving in many countries to have Pastafarianism become an officially (legally) recognized religion, with varying degrees of success. In New Zealand, Pastafarian representatives have been authorized as marriage celebrants, as the movement satisfies criteria laid down for organisations that primarily promote religious, philosophical, or humanitarian convictions.

A federal court in the US state of Nebraska ruled that Flying Spaghetti Monster is a satirical parody religion, rather than an actual religion, and as a result, Pastafarians are not entitled to religious accommodation under the Religious Land Use and Institutionalized Persons Act:

"This is not a question of theology", the ruling reads in part. "The FSM Gospel is plainly a work of satire, meant to entertain while making a pointed political statement. To read it as religious doctrine would be little different from grounding a 'religious exercise' on any other work of fiction."

Pastafarians have used their claimed faith as a test case to argue for freedom of religion, and to oppose government discrimination against people who do not follow a recognized religion.

Marriage

The Church of the Flying Spaghetti Monster operates an ordination mill on its website, which lets would-be officiants obtain credentials in jurisdictions where they are needed to officiate weddings. Pastafarians say that separation of church and state precludes the government from arbitrarily labelling one denomination religiously valid but another an ordination mill. In November 2014, Rodney Michael Rogers and Minneapolis-based Atheists for Human Rights sued Washington County, Minnesota under the Fourteenth Amendment equal protection clause and the First Amendment free speech clause, with their attorney claiming discrimination against atheists: "When the statute clearly permits recognition of a marriage celebrant whose religious credentials consist of nothing more than a $20 'ordination' obtained from the Church of the Flying Spaghetti Monster... the requirement is absolutely meaningless in terms of ensuring the qualifications of a marriage celebrant." A few days prior to a hearing on the matter, Washington County changed its policy to allow Rogers to officiate weddings. This was done in an effort to deny the court jurisdiction over the underlying claim. On May 13, 2015, the federal court held that the issue had become moot and dismissed the case. The first legally recognized Pastafarian wedding occurred in New Zealand on April 16, 2016.

Free speech

In March 2007, Bryan Killian, a high school student in Buncombe County, North Carolina, was suspended for wearing "pirate regalia" which he said was part of his Pastafarian faith. Killian protested the suspension, saying it violated his First Amendment rights to religious freedom and freedom of expression. "If this is what I believe in, no matter how stupid it might sound, I should be able to express myself however I want to", he said.

In March 2008, Pastafarians in Crossville, Tennessee, were permitted to place a Flying Spaghetti Monster statue in a free speech zone on the courthouse lawn, and proceeded to do so. The display gained national interest on blogs and online news sites and was even covered by Rolling Stone magazine. It was later removed from the premises, along with all the other long-term statues, as a result of the controversy over the statue. In December 2011, Pastafarianism was one of the multiple denominations given equal access to placing holiday displays on the Loudoun County courthouse lawn, in Leesburg, Virginia.

In 2012, Tracy McPherson of the Pennsylvanian Pastafarians petitioned the Chester County, Pennsylvania Commissioners to allow representation of the FSM at the county courthouse, equally with a Jewish menorah and a Christian nativity scene. One commissioner stated that either all religions should be allowed or no religion should be represented, but without support from the other commissioners the motion was rejected. Another commissioner stated that this petition garnered more attention than any he had seen before.

On September 21, 2012, Pastafarian Giorgos Loizos was arrested in Greece on charges of malicious blasphemy and offense of religion for the creation of a satirical Facebook page called "Elder Pastitsios", based on a well-known deceased Greek Orthodox monk, Elder Paisios, where his name and face were substituted with pastitsio – a local pasta and béchamel sauce dish. The case, which started as a Facebook flame, reached the Greek Parliament and created a strong political reaction to the arrest.

In August 2013, Christian Orthodox religious activists from an unregistered group known as "God's Will" attacked a peaceful rally that Russian Pastafarians had organized. Activists as well as police knocked some rally participants to the ground. Police arrested and charged eight of the Pastafarians with attempting to hold an unsanctioned rally. One of the Pastafarians later complained that they were arrested "just for walking".

In February 2014, union officials at London South Bank University forbade an atheist group to display posters of the Flying Spaghetti Monster at a student orientation conference and later banned the group from the conference, leading to complaints about interference with free speech. The Students' Union subsequently apologized.

In November 2014, the Church of the FSM obtained city signage in Templin, Germany, announcing the time of Friday's weekly Nudelmesse ("pasta mass"), alongside signage for various Catholic and Protestant Sunday services.

Headgear in identity photos

Origins and overview

In July 2011, Austrian Pastafarian Niko Alm won the legal right to be shown in his driving license photo wearing a pasta strainer on his head, after three years spent pursuing permission and obtaining an examination certifying that he was psychologically fit to drive. He got the idea after reading that Austrian regulations allow headgear in official photos only when it is worn for religious reasons. Some sources report that the colander, in the form of a pasta strainer, was recognised by Austrian authorities in 2011 as religious headgear of the parody religion Pastafarianism. Austrian authorities denied this, saying that religious motives were not the reason for granting permission to wear the headgear in a passport.

Alm's initiative has since been replicated in several (mostly Western) countries around the world, with mixed success. Many national or subnational authorities (such as U.S. states) granted driver's licences, identity cards or passports featuring photos of citizens wearing a colander, while other authorities rejected applications on the grounds either that Pastafarianism was 'not a (real) religion' and reflected satire rather than sincerity or seriousness, or that wearing a colander could not be demonstrated to be a religious obligation as other head-covering items were claimed to be in other religions, such as the hijab in Islam and the kippah/yarmulke in Judaism. Applicants and their attorneys retorted by arguing, also with mixed success, that Pastafarianism did constitute a real religion, or that it was not up to the government to decide what qualifies as a religion, whether certain religious beliefs are valid or invalid, or whether certain practices within religions had the status of obligation, established doctrine, recommendation, or personal choice. Moreover, some Pastafarians argued that satire and parody themselves are or could be a religious practice or an integral part of a religion such as Pastafarianism, that the government has no right to decide which beliefs should be taken seriously and which should not, and that it is up to the individual believers alone to decide which elements of their religion to take seriously, and to what degree.

Europe

On August 9, 2011, the chairman of the Church of the Flying Spaghetti Monster Germany, Rüdiger Weida, obtained a driver's license with a picture of him wearing a pirate bandana. In contrast to the Austrian officials in the case of Niko Alm, the German officials allowed the headgear as a religious exception.

Some anti-clerical protesters wore colanders to Piazza XXIV Maggio square in Milan, Italy, on June 2, 2012, in mock obedience to the Flying Spaghetti Monster.

In March 2013 a Belgian's identity photos were refused by the local and national administrations because he wore a pasta strainer on his head.

The Czech Republic recognised the pasta strainer as religious headgear in 2013. In July that year, Lukáš Nový, a member of the Czech Pirate Party from Brno, was given permission to wear a pasta strainer on his head for the photograph on his official Czech Republic ID card.

A man's Irish driving licence photograph including a colander was rejected by the Road Safety Authority (RSA) in December 2013. In March 2016, an Equality Officer of the Workplace Relations Commission reviewed the RSA's decision under the Equal Status Acts and upheld it, on the basis that the complaint did "not come within the definition of religion and/or religious belief".

In January 2016, Russian Pastafarian Andrei Filin obtained a driver's license with a photo of him wearing a colander.

In the Netherlands, Dirk Jan Dijkstra applied for a Dutch passport around 2015 with an identity photo in which he wore a colander. The municipality of Emmen rejected the application, after which Dijkstra successfully registered the Church of the Flying Spaghetti Monster as a church association (kerkgenootschap) at the initially hesitant Dutch Chamber of Commerce in January 2016. However, the municipality continued rejecting his application, arguing that registering as a church association did not mean that Pastafarianism was now a (recognised) religion. Dijkstra then sued the municipality for discrimination, and dozens of colander-wearing FSM Church members and sympathisers gathered at the trial in Groningen on 7 July 2016. Meanwhile, other Pastafarians succeeded in obtaining colander-featuring passports and driver's licences from the municipalities of Leiden and The Hague. On 1 August 2016, the Groningen court ruled that, although Pastafarianism is a life stance, it is not a religion, nor is there a duty in Pastafarianism to wear the colander, and therefore the religious exemption to the prohibition on wearing headgear in identity photos did not apply to Pastafarians. In January 2017, Nijmegen Pastafarian and law student Mienke de Wilde petitioned the Arnhem court to be allowed to wear a colander in her driver's licence photo. She lost the petition, both at first instance in February 2017 and on appeal at the Council of State in August 2018.

United States

In February 2013, a Pastafarian was denied the right to wear a spaghetti strainer on his head for his driver's license photo by the New Jersey Motor Vehicle Commission, which stated that a pasta strainer was not on a list of approved religious headwear.

In August 2013 Eddie Castillo, a student at Texas Tech University, got approval to wear a pasta strainer on his head in his driver's license photo. He said, "You might think this is some sort of a gag or prank by a college student, but thousands, including myself, see it as a political and religious milestone for all atheists everywhere."

In January 2014 a member of the Pomfret, New York Town Council wore a colander while taking the oath of office.

In November 2014 former porn star Asia Carrera obtained an identity photo with the traditional Pastafarian headgear from a Department of Motor Vehicles office in Hurricane, Utah. The director of Utah's Driver License Division says that about a dozen Pastafarians have had their state driver's license photos taken with a similar pasta strainer over the years.

In November 2015 Massachusetts resident Lindsay Miller was allowed to wear a colander on her head in her driver's license photo after she cited her religious beliefs. Miller (who resides in Lowell) said on Friday, November 13 that she "absolutely loves the history and the story" of Pastafarianism, which, according to its website, has existed in secrecy for hundreds of years and entered the mainstream in 2005. Miller was represented in her quest by the American Humanist Association's Appignani Humanist Legal Center.

In February 2016, a man from Madison, Wisconsin won a legal struggle against the state, which, reasoning that Pastafarianism was not a religion, had initially refused him a colander photo on his driver's licence. The man's attorney successfully defended his request on the basis of the First Amendment to the United States Constitution, arguing that it was 'not up to the government to decide what qualifies as a religion'.

In June 2016, the Drivers Services of Schaumburg, Illinois initially granted Rachel Hoover, a student at Northern Illinois University, a colander-featuring photo in her driver's licence. The Illinois Secretary of State's office overturned the decision in July 2016, stating that such a photo was 'incorrect' and that a new one had to be taken before her old licence expired on 29 July. The office did not recognise Pastafarianism as a religion, with a spokesperson saying 'If you look into their history, it’s more of a mockery of religion than a practice itself'. Hoover lodged a religious discrimination complaint with the American Civil Liberties Union, but was unsure whether to pursue further legal action, as it did not fit into her college budget. Previously, in May 2013, Pastafarian David Hoover from Pekin, Illinois had his request for a driving licence featuring a colander picture rejected.

In June 2017, Sean Corbett from Chandler, Arizona succeeded in obtaining a driver's licence with a colander picture after trying several Arizona motor vehicle locations for two years.

In October 2019, the Ohio Bureau of Motor Vehicles rejected a Cincinnati man's driver's licence colander photo, saying its policy allows people to wear religious head coverings in driver's licence photos only if they wear them in public in daily life.

Commonwealth of Nations

In June 2014 a New Zealand man called Russell obtained a driver's license with a photograph of himself wearing a blue spaghetti strainer on his head. This was granted under a law allowing the wearing of religious headgear in official photos.

In October 2014, Obi Canuel, an ordained minister in the Church of the Flying Spaghetti Monster residing in Surrey, British Columbia, Canada, effectively lost his right to drive. After initially refusing Canuel's request for a licence renewal in autumn 2013 because he insisted on wearing a colander in the photo, the Insurance Corporation of British Columbia (ICBC) granted him temporary driving permits while it considered whether to reject or grant his request definitively. ICBC said its definitive refusal in October 2014 was based on the fact that it would only 'accommodate customers with head coverings where their faith prohibits them from removing it', and that 'Mr. Canuel was not able to provide us with any evidence that he cannot remove his head covering for his photo'.

The states of Australia have differed in dealing with applications for official documents featuring colander photos. Sydney science student Preshalin Moodley got a provisional driver's licence from New South Wales in September 2014, while Brisbane tradesman Simon Leadbetter was denied a licence renewal by Queensland's Department of Transport and Main Roads the same month. Earlier in 2014, South Australia refused Adelaide resident Guy Ablon a gun licence with a photo of him wearing a colander; the authorities even seized his legally obtained guns, questioned his religion and forced him to undergo a psychiatric evaluation before his weapons were returned. The state of Victoria issued its first strainer-featuring driver's licence in November 2016.

List of identity photo applications with headgear

| Jurisdiction | Document status | Date | Person(s) involved |

|---|---|---|---|

| Austria | Driver's licence granted | July 2011 | Niko Alm |

| Germany | Driver's licence granted | August 2011 | Rüdiger Weida |

| New Jersey, United States | Driver's licence refused | February 2013 | Aaron Williams |

| | Identity card refused | March 2013 | Alain Graulus |

| | Identity card granted | July 2013 | Lukáš Nový |

| Texas, United States | Driver's licence granted | August 2013 | Eddie Castillo |

| South Australia, Australia | Gun licence refused | 2014 | Guy Ablon |

| New Zealand | Driver's licence granted | June 2014 | Russell |

| | Driver's licence granted | August 2014 | Beth |

| | Driver's licence granted | September 2014 | Shawna Hammond |

| New South Wales, Australia | Driver's licence granted | September 2014 | Preshalin Moodley |

| Queensland, Australia | Driver's licence refused | September 2014 | Simon Leadbetter |

| British Columbia, Canada | Driver's licence refused | October 2014 | Obi Canuel |

| Utah, United States | Driver's licence granted | November 2014 | Asia Carrera |

| | Driver's licence granted | December 2014 | Joy Camacho |

| Massachusetts, United States | Driver's licence granted | November 2015 | Lindsay Miller |

| | Driver's licence refused | December 2015 | Chris Avino |

| | Passport granted | 2016 | Michael Afanasyev |

| | Driver's licence granted | January 2016 | Chris Avino |

| | Driver's licence granted | January 2016 | Andrei Filin |

| Wisconsin, United States | Driver's licence granted | February 2016 | Michael Schumacher |

| | Driver's licence refused | 2013, 2016 | Noel Mulryan |

| Illinois, United States | Driver's licence granted, then refused | July 2016 | Rachel Hoover |

| Victoria, Australia | Driver's licence granted | November 2016 | Marcus Bowring |

| Arizona, United States | Driver's licence granted | June 2017 | Sean Corbett |

| Netherlands | Passport and driver's licence refused | 2015–2018 | Mienke de Wilde and others |

| Ohio, United States | Driver's licence refused | October 2019 | Richard Moser |

Critical reception

With regard to Henderson's 2005 open letter, according to Justin Pope of the Associated Press:

Between the lines, the point of the letter was this: there's no more scientific basis for intelligent design than there is for the idea an omniscient creature made of pasta created the universe. If intelligent design supporters could demand equal time in a science class, why not anyone else? The only reasonable solution is to put nothing into sciences classes but the best available science.

— Justin Pope

Pope praised the Flying Spaghetti Monster as "a clever and effective argument". Simon Singh of the Daily Telegraph described the Flying Spaghetti Monster as "a masterstroke, which underlined the absurdity of Intelligent Design", and applauded Henderson for "galvanis[ing] a defence of science and rationality". Sarah Boxer of the New York Times said that Henderson "has wit on his side". In addition, the Flying Spaghetti Monster was mentioned in an article footnote of the Harvard Civil Rights-Civil Liberties Law Review as an example of evolution "enter[ing] the fray in popular culture", which the author deemed necessary for evolution to prevail over intelligent design. The abstract of the paper, Evolutionary Controversy and a Side of Pasta: The Flying Spaghetti Monster and the Subversive Function of Religious Parody, describes the Flying Spaghetti Monster as "a potent example of how monstrous humor can be used as a popular tool of carnivalesque subversion". Its author praised Pastafarianism for its "epistemological humility". Moreover, Henderson's website contains numerous endorsements from the scientific community. As Jack Schofield of The Guardian noted, "The joke, of course, is that it's arguably more rational than Intelligent Design."

Conservative columnist Jeff Jacoby wrote in The Boston Globe that intelligent design "isn't primitivism or Bible-thumping or flying spaghetti. It's science." This view of science, however, was rejected by the United States National Academy of Sciences. Peter Gallings of Answers in Genesis, a Young Earth Creationist ministry, said: "Ironically enough, Pastafarians, in addition to mocking God himself, are lampooning the Intelligent Design Movement for not identifying a specific deity—that is, leaving open the possibility that a spaghetti monster could be the intelligent designer... Thus, the satire is possible because the Intelligent Design Movement hasn't affiliated with a particular religion, exactly the opposite of what its other critics claim!"