An industrial robot is a robot system used for manufacturing. Industrial robots are automated, programmable and capable of movement on three or more axes.

Typical applications of robots include welding, painting, assembly, disassembly, pick and place for printed circuit boards, packaging and labeling, palletizing, product inspection, and testing; all accomplished with high endurance, speed, and precision. They can assist in material handling.

In 2020, an estimated 1.64 million industrial robots were in operation worldwide, according to the International Federation of Robotics (IFR).

Types and features

There are six types of industrial robots.

Articulated robots

Articulated robots are the most common industrial robots. They resemble a human arm, which is why they are also called robotic arms or manipulator arms. Their joints provide several degrees of freedom, giving the arm a wide range of movement.

Cartesian coordinate robots

Cartesian robots, also called rectilinear robots, gantry robots, or x-y-z robots, have three prismatic joints for moving the tool and three rotary joints for orienting it in space.

To move and orient the end effector in all directions, such a robot needs 6 axes (or degrees of freedom). In a 2-dimensional environment, three axes are sufficient: two for displacement and one for orientation.

Cylindrical coordinate robots

Cylindrical coordinate robots are characterized by a rotary joint at the base and at least one prismatic joint connecting their links. They can move vertically and horizontally by sliding. The compact effector design allows the robot to reach tight workspaces without any loss of speed.

Spherical coordinate robots

Spherical coordinate robots typically combine two rotary joints with one prismatic joint, so that the tool position is described in spherical (polar) coordinates. They were among the first robots to be used in industrial applications. They are commonly used for machine tending in die-casting, plastic injection and extrusion, and for welding.

SCARA robots

SCARA is an acronym for Selective Compliance Assembly Robot Arm. SCARA robots are recognized by their two parallel joints, which provide movement in the X-Y plane. Rotating shafts are positioned vertically at the effector.

SCARA robots are used for jobs that require precise lateral movements. They are ideal for assembly applications.
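The X-Y motion produced by two parallel rotary joints can be illustrated with the forward kinematics of a planar two-link arm. The following is a minimal sketch of that simplified model, not any manufacturer's controller code; the function name `scara_forward` and the link lengths are assumptions chosen for illustration.

```python
import math


def scara_forward(theta1, theta2, l1=0.4, l2=0.3):
    """Return the (x, y) tool position of a planar two-link arm.

    theta1 is the angle of the first link measured from the X axis,
    theta2 is the angle of the second link relative to the first
    (both in radians). l1 and l2 are hypothetical link lengths in metres.
    """
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


if __name__ == "__main__":
    # Arm stretched out along the X axis.
    print(scara_forward(0.0, 0.0))
    # First link along Y, second link folded back to point along X.
    print(scara_forward(math.pi / 2, -math.pi / 2))
```

Because both joint axes are vertical, the two angles affect only the position in the horizontal plane; vertical travel would come from a separate axis that this sketch leaves out.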

Delta robots

Delta robots are also referred to as parallel link robots. They consist of parallel links connected to a common base. Delta robots are particularly useful for direct control tasks and high maneuvering operations (such as quick pick-and-place tasks). Delta robots take advantage of four-bar or parallelogram linkage systems.

Furthermore, industrial robots can have a serial or parallel architecture.

Serial manipulators

Serial architectures, also known as serial manipulators, are the most common industrial robots. They are designed as a series of links connected by motor-actuated joints that extend from a base to an end effector. SCARA and Stanford manipulators are typical examples of this category.

Parallel architecture

A parallel manipulator is designed so that each chain is usually short, simple and can thus be rigid against unwanted movement, compared to a serial manipulator. Errors in one chain's positioning are averaged in conjunction with the others, rather than being cumulative. Each actuator must still move within its own degree of freedom, as for a serial robot; however in the parallel robot the off-axis flexibility of a joint is also constrained by the effect of the other chains. It is this closed-loop stiffness that makes the overall parallel manipulator stiff relative to its components, unlike the serial chain that becomes progressively less rigid with more components.

Lower mobility parallel manipulators and concomitant motion

A full parallel manipulator can move an object with up to 6 degrees of freedom (DoF), determined by 3 translation (3T) and 3 rotation (3R) coordinates for full 3T3R mobility. However, when a manipulation task requires fewer than 6 DoF, the use of lower mobility manipulators, with fewer than 6 DoF, may bring advantages in terms of simpler architecture, easier control, faster motion and lower cost. For example, the 3 DoF Delta robot has lower 3T mobility and has proven to be very successful for rapid pick-and-place translational positioning applications. The workspace of lower mobility manipulators may be decomposed into 'motion' and 'constraint' subspaces. For example, 3 position coordinates constitute the motion subspace of the 3 DoF Delta robot and the 3 orientation coordinates are in the constraint subspace. The motion subspace of lower mobility manipulators may be further decomposed into independent (desired) and dependent subspaces, the latter consisting of 'concomitant' or 'parasitic' motion, which is undesired motion of the manipulator. The debilitating effects of concomitant motion should be mitigated or eliminated in the successful design of lower mobility manipulators. For example, the Delta robot does not have parasitic motion since its end effector does not rotate.

Autonomy

Robots exhibit varying degrees of autonomy. Some robots are programmed to faithfully carry out specific actions over and over again (repetitive actions) without variation and with a high degree of accuracy. These actions are determined by programmed routines that specify the direction, acceleration, velocity, deceleration, and distance of a series of coordinated motions.
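How acceleration, velocity, deceleration and distance interact in a single programmed move can be sketched with a trapezoidal velocity profile. The text does not say which profile real controllers use; the trapezoid, the function name `trapezoidal_profile`, and the assumption that deceleration equals acceleration are illustrative choices only.

```python
import math


def trapezoidal_profile(distance, v_max, accel):
    """Return (t_accel, t_cruise, v_peak) for one point-to-point move.

    distance is in metres, v_max in m/s and accel in m/s^2 (all positive).
    Assumption: the axis decelerates at the same rate as it accelerates.
    """
    # Distance consumed by ramping up to v_max and braking back to rest.
    d_ramps = v_max ** 2 / accel
    if distance >= d_ramps:
        t_accel = v_max / accel
        t_cruise = (distance - d_ramps) / v_max
        return t_accel, t_cruise, v_max
    # Short move: the axis never reaches v_max (triangular profile).
    v_peak = math.sqrt(distance * accel)
    return v_peak / accel, 0.0, v_peak


if __name__ == "__main__":
    print(trapezoidal_profile(1.0, 1.0, 2.0))   # long move with a cruise phase
    print(trapezoidal_profile(0.08, 1.0, 2.0))  # short move, no cruise phase
```

The second call shows why a robot may not reach its rated maximum speed on short moves: the available distance is used up by accelerating and braking.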

Other robots are much more flexible as to the orientation of the object on which they are operating or even the task that has to be performed on the object itself, which the robot may even need to identify. For example, for more precise guidance, robots often contain machine vision sub-systems acting as their visual sensors, linked to powerful computers or controllers. Artificial intelligence is becoming an increasingly important factor in the modern industrial robot.

History of industrial robotics

The earliest known industrial robot conforming to the ISO definition was completed by "Bill" Griffith P. Taylor in 1937 and published in Meccano Magazine, March 1938. The crane-like device was built almost entirely using Meccano parts, and powered by a single electric motor. Five axes of movement were possible, including grab and grab rotation. Automation was achieved using punched paper tape to energise solenoids, which would facilitate the movement of the crane's control levers. The robot could stack wooden blocks in pre-programmed patterns. The number of motor revolutions required for each desired movement was first plotted on graph paper. This information was then transferred to the paper tape, which was also driven by the robot's single motor. Chris Shute built a complete replica of the robot in 1997.

George Devol applied for the first robotics patents in 1954 (granted in 1961). The first company to produce a robot was Unimation, founded by Devol and Joseph F. Engelberger in 1956. Unimation robots were also called programmable transfer machines since their main use at first was to transfer objects from one point to another, less than a dozen feet or so apart. They used hydraulic actuators and were programmed in joint coordinates, i.e. the angles of the various joints were stored during a teaching phase and replayed in operation. They were accurate to within 1/10,000 of an inch (although accuracy is not an appropriate measure for robots, which are usually evaluated in terms of repeatability; see later). Unimation later licensed its technology to Kawasaki Heavy Industries and GKN, which manufactured Unimates in Japan and England respectively. For some time Unimation's only competitor was Cincinnati Milacron Inc. of Ohio. This changed radically in the late 1970s when several big Japanese conglomerates began producing similar industrial robots.

In 1969 Victor Scheinman at Stanford University invented the Stanford arm, an all-electric, 6-axis articulated robot designed to permit an arm solution. This allowed it to accurately follow arbitrary paths in space and widened the potential use of the robot to more sophisticated applications such as assembly and welding. Scheinman then designed a second arm for the MIT AI Lab, called the "MIT arm." Scheinman, after receiving a fellowship from Unimation to develop his designs, sold those designs to Unimation, which further developed them with support from General Motors and later marketed them as the Programmable Universal Machine for Assembly (PUMA).

Industrial robotics took off quite quickly in Europe, with both ABB Robotics and KUKA Robotics bringing robots to the market in 1973. ABB Robotics (formerly ASEA) introduced the IRB 6, among the world's first commercially available all-electric microprocessor-controlled robots. The first two IRB 6 robots were sold to Magnusson in Sweden for grinding and polishing pipe bends and were installed in production in January 1974. Also in 1973, KUKA Robotics built its first robot, known as FAMULUS, one of the first articulated robots to have six electromechanically driven axes.

Interest in robotics increased in the late 1970s and many US companies entered the field, including large firms like General Electric, and General Motors (which formed joint venture FANUC Robotics with FANUC LTD of Japan). U.S. startup companies included Automatix and Adept Technology, Inc. At the height of the robot boom in 1984, Unimation was acquired by Westinghouse Electric Corporation for 107 million U.S. dollars. Westinghouse sold Unimation to Stäubli Faverges SCA of France in 1988, which is still making articulated robots for general industrial and cleanroom applications and even bought the robotic division of Bosch in late 2004.

Only a few non-Japanese companies ultimately managed to survive in this market, the major ones being: Adept Technology, Stäubli, the Swedish-Swiss company ABB Asea Brown Boveri, the German company KUKA Robotics and the Italian company Comau.

Technical description

Defining parameters

- Number of axes – two axes are required to reach any point in a plane; three axes are required to reach any point in space. To fully control the orientation of the end of the arm (i.e. the wrist), three more axes (yaw, pitch, and roll) are required. Some designs (e.g. the SCARA robot) trade limitations in motion possibilities for cost, speed, and accuracy.

- Degrees of freedom – this is usually the same as the number of axes.

- Working envelope – the region of space a robot can reach.

- Kinematics – the actual arrangement of rigid members and joints in the robot, which determines the robot's possible motions. Classes of robot kinematics include articulated, cartesian, parallel and SCARA.

- Carrying capacity or payload – how much weight a robot can lift.

- Speed – how fast the robot can position the end of its arm. This may be defined in terms of the angular or linear speed of each axis or as a compound speed i.e. the speed of the end of the arm when all axes are moving.

- Acceleration – how quickly an axis can accelerate. Since this is a limiting factor a robot may not be able to reach its specified maximum speed for movements over a short distance or a complex path requiring frequent changes of direction.

- Accuracy – how closely a robot can reach a commanded position. When the absolute position of the robot is measured and compared to the commanded position, the error is a measure of accuracy. Accuracy can be improved with external sensing, for example a vision system or infrared sensing (see robot calibration). Accuracy can vary with speed and position within the working envelope and with payload (see compliance).

- Repeatability – how well the robot will return to a programmed position. This is not the same as accuracy. When told to go to a certain X-Y-Z position, a robot may get only to within 1 mm of that position. This is its accuracy, which may be improved by calibration. But if that position is taught into controller memory and the robot returns to within 0.1 mm of the taught position each time it is sent there, then its repeatability is within 0.1 mm.

Accuracy and repeatability are different measures. Repeatability is usually the most important criterion for a robot and is similar to the concept of 'precision' in measurement (see accuracy and precision). ISO 9283 sets out a method whereby both accuracy and repeatability can be measured. Typically a robot is sent to a taught position a number of times and the error is measured at each return to the position after visiting 4 other positions. Repeatability is then quantified using the standard deviation of those samples in all three dimensions. A typical robot can, of course, make a positional error exceeding that value, which could be a problem for the process. Moreover, repeatability differs between parts of the working envelope and also changes with speed and payload. ISO 9283 specifies that accuracy and repeatability should be measured at maximum speed and at maximum payload. This yields pessimistic values, since the robot could be much more accurate and repeatable at lighter loads and lower speeds. Repeatability in an industrial process is also subject to the accuracy of the end effector, for example a gripper, and even to the design of the 'fingers' that match the gripper to the object being grasped. For example, if a robot picks a screw by its head, the screw could be at a random angle, and a subsequent attempt to insert the screw into a hole could easily fail. These and similar scenarios can be improved with 'lead-ins', e.g. by making the entrance to the hole tapered.

- Motion control – for some applications, such as simple pick-and-place assembly, the robot need merely return repeatably to a limited number of pre-taught positions. For more sophisticated applications, such as welding and finishing (spray painting), motion must be continuously controlled to follow a path in space, with controlled orientation and velocity.

- Power source – some robots use electric motors, others use hydraulic actuators. The former are faster; the latter are stronger and were historically advantageous in applications such as spray painting, where a spark could set off an explosion. However, low internal air-pressurisation of the arm can prevent ingress of flammable vapours as well as other contaminants. Hydraulic robots are now rarely seen on the market: additional sealing, brushless electric motors and spark-proof protection have made it easier to build electric units that can work in an explosive atmosphere.

- Drive – some robots connect electric motors to the joints via gears; others connect the motor to the joint directly (direct drive). Using gears results in measurable 'backlash' which is free movement in an axis. Smaller robot arms frequently employ high speed, low torque DC motors, which generally require high gearing ratios; this has the disadvantage of backlash. In such cases the harmonic drive is often used.

- Compliance – this is a measure of the amount in angle or distance that a robot axis will move when a force is applied to it. Because of compliance, when a robot goes to a position carrying its maximum payload it will be at a position slightly lower than when it is carrying no payload. Compliance can also be responsible for overshoot when carrying high payloads, in which case acceleration would need to be reduced.
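The distinction between accuracy and repeatability drawn above can be made concrete with a small calculation. The sketch below is a simplified illustration, not the normative ISO 9283 procedure: it assumes accuracy is the distance from the commanded position to the mean attained position, and repeatability is the mean distance of the samples from that mean plus three standard deviations. The function name and the sample data are hypothetical.

```python
import math
import statistics


def accuracy_and_repeatability(commanded, attained):
    """Return (accuracy, repeatability) in the units of the inputs.

    commanded is an (x, y, z) tuple; attained is a list of measured
    (x, y, z) tuples from repeated visits to the same position.
    """
    n = len(attained)
    # Mean attained position (centroid of the measured points).
    centroid = tuple(sum(p[i] for p in attained) / n for i in range(3))
    # Accuracy: how far the centroid is from where the robot was told to go.
    accuracy = math.dist(commanded, centroid)
    # Repeatability: how tightly the samples cluster around their centroid.
    spreads = [math.dist(p, centroid) for p in attained]
    repeatability = statistics.mean(spreads) + 3 * statistics.stdev(spreads)
    return accuracy, repeatability


if __name__ == "__main__":
    # Invented measurements in mm: a constant 1 mm offset, 0.1 mm scatter.
    samples = [(1.0, 0.0, 0.1), (1.0, 0.0, -0.1), (1.0, 0.1, 0.0), (1.0, -0.1, 0.0)]
    print(accuracy_and_repeatability((0.0, 0.0, 0.0), samples))
```

With this invented data the robot is consistently about 1 mm from the commanded point yet returns to within about 0.1 mm of the same spot, mirroring the example given for repeatability.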

Robot programming and interfaces

The setup or programming of motions and sequences for an industrial robot is typically taught by linking the robot controller to a laptop, desktop computer or (internal or Internet) network.

A robot and a collection of machines or peripherals is referred to as a workcell, or cell. A typical cell might contain a parts feeder, a molding machine and a robot. The various machines are 'integrated' and controlled by a single computer or PLC. How the robot interacts with other machines in the cell must be programmed, both with regard to their positions in the cell and synchronizing with them.

Software: The computer is installed with corresponding interface software. The use of a computer greatly simplifies the programming process. Specialized robot software is run either in the robot controller or in the computer or both depending on the system design.

There are two basic entities that need to be taught (or programmed): positional data and procedure. For example, in a task to move a screw from a feeder to a hole the positions of the feeder and the hole must first be taught or programmed. Secondly the procedure to get the screw from the feeder to the hole must be programmed along with any I/O involved, for example a signal to indicate when the screw is in the feeder ready to be picked up. The purpose of the robot software is to facilitate both these programming tasks.

Teaching the robot positions may be achieved in a number of ways:

Positional commands: The robot can be directed to the required position using a GUI or text-based commands in which the required X-Y-Z position may be specified and edited.

Teach pendant: Robot positions can be taught via a teach pendant. This is a handheld control and programming unit. The common features of such units are the ability to manually send the robot to a desired position, or "inch" or "jog" to adjust a position. They also have a means to change the speed since a low speed is usually required for careful positioning, or while test-running through a new or modified routine. A large emergency stop button is usually included as well. Typically once the robot has been programmed there is no more use for the teach pendant. All teach pendants are equipped with a 3-position deadman switch. In the manual mode, it allows the robot to move only when it is in the middle position (partially pressed). If it is fully pressed in or completely released, the robot stops. This principle of operation allows natural reflexes to be used to increase safety.
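The behaviour of the 3-position deadman switch described above amounts to a very small decision rule. The sketch below models only that rule; the names `SwitchPosition` and `manual_motion_enabled` are hypothetical and do not come from any real pendant interface.

```python
from enum import Enum


class SwitchPosition(Enum):
    """The three mechanical positions of the deadman switch."""
    RELEASED = 0
    MIDDLE = 1         # partially pressed
    FULLY_PRESSED = 2  # squeezed hard, e.g. in a panic reaction


def manual_motion_enabled(position):
    """Return True only when the switch is held in the middle position."""
    return position is SwitchPosition.MIDDLE


if __name__ == "__main__":
    for pos in SwitchPosition:
        print(pos.name, manual_motion_enabled(pos))
```

Treating both extremes as "stop" is what lets natural reflexes work in the operator's favour: letting go and clenching both halt the robot.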

Lead-by-the-nose: This is a technique offered by many robot manufacturers. In this method, one user holds the robot's manipulator while another person enters a command which de-energizes the robot, causing it to go limp. The user then moves the robot by hand to the required positions and/or along a required path while the software logs these positions into memory. The program can later run the robot to these positions or along the taught path. This technique is popular for tasks such as paint spraying.

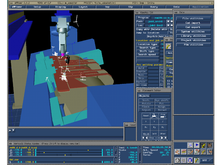

Offline programming is where the entire cell, the robot and all the machines or instruments in the workspace are mapped graphically. The robot can then be moved on screen and the process simulated. A robotics simulator is used to create embedded applications for a robot, without depending on the physical operation of the robot arm and end effector. The advantage of robotics simulation is that it saves time in the design of robotics applications. It can also increase the level of safety associated with robotic equipment since various "what if" scenarios can be tried and tested before the system is activated.[8] Robot simulation software provides a platform to teach, test, run, and debug programs that have been written in a variety of programming languages.

Robot simulation tools allow for robotics programs to be conveniently written and debugged off-line with the final version of the program tested on an actual robot. The ability to preview the behavior of a robotic system in a virtual world allows for a variety of mechanisms, devices, configurations and controllers to be tried and tested before being applied to a "real world" system. Robotics simulators have the ability to provide real-time computing of the simulated motion of an industrial robot using both geometric modeling and kinematics modeling.

Manufacturer-independent robot programming tools are a relatively new but flexible way to program robot applications. Using a graphical user interface, the programming is done via drag and drop of predefined templates or building blocks. These tools often combine offline programming with simulation to evaluate feasibility. If the system is able to compile and upload native robot code to the robot controller, the user no longer has to learn each manufacturer's proprietary language. Therefore, this approach can be an important step to standardize programming methods.

Others: In addition, machine operators often use user interface devices, typically touchscreen units, which serve as the operator control panel. The operator can switch from program to program, make adjustments within a program and also operate a host of peripheral devices that may be integrated within the same robotic system. These include end effectors, feeders that supply components to the robot, conveyor belts, emergency stop controls, machine vision systems, safety interlock systems, barcode printers and a wide array of other industrial devices which are accessed and controlled via the operator control panel.

The teach pendant or PC is usually disconnected after programming and the robot then runs on the program that has been installed in its controller. However a computer is often used to 'supervise' the robot and any peripherals, or to provide additional storage for access to numerous complex paths and routines.

End-of-arm tooling

The most essential robot peripheral is the end effector, or end-of-arm tooling (EOAT). Common examples of end effectors include welding devices (such as MIG-welding guns, spot-welders, etc.), spray guns, grinding and deburring devices (such as pneumatic disk or belt grinders, burrs, etc.), and grippers (devices that can grasp an object, usually electromechanical or pneumatic). Other common means of picking up objects are vacuum and magnets. End effectors are frequently highly complex, made to match the handled product and often capable of picking up an array of products at one time. They may utilize various sensors to aid the robot system in locating, handling, and positioning products.

Controlling movement

For a given robot the only parameters necessary to completely locate the end effector (gripper, welding torch, etc.) of the robot are the angles of each of the joints or displacements of the linear axes (or combinations of the two for robot formats such as SCARA). However, there are many different ways to define the points. The most common and most convenient way of defining a point is to specify a Cartesian coordinate for it, i.e. the position of the 'end effector' in mm in the X, Y and Z directions relative to the robot's origin. In addition, depending on the types of joints a particular robot may have, the orientation of the end effector in yaw, pitch, and roll and the location of the tool point relative to the robot's faceplate must also be specified. For a jointed arm these coordinates must be converted to joint angles by the robot controller and such conversions are known as Cartesian Transformations which may need to be performed iteratively or recursively for a multiple axis robot. The mathematics of the relationship between joint angles and actual spatial coordinates is called kinematics.
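The conversion from a Cartesian target to joint angles can be illustrated on the simplest jointed arm, a planar arm with two rotary joints, where a closed-form solution exists. This is a sketch under stated assumptions, not the method of any particular controller: the function name `planar_inverse`, the link lengths and the `flip_elbow` flag are hypothetical, and real six-axis arms need a considerably more involved solution.

```python
import math


def planar_inverse(x, y, l1=0.4, l2=0.3, flip_elbow=False):
    """Return joint angles (theta1, theta2) in radians that place the tool at (x, y).

    l1 and l2 are hypothetical link lengths in metres. Two solutions
    generally exist; flip_elbow selects the mirrored one.
    """
    # Law of cosines gives the cosine of the second joint angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1.0 + 1e-9:
        raise ValueError("target is outside the working envelope")
    c2 = max(-1.0, min(1.0, c2))  # guard against rounding just past +/-1
    theta2 = math.acos(c2)
    if flip_elbow:
        theta2 = -theta2
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2),
                                           l1 + l2 * math.cos(theta2))
    return theta1, theta2


if __name__ == "__main__":
    print(planar_inverse(0.3, 0.4))
    print(planar_inverse(0.3, 0.4, flip_elbow=True))
```

The two calls reach the same point with different joint angles, which is one reason a controller must choose among several valid solutions when converting Cartesian coordinates.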

Positioning by Cartesian coordinates may be done by entering the coordinates into the system or by using a teach pendant which moves the robot in X-Y-Z directions. It is much easier for a human operator to visualize motions up/down, left/right, etc. than to move each joint one at a time. When the desired position is reached it is then defined in some way particular to the robot software in use, e.g. P1 - P5 below.

Typical programming

Most articulated robots perform by storing a series of positions in memory, and moving to them at various times in their programming sequence. For example, a robot which is moving items from one place (bin A) to another (bin B) might have a simple 'pick and place' program similar to the following:

Define points P1–P5:

- Safely above workpiece (defined as P1)

- 10 cm above bin A (defined as P2)

- At position to take part from bin A (defined as P3)

- 10 cm above bin B (defined as P4)

- At position to place part in bin B (defined as P5)

Define program:

- Move to P1

- Move to P2

- Move to P3

- Close gripper

- Move to P2

- Move to P4

- Move to P5

- Open gripper

- Move to P4

- Move to P1 and finish

For examples of how this would look in popular robot languages see industrial robot programming.
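Independent of any vendor language, the sequence above can be sketched in Python against a stand-in controller. Everything here is hypothetical: `SimulatedRobot`, its methods and the coordinate values are invented for illustration and only record the commands instead of moving hardware.

```python
class SimulatedRobot:
    """Hypothetical stand-in for a robot controller; it only logs commands."""

    def __init__(self, points):
        self.points = points  # taught positions, name -> (x, y, z) in mm
        self.log = []

    def move_to(self, name):
        if name not in self.points:
            raise KeyError(f"point {name} has not been taught")
        self.log.append(f"move {name}")

    def close_gripper(self):
        self.log.append("close gripper")

    def open_gripper(self):
        self.log.append("open gripper")


def pick_and_place(robot):
    """Move one part from bin A to bin B using taught points P1 to P5."""
    for name in ("P1", "P2", "P3"):   # approach bin A from above
        robot.move_to(name)
    robot.close_gripper()             # grasp the part
    for name in ("P2", "P4", "P5"):   # lift, travel, lower into bin B
        robot.move_to(name)
    robot.open_gripper()              # release the part
    for name in ("P4", "P1"):         # retreat and return to the safe point
        robot.move_to(name)


# Invented coordinates; on a real system these would be taught positions.
POINTS = {
    "P1": (0, 0, 300),
    "P2": (200, 100, 100),
    "P3": (200, 100, 0),
    "P4": (200, -100, 100),
    "P5": (200, -100, 0),
}

if __name__ == "__main__":
    robot = SimulatedRobot(POINTS)
    pick_and_place(robot)
    print("\n".join(robot.log))
```

Keeping the positional data (`POINTS`) separate from the procedure (`pick_and_place`) reflects the two entities that have to be taught: positions can be re-taught without touching the sequence.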

Singularities

The American National Standard for Industrial Robots and Robot Systems — Safety Requirements (ANSI/RIA R15.06-1999) defines a singularity as “a condition caused by the collinear alignment of two or more robot axes resulting in unpredictable robot motion and velocities.” It is most common in robot arms that utilize a “triple-roll wrist”. This is a wrist about which the three axes of the wrist, controlling yaw, pitch, and roll, all pass through a common point. An example of a wrist singularity is when the path through which the robot is traveling causes the first and third axes of the robot's wrist (i.e. robot's axes 4 and 6) to line up. The second wrist axis then attempts to spin 180° in zero time to maintain the orientation of the end effector. Another common term for this singularity is a “wrist flip”. The result of a singularity can be quite dramatic and can have adverse effects on the robot arm, the end effector, and the process. Some industrial robot manufacturers have attempted to side-step the situation by slightly altering the robot's path to prevent this condition. Another method is to slow the robot's travel speed, thus reducing the speed required for the wrist to make the transition. The ANSI/RIA has mandated that robot manufacturers shall make the user aware of singularities if they occur while the system is being manually manipulated.

A second type of singularity in wrist-partitioned vertically articulated six-axis robots occurs when the wrist center lies on a cylinder that is centered about axis 1 and with radius equal to the distance between axes 1 and 4. This is called a shoulder singularity. Some robot manufacturers also mention alignment singularities, where axes 1 and 6 become coincident. This is simply a sub-case of shoulder singularities. When the robot passes close to a shoulder singularity, joint 1 spins very fast.

The third and last type of singularity in wrist-partitioned vertically articulated six-axis robots occurs when the wrist's center lies in the same plane as axes 2 and 3.

Singularities are closely related to the phenomenon of gimbal lock, which has a similar root cause of axes becoming lined up.
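Why joint speeds become extreme near a singularity can be seen in a much simpler model than a six-axis arm. The sketch below uses a planar two-link arm, whose Jacobian determinant is l1 * l2 * sin(theta2) and therefore vanishes when the arm is fully stretched. This is only an analogue of the singularities described above, and the function name and link lengths are assumptions.

```python
import math


def joint_rates(theta1, theta2, vx, vy, l1=0.4, l2=0.3):
    """Return joint velocities (rad/s) that give tool velocity (vx, vy) in m/s.

    Solves J * q_dot = v for a planar two-link arm with hypothetical
    link lengths l1 and l2 (metres), using Cramer's rule.
    """
    s1, c1 = math.sin(theta1), math.cos(theta1)
    s12, c12 = math.sin(theta1 + theta2), math.cos(theta1 + theta2)
    # Jacobian of the tool position with respect to the joint angles.
    j11, j12 = -l1 * s1 - l2 * s12, -l2 * s12
    j21, j22 = l1 * c1 + l2 * c12, l2 * c12
    det = j11 * j22 - j12 * j21  # equals l1 * l2 * sin(theta2)
    if abs(det) < 1e-12:
        raise ValueError("singular configuration: links are aligned")
    q1 = (vx * j22 - j12 * vy) / det
    q2 = (j11 * vy - j21 * vx) / det
    return q1, q2


if __name__ == "__main__":
    # Same tool velocity, far from and close to the stretched-out pose.
    print(joint_rates(0.0, math.pi / 2, 0.1, 0.0))
    print(joint_rates(0.0, 0.01, 0.1, 0.0))
```

As theta2 approaches zero the determinant shrinks and the required joint rates grow without bound, which is why slowing the tool or slightly altering the path are common ways of passing near a singularity.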

Market structure

According to the International Federation of Robotics (IFR) study World Robotics 2019, there were about 2,439,543 operational industrial robots by the end of 2017. This number is estimated to reach 3,788,000 by the end of 2021. For the year 2018 the IFR estimates worldwide sales of industrial robots at US$16.5 billion. Including the cost of software, peripherals and systems engineering, the annual turnover for robot systems is estimated to be US$48.0 billion in 2018.

China is the largest industrial robot market, with 154,032 units sold in 2018. China also had the largest operational stock of industrial robots, with 649,447 at the end of 2018. United States industrial robot makers shipped 35,880 robots to factories in the US in 2018, 7% more than in 2017.

The biggest customer for industrial robots is the automotive industry with a 30% market share, followed by the electrical/electronics industry with 25%, the metal and machinery industry with 10%, the rubber and plastics industry with 5%, and the food industry with 5%. In the textiles, apparel and leather industry, 1,580 units are operational.

Estimated worldwide annual supply of industrial robots (in units):

| Year | Supply |
|---|---|
| 1998 | 69,000 |
| 1999 | 79,000 |
| 2000 | 99,000 |
| 2001 | 78,000 |
| 2002 | 69,000 |
| 2003 | 81,000 |
| 2004 | 97,000 |
| 2005 | 120,000 |
| 2006 | 112,000 |
| 2007 | 114,000 |
| 2008 | 113,000 |
| 2009 | 60,000 |
| 2010 | 118,000 |
| 2012 | 159,346 |
| 2013 | 178,132 |
| 2014 | 229,261 |
| 2015 | 253,748 |
| 2016 | 294,312 |
| 2017 | 381,335 |
| 2018 | 422,271 |

Health and safety

The International Federation of Robotics has predicted a worldwide increase in the adoption of industrial robots and estimated 1.7 million new robot installations in factories worldwide by 2020 [IFR 2017]. Rapid advances in automation technologies (e.g. fixed robots, collaborative and mobile robots, and exoskeletons) have the potential to improve work conditions but also to introduce workplace hazards in manufacturing workplaces. Despite the lack of occupational surveillance data on injuries associated specifically with robots, researchers from the US National Institute for Occupational Safety and Health (NIOSH) identified 61 robot-related deaths between 1992 and 2015 using keyword searches of the Bureau of Labor Statistics (BLS) Census of Fatal Occupational Injuries research database (see info from Center for Occupational Robotics Research). Using data from the Bureau of Labor Statistics, NIOSH and its state partners have investigated 4 robot-related fatalities under the Fatality Assessment and Control Evaluation Program. In addition, the Occupational Safety and Health Administration (OSHA) has investigated dozens of robot-related deaths and injuries, which can be reviewed on the OSHA Accident Search page. Injuries and fatalities could increase over time because of the increasing number of collaborative and co-existing robots, powered exoskeletons, and autonomous vehicles in the work environment.

Safety standards are being developed by the Robotic Industries Association (RIA) in conjunction with the American National Standards Institute (ANSI). On October 5, 2017, OSHA, NIOSH and RIA signed an alliance to work together to enhance technical expertise, identify and help address potential workplace hazards associated with traditional industrial robots and the emerging technology of human-robot collaboration installations and systems, and help identify needed research to reduce workplace hazards. On October 16 NIOSH launched the Center for Occupational Robotics Research to "provide scientific leadership to guide the development and use of occupational robots that enhance worker safety, health, and wellbeing." So far, the research needs identified by NIOSH and its partners include: tracking and preventing injuries and fatalities, intervention and dissemination strategies to promote safe machine control and maintenance procedures, and translating effective evidence-based interventions into workplace practice.

An industrial robot is a robot system used for manufacturing. Industrial robots are automated, programmable and capable of movement on three or more axes.

Typical applications of robots include welding, painting, assembly, disassembly, pick and place for printed circuit boards, packaging and labeling, palletizing, product inspection, and testing; all accomplished with high endurance, speed, and precision. They can assist in material handling.

In the year 2020, an estimated 1.64 million industrial robots were in operation worldwide according to International Federation of Robotics (IFR).

Types and features

There are six types of industrial robots.

Articulated robots

Articulated robots are the most common industrial robots. They look like a human arm, which is why they are also called robotic arm or manipulator arm. Their articulations with several degrees of freedom allow the articulated arms a wide range of movements.

Cartesian coordinate robots

Cartesian robots, also called rectilinear, gantry robots, and x-y-z robots have three prismatic joints for the movement of the tool and three rotary joints for its orientation in space.

To be able to move and orient the effector organ in all directions, such a robot needs 6 axes (or degrees of freedom). In a 2-dimensional environment, three axes are sufficient, two for displacement and one for orientation.

Cylindrical coordinate robots

Cylindrical coordinate robots are characterized by a rotary joint at the base and at least one prismatic joint connecting their links. They can move vertically and horizontally by sliding. The compact effector design allows the robot to reach tight workspaces without any loss of speed.

Spherical coordinate robots

Spherical coordinate robots only have rotary joints. They were among the first robots to be used in industrial applications. They are commonly used for machine tending in die-casting, plastic injection and extrusion, and for welding.

SCARA robots

SCARA is an acronym for Selective Compliance Assembly Robot Arm. SCARA robots are recognized by their two parallel joints, which provide movement in the X-Y plane. Rotating shafts are positioned vertically at the effector.

SCARA robots are used for jobs that require precise lateral movements. They are ideal for assembly applications.

Delta robots

Delta robots are also referred to as parallel link robots. They consist of parallel links connected to a common base. Delta robots are particularly useful for direct control tasks and high maneuvering operations (such as quick pick-and-place tasks). Delta robots take advantage of four bar or parallelogram linkage systems.

Furthermore, industrial robots can have a serial or parallel architecture.

Serial manipulators

Serial architectures, also known as serial manipulators, are the most common industrial robots. They are designed as a series of links connected by motor-actuated joints that extend from a base to an end effector. SCARA and Stanford manipulators are typical examples of this category.

Parallel Architecture

A parallel manipulator is designed so that each chain is usually short, simple and can thus be rigid against unwanted movement, compared to a serial manipulator. Errors in one chain's positioning are averaged in conjunction with the others, rather than being cumulative. Each actuator must still move within its own degree of freedom, as for a serial robot; however in the parallel robot the off-axis flexibility of a joint is also constrained by the effect of the other chains. It is this closed-loop stiffness that makes the overall parallel manipulator stiff relative to its components, unlike the serial chain that becomes progressively less rigid with more components.

Lower mobility parallel manipulators and concomitant motion

A full parallel manipulator can move an object with up to 6 degrees of freedom (DoF), determined by 3 translation (3T) and 3 rotation (3R) coordinates for full 3T3R mobility. However, when a manipulation task requires fewer than 6 DoF, the use of lower mobility manipulators, with fewer than 6 DoF, may bring advantages in terms of simpler architecture, easier control, faster motion and lower cost. For example, the 3 DoF Delta robot has lower 3T mobility and has proven to be very successful for rapid pick-and-place translational positioning applications. The workspace of lower mobility manipulators may be decomposed into 'motion' and 'constraint' subspaces. For example, 3 position coordinates constitute the motion subspace of the 3 DoF Delta robot and the 3 orientation coordinates are in the constraint subspace. The motion subspace of lower mobility manipulators may be further decomposed into independent (desired) and dependent (concomitant) subspaces, the latter consisting of 'concomitant' or 'parasitic' motion, which is undesired motion of the manipulator. The debilitating effects of concomitant motion should be mitigated or eliminated in the successful design of lower mobility manipulators. For example, the Delta robot does not have parasitic motion since its end effector does not rotate.

Autonomy

Robots exhibit varying degrees of autonomy. Some robots are programmed to faithfully carry out specific actions over and over again (repetitive actions) without variation and with a high degree of accuracy. These actions are determined by programmed routines that specify the direction, acceleration, velocity, deceleration, and distance of a series of coordinated motions.

Other robots are much more flexible as to the orientation of the object on which they are operating or even the task that has to be performed on the object itself, which the robot may even need to identify. For example, for more precise guidance, robots often contain machine vision sub-systems acting as their visual sensors, linked to powerful computers or controllers. Artificial intelligence, or what passes for it, is becoming an increasingly important factor in the modern industrial robot.

History of industrial robotics

The earliest known industrial robot conforming to the ISO definition was completed by Griffith "Bill" P. Taylor in 1937 and published in Meccano Magazine, March 1938. The crane-like device was built almost entirely from Meccano parts and powered by a single electric motor. Five axes of movement were possible, including grab and grab rotation. Automation was achieved using punched paper tape to energise solenoids, which moved the crane's control levers. The robot could stack wooden blocks in pre-programmed patterns. The number of motor revolutions required for each desired movement was first plotted on graph paper. This information was then transferred to the paper tape, which was also driven by the robot's single motor. Chris Shute built a complete replica of the robot in 1997.

George Devol applied for the first robotics patents in 1954 (granted in 1961). The first company to produce a robot was Unimation, founded by Devol and Joseph F. Engelberger in 1956. Unimation robots were also called programmable transfer machines since their main use at first was to transfer objects from one point to another, less than a dozen feet or so apart. They used hydraulic actuators and were programmed in joint coordinates, i.e. the angles of the various joints were stored during a teaching phase and replayed in operation. They were accurate to within 1/10,000 of an inch (note that accuracy is not an appropriate measure for robots, which are usually evaluated in terms of repeatability; see below). Unimation later licensed its technology to Kawasaki Heavy Industries and GKN, which manufactured Unimates in Japan and England respectively. For some time Unimation's only competitor was Cincinnati Milacron Inc. of Ohio. This changed radically in the late 1970s when several big Japanese conglomerates began producing similar industrial robots.

In 1969 Victor Scheinman at Stanford University invented the Stanford arm, an all-electric, 6-axis articulated robot designed to permit an arm solution. This allowed it to follow arbitrary paths in space accurately and widened the potential use of the robot to more sophisticated applications such as assembly and welding. Scheinman then designed a second arm for the MIT AI Lab, called the "MIT arm." Scheinman, after receiving a fellowship from Unimation to develop his designs, sold those designs to Unimation, which further developed them with support from General Motors and later marketed the result as the Programmable Universal Machine for Assembly (PUMA).

Industrial robotics took off quite quickly in Europe, with both ABB Robotics and KUKA Robotics bringing robots to the market in 1973. ABB Robotics (formerly ASEA) introduced the IRB 6, among the world's first commercially available all-electric, microprocessor-controlled robots. The first two IRB 6 robots were sold to Magnusson in Sweden for grinding and polishing pipe bends and were installed in production in January 1974. Also in 1973, KUKA Robotics built its first robot, known as FAMULUS, one of the first articulated robots to have six electromechanically driven axes.

Interest in robotics increased in the late 1970s and many US companies entered the field, including large firms like General Electric, and General Motors (which formed joint venture FANUC Robotics with FANUC LTD of Japan). U.S. startup companies included Automatix and Adept Technology, Inc. At the height of the robot boom in 1984, Unimation was acquired by Westinghouse Electric Corporation for 107 million U.S. dollars. Westinghouse sold Unimation to Stäubli Faverges SCA of France in 1988, which is still making articulated robots for general industrial and cleanroom applications and even bought the robotic division of Bosch in late 2004.

Only a few non-Japanese companies ultimately managed to survive in this market, the major ones being: Adept Technology, Stäubli, the Swedish-Swiss company ABB Asea Brown Boveri, the German company KUKA Robotics and the Italian company Comau.

Technical description

Defining parameters

- Number of axes – two axes are required to reach any point in a plane; three axes are required to reach any point in space. To fully control the orientation of the end of the arm (i.e. the wrist), three more axes (yaw, pitch, and roll) are required. Some designs (e.g. the SCARA robot) trade limitations in motion possibilities for cost, speed, and accuracy.

- Degrees of freedom – this is usually the same as the number of axes.

- Working envelope – the region of space a robot can reach.

- Kinematics – the actual arrangement of rigid members and joints in the robot, which determines the robot's possible motions. Classes of robot kinematics include articulated, Cartesian, parallel and SCARA.

- Carrying capacity or payload – how much weight a robot can lift.

- Speed – how fast the robot can position the end of its arm. This may be defined in terms of the angular or linear speed of each axis or as a compound speed i.e. the speed of the end of the arm when all axes are moving.

- Acceleration – how quickly an axis can accelerate. Since this is a limiting factor a robot may not be able to reach its specified maximum speed for movements over a short distance or a complex path requiring frequent changes of direction.

- Accuracy – how closely a robot can reach a commanded position. When the absolute position of the robot is measured and compared to the commanded position, the error is a measure of accuracy. Accuracy can be improved with external sensing, for example a vision system or infrared sensing. See robot calibration. Accuracy can vary with speed and position within the working envelope and with payload (see compliance).

- Repeatability – how well the robot will return to a programmed position. This is not the same as accuracy. It may be that when told to go to a certain X-Y-Z position the robot gets only to within 1 mm of that position. This would be its accuracy, which may be improved by calibration. But if that position is taught into controller memory and each time the robot is sent there it returns to within 0.1 mm of the taught position, then the repeatability will be within 0.1 mm.

Accuracy and repeatability are different measures. Repeatability is usually the most important criterion for a robot and is similar to the concept of 'precision' in measurement (see accuracy and precision). ISO 9283 sets out a method whereby both accuracy and repeatability can be measured. Typically a robot is sent to a taught position a number of times and the error is measured at each return to the position after visiting 4 other positions. Repeatability is then quantified using the standard deviation of those samples in all three dimensions. A typical robot can, of course, make a positional error exceeding that, and that could be a problem for the process. Moreover, the repeatability is different in different parts of the working envelope and also changes with speed and payload. ISO 9283 specifies that accuracy and repeatability should be measured at maximum speed and at maximum payload. But this results in pessimistic values, whereas the robot could be much more accurate and repeatable at lighter loads and lower speeds. Repeatability in an industrial process is also subject to the accuracy of the end effector, for example a gripper, and even to the design of the 'fingers' that match the gripper to the object being grasped. For example, if a robot picks a screw by its head, the screw could be at a random angle. A subsequent attempt to insert the screw into a hole could easily fail. These and similar scenarios can be improved with 'lead-ins', e.g. by making the entrance to the hole tapered.
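The distinction between the two measures can be made concrete with a small calculation. The sketch below is illustrative only: the function name and the sample measurements are invented, accuracy is taken as the distance from the mean attained position to the commanded position, and repeatability as the mean distance from that mean position plus three sample standard deviations, which is the form commonly cited for ISO 9283 pose repeatability.

```python
import math
import statistics


def pose_accuracy_and_repeatability(commanded, measured):
    """Return (accuracy, repeatability) in the units of the inputs."""
    n = len(measured)
    # Barycentre (mean) of the attained positions.
    mean = tuple(sum(p[i] for p in measured) / n for i in range(3))
    # Accuracy: offset of the mean attained position from the command.
    accuracy = math.dist(mean, commanded)
    # Distance of each attained position from the barycentre.
    radii = [math.dist(p, mean) for p in measured]
    # Repeatability: mean radius plus three sample standard deviations.
    repeatability = statistics.mean(radii) + 3 * statistics.stdev(radii)
    return accuracy, repeatability


# Hypothetical measurements (mm) of repeated returns to one commanded point.
commanded = (100.0, 200.0, 50.0)
measured = [
    (100.9, 200.1, 50.0),
    (101.0, 200.0, 50.1),
    (101.1, 199.9, 49.9),
    (101.0, 200.1, 50.0),
    (101.0, 199.9, 50.0),
]
accuracy, repeatability = pose_accuracy_and_repeatability(commanded, measured)
print(f"accuracy: {accuracy:.3f} mm, repeatability: {repeatability:.3f} mm")
```

With these invented samples the robot lands about 1 mm away from the commanded point every time, yet the returns cluster tightly around one another, which is the situation described above: modest accuracy but good repeatability.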

- Motion control – for some applications, such as simple pick-and-place assembly, the robot need merely return repeatably to a limited number of pre-taught positions. For more sophisticated applications, such as welding and finishing (spray painting), motion must be continuously controlled to follow a path in space, with controlled orientation and velocity.

- Power source – some robots use electric motors, others use hydraulic actuators. The former are faster; the latter are stronger and advantageous in applications such as spray painting, where a spark could set off an explosion. However, low internal air-pressurisation of the arm can prevent ingress of flammable vapours as well as other contaminants. Nowadays, hydraulic robots are rarely seen on the market. Additional seals, brushless electric motors and spark-proof protection have made it easier to build units that can work in an explosive atmosphere.

- Drive – some robots connect electric motors to the joints via gears; others connect the motor to the joint directly (direct drive). Using gears results in measurable 'backlash' which is free movement in an axis. Smaller robot arms frequently employ high speed, low torque DC motors, which generally require high gearing ratios; this has the disadvantage of backlash. In such cases the harmonic drive is often used.

- Compliance – this is a measure of the amount in angle or distance that a robot axis will move when a force is applied to it. Because of compliance, when a robot goes to a position carrying its maximum payload it will be at a position slightly lower than when it is carrying no payload. Compliance can also be responsible for overshoot when carrying high payloads, in which case acceleration would need to be reduced.
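The interaction between the speed and acceleration parameters listed above can be illustrated with a short calculation. The sketch below assumes a simple rest-to-rest move with constant acceleration and deceleration (a "trapezoidal" velocity profile); real controllers may use smoother profiles, and the function name and numbers are hypothetical.

```python
import math


def peak_speed_and_time(distance, v_max, a_max):
    """Peak speed reached and total move time for a rest-to-rest move.

    Assumes constant acceleration a_max up, optional cruise at v_max,
    and constant deceleration a_max down (a trapezoidal profile).
    """
    # Distance needed to accelerate to v_max and brake back to rest.
    d_ramps = v_max ** 2 / a_max
    if distance >= d_ramps:
        # Long move: the axis reaches v_max and cruises.
        cruise_time = (distance - d_ramps) / v_max
        return v_max, 2 * v_max / a_max + cruise_time
    # Short move: triangular profile, v_max is never reached.
    v_peak = math.sqrt(distance * a_max)
    return v_peak, 2 * v_peak / a_max


for d in (0.05, 2.0):
    v, t = peak_speed_and_time(d, v_max=1.0, a_max=5.0)
    print(f"move {d} m: peak {v:.2f} m/s, time {t:.2f} s")
```

For the short move the axis has to start braking before it reaches its specified maximum speed, so cycle time on short or winding paths is governed by acceleration rather than by top speed.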

Robot programming and interfaces

The setup or programming of motions and sequences for an industrial robot is typically taught by linking the robot controller to a laptop, desktop computer or (internal or Internet) network.

A robot and a collection of machines or peripherals is referred to as a workcell, or cell. A typical cell might contain a parts feeder, a molding machine and a robot. The various machines are 'integrated' and controlled by a single computer or PLC. How the robot interacts with other machines in the cell must be programmed, both with regard to their positions in the cell and synchronizing with them.

Software: The computer is installed with corresponding interface software. The use of a computer greatly simplifies the programming process. Specialized robot software is run either in the robot controller or in the computer or both depending on the system design.

There are two basic entities that need to be taught (or programmed): positional data and procedure. For example, in a task to move a screw from a feeder to a hole the positions of the feeder and the hole must first be taught or programmed. Secondly the procedure to get the screw from the feeder to the hole must be programmed along with any I/O involved, for example a signal to indicate when the screw is in the feeder ready to be picked up. The purpose of the robot software is to facilitate both these programming tasks.

Teaching the robot positions may be achieved a number of ways:

Positional commands: The robot can be directed to the required position using a GUI or text-based commands in which the required X-Y-Z position may be specified and edited.

Teach pendant: Robot positions can be taught via a teach pendant. This is a handheld control and programming unit. The common features of such units are the ability to manually send the robot to a desired position, or "inch" or "jog" to adjust a position. They also have a means to change the speed since a low speed is usually required for careful positioning, or while test-running through a new or modified routine. A large emergency stop button is usually included as well. Typically once the robot has been programmed there is no more use for the teach pendant. All teach pendants are equipped with a 3-position deadman switch. In the manual mode, it allows the robot to move only when it is in the middle position (partially pressed). If it is fully pressed in or completely released, the robot stops. This principle of operation allows natural reflexes to be used to increase safety.

Lead-by-the-nose: This is a technique offered by many robot manufacturers. In this method, one user holds the robot's manipulator, while another person enters a command which de-energizes the robot, causing it to go limp. The user then moves the robot by hand to the required positions and/or along a required path while the software logs these positions into memory. The program can later run the robot to these positions or along the taught path. This technique is popular for tasks such as paint spraying.

Offline programming is where the entire cell, the robot and all the machines or instruments in the workspace are mapped graphically. The robot can then be moved on screen and the process simulated. A robotics simulator is used to create embedded applications for a robot, without depending on the physical operation of the robot arm and end effector. The advantage of robotics simulation is that it saves time in the design of robotics applications. It can also increase the level of safety associated with robotic equipment, since various "what if" scenarios can be tried and tested before the system is activated. Robot simulation software provides a platform to teach, test, run, and debug programs that have been written in a variety of programming languages.

Robot simulation tools allow for robotics programs to be conveniently written and debugged off-line with the final version of the program tested on an actual robot. The ability to preview the behavior of a robotic system in a virtual world allows for a variety of mechanisms, devices, configurations and controllers to be tried and tested before being applied to a "real world" system. Robotics simulators have the ability to provide real-time computing of the simulated motion of an industrial robot using both geometric modeling and kinematics modeling.

Manufacturer-independent robot programming tools are a relatively new but flexible way to program robot applications. Using a graphical user interface, the programming is done via drag and drop of predefined templates or building blocks. These tools often combine simulation, to evaluate feasibility, with offline programming. If the system is able to compile and upload native robot code to the robot controller, the user no longer has to learn each manufacturer's proprietary language. Therefore, this approach can be an important step towards standardizing programming methods.

Others: In addition, machine operators often use user interface devices, typically touchscreen units, which serve as the operator control panel. The operator can switch from program to program, make adjustments within a program and also operate a host of peripheral devices that may be integrated within the same robotic system. These include end effectors, feeders that supply components to the robot, conveyor belts, emergency stop controls, machine vision systems, safety interlock systems, barcode printers and a wide array of other industrial devices which are accessed and controlled via the operator control panel.

The teach pendant or PC is usually disconnected after programming and the robot then runs on the program that has been installed in its controller. However a computer is often used to 'supervise' the robot and any peripherals, or to provide additional storage for access to numerous complex paths and routines.

End-of-arm tooling

The most essential robot peripheral is the end effector, or end-of-arm tooling (EOAT). Common examples of end effectors include welding devices (such as MIG-welding guns, spot-welders, etc.), spray guns, grinding and deburring devices (such as pneumatic disk or belt grinders, burrs, etc.), and grippers (devices that can grasp an object, usually electromechanical or pneumatic). Other common means of picking up objects are vacuum and magnets. End effectors are frequently highly complex, made to match the handled product and often capable of picking up an array of products at one time. They may utilize various sensors to aid the robot system in locating, handling, and positioning products.

Controlling movement

For a given robot the only parameters necessary to completely locate the end effector (gripper, welding torch, etc.) of the robot are the angles of each of the joints or displacements of the linear axes (or combinations of the two for robot formats such as SCARA). However, there are many different ways to define the points. The most common and most convenient way of defining a point is to specify a Cartesian coordinate for it, i.e. the position of the 'end effector' in mm in the X, Y and Z directions relative to the robot's origin. In addition, depending on the types of joints a particular robot may have, the orientation of the end effector in yaw, pitch, and roll and the location of the tool point relative to the robot's faceplate must also be specified. For a jointed arm these coordinates must be converted to joint angles by the robot controller and such conversions are known as Cartesian Transformations which may need to be performed iteratively or recursively for a multiple axis robot. The mathematics of the relationship between joint angles and actual spatial coordinates is called kinematics.
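As a minimal illustration of converting between joint angles and Cartesian coordinates, consider a hypothetical planar arm with two rotary joints. The link lengths, function names and target point below are assumptions made for the example, not taken from any particular robot. This simple case has a closed-form solution; a six-axis arm generally needs a more involved or iterative one.

```python
import math


def forward(theta1, theta2, l1=0.4, l2=0.3):
    """Joint angles (rad) -> tool position (x, y) in metres."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse(x, y, l1=0.4, l2=0.3):
    """Tool position -> the joint solution with a non-negative elbow angle."""
    # Law of cosines gives the elbow angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if abs(c2) > 1:
        raise ValueError("target is outside the working envelope")
    theta2 = math.acos(c2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2),
                                           l1 + l2 * math.cos(theta2))
    return theta1, theta2


target = (0.5, 0.2)
joints = inverse(*target)
print("joint angles (deg):", [round(math.degrees(q), 1) for q in joints])
print("check via forward kinematics:", forward(*joints))
```

Feeding the computed joint angles back through `forward` should reproduce the target, which is a convenient sanity check. Note that a second valid solution exists with the elbow bent the other way (negative `theta2`); choosing between such alternatives is part of what a controller's Cartesian transformation has to handle.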

Positioning by Cartesian coordinates may be done by entering the coordinates into the system or by using a teach pendant which moves the robot in X-Y-Z directions. It is much easier for a human operator to visualize motions up/down, left/right, etc. than to move each joint one at a time. When the desired position is reached it is then defined in some way particular to the robot software in use, e.g. P1 - P5 below.

Typical programming

Most articulated robots perform by storing a series of positions in memory, and moving to them at various times in their programming sequence. For example, a robot which is moving items from one place (bin A) to another (bin B) might have a simple 'pick and place' program similar to the following:

Define points P1–P5:

- Safely above workpiece (defined as P1)

- 10 cm above bin A (defined as P2)

- At position to take part from bin A (defined as P3)

- 10 cm above bin B (defined as P4)

- At position to place part in bin B (defined as P5)

Define program:

- Move to P1

- Move to P2

- Move to P3

- Close gripper

- Move to P2

- Move to P4

- Move to P5

- Open gripper

- Move to P4

- Move to P1 and finish
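The sequence above might be expressed as follows in a general-purpose language. This is only a sketch: `SimulatedRobot`, its methods and the coordinates are hypothetical stand-ins invented for illustration, not a vendor API, and the "robot" merely logs each action.

```python
class SimulatedRobot:
    """Hypothetical stand-in for a robot controller; it only logs actions."""

    def __init__(self):
        self.log = []

    def move_to(self, name, point):
        self.log.append(f"move {name} {point}")

    def close_gripper(self):
        self.log.append("close gripper")

    def open_gripper(self):
        self.log.append("open gripper")


# Taught positions in mm (illustrative values only).
POINTS = {
    "P1": (400, 0, 500),     # safely above workpiece
    "P2": (300, 200, 300),   # 10 cm above bin A
    "P3": (300, 200, 200),   # pick position in bin A
    "P4": (300, -200, 300),  # 10 cm above bin B
    "P5": (300, -200, 200),  # release position in bin B
}


def pick_and_place(robot):
    """Run the taught sequence: approach, pick, transfer, release, retreat."""
    for name in ("P1", "P2", "P3"):
        robot.move_to(name, POINTS[name])
    robot.close_gripper()
    for name in ("P2", "P4", "P5"):
        robot.move_to(name, POINTS[name])
    robot.open_gripper()
    for name in ("P4", "P1"):
        robot.move_to(name, POINTS[name])


robot = SimulatedRobot()
pick_and_place(robot)
print("\n".join(robot.log))
```

Keeping the taught positions in a table separate from the procedure mirrors the split between positional data and procedure: a point can be re-taught without touching the sequence.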

For examples of how this would look in popular robot languages see industrial robot programming.

Singularities

The American National Standard for Industrial Robots and Robot Systems — Safety Requirements (ANSI/RIA R15.06-1999) defines a singularity as “a condition caused by the collinear alignment of two or more robot axes resulting in unpredictable robot motion and velocities.” It is most common in robot arms that utilize a “triple-roll wrist”. This is a wrist about which the three axes of the wrist, controlling yaw, pitch, and roll, all pass through a common point. An example of a wrist singularity is when the path through which the robot is traveling causes the first and third axes of the robot's wrist (i.e. robot's axes 4 and 6) to line up. The second wrist axis then attempts to spin 180° in zero time to maintain the orientation of the end effector. Another common term for this singularity is a “wrist flip”. The result of a singularity can be quite dramatic and can have adverse effects on the robot arm, the end effector, and the process. Some industrial robot manufacturers have attempted to side-step the situation by slightly altering the robot's path to prevent this condition. Another method is to slow the robot's travel speed, thus reducing the speed required for the wrist to make the transition. The ANSI/RIA has mandated that robot manufacturers shall make the user aware of singularities if they occur while the system is being manually manipulated.

A second type of singularity in wrist-partitioned vertically articulated six-axis robots occurs when the wrist center lies on a cylinder that is centered about axis 1 and with radius equal to the distance between axes 1 and 4. This is called a shoulder singularity. Some robot manufacturers also mention alignment singularities, where axes 1 and 6 become coincident. This is simply a sub-case of shoulder singularities. When the robot passes close to a shoulder singularity, joint 1 spins very fast.

The third and last type of singularity in wrist-partitioned vertically articulated six-axis robots occurs when the wrist's center lies in the same plane as axes 2 and 3.

Singularities are closely related to the phenomenon of gimbal lock, which has a similar root cause of axes becoming lined up.
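The wrist singularity can be seen numerically. The sketch below assumes an idealized roll-pitch-roll wrist whose three axes (robot axes 4, 5 and 6) intersect at a point, with an angle convention chosen purely for illustration. The determinant of the matrix formed by the three axis directions indicates how well the wrist can produce an arbitrary rotation rate; it vanishes when axes 4 and 6 line up.

```python
import math


def wrist_axes(theta4, theta5):
    """Unit direction vectors of wrist axes 4, 5, 6 in the forearm frame."""
    s4, c4 = math.sin(theta4), math.cos(theta4)
    s5, c5 = math.sin(theta5), math.cos(theta5)
    axis4 = (0.0, 0.0, 1.0)         # roll about the forearm
    axis5 = (-s4, c4, 0.0)          # pitch, carried round by axis 4
    axis6 = (c4 * s5, s4 * s5, c5)  # roll, tilted away by axis 5
    return axis4, axis5, axis6


def det3(a, b, c):
    """Scalar triple product a . (b x c)."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            - a[1] * (b[0] * c[2] - b[2] * c[0])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))


for deg in (90, 10, 1, 0):
    d = det3(*wrist_axes(0.3, math.radians(deg)))
    print(f"theta5 = {deg:>2} deg  |det| = {abs(d):.4f}")
```

Under this convention the magnitude of the determinant equals |sin(theta5)|. As the middle wrist joint straightens, the determinant shrinks towards zero and the joint speeds needed to hold a given tool rotation rate grow accordingly, which is why slowing the path or nudging it away from the aligned pose are the usual workarounds.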

Market structure

According to the International Federation of Robotics (IFR) study World Robotics 2019, there were about 2,439,543 operational industrial robots by the end of 2017. This number is estimated to reach 3,788,000 by the end of 2021. For the year 2018 the IFR estimates the worldwide sales of industrial robots at US$16.5 billion. Including the cost of software, peripherals and systems engineering, the annual turnover for robot systems is estimated to be US$48.0 billion in 2018.

China is the largest industrial robot market, with 154,032 units sold in 2018. China also had the largest operational stock of industrial robots, with 649,447 at the end of 2018. Industrial robot makers in the United States shipped 35,880 robots to factories in the US in 2018, 7% more than in 2017.

The biggest customer of industrial robots is the automotive industry with a 30% market share, followed by the electrical/electronics industry with 25%, the metal and machinery industry with 10%, the rubber and plastics industry with 5%, and the food industry with 5%. In the textiles, apparel and leather industry, 1,580 units are operational.

Estimated worldwide annual supply of industrial robots (in units):

| Year | Supply |
|---|---|
| 1998 | 69,000 |
| 1999 | 79,000 |
| 2000 | 99,000 |
| 2001 | 78,000 |
| 2002 | 69,000 |
| 2003 | 81,000 |
| 2004 | 97,000 |
| 2005 | 120,000 |
| 2006 | 112,000 |
| 2007 | 114,000 |
| 2008 | 113,000 |
| 2009 | 60,000 |
| 2010 | 118,000 |
| 2012 | 159,346 |
| 2013 | 178,132 |
| 2014 | 229,261 |
| 2015 | 253,748 |
| 2016 | 294,312 |
| 2017 | 381,335 |
| 2018 | 422,271 |

Health and safety

The International Federation of Robotics has predicted a worldwide increase in the adoption of industrial robots, estimating 1.7 million new robot installations in factories worldwide by 2020. Rapid advances in automation technologies (e.g. fixed robots, collaborative and mobile robots, and exoskeletons) have the potential to improve working conditions but also to introduce hazards in manufacturing workplaces. Despite the lack of occupational surveillance data on injuries associated specifically with robots, researchers from the US National Institute for Occupational Safety and Health (NIOSH) identified 61 robot-related deaths between 1992 and 2015 using keyword searches of the Bureau of Labor Statistics (BLS) Census of Fatal Occupational Injuries research database (see information from the Center for Occupational Robotics Research). Using data from the Bureau of Labor Statistics, NIOSH and its state partners have investigated 4 robot-related fatalities under the Fatality Assessment and Control Evaluation Program. In addition, the Occupational Safety and Health Administration (OSHA) has investigated dozens of robot-related deaths and injuries, which can be reviewed on the OSHA Accident Search page. Injuries and fatalities could increase over time because of the increasing number of collaborative and co-existing robots, powered exoskeletons, and autonomous vehicles being introduced into the work environment.

Safety standards are being developed by the Robotic Industries Association (RIA) in conjunction with the American National Standards Institute (ANSI). On October 5, 2017, OSHA, NIOSH and RIA signed an alliance to work together to enhance technical expertise, identify and help address potential workplace hazards associated with traditional industrial robots and the emerging technology of human-robot collaboration installations and systems, and help identify needed research to reduce workplace hazards. On October 16 NIOSH launched the Center for Occupational Robotics Research to "provide scientific leadership to guide the development and use of occupational robots that enhance worker safety, health, and wellbeing." So far, the research needs identified by NIOSH and its partners include tracking and preventing injuries and fatalities, intervention and dissemination strategies to promote safe machine control and maintenance procedures, and translating effective evidence-based interventions into workplace practice.