Inductivism is the traditional and still commonplace philosophy of scientific method to develop scientific theories. Inductivism aims to neutrally observe a domain, infer laws from examined cases—hence, inductive reasoning—and thus objectively discover the sole naturally true theory of the observed.

Inductivism's basis is, in sum, "the idea that theories can be derived from, or established on the basis of, facts". Evolving in phases, inductivism's conceptual reign spanned some four centuries after Francis Bacon's 1620 proposal of it against Western Europe's prevailing model, scholasticism, which reasoned deductively from preconceived beliefs.

In the 19th and 20th centuries, inductivism succumbed to hypotheticodeductivism (sometimes called deductivism) as the more realistic idealization of scientific method. Yet scientific theories themselves are now widely attributed to occasions of inference to the best explanation, IBE, which, like scientists' actual methods, are diverse and not formally prescribable.

Philosophers' debates

Inductivist endorsement

Francis Bacon, articulating inductivism in England, is often falsely stereotyped as a naive inductivist. Crudely explained, the "Baconian model" advises one to observe nature, propose a modest law that generalizes an observed pattern, confirm it by many observations, venture a modestly broader law, and confirm that, too, by many more observations, while discarding disconfirmed laws. Growing ever broader, the laws never quite exceed the observations. Scientists, freed from preconceptions, thus gradually uncover nature's causal and material structure. Newton's theory of universal gravitation, modeling motion as an effect of a force, seemed inductivism's paramount triumph.

Around 1740, David Hume, in Scotland, identified multiple obstacles to inferring causality from experience. Hume noted that enumerative induction, the unrestricted generalization from particular instances to all instances that states a universal law, is formally illogical, since humans observe sequences of sensory events, not cause and effect. Perceiving neither logical nor natural necessity or impossibility among events, humans tacitly postulate an unproved uniformity of nature. Later philosophers would select, highlight, and nickname Humean principles (Hume's fork, the problem of induction, and Hume's law), although Hume, after all, respected and accepted the empirical sciences as inevitably inductive.

Immanuel Kant, in Germany, alarmed by Hume's seemingly radical empiricism, identified its apparent opposite, rationalism, in Descartes, and sought a middle ground. Kant intuited that necessity does exist, bridging the world in itself to human experience, and that its source is the mind, whose innate constants determine space, time, and substance and thus ensure the universal truth of the empirically correct physical theory. Thus shielding Newtonian physics by discarding scientific realism, Kant's view limited science to tracing appearances, mere phenomena, never unveiling external reality, the noumena. Kant's transcendental idealism launched German idealism, a family of speculative metaphysical systems.

While philosophers widely retained an uneasy confidence that the empirical sciences are inductive, John Stuart Mill, in England, proposed five methods to discern causality, purportedly showing how genuine inductivism goes beyond enumerative induction. In the 1830s, opposing metaphysics, Auguste Comte, in France, explicated positivism, which, unlike Bacon's model, emphasizes making predictions, confirming them, and stating scientific laws, irrefutable by theology or metaphysics. Mill, viewing experience as affirming the uniformity of nature and thus justifying enumerative induction, endorsed positivism, the first modern philosophy of science, which, being also a political philosophy, upheld scientific knowledge as the only genuine knowledge.

Inductivist repudiation

Around 1840, William Whewell, in England, deemed the inductive sciences not so simple and argued for recognizing "superinduction", an explanatory scope or principle invented by the mind to unite facts but not present in the facts. John Stuart Mill rejected Whewell's hypotheticodeductivism as science's method. Whewell believed that this method could sometimes, given the evidence, including unlikely signs such as consilience, yield scientific theories that are probably true metaphysically. By 1880, C S Peirce, in America, had clarified the basis of deductive inference and, while acknowledging induction, proposed a third type of inference. Peirce called it "abduction", now often termed inference to the best explanation, IBE.

The logical positivists arose in the 1920s, rebuked metaphysical philosophies, accepted a hypotheticodeductivist account of how theories originate, and sought to vet scientific theories objectively (or indeed any statement that was not merely emotive) as provably true or false with respect solely to empirical facts and logical relations, a campaign termed verificationism. Within its milder variant, Rudolf Carnap tried, but always failed, to find an inductive logic whereby the support that observational evidence lends a universal law's truth could be quantified as "degree of confirmation". Karl Popper, asserting a strong hypotheticodeductivism since the 1930s, attacked inductivism and its positivist variants, then in 1963 called enumerative induction "a myth", in effect a deductive inference from a tacit explanatory theory. In 1965, Gilbert Harman explained enumerative induction as a masked IBE.

Thomas Kuhn's 1962 book, a cultural landmark, explains that periods of normal science, each merely a paradigm of science, are overturned by revolutionary science, whose radical paradigm becomes the new normal science. Kuhn's thesis dissolved logical positivism's grip on Western academia, and inductivism fell. Besides Popper and Kuhn, other postpositivist philosophers of science, including Paul Feyerabend, Imre Lakatos, and Larry Laudan, have all but unanimously rejected inductivism. Those who assert scientific realism, which interprets scientific theory as reliably and literally, if approximately, true regarding nature's unobservable aspects, generally attribute new theories to IBE. And yet IBE, which so far cannot be reduced to a trainable procedure, lacks explicit rules of inference. By the turn of the 21st century, inductivism's heir was Bayesianism.

Scientific methods

From the 17th to the 20th centuries, inductivism was widely conceived as scientific method's ideal. Even at the turn of the 21st century, popular presentations of scientific discovery and progress naively and erroneously suggested it. The 20th was the first century to produce more scientists than philosopher-scientists. Earlier scientists, "natural philosophers," pondered and debated their philosophies of method. Einstein remarked, "Science without epistemology is—in so far as it is thinkable at all—primitive and muddled".

Particularly after the 1960s, scientists became unfamiliar with the historical and philosophical underpinnings of their own research programs, and often unfamiliar with logic. Scientists thus often struggle to evaluate their own work, to communicate and defend it when it is questioned or attacked, or to optimize methods and progress. In any case, during the 20th century, philosophers of science accepted that scientific method's truer idealization is hypotheticodeductivism, which, especially in its strongest form, Karl Popper's falsificationism, is also termed deductivism.

Inductivism

Inductivism infers from observations of similar effects to similar causes, and generalizes unrestrictedly—that is, by enumerative induction—to a universal law.

Extending inductivism, Comtean positivism explicitly aims to oppose metaphysics, shuns imaginative theorizing, and emphasizes observation followed by making predictions, confirming them, and stating laws.

Logical positivism would accept hypotheticodeductivism in theory development, but sought an inductive logic to objectively quantify a theory's confirmation by empirical evidence and, additionally, to objectively compare rival theories.

Confirmation

Whereas a theory's proof, were such possible, may be termed verification, a theory's support is termed confirmation. But to reason from confirmation to verification (If A, then B; in fact B, and so A) is the deductive fallacy called "affirming the consequent." To infer that the relation from A to B implies the relation from B to A is to suppose, for instance, "If the lamp is broken, then the room will be dark, and so the room's being dark means the lamp is broken." Even if B holds, it could be due to X or Y or Z, or to XYZ combined, rather than to A. Or the sequence A and then B could be a consequence of U, utterly undetected, whereby B always trails A by constant conjunction instead of by causation. Maybe, in fact, U can cease, disconnecting A from B.

Disconfirmation

A natural deductive reasoning form is logically valid without postulates and true simply by the principle of noncontradiction. "Denying the consequent" is a natural deduction (If A, then B; not B, so not A) whereby one can logically disconfirm the hypothesis A. Thus, there is also eliminative induction, which uses this form to rule out rival hypotheses.
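The difference between the two forms can be checked mechanically with a truth table over A and B. The following is a minimal illustrative sketch in Python, not part of the original discussion. It assumes the material conditional of classical propositional logic for "If A, then B", and the helper names `implies` and `counterexamples` are hypothetical. Reading A as "the lamp is broken" and B as "the room is dark", the only counterexample to affirming the consequent is a dark room with an intact lamp.

```python
from itertools import product

def implies(p: bool, q: bool) -> bool:
    """Material conditional: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q

def counterexamples(premises, conclusion):
    """Return every (A, B) assignment that makes all premises true but the conclusion false."""
    return [
        (a, b)
        for a, b in product([True, False], repeat=2)
        if all(premise(a, b) for premise in premises) and not conclusion(a, b)
    ]

# A: "the lamp is broken"; B: "the room is dark".
# Affirming the consequent: If A, then B; B; therefore A.
affirming_cex = counterexamples(
    premises=[lambda a, b: implies(a, b), lambda a, b: b],
    conclusion=lambda a, b: a,
)

# Denying the consequent: If A, then B; not B; therefore not A.
denying_cex = counterexamples(
    premises=[lambda a, b: implies(a, b), lambda a, b: not b],
    conclusion=lambda a, b: not a,
)

print("affirming the consequent counterexamples:", affirming_cex)  # [(False, True)]
print("denying the consequent counterexamples:", denying_cex)      # []
```

An argument form is valid exactly when this search finds no counterexample. Denying the consequent yields none, while affirming the consequent yields the case (A false, B true).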

Determination

At least logically, any phenomenon can host multiple, conflicting explanations. This is the problem of underdetermination, and it is why inference from data to theory lacks any formal logic, any deductive rules of inference. A counterargument is the difficulty of finding even one empirically adequate theory. Still, however difficult each was to attain, such theories have been replaced one after another by radically different theories: the problem of unconceived alternatives. Meanwhile, many confirming instances of a theory's predictions can occur even if many of the theory's other predictions are false.
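A toy curve-fitting case shows how finite data underdetermine theory. In this hypothetical Python sketch, which is added for illustration rather than taken from the literature discussed here, the rival "laws" `law_a` and `law_b` agree on every observation collected so far yet diverge on the next case. The observations are invented.

```python
# Hypothetical observations: (input, measured output) pairs collected so far.
observations = [(1, 1), (2, 2), (3, 3)]

def law_a(x: int) -> int:
    """Rival hypothesis A: output equals input."""
    return x

def law_b(x: int) -> int:
    """Rival hypothesis B: adds a term that vanishes at every observed input."""
    return x + (x - 1) * (x - 2) * (x - 3)

# Both hypotheses fit every observation exactly...
fits_a = all(law_a(x) == y for x, y in observations)
fits_b = all(law_b(x) == y for x, y in observations)

# ...yet they disagree about the next, unexamined case.
print(fits_a, fits_b)      # True True
print(law_a(4), law_b(4))  # 4 10
```

Adding any multiple of (x - 1)(x - 2)(x - 3) yields yet another rival. Infinitely many hypotheses therefore fit the same three data points, and no deductive rule selects among them.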

Scientific method cannot ensure that scientists will imagine, much less will or even can perform, inquiries or experiments inviting disconfirmation. Further, any data collection projects a horizon of expectation (even objective facts, direct observations, are laden with theory), whereby incompatible facts may go unnoticed. And the experimenter's regress permits a disconfirmation to be rejected by inferring that unnoticed entities or aspects unexpectedly altered the test conditions. A hypothesis can be tested only in conjunction with countless auxiliary hypotheses, mostly neglected until a disconfirmation occurs.

Deductivism

In hypotheticodeductivism, the HD model, one introduces some explanation or principle from any source, such as imagination or even a dream, infers its logical consequences (that is, draws deductive inferences), and compares those with observations, perhaps experimental. In simple or Whewellian hypotheticodeductivism, one might accept a theory as metaphysically true or probably true if its predictions display certain traits that would seem unlikely for a false theory.

In Popperian hypotheticodeductivism, sometimes called falsificationism, although one aims for a true theory, one's main tests of the theory are efforts to refute it empirically. Falsificationism values confirmations chiefly when they come from testing risky predictions, those that seem likeliest to fail. If the theory's bizarre prediction is empirically confirmed, then the theory is strongly corroborated, but, never upheld as metaphysically true, it is granted simply verisimilitude, the appearance of truth and thus a likeness to truth.

Inductivist reign

Francis Bacon introduced inductivism, and Isaac Newton soon emulated it, in 17th-century England. In the 18th century, David Hume, in Scotland, caused scandal with his philosophical skepticism about inductivism's rationality, whereas Immanuel Kant, in a German state, deflected Hume's fork, as it were, to shield Newtonian physics as well as philosophical metaphysics, but in the feat implied that science could at best reflect and predict observations, structured by the mind. Kant's metaphysics led to Hegel's metaphysics, which Karl Marx transposed from the spiritual to the material and to which others gave a nationalist reading.

Auguste Comte, in early 19th-century France, opposing metaphysics, introduced positivism as, in essence, refined inductivism and a political philosophy. The contemporary urgency of the positivists and of the neopositivists (the logical positivists, emerging in Germany and Vienna in World War I's aftermath and attenuating into the logical empiricists in America and England after World War II) reflected the sociopolitical climate of their own eras. The philosophers perceived dire threats to society in metaphysical theories, which they associated with religious and sociopolitical conflicts and thereby with social and military ones.

Bacon

In 1620 in England, Francis Bacon's treatise Novum Organum alleged that scholasticism's Aristotelian method of deductive inference via syllogistic logic upon traditional categories was impeding society's progress. Faulting what he presented as classic induction for inferring straight from "sense and particulars up to the most general propositions" and then applying the resulting axioms to new particulars without empirically verifying them, Bacon stated the "true and perfect Induction". In Bacon's inductivist method, a scientist (until the late 19th century, a natural philosopher) ventures an axiom of modest scope, makes many observations, accepts the axiom if it is confirmed and never disconfirmed, then ventures another axiom only modestly broader, collects many more observations, and accepts that axiom, too, only if it is confirmed, never disconfirmed.

In Novum Organum, Bacon uses the term hypothesis rarely, and usually in pejorative senses, as was prevalent in Bacon's time. Yet ultimately, as applied, Bacon's term axiom is more similar now to the term hypothesis than to the term law. Today, a law is nearer to an axiom in the sense of a rule of inference. By the 20th century's close, historians and philosophers of science generally agreed that Bacon's actual counsel was far more balanced than it had long been stereotyped, while some assessments even ventured that Bacon had described falsificationism, presumably as far from inductivism as one can get. In any case, Bacon was not a strict inductivist and included aspects of hypotheticodeductivism, but those aspects of Bacon's model were neglected by others, and the "Baconian model" was regarded as true inductivism, which it mostly was.

In Bacon's estimation, during this repeating process of modest axiomatization confirmed by extensive and minute observations, axioms expand in scope and deepen in penetrance, tightly in accord with all the observations. This, Bacon proposed, would open a clear and true view of nature as it exists independently of human preconceptions. Ultimately, the general axioms concerning observables would render matter's unobservable structure and nature's causal mechanisms discernible by humans. But, as Bacon provides no clear way to frame axioms, let alone develop universally true principles or theoretical constructs, researchers might observe and collect data endlessly. For this vast venture, Bacon advised precise record keeping and collaboration among researchers, a vision resembling today's research institutes, while the true understanding of nature would permit technological innovation, heralding a New Atlantis.

Newton

Modern science arose against Aristotelian physics. Both Aristotelian physics and Ptolemaic astronomy were geocentric, and the latter was a basis of astrology, itself a basis of medicine. Nicolaus Copernicus proposed heliocentrism, perhaps to better fit astronomy to Aristotelian physics' fifth element, the universal essence, or quintessence, the aether, whose intrinsic motion, explaining celestial observations, was perpetual, perfect circles. Yet Johannes Kepler modified Copernican orbits to ellipses at about the time that Galileo Galilei's telescopic observations disputed that the Moon was composed of aether, and Galilei's experiments with earthly bodies attacked Aristotelian physics. Galilean principles were subsumed by René Descartes, whose Cartesian physics structured his Cartesian cosmology, modeling heliocentrism and employing mechanical philosophy. Mechanical philosophy's first principle, stated by Descartes, was "no action at a distance". Yet it was the British chemist Robert Boyle who introduced the term mechanical philosophy. Through corpuscularism, a Cartesian hypothesis that matter is particulate but not necessarily atomic, Boyle sought a mechanical basis for chemistry and thereby its divorce from alchemy.

During the plague of 1665–1666, Isaac Newton withdrew from Cambridge to the countryside.[30] Isolated, he applied rigorous experimentation and mathematics, including development of calculus, and reduced both terrestrial motion and celestial motion (that is, both physics and astronomy) to one theory stating Newton's laws of motion, several corollary principles, and the law of universal gravitation, set in a framework of postulated absolute space and absolute time. Newton's unification of celestial and terrestrial phenomena overthrew vestiges of Aristotelian physics and disconnected physics from chemistry, which each then followed its own course. Newton became the exemplar of the modern scientist, and the Newtonian research program became the modern model of knowledge. Although absolute space, revealed by no experience, and a force acting at a distance discomforted Newton, he and physicists for some 200 years more would seldom suspect the fictional character of the Newtonian foundation, believing not that physical concepts and laws are "free inventions of the human mind", as Einstein called them in 1933, but that they could be inferred logically from experience. Newton famously maintained that, toward his gravitational theory, he had "framed" no hypotheses.

Hume

Around 1740, Hume sorted truths into two divergent categories, "relations of ideas" versus "matters of fact and real existence", a division later termed Hume's fork. "Relations of ideas", such as the abstract truths of logic and mathematics, known true without experience of particular instances, offer a priori knowledge. Yet the quests of empirical science concern "matters of fact and real existence", known true only through experience, thus a posteriori knowledge. As no number of examined instances logically entails the conformity of unexamined instances, a universal law's unrestricted generalization has no formally logical basis; one justifies it by adding the principle of the uniformity of nature, itself unverified, thus using a major induction to justify a minor induction. This apparent obstacle to empirical science was later termed the problem of induction.

For Hume, humans experience sequences of events, not cause and effect, through pieces of sensory data, whereby similar experiences might exhibit merely constant conjunction (first an event like A, and then always an event like B), but nothing reveals causality, whether as necessity or as impossibility. Although Hume apparently enjoyed the scandal that trailed his explanations, Hume did not view them as fatal, and interpreted enumerative induction to be among the mind's unavoidable customs, required in order for one to live. Rather, Hume sought to counter the Copernican displacement of humankind from the Universe's center, and to redirect intellectual attention to human nature as the central point of knowledge.

Hume proceeded with inductivism not only toward enumerative induction but toward unobservable aspects of nature, too. Far from demolishing Newton's theory, Hume placed his own philosophy on a par with it. Though skeptical of common metaphysics or theology, Hume accepted "genuine Theism and Religion" and found that a rational person must believe in God to explain the structure of nature and order of the universe. Still, Hume had urged, "When we run over libraries, persuaded of these principles, what havoc must we make? If we take into our hand any volume—of divinity or school metaphysics, for instance—let us ask, Does it contain any abstract reasoning concerning quantity or number? No. Does it contain any experimental reasoning concerning matter of fact and existence? No. Commit it then to the flames, for it can contain nothing but sophistry and illusion".

Kant

Awakened from "dogmatic slumber" by Hume's work, Immanuel Kant sought to explain how metaphysics is possible. Kant's 1781 book, Critique of Pure Reason, drew on rationalism, whereby some knowledge results not from experience but instead from "pure reason". Concluding it impossible to know reality in itself, however, Kant discarded the philosopher's task of unveiling appearance to view the noumena, and limited science to organizing the phenomena. Reasoning that the mind contains categories organizing sense data into experiences of substance, space, and time, Kant thereby inferred the uniformity of nature, after all, in the form of a priori knowledge.

Kant sorted statements, rather, into two types, analytic versus synthetic. The analytic, true by their terms' arrangement and meanings, are tautologies, mere logical truths, and thus true by necessity, whereas the synthetic apply meanings toward factual states, which are contingent. Yet some synthetic statements, presumably contingent, are necessarily true because of the mind, Kant argued. Kant's synthetic a priori, then, buttressed both physics (at the time, Newtonian) and metaphysics, too, but discarded scientific realism. This realism regards scientific theories as literally true descriptions of the external world. Kant's transcendental idealism triggered German idealism, including G W F Hegel's absolute idealism.

Positivism

Comte

In the French Revolution's aftermath, fearing Western society's ruin again, Auguste Comte was fed up with metaphysics. As suggested in 1620 by Francis Bacon, developed by Saint-Simon, and promulgated in the 1830s by Saint-Simon's former student Comte, positivism was the first modern philosophy of science. Human knowledge had evolved from religion to metaphysics to science, explained Comte, and science had flowed from mathematics to astronomy to physics to chemistry to biology to sociology, in that order, describing increasingly intricate domains, with all of society's knowledge becoming scientific, whereas questions of theology and of metaphysics remained unanswerable, Comte argued. Comte considered enumerative induction to be reliable, upon the basis of experience available, and asserted that science's proper use is improving human society, not attaining metaphysical truth.

According to Comte, scientific method constrains itself to observations but frames predictions, confirms them, and states laws (positive statements) irrefutable by theology and by metaphysics, and then lays the laws as a foundation for subsequent knowledge. Later, however, concluding science insufficient for society, Comte launched the Religion of Humanity, whose churches, honoring eminent scientists, led worship of humankind. Comte coined the term altruism, and emphasized science's application for humankind's social welfare, which would be revealed by the science Comte spearheaded, sociology. Comte's influence is prominent in Herbert Spencer of England and in Émile Durkheim of France, both establishing modern empirical, functionalist sociology. Influential in the latter 19th century, positivism was often linked to evolutionary theory, yet was eclipsed in the 20th century by neopositivism: logical positivism or logical empiricism.

Mill

J S Mill thought, unlike Comte, that scientific laws were susceptible to recall or revision. And Mill abstained from Comte's Religion of Humanity. Still, regarding experience as justifying enumerative induction by having shown, indeed, the uniformity of nature, Mill commended Comte's positivism. Mill noted that within the empirical sciences, the natural sciences had well surpassed the alleged Baconian model, too simplistic, whereas the human sciences, such as ethics and political philosophy, lagged behind even Baconian scrutiny of immediate experience and enumerative induction. Similarly, economists of the 19th century tended to pose explanations a priori, and to reject disconfirmation by posing circuitous routes of reasoning to maintain their a priori laws. In 1843, Mill's A System of Logic introduced Mill's methods: the five principles whereby causal laws can be discerned, to strengthen the empirical sciences as, indeed, the inductive sciences. For Mill, all explanations have the same logical structure, while society can be explained by natural laws.

Social

In the 17th century, England, with Isaac Newton and industrialization, led in science. In the 18th century, France led, particularly in chemistry, as by Antoine Lavoisier. During the 19th century, French chemists such as Antoine Béchamp and Louis Pasteur, who inaugurated biomedicine, were influential, yet Germany gained the lead in science by combining physics, physiology, pathology, medical bacteriology, and applied chemistry. In the 20th century, America led. These shifts influenced the roles that each country envisioned for science at the time.

Before Germany's lead in science, France's was upended by the first French Revolution, whose Reign of Terror beheaded Lavoisier, reputedly for adulterating tobacco, and led to Napoleon's wars. Amid such crisis and tumult, Auguste Comte inferred that society's natural condition is order, not change. As in Saint-Simon's industrial utopianism, Comte's vision, as later upheld by modernity, positioned science as the only objectively true knowledge and thus also as industrial society's secular spiritualism, whereby science would offer political and ethical guidance.

Positivism reached Britain well after Britain's own lead in science had ended. British positivism, as seen in the Victorian ethics of utilitarianism, for instance J S Mill's utilitarianism, and later in Herbert Spencer's social evolutionism, associated science with moral improvement but rejected science for political leadership. For Mill, all explanations held the same logical structure (thus, society could be explained by natural laws), yet Mill criticized "scientific politics". From its outset, then, sociology was pulled between moral reform and administrative policy.

Herbert Spencer helped popularize the word sociology in England, and compiled vast data aiming to infer general theory through empirical analysis. Spencer's 1850 book Social Statics shows Comtean as well as Victorian concern for social order. Yet whereas Comte's social science was a social physics, as it were, Spencer took biology—later by way of Darwinism, so called, which arrived in 1859—as the model of science, a model for social science to emulate. Spencer's functionalist, evolutionary account identified social structures as functions that adapt, such that analysis of them would explain social change.

In France, Comte's influence on sociology shows in Émile Durkheim, whose The Rules of Sociological Method, 1895, likewise posed natural science as sociology's model. For Durkheim, social phenomena are functions without psychologism, that is, operating without the consciousness of individuals, while sociology is antinaturalist, in that social facts differ from natural facts. Still, per Durkheim, social representations are real entities observable, without prior theory, by assessing raw data. Durkheim's sociology was thus realist and inductive, whereby theory would trail observations while scientific method proceeds from social facts to hypotheses to causal laws discovered inductively.

Logical

World War I erupted in 1914 and formally closed in 1919 with a treaty imposing reparations that British economist John Maynard Keynes immediately and vehemently predicted would crumble German society through hyperinflation, a prediction fulfilled by 1923. Via the solar eclipse of May 29, 1919, Einstein's gravitational theory, confirmed in its astonishing prediction, apparently overthrew Newton's gravitational theory. This revolution in science was bitterly resisted by many scientists, yet was completed by around 1930. Not yet dismissed as pseudoscience, race science flourished, overtaking medicine and public health, even in America, with excesses of negative eugenics. In the 1920s, some philosophers and scientists were appalled by the flaring nationalism, racism, and bigotry, yet perhaps no less by the countermovements toward metaphysics, intuitionism, and mysticism.

Also optimistic, some of the appalled German and Austrian intellectuals were inspired by breakthroughs in philosophy, mathematics, logic, and physics, and sought to lend humankind a transparent, universal language competent to vet statements for either logical truth or empirical truth, free of confusion and irrationality. In their envisioned, radical reform of Western philosophy to transform it into scientific philosophy, they studied exemplary cases of empirical science in their quest to turn philosophy into a special science, like biology and economics. The Vienna Circle, including Otto Neurath, was led by Moritz Schlick and had been converted to the ambitious program by its member Rudolf Carnap, whom the Berlin Circle's leader Hans Reichenbach had introduced to Schlick. Carl Hempel, who had studied under Reichenbach and would be a Vienna Circle alumnus, would later lead the movement from America, which, along with England, received many emigrating logical positivists during Hitler's regime.

The Berlin Circle and the Vienna Circle came to be called (or, soon, were often stereotyped as) the logical positivists or, in a milder connotation, the logical empiricists or, in any case, the neopositivists. Rejecting Kant's synthetic a priori, they asserted Hume's fork. Staking it at the analytic/synthetic gap, they sought to dissolve confusions by freeing language from "pseudostatements". And appropriating Ludwig Wittgenstein's verifiability criterion, many asserted that only statements logically or empirically verifiable are cognitively meaningful, whereas the rest are merely emotively meaningful. Further, they presumed a semantic gulf between observational terms and theoretical terms. Altogether, then, many withheld credence from science's claims about nature's unobservable aspects. Thus rejecting scientific realism, many embraced instrumentalism, whereby scientific theory is simply useful to predict human observations, while sometimes regarding talk of unobservables as either metaphorical or meaningless.

Pursuing both Bertrand Russell's program of logical atomism, which aimed to deconstruct language into supposedly elementary parts, and Russell's endeavor of logicism, which would reduce swaths of mathematics to symbolic logic, the neopositivists envisioned both everyday language and mathematics, and thus physics, too, sharing a logical syntax in symbolic logic. To gain cognitive meaningfulness, theoretical terms would be translated, via correspondence rules, into observational terms, thus revealing any theory's actually empirical claims, and then empirical operations would verify them within the observational structure, related to the theoretical structure through the logical syntax. Thus, a logical calculus could be operated to objectively verify the theory's falsity or truth. With this program, termed verificationism, logical positivists battled the Marburg school's neo-Kantianism, Husserlian phenomenology, and, as their very epitome of philosophical transgression, Heidegger's "existential hermeneutics", which Carnap accused of the most flagrant "pseudostatements".

Opposition

In a friendly spirit, the Vienna Circle's Otto Neurath nicknamed Karl Popper, a fellow philosopher in Vienna, "the Official Opposition". Popper asserted that any effort to verify a scientific theory, or even to inductively confirm a scientific law, is fundamentally misguided. He held that although exemplary science is not dogmatic, science inevitably relies on "prejudices". Popper accepted Hume's criticism, the problem of induction, as revealing verification to be impossible.

Popper accepted hypotheticodeductivism, which he sometimes termed deductivism, but restricted it to denying the consequent and thereby, refuting verificationism, reframed it as falsificationism. As to law or theory, Popper held confirmation of probable truth to be untenable: any number of confirmations is finite, whereas a universal law's predictions run to infinity, so empirical evidence yields a probability of truth approaching 0%. Popper even held that a scientific theory is better if its truth appears most improbable. Logical positivism, Popper asserted, "is defeated by its typically inductivist prejudice".
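The force of this argument can be illustrated with a simplified probabilistic model. The derivation below is an illustrative assumption, not Popper's own formalism. It supposes that a universal law $L$ entails predictions $e_1, e_2, \dots$, that $E = e_1 \wedge \cdots \wedge e_n$ is the finite evidence already observed, and that each of the next $N$ predictions holds, independently of the others given $E$, with probability at most $q < 1$. Because $L$ entails all of those predictions, $L$ can be no more probable than their conjunction:

$$
P(L \mid E) \;\le\; P\!\left(e_{n+1} \wedge \cdots \wedge e_{n+N} \mid E\right) \;=\; \prod_{i=n+1}^{n+N} P(e_i \mid E) \;\le\; q^{N} \;\xrightarrow[N \to \infty]{}\; 0 .
$$

The bound holds for every $N$, so under these assumptions $P(L \mid E) = 0$ however large the finite $n$ may be.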

Problems

Having highlighted Hume's problem of induction, John Maynard Keynes proposed logical probability as an answer to it, but then concluded that it did not quite succeed. Bertrand Russell regarded Keynes's book A Treatise on Probability as the best examination of induction, and held that, read together with Jean Nicod's Le Problème logique de l'induction and R. B. Braithwaite's review of it in the October 1925 issue of Mind, it provides "most of what is known about induction", although the "subject is technical and difficult, involving a good deal of mathematics".

Rather than validate enumerative induction, the futile task of showing it to be a deductive inference, some sought simply to vindicate it. Herbert Feigl and Hans Reichenbach, apparently independently, thus sought to show enumerative induction simply useful: either a "good" or the "best" method for the goal at hand, making predictions. Feigl posed it as a rule, thus neither a priori nor a posteriori but a fortiori. Reichenbach's treatment, similar to Pascal's wager, posed it as entailing greater predictive success than the alternative of not using it.
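Reichenbach's version is usually stated as the "straight rule": posit that the long-run (limiting) relative frequency of an outcome equals the relative frequency observed so far. The vindication runs roughly as follows: if the sequence has a limiting frequency, the straight rule's posits eventually stay arbitrarily close to it; if it has none, no method can find one. The minimal Python sketch below assumes a hypothetical periodic sequence whose limiting frequency is 1/3, and a hypothetical helper `straight_rule_posits`; it only illustrates the rule's convergence and is not Reichenbach's own formalism.

```python
from fractions import Fraction
from itertools import cycle, islice


def straight_rule_posits(outcomes):
    """Yield a straight-rule posit after each observation.

    Hypothetical helper: the posit for the limiting relative frequency is
    simply the relative frequency observed so far, successes / trials.
    """
    successes = 0
    for trials, outcome in enumerate(outcomes, start=1):
        if outcome:
            successes += 1
        yield Fraction(successes, trials)


# Hypothetical data: a periodic sequence whose limiting frequency of True is 1/3.
sequence = list(islice(cycle([True, False, False]), 300))
posits = list(straight_rule_posits(sequence))

print(posits[0], posits[1], posits[-1])  # 1 1/2 1/3
# After each full period (every third observation) the posit equals the limit exactly.
print(all(p == Fraction(1, 3) for p in posits[2::3]))  # True
```

Early posits can be far off (1, then 1/2), but because a limit exists, they settle on it. That settling is the kind of success the vindication argument claims for induction.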

In 1936, Rudolf Carnap switched the goal from the verification of scientific statements, clearly impossible, to their mere confirmation. Meanwhile, and similarly, the ardent logical positivist A. J. Ayer distinguished two types of verification, strong and weak: the strong is impossible, but the weak is attained when the statement's truth is probable. In this mission, Carnap sought to apply probability theory to formalize inductive logic by discovering an algorithm that would reveal "degree of confirmation". Carnap employed abundant logical and mathematical tools but never attained the goal: his formulations of inductive logic always held a universal law's degree of confirmation at zero.
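Why a universal law fares so badly can be illustrated with Laplace's rule of succession, which is commonly identified with the simplest case of Carnap's confirmation function c* (one property and its negation). Assuming a uniform prior over the unknown frequency θ, the probability that the next m instances all conform, given n conforming instances and no exceptions, is ∫θ^(n+m) dθ / ∫θ^n dθ = (n + 1)/(n + m + 1). A universal law over an unbounded domain makes unboundedly many predictions, so as m grows this probability tends to 0 however large n is. The Python sketch below, with hypothetical helper names and a hypothetical n, obtains the same value by chaining one-step predictions:

```python
from fractions import Fraction


def rule_of_succession(successes, trials):
    """Laplace's rule: P(next instance conforms | successes of trials conformed)."""
    return Fraction(successes + 1, trials + 2)


def prob_next_m_conform(n, m):
    """Probability that the next m instances all conform to the law, given n
    conforming instances and no counterexamples, obtained by chaining the
    rule of succession one prediction at a time."""
    p = Fraction(1)
    for k in range(n, n + m):
        # k instances observed so far, all of them conforming
        p *= rule_of_succession(k, k)
    return p


n = 1000  # hypothetical number of confirming instances observed so far
for m in (10, 1_000, 100_000):
    p = prob_next_m_conform(n, m)
    # The product telescopes to (n + 1) / (n + m + 1), which tends to 0 as m grows.
    assert p == Fraction(n + 1, n + m + 1)
    print(m, p, float(p))
```

Each single next prediction is highly probable, yet the conjunction of all future instances, which is what a universal law asserts, is not.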

Kurt Gödel's incompleteness theorem of 1931 made the logical positivists' logicism, or reduction of mathematics to logic, doubtful, and Alfred Tarski's undefinability theorem of 1934 then made it hopeless. Some, including the logical empiricist Carl Hempel, argued for its possibility anyway. After all, non-Euclidean geometry had shown that even geometry's truth via axioms rests on postulates, by definition unproved. Meanwhile, as to mere formalism, which converts everyday talk into logical forms but does not reduce it to logic, the neopositivists, though accepting hypothetico-deductivist theory development, upheld symbolic logic as the language in which to justify its results by verification or confirmation. But then Hempel's paradox of confirmation highlighted a difficulty: the hypothesized universal law All ravens are black is logically equivalent to All non-black things are not ravens, so formalizing confirmatory evidence for it counts a white shoe, a non-black non-raven, as a case confirming All ravens are black.
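In first-order notation, the paradox can be set out as below. The symbols are chosen here for illustration: R(x) for "x is a raven", B(x) for "x is black", and s for a particular white shoe. The derivation relies on two conditions usually stated in presentations of Hempel's paradox: that an object satisfying a generalization's antecedent and consequent confirms it (Nicod's criterion), and that logically equivalent hypotheses are confirmed by the same evidence (the equivalence condition).

```latex
\begin{aligned}
H_1 &:\; \forall x\,\bigl(R(x) \rightarrow B(x)\bigr) && \text{All ravens are black}\\
H_2 &:\; \forall x\,\bigl(\neg B(x) \rightarrow \neg R(x)\bigr) && \text{All non-black things are not ravens}\\
H_1 &\equiv H_2 && \text{(contraposition)}\\
E &:\; \neg B(s) \wedge \neg R(s) && \text{a white shoe } s\\
E \text{ confirms } H_2 &\;\Rightarrow\; E \text{ confirms } H_1 && \text{(Nicod's criterion, then the equivalence condition)}
\end{aligned}
```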

Early criticism

During the 1830s and 1840s, the Frenchman Auguste Comte and the Briton J. S. Mill were the leading philosophers of science. Debating in the 1840s, Mill claimed that science proceeds by inductivism, whereas William Whewell, also British, claimed that it proceeds by hypothetico-deductivism.

Whewell

William Whewell found the "inductive sciences" not so simple, but, amid the climate of esteem for inductivism, described "superinduction". Whewell proposed recognition of "the peculiar import of the term Induction", as "there is some Conception superinduced upon the facts", that is, "the Invention of a new Conception in every inductive inference". Rarely spotted by Whewell's predecessors, such mental inventions rapidly evade notice. Whewell explains,

"Although we bind together facts by superinducing upon them a new Conception, this Conception, once introduced and applied, is looked upon as inseparably connected with the facts, and necessarily implied in them. Having once had the phenomena bound together in their minds in virtue of the Conception, men can no longer easily restore them back to detached and incoherent condition in which they were before they were thus combined".

Once one observes the facts, "there is introduced some general conception, which is given, not by the phenomena, but by the mind". Whewell called this "colligation", uniting the facts with a "hypothesis", an explanation that is an "invention" and a "conjecture". In fact, one can colligate the facts via multiple, conflicting hypotheses. So the next step is testing the hypothesis. Whewell seeks, ultimately, four signs: coverage, abundance, consilience, and coherence.

First, the idea must explain all phenomena that prompted it. Second, it must predict more phenomena, too. Third, in consilience, it must be discovered to encompass phenomena of a different type. Fourth, the idea must nest in a theoretical system that, not framed all at once, developed over time and yet became simpler meanwhile. On these criteria, the colligating idea is naturally true, or probably so. Although he devoted several chapters to "methods of induction" and mentioned a "logic of induction", Whewell stressed that the colligating "superinduction" lacks rules and cannot be trained. Whewell also held that Bacon, not a strict inductivist, "held the balance, with no partial or feeble hand, between phenomena and ideas".

Peirce

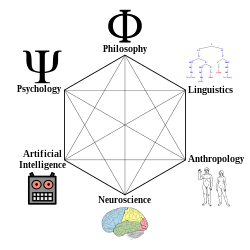

As Kant had noted in 1787, the theory of deductive inference had not progressed since antiquity. In the 1870s, C. S. Peirce and Gottlob Frege, unbeknownst to one another, revolutionized deductive logic through vast efforts identifying it with mathematical proof. An American who originated pragmatism (or, from 1905, pragmaticism, distinguished from more recent appropriations of his original term), Peirce recognized induction, too, but continually insisted on a third type of inference that he variously termed abduction, retroduction, hypothesis, or presumption. Later philosophers gave Peirce's abduction and its variants the synonym inference to the best explanation, or IBE. Many philosophers of science later espousing scientific realism have maintained that IBE is how scientists develop approximately true scientific theories about nature.

Inductivist fall

After the defeat of National Socialism in World War II in 1945, logical positivists lost their revolutionary zeal and led Western academia's philosophy departments to develop the niche of philosophy of science, researching such riddles of scientific method, theories, knowledge, and so on. The movement thus shifted into a milder variant, better termed logical empiricism but still a neopositivism, led principally by Rudolf Carnap, Hans Reichenbach, and Carl Hempel.

Amid increasingly apparent contradictions in neopositivism's central tenets (the verifiability principle, the analytic/synthetic division, and the observation/theory gap), Hempel in 1965 abandoned the program for a far wider conception of "degrees of significance". This signaled neopositivism's official demise. Neopositivism became mostly maligned, while credit for its fall has generally gone to W. V. O. Quine and to Thomas S. Kuhn, although its "murder" had been prematurely confessed to by Karl R. Popper in the 1930s.

Fuzziness

Willard Van Orman Quine's 1951 paper "Two Dogmas of Empiricism", explaining semantic holism, whereby any term's meaning draws from the speaker's beliefs about the whole world, cast Hume's fork, which posed the analytic/synthetic division as unbridgeable, as itself untenable. Among verificationism's greatest internal critics, Carl Hempel had recently concluded that the verifiability criterion, too, is untenable, as it would cast not only religious assertions and metaphysical statements, but even scientific laws of universal type as cognitively meaningless.

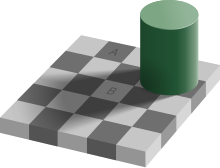

In 1958, Norwood Hanson's book Patterns of Discovery subverted the putative gap between observational terms and theoretical terms, a gap that would supposedly let direct observation permit neutral comparison of rival theories. Hanson explains that even direct observations, the scientific facts, are laden with theory, which guides the collection, sorting, prioritization, and interpretation of direct observations, and even shapes the researcher's ability to apprehend a phenomenon. Meanwhile, even as to general knowledge, Quine's thesis eroded foundationalism, which retreated to modesty.

Revolutions

Thomas Kuhn's The Structure of Scientific Revolutions (1962) was first published in the International Encyclopedia of Unified Science, a project begun by logical positivists, and somehow, at last, unified the empirical sciences by withdrawing the physics model and scrutinizing them via history and sociology. Lacking heavy use of mathematics and logic's formal language, an approach introduced by the Vienna Circle's Rudolf Carnap in the 1920s, Kuhn's book, powerful and persuasive, was written in natural language open to laypersons.

Structure explains science as puzzle-solving toward a vision projected by the "ruling class" of a scientific specialty's community, whose "unwritten rulebook" dictates acceptable problems and solutions, which together constitute normal science. The scientists reinterpret ambiguous data, discard anomalous data, and try to stuff nature into the box of their shared paradigm (a theoretical matrix or fundamental view of nature) until compatible data become scarce, anomalies accumulate, and scientific "crisis" ensues. Some newly trained young scientists defect to revolutionary science, which, simultaneously explaining both the normal data and the anomalous data, resolves the crisis by setting a new "exemplar" that contradicts normal science.

Kuhn explains that rival paradigms, having incompatible languages, are incommensurable. Trying to resolve conflict, scientists talk past each other, as even direct observations, for example that the Sun is "rising", get fundamentally conflicting interpretations. Some working scientists convert by a perspectival shift that, to their astonishment, snaps the new paradigm, suddenly obvious, into view. Others, never attaining such a gestalt switch, remain holdouts, committed for life to the old paradigm. One by one, holdouts die. Thus, the new exemplar, the new unwritten rulebook, settles in as the new normal science. The old theoretical matrix becomes so shrouded by the meanings of terms in the new theoretical matrix that even philosophers of science misread the old science.

And thus, Kuhn explains, a revolution in science is fulfilled. Kuhn's thesis critically destabilized confidence in foundationalism, which was generally, although erroneously, presumed to be one of logical empiricism's key tenets. As logical empiricism was extremely influential in the social sciences, Kuhn's ideas were rapidly adopted by scholars in disciplines well outside the natural sciences, the domain of Kuhn's analysis. Kuhn's thesis was in turn attacked, however, even by some of logical empiricism's opponents. In Structure's 1970 postscript, Kuhn asserted, mildly, that science at least lacks an algorithm. On that point, even Kuhn's critics agreed. Reinforcing Quine's assault on logical empiricism, Kuhn ushered American and English academia into postpositivism or postempiricism.

Critical rationalism

Karl Popper's 1959 book The Logic of Scientific Discovery, originally published in German in 1934, reached English-language readers at a time when logical empiricism, with its ancestrally verificationist program, was so dominant that a book reviewer mistook it for a new version of verificationism. Instead, Popper's philosophy, later called critical rationalism, fundamentally refuted verificationism. Popper's demarcation principle of falsifiability grants a theory the status of scientific, simply meaning empirically testable, not the status of meaningful, which Popper did not aim to arbitrate. Popper found no scientific theory either verifiable or, as in Carnap's "liberalization of empiricism", confirmable, and found unscientific, metaphysical, ethical, and aesthetic statements often rich in meaning while also underpinning or fueling science as the origin of scientific theories. The only particularly relevant confirmations are those of risky predictions, such as ones that conventional expectations hold will fail.

Postpositivism

In 1967, historian of philosophy John Passmore concluded, "Logical positivism is dead, or as dead as a philosophical movement ever becomes". Logical positivism (or logical empiricism, or verificationism, or, as the overarching term for the whole movement, neopositivism) soon became philosophy of science's bogeyman.

Kuhn's influential thesis was soon attacked for portraying science as irrational, a cultural relativism similar to religious experience. Postpositivism's poster child became Popper's view of human knowledge as hypothetical, continually growing, always tentative, and open to criticism and revision. But then even Popper became unpopular, as allegedly unrealistic.

Problem of induction

In 1945, Bertrand Russell had proposed enumerative induction as an "independent logical principle", one "incapable of being inferred either from experience or from other logical principles, and that without this principle, science is impossible". And yet in 1963, Karl Popper declared, "Induction, i.e. inference based on many observations, is a myth. It is neither a psychological fact, nor a fact of ordinary life, nor one of scientific procedure". Popper's 1972 book Objective Knowledge opens, "I think I have solved a major philosophical problem: the problem of induction".

Popper's schema of theory evolution is a superficially stepwise but otherwise cyclical process: Problem₁ → Tentative Solution → Critical Test → Error Elimination → Problem₂. The tentative solution is improvised, an imaginative leap unguided by inductive rules, and the resulting universal law is deductive, an entailed consequence of all included explanatory considerations. Popper calls enumerative induction, then, "a kind of optical illusion" that shrouds steps of conjecture and refutation during a problem shift. Still, debate continued over the problem of induction, or whether it even poses a problem to science.

Some have argued that, although inductive inference is often obscured by language (as in news reports that experiments have proved a substance to be safe) and although enumerative induction ought to be tempered by proper clarification, inductive inference is used liberally in science, science requires it, and Popper is obviously wrong. There are, more accurately, strong arguments on both sides. Enumerative induction obviously occurs as a summary conclusion, but its literal operation is unclear, as it may, as Popper explains, reflect deductive inference from an underlying, unstated explanation of the observations.

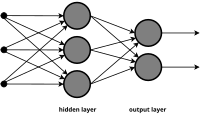

In a now-classic 1965 paper, Gilbert Harman explains enumerative induction as a masked effect of what C. S. Peirce had termed abduction, that is, inference to the best explanation, or IBE. Philosophers of science who espouse scientific realism have usually maintained that IBE is how scientists develop approximately true scientific theories about the putative mind-independent world. Thus, calling Popper obviously wrong, on the grounds that scientists use induction in an effort to "prove" their theories true, reflects conflicting semantics. By now, enumerative induction has been shown to exist, but is found rarely, as in machine-learning programs in artificial intelligence. Likewise, machines can be programmed to operate on probabilistic inference of near certainty. Yet sheer enumerative induction is overwhelmingly absent from science conducted by humans. Although much talked of, IBE proceeds by humans' imagination and creativity without rules of inference, and IBE's discussants provide nothing resembling such rules.

Logical bogeymen

Popperian falsificationism, too, became widely criticized and soon unpopular among philosophers of science. Still, Popper has been the only philosopher of science often praised by scientists. On the other hand, likened to 19th-century economists who took circuitous, protracted measures to deflect falsification of their own preconceived principles, the verificationists, that is, the logical positivists, became identified as pillars of scientism, allegedly asserting strict inductivism, as well as foundationalism, to ground all empirical sciences in direct sensory experience. Rehashing neopositivism's alleged failures became a popular tactic of subsequent philosophers before launching arguments for their own views, often built atop misrepresentations and outright falsehoods about neopositivism. Not seeking to overhaul and regulate the empirical sciences or their practices, the neopositivists had sought to analyze and understand them, and thereupon overhaul philosophy to scientifically organize human knowledge.

Logical empiricists indeed conceived the unity of science to network all special sciences and to reduce the special sciences' laws, by stating boundary conditions, supplying bridge laws, and heeding the deductive-nomological model, to the fundamental science, that is, fundamental physics, at least in principle. And Rudolf Carnap sought to formalize inductive logic to confirm universal laws through probability as "degree of confirmation". Yet the Vienna Circle had pioneered nonfoundationalism, a legacy especially of its member Otto Neurath, whose coherentism, the main alternative to foundationalism, likened science to a boat that scientists must rebuild at sea without ever touching shore. And neopositivists did not seek rules of inductive logic to regulate scientific discovery or theorizing, but to verify or confirm laws and theories once scientists pose them. Practicing what Popper had preached, conjectures and refutations, neopositivism simply ran its course. So its chief rival, Popper, initially a contentious misfit, emerged from interwar Vienna vindicated.

Scientific anarchy

In the early 1950s, studying philosophy of quantum mechanics under Popper at the London School of Economics, Paul Feyerabend found falsificationism to be not a breakthrough but rather obvious, and thus took the controversy over it to suggest, instead, an endemic poverty in the academic discipline of philosophy of science. And yet, witnessing there Popper's attacks on inductivism ("the idea that theories can be derived from, or established on the basis of, facts"), Feyerabend was impressed by a talk Popper gave at the British Society for the Philosophy of Science. Popper showed that higher-level laws, far from being reducible to supposedly more fundamental laws, often conflict with them.

Popper's prime example, already made by the French classical physicist and philosopher of science Pierre Duhem decades earlier, was Kepler's laws of planetary motion, long famed to be, and yet not actually, reducible to Newton's law of universal gravitation. For Feyerabend, the sham of inductivism was pivotal. Feyerabend investigated, eventually concluding that even in the natural sciences, the unifying method is Anything goes: often rhetoric, circular reasoning, propaganda, deception, and subterfuge, that is, methodological lawlessness, scientific anarchy. In answer to persistent claims that faith in induction is a necessary precondition of reason, Feyerabend's 1987 book sardonically bids Farewell to Reason.

Research programmes

Imre Lakatos deemed Popper's falsificationism neither practiced by scientists nor even realistically practical, but held Kuhn's paradigms of science to be more monopolistic than actual. Lakatos found multiple, vying research programmes to coexist, taking turns at leading in scientific progress.

A research programme stakes a hard core of principles, such as the Cartesian rule No action at a distance, that resists falsification, which is deflected by a protective belt of malleable theories. These theories advance the hard core via theoretical progress, spreading it into new empirical territories.

Corroborating the new theoretical claims is empirical progress, making the research programme progressive—or else it degenerates. But even an eclipsed research programme may linger, Lakatos finds, and can resume progress by later revisions to its protective belt.

In any case, Lakatos concluded inductivism to be rather farcical and never actually practiced in the history of science. Lakatos alleged that Newton had fallaciously posed his own research programme as inductivist to legitimize it publicly.

Research traditions

Lakatos's putative methodology of scientific research programmes was criticized by sociologists of science and by some philosophers of science, too, as being too idealized and omitting scientific communities' interplay with the wider society's social configurations and dynamics. Philosopher of science Larry Laudan argued that the stable elements are not research programmes but research traditions.

Inductivist heir

By the 21st century's turn, Bayesianism had become the heir of inductivism.