Early ideas about the figure of the Earth held the Earth to be flat (see flat Earth), and the heavens a physical dome spanning over it. Two early arguments for a spherical Earth were that lunar eclipses were seen as circular shadows which could only be caused by a spherical Earth, and that Polaris is seen lower in the sky as one travels south.

Hellenic world

Initial development from a diversity of views

Though the earliest written mention of a spherical Earth comes from ancient Greek sources, there is no account of how the sphericity of Earth was discovered, or if it was initially simply a guess. A plausible explanation given by the historian Otto E. Neugebauer is that it was "the experience of travellers that suggested such an explanation for the variation in the observable altitude of the pole and the change in the area of circumpolar stars, a change that was quite drastic between Greek settlements" around the eastern Mediterranean Sea, particularly those between the Nile Delta and Crimea.

Another possible explanation can be traced back to earlier Phoenician sailors. The first circumnavigation of Africa is described as being undertaken by Phoenician explorers employed by Egyptian pharaoh Necho II c. 610–595 BC. In The Histories, written 431–425 BC, Herodotus cast doubt on a report of the Sun observed shining from the north. He stated that the phenomenon was observed by Phoenician explorers during their circumnavigation of Africa (The Histories, 4.42) who claimed to have had the Sun on their right when circumnavigating in a clockwise direction. To modern historians, these details confirm the truth of the Phoenicians' report. The historian Dmitri Panchenko hypothesizes that it was the Phoenician circumnavigation of Africa that inspired the theory of a spherical Earth, the earliest mention of which was made by the philosopher Parmenides in the 5th century BC. However, nothing certain about their knowledge of geography and navigation has survived; therefore, later researchers have no evidence that they conceived of Earth as spherical.

Speculation and theorizing ranged from the flat disc advocated by Homer to the spherical body reportedly postulated by Pythagoras. Anaximenes, an early Greek philosopher, believed strongly that the Earth was rectangular in shape. Some early Greek philosophers alluded to a spherical Earth, though with some ambiguity. Pythagoras (6th century BC) was among those said to have originated the idea, but this might reflect the ancient Greek practice of ascribing every discovery to one or another of their ancient wise men. Pythagoras was a mathematician, and he supposedly reasoned that the gods would create a perfect figure, which to him was a sphere. Some idea of the sphericity of Earth seems to have been known to both Parmenides and Empedocles in the 5th century BC. Although the idea cannot reliably be ascribed to Pythagoras, it might nevertheless have been formulated in the Pythagorean school in the 5th century BC, though some disagree. After the 5th century BC, no Greek writer of repute thought the world was anything but round. The Pythagorean idea was supported later by Aristotle. Efforts commenced to determine the size of the sphere.

Plato

Plato (427–347 BC) travelled to southern Italy to study Pythagorean mathematics. When he returned to Athens and established his school, Plato also taught his students that Earth was a sphere, though he offered no justifications. "My conviction is that the Earth is a round body in the centre of the heavens, and therefore has no need of air or of any similar force to be a support." If man could soar high above the clouds, Earth would resemble "one of those balls which have leather coverings in twelve pieces, and is decked with various colours, of which the colours used by painters on Earth are in a manner samples." In Timaeus, his one work that was available throughout the Middle Ages in Latin, he wrote that the Creator "made the world in the form of a globe, round as from a lathe, having its extremes in every direction equidistant from the centre, the most perfect and the most like itself of all figures", though the word "world" here refers to the heavens.

Aristotle

Aristotle (384–322 BC) was Plato's prize student and "the mind of the school". Aristotle observed "there are stars seen in Egypt and [...] Cyprus which are not seen in the northerly regions". Since this could only happen on a curved surface, he too believed Earth was a sphere "of no great size, for otherwise the effect of so slight a change of place would not be quickly apparent".

Aristotle reported that mathematicians had calculated the circumference of the Earth (which is actually slightly over 40,000 km) to be 400,000 stadia (between 62,800 and 74,000 km or 39,250 and 46,250 mi).

Aristotle provided physical and observational arguments supporting the idea of a spherical Earth:

- Every portion of Earth tends toward the centre until by compression and convergence they form a sphere.

- Travelers going south see southern constellations rise higher above the horizon.

- The shadow of Earth on the Moon during a lunar eclipse is round.

The concepts of symmetry, equilibrium and cyclic repetition permeated Aristotle's work. In his Meteorology he divided the world into five climatic zones: two temperate areas separated by a torrid zone near the equator, and two cold inhospitable regions, "one near our upper or northern pole and the other near the [...] southern pole", both impenetrable and girdled with ice. Although no humans could survive in the frigid zones, inhabitants in the southern temperate regions could exist.

Aristotle's theory of natural place relied on a spherical Earth to explain why heavy things go down (toward what Aristotle believed was the center of the Universe), and things like air and fire go up. In this geocentric model, the structure of the universe was believed to be a series of perfect spheres. The Sun, Moon, planets and fixed stars were believed to move on celestial spheres around a stationary Earth.

Though Aristotle's theory of physics survived in the Christian world for many centuries, the heliocentric model was eventually shown to be a more correct explanation of the Solar System than the geocentric model, and atomic theory was shown to be a more correct explanation of the nature of matter than classical elements like earth, water, air, fire, and aether.

Archimedes

Archimedes gave an upper bound for the circumference of the Earth of 3,000,000 stadia (483,000 km or 300,000 mi) using the Hellenic stadion, which scholars generally take to be 185 meters or 1⁄10 of a geographical mile.

In proposition 2 of the First Book of his treatise "On floating bodies", Archimedes demonstrates that "The surface of any fluid at rest is the surface of a sphere whose centre is the same as that of the Earth". Subsequently, in propositions 8 and 9 of the same work, he assumes the result of proposition 2 that Earth is a sphere and that the surface of a fluid on it is a sphere centered on the center of Earth.

Eratosthenes

Eratosthenes (276–194 BC), a Hellenistic astronomer from what is now Cyrene, Libya working in Alexandria, Egypt, estimated Earth's circumference around 240 BC, computing a value of 252,000 stades. The length that Eratosthenes intended for a "stade" is not known, but his figure only has an error of around one to fifteen percent. Assuming a value for the stadion between 155 and 160 metres, the error is between −2.4% and +0.8%. Eratosthenes described his technique in a book entitled On the measure of the Earth, which has not been preserved. Eratosthenes could only measure the circumference of Earth by assuming that the distance to the Sun is so great that the rays of sunlight are practically parallel.
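The error range quoted for a stadion of 155 to 160 metres can be checked with a short calculation. The sketch below is only illustrative: it assumes a modern meridional circumference of about 40,008 km as the reference (the text does not say which reference value was used), and the function name is invented for this example.

```python
# Reference value is an assumption: modern circumference along a meridian, in km.
MERIDIONAL_CIRCUMFERENCE_KM = 40_008.0


def percent_error(stadia: float, stadion_m: float,
                  reference_km: float = MERIDIONAL_CIRCUMFERENCE_KM) -> float:
    """Signed error, in percent, of a circumference given in stadia."""
    estimate_km = stadia * stadion_m / 1000.0
    return 100.0 * (estimate_km - reference_km) / reference_km


# Eratosthenes' 252,000 stades under the two stadion lengths from the text.
for stadion_m in (155.0, 160.0):
    print(f"{stadion_m:.0f} m stadion: {percent_error(252_000, stadion_m):+.1f}%")
```

Under that assumed reference, the two cases come out at about −2.4% and +0.8%, matching the range given above.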

Eratosthenes' method to calculate the Earth's circumference has been lost; what has been preserved is the simplified version described by Cleomedes to popularise the discovery. Cleomedes invites his reader to consider two Egyptian cities, Alexandria and Syene (modern Aswan):

- Cleomedes assumes that the distance between Syene and Alexandria was 5,000 stadia (a figure that was checked yearly by professional bematists, mensores regii);

- he assumes the simplified (but false) hypothesis that Syene was precisely on the Tropic of Cancer, saying that at local noon on the summer solstice the Sun was directly overhead;

- he assumes the simplified (but false) hypothesis that Syene and Alexandria are on the same meridian.

Under the previous assumptions, says Cleomedes, you can measure the Sun's angle of elevation at noon of the summer solstice in Alexandria, by using a vertical rod (a gnomon) of known length and measuring the length of its shadow on the ground; it is then possible to calculate the angle of the Sun's rays, which he claims to be about 7.2°, or 1/50th the circumference of a circle. Taking the Earth as spherical, the Earth's circumference would be fifty times the distance between Alexandria and Syene, that is 250,000 stadia. Since 1 Egyptian stadium is equal to 157.5 metres, the result is 39,375 km, which is 1.4% less than the real number, 39,941 km.
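The arithmetic of Cleomedes' simplified account can be followed in a few lines. This is a minimal sketch under the simplifying assumptions listed above (Syene on the tropic, both cities on one meridian, parallel sunlight); the function names are hypothetical and the gnomon helper only illustrates how a shadow length would be turned into an angle.

```python
import math
from fractions import Fraction


def zenith_angle_deg(gnomon_height: float, shadow_length: float) -> float:
    """Angle of the Sun's rays from the vertical, from a vertical rod and its shadow."""
    return math.degrees(math.atan2(shadow_length, gnomon_height))


def circumference(arc_length, fraction_of_circle):
    """Full circumference if arc_length spans the given fraction of a circle."""
    return arc_length / fraction_of_circle


# Figures from Cleomedes' account: 5,000 stadia and 1/50 of a circle.
stadia = circumference(5000, Fraction(1, 50))
km = float(stadia) * 157.5 / 1000.0  # 157.5 m per stadion, as in the text
print(stadia, km)
```

Using an exact fraction for the angle keeps the stadia figure free of rounding, so the only approximations left are the historical ones: the distance, the angle, and the length of the stadion.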

Eratosthenes' method was actually more complicated, as stated by the same Cleomedes, whose purpose was to present a simplified version of the one described in Eratosthenes' book. The method was based on several surveying trips conducted by professional bematists, whose job was to precisely measure the extent of the territory of Egypt for agricultural and taxation-related purposes. Furthermore, the fact that Eratosthenes' measure corresponds precisely to 252,000 stadia might be intentional, since it is a number that can be divided by all natural numbers from 1 to 10: some historians believe that Eratosthenes changed from the 250,000 value written by Cleomedes to this new value to simplify calculations; other historians of science, on the other side, believe that Eratosthenes introduced a new length unit based on the length of the meridian, as stated by Pliny, who writes about the stadion "according to Eratosthenes' ratio".

1,700 years after Eratosthenes, Christopher Columbus studied Eratosthenes' findings before sailing west for the Indies. However, ultimately he rejected Eratosthenes in favour of other maps and arguments that interpreted Earth's circumference to be a third smaller than it really is. If, instead, Columbus had accepted Eratosthenes' findings, he might never have gone west, since he did not have the supplies or funding needed for the much longer eight-thousand-plus mile voyage.

Seleucus of Seleucia

Seleucus of Seleucia (c. 190 BC), who lived in the city of Seleucia in Mesopotamia, wrote that Earth is spherical (and actually orbits the Sun, influenced by the heliocentric theory of Aristarchus of Samos).

Posidonius

A parallel later ancient measurement of the size of the Earth was made by another Greek scholar, Posidonius (c. 135 – 51 BC), using a method similar to that of Eratosthenes. Instead of observing the Sun, he noted that the star Canopus was hidden from view in most parts of Greece but that it just grazed the horizon at Rhodes. Posidonius is supposed to have measured the angular elevation of Canopus at Alexandria and determined that the angle was 1/48 of a circle. He used a distance from Alexandria to Rhodes of 5000 stadia, and so he computed the Earth's circumference in stadia as 48 × 5000 = 240,000. Some scholars see these results as luckily semi-accurate due to cancellation of errors. But since the Canopus observations are both mistaken by over a degree, the "experiment" may be little more than a recycling of Eratosthenes' numbers, while altering 1/50 to the correct 1/48 of a circle. Later, either he or a follower appears to have altered the base distance to agree with Eratosthenes' Alexandria-to-Rhodes figure of 3750 stadia, since Posidonius' final circumference was 180,000 stadia, which equals 48 × 3750 stadia. The 180,000 stadia circumference of Posidonius is suspiciously close to that which results from another method of measuring the Earth, by timing ocean sunsets from different heights, a method which is inaccurate due to horizontal atmospheric refraction. Posidonius furthermore expressed the distance of the Sun in Earth radii.

The above-mentioned larger and smaller sizes of the Earth were those used by later Roman author Claudius Ptolemy at different times: 252,000 stadia in his Almagest and 180,000 stadia in his later Geographia. His mid-career conversion resulted in the latter work's systematic exaggeration of degree longitudes in the Mediterranean by a factor close to the ratio of the two seriously differing sizes discussed here, which indicates that the conventional size of the Earth was what changed, not the stadion.
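The figures attributed to Posidonius, and the ratio between the larger and smaller Earth sizes, follow from simple multiplication. The sketch below only restates numbers given in the text; the function name is invented for illustration.

```python
def circumference_stadia(parts_of_circle: int, base_distance_stadia: int) -> int:
    """Circumference when the base distance spans 1/parts_of_circle of a circle."""
    return parts_of_circle * base_distance_stadia


first = circumference_stadia(48, 5000)    # Alexandria to Rhodes taken as 5000 stadia
revised = circumference_stadia(48, 3750)  # base distance altered to 3750 stadia

# Ratio of the larger (252,000 stadia) to the smaller Earth size.
ratio = 252_000 / revised
print(first, revised, ratio)
```

The ratio comes out at 1.4, which is the scale of distortion one would expect in longitudes if distances measured on the larger Earth were converted to degrees on the smaller one.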

Ancient India

While the textual evidence has not survived, the precision of the constants used in pre-Greek Vedanga models, and the model's accuracy in predicting the Moon and Sun's motion for Vedic rituals, probably came from direct astronomical observations. The cosmographic theories and assumptions in ancient India likely developed independently and in parallel, but these were influenced by some unknown quantitative Greek astronomy text in the medieval era.

Greek ethnographer Megasthenes, c. 300 BC, has been interpreted as stating that the contemporary Brahmans believed in a spherical Earth as the center of the universe. With the spread of Hellenistic culture in the east, Hellenistic astronomy filtered eastwards to ancient India where its profound influence became apparent in the early centuries AD. The Greek concept of an Earth surrounded by the spheres of the planets and that of the fixed stars, vehemently supported by astronomers like Varāhamihira and Brahmagupta, strengthened the astronomical principles. Some ideas were found possible to preserve, although in altered form.

The Indian astronomer and mathematician Aryabhata (476–550 CE) was a pioneer of mathematical astronomy on the subcontinent. In his Sanskrit magnum opus, Āryabhaṭīya, he describes the Earth as spherical and says that it rotates on its axis. Aryabhatiya is divided into four sections: Gitika, Ganitha ("mathematics"), Kalakriya ("reckoning of time") and Gola ("celestial sphere"). The discovery that the Earth rotates on its own axis from west to east is described in Aryabhatiya (Gitika 3,6; Kalakriya 5; Gola 9,10). For example, he explained the apparent motion of heavenly bodies as only an illusion (Gola 9), with the following simile:

- Just as a passenger in a boat moving downstream sees the stationary (trees on the river banks) as traversing upstream, so does an observer on earth see the fixed stars as moving towards the west at exactly the same speed (at which the earth moves from west to east.)

Aryabhatiya also estimates the circumference of the Earth. He gives this as 4967 yojanas and its diameter as 1581 1⁄24 yojanas. The length of a yojana varies considerably between sources; assuming a yojana to be 8 km (4.97097 miles) this gives a circumference of 39,736 kilometres (24,691 mi), close to the current equatorial value of 40,075 km (24,901 mi).
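The two figures can be cross-checked against each other and against the modern value. The sketch below is illustrative only: the 8 km yojana is the assumption already stated in the text, and the variable names are made up for the example.

```python
from fractions import Fraction

circumference_yojanas = Fraction(4967)
diameter_yojanas = 1581 + Fraction(1, 24)

# Implied ratio of circumference to diameter (an approximation of pi).
implied_pi = float(circumference_yojanas / diameter_yojanas)

KM_PER_YOJANA = 8  # assumption stated in the text; sources vary considerably
circumference_km = float(circumference_yojanas * KM_PER_YOJANA)

EQUATORIAL_KM = 40_075  # modern equatorial value quoted in the text
error_percent = 100 * (circumference_km - EQUATORIAL_KM) / EQUATORIAL_KM

print(round(implied_pi, 4), circumference_km, round(error_percent, 2))
```

The implied ratio rounds to 3.1416, and with an 8 km yojana the circumference falls a little under one percent short of the equatorial value. A different yojana length would shift the result proportionally.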

Roman Empire

The idea of a spherical Earth slowly spread across the globe, and ultimately became the adopted view in all major astronomical traditions.

In the West, the idea came to the Romans through the lengthy process of cross-fertilization with Hellenistic civilization. Many Roman authors such as Cicero and Pliny refer in their works to the rotundity of Earth as a matter of course. Pliny also considered the possibility of an imperfect sphere "shaped like a pinecone".

Strabo

It has been suggested that seafarers probably provided the first observational evidence that Earth was not flat, based on observations of the horizon. This argument was put forward by the geographer Strabo (c. 64 BC – 24 AD), who suggested that the spherical shape of Earth was probably known to seafarers around the Mediterranean Sea since at least the time of Homer, citing a line from the Odyssey as indicating that the poet Homer knew of this as early as the 7th or 8th century BC. Strabo cited various phenomena observed at sea as suggesting that Earth was spherical. He observed that elevated lights or areas of land were visible to sailors at greater distances than those less elevated, and stated that the curvature of the sea was obviously responsible for this.
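Strabo's observation about elevated lights has a simple geometric reading on a sphere. The following sketch is a modern illustration, not anything found in Strabo: it assumes a mean Earth radius of 6,371 km, ignores atmospheric refraction, and uses hypothetical heights and function names.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # modern mean radius (assumption; not known to Strabo)


def horizon_distance_m(height_m: float, radius_m: float = EARTH_RADIUS_M) -> float:
    """Straight-line distance from an eye at height_m to the geometric horizon."""
    return math.sqrt(2.0 * radius_m * height_m + height_m ** 2)


def max_sighting_distance_m(observer_height_m: float, light_height_m: float) -> float:
    """Greatest distance at which a light can just be seen over the curve of the sea."""
    return horizon_distance_m(observer_height_m) + horizon_distance_m(light_height_m)


# Hypothetical heights: a lookout 10 m above the water, lights at 20 m and 80 m.
for light_height in (20.0, 80.0):
    km = max_sighting_distance_m(10.0, light_height) / 1000.0
    print(f"light at {light_height:.0f} m: visible out to about {km:.0f} km")
```

On a flat sea the height of the light would make no difference to whether it can be seen; on a sphere the sighting distance grows roughly with the square root of the height, which is the pattern Strabo describes.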

Claudius Ptolemy

Claudius Ptolemy (90–168 AD) lived in Alexandria, the centre of scholarship in the 2nd century. In the Almagest, which remained the standard work of astronomy for 1,400 years, he advanced many arguments for the spherical nature of Earth. Among them was the observation that when a ship is sailing towards mountains, observers note these seem to rise from the sea, indicating that they were hidden by the curved surface of the sea. He also gives separate arguments that Earth is curved north-south and that it is curved east-west.

He compiled an eight-volume Geographia covering what was known about Earth. The first part of the Geographia is a discussion of the data and of the methods he used. As with the model of the Solar System in the Almagest, Ptolemy put all this information into a grand scheme. He assigned coordinates to all the places and geographic features he knew, in a grid that spanned the globe (although most of this has been lost). Latitude was measured from the equator, as it is today, but Ptolemy preferred to express it as the length of the longest day rather than degrees of arc (the length of the midsummer day increases from 12h to 24h as you go from the equator to the polar circle). He put the meridian of 0 longitude at the most western land he knew, the Canary Islands.
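The link between latitude and the length of the longest day can be illustrated with the standard modern sunrise formula. This is a sketch under stated assumptions, not Ptolemy's own procedure: it uses a modern obliquity of 23.44°, ignores refraction and the size of the solar disc, and the function name and sample latitudes are chosen only for illustration.

```python
import math

OBLIQUITY_DEG = 23.44  # modern value for the tilt of Earth's axis (assumption)


def longest_day_hours(latitude_deg: float, obliquity_deg: float = OBLIQUITY_DEG) -> float:
    """Length of the midsummer day at a northern latitude, ignoring refraction."""
    x = -math.tan(math.radians(latitude_deg)) * math.tan(math.radians(obliquity_deg))
    x = max(-1.0, min(1.0, x))  # clamp: at and beyond the polar circle the Sun does not set
    half_day_angle = math.acos(x)  # hour angle of sunset, in radians
    return 24.0 * half_day_angle / math.pi


# Sample latitudes: the equator, two mid-latitudes, and the polar circle.
for lat in (0.0, 30.0, 45.0, 90.0 - OBLIQUITY_DEG):
    print(f"latitude {lat:5.2f} deg: longest day {longest_day_hours(lat):5.2f} h")
```

The function returns 12 hours at the equator and 24 hours at the polar circle, with the value rising steadily in between, so a longest-day figure identifies a latitude just as a number of degrees does.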

Geographia indicated the countries of "Serica" and "Sinae" (China) at the extreme right, beyond the island of "Taprobane" (Sri Lanka, oversized) and the "Aurea Chersonesus" (Southeast Asian peninsula).

Ptolemy also devised and provided instructions on how to make maps both of the whole inhabited world (oikoumenè) and of the Roman provinces. In the second part of the Geographia, he provided the necessary topographic lists, and captions for the maps. His oikoumenè spanned 180 degrees of longitude from the Canary Islands in the Atlantic Ocean to China, and about 81 degrees of latitude from the Arctic to the East Indies and deep into Africa. Ptolemy was well aware that he knew about only a quarter of the globe.

Late Antiquity

Knowledge of the spherical shape of Earth was received in scholarship of Late Antiquity as a matter of course, in both Neoplatonism and Early Christianity. Calcidius's fourth-century Latin commentary on and translation of Plato's Timaeus, which was one of the few examples of Greek scientific thought that was known in the Early Middle Ages in Western Europe, discussed Hipparchus's use of the geometrical circumstances of eclipses in On Sizes and Distances to compute the relative diameters of the Sun, Earth, and Moon.

Theological doubt informed by the flat Earth model implied in the Hebrew Bible inspired some early Christian scholars such as Lactantius, John Chrysostom and Athanasius of Alexandria, but this remained an eccentric current. Learned Christian authors such as Basil of Caesarea, Ambrose and Augustine of Hippo were clearly aware of the sphericity of Earth. "Flat Earthism" lingered longest in Syriac Christianity, which tradition laid greater importance on a literalist interpretation of the Old Testament. Authors from that tradition, such as Cosmas Indicopleustes, presented Earth as flat as late as in the 6th century. This last remnant of the ancient model of the cosmos disappeared during the 7th century. From the 8th century and the beginning medieval period, "no cosmographer worthy of note has called into question the sphericity of the Earth".

Such widely read encyclopedists as Macrobius and Martianus Capella (both 5th century AD) discussed the circumference of the sphere of the Earth, its central position in the universe, the difference of the seasons in northern and southern hemispheres, and many other geographical details. In his commentary on Cicero's Dream of Scipio, Macrobius described the Earth as a globe of insignificant size in comparison to the remainder of the cosmos.

Islamic world

Islamic astronomy was developed on the basis of a spherical Earth inherited from Hellenistic astronomy. The Islamic theoretical framework largely relied on the fundamental contributions of Aristotle (De caelo) and Ptolemy (Almagest), both of whom worked from the premise that Earth was spherical and at the centre of the universe (geocentric model).

Early Islamic scholars recognized Earth's sphericity, leading Muslim mathematicians to develop spherical trigonometry in order to further mensuration and to calculate the distance and direction from any given point on Earth to Mecca. This determined the Qibla, or Muslim direction of prayer.

Al-Ma'mun

Around 830 CE, Caliph al-Ma'mun commissioned a group of Muslim astronomers and geographers to measure the distance from Tadmur (Palmyra) to Raqqa in modern Syria. They determined the length of one degree of latitude by using a rope to measure the distance travelled due north or south (a meridian arc) on flat desert land until they reached a place where the altitude of the north celestial pole had changed by one degree.

Al-Ma'mun's arc measurement result is described in different sources as 66 2⁄3 miles, 56.5 miles, and 56 miles. The figure Alfraganus used based on these measurements was 56 2⁄3 miles, giving an Earth circumference of 20,400 miles (32,830 km). A figure of 66 2⁄3 miles results in a calculated planetary circumference of 24,000 miles (39,000 km).

Another estimate given by his astronomers was 56 2⁄3 Arabic miles (111.8 km) per degree, which corresponds to a circumference of 40,248 km, very close to the modern values of 111.3 km per degree and 40,068 km circumference, respectively.
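Each of these circumferences is the length of one degree multiplied by 360. A minimal sketch of that step, using only the figures quoted above (the function name is hypothetical):

```python
from fractions import Fraction


def circumference_from_degree(length_per_degree):
    """A full circle is 360 degrees, so scale the one-degree arc by 360."""
    return 360 * length_per_degree


miles_alfraganus = circumference_from_degree(Fraction(170, 3))  # 56 2/3 miles per degree
miles_larger = circumference_from_degree(Fraction(200, 3))      # 66 2/3 miles per degree
km_arabic_mile = circumference_from_degree(111.8)               # km per degree

print(miles_alfraganus, miles_larger, km_arabic_mile)
```

The spread between the results comes entirely from the length assigned to one degree and to the mile, not from the method, which is why the choice of unit matters so much when these figures are compared.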

Ibn Hazm

Andalusian polymath Ibn Hazm gave a concise proof of Earth's sphericity: at any given time, there is a point on the Earth where the Sun is directly overhead (which moves throughout the day and throughout the year).

Al-Farghānī

Al-Farghānī (Latinized as Alfraganus) was a Persian astronomer of the 9th century who was commissioned by Al-Ma'mun and involved in measuring the diameter of Earth. His estimate given above for a degree (56 2⁄3 Arabic miles) was much more accurate than the 60 2⁄3 Roman miles (89.7 km) given by Ptolemy. Christopher Columbus uncritically used Alfraganus's figure as if it were in Roman miles instead of in Arabic miles, in order to prove a smaller size of Earth than that propounded by Ptolemy.

Biruni

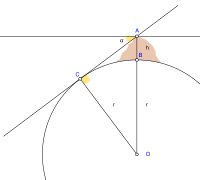

Abu Rayhan Biruni (973–1048) used a new method to accurately compute Earth's circumference, by which he arrived at a value that was close to modern values for Earth's circumference. His estimate of 6,339.6 kilometres (3,939.2 mi) for Earth's radius was only 31.4 kilometres (19.5 mi) less than the modern mean value of 6,371.0 kilometres (3,958.8 mi). In contrast to his predecessors, who measured Earth's circumference by sighting the Sun simultaneously from two different locations, Biruni developed a new method of using trigonometric calculations based on the angle between a plain and mountain top. This yielded more accurate measurements of Earth's circumference and made it possible for a single person to measure it from a single location. Biruni's method was intended to avoid "walking across hot, dusty deserts", and the idea came to him when he was on top of a tall mountain in India (present day Pind Dadan Khan, Pakistan).

From the top of the mountain, he sighted the dip angle of the horizon and combined it with the mountain's height (which he had calculated beforehand), applying the law of sines to calculate the radius of the Earth. While this was an ingenious new method, Al-Biruni was not aware of atmospheric refraction. To get the true dip angle the measured dip angle needs to be corrected by approximately 1/6, meaning that even with perfect measurement his estimate could only have been accurate to within about 20%.
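The geometry behind the dip-angle method can be sketched as follows. The line of sight to the horizon is tangent to the sphere, so the dip angle θ, the radius R and the height h satisfy cos θ = R / (R + h). The code is an illustration only: the inputs are hypothetical values rather than Biruni's own measurements, the function names are invented, and refraction is ignored.

```python
import math


def radius_from_dip(height_m: float, dip_rad: float) -> float:
    """Radius R from cos(dip) = R / (R + h), i.e. R = h*cos(dip) / (1 - cos(dip))."""
    # 1 - cos(x) is rewritten as 2*sin(x/2)**2 to avoid cancellation for small angles.
    return height_m * math.cos(dip_rad) / (2.0 * math.sin(dip_rad / 2.0) ** 2)


def dip_from_radius(height_m: float, radius_m: float) -> float:
    """Geometric dip of the horizon (radians) seen from height_m, with no refraction."""
    return math.acos(radius_m / (radius_m + height_m))


# Hypothetical inputs, not Biruni's own measurements.
height_m = 300.0
true_radius_m = 6_371_000.0
dip = dip_from_radius(height_m, true_radius_m)
recovered_radius_m = radius_from_dip(height_m, dip)

print(f"dip: {math.degrees(dip) * 60:.1f} arcminutes")
print(f"recovered radius: {recovered_radius_m / 1000:.1f} km")
```

For small angles the radius is approximately 2h/θ², so a given relative error in the measured dip produces roughly twice that relative error in the radius. This is why an uncorrected refraction effect on the dip angle matters so much for the result.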

Biruni also made use of algebra to formulate trigonometric equations and used the astrolabe to measure angles.

According to John J. O'Connor and Edmund F. Robertson:

Important contributions to geodesy and geography were also made by Biruni. He introduced techniques to measure Earth and distances on it using triangulation. He found the radius of Earth to be 6,339.6 kilometres (3,939.2 mi), a value not obtained in the West until the 16th century. His Masudic canon contains a table giving the coordinates of six hundred places, almost all of which he had direct knowledge.

Jamal-al-Din

A terrestrial globe (Kura-i-ard) was among the presents sent by the Persian Muslim astronomer Jamal-al-Din to Kublai Khan's Chinese court in 1267. It was made of wood on which "seven parts of water are represented in green, three parts of land in white, with rivers, lakes[, et cetera]". Ho Peng Yoke remarks that "it did not seem to have any general appeal to the Chinese in those days".

Applications

Muslim scholars who held to the spherical Earth theory used it for a quintessentially Islamic purpose: to calculate the distance and direction from any given point on Earth to Mecca. Muslim mathematicians developed spherical trigonometry; in the 11th century, al-Biruni used it to find the direction of Mecca from many cities and published it in The Determination of the Co-ordinates of Cities. This determined the Qibla, or Muslim direction of prayer.

Magnetic declination

Muslim astronomers and geographers were aware of magnetic declination by the 15th century, when the Egyptian astronomer 'Abd al-'Aziz al-Wafa'i (d. 1469/1471) measured it as 7 degrees from Cairo.

Medieval Europe

Greek influence

In medieval Europe, knowledge of the sphericity of Earth survived into the medieval corpus of knowledge by direct transmission of the texts of Greek antiquity (Aristotle), and via authors such as Isidore of Seville and Beda Venerabilis. It became increasingly traceable with the rise of scholasticism and medieval learning.

Revising the figures attributed to Posidonius, another Greek philosopher determined 18,000 miles (29,000 km) as the Earth's circumference. This last figure was promulgated by Ptolemy through his world maps. The maps of Ptolemy strongly influenced the cartographers of the Middle Ages. It is probable that Christopher Columbus, using such maps, was led to believe that Asia was only 3,000 or 4,000 miles (4,800 or 6,400 km) west of Europe.

Ptolemy's view was not universal, however, and chapter 20 of Mandeville's Travels (c. 1357) supports Eratosthenes' calculation.

Spread of this knowledge beyond the immediate sphere of Greco-Roman scholarship was necessarily gradual, associated with the pace of Christianisation of Europe. For example, the first evidence of knowledge of the spherical shape of Earth in Scandinavia is a 12th-century Old Icelandic translation of Elucidarius. A list of more than a hundred Latin and vernacular writers from Late Antiquity and the Middle Ages who were aware that Earth was spherical has been compiled by Reinhard Krüger, professor for Romance literature at the University of Stuttgart.

It was not until the 16th century that Ptolemy's concept of the Earth's size was revised. During that period the Flemish cartographer Mercator made successive reductions in the size of the Mediterranean Sea and all of Europe, which had the effect of increasing the size of the Earth.

Early Medieval Europe

Isidore of Seville

Bishop Isidore of Seville (560–636) taught in his widely read encyclopedia, The Etymologies, that Earth was "round". The bishop's confusing exposition and choice of imprecise Latin terms have divided scholarly opinion on whether he meant a sphere or a disk or even whether he meant anything specific. Notable recent scholars claim that he taught a spherical Earth. Isidore did not admit the possibility of people dwelling at the antipodes, considering them as legendary and noting that there was no evidence for their existence.

Bede the Venerable

The monk Bede (c. 672–735) wrote in his influential treatise on computus, The Reckoning of Time, that Earth was round. He explained the unequal length of daylight from "the roundness of the Earth, for not without reason is it called 'the orb of the world' on the pages of Holy Scripture and of ordinary literature. It is, in fact, set like a sphere in the middle of the whole universe." (De temporum ratione, 32). The large number of surviving manuscripts of The Reckoning of Time, copied to meet the Carolingian requirement that all priests should study the computus, indicates that many, if not most, priests were exposed to the idea of the sphericity of Earth. Ælfric of Eynsham paraphrased Bede into Old English, saying, "Now the Earth's roundness and the Sun's orbit constitute the obstacle to the day's being equally long in every land."

Bede was lucid about Earth's sphericity, writing "We call the earth a globe, not as if the shape of a sphere were expressed in the diversity of plains and mountains, but because, if all things are included in the outline, the earth's circumference will represent the figure of a perfect globe... For truly it is an orb placed in the centre of the universe; in its width it is like a circle, and not circular like a shield but rather like a ball, and it extends from its centre with perfect roundness on all sides."

Anania Shirakatsi

The 7th-century Armenian scholar Anania Shirakatsi described the world as "being like an egg with a spherical yolk (the globe) surrounded by a layer of white (the atmosphere) and covered with a hard shell (the sky)".

High and late medieval Europe

During the High Middle Ages, the astronomical knowledge in Christian Europe was extended beyond what was transmitted directly from ancient authors by transmission of learning from Medieval Islamic astronomy. An early student of such learning was Gerbert d'Aurillac, the later Pope Sylvester II.

Saint Hildegard (Hildegard von Bingen, 1098–1179) depicted the spherical Earth several times in her work Liber Divinorum Operum.

Johannes de Sacrobosco (c. 1195 – c. 1256 AD) wrote a famous work on astronomy called Tractatus de Sphaera, based on Ptolemy, which primarily considers the sphere of the sky. However, it contains clear proofs of Earth's sphericity in the first chapter.

The many scholastic commentators on Aristotle's On the Heavens and Sacrobosco's Treatise on the Sphere unanimously agreed that Earth is spherical or round. Grant observes that no author who had studied at a medieval university thought that Earth was flat.

The Elucidarium of Honorius Augustodunensis (c. 1120), an important manual for the instruction of lesser clergy, which was translated into Middle English, Old French, Middle High German, Old Russian, Middle Dutch, Old Norse, Icelandic, Spanish, and several Italian dialects, explicitly refers to a spherical Earth. Likewise, the fact that Bertold von Regensburg (mid-13th century) used the spherical Earth as an illustration in a sermon shows that he could assume this knowledge among his congregation. The sermon was preached in the vernacular German, and thus was not intended for a learned audience.

Dante's Divine Comedy, written in Italian in the early 14th century, portrays Earth as a sphere, discussing implications such as the different stars visible in the southern hemisphere, the altered position of the Sun, and the various time zones of Earth.

Early modern period

The invention of the telescope and the theodolite and the development of logarithm tables allowed exact triangulation and arc measurements.

Ming China

Joseph Needham, in his Chinese Cosmology, reports that Shen Kuo (1031–1095) used models of lunar and solar eclipses to conclude that celestial bodies are round.

If they were like balls they would surely obstruct each other when they met. I replied that these celestial bodies were certainly like balls. How do we know this? By the waxing and waning of the moon. The moon itself gives forth no light, but is like a ball of silver; the light is the light of the sun (reflected). When the brightness is first seen, the sun (-light passes almost) alongside, so the side only is illuminated and looks like a crescent. When the sun gradually gets further away, the light shines slanting, and the moon is full, round like a bullet. If half of a sphere is covered with (white) powder and looked at from the side, the covered part will look like a crescent; if looked at from the front, it will appear round. Thus we know that the celestial bodies are spherical.

However, Shen's ideas did not gain widespread acceptance or consideration, as the shape of Earth was not important to Confucian officials who were more concerned with human relations. In the 17th century, the idea of a spherical Earth, now considerably advanced by Western astronomy, ultimately spread to Ming China, when Jesuit missionaries, who held high positions as astronomers at the imperial court, successfully challenged the Chinese belief that Earth was flat and square.

The Ge zhi cao (格致草) treatise of Xiong Mingyu (熊明遇) published in 1648 showed a printed picture of Earth as a spherical globe, with the text stating that "the round Earth certainly has no square corners". The text also pointed out that sailing ships could return to their port of origin after circumnavigating the waters of Earth.

The influence of the map is distinctly Western, as traditional maps of Chinese cartography held the graduation of the sphere at 365.25 degrees, while the Western graduation was of 360 degrees. The adoption of European astronomy, facilitated by the failure of indigenous astronomy to make progress, was accompanied by a sinocentric reinterpretation that declared the imported ideas Chinese in origin:

European astronomy was so much judged worth consideration that numerous Chinese authors developed the idea that the Chinese of antiquity had anticipated most of the novelties presented by the missionaries as European discoveries, for example, the rotundity of the Earth and the "heavenly spherical star carrier model". Making skillful use of philology, these authors cleverly reinterpreted the greatest technical and literary works of Chinese antiquity. From this sprang a new science wholly dedicated to the demonstration of the Chinese origin of astronomy and more generally of all European science and technology.

Although mainstream Chinese science until the 17th century held the view that Earth was flat, square, and enveloped by the celestial sphere, this idea was criticized by the Jin-dynasty scholar Yu Xi (fl. 307–345), who suggested that Earth could be either square or round, in accordance with the shape of the heavens. The Yuan-dynasty mathematician Li Ye (c. 1192–1279) firmly argued that Earth was spherical, just like the shape of the heavens only smaller, since a square Earth would hinder the movement of the heavens and celestial bodies in his estimation. The 17th-century Ge zhi cao treatise also used the same terminology to describe the shape of Earth that the Eastern-Han scholar Zhang Heng (78–139 AD) had used to describe the shape of the Sun and Moon (as in, that the former was as round as a crossbow bullet, and the latter was the shape of a ball).

The Portuguese exploration of Africa and Asia and Columbus's voyage to the Americas (1492) provided more direct evidence of the size and shape of the world.

The first direct demonstration of Earth's sphericity came in the form of the first circumnavigation in history, an expedition captained by Portuguese explorer Ferdinand Magellan. The expedition was financed by the Spanish Crown. On August 10, 1519, the five ships under Magellan's command departed from Seville. They crossed the Atlantic Ocean, passed through what is now called the Strait of Magellan, crossed the Pacific, and arrived in Cebu, where Magellan was killed by Philippine natives in a battle. His second in command, the Spaniard Juan Sebastián Elcano, continued the expedition and, on September 6, 1522, arrived at Seville, completing the circumnavigation. Charles I of Spain, in recognition of his feat, gave Elcano a coat of arms with the motto Primus circumdedisti me (in Latin, "You went around me first").

A circumnavigation alone does not prove that Earth is spherical: it could be cylindrical, irregularly globular, or one of many other shapes. Still, combined with trigonometric evidence of the form used by Eratosthenes 1,700 years prior, the Magellan expedition removed any reasonable doubt in educated circles in Europe. The Transglobe Expedition (1979–1982) was the first expedition to make a circumpolar circumnavigation, travelling the world "vertically" and traversing both poles of rotation using only surface transport.

European calculations

In the Carolingian era, scholars discussed Macrobius's view of the antipodes. One of them, the Irish monk Dungal, asserted that the tropical gap between our habitable region and the other habitable region to the south was smaller than Macrobius had believed.

In 1505 the cosmographer and explorer Duarte Pacheco Pereira calculated the value of the degree of the meridian arc with a margin of error of only 4%, at a time when prevailing estimates were off by between 7 and 15%.

Jean Picard performed the first modern meridian arc measurement in 1669–1670. He measured a baseline with wooden rods, used a telescope for his angular measurements, and used logarithms for computation. Gian Domenico Cassini and later his son Jacques Cassini continued Picard's arc (the Paris meridian arc) northward to Dunkirk and southward to the Spanish border. Cassini divided the measured arc into two parts, one northward from Paris, another southward. When he computed the length of a degree from both chains, he found that the length of one degree of latitude in the northern part of the chain was shorter than that in the southern part (see illustration).

This result, if correct, meant that the Earth was not a sphere, but a prolate spheroid (taller than wide). However, this contradicted computations by Isaac Newton and Christiaan Huygens. In 1659, Christiaan Huygens was the first to derive the now standard formula for the centrifugal force in his work De vi centrifuga. The formula played a central role in classical mechanics and became known as the second of Newton's laws of motion. Newton's theory of gravitation combined with the rotation of the Earth predicted the Earth to be an oblate spheroid (wider than tall), with a flattening of 1:230.

The issue could be settled by measuring, for a number of points on earth, the relationship between their distance (in north–south direction) and the angles between their zeniths. On an oblate Earth, the meridional distance corresponding to one degree of latitude will grow toward the poles, as can be demonstrated mathematically.
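The growth of the degree length toward the poles can be illustrated numerically. The following is a minimal sketch, not a reconstruction of any historical computation: it uses the standard formula for the meridional radius of curvature of an ellipsoid of revolution, and the default semi-major axis and flattening are modern WGS 84 values chosen here as an assumption. The function name is hypothetical.

```python
import math

def meridian_degree_length(lat_deg, a=6378137.0, f=1 / 298.257223563):
    """Approximate length in metres of one degree of latitude at lat_deg.

    a and f default to modern WGS 84 values (an assumption, not from the text).
    A negative f models a prolate spheroid.
    """
    e2 = f * (2.0 - f)  # first eccentricity squared
    phi = math.radians(lat_deg)
    # Meridional radius of curvature M(phi) of an ellipsoid of revolution
    m = a * (1.0 - e2) / (1.0 - e2 * math.sin(phi) ** 2) ** 1.5
    # Arc length of one degree, treating M as constant over that degree
    return m * math.radians(1.0)

for lat in (0, 45, 66, 90):
    print(f"latitude {lat:2d}: {meridian_degree_length(lat):.0f} m per degree")
```

With a positive flattening the printed lengths increase from the equator to the pole. Passing a negative flattening (for example `f=-1/230`) reverses the trend, which is the pattern Cassini's result would have implied.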

The French Academy of Sciences dispatched two expeditions. One expedition (1736–37) under Pierre Louis Maupertuis was sent to Torne Valley (near the Earth's northern pole). The second mission (1735–44) under Pierre Bouguer was sent to what is modern-day Ecuador, near the equator. Their measurements demonstrated an oblate Earth, with a flattening of 1:210. This approximation to the true shape of the Earth became the new reference ellipsoid.

The Anglo-French Survey of 1787 was the first precise trigonometric survey to be undertaken within Britain. Its purpose was to link the Greenwich and Paris observatories. The survey is very significant as the forerunner of the work of the Ordnance Survey, which was founded in 1791, one year after William Roy's death.

Johann Georg Tralles surveyed the Bernese Oberland, then the entire Canton of Bern. Soon after the Anglo-French Survey, in 1791 and 1797, he and his pupil Ferdinand Rudolph Hassler measured the base of the Grand Marais (German: Grosses Moos) near Aarberg in Seeland. This work earned Tralles an appointment as the representative of the Helvetic Republic on the international scientific committee that met in Paris from 1798 to 1799 to determine the length of the metre.

The French Academy of Sciences had commissioned an expedition led by Jean Baptiste Joseph Delambre and Pierre Méchain, lasting from 1792 to 1799, which attempted to accurately measure the distance between a belfry in Dunkerque and Montjuïc castle in Barcelona at the longitude of the Paris Panthéon. The metre was defined as one ten-millionth of the shortest distance from the North Pole to the equator passing through Paris, assuming an Earth flattening of 1/334. From Delambre and Méchain's survey the committee extrapolated the distance from the North Pole to the Equator, which was 5 130 740 toises. As the metre had to be equal to one ten-millionth of this distance, it was defined as 0.513074 toises or 443.296 lignes of the Toise of Peru (see below).
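The arithmetic behind these figures can be checked in a few lines. This is a small sketch using only the quadrant length quoted above; the subdivision of the toise (6 pieds of 12 pouces of 12 lignes each) is not stated in the text and is supplied here as background, and the variable names are illustrative.

```python
# Old French units: 1 toise = 6 pieds, 1 pied = 12 pouces, 1 pouce = 12 lignes
LIGNES_PER_TOISE = 6 * 12 * 12  # 864

# Pole-to-equator distance extrapolated by the committee, in toises
quadrant_toises = 5_130_740

# The metre is one ten-millionth of the quadrant
metre_in_toises = quadrant_toises / 10_000_000
metre_in_lignes = metre_in_toises * LIGNES_PER_TOISE

print(f"{metre_in_toises:.6f} toises")  # 0.513074 toises
print(f"{metre_in_lignes:.3f} lignes")  # 443.296 lignes
```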

Asia and Americas

A discovery made in 1672–1673 by Jean Richer turned the attention of mathematicians to the deviation of the Earth's shape from a spherical form. This astronomer, having been sent by the Academy of Sciences of Paris to Cayenne, in South America, for the purpose of investigating the amount of astronomical refraction and other astronomical objects, notably the parallax of Mars between Paris and Cayenne in order to determine the Earth-Sun distance, observed that his clock, which had been regulated at Paris to beat seconds, lost about two minutes and a half daily at Cayenne, and that in order to bring it to measure mean solar time it was necessary to shorten the pendulum by more than a line (about 1⁄12 of an inch). This fact was scarcely credited till it had been confirmed by the subsequent observations of Varin and Deshayes on the coasts of Africa and America.
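The link between the lost time and the required shortening follows from the simple-pendulum relation T = 2π√(L/g). The sketch below is an idealised illustration, not Richer's own reduction: it takes the figure of two and a half minutes per day from the text, while the length of the Paris seconds pendulum (about 994 mm) and the length of the Paris line (about 2.2558 mm) are approximate values assumed here. The function name is hypothetical.

```python
SECONDS_PER_DAY = 86_400

def pendulum_correction(lost_seconds_per_day, length_mm):
    """Return (g_new / g_old, shortening in mm) for an ideal simple pendulum."""
    # A clock set to beat seconds makes 86 400 beats per day; if it loses
    # time it makes fewer beats, so each beat lasts longer.
    beats_per_day = SECONDS_PER_DAY - lost_seconds_per_day
    period_ratio = SECONDS_PER_DAY / beats_per_day
    # T = 2*pi*sqrt(L/g): at fixed length, g is proportional to 1/T^2
    gravity_ratio = 1.0 / period_ratio ** 2
    # To restore the original period, the length must scale with g
    shortening_mm = length_mm * (1.0 - gravity_ratio)
    return gravity_ratio, shortening_mm

PARIS_SECONDS_PENDULUM_MM = 994.0  # assumed approximate value
LIGNE_MM = 2.2558                  # assumed length of one Paris line

gravity_ratio, shortening_mm = pendulum_correction(150, PARIS_SECONDS_PENDULUM_MM)
print(f"gravity ratio Cayenne/Paris: {gravity_ratio:.5f}")
print(f"shortening: {shortening_mm:.2f} mm = {shortening_mm / LIGNE_MM:.2f} lines")
```

Under these assumptions the required shortening comes out somewhat above one line, in agreement with the description above.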

In South America Bouguer noticed, as did George Everest in the 19th-century Great Trigonometric Survey of India, that the astronomical vertical tended to be pulled in the direction of large mountain ranges, due to the gravitational attraction of these huge piles of rock. As this vertical is everywhere perpendicular to the idealized surface of mean sea level, or the geoid, this means that the figure of the Earth is even more irregular than an ellipsoid of revolution. Thus the study of the "undulation of the geoid" became the next great undertaking in the science of studying the figure of the Earth.

19th century

In the late 19th century the Mitteleuropäische Gradmessung (Central European Arc Measurement) was established by several central European countries and a Central Bureau was set up at the expense of Prussia, within the Geodetic Institute at Berlin. One of its most important goals was the derivation of an international ellipsoid and a gravity formula which should be optimal not only for Europe but also for the whole world. The Mitteleuropäische Gradmessung was an early predecessor of the International Association of Geodesy (IAG), one of the constituent sections of the International Union of Geodesy and Geophysics (IUGG), which was founded in 1919.

Prime meridian and standard of length

In 1811 Ferdinand Rudolph Hassler was selected to direct the U.S. coast survey, and sent on a mission to France and England to procure instruments and standards of measurement. The unit of length to which all distances measured in the U.S. coast survey were referred was the French metre, of which Hassler had brought a copy to the United States in 1805.

The Scandinavian-Russian meridian arc or Struve Geodetic Arc, named after the German astronomer Friedrich Georg Wilhelm von Struve, was a degree measurement that consisted of a nearly 3000 km long network of geodetic survey points. The Struve Geodetic Arc was one of the largest and most precise projects of Earth measurement at that time. In 1860 Struve published his Arc du méridien de 25° 20′ entre le Danube et la Mer Glaciale mesuré depuis 1816 jusqu’en 1855. The flattening of the Earth was estimated at 1/294.26 and the Earth's equatorial radius was estimated at 6378360.7 metres.
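As an illustration of what these two numbers imply, the polar radius follows from the usual definition of flattening, f = (a − b)/a, where a is the equatorial and b the polar radius. The short sketch below applies that definition to the values quoted above; the function name is hypothetical.

```python
def polar_radius(equatorial_radius_m, flattening):
    """Polar radius b from equatorial radius a and flattening f = (a - b) / a."""
    return equatorial_radius_m * (1.0 - flattening)

a = 6_378_360.7   # equatorial radius in metres, as quoted in the text
f = 1 / 294.26    # flattening, as quoted in the text
b = polar_radius(a, f)

print(f"polar radius: {b:.1f} m")
print(f"equatorial minus polar radius: {a - b:.1f} m")
```

The difference between the two radii is a little under 22 km, which conveys how small the departure from a sphere is relative to the size of the Earth.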

In the early 19th century, the Paris meridian's arc was recalculated with greater precision between Shetland and the Balearic Islands by the French astronomers François Arago and Jean-Baptiste Biot. In 1821 they published their work as a fourth volume following the three volumes of "Bases du système métrique décimal ou mesure de l'arc méridien compris entre les parallèles de Dunkerque et Barcelone" (Basis for the decimal metric system or measurement of the meridian arc comprised between Dunkirk and Barcelona) by Delambre and Méchain.

Louis Puissant declared in 1836 in front of the French Academy of Sciences that Delambre and Méchain had made an error in the measurement of the French meridian arc. Some thought that the base of the metric system could be attacked by pointing out some errors that crept into the measurement of the two French scientists. Méchain had even noticed an inaccuracy he did not dare to admit. As this survey was also part of the groundwork for the map of France, Antoine Yvon Villarceau checked, from 1861 to 1866, the geodesic operations at eight points of the meridian arc. Some of the errors in the operations of Delambre and Méchain were corrected. In 1866, at the conference of the International Association of Geodesy in Neuchâtel, Carlos Ibáñez e Ibáñez de Ibero announced Spain's contribution to the remeasurement and extension of the French meridian arc. In 1870, François Perrier was put in charge of resuming the triangulation between Dunkirk and Barcelona. This new survey of the Paris meridian arc, named West Europe-Africa Meridian-arc by Alexander Ross Clarke, was undertaken in France and in Algeria under the direction of François Perrier from 1870 to his death in 1888. Jean-Antonin-Léon Bassot completed the task in 1896. According to the calculations made at the central bureau of the international association on the great meridian arc extending from the Shetland Islands, through Great Britain, France and Spain to El Aghuat in Algeria, the Earth's equatorial radius was 6377935 metres, the ellipticity being assumed as 1/299.15.

Many measurements of degrees of longitudes along central parallels in Europe were projected and partly carried out as early as the first half of the 19th century; these, however, only became of importance after the introduction of the electric telegraph, through which calculations of astronomical longitudes obtained a much higher degree of accuracy. Of the greatest moment is the measurement near the parallel of 52° lat., which extended from Valentia in Ireland to Orsk in the southern Ural mountains over 69 degrees of longitude. F. G. W. Struve, who is to be regarded as the father of the Russo-Scandinavian latitude-degree measurements, was the originator of this investigation. Having made the requisite arrangements with the governments in 1857, he transferred them to his son Otto, who, in 1860, secured the co-operation of England.

In 1860, the Russian Government, at the instance of Otto Wilhelm von Struve, invited the Governments of Belgium, France, Prussia and England to connect their triangulations in order to measure the length of an arc of parallel in latitude 52° and to test the accuracy of the figure and dimensions of the Earth, as derived from the measurements of arc of meridian. In order to combine the measurements, it was necessary to compare the geodetic standards of length used in the different countries. The British Government invited those of France, Belgium, Prussia, Russia, India, Australia, Austria, Spain, the United States and the Cape of Good Hope to send their standards to the Ordnance Survey office in Southampton. Notably, the standards of France, Spain and the United States were based on the metric system, whereas those of Prussia, Belgium and Russia were calibrated against the toise, of which the oldest physical representative was the Toise of Peru. The Toise of Peru had been constructed in 1735 for Bouguer and De La Condamine as their standard of reference in the French Geodesic Mission, conducted in present-day Ecuador from 1735 to 1744 in collaboration with the Spanish officers Jorge Juan and Antonio de Ulloa.

Friedrich Bessel was responsible for the nineteenth-century investigations of the shape of the Earth by means of the pendulum's determination of gravity and the use of Clairaut's theorem. The studies he conducted from 1825 to 1828 and his determination of the length of the pendulum beating the second in Berlin seven years later marked the beginning of a new era in geodesy. Indeed, the reversible pendulum as it was used by geodesists at the end of the 19th century was largely due to the work of Bessel: neither Johann Gottlieb Friedrich von Bohnenberger, its inventor, nor Henry Kater, who used it in 1818, introduced the improvements that followed from Bessel's valuable suggestions and that converted the reversible pendulum into one of the most admirable instruments available to the scientists of the nineteenth century. The reversible pendulum built by the Repsold brothers was used in Switzerland in 1865 by Émile Plantamour for the measurement of gravity at six stations of the Swiss geodetic network. Following the example set by this country and under the patronage of the International Geodetic Association, Austria, Bavaria, Prussia, Russia and Saxony undertook gravity determinations on their respective territories.

However, these results could only be considered provisional insofar as they did not take into account the movements that the oscillations of the pendulum impart to its suspension plane, which constitute an important factor of error in measuring both the duration of the oscillations and the length of the pendulum. Indeed, the determination of gravity by the pendulum is subject to two types of error: on the one hand the resistance of the air, and on the other hand the movements that the oscillations of the pendulum impart to its plane of suspension. These movements were particularly important with the device designed by the Repsold brothers on Bessel's indications, because the pendulum had a large mass in order to counteract the effect of the viscosity of the air. While Émile Plantamour was carrying out a series of experiments with this device, Adolphe Hirsch found a way to highlight the movements of the pendulum suspension plane by an ingenious optical amplification process. Isaac-Charles Élisée Cellérier, a Genevan mathematician, and Charles Sanders Peirce would independently develop a correction formula which would make it possible to use the observations made using this type of gravimeter.

As Carlos Ibáñez e Ibáñez de Ibero stated, if precision metrology had needed the help of geodesy, geodesy could not continue to prosper without the help of metrology. Indeed, how could all the measurements of terrestrial arcs be expressed as a function of a single unit, and all the determinations of the force of gravity with the pendulum, if metrology had not created a common unit, adopted and respected by all civilized nations, and if in addition one had not compared, with great precision, to the same unit all the rulers for measuring geodesic bases, and all the pendulum rods that had hitherto been used or would be used in the future? Only when this series of metrological comparisons would be finished with a probable error of a thousandth of a millimeter would geodesy be able to link the works of the different nations one with another, and then proclaim the result of the measurement of the Globe.

Alexander Ross Clarke and Henry James published the first results of the standards' comparisons in 1867. The same year Russia, Spain and Portugal joined the Europäische Gradmessung and the General Conference of the association proposed the metre as a uniform length standard for the Arc Measurement and recommended the establishment of an International Bureau of Weights and Measures.

The Europäische Gradmessung decided on the creation of an international geodetic standard at the General Conference held in Paris in 1875. The Conference of the International Association of Geodesy also dealt with the best instrument to be used for the determination of gravity. After an in-depth discussion in which Charles Sanders Peirce took part, the association decided in favor of the reversion pendulum, which was used in Switzerland, and it was resolved to redo in Berlin, in the station where Bessel made his famous measurements, the determination of gravity by means of apparatus of various kinds employed in different countries, in order to compare them and thus to have the equation of their scales.

The Metre Convention was signed in 1875 in Paris and the International Bureau of Weights and Measures was created under the supervision of the International Committee for Weights and Measures. The first president of the International Committee for Weights and Measures was the Spanish geodesist Carlos Ibáñez e Ibáñez de Ibero. He was also the president of the Permanent Commission of the Europäische Gradmessung from 1874 to 1886. In 1886 the association changed its name to the International Geodetic Association and Carlos Ibáñez e Ibáñez de Ibero was reelected as president. He remained in this position until his death in 1891. During this period the International Geodetic Association gained worldwide importance with the accession of the United States, Mexico, Chile, Argentina and Japan. In 1883 the General Conference of the Europäische Gradmessung had proposed to select the Greenwich meridian as prime meridian in the hope that the United States and Great Britain would accede to the Association. Moreover, according to the calculations made at the central bureau of the international association on the West Europe-Africa Meridian-arc, the meridian of Greenwich was nearer the mean than that of Paris.

Geodesy and mathematics

In 1804 Johann Georg Tralles was made a member of the Berlin Academy of Sciences. In 1810 he became the first holder of the chair of mathematics at the Humboldt University of Berlin. In the same year he was appointed secretary of the mathematics class at the Berlin Academy of Sciences. Tralles maintained an important correspondence with Friedrich Wilhelm Bessel and supported his appointment to the University of Königsberg.

In 1809 Carl Friedrich Gauss published his method of calculating the orbits of celestial bodies. In that work he claimed to have been in possession of the method of least squares since 1795. This naturally led to a priority dispute with Adrien-Marie Legendre. However, to Gauss's credit, he went beyond Legendre and succeeded in connecting the method of least squares with the principles of probability and with the normal distribution. He had managed to complete Laplace's program of specifying a mathematical form of the probability density for the observations, depending on a finite number of unknown parameters, and of defining a method of estimation that minimises the error of estimation. Gauss showed that the arithmetic mean is indeed the best estimate of the location parameter by changing both the probability density and the method of estimation. He then turned the problem around by asking what form the density should have and what method of estimation should be used to get the arithmetic mean as estimate of the location parameter. In this attempt, he invented the normal distribution.

In 1810, after reading Gauss's work and having proved the central limit theorem, Pierre-Simon Laplace used the theorem to give a large-sample justification for the method of least squares and the normal distribution. In 1822, Gauss was able to state that the least-squares approach to regression analysis is optimal in the sense that in a linear model where the errors have a mean of zero, are uncorrelated, and have equal variances, the best linear unbiased estimator of the coefficients is the least-squares estimator. This result is known as the Gauss–Markov theorem.
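The least-squares estimator referred to here can be illustrated for the simplest linear model, a straight line y = intercept + slope·x. The sketch below uses the textbook closed-form solution; the function name and the sample observations are hypothetical and serve only as an illustration.

```python
def least_squares_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x.

    Returns (intercept, slope) minimising the sum of squared residuals.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of squares and cross-products about the means
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Hypothetical observations scattered around y = 1 + 2x
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]
intercept, slope = least_squares_line(xs, ys)
print(f"intercept = {intercept:.3f}, slope = {slope:.3f}")
```

With a single unknown constant instead of a line, the same criterion reduces to the arithmetic mean of the observations, which is the case Gauss started from.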

The publication in 1838 of Friedrich Wilhelm Bessel’s Gradmessung in Ostpreussen marked a new era in the science of geodesy. Here was found the method of least squares applied to the calculation of a network of triangles and the reduction of the observations generally. The systematic manner in which all the observations were taken with the view of securing final results of extreme accuracy was admirable. Bessel was also the first scientist to recognise the effect later called the personal equation: several persons observing simultaneously determine slightly different values, especially when recording the transit times of stars.
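The simplest instance of least squares applied to a triangle is the adjustment of its three measured angles so that they close. The sketch below is a deliberately reduced illustration and not Bessel's actual procedure: it assumes a plane triangle (spherical excess ignored) and equally weighted, uncorrelated angle measurements, in which case minimising the sum of squared corrections subject to the 180° condition spreads the misclosure equally over the three angles. The function name and the sample angles are hypothetical.

```python
def adjust_triangle(angles_deg):
    """Least-squares adjustment of three equally weighted plane-triangle angles.

    Minimising the sum of squared corrections under the condition that the
    adjusted angles sum to 180 degrees gives each angle the same correction,
    namely minus one third of the misclosure.
    """
    if len(angles_deg) != 3:
        raise ValueError("expected exactly three angles")
    misclosure = sum(angles_deg) - 180.0
    correction = -misclosure / 3.0
    return [angle + correction for angle in angles_deg]

# Hypothetical measured angles with a small misclosure
measured = [62.1254, 58.3391, 59.5367]
adjusted = adjust_triangle(measured)
print("misclosure (deg):", round(sum(measured) - 180.0, 6))
print("adjusted angles:", [round(angle, 6) for angle in adjusted])
```

In a real network many such conditions are linked through shared sides and stations, so the corrections have to be solved for simultaneously rather than triangle by triangle.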

Most of the relevant theories were then derived by the German geodesist Friedrich Robert Helmert in his famous books Die mathematischen und physikalischen Theorien der höheren Geodäsie, Volumes 1 & 2 (1880 & 1884, resp.). Helmert also derived the first global ellipsoid in 1906 with an accuracy of 100 meters (0.002 percent of the Earth's radii). The US geodesist Hayford derived a global ellipsoid around 1910, based on intercontinental isostasy, with an accuracy of 200 m. It was adopted by the IUGG as "international ellipsoid 1924".