From Wikipedia, the free encyclopedia

https://en.wikipedia.org/wiki/Popper%27s_experiment

Popper's experiment is an experiment proposed by the philosopher Karl Popper to test different interpretations of quantum mechanics (QM). As early as 1934, Popper began criticising the increasingly accepted Copenhagen interpretation, a popular subjectivist interpretation of quantum mechanics. In his most famous book, Logik der Forschung, he proposed a first experiment intended to discriminate empirically between the Copenhagen interpretation and the realist interpretation that he advocated. Einstein, however, wrote Popper a letter raising some crucial objections to the experiment, and Popper himself later declared that this first attempt was "a gross mistake for which I have been deeply sorry and ashamed of ever since".

Popper returned to the foundations of quantum mechanics in 1948, when he developed his criticism of determinism in both quantum and classical physics. He greatly intensified his research on the subject throughout the 1950s and 1960s, developing an interpretation of quantum mechanics in terms of really existing probabilities (propensities), with the support of a number of distinguished physicists such as David Bohm.

Overview

In 1980, Popper proposed what is perhaps his most important, yet overlooked, contribution to QM: a "new simplified version of the EPR experiment".

The experiment was, however, published only two years later, in the third volume of the Postscript to the Logic of Scientific Discovery.

The most widely known interpretation of quantum mechanics is the Copenhagen interpretation put forward by Niels Bohr and his school. It maintains that observations lead to a wavefunction collapse, thereby suggesting the counter-intuitive result that two well separated, non-interacting systems require action-at-a-distance. Popper argued that such non-locality conflicts with common sense, and would lead to a subjectivist interpretation of phenomena, depending on the role of the 'observer'.

While the EPR argument was always meant to be a thought experiment, put forward to shed light on the intrinsic paradoxes of QM, Popper proposed an experiment that could actually be carried out. In 1983 he took part in a physics conference in Bari, where he presented the experiment and urged experimentalists to perform it.

Realising Popper's experiment required new techniques based on spontaneous parametric down-conversion, which had not yet been exploited at the time, so the experiment was eventually performed only in 1999, five years after Popper's death.

Description

In contrast to the first (mistaken) proposal of 1934, Popper's experiment of 1980 exploits pairs of entangled particles in order to test Heisenberg's uncertainty principle.

Indeed, Popper maintains:

"I wish to suggest a crucial experiment to test whether knowledge alone is sufficient to create 'uncertainty' and, with it, scatter (as is contended under the Copenhagen interpretation), or whether it is the physical situation that is responsible for the scatter."

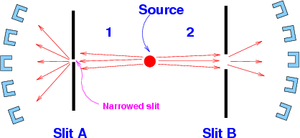

Popper's proposed experiment consists of a low-intensity source of particles that can generate pairs of particles traveling to the left and to the right along the x-axis. The beam's low intensity is "so that the probability is high that two particles recorded at the same time on the left and on the right are those which have actually interacted before emission."

There are two slits, one each in the paths of the two particles. Behind the slits are semicircular arrays of counters which can detect the particles after they pass through the slits (see Fig. 1). "These counters are coincident counters [so] that they only detect particles that have passed at the same time through A and B."

Popper argued that because the slits localize the particles to a narrow region along the y-axis, from the uncertainty principle they experience large uncertainties in the y-components of their momenta. This larger spread in the momentum will show up as particles being detected even at positions that lie outside the regions where particles would normally reach based on their initial momentum spread.

Popper suggests that we count the particles in coincidence, i.e., we count only those particles behind slit B, whose partner has gone through slit A. Particles which are not able to pass through slit A are ignored.

The Heisenberg scatter for both the beams of particles going to the right and to the left, is tested "by making the two slits A and B wider or narrower. If the slits are narrower, then counters should come into play which are higher up and lower down, seen from the slits. The coming into play of these counters is indicative of the wider scattering angles which go with a narrower slit, according to the Heisenberg relations."
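The relation between slit width and scatter can be illustrated with a rough estimate. The sketch below assumes that the position uncertainty Δy is simply the slit width and uses the minimum-uncertainty bound Δy·Δp ≥ ħ/2; the function names and the 700 nm wavelength are hypothetical choices for illustration, not values taken from Popper's proposal.

```python
import math

HBAR = 1.054571817e-34  # reduced Planck constant in J*s


def min_momentum_spread(delta_y):
    """Smallest transverse momentum spread allowed by delta_y * delta_p >= hbar / 2."""
    return HBAR / (2.0 * delta_y)


def min_scatter_angle(delta_y, wavelength):
    """Rough angular spread in radians: transverse spread over forward momentum.

    The forward momentum is taken from the de Broglie relation p = h / wavelength.
    """
    forward_momentum = 2.0 * math.pi * HBAR / wavelength
    return min_momentum_spread(delta_y) / forward_momentum


wavelength = 700e-9  # hypothetical wavelength in metres
for slit_width in (100e-6, 10e-6, 1e-6):  # hypothetical slit widths in metres
    angle_mrad = 1e3 * min_scatter_angle(slit_width, wavelength)
    print(f"slit width {slit_width:.0e} m -> scatter angle of at least {angle_mrad:.3f} mrad")
```

Each tenfold narrowing of the slit raises the minimum scatter angle tenfold, which is why counters higher up and lower down should start to register particles.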

Now the slit at A is made very small and the slit at B very wide. Popper wrote that, according to the EPR argument, we have measured the position y of both particles (the one passing through A and the one passing through B) with the precision $\Delta y$, and not just that of the particle passing through slit A. This is because, from the initial entangled EPR state, we can calculate the position of particle 2 once the position of particle 1 is known, with approximately the same precision. We can do this, argues Popper, even though slit B is wide open.

Popper therefore states that "fairly precise 'knowledge'" of the y position of particle 2 has been obtained: its y position is measured indirectly. Since, according to the Copenhagen interpretation, it is our knowledge that is described by the theory, and especially by the Heisenberg relations, the momentum of particle 2 should be expected to scatter as much as that of particle 1, even though slit A is much narrower than the widely opened slit B.

Now the scatter can, in principle, be tested with the help of the counters. If the Copenhagen interpretation is correct, then such counters on the far side of B that are indicative of a wide scatter (and of a narrow slit) should now count coincidences: counters that did not count any particles before the slit A was narrowed.

To sum up: if the Copenhagen interpretation is correct, then any increase in the precision in the measurement of our mere knowledge of the particles going through slit B should increase their scatter.

Popper was inclined to believe that the test would decide against the Copenhagen interpretation, as it is applied to Heisenberg's uncertainty principle. If the test decided in favor of the Copenhagen interpretation, Popper argued, it could be interpreted as indicative of action at a distance.

The debate

Many viewed Popper's experiment as a crucial test of quantum mechanics, and there was a debate on what result an actual realization of the experiment would yield.

In 1985, Sudbery pointed out that the EPR state, which could be written as $\psi(y_1,y_2)=\int_{-\infty}^{\infty}e^{iky_1}e^{-iky_2}\,dk$, already contained an infinite spread in momenta (tacit in the integral over k), so no further spread could be seen by localizing one particle. Although this observation pointed to a crucial flaw in Popper's argument, its full implication was not understood. Kripps theoretically analyzed Popper's experiment and predicted that narrowing slit A would lead to an increase in the momentum spread at slit B. Kripps also argued that his result was based solely on the formalism of quantum mechanics, without any interpretational problem. Thus, if Popper was challenging anything, he was challenging the central formalism of quantum mechanics.

In 1987 there came a major objection to Popper's proposal from Collet and Loudon. They pointed out that because the particle pairs originating from the source had a zero total momentum, the source could not have a sharply defined position. They showed that once the uncertainty in the position of the source is taken into account, the blurring introduced washes out the Popper effect.

Furthermore, Redhead analyzed Popper's experiment with a broad source and concluded that it could not yield the effect that Popper was seeking.

Realizations

Kim–Shih's experiment

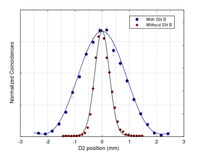

Popper's experiment was realized in 1999 by Yoon-Ho Kim and Yanhua Shih using a spontaneous parametric down-conversion photon source. They did not observe an extra spread in the momentum of particle 2 due to particle 1 passing through a narrow slit. They write:

"Indeed, it is astonishing to see that the experimental results agree with Popper’s prediction. Through quantum entanglement one may learn the precise knowledge of a photon’s position and would therefore expect a greater uncertainty in its momentum under the usual Copenhagen interpretation of the uncertainty relations. However, the measurement shows that the momentum does not experience a corresponding increase in uncertainty. Is this a violation of the uncertainty principle?"

Rather, the momentum spread of particle 2 (observed in coincidence with particle 1 passing through slit A) was narrower than its momentum spread in the initial state.

They concluded that:

"Popper and EPR were correct in the prediction of the physical outcomes of their experiments. However, Popper and EPR made the same error by applying the results of two-particle physics to the explanation of the behavior of an individual particle. The two-particle entangled state is not the state of two individual particles. Our experimental result is emphatically NOT a violation of the uncertainty principle which governs the behavior of an individual quantum."

This led to a renewed heated debate, with some even going to the extent of claiming that Kim and Shih's experiment had demonstrated that there is no non-locality in quantum mechanics.

Unnikrishnan (2001), discussing Kim and Shih's result, wrote that the result:

"is a solid proof that there is no state-reduction-at-a-distance. ... Popper's experiment and its analysis forces us to radically change the current held view on quantum non-locality."

Short criticized Kim and Shih's experiment, arguing that because of the finite size of the source, the localization of particle 2 is imperfect, which leads to a smaller momentum spread than expected. However, Short's argument implies that if the source were improved, we should see a spread in the momentum of particle 2.

Sancho carried out a theoretical analysis of Popper's experiment using the path-integral approach and found a narrowing in the momentum spread of particle 2 similar to that observed by Kim and Shih. Although this calculation did not provide any deep insight, it indicated that the experimental result of Kim and Shih agreed with quantum mechanics. It did not say what bearing, if any, the result has on the Copenhagen interpretation.

Ghost diffraction

Popper's conjecture has also been tested experimentally in the so-called two-particle ghost interference experiment. This experiment was not carried out with the purpose of testing Popper's ideas, but ended up giving a conclusive result about Popper's test. In this experiment two entangled photons travel in different directions. Photon 1 goes through a slit, but there is no slit in the path of photon 2. However, photon 2, if detected in coincidence with a fixed detector behind the slit detecting photon 1, shows a diffraction pattern. The width of the diffraction pattern for photon 2 increases when the slit in the path of photon 1 is narrowed. Thus, an increase in the precision of knowledge about photon 2, obtained by detecting photon 1 behind the slit, leads to an increase in the scatter of photon 2.

Predictions according to quantum mechanics

Tabish Qureshi has published the following analysis of Popper's argument.

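The ideal EPR state is written as $|\psi\rangle=\int_{-\infty}^{\infty}|y,y\rangle\,dy=\int_{-\infty}^{\infty}|p,-p\rangle\,dp$, where the two labels in the "ket" state represent the positions or momenta of the two particles. This implies perfect correlation: detecting particle 1 at position $y_0$ will also lead to particle 2 being detected at $y_0$, and if particle 1 is measured to have a momentum $p_0$, particle 2 will be detected to have a momentum $-p_0$. The particles in this state have infinite momentum spread and are infinitely delocalized. In the real world, however, correlations are always imperfect. Consider the following entangled state

$$\psi(y_1,y_2)=A\int_{-\infty}^{\infty}dp\,e^{-\frac{p^2}{4\sigma^2}}e^{-\frac{i}{\hbar}py_2}e^{\frac{i}{\hbar}py_1}\exp\left[-\frac{(y_1+y_2)^2}{16\Omega^2}\right]$$

where $\sigma$ represents a finite momentum spread, and $\Omega$ is a measure of the position spread of the particles. The uncertainties in position and momentum for the two particles can be written as

$$\Delta p_2=\Delta p_1=\sqrt{\sigma^2+\frac{\hbar^2}{16\Omega^2}},\qquad \Delta y_1=\Delta y_2=\sqrt{\Omega^2+\frac{\hbar^2}{16\sigma^2}}.$$

The action of a narrow slit on particle 1 can be thought of as reducing it to a narrow Gaussian state of width $\epsilon$:

- $\phi_1(y_1)=\frac{1}{(2\pi\epsilon^2)^{1/4}}\,e^{-\frac{y_1^2}{4\epsilon^2}}$.

This will reduce the state of particle 2 to

- $\phi_2(y_2)=\int_{-\infty}^{\infty}\psi(y_1,y_2)\,\phi_1^{*}(y_1)\,dy_1$.

The momentum uncertainty of particle 2 can now be calculated, and is given by

$$\Delta p_2=\sqrt{\frac{\sigma^2\left(1+\frac{\epsilon^2}{\Omega^2}\right)+\frac{\hbar^2}{16\Omega^2}}{1+4\epsilon^2\left(\frac{\sigma^2}{\hbar^2}+\frac{1}{16\Omega^2}\right)}}.$$

If we go to the extreme limit of slit A being infinitesimally narrow ($\epsilon\to 0$), the momentum uncertainty of particle 2 is $\lim_{\epsilon\to 0}\Delta p_2=\sqrt{\sigma^2+\hbar^2/16\Omega^2}$, which is exactly what the momentum spread was to begin with. In fact, one can show that the momentum spread of particle 2, conditioned on particle 1 going through slit A, is always less than or equal to $\sqrt{\sigma^2+\hbar^2/16\Omega^2}$ (the initial spread), for any value of $\epsilon$, $\sigma$, and $\Omega$. Thus, particle 2 does not acquire any momentum spread beyond what it already had. This is the prediction of standard quantum mechanics. The momentum spread of particle 2 will therefore never be larger than what was contained in the original beam. This is what was actually seen in the experiment of Kim and Shih. Popper's proposed experiment, if carried out in this way, is incapable of testing the Copenhagen interpretation of quantum mechanics.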

On the other hand, if slit A is gradually narrowed, the momentum spread of particle 2 (conditioned on the detection of particle 1 behind slit A) will show a gradual increase (never beyond the initial spread, of course). This is what quantum mechanics predicts. Popper had said

"...if the Copenhagen interpretation is correct, then any increase in the precision in the measurement of our mere knowledge of the particles going through slit B should increase their scatter."

This particular aspect can be experimentally tested.
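The behaviour described in this section can be checked numerically. The sketch below assumes the Gaussian entangled state with momentum spread σ and position spread Ω, and a Gaussian slit of width ε acting on particle 1; under those assumptions the conditional spread has the closed form written in the code comments. The function names, the choice ħ = 1 and the sample values σ = Ω = 5 are illustrative assumptions, not parameters from any experiment.

```python
import math

HBAR = 1.0  # natural units, assumed here only to keep the numbers readable


def initial_momentum_spread(sigma, omega, hbar=HBAR):
    """Momentum spread of either particle before any slit: sqrt(sigma^2 + hbar^2 / (16 omega^2))."""
    return math.sqrt(sigma**2 + hbar**2 / (16.0 * omega**2))


def conditional_momentum_spread(epsilon, sigma, omega, hbar=HBAR):
    """Momentum spread of particle 2, given particle 1 passed a Gaussian slit of width epsilon.

    Delta p_2^2 = [sigma^2 (1 + eps^2 / omega^2) + hbar^2 / (16 omega^2)]
                  / [1 + 4 eps^2 (sigma^2 / hbar^2 + 1 / (16 omega^2))]
    """
    numerator = sigma**2 * (1.0 + epsilon**2 / omega**2) + hbar**2 / (16.0 * omega**2)
    denominator = 1.0 + 4.0 * epsilon**2 * (sigma**2 / hbar**2 + 1.0 / (16.0 * omega**2))
    return math.sqrt(numerator / denominator)


sigma, omega = 5.0, 5.0  # hypothetical, strongly correlated state
print("initial spread:", initial_momentum_spread(sigma, omega))
for epsilon in (1.0, 0.1, 0.01, 0.0):
    spread = conditional_momentum_spread(epsilon, sigma, omega)
    print(f"slit width {epsilon}: conditional spread {spread:.4f}")
```

For these values the conditional spread grows as the slit is narrowed and reaches the initial spread only in the limit ε = 0, without ever exceeding it.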

Faster-than-light signalling

The additional momentum scatter that Popper wrongly attributed to the Copenhagen interpretation would allow faster-than-light communication, which is excluded by the no-communication theorem in quantum mechanics. Note, however, that both Collet and Loudon and Qureshi compute that the scatter decreases as slit A is narrowed, contrary to the increase predicted by Popper. There was some controversy over whether this decrease would also allow superluminal communication. However, the reduction is in the standard deviation of the conditional distribution of the position of particle 2, given that particle 1 went through slit A, since only coincident detections are counted. A reduction in the conditional distribution is compatible with the unconditional distribution remaining the same, and only the unconditional distribution matters for excluding superluminal communication. The conditional distribution would differ from the unconditional one in classical physics as well. Moreover, measuring the conditional distribution behind slit B requires the result obtained at slit A, which has to be communicated classically. The conditional distribution therefore cannot be known as soon as the measurement is made at slit A; it becomes available only after the time needed to transmit that information.
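The distinction between conditional and unconditional distributions can be illustrated with a purely classical toy model, in line with the remark that the two differ in classical physics as well. The sketch below draws correlated position pairs and compares the spread of y2 over all pairs with the spread over only those pairs whose partner passed slit A. The Gaussian model, the parameter values and the function names are assumptions made for illustration; it is not a simulation of the quantum experiment.

```python
import random
import statistics


def sample_pairs(n, sigma_sum=1.0, sigma_diff=0.1, seed=0):
    """Draw n correlated position pairs (y1, y2).

    Hypothetical classical stand-in for the particle pair: y1 + y2 and
    y1 - y2 are independent Gaussians, so a small sigma_diff means that
    y2 closely tracks y1.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        total = rng.gauss(0.0, sigma_sum)
        diff = rng.gauss(0.0, sigma_diff)
        pairs.append(((total + diff) / 2.0, (total - diff) / 2.0))
    return pairs


def y2_spreads(pairs, slit_a_width):
    """Return (unconditional, conditional) standard deviations of y2.

    The conditional value keeps only pairs whose y1 lies inside slit A,
    so computing it requires the record of what happened at slit A.
    """
    all_y2 = [y2 for _, y2 in pairs]
    coincident_y2 = [y2 for y1, y2 in pairs if abs(y1) <= slit_a_width / 2.0]
    return statistics.pstdev(all_y2), statistics.pstdev(coincident_y2)


pairs = sample_pairs(20000)
for width in (2.0, 0.5, 0.1):
    unconditional, conditional = y2_spreads(pairs, width)
    print(f"slit A width {width}: all y2 {unconditional:.3f}, coincident y2 {conditional:.3f}")
```

The spread over all pairs is the same whatever the width of slit A, so an observer at B who lacks the coincidence record learns nothing about the setting at A; only the post-selected subset changes.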
