The philosophy of artificial intelligence attempts to answer such questions as follows:

- Can a machine act intelligently? Can it solve any problem that a person would solve by thinking?

- Are human intelligence and machine intelligence the same? Is the human brain essentially a computer?

- Can a machine have a mind, mental states, and consciousness in the same way that a human being can? Can it feel how things are?

Important propositions in the philosophy of AI include:

- Turing's "polite convention": If a machine behaves as intelligently as a human being, then it is as intelligent as a human being.[2]

- The Dartmouth proposal: "Every aspect of learning or any other feature of intelligence can be so precisely described that a machine can be made to simulate it."[3]

- Newell and Simon's physical symbol system hypothesis: "A physical symbol system has the necessary and sufficient means of general intelligent action."[4]

- Searle's strong AI hypothesis: "The appropriately programmed computer with the right inputs and outputs would thereby have a mind in exactly the same sense human beings have minds."[5]

- Hobbes' mechanism: "For 'reason' ... is nothing but 'reckoning,' that is adding and subtracting, of the consequences of general names agreed upon for the 'marking' and 'signifying' of our thoughts..."[6]

Can a machine display general intelligence?

The basic position of most AI researchers is summed up in this statement, which appeared in the proposal for the Dartmouth workshop of 1956:

- Every aspect of learning or any other feature of intelligence can be so precisely described that a machine can be made to simulate it.[3]

The first step to answering the question is to clearly define "intelligence".

Intelligence

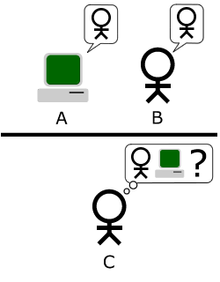

The "standard interpretation" of the Turing test.[8]

Turing test

Alan Turing[9] reduced the problem of defining intelligence to a simple question about conversation. He suggests that if a machine can answer any question put to it, using the same words that an ordinary person would, then we may call that machine intelligent. A modern version of his experimental design would use an online chat room, where one of the participants is a real person and one of the participants is a computer program. The program passes the test if no one can tell which of the two participants is human.[2] Turing notes that no one (except philosophers) ever asks the question "can people think?" He writes "instead of arguing continually over this point, it is usual to have a polite convention that everyone thinks".[10] Turing's test extends this polite convention to machines:

- If a machine acts as intelligently as a human being, then it is as intelligent as a human being.
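The chat-room version of the test can be sketched as a small simulation. This is only an illustration under stated assumptions, not Turing's own formulation: the names `run_imitation_game`, `keyword_judge`, `person`, `good_mimic` and `poor_mimic` are hypothetical, the "participants" are trivial functions, and "no one can tell" is approximated by a judge whose accuracy stays near chance (0.5).

```python
import random


def run_imitation_game(judge, human, machine, questions, trials=1000, seed=0):
    """Return the fraction of trials in which the judge picks out the human."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(trials):
        # Hide the participants behind anonymous positions 0 and 1.
        participants = [("human", human), ("machine", machine)]
        rng.shuffle(participants)
        transcripts = [
            [(q, respond(q)) for q in questions] for _, respond in participants
        ]
        guess = judge(transcripts, rng)  # position the judge believes is human
        if participants[guess][0] == "human":
            correct += 1
    return correct / trials


def person(question):
    # Stand-in for a human participant (assumption for illustration).
    return "I would have to think about that."


def good_mimic(question):
    # Hypothetical program that answers exactly as the person does.
    return "I would have to think about that."


def poor_mimic(question):
    # Hypothetical program whose answers give it away.
    return "BEEP"


def keyword_judge(transcripts, rng):
    # Flags a transcript as mechanical if any answer is "BEEP".
    flagged = [any(answer == "BEEP" for _, answer in t) for t in transcripts]
    if flagged[0] != flagged[1]:
        return 1 if flagged[0] else 0
    return rng.randrange(2)  # no evidence either way: guess


questions = ["What is your favourite poem?", "Do you play chess?"]
accuracy_vs_good = run_imitation_game(keyword_judge, person, good_mimic, questions)
accuracy_vs_poor = run_imitation_game(keyword_judge, person, poor_mimic, questions)
```

In this toy setting the judge always identifies the human when paired with `poor_mimic`, and is reduced to guessing when paired with `good_mimic`, which is the sense in which the latter "passes".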

Intelligent agent definition

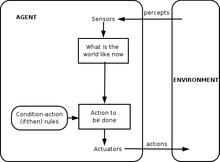

Simple reflex agent

Recent A.I. research defines intelligence in terms of intelligent agents. An "agent" is something which perceives and acts in an environment. A "performance measure" defines what counts as success for the agent.[12]

- If an agent acts so as to maximize the expected value of a performance measure based on past experience and knowledge then it is intelligent.[13]
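A minimal sketch of this definition, assuming a tabular agent that estimates the expected value of the performance measure from past experience and picks the action with the highest estimate. The class name `ExpectedValueAgent`, its methods, and the umbrella example are illustrative assumptions, not taken from the cited sources.

```python
from collections import defaultdict


class ExpectedValueAgent:
    """Chooses the action with the best average past score for a percept."""

    def __init__(self, actions):
        self.actions = list(actions)
        self.totals = defaultdict(float)  # summed scores per (percept, action)
        self.counts = defaultdict(int)    # number of observations per pair

    def record(self, percept, action, score):
        # Store one piece of past experience under the performance measure.
        key = (percept, action)
        self.totals[key] += score
        self.counts[key] += 1

    def expected_score(self, percept, action):
        # Empirical mean of the performance measure; 0.0 if never tried.
        key = (percept, action)
        if self.counts[key] == 0:
            return 0.0
        return self.totals[key] / self.counts[key]

    def act(self, percept):
        # Maximize the estimated expected value of the performance measure.
        return max(self.actions, key=lambda a: self.expected_score(percept, a))


agent = ExpectedValueAgent(["umbrella", "no_umbrella"])
agent.record("cloudy", "umbrella", 1.0)
agent.record("cloudy", "no_umbrella", -1.0)
agent.record("cloudy", "no_umbrella", 0.5)
choice = agent.act("cloudy")  # averages: umbrella 1.0, no_umbrella -0.25
```

The sketch covers only the "maximize expected value based on past experience" clause; how an agent perceives its environment or explores untried actions is left out.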

Arguments that a machine can display general intelligence

The brain can be simulated

An MRI scan of a normal adult human brain

Hubert Dreyfus describes this argument as claiming that "if the nervous system obeys the laws of physics and chemistry, which we have every reason to suppose it does, then ... we ... ought to be able to reproduce the behavior of the nervous system with some physical device".[15] This argument, first introduced as early as 1943[16] and vividly described by Hans Moravec in 1988,[17] is now associated with futurist Ray Kurzweil, who estimates that computer power will be sufficient for a complete brain simulation by the year 2029.[18] A non-real-time simulation of a thalamocortical model that has the size of the human brain (10^11 neurons) was performed in 2005,[19] and it took 50 days to simulate 1 second of brain dynamics on a cluster of 27 processors.
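The figures quoted for the 2005 simulation imply a very large gap from real time. The arithmetic can be checked with a few lines; the variable names are illustrative, the neuron count is read as 10^11, and neurons are assumed to be split evenly across processors, which the source does not state.

```python
SECONDS_PER_DAY = 24 * 60 * 60

wall_clock_seconds = 50 * SECONDS_PER_DAY  # 50 days of computation
simulated_seconds = 1                      # 1 second of brain dynamics

# How many times slower than real time the simulation ran.
slowdown = wall_clock_seconds / simulated_seconds

neurons = 10**11
processors = 27
# Assumes an even split of neurons across processors (an assumption).
neurons_per_processor = neurons / processors

print(f"slowdown factor: {slowdown:,.0f}")
print(f"neurons per processor: {neurons_per_processor:.2e}")
```

On these numbers the simulation ran about 4.3 million times slower than real time, with roughly 3.7 billion model neurons per processor.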

Few disagree that a brain simulation is possible in theory; even critics of AI such as Hubert Dreyfus and John Searle accept this.[20] However, Searle points out that, in principle, anything can be simulated by a computer; thus, bringing the definition to its breaking point leads to the conclusion that any process at all can technically be considered "computation". "What we wanted to know is what distinguishes the mind from thermostats and livers," he writes.[21] Thus, merely mimicking the functioning of a brain would in itself be an admission of ignorance regarding intelligence and the nature of the mind.

Human thinking is symbol processing

In 1963, Allen Newell and Herbert A. Simon proposed that "symbol manipulation" was the essence of both human and machine intelligence. They wrote:

- A physical symbol system has the necessary and sufficient means of general intelligent action.[4]

- The mind can be viewed as a device operating on bits of information according to formal rules.[23]

Arguments against symbol processing

These arguments show that human thinking does not consist (solely) of high level symbol manipulation. They do not show that artificial intelligence is impossible, only that more than symbol processing is required.

Gödelian anti-mechanist arguments

In 1931, Kurt Gödel proved with an incompleteness theorem that it is always possible to construct a "Gödel statement" that a given consistent formal system of logic (such as a high-level symbol manipulation program) could not prove. Despite being a true statement, the constructed Gödel statement is unprovable in the given system. (The truth of the constructed Gödel statement is contingent on the consistency of the given system; applying the same process to a subtly inconsistent system will appear to succeed, but will actually yield a false "Gödel statement" instead.) More speculatively, Gödel conjectured that the human mind can eventually correctly determine the truth or falsity of any well-grounded mathematical statement (including any possible Gödel statement), and that therefore the human mind's power is not reducible to a mechanism.[25] Philosopher John Lucas (since 1961) and Roger Penrose (since 1989) have championed this philosophical anti-mechanist argument.[26]

Gödelian anti-mechanist arguments tend to rely on the innocuous-seeming claim that a system of human mathematicians (or some idealization of human mathematicians) is both consistent (completely free of error) and believes fully in its own consistency (and can make all logical inferences that follow from its own consistency, including belief in its Gödel statement). This is provably impossible for a Turing machine (and, by an informal extension, any known type of mechanical computer) to do; therefore, the Gödelian concludes that human reasoning is too powerful to be captured in a machine.

However, the modern consensus in the scientific and mathematical community is that actual human reasoning is inconsistent; that any consistent "idealized version" H of human reasoning would logically be forced to adopt a healthy but counter-intuitive open-minded skepticism about the consistency of H (otherwise H is provably inconsistent); and that Gödel's theorems do not lead to any valid argument that humans have mathematical reasoning capabilities beyond what a machine could ever duplicate.[27][28][29] This consensus that Gödelian anti-mechanist arguments are doomed to failure is laid out strongly in Artificial Intelligence: "any attempt to utilize (Gödel's incompleteness results) to attack the computationalist thesis is bound to be illegitimate, since these results are quite consistent with the computationalist thesis."[30]

More pragmatically, Russell and Norvig note that Gödel's argument only applies to what can theoretically be proved, given an infinite amount of memory and time. In practice, real machines (including humans) have finite resources and will have difficulty proving many theorems. It is not necessary to prove everything in order to be intelligent.[31]

Less formally, Douglas Hofstadter, in his Pulitzer Prize-winning book Gödel, Escher, Bach: An Eternal Golden Braid, states that these "Gödel-statements" always refer to the system itself, drawing an analogy to the way the Epimenides paradox uses statements that refer to themselves, such as "this statement is false" or "I am lying".[32] But, of course, the Epimenides paradox applies to anything that makes statements, whether they are machines or humans, even Lucas himself. Consider:

- Lucas can't assert the truth of this statement.[33]

After concluding that human reasoning is non-computable, Penrose went on to controversially speculate that some kind of hypothetical non-computable processes involving the collapse of quantum mechanical states give humans a special advantage over existing computers. Existing quantum computers are only capable of reducing the complexity of Turing computable tasks and are still restricted to tasks within the scope of Turing machines. By Penrose and Lucas's arguments, existing quantum computers are not sufficient, so Penrose seeks some other process involving new physics, for instance quantum gravity, which might manifest new physics at the scale of the Planck mass via spontaneous quantum collapse of the wave function. These states, he suggested, occur both within neurons and also spanning more than one neuron.[35] However, other scientists point out that there is no plausible organic mechanism in the brain for harnessing any sort of quantum computation, and furthermore that the timescale of quantum decoherence seems too fast to influence neuron firing.[36]

Dreyfus: the primacy of unconscious skills

Hubert Dreyfus argued that human intelligence and expertise depended primarily on unconscious instincts rather than conscious symbolic manipulation, and argued that these unconscious skills would never be captured in formal rules.[37]

Dreyfus's argument had been anticipated by Turing in his 1950 paper Computing Machinery and Intelligence, where he had classified this as the "argument from the informality of behavior."[38] Turing argued in response that, just because we do not know the rules that govern a complex behavior, this does not mean that no such rules exist. He wrote: "we cannot so easily convince ourselves of the absence of complete laws of behaviour ... The only way we know of for finding such laws is scientific observation, and we certainly know of no circumstances under which we could say, 'We have searched enough. There are no such laws.'"[39]

Russell and Norvig point out that, in the years since Dreyfus published his critique, progress has been made towards discovering the "rules" that govern unconscious reasoning.[40] The situated movement in robotics research attempts to capture our unconscious skills at perception and attention.[41] Computational intelligence paradigms, such as neural nets, evolutionary algorithms and so on are mostly directed at simulated unconscious reasoning and learning. Statistical approaches to AI can make predictions which approach the accuracy of human intuitive guesses. Research into commonsense knowledge has focused on reproducing the "background" or context of knowledge. In fact, AI research in general has moved away from high level symbol manipulation or "GOFAI", towards new models that are intended to capture more of our unconscious reasoning. Historian and AI researcher Daniel Crevier wrote that "time has proven the accuracy and perceptiveness of some of Dreyfus's comments. Had he formulated them less aggressively, constructive actions they suggested might have been taken much earlier."[42]

Can a machine have a mind, consciousness, and mental states?

This is a philosophical question, related to the problem of other minds and the hard problem of consciousness. The question revolves around a position defined by John Searle as "strong AI":

- A physical symbol system can have a mind and mental states.[5]

Searle distinguished this position from what he called "weak AI":

- A physical symbol system can act intelligently.[5]

Neither of Searle's two positions is of great concern to AI research, since they do not directly answer the question "can a machine display general intelligence?" (unless it can also be shown that consciousness is necessary for intelligence). Turing wrote "I do not wish to give the impression that I think there is no mystery about consciousness… [b]ut I do not think these mysteries necessarily need to be solved before we can answer the question [of whether machines can think]."[43] Russell and Norvig agree: "Most AI researchers take the weak AI hypothesis for granted, and don't care about the strong AI hypothesis."[44]

There are a few researchers who believe that consciousness is an essential element in intelligence, such as Igor Aleksander, Stan Franklin, Ron Sun, and Pentti Haikonen, although their definition of "consciousness" strays very close to "intelligence." (See artificial consciousness.)

Before we can answer this question, we must be clear what we mean by "minds", "mental states" and "consciousness".

Consciousness, minds, mental states, meaning

The words "mind" and "consciousness" are used by different communities in different ways. Some new age thinkers, for example, use the word "consciousness" to describe something similar to Bergson's "élan vital": an invisible, energetic fluid that permeates life and especially the mind. Science fiction writers use the word to describe some essential property that makes us human: a machine or alien that is "conscious" will be presented as a fully human character, with intelligence, desires, will, insight, pride and so on. (Science fiction writers also use the words "sentience", "sapience," "self-awareness" or "ghost" - as in the Ghost in the Shell manga and anime series - to describe this essential human property). For others, the words "mind" or "consciousness" are used as a kind of secular synonym for the soul.

For philosophers, neuroscientists and cognitive scientists, the words are used in a way that is both more precise and more mundane: they refer to the familiar, everyday experience of having a "thought in your head", like a perception, a dream, an intention or a plan, and to the way we know something, or mean something or understand something. "It's not hard to give a commonsense definition of consciousness" observes philosopher John Searle.[45] What is mysterious and fascinating is not so much what it is but how it is: how does a lump of fatty tissue and electricity give rise to this (familiar) experience of perceiving, meaning or thinking?

Philosophers call this the hard problem of consciousness. It is the latest version of a classic problem in the philosophy of mind called the "mind-body problem."[46] A related problem is the problem of meaning or understanding (which philosophers call "intentionality"): what is the connection between our thoughts and what we are thinking about (i.e. objects and situations out in the world)? A third issue is the problem of experience (or "phenomenology"): If two people see the same thing, do they have the same experience? Or are there things "inside their head" (called "qualia") that can be different from person to person?[47]

Neurobiologists believe all these problems will be solved as we begin to identify the neural correlates of consciousness: the actual relationship between the machinery in our heads and its collective properties, such as the mind, experience and understanding. Some of the harshest critics of artificial intelligence agree that the brain is just a machine, and that consciousness and intelligence are the result of physical processes in the brain.[48] The difficult philosophical question is this: can a computer program, running on a digital machine that shuffles the binary digits of zero and one, duplicate the ability of the neurons to create minds, with mental states (like understanding or perceiving), and ultimately, the experience of consciousness?

Arguments that a computer cannot have a mind and mental states

Searle's Chinese room

John Searle asks us to consider a thought experiment: suppose we have written a computer program that passes the Turing test and demonstrates "general intelligent action." Suppose, specifically, that the program can converse in fluent Chinese. Write the program on 3x5 cards and give them to an ordinary person who does not speak Chinese. Lock the person into a room and have him follow the instructions on the cards. He will copy out Chinese characters and pass them in and out of the room through a slot. From the outside, it will appear that the Chinese room contains a fully intelligent person who speaks Chinese. The question is this: is there anyone (or anything) in the room that understands Chinese? That is, is there anything that has the mental state of understanding, or which has conscious awareness of what is being discussed in Chinese? The man is clearly not aware. The room cannot be aware. The cards certainly aren't aware. Searle concludes that the Chinese room, or any other physical symbol system, cannot have a mind.[49]

Searle goes on to argue that actual mental states and consciousness require (yet to be described) "actual physical-chemical properties of actual human brains."[50] He argues there are special "causal properties" of brains and neurons that give rise to minds: in his words "brains cause minds."[51]

Related arguments: Leibniz' mill, Davis's telephone exchange, Block's Chinese nation and Blockhead

Gottfried Leibniz made essentially the same argument as Searle in 1714, using the thought experiment of expanding the brain until it was the size of a mill.[52] In 1974, Lawrence Davis imagined duplicating the brain using telephone lines and offices staffed by people, and in 1978 Ned Block envisioned the entire population of China involved in such a brain simulation. This thought experiment is called "the Chinese Nation" or "the Chinese Gym".[53] Ned Block also proposed his Blockhead argument, which is a version of the Chinese room in which the program has been refactored into a simple set of rules of the form "see this, do that", removing all mystery from the program.

Responses to the Chinese room

Responses to the Chinese room emphasize several different points.

- The systems reply and the virtual mind reply:[54] This reply argues that the system, including the man, the program, the room, and the cards, is what understands Chinese. Searle claims that the man in the room is the only thing which could possibly "have a mind" or "understand", but others disagree, arguing that it is possible for there to be two minds in the same physical place, similar to the way a computer can simultaneously "be" two machines at once: one physical (like a Macintosh) and one "virtual" (like a word processor).

- Speed, power and complexity replies:[55] Several critics point out that the man in the room would probably take millions of years to respond to a simple question, and would require "filing cabinets" of astronomical proportions. This brings the clarity of Searle's intuition into doubt.

- Robot reply:[56] To truly understand, some believe the Chinese Room needs eyes and hands. Hans Moravec writes: "If we could graft a robot to a reasoning program, we wouldn't need a person to provide the meaning anymore: it would come from the physical world."[57]

- Brain simulator reply:[58] What if the program simulates the sequence of nerve firings at the synapses of an actual brain of an actual Chinese speaker? The man in the room would be simulating an actual brain. This is a variation on the "systems reply" that appears more plausible because "the system" now clearly operates like a human brain, which strengthens the intuition that there is something besides the man in the room that could understand Chinese.

- Other minds reply and the epiphenomena reply:[59] Several people have noted that Searle's argument is just a version of the problem of other minds, applied to machines. Since it is difficult to decide if people are "actually" thinking, we should not be surprised that it is difficult to answer the same question about machines.

- A related question is whether "consciousness" (as Searle understands it) exists. Searle argues that the experience of consciousness can't be detected by examining the behavior of a machine, a human being or any other animal. Daniel Dennett points out that natural selection cannot preserve a feature of an animal that has no effect on the behavior of the animal, and thus consciousness (as Searle understands it) can't be produced by natural selection. Therefore either natural selection did not produce consciousness, or "strong AI" is correct in that consciousness can be detected by a suitably designed Turing test.

Is thinking a kind of computation?

The computational theory of mind or "computationalism" claims that the relationship between mind and brain is similar (if not identical) to the relationship between a running program and a computer. The idea has philosophical roots in Hobbes (who claimed reasoning was "nothing more than reckoning"), Leibniz (who attempted to create a logical calculus of all human ideas), Hume (who thought perception could be reduced to "atomic impressions") and even Kant (who analyzed all experience as controlled by formal rules).[60] The latest version is associated with philosophers Hilary Putnam and Jerry Fodor.[61]

This question bears on our earlier questions: if the human brain is a kind of computer then computers can be both intelligent and conscious, answering both the practical and philosophical questions of AI. In terms of the practical question of AI ("Can a machine display general intelligence?"), some versions of computationalism make the claim that (as Hobbes wrote):

- Reasoning is nothing but reckoning[6]

- Mental states are just implementations of (the right) computer programs[62]

Alan Turing noted that there are many arguments of the form "a machine will never do X", where X can be many things, such as:

Be kind, resourceful, beautiful, friendly, have initiative, have a sense of humor, tell right from wrong, make mistakes, fall in love, enjoy strawberries and cream, make someone fall in love with it, learn from experience, use words properly, be the subject of its own thought, have as much diversity of behaviour as a man, do something really new.[63]

Turing argues that these objections are often based on naive assumptions about the versatility of machines or are "disguised forms of the argument from consciousness". Writing a program that exhibits one of these behaviors "will not make much of an impression."[63] All of these arguments are tangential to the basic premise of AI, unless it can be shown that one of these traits is essential for general intelligence.

Can a machine have emotions?

If "emotions" are defined only in terms of their effect on behavior or on how they function inside an organism, then emotions can be viewed as a mechanism that an intelligent agent uses to maximize the utility of its actions. Given this definition of emotion, Hans Moravec believes that "robots in general will be quite emotional about being nice people".[64] Fear is a source of urgency. Empathy is a necessary component of good human-computer interaction. He says robots "will try to please you in an apparently selfless manner because it will get a thrill out of this positive reinforcement. You can interpret this as a kind of love."[64] Daniel Crevier writes "Moravec's point is that emotions are just devices for channeling behavior in a direction beneficial to the survival of one's species."[65]

However, emotions can also be defined in terms of their subjective quality, of what it feels like to have an emotion. The question of whether the machine actually feels an emotion, or whether it merely acts as if it is feeling an emotion, is the philosophical question "can a machine be conscious?" in another form.[43]

Can a machine be self-aware?

"Self awareness", as noted above, is sometimes used by science fiction writers as a name for the essential human property that makes a character fully human. Turing strips away all other properties of human beings and reduces the question to "can a machine be the subject of its own thought?" Can it think about itself? Viewed in this way, it is obvious that a program can be written that can report on its own internal states, such as a debugger.[63] Though arguably self-awareness often presumes a bit more capability; a machine that can ascribe meaning in some way to not only its own state but in general postulating questions without solid answers: the contextual nature of its existence now; how it compares to past states or plans for the future, the limits and value of its work product, how it perceives its performance to be valued-by or compared to others.Can a machine be original or creative?

Turing reduces this to the question of whether a machine can "take us by surprise" and argues that this is obviously true, as any programmer can attest.[66] He notes that, with enough storage capacity, a computer can behave in an astronomical number of different ways.[67] It must be possible, even trivial, for a computer that can represent ideas to combine them in new ways. (Douglas Lenat's Automated Mathematician, as one example, combined ideas to discover new mathematical truths.)

In 2009, scientists at Aberystwyth University in Wales and the U.K.'s University of Cambridge designed a robot called Adam that they believe to be the first machine to independently come up with new scientific findings.[68] Also in 2009, researchers at Cornell developed Eureqa, a computer program that extrapolates formulas to fit the data inputted, such as finding the laws of motion from a pendulum's motion.

Can a machine be benevolent or hostile?

This question (like many others in the philosophy of artificial intelligence) can be presented in two forms. "Hostility" can be defined in terms of function or behavior, in which case "hostile" becomes synonymous with "dangerous". Or it can be defined in terms of intent: can a machine "deliberately" set out to do harm? The latter is the question "can a machine have conscious states?" (such as intentions) in another form.[43]

The question of whether highly intelligent and completely autonomous machines would be dangerous has been examined in detail by futurists (such as the Singularity Institute). (The obvious element of drama has also made the subject popular in science fiction, which has considered many different possible scenarios where intelligent machines pose a threat to mankind.)

One issue is that machines may acquire the autonomy and intelligence required to be dangerous very quickly. Vernor Vinge has suggested that over just a few years, computers will suddenly become thousands or millions of times more intelligent than humans. He calls this "the Singularity."[69] He suggests that it may be somewhat or possibly very dangerous for humans.[70] This is discussed by a philosophy called Singularitarianism.

In 2009, academics and technical experts attended a conference to discuss the potential impact of robots and computers and the impact of the hypothetical possibility that they could become self-sufficient and able to make their own decisions. They discussed the possibility and the extent to which computers and robots might be able to acquire any level of autonomy, and to what degree they could use such abilities to possibly pose any threat or hazard. They noted that some machines have acquired various forms of semi-autonomy, including being able to find power sources on their own and being able to independently choose targets to attack with weapons. They also noted that some computer viruses can evade elimination and have achieved "cockroach intelligence." They noted that self-awareness as depicted in science-fiction is probably unlikely, but that there were other potential hazards and pitfalls.[69]

Some experts and academics have questioned the use of robots for military combat, especially when such robots are given some degree of autonomous functions.[71] The US Navy has funded a report which indicates that as military robots become more complex, there should be greater attention to implications of their ability to make autonomous decisions.[72][73]

The President of the Association for the Advancement of Artificial Intelligence has commissioned a study to look at this issue.[74] They point to programs like the Language Acquisition Device which can emulate human interaction.

Some have suggested a need to build "Friendly AI", meaning that the advances which are already occurring with AI should also include an effort to make AI intrinsically friendly and humane.[75]

Can a machine have a soul?

Finally, those who believe in the existence of a soul may argue that "Thinking is a function of man's immortal soul." Alan Turing called this "the theological objection". He writes:

- In attempting to construct such machines we should not be irreverently usurping His power of creating souls, any more than we are in the procreation of children: rather we are, in either case, instruments of His will providing mansions for the souls that He creates.