From Wikipedia, the free encyclopedia

Inductive charging pad for LG smartphone, using the Qi (pronounced 'Chi') system, an example of near-field wireless transfer. When the phone is set on the pad, a coil in the pad creates a magnetic field which induces a current in another coil, in the phone, charging its battery.

Wireless power transfer (WPT)[1] or wireless energy transmission is the transmission of electrical power from a power source to a consuming device without using solid wires or conductors.[2][3][4][5] It is a generic term that refers to a number of different power transmission technologies that use time-varying electromagnetic fields.[1][5][6][7] Wireless transmission is useful to power electrical devices in cases where interconnecting wires are inconvenient, hazardous, or impossible. In wireless power transfer, a transmitter device connected to a power source, such as the mains power line, transmits power by electromagnetic fields across an intervening space to one or more receiver devices, where it is converted back to electric power and utilized.[1]

Wireless power techniques fall into two categories, non-radiative and radiative.[1][6][8][9][10] In near-field or non-radiative techniques, power is transferred over short distances by magnetic fields using inductive coupling between coils of wire or in a few devices by electric fields using capacitive coupling between electrodes.[5][8]

Applications of this type are electric toothbrush chargers, RFID tags, smartcards, chargers for implantable medical devices like artificial cardiac pacemakers, and inductive powering or charging of electric vehicles like trains or buses.[9][11] A current focus is to develop wireless systems to charge mobile and handheld computing devices such as cellphones, digital music players and portable computers without being tethered to a wall plug.

In radiative or far-field techniques, also called power beaming, power is transmitted by beams of electromagnetic radiation, like microwaves or laser beams. These techniques can transport energy over longer distances but must be aimed at the receiver. Proposed applications for this type are solar power satellites and wirelessly powered drone aircraft.[9] An important issue associated with all wireless power systems is limiting the exposure of people and other living things to potentially injurious electromagnetic fields (see Electromagnetic radiation and health).[9]

Overview

"Wireless power transmission" is a collective term that refers to a number of different technologies for transmitting power by means of time-varying electromagnetic fields.[1][5][8] The technologies, listed in the table below, differ in the distance over which they can transmit power efficiently, whether the transmitter must be aimed (directed) at the receiver, and in the type of electromagnetic energy they use: time varying electric fields, magnetic fields, radio waves, microwaves, or infrared or visible light waves.[8]

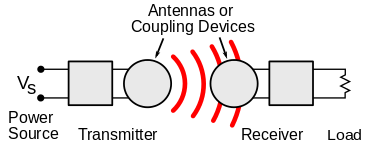

In general, a wireless power system consists of a "transmitter" device connected to a source of power such as mains power lines, which converts the power to a time-varying electromagnetic field, and one or more "receiver" devices, which receive the power and convert it back to DC or AC electric power that is consumed by an electrical load.[1][8] In the transmitter, the input power is converted to an oscillating electromagnetic field by some type of "antenna" device. The word "antenna" is used loosely here; it may be a coil of wire which generates a magnetic field, a metal plate which generates an electric field, an antenna which radiates radio waves, or a laser which generates light. A similar antenna or coupling device in the receiver converts the oscillating fields to an electric current. An important parameter that determines the type of waves is the frequency $f$ of the oscillations, in hertz. The frequency determines the wavelength $\lambda = c/f$ of the waves that carry the energy across the gap, where $c$ is the speed of light.
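As a quick illustration of $\lambda = c/f$, the minimal Python sketch below computes free-space wavelengths for a few frequencies that appear elsewhere in this article. It assumes propagation in vacuum (air is close enough for this purpose); the function name `wavelength` is only illustrative.

```python
# Sketch: free-space wavelength lambda = c / f for frequencies mentioned in this article.
SPEED_OF_LIGHT = 299_792_458.0  # m/s (exact SI value)


def wavelength(frequency_hz: float) -> float:
    """Return the free-space wavelength in metres for a frequency in hertz."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT / frequency_hz


if __name__ == "__main__":
    examples = {
        "3 kHz (1892 Hutin-Leblanc railway system)": 3e3,
        "2.38 GHz (1975 Goldstone experiment)": 2.38e9,
        "2.45 GHz (1978 NASA solar power satellite study)": 2.45e9,
        "24 GHz (proposed short-range links)": 24e9,
    }
    for label, f in examples.items():
        print(f"{label}: lambda = {wavelength(f):.4g} m")
```

The spread from about 100 km at 3 kHz to about 1 cm at 24 GHz is why low-frequency coil systems operate deep inside their near field, while gigahertz systems can form beams with antennas of practical size.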

Wireless power uses the same fields and waves as wireless communication devices like radio,[6][12] another familiar technology which involves power transmitted without wires by electromagnetic fields, used in cellphones, radio and television broadcasting, and WiFi. In radio communication the goal is the transmission of information, so the amount of power reaching the receiver is unimportant as long as the signal-to-noise ratio is high enough for the information to be received intelligibly.[5][6][12] In wireless communication technologies generally only tiny amounts of power reach the receiver. By contrast, in wireless power, the amount of power received is the important thing, so the efficiency (fraction of transmitted power that is received) is the more significant parameter.[5] For this reason wireless power technologies are more limited by distance than wireless communication technologies.

These are the different wireless power technologies:[1][8][9][13][14]

| Technology | Range[15] | Directivity[8] | Frequency | Antenna devices | Current and/or possible future applications |
|---|---|---|---|---|---|
| Inductive coupling | Short | Low | Hz - MHz | Wire coils | Electric toothbrush and razor battery charging, induction stovetops and industrial heaters. |
| Resonant inductive coupling | Mid | Low | MHz - GHz | Tuned wire coils, lumped element resonators | Charging portable devices (Qi, WiTricity), biomedical implants, electric vehicles, powering buses, trains, MAGLEV, RFID, smartcards. |
| Capacitive coupling | Short | Low | kHz - MHz | Electrodes | Charging portable devices, power routing in large-scale integrated circuits, smartcards. |
| Magnetodynamic[13] | Short | N.A. | Hz | Rotating magnets | Charging electric vehicles. |
| Microwaves | Long | High | GHz | Parabolic dishes, phased arrays, rectennas | Solar power satellite, powering drone aircraft. |
| Light waves | Long | High | ≥THz | Lasers, photocells, lenses | Powering drone aircraft, powering space elevator climbers. |

Field regions

Electric and magnetic fields are created by charged particles in matter such as electrons. A stationary charge creates an electrostatic field in the space around it. A steady current of charges (direct current, DC) creates a static magnetic field around it. These fields contain energy, but cannot carry power because they are static. However, time-varying fields can carry power.[16] Accelerating electric charges, such as those in an alternating current (AC) of electrons in a wire, create time-varying electric and magnetic fields in the space around them. These fields can exert oscillating forces on the electrons in a receiving "antenna", causing them to move back and forth. This motion constitutes an alternating current, which can be used to power a load.

The oscillating electric and magnetic fields surrounding moving electric charges in an antenna device can be divided into two regions, depending on the distance $D_{\text{range}}$ from the antenna.[1][4][6][8][9][10][17] The boundary between the regions is somewhat vaguely defined.[8] The fields have different characteristics in these regions, and different technologies are used for transmitting power:

- Near-field or nonradiative region - This means the area within about 1 wavelength ($\lambda$) of the antenna.[1][4][10] In this region the oscillating electric and magnetic fields are separate[6] and power can be transferred via electric fields by capacitive coupling (electrostatic induction) between metal electrodes, or via magnetic fields by inductive coupling (electromagnetic induction) between coils of wire.[5][6][8][9] These fields are not radiative,[10] meaning the energy stays within a short distance of the transmitter.[18] If there is no receiving device or absorbing material within their limited range to "couple" to, no power leaves the transmitter.[18] The range of these fields is short and depends on the size and shape of the "antenna" devices, which are usually coils of wire. The fields, and thus the power transmitted, decrease very rapidly with distance,[4][17][19] so if the distance $D_{\text{range}}$ between the two "antennas" is much larger than the diameter $D_{\text{ant}}$ of the "antennas", very little power will be received. Therefore these techniques cannot be used for long-distance power transmission.

  - Resonance, such as resonant inductive coupling, can greatly increase the coupling between the antennas, allowing efficient transmission at somewhat greater distances,[1][4][6][9][20][21] although the fields still decrease rapidly. Therefore the range of near-field devices is conventionally divided into two categories:

    - Short range - up to about one antenna diameter: $D_{\text{range}} \le D_{\text{ant}}$.[18][20][22] This is the range over which ordinary nonresonant capacitive or inductive coupling can transfer practical amounts of power.

    - Mid-range - up to 10 times the antenna diameter: $D_{\text{range}} \le 10\,D_{\text{ant}}$.[20][21][22][23] This is the range over which resonant capacitive or inductive coupling can transfer practical amounts of power.

- Far-field or radiative region - Beyond about 1 wavelength ($\lambda$) of the antenna, the electric and magnetic fields are perpendicular to each other and propagate as an electromagnetic wave; examples are radio waves, microwaves, or light waves.[1][4][9] This part of the energy is radiative,[10] meaning it leaves the antenna whether or not there is a receiver to absorb it. The portion of energy which does not strike the receiving antenna is dissipated and lost to the system. The amount of power emitted as electromagnetic waves by an antenna depends on the ratio of the antenna's size $D_{\text{ant}}$ to the wavelength $\lambda$ of the waves,[24] which is determined by the frequency: $\lambda = c/f$. At low frequencies $f$, where the antenna is much smaller than the wavelength ($D_{\text{ant}} \ll \lambda$), very little power is radiated. Therefore the near-field devices above, which use lower frequencies, radiate almost none of their energy as electromagnetic radiation. Antennas about the same size as the wavelength ($D_{\text{ant}} \approx \lambda$), such as monopole or dipole antennas, radiate power efficiently, but the electromagnetic waves are radiated in all directions (omnidirectionally), so if the receiving antenna is far away, only a small amount of the radiation will hit it.[10][20] Therefore these can be used for short-range, inefficient power transmission but not for long-range transmission.[25]

  - However, unlike near fields, electromagnetic radiation can be focused by reflection or refraction into beams. By using a high-gain antenna or optical system which concentrates the radiation into a narrow beam aimed at the receiver, it can be used for long-range power transmission.[20][25] From the Rayleigh criterion, to produce the narrow beams necessary to focus a significant amount of the energy on a distant receiver, an antenna must be much larger than the wavelength of the waves used: $D_{\text{ant}} \gg \lambda = c/f$.[26][27] Practical beam power devices require wavelengths in the centimeter region or below, corresponding to frequencies above 1 GHz, in the microwave range or above.[1]
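The rules of thumb above can be combined into a rough classifier. This is a hypothetical sketch, not a standard algorithm: it treats "within about one wavelength" as a hard boundary, ignores the transitional zone between the two regions, and takes "much larger than the wavelength" to mean at least 10 times larger (the `BEAMING_FACTOR` constant is an assumption).

```python
# Hypothetical classifier applying the rough region boundaries described in the text.
# Assumptions: near field means d_range < lambda; "much larger" is taken as 10x.
SPEED_OF_LIGHT = 299_792_458.0  # m/s
BEAMING_FACTOR = 10.0  # illustrative threshold for "D_ant >> lambda", not a standard value


def classify_link(d_range: float, d_ant: float, frequency_hz: float) -> str:
    """Classify a transmitter-receiver geometry.

    d_range: transmitter-receiver distance in metres
    d_ant: antenna (coil, plate or dish) diameter in metres
    frequency_hz: operating frequency in hertz
    """
    lam = SPEED_OF_LIGHT / frequency_hz
    if d_range < lam:  # within about one wavelength: non-radiative region
        if d_range <= d_ant:
            return "near-field, short range"
        if d_range <= 10 * d_ant:
            return "near-field, mid-range (needs resonance)"
        return "near-field, beyond practical range"
    # Beyond about one wavelength: radiative region
    if d_ant >= BEAMING_FACTOR * lam:
        return "far-field, beam can be focused"
    return "far-field, mostly omnidirectional"


if __name__ == "__main__":
    # Illustrative, made-up geometries
    print(classify_link(0.005, 0.04, 100e3))    # phone resting on a charging pad
    print(classify_link(1.0, 0.3, 10e6))        # resonant coils about 1 m apart
    print(classify_link(1500.0, 26.0, 2.38e9))  # large dish beaming to a rectenna
```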

Near-field or non-radiative techniques

The near-field components of electric and magnetic fields die out quickly beyond a distance of about one diameter of the antenna ($D_{\text{ant}}$). Outside very close ranges, the field strength and coupling are roughly proportional to $(D_{\text{range}}/D_{\text{ant}})^{-3}$.[28][17] Since power is proportional to the square of the field strength, the power transferred decreases with the sixth power of the distance, $(D_{\text{range}}/D_{\text{ant}})^{-6}$,[6][19][29][30] or 60 dB per decade. In other words, doubling the distance between transmitter and receiver causes the power received to decrease by a factor of $2^6 = 64$.

Inductive coupling

The electrodynamic induction wireless transmission technique relies on the use of a magnetic field generated by an electric current to induce a current in a second conductor. This effect occurs in the electromagnetic near field, with the secondary in close proximity to the primary. As the distance from the primary is increased, more and more of the primary's magnetic field misses the secondary. Even over a relatively short range the inductive coupling is grossly inefficient, wasting much of the transmitted energy.[31]
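The near-field scaling rule stated earlier in this article, received power proportional to $(D_{\text{range}}/D_{\text{ant}})^{-6}$, can be tabulated with a short Python sketch. It is only a rough scaling law valid beyond about one antenna diameter; the 5 cm coil diameter is a hypothetical value chosen for illustration.

```python
import math


def relative_near_field_power(d_range: float, d_ant: float) -> float:
    """Received power relative to d_range == d_ant, using the (d_range/d_ant)**-6 rule.

    Rough scaling only; not meaningful much closer than one antenna diameter.
    """
    return (d_range / d_ant) ** -6


def loss_db(power_ratio: float) -> float:
    """Express a power ratio (< 1 means a loss) as a positive loss in decibels."""
    return -10.0 * math.log10(power_ratio)


if __name__ == "__main__":
    d_ant = 0.05  # hypothetical 5 cm coil diameter
    for d in (0.05, 0.10, 0.50):
        p = relative_near_field_power(d, d_ant)
        print(f"D_range = {d:.2f} m: relative power {p:.3g}, loss {loss_db(p):.1f} dB")
```

Doubling the distance costs a factor of 64 (about 18 dB), and a tenfold increase costs 60 dB, matching the figures in the text.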

The action of an electrical transformer is the simplest form of wireless power transmission. The primary coil and secondary coil of a transformer are not directly connected; each coil is part of a separate circuit. Energy transfer takes place through a process known as mutual induction. Its principal functions are stepping the primary voltage up or down and providing electrical isolation. Mobile phone and electric toothbrush battery chargers are examples of how this principle is used, and induction cookers use the same method. The main drawback of this basic form of wireless transmission is its short range: the receiver must be directly adjacent to the transmitter or induction unit in order to couple efficiently with it.
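For an idealized transformer (perfect coupling, no losses), the voltage stepping follows the turns ratio $V_s/V_p = N_s/N_p$. The sketch below applies that textbook relation; the turn counts and mains voltage are hypothetical. A loosely coupled wireless charger falls well short of this ideal ratio, which is the short-range drawback noted above.

```python
def ideal_secondary_voltage(primary_voltage: float, primary_turns: int, secondary_turns: int) -> float:
    """Ideal-transformer voltage ratio V_s / V_p = N_s / N_p (perfect coupling, no losses)."""
    if primary_turns <= 0 or secondary_turns <= 0:
        raise ValueError("turn counts must be positive")
    return primary_voltage * secondary_turns / primary_turns


if __name__ == "__main__":
    # Hypothetical step-down: 230 V primary, 1000 primary turns, 50 secondary turns
    print(ideal_secondary_voltage(230.0, 1000, 50))
```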

Common uses of resonance-enhanced electrodynamic induction[32] are charging the batteries of portable devices such as laptop computers and cell phones, medical implants and electric vehicles.[33][34][35] A localized charging technique[36] selects the appropriate transmitting coil in a multilayer winding array structure.[37] Resonance is used in both the wireless charging pad (the transmitter circuit) and the receiver module (embedded in the load) to maximize energy transfer efficiency. Battery-powered devices fitted with a special receiver module can then be charged simply by placing them on a wireless charging pad. This approach has been adopted as part of the Qi wireless charging standard.
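Why resonance helps can be sketched with a figure of merit commonly used in the resonant-coupling literature (it is not given in this article, so treat it as an outside assumption): for two coupled resonators with coupling coefficient $k$, quality factors $Q_1$ and $Q_2$, and an optimally matched load, the maximum efficiency is $\eta = U^2/(1+\sqrt{1+U^2})^2$ with $U = k\sqrt{Q_1 Q_2}$. The values of `k` and `Q` below are hypothetical.

```python
import math


def max_link_efficiency(k: float, q1: float, q2: float) -> float:
    """Maximum efficiency of two coupled resonators with an optimally matched load.

    k: coupling coefficient (0..1), set mainly by coil geometry and spacing
    q1, q2: quality factors of the transmitter and receiver resonators
    Uses U = k*sqrt(q1*q2) and eta = U**2 / (1 + sqrt(1 + U**2))**2.
    """
    u_squared = k * k * q1 * q2
    return u_squared / (1.0 + math.sqrt(1.0 + u_squared)) ** 2


if __name__ == "__main__":
    for q in (1.0, 10.0, 100.0):  # hypothetical quality factors, same k throughout
        print(f"k = 0.05, Q1 = Q2 = {q:g}: eta_max = {max_link_efficiency(0.05, q, q):.2%}")
```

With the same weak coupling ($k = 0.05$), raising both quality factors from 1 to 100 takes the efficiency from well under 1% to roughly 67%, which is the effect the resonant charging pad exploits.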

This technology is also used for powering devices with very low energy requirements, such as RFID patches and contactless smartcards. Instead of relying on each of the many thousands or millions of RFID patches or smartcards to contain a working battery, electrodynamic induction can provide power only when the devices are needed.

Capacitive coupling

In capacitive coupling (electrostatic induction), the dual of inductive coupling, power is transmitted by electric fields[5] between electrodes such as metal plates. The transmitter and receiver electrodes form a capacitor, with the intervening space as the dielectric.[5][6][9][38][39] An alternating voltage generated by the transmitter is applied to the transmitting plate, and the oscillating electric field induces an alternating potential on the receiver plate by electrostatic induction,[5] which causes an alternating current to flow in the load circuit. The amount of power transferred increases with the frequency[38] and with the capacitance between the plates, which is proportional to the area of the smaller plate and (for short distances) inversely proportional to the separation.[5]

Capacitive coupling has only been used practically in a few low-power applications, because the very high voltages on the electrodes required to transmit significant power can be hazardous[6][9] and can cause unpleasant side effects such as noxious ozone production. In addition, in contrast to magnetic fields,[20] electric fields interact strongly with most materials, including the human body, due to dielectric polarization.[39] Intervening materials between or near the electrodes can absorb the energy, in the case of humans possibly causing excessive electromagnetic field exposure.[6] However, capacitive coupling has a few advantages over inductive coupling. The field is largely confined between the capacitor plates, reducing interference, which in inductive coupling requires heavy ferrite "flux confinement" cores.[5][39] Also, alignment requirements between the transmitter and receiver are less critical.[5][6][38] Capacitive coupling has recently been applied to charging battery-powered portable devices[40] and is being considered as a means of transferring power between substrate layers in integrated circuits.[41]
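The dependence of capacitive coupling on plate area, spacing and frequency can be illustrated with the parallel-plate approximation $C = \varepsilon_0 \varepsilon_r A/d$ and the capacitor's reactance $X_C = 1/(2\pi f C)$. This Python sketch assumes air between the plates and ignores fringing fields; the plate size, gap and 1 kV drive voltage are hypothetical.

```python
import math

EPSILON_0 = 8.8541878128e-12  # vacuum permittivity, F/m


def plate_capacitance(area_m2: float, gap_m: float, relative_permittivity: float = 1.0) -> float:
    """Parallel-plate approximation C = eps0 * eps_r * A / d (valid when gap << plate size)."""
    return EPSILON_0 * relative_permittivity * area_m2 / gap_m


def capacitive_reactance(capacitance_f: float, frequency_hz: float) -> float:
    """Magnitude of the coupling capacitor's impedance, X_C = 1 / (2*pi*f*C)."""
    return 1.0 / (2.0 * math.pi * frequency_hz * capacitance_f)


if __name__ == "__main__":
    # Hypothetical 10 cm x 10 cm plates, 1 cm apart, in air
    c = plate_capacitance(area_m2=0.01, gap_m=0.01)
    for f in (100e3, 1e6, 10e6):
        x = capacitive_reactance(c, f)
        # Current through the gap for a hypothetical 1 kV drive, ignoring the load impedance
        current_ma = 1e3 / x * 1e3
        print(f"f = {f:.0e} Hz: C = {c * 1e12:.2f} pF, X_C = {x:.0f} ohm, I = {current_ma:.1f} mA")
```

The coupling capacitance here is only about 9 pF, so even at 10 MHz a 1 kV drive pushes roughly half an ampere through the gap; this is why practical capacitive systems rely on high frequencies and high electrode voltages.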

Magnetodynamic coupling

In this method, power is transmitted between two rotating armatures, one in the transmitter and one in the receiver, which rotate synchronously, coupled together by a magnetic field generated by permanent magnets on the armatures.[13] The transmitter armature is turned either by or as the rotor of an electric motor, and its magnetic field exerts torque on the receiver armature, turning it. The magnetic field acts like a mechanical coupling between the armatures.[13] The receiver armature produces power to drive the load, either by turning a separate electric generator or by using the receiver armature itself as the rotor in a generator.

This device has been proposed as an alternative to inductive power transfer for noncontact charging of electric vehicles.[13] A rotating armature embedded in a garage floor or curb would turn a receiver armature in the underside of the vehicle to charge its batteries.[13] It is claimed that this technique can transfer power over distances of 10 to 15 cm (4 to 6 inches) with high efficiency, over 90%.[13] Also, the low-frequency stray magnetic fields produced by the rotating magnets produce less electromagnetic interference to nearby electronic devices than the high-frequency magnetic fields produced by inductive coupling systems. A prototype system charging electric vehicles has been in operation at the University of British Columbia since 2012. Other researchers, however, claim that the two energy conversions (electrical to mechanical to electrical again) make the system less efficient than electrical systems like inductive coupling.[13]

Far-field or radiative techniques

Far-field methods achieve longer ranges, often of several kilometers, where the distance is much greater than the diameter of the device(s). The main reason for the longer ranges of radio wave and optical devices is that electromagnetic radiation in the far field can be made to match the shape of the receiving area (using high-directivity antennas or well-collimated laser beams). The maximum directivity of antennas is physically limited by diffraction.

In general, visible light (from lasers) and microwaves (from purpose-designed antennas) are the forms of electromagnetic radiation best suited to energy transfer.

The dimensions of the components may be dictated by the distance from transmitter to receiver, the wavelength and the Rayleigh criterion or diffraction limit, used in standard radio frequency antenna design, which also applies to lasers. Airy's diffraction limit is also frequently used to determine an approximate spot size at an arbitrary distance from the aperture. Electromagnetic radiation experiences less diffraction at shorter wavelengths (higher frequencies); so, for example, a blue laser is diffracted less than a red one.

The Rayleigh criterion dictates that any radio wave, microwave or laser beam will spread and become weaker and more diffuse over distance; the larger the transmitter antenna or laser aperture compared to the wavelength of radiation, the tighter the beam and the less it will spread as a function of distance (and vice versa). Smaller antennas also suffer from excessive losses due to side lobes. However, the concept of a laser aperture differs considerably from that of an antenna. Typically, a laser aperture much larger than the wavelength induces multi-moded radiation, and collimators are usually used before the emitted radiation is coupled into a fiber or into free space.

Ultimately, beamwidth is physically determined by diffraction due to the dish size in relation to the wavelength of the electromagnetic radiation used to make the beam.

Microwave power beaming can be more efficient than lasers, and is less prone to atmospheric attenuation caused by dust or water vapor.

The received power level is then calculated by combining the above parameters and adding in the gains and losses due to the antenna characteristics and to the transparency and dispersion of the medium through which the radiation passes. This process is known as calculating a link budget.
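A minimal link-budget sketch, assuming free-space propagation in the far field, uses the Friis transmission equation in decibel form: received power = transmit power + transmit gain + receive gain - free-space path loss - other losses, with path loss $20\log_{10}(4\pi d/\lambda)$. The transmitter power, antenna gains and extra losses below are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def free_space_path_loss_db(distance_m: float, frequency_hz: float) -> float:
    """Free-space path loss 20*log10(4*pi*d/lambda); valid only in the far field."""
    lam = SPEED_OF_LIGHT / frequency_hz
    return 20.0 * math.log10(4.0 * math.pi * distance_m / lam)


def received_power_dbm(tx_power_dbm: float, tx_gain_dbi: float, rx_gain_dbi: float,
                       distance_m: float, frequency_hz: float,
                       other_losses_db: float = 0.0) -> float:
    """Simple link budget: add gains and subtract losses, all in decibels."""
    return (tx_power_dbm + tx_gain_dbi + rx_gain_dbi
            - free_space_path_loss_db(distance_m, frequency_hz)
            - other_losses_db)


if __name__ == "__main__":
    # Hypothetical link: 40 dBm (10 W) transmitter, 30 dBi antennas at both ends,
    # 1 km at 2.45 GHz, plus 2 dB of atmospheric and pointing losses.
    p_rx = received_power_dbm(40.0, 30.0, 30.0, 1_000.0, 2.45e9, other_losses_db=2.0)
    print(f"path loss = {free_space_path_loss_db(1_000.0, 2.45e9):.1f} dB")
    print(f"received power = {p_rx:.1f} dBm = {10 ** (p_rx / 10) / 1000:.3g} W")
```

Working in decibels turns the multiplicative chain of gains and losses into a running sum, which is why link budgets are usually tabulated that way.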

Microwaves

An artist's depiction of a solar satellite that could send electric energy by microwaves to a space vessel or planetary surface.

Power transmission via radio waves can be made more directional, allowing longer distance power beaming, with shorter wavelengths of electromagnetic radiation, typically in the microwave range.[42] A rectenna may be used to convert the microwave energy back into electricity. Rectenna conversion efficiencies exceeding 95% have been realized. Power beaming using microwaves has been proposed for the transmission of energy from orbiting solar power satellites to Earth and the beaming of power to spacecraft leaving orbit has been considered.[43][44]

Power beaming by microwaves has the difficulty that, for most space applications, the required aperture sizes are very large due to diffraction limiting antenna directionality. For example, the 1978 NASA study of solar power satellites required a 1 km diameter transmitting antenna and a 10 km diameter receiving rectenna for a microwave beam at 2.45 GHz.[45] These sizes can be somewhat decreased by using shorter wavelengths, although short wavelengths may have difficulties with atmospheric absorption and beam blockage by rain or water droplets. Because of the "thinned array curse", it is not possible to make a narrower beam by combining the beams of several smaller satellites.
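The diffraction limit behind these sizes can be checked with the Airy-disk estimate: a uniformly illuminated circular aperture of diameter $D$ produces a central spot of diameter about $2.44\,\lambda d/D$ at distance $d$. The sketch assumes the satellite sits in geostationary orbit (about 35,786 km up), which is an assumption not stated in the text above.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def airy_spot_diameter(frequency_hz: float, aperture_m: float, distance_m: float) -> float:
    """Diameter of the Airy central spot (to the first null), 2.44 * lambda * d / D.

    Assumes a uniformly illuminated circular aperture observed in its far field.
    """
    lam = SPEED_OF_LIGHT / frequency_hz
    return 2.44 * lam * distance_m / aperture_m


if __name__ == "__main__":
    # Assumed geometry: geostationary orbit to the ground, with the 1 km
    # transmitting antenna and 2.45 GHz beam of the NASA study.
    spot = airy_spot_diameter(2.45e9, 1_000.0, 35_786e3)
    print(f"spot diameter = {spot / 1000:.1f} km")
```

The result, roughly 10.7 km, is consistent with the 10 km rectenna of the NASA study; halving the wavelength halves the spot, which is the size reduction from shorter wavelengths mentioned above.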

For earthbound applications, a large 10 km diameter receiving array allows large total power levels to be used while operating at the low power density suggested for human electromagnetic exposure safety. A human-safe power density of 1 mW/cm² distributed uniformly across a 10 km diameter area corresponds to a total power of roughly 785 megawatts, comparable to the output of many modern electric power plants.
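The arithmetic behind this figure is a uniform power density multiplied by the area of a circle, after converting 1 mW/cm² to 10 W/m². This sketch assumes the whole area receives the full density; since real beams taper toward the edge, treat the result as an upper bound.

```python
import math


def total_power_w(power_density_mw_per_cm2: float, diameter_m: float) -> float:
    """Total power for a uniform power density over a circular area."""
    density_w_per_m2 = power_density_mw_per_cm2 * 10.0  # 1 mW/cm^2 = 10 W/m^2
    area_m2 = math.pi * (diameter_m / 2.0) ** 2
    return density_w_per_m2 * area_m2


if __name__ == "__main__":
    p = total_power_w(1.0, 10_000.0)
    print(f"{p / 1e6:.0f} MW")  # prints "785 MW"
```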

Following World War II, which saw the development of high-power microwave emitters known as cavity magnetrons, the idea of using microwaves to transmit power was researched. By 1964, a miniature helicopter propelled by microwave power had been demonstrated.[46]

Japanese researcher Hidetsugu Yagi also investigated wireless energy transmission using a directional array antenna that he designed. In February 1926, Yagi and his colleague Shintaro Uda published their first paper on the tuned high-gain directional array now known as the Yagi antenna. While it did not prove to be particularly useful for power transmission, this beam antenna has been widely adopted throughout the broadcasting and wireless telecommunications industries due to its excellent performance characteristics.[47]

Wireless high-power transmission using microwaves is well proven. Experiments in the tens of kilowatts were performed at Goldstone in California in 1975[48][49][50] and more recently (1997) at Grand Bassin on Reunion Island.[51] These methods achieve distances on the order of a kilometer.

Under experimental conditions, microwave conversion efficiency was measured to be around 54%.[52]

More recently, a change to 24 GHz has been suggested, because microwave emitters analogous to LEDs have been made with very high quantum efficiencies using negative-resistance devices such as Gunn or IMPATT diodes; this frequency would be viable for short-range links.

Lasers

In the case of electromagnetic radiation closer to the visible region of the spectrum (tens of micrometers to tens of nanometers), power can be transmitted by converting electricity into a laser beam that is then pointed at a photovoltaic cell.[53] This mechanism is generally known as "power beaming" because the power is beamed at a receiver that can convert it to electrical energy.

Compared to other wireless methods:[54]

- Collimated monochromatic wavefront propagation allows narrow beam cross-section area for transmission over large distances.

- Compact size: solid state lasers fit into small products.

- No radio-frequency interference to existing radio communication such as Wi-Fi and cell phones.

- Access control: only receivers hit by the laser receive power.

- Laser radiation is hazardous. Low power levels can blind humans and other animals. High power levels can kill through localized spot heating.

- Conversion between electricity and light is inefficient. Photovoltaic cells achieve only 40%–50% efficiency.[55] (Efficiency is higher with monochromatic light than for solar panels under sunlight.)

- Atmospheric absorption, and absorption and scattering by clouds, fog, rain, etc., causes up to 100% losses.

- Requires a direct line of sight with the target.
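The efficiency of a beamed-power link is the product of its stage efficiencies, so modest losses compound quickly. In this sketch only the photovoltaic figure comes from the text (the midpoint of the 40%–50% range); the laser and atmospheric values are hypothetical placeholders. The same multiplication underlies the concern raised about the two energy conversions in magnetodynamic coupling.

```python
from math import prod


def end_to_end_efficiency(*stage_efficiencies: float) -> float:
    """Overall efficiency of a chain of conversion/transmission stages (product of stages)."""
    for eta in stage_efficiencies:
        if not 0.0 <= eta <= 1.0:
            raise ValueError("each stage efficiency must be between 0 and 1")
    return prod(stage_efficiencies)


if __name__ == "__main__":
    laser_wall_plug = 0.40  # hypothetical electricity-to-light efficiency
    atmosphere = 0.90       # hypothetical clear-air transmission
    photovoltaic = 0.45     # midpoint of the 40%-50% range quoted above
    overall = end_to_end_efficiency(laser_wall_plug, atmosphere, photovoltaic)
    print(f"overall efficiency = {overall:.1%}")
```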

Other considerations include propagation[61] and the problems of coherence and range limitation.[62]

Geoffrey Landis[63][64][65] is one of the pioneers of solar power satellites[66] and laser-based transfer of energy especially for space and lunar missions. The demand for safe and frequent space missions has resulted in proposals for a laser-powered space elevator.[67][68]

NASA's Dryden Flight Research Center demonstrated a lightweight unmanned model plane powered by a laser beam.[69] This proof-of-concept demonstrates the feasibility of periodic recharging using the laser beam system.

Energy harvesting

In the context of wireless power, energy harvesting, also called power harvesting or energy scavenging, is the conversion of ambient energy from the environment to electric power, mainly to power small autonomous wireless electronic devices.[70] The ambient energy may come from stray electric or magnetic fields or radio waves from nearby electrical equipment, light, thermal energy (heat), or kinetic energy such as vibration or motion of the device.[70] Although the efficiency of conversion is usually low and the power gathered often minuscule (milliwatts or microwatts),[70] it can be adequate to run or recharge small micropower wireless devices such as remote sensors, which are proliferating in many fields.[70] This new technology is being developed to eliminate the need for battery replacement or charging of such wireless devices, allowing them to operate completely autonomously.

History

In 1826 André-Marie Ampère developed Ampère's circuital law, showing that electric current produces a magnetic field.[71] Michael Faraday developed Faraday's law of induction in 1831, describing the electromotive force induced in a conductor by a time-varying magnetic flux. In 1862 James Clerk Maxwell synthesized these and other observations, experiments and equations of electricity, magnetism and optics into a consistent theory, deriving Maxwell's equations. This set of partial differential equations forms the basis for modern electromagnetics, including the wireless transmission of electrical energy.[14][72] Maxwell predicted the existence of electromagnetic waves in his 1873 A Treatise on Electricity and Magnetism.[73] In 1884 John Henry Poynting developed equations for the flow of power in an electromagnetic field, Poynting's theorem and the Poynting vector, which are used in the analysis of wireless energy transfer systems.[14][72] In 1888 Heinrich Rudolf Hertz discovered radio waves, confirming Maxwell's prediction of electromagnetic waves.[73]

Tesla's experiments

Tesla demonstrating wireless power transmission in a lecture at Columbia College, New York, in 1891. The two metal sheets are connected to his Tesla coil oscillator, which applies a high radio frequency oscillating voltage. The oscillating electric field between the sheets ionizes the low pressure gas in the two long Geissler tubes he is holding, causing them to glow by fluorescence, similar to neon lights.

Inventor Nikola Tesla performed the first experiments in wireless power transmission at the turn of the 20th century,[72][74] and may have done more to popularize the idea than any other individual. In the period 1891 to 1904 he experimented with transmitting power by inductive and capacitive coupling using spark-excited radio frequency resonant transformers, now called Tesla coils, which generated high AC voltages.[72][74][75] With these he was able to transmit power for short distances without wires. In demonstrations before the American Institute of Electrical Engineers[75] and at the 1893 Columbian Exposition in Chicago he lit light bulbs from across a stage.[74] He found he could increase the distance by using a receiving LC circuit tuned to resonance with the transmitter's LC circuit,[76] that is, by using resonant inductive coupling. At his Colorado Springs laboratory during 1899–1900, by using voltages of the order of 20 megavolts generated by an enormous coil, he was able to light three incandescent lamps at a distance of about one hundred feet.[77][78] The resonant inductive coupling which Tesla pioneered is now a familiar technology used throughout electronics; its use in wireless power has recently been rediscovered, and it is currently being widely applied to short-range wireless power systems.[74][79]

The inductive and capacitive coupling used in Tesla's experiments is a "near-field" effect,[74] so it is not able to transmit power over long distances. However, Tesla was obsessed with developing a wireless power distribution system that could transmit power directly into homes and factories, as proposed in a visionary 1900 article in Century magazine,[80][81][82][83] and he believed that resonance was the key. He claimed to be able to transmit power on a worldwide scale, using a method that involved conduction through the Earth and atmosphere.[84][81][82][83] Tesla was vague about his methods. One of his ideas was to use balloons to suspend transmitting and receiving terminals in the air above 30,000 feet (9,100 m) in altitude, where the pressure is lower.[84] At this altitude, Tesla claimed, an ionized layer would allow electricity to be sent at high voltages (millions of volts) over long distances.

Resonant wireless power demonstration at the Franklin Institute, Philadelphia, 1937. Visitors could adjust the receiver's tuned circuit (right) with the two knobs. When the resonant frequency of the receiver was out of tune with the transmitter, the light would go out.

In 1901, Tesla began construction of a large high-voltage coil facility, the Wardenclyffe Tower at Shoreham, New York, intended as a prototype transmitter for a "World Wireless System" that was to transmit power worldwide, but by 1904 his investors had pulled out, and the facility was never completed.[82][85] Although Tesla claimed his ideas were proven, he had a history of failing to confirm his ideas by experiment,[86][87] and there seems to be no evidence that he ever transmitted significant power beyond the short-range demonstrations above.[14][72][76][77][87][88][89][90][91] The only report of long-distance transmission by Tesla is a claim, not found in reliable sources, that in 1899 he wirelessly lit 200 light bulbs at a distance of 26 miles (42 km).[77][88] There is no independent confirmation of this putative demonstration;[77][88][92] Tesla did not mention it,[88] and it does not appear in his meticulous laboratory notes.[92][93] It originated in 1944 from Tesla's first biographer, John J. O'Neill,[77] who said he pieced it together from "fragmentary material... in a number of publications".[94] In the 110 years since Tesla's experiments, efforts using similar equipment have failed to achieve long distance power transmission,[74][77][88][90] and the scientific consensus is his World Wireless system would not have worked.[14][72][76][82][88][95][96][97][98]

Tesla's world power transmission scheme remains today what it was in Tesla's time, a fascinating dream.[14][82]

Microwaves

Before World War II, little progress was made in wireless power transmission.[89] Radio was developed for communication, but it could not be used for power transmission because the relatively low-frequency radio waves spread out in all directions and little energy reached the receiver.[14][72][89] In radio communication this loss is acceptable, because an amplifier at the receiver intensifies the weak signal using energy from a separate source. Efficient power transmission instead required transmitters that could generate higher-frequency microwaves, which can be focused into narrow beams aimed at a receiver.[14][72][89][96] The development of microwave technology during World War II, such as the klystron and magnetron tubes and parabolic antennas,[89] made radiative (far-field) methods practical for the first time, and the first long-distance wireless power transmission was achieved in the 1960s by William C. Brown.[14][72] In 1964 Brown invented the rectenna, which could efficiently convert microwaves to DC power, and that same year demonstrated it with the first wireless-powered aircraft, a model helicopter powered by microwaves beamed from the ground.[14][89]

A major motivation for microwave research in the 1970s and 1980s was to develop a solar power satellite.[72][89] Conceived by Peter Glaser in 1968, such a satellite would harvest energy from sunlight using solar cells and beam it down to Earth as microwaves to huge rectennas, which would convert it to electrical energy for the electric power grid.[14][99] In landmark high-power experiments in 1975, Brown demonstrated short-range transmission of 475 W of microwaves at 54% DC-to-DC efficiency, and he and Robert Dickinson at NASA's Jet Propulsion Laboratory transmitted 30 kW of DC output power across 1.5 km with 2.38 GHz microwaves from a 26 m dish to a 7.3 × 3.5 m rectenna array.[14][100] The incident-RF-to-DC conversion efficiency of the rectenna was 80%.[14][100] In 1983 Japan launched MINIX (Microwave Ionosphere Nonlinear Interaction Experiment), a rocket experiment to test transmission of high-power microwaves through the ionosphere.[14]
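The point that low-frequency radio waves spread out while microwaves can be focused into narrow beams follows from diffraction: an antenna of diameter D produces a beam whose divergence is roughly λ/D, so it can form a narrow beam only when it is many wavelengths across. The Python sketch below is an illustrative order-of-magnitude check, not taken from the cited sources. The 26 m dish and 2.38 GHz frequency come from the 1975 JPL experiment; the 1 MHz comparison frequency and the function names are assumptions chosen for illustration.

```python
# Order-of-magnitude comparison of beam spreading at radio vs microwave
# frequencies, using the diffraction rule of thumb theta ~ wavelength / D.
C = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength in metres."""
    return C / freq_hz


def divergence_rad(freq_hz: float, aperture_m: float) -> float:
    """Approximate diffraction-limited beam divergence in radians.

    Rule of thumb only: it ignores how the aperture is illuminated and
    near-field (Fresnel-region) effects, and it is meaningless once the
    result approaches 1 rad, because the antenna no longer forms a beam.
    """
    return wavelength_m(freq_hz) / aperture_m


if __name__ == "__main__":
    dish = 26.0  # dish diameter in metres (1975 JPL experiment)
    cases = [
        ("1 MHz (illustrative radio frequency)", 1e6),
        ("2.38 GHz (microwave)", 2.38e9),
    ]
    for label, freq in cases:
        lam = wavelength_m(freq)
        print(
            f"{label}: wavelength {lam:.3f} m, "
            f"D/wavelength {dish / lam:.1f}, "
            f"divergence ~ {divergence_rad(freq, dish):.4f} rad"
        )
```

At 2.38 GHz the wavelength is about 12.6 cm, so the 26 m dish spans roughly 200 wavelengths; at 1 MHz the wavelength is about 300 m, far larger than the dish, so no narrow beam is possible. The 1.5 km link itself lay inside the dish's radiating near-field (Fresnel) region, which extends to about 2D²/λ ≈ 10.7 km, so the far-field divergence figure is only indicative there.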

In recent years a focus of research has been the development of wireless-powered drone aircraft. This work began in 1959 with the U.S. Department of Defense's RAMP (Raytheon Airborne Microwave Platform) project,[89] which sponsored Brown's research. In 1987 Canada's Communications Research Centre developed a small prototype airplane called the Stationary High Altitude Relay Platform (SHARP) to relay telecommunication data between points on Earth, similar to a communications satellite. Powered by a rectenna, it could fly at 13 miles (21 km) altitude and stay aloft for months. In 1992 a team at Kyoto University built a more advanced craft called MILAX (MIcrowave Lifted Airplane eXperiment). In 2003 NASA flew the first laser-powered aircraft. The small model plane's motor was powered by electricity generated by photocells from a beam of infrared light from a ground-based laser, while a control system kept the laser pointed at the plane.

Near-field technologies

Inductive power transfer between nearby coils of wire is an old technology, dating back to the development of the transformer in the 1800s. Induction heating has been used since the early 20th century. With the advent of cordless appliances, inductive charging stands were developed for appliances used in wet environments, such as electric toothbrushes and electric razors, to reduce the hazard of electric shock. Inductive transfer has also been applied to powering electric vehicles. In 1892 Maurice Hutin and Maurice Leblanc patented a wireless method of powering railroad trains using resonant coils inductively coupled to a track wire at 3 kHz.[101] The first passive RFID (Radio Frequency Identification) technologies were invented by Mario Cardullo[102] (1973) and Koelle et al.[103] (1975), and by the 1990s they were being used in proximity cards and contactless smartcards.

The proliferation of portable wireless communication devices such as cellphones, tablets, and laptop computers in recent decades is driving the development of wireless powering and charging technology, which eliminates the need to tether these devices to wall plugs during charging.[104] The Wireless Power Consortium was established in 2008 to develop interoperable standards across manufacturers.[104] Its Qi inductive power standard, published in August 2009, enables charging and powering of portable devices of up to 5 watts over distances of up to 4 cm (1.6 inches).[105] The device is placed on a flat charger plate (which could be embedded in table tops at cafes, for example), and power is transferred from a flat coil in the charger to a similar coil in the device.

In 2007, a team led by Marin Soljačić at MIT used coupled tuned circuits, each built around a 25 cm diameter resonant coil at 10 MHz, to transfer 60 W of power over a distance of 2 meters (6.6 ft), eight times the coil diameter, at around 40% efficiency.[74][106] The technology was subsequently commercialized by the company WiTricity.
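Resonant coupling of this kind relies on the transmitting and receiving circuits being tuned to the same frequency, which for an ideal LC circuit is f = 1/(2π√(LC)). The Python sketch below shows the arithmetic for tuning a coil to 10 MHz. The 20 µH inductance is a hypothetical value chosen only for illustration and does not describe the MIT apparatus; the function names are likewise illustrative.

```python
import math


def resonant_frequency_hz(inductance_h: float, capacitance_f: float) -> float:
    """Resonant frequency of an ideal LC circuit: f = 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(inductance_h * capacitance_f))


def tuning_capacitance_f(inductance_h: float, freq_hz: float) -> float:
    """Capacitance that makes inductance L resonate at freq_hz.

    Rearranged from the resonance formula: C = 1 / ((2*pi*f)^2 * L).
    """
    omega = 2.0 * math.pi * freq_hz  # angular frequency, rad/s
    return 1.0 / (omega ** 2 * inductance_h)


if __name__ == "__main__":
    coil_l = 20e-6  # hypothetical coil inductance (20 microhenries), illustrative only
    target_f = 10e6  # 10 MHz, the frequency quoted for the 2007 experiment
    c = tuning_capacitance_f(coil_l, target_f)
    print(f"tuning capacitance: {c * 1e12:.1f} pF")
    # Round trip: the computed capacitance should bring the coil back to 10 MHz.
    print(f"resonant frequency: {resonant_frequency_hz(coil_l, c) / 1e6:.3f} MHz")
```

With these assumed values the required capacitance is about 12.7 pF. Tuning both coils to the same resonance is what lets the receiver draw energy efficiently from the transmitter's field even though only a small fraction of the magnetic flux links the two coils.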