The philosophy of biology is a subfield of philosophy of science that deals with epistemological, metaphysical, and ethical issues in the biological and biomedical sciences. Although philosophers of science and philosophers in general have long been interested in biology (e.g., Aristotle, Descartes, and even Kant), philosophy of biology emerged as an independent field of philosophy only in the 1960s and 1970s. Philosophers of science began paying increasing attention to developments in biology, from the rise of neo-Darwinism in the 1930s and 1940s, to the discovery of the structure of DNA in 1953, to more recent advances in genetic engineering. Other key ideas addressed by the field include the reduction of all life processes to biochemical reactions and the incorporation of psychology into a broader neuroscience.

Overview

Questions examined in the philosophy of biology include:

- "What is a biological species?"

- "How is rationality possible, given our biological origins?"

- "How do organisms coordinate their common behavior?"

- "Are there genome editing agents?"

- "How might our biological understandings of race, sexuality, and gender reflect social values?"

- "What is natural selection, and how does it operate in nature?"

- "How do medical doctors explain disease?"

- "From where do language and logic stem?"

- "How is ecology related to medicine?"

- "What is life?"[1]

- "What makes humans uniquely human?"

- "What is the basis of moral thinking?"

- "What are the factors we use for aesthetic judgments?"

- "Is evolution compatible with Christianity or other religious systems?"

Philosophy of biology has become a highly visible, well-organized discipline, with its own journals, conferences, and professional organizations. The largest of these organizations is the International Society for the History, Philosophy, and Social Studies of Biology (ISHPSSB);[4] its name reflects the interdisciplinary nature of the field.

Reductionism, holism, and vitalism

One subject within philosophy of biology deals with the relationship between reductionism and holism, contending views with epistemological and methodological significance, but also with ethical and metaphysical connotations.

- Scientific reductionism is the view that higher-level biological processes reduce to physical and chemical processes. For example, the biological process of respiration is explained as a biochemical process involving oxygen and carbon dioxide.

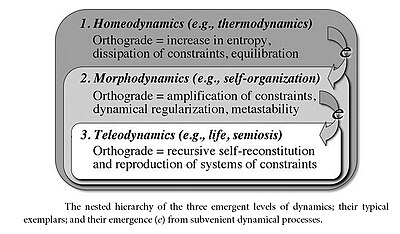

- Holism is the view that emphasizes higher-level processes, also called emergent properties: phenomena at a larger level that arise from the pattern of interactions between the elements of a system over time. For example, to explain why one species of finch survives a drought while others die out, the holistic method looks at the entire ecosystem. Reducing the ecosystem to its parts would be less effective at explaining its overall behavior (here, the decrease in biodiversity). Just as individual organisms must be understood in the context of their ecosystems, holists argue, so must lower-level biological processes be understood in the broader context of the living organism in which they take part. Proponents of this view cite our growing understanding of the multidirectional and multilayered nature of gene modulation (including epigenetic changes) as an area where a reductionist view lacks full explanatory power.[5] See also Holism in science.

- Vitalism is the view, rejected by mainstream biologists since the 19th century, that living organisms owe their "life" to a life-force (called the "vis viva") that has so far not been measured scientifically. Vitalists often claimed that the vis viva acts purposefully according to its pre-established "form" (see teleology). Examples of vitalist philosophy are found in many religions. Mainstream biologists reject vitalism on the grounds that it is incompatible with the scientific method, which was designed to build a highly reliable understanding of the world, that is, one supported by evidence. Following this epistemological view, mainstream scientists reject phenomena that have not been scientifically measured or verified, and thus reject vitalism.

An autonomous philosophy of biology

All processes in organisms obey physical laws; what distinguishes them from inanimate processes is their organisation and their being subject to control by coded information. This has led some biologists and philosophers (for example, Ernst Mayr and David Hull) to return to the strictly philosophical reflections of Charles Darwin to resolve some of the problems that confronted them when they tried to employ a philosophy of science derived from classical physics. This latter, positivist approach, exemplified by Joseph Henry Woodger, emphasised strict determinism (as opposed to high probability) and the discovery of universally applicable laws, testable in the course of experiment. It was difficult for biology, beyond a basic microbiological level, to live up to these standards.[6] Standard philosophy of science seemed to leave out much of what characterised living organisms, namely a historical component in the form of an inherited genotype.

Biologists with philosophic interests responded by emphasising the dual nature of the living organism. On the one hand there was the genetic programme (represented in nucleic acids): the genotype. On the other there was its extended body or soma: the phenotype. In accommodating the more probabilistic and non-universal nature of biological generalisations, it helped that standard philosophy of science was itself in the process of accommodating similar aspects of 20th-century physics.

This led to a distinction between proximate causes and explanations, which answer "how" questions about the phenotype, and ultimate causes, which answer "why" questions, including evolutionary ones, and focus on the genotype. This clarification was part of the great reconciliation, achieved in the 1940s by Ernst Mayr among others, between Darwinian evolution by natural selection and the genetic model of inheritance. A commitment to conceptual clarification has characterised many of these philosophers since. Trivially, this has reminded us of the scientific basis of all biology while noting its diversity, from microbiology to ecology. A complete philosophy of biology would need to accommodate all these activities.[citation needed] Less trivially, it has unpacked the notion of "teleology". Since 1859, scientists have had no need for a notion of cosmic teleology, that is, a programme or a law that can explain and predict evolution; Darwin's theory removed that need. But teleological explanations (relating to purpose or function) have remained stubbornly useful in biology, from the structural configuration of macromolecules to the study of co-operation in social systems. By clarifying the term and restricting its use to describing and explaining systems that are scientifically understood as controlled by genetic programmes or other physical systems, teleological questions can be framed and investigated while remaining committed to the physical nature of all underlying organic processes.

Similar attention has been given to the concepts of natural selection (What is the target of natural selection: the individual, the environment, the genome, or the species?), adaptation, diversity and classification, species and speciation, and macroevolution.

Just as biology has developed as an autonomous discipline in full conversation with the other sciences, there is a great deal of work now being carried on by biologists and philosophers to develop a dedicated philosophy of biological science which, while in full conversation with all other philosophic disciplines, attempts to give answers to the real questions raised by scientific investigations in biology.

Other perspectives

While the overwhelming majority of English-speaking scholars working under the banner of "philosophy of biology" work within the Anglo-American tradition of analytic philosophy, there is a stream of work in continental philosophy that seeks to deal with issues deriving from biological science. The communication difficulties between these two traditions are well known and are not helped by differences in language. Gerhard Vollmer is often thought of as a bridge between them, but despite his education and residence in Germany, he works largely in the Anglo-American tradition, particularly pragmatism, and is known for developing Konrad Lorenz's and Willard Van Orman Quine's idea of evolutionary epistemology. By contrast, one scholar who has attempted to give a more continental account of the philosophy of biology is Hans Jonas. His "The Phenomenon of Life" (New York, 1966) sets out boldly to offer an "existential interpretation of biological facts", starting with the organism's response to stimulus, ending with man confronting the Universe, and drawing on a detailed reading of phenomenology. This work is unlikely to have much influence on mainstream philosophy of biology, but it indicates, as does Vollmer's work, the current powerful influence of biological thought on philosophy. Another account is given by the late Virginia Tech philosopher Marjorie Grene.

Philosophy of biology was historically associated very closely with theoretical evolutionary biology; more recently, however, the field has diversified, with movements to examine other areas such as molecular biology.[11]

Scientific discovery process

Research in biology continues to be less guided by theory than research in other sciences.[12] This is especially the case in the "-omics" fields such as genomics, where high-throughput screening techniques are available and the complexity of the data makes research predominantly data-driven. Such data-intensive scientific discovery is considered by some to be the fourth paradigm, after empiricism, theory and computer simulation.[13] Others reject the idea that data-driven research is about to replace theory.[14][15] As Krakauer et al. put it: "machine learning is a powerful means of preprocessing data in preparation for mechanistic theory building, but should not be considered the final goal of a scientific inquiry."[16] In regard to cancer biology, Raspe et al. state: "A better understanding of tumor biology is fundamental for extracting the relevant information from any high throughput data."[17] The journal Science chose cancer immunotherapy as the breakthrough of 2013. According to the journal's explanation, one lesson to be learned from the successes of cancer immunotherapy is that they emerged from decoding basic biology.[18]

Theory in biology is to some extent less strictly formalized than in physics. Besides (1) classic mathematical-analytical theory, as in physics, there are (2) statistics-based theory, (3) computer simulation and (4) conceptual/verbal analysis.[19] Dougherty and Bittner argue that for biology to progress as a science, it has to move to more rigorous mathematical modeling, or otherwise risk being "empty talk".[20]

In tumor biology research, the characterization of cellular signaling processes has largely focused on identifying the function of individual genes and proteins. Janes[21] showed, however, that the signaling driving cell decisions is context-dependent, demonstrating the need for a more systems-based approach.[22] The lack of attention to context dependency in preclinical research is also illustrated by the observation that preclinical testing rarely includes predictive biomarkers that, when advanced to clinical trials, would help to distinguish the patients who are likely to benefit from a drug.