From Wikipedia, the free encyclopedia

https://en.wikipedia.org/wiki/Commercialization_of_the_Internet

The commercialization

of the Internet refers to the creation and management of online

services principally for financial gain. It typically involves the

increasing monetization of network services and consumer products

mediated through the varied use of Internet technologies. Common forms of Internet commercialization include e-commerce (electronic commerce), electronic money, and advanced marketing techniques including personalized and targeted advertising. The effects of the commercialization of the Internet are controversial, with benefits that simplify daily life and repercussions that challenge personal freedoms, including surveillance capitalism and data tracking. The path to commercialization began with the National Science Foundation funding supercomputing centers, after which universities were able to develop supercomputer sites for research and academic purposes.

With the growing population and demands of Internet users,

startups and their investors were encouraged to start profiting off of

the Internet.

Early history

The core idea of the web was outlined by Vannevar Bush

in 1945 as interconnected networks of hyperlinked pages. The first attempt to realize this vision was made by Ted Nelson, whose work ran from 1965 to 1984, a decade before Netscape.

In the mid-1980s, the National Science Foundation, which funded the backbone of the Internet at the time, maintained that the Internet was for research and not commerce. This strict allocation of funding was known as the "acceptable use policy." The NSF firmly believed that the Internet would be thwarted and devalued if it were opened up to commercial interests.

At the same time, however, in April 1984, CompuServe's

Consumer Information Service opened a new online shopping service

called the Electronic Shopping Mall that allowed subscribers to buy from

merchants including American Express, Sears, and over sixty other

retailers.

Development of public networks

NSFNET,

the National Science Foundation Network, was a three-layer network that

acted as a backbone for much of the internet's infrastructure.

Originally funded by the government, NSFNET was a major step forward that allowed networks to run smoothly. It allowed people to view pages without any cost to institutions. Letting users access sites without paying for each visit helped develop the later idea of browsing for items on the Internet. In 1992, interested entrepreneurs who believed there was money to be made from the Internet petitioned Congress to get the government out of the way of NSFNET.

UUNET was the first company to sell commercial TCP/IP, first to government-approved corporations in November 1988 and then actively to the public starting in January 1990, albeit only to the NSFNET backbone with their approval.

Barry Shein's The World STD was selling dial-up Internet on a legally questionable basis starting in late 1989 or early 1990, and then on an approved basis by 1992. He claims to be and is generally recognized as the first to ever think of selling dial-up Internet access for money. The High Performance Computing Act of 1991 put U.S. government money behind the National Information Infrastructure, the National Research and Education Network (NREN), the National Center for Supercomputing Applications, and more.

Internet becomes a true commercial medium

Although

the Internet infrastructure was mostly privately owned by 1993, the

lack of security in the protocols made doing business and obtaining

capital for commercial projects on the Internet difficult. Additionally,

the legality of Internet business was still somewhat grey, though

increasingly tolerated, which prevented large amounts of investment

money from entering the medium. This changed with the NSFNET selling its assets in 1995 and the December 1994 release of Netscape Navigator, whose HTTPS secure protocol permitted relatively safe transfer of credit and debit card information.

These developments, together with the advent of user-friendly Web browsers and ISP portals such as America Online and the disbanding of the NSFNET in 1995, led to the corporate Internet and the dot-com boom of the late 1990s.

1995 was a significant year for commercial Internet service provider (ISP) markets because of two substantial events: the Netscape initial public offering (IPO) and the emergence of AT&T WorldNet. The Netscape IPO brought a lot of publicity to the new technology of the Web, and the commercial opportunities for ISPs changed. At this point, ISPs continued providing their traditional service, text-based applications such as e-mail, while trying to expand into another service, web applications. ISPs were thus incentivized to expand and experiment with new types of services and business models. The entry of AT&T, on the other hand, created an Internet access service that spread nationwide and gained one million customers thanks to its publicity and marketing. Despite this, AT&T did not dominate the commercial ISP markets; in fact, its entry was followed by the growth and emergence of other independent ISPs such as AOL, contrary to the prediction that commercial ISP markets would be dominated by only a few national ISP services.

The commercialization of the Internet succeeded for four main reasons. First, the academic model could easily migrate into business operations without requiring additional providers. Second, it was feasible for entrepreneurs to learn and gain benefits without too many technical and operational challenges. Third, Internet access could be customized across many different locations, circumstances, and users. Fourth, the Internet access industry was still growing and provided many opportunities for further research and practice.

Dot-com bubble

Between 1995 and 2000, Internet start-ups encouraged investors to pour large sums of money into companies with ".com" in their business plan. As the commercialization of the Internet became more acceptable and fast-paced, Internet companies began to form rapidly with minimal planning in order to get into what they thought would be easy money. The boom, fueled by enthusiasm for all of the new opportunities and profits the Internet had to offer, produced some successful companies such as Amazon.com.

This was shortly followed by the dot-com crash in 2001, in which many of the same start-up companies failed due to a lack of concrete structure in their business plans, and investors cut off funding for the unprofitable companies. This led many people to believe that the Internet was overhyped, but in reality this turning point for the Internet led to a revolutionary concept known as Web 2.0.

Early social media platforms

Social media began to emerge in 1994, when GeoCities was created by David Bohnett and John Rezner. GeoCities, one of the earliest web hosting services on the Internet, let users create "digital neighborhoods," in which everyone using the platform could discuss different topics in depth with others involved in the same discussions. This way of connecting with others through the Internet, established by GeoCities, paved the way for the emergence of other social media platforms.

In December 1995, Classmates, a website created by Randy Conrads,

enabled its users to create their own profiles, search through large

yearbook databases, and add their high school friends to their friend

list.

Two years later, in 1997, Six Degrees, which is widely considered to be

the first social networking website, included many of the popular

features on Classmates, such as creating profiles and adding friends and

school affiliations.

File-sharing computer services

Before the Internet, files were shared through floppy disks, tapes, CDs, and other physical media. The creation of the Internet and the development of new applications made file sharing much easier. A prime example is Napster, a music file-sharing application created by Shawn Fanning in 1999. The main idea of Napster was for people to share music files with each other virtually, without the use of a physical copy. Napster was a major reason for the spread of digital forms of music, TV shows, and movies: it pushed production and music companies to offer digital forms of their media and streaming services.

File-sharing computer services became more accessible to users through applications like BitTorrent. The creation of BitTorrent advanced peer-to-peer sharing, and users were able to use the built-in search engine to find media they would like to view. BitTorrent's traffic made up 53% of all peer-to-peer traffic in 2004, even though it was only a file-download protocol. Because BitTorrent was a file-download protocol, it had to rely on websites like The Pirate Bay, where users shared their media so that other users could then download it onto their computers through BitTorrent.

Web 2.0 and the rise of social media

Web 2.0

The bursting of the dot-com bubble in 2001 acted as an unforeseen gateway for the transition from Web 1.0 to Web 2.0 by 2004. A conference held by Tim O'Reilly's O'Reilly Media and MediaLive International popularized the term "Web 2.0". This conference became an annual "Web 2.0 Summit" in San Francisco, where the idea was developed gradually from 2004 to 2011. Web 2.0 included data that already existed within Web 1.0, with improved data management and increased interaction. Web 2.0 was largely delivered through technologies such as Adobe Flash, Ajax, RSS, Eclipse, JavaScript, and Microsoft Silverlight.

Some key characteristics of Web 2.0 included:

- Development of user-friendly advertising, such as banners and pop-up ads developed by Google and Overture

- A platform for the people by the people

- Users now able to add value to existing applications

- Transition from static to dynamic HTML serving web applications to users

- User-generated content

- Growth of social media

- Transition from passive viewing to co-authoring

- The transition from read-only web to read-write web.

While using Web 2.0 applications, users unknowingly had their user data aggregated: collected and linked together for what were already becoming commercial purposes. Sites used built-in APIs (application programming interfaces) to connect with external sources invisible to the user. Thanks to the newfound connection between platform and user, applications could now access data and exchange it with other software.

Web 2.0 catapulted the marketing industry into completely uncharted territory. With user participation encouraged, there were now enhanced retail opportunities, increased marketing visibility, and the ability for businesses to interact with customers. In November 2005, Google released Google Analytics, which allows sellers to track buyers' referrals, advertisements, search engines, and e-mail promotions.

Combined, these characteristics of Web 2.0 gave way to "viral marketing": a marketing technique that was all over the Internet, all the time. Businesses could now promote products or services to larger audiences wherever and however they chose.

Personalization on the Internet

The

commercialization of the Internet has allowed personalized experiences

for consumers, which in turn provides companies and marketers with data

that helps them make inferences about the behavioral patterns and

actions of the consumer. Personalization of the Internet follows a

typical cycle of product purchases, which includes steps such as

personalized searches, personalized recommendations, and personalized

price and promotions. Companies tailor products they think the consumer would like based on their behavior and transactions. This makes people feel like someone is listening to their needs.

Clickstream

Clickstream technology is used to infer information about the user, mainly on online shopping sites. A clickstream is the path that a consumer takes through a website to reach a destination. It includes timestamps and the pages that were visited.

Although clickstream may be associated with online shopping and advertising, it can also be used simply to extend certain sites to more people. Understanding a consumer's pattern on a site makes it possible to tailor information to their liking. Not only does this increase traffic to the site, but it also strengthens connections with customers, which makes the site more engaging.
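A clickstream of timestamps and visited pages can be sketched in a few lines of Python. This is only an illustration: the `PageView` record, the function names, and the sample events are hypothetical and are not drawn from any particular analytics product.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class PageView:
    """One clickstream event (hypothetical schema): a timestamp and a page."""
    timestamp: datetime
    page: str


def visit_path(events):
    """Return the pages in the order they were visited."""
    return [e.page for e in sorted(events, key=lambda e: e.timestamp)]


def seconds_per_page(events):
    """Estimate time on each page from the gap to the next event.

    The last page has no following event, so it is left out.
    """
    ordered = sorted(events, key=lambda e: e.timestamp)
    return [
        (a.page, (b.timestamp - a.timestamp).total_seconds())
        for a, b in zip(ordered, ordered[1:])
    ]


# Made-up events, deliberately out of order as they might arrive in a log.
events = [
    PageView(datetime(2024, 1, 1, 12, 0, 40), "/cart"),
    PageView(datetime(2024, 1, 1, 12, 0, 0), "/home"),
    PageView(datetime(2024, 1, 1, 12, 0, 15), "/shirts"),
]

print(visit_path(events))        # ['/home', '/shirts', '/cart']
print(seconds_per_page(events))  # [('/home', 15.0), ('/shirts', 25.0)]
```

Sorting by timestamp recovers the path through the site, and the gaps between events give a rough measure of how long each page held the visitor's attention.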

Personalized email marketing

Emails can also help in the process of personalizing the Internet. Email personalization means that companies learn about a user's likes and dislikes in order to send emails that are relevant to that user rather than sending every user the same email. Email personalization has been shown to increase interest in the site.

A common form of email personalization is a message reminding users that they still have products in their cart that have not yet been purchased. This can remind them of something they forgot or increase their interest further, and can ultimately lead them to purchase the product.

Advertising on the Internet

Targeted

advertising can take on many different forms, across numerous

platforms, in order to effectively target a range of market subgroups.

The first spam email was sent on May 3, 1978, over ARPANET, often seen as a precursor to the Internet, which was originally used as "a highly secure medium for information flow between universities and research centers," beginning with UCLA, UC Santa Barbara, University of Utah, and Stanford Research Institute. The earliest widespread spam email was sent on April 13, 1994 by Martha Siegel and Laurence Canter, who were among the first to post on Usenet in order to advertise their law firm, claiming they would in turn be able to help people enter the green-card lottery. Hotwired magazine then coined the term "banner advertising" in October 1994, with AT&T being one of the first companies to purchase a banner ad.

Search engine marketing

Search engine marketing is seen every time someone searches for a product on a search engine. Sometimes referred to as SEM, search engine marketing is a marketing strategy in which ads appear above or below relevant search results. These ads are usually sponsored or paid for, typically on a cost-per-click (CPC) basis. Commonly seen on popular search engines such as Google and Bing, these ads do not stop at search engines but extend to their partner sites, such as Yahoo, YouTube, and shopping sites. Like SEM, search engine optimization (SEO) improves the visibility of a page. Instead of paying for ads, site owners have to appeal to search engines so that their pages rank higher on specific searches.
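The arithmetic behind cost-per-click pricing can be illustrated with a short Python sketch. The function names and all of the figures below are hypothetical; they only show how spend relates to clicks when an advertiser pays per click rather than per display.

```python
def campaign_cost(clicks: int, cost_per_click: float) -> float:
    """Total spend under cost-per-click pricing: only clicks are billed."""
    return clicks * cost_per_click


def effective_cpc(total_spend: float, clicks: int) -> float:
    """Average price actually paid for each click."""
    if clicks == 0:
        raise ValueError("no clicks, so cost per click is undefined")
    return total_spend / clicks


# Hypothetical campaign: the ad is shown 10,000 times and clicked 200 times.
impressions = 10_000
clicks = 200
cpc = 0.50  # assumed price per click, in dollars

spend = campaign_cost(clicks, cpc)
click_through_rate = clicks / impressions

print(f"spend: ${spend:.2f}")
print(f"click-through rate: {click_through_rate:.1%}")
```

Under this model the 9,800 displays that were not clicked cost the advertiser nothing, which is the main contrast with pricing per impression.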

Banner ads

Banner ads are slim ads that can appear vertically, horizontally, or in between blocks of text. They usually consist of pictures and a few words rather than text alone. They are usually clickable: when a user clicks on a banner, it leads to another page that displays the full site.

Banner ads are usually placed in high-traffic areas, and companies have to bid for their ad to be placed there instead of simply paying for the spot. This bidding is not done by humans but by a program that runs without human assistance.
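Automated bidding for a banner slot can be sketched as a simple highest-bid auction. This is a simplified assumption for illustration: the text does not say which auction rules are used, real ad exchanges apply more elaborate ones, and the `Bid` record, the floor price, and the advertiser names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bid:
    advertiser: str  # hypothetical advertiser name
    amount: float    # bid for one banner slot, in dollars


def pick_winner(bids, floor_price=0.0):
    """Return the highest bid at or above the floor, or None if none qualifies.

    Assumed rule: the highest bid wins; on a tie, the earliest bid wins.
    """
    eligible = [b for b in bids if b.amount >= floor_price]
    if not eligible:
        return None
    return max(eligible, key=lambda b: b.amount)


bids = [
    Bid("books.example", 0.80),
    Bid("shoes.example", 1.25),
    Bid("travel.example", 1.10),
]

winner = pick_winner(bids, floor_price=1.00)
print(winner.advertiser if winner else "slot left unsold")
```

Because the selection is a pure function of the submitted bids, it can run for every page load without a person in the loop, which is the point the paragraph above makes.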

Unlike personalization, banner ads can reach everyone, which can spark interest in new people rather than staying within one group of consumers. However, they can also be shown to those who are interested in a specific product. Ultimately, banner ads are intended to increase, not decrease, attraction towards a site.

Facebook

Facebook was founded by Mark Zuckerberg

in 2004 and was originally only available to Harvard students as an

interactive college student network, but it soon expanded to many other

college campuses throughout the United States. By September 2006,

Facebook expanded outside of just educational institutions and became

available to any person with a registered email address.

After Sean Parker, the founder of Napster, became the president of

Facebook, Mark Zuckerberg was introduced to Peter Thiel, a venture

capitalist and one of the founders of PayPal,

which resulted in Thiel investing $500,000 into Facebook, establishing

Facebook as an up and coming company that would interest other

investors.

In April 2004, Facebook's ad sales effort, which was led by co-founder Eduardo Saverin,

was an example of an early commercial use of social media. The ad rates

were as low as $1 per 1,000 impressions and Facebook offered its

services to companies who wanted to advertise themselves through this

platform. Facebook enabled companies to create targeted advertisements

based on a variety of consumer-related factors such as

college/university, degree type, sexual orientation, age, personal

interests, and political views. Companies were also provided with an

up-to-the-minute ad performance tracking service and had rates that

differed depending on the type of advertisement (run-of-site ads,

targeted ads, etc.).

Twitter

Twitter emerged from Odeo, a podcasting venture that was launched in 2004 and

founded by Evan Williams, Biz Stone, and Noah Glass. After Apple's

announcement in 2005 that they would add podcasts to iTunes, the leaders

of Odeo wanted to take the company in a new direction since they

believed that they could not compete with Apple in regards to podcasts.

Engineer Jack Dorsey suggested that the company could provide a short message service

(SMS) that enables friends to share short blog-like updates to one

another. Using Dorsey's idea, in 2006, Twitter was officially launched.

Throughout Twitter's lifespan, there have been various examples

of it being used for commercial purposes. Twitter's implementation of

hashtags allows companies to go viral on the platform and gain a

significant amount of attention for launching a successful hashtag.

Nike's "Dream Big" campaign used the hashtag "#justdoit" to promote

their message, which focused on the stories of famous athletes who

became successful after not letting fear stop them from achieving their

goals in order to motivate their followers to chase their own dreams.

The success and traction of Nike's "Dream Big" campaign is an example of how Twitter as a platform was used to benefit companies whose goal was to increase their visibility to a large number of people using Twitter.

Viral marketing is also present on Twitter as companies use their posts

to touch upon current issues that are being heavily discussed. This

method of using Twitter to keep their brands up to date with the trends

creates more opportunities for companies to interact with users and

potential buyers in ways that are relevant to them.

The emergence of the mobile Internet

Modern cellular telephony was preceded by mobile radio telephone systems, referred to as zero generation (0G or pre-cellular) systems, which were later succeeded by 1G. The world's first commercial 1G mobile network, launched in 1979 by Nippon Telegraph and Telephone

(NTT), was made available to the citizens of Tokyo, Japan. Following

Japan, other countries started attaining 1G coverage. Although cellphone

prototypes followed the launch of 1G, it wasn't until 1983 that

Motorola rolled out to the public the first commercially available

cellphone.

2G replaced 1G's analog technology with digital telecommunications standards. 2G cellular networks were first commercially launched on the Global System for Mobile Communications (GSM) standard in Finland in 1991. 2G made significant changes, building on 1G's voice calls with improved quality and download speed and introducing digitally encrypted phone calls (encryption was not end to end; it covered conversations between the mobile phone and the cellular base station).

2G also marked the start of data services for mobile devices and enabled access to media content. The introduction of 2G's data transfer capabilities, text messages (SMS) and multimedia messages (MMS), changed how people communicate. As methods of communication shifted, the 2G network paved the way for the mass adoption of smartphones. As demand for data and connectivity increased, the 2G network was superseded by 3G, followed by 4G, and later 5G.

3G Technology

The first commercial launch of 3G technology was deployed for the public by NTT Docomo

in Japan in 2001. 3G networks implemented new technology and protocols

that offered significantly faster data transfer capabilities, which

improved connectivity, call quality, and connection speed, with average rates about 30 times higher (3 Mbps) than those of 2G (0.1 Mbps).
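To make the quoted rates concrete, the following Python sketch compares transfer times at 0.1 Mbps and 3 Mbps. The 5 MB file size is a hypothetical example, and the calculation ignores latency and protocol overhead, so it is a rough estimate rather than a measurement.

```python
RATE_2G_MBPS = 0.1  # average 2G rate quoted in the text
RATE_3G_MBPS = 3.0  # average 3G rate quoted in the text


def download_seconds(size_megabytes: float, rate_mbps: float) -> float:
    """Seconds needed to transfer a file, ignoring latency and overhead."""
    size_megabits = size_megabytes * 8  # 1 byte = 8 bits
    return size_megabits / rate_mbps


file_mb = 5  # hypothetical file size, roughly one compressed song

seconds_2g = download_seconds(file_mb, RATE_2G_MBPS)
seconds_3g = download_seconds(file_mb, RATE_3G_MBPS)

print(f"2G: about {seconds_2g:.0f} s")
print(f"3G: about {seconds_3g:.0f} s")
print(f"speed-up: about {seconds_2g / seconds_3g:.0f}x")
```

At these rates the same file takes several minutes on 2G and well under half a minute on 3G, which is the practical meaning of a thirtyfold increase.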

When 3G infrastructure launched more broadly in 2002, its networks

continued to develop in not only quality and speed but also range and

volume, setting the early commercial foundations of the mobile Internet.

Smartphones quickly gained popularity, as 3G was the first cellular communications network to broaden and improve a range of features, kick-starting the transition to widespread usage of

cellular networks. In 2002, BlackBerry launched its first mobile device

(BlackBerry 5810), offering a full keyboard, advanced security, and

internet access. The company characterized it as a "breakthrough in

wireless convergence," and touted the wireless handheld device for

mobile services ranging from email delivery and SMS,

to streaming and web browsing, to graphical interfaces and utility

features. Later, the BlackBerry 5810 was replaced by more advanced models produced iteratively by both BlackBerry and its competitors.

In 2007, Apple released the company's first-ever phone, iPhone 2G (also known as iPhone 1 or the original iPhone). At Macworld 2007, Steve Jobs

presented the phone as the one device allowing capabilities of "an

iPod, a phone, and an internet communicator." Following the iPhone 2G, the iPhone 3G (also known as iPhone 2) was introduced along with the company's App Store. The App Store platform, developed and maintained by Apple, utilizes the 3G network, providing access to mobile applications on the company's operating systems.

Jointly, the introduction of the App Store marketplace and the

maturation of the iPhone established the idea that an electronic device

need not be rigid functionally. The device contributed to the transition

to mobile as it speedily evolved into a dominant platform of the mobile

web, making available to the public increasing access to apps and data.

4G Technology

4G, unlike previous networks designed primarily for voice communication (2G and 3G), is the first network designed specifically for data transmission, driven by demand for faster data and the expansion of network capabilities. The network marked the start of today's standard services, offering faster data access on mobile phones.

Some improvements and applications brought about by LTE and 4G include:

- Streaming quality (higher resolution and better audio quality)

- Upload and download rates

- Video calls

- More on-the-go entertainment, including influence on social media platforms and gaming services

- Wearable tech products

By removing the constraints of limited data transmission, the network contributed to advanced mobile applications and their unique features. Network users became able to share high-resolution images and videos from their mobile devices, leading social media and gaming platforms to shift and create features that take advantage of the network's new capabilities. While progress in the 4G network helped further improve data transfer speeds, the network reached its capacity, and rapidly released new mobile and wearable products required faster networks.

5G Technology

At the heart of the 5G network is 3GPP (3rd Generation Partnership Project), which developed the air interface and service layer design for 5G. 3GPP encompasses infrastructure vendors, device manufacturers, and network and service providers.

The initial idea of the 5G network was to connect all machines, objects, and devices together virtually. 5G would be more reliable, with

massive network capacity and a uniform user experience. No specific

company owns 5G, but a plethora of different companies contribute to

bringing it to its full potential.

5G works by using OFDM (orthogonal frequency-division multiplexing), which sends digital signals across multiple subcarrier channels at once to interconnect various devices. With wider bandwidth, 5G has larger capacity and lower latency than its predecessors.
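The core idea of OFDM, placing one symbol on each of several orthogonal subcarriers and combining them with an inverse Fourier transform, can be sketched in plain Python. This is a toy model under stated assumptions: it uses BPSK symbols and eight subcarriers, and it omits the cyclic prefix, channel noise, and everything else a real 5G air interface specifies. All function names are hypothetical.

```python
import cmath


def idft(symbols):
    """Inverse DFT: combine one symbol per subcarrier into time samples."""
    n = len(symbols)
    return [
        sum(symbols[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def dft(samples):
    """Forward DFT: separate time samples back into per-subcarrier symbols."""
    n = len(samples)
    return [
        sum(samples[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def modulate(bits):
    """Map each bit to a BPSK symbol (+1 or -1) on its own subcarrier."""
    return idft([1 if b else -1 for b in bits])


def demodulate(samples):
    """Recover each bit from the sign of its subcarrier's real part."""
    return [1 if s.real > 0 else 0 for s in dft(samples)]


bits = [1, 0, 1, 1, 0, 0, 1, 0]    # one bit per subcarrier (assumed)
signal = modulate(bits)            # time-domain samples sent over the air
recovered = demodulate(signal)     # receiver separates the subcarriers

print(recovered == bits)
```

Because the subcarriers are orthogonal, the forward transform at the receiver separates them cleanly even though they overlap in time, which is what lets many parallel channels share one band.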

From 2013 to 2016, companies such as Verizon, Samsung, Google, BT, Nokia, and others began to develop their own versions of 5G networks, including Google’s Skybender.

Global operators began launching new 5G networks in 2019. By 2020, 5G

networks were integrated into the majority of mobile phones as well as

in-home modems.

Some characteristics and effects of the 5G network:

- Speeds twice as fast as 4G

- Ability to download movies within seconds

- Higher frequencies, which allow for faster data travel with less clutter

- Mobile phone becomes the dominant platform for video consumption

- Mass roll-out and quality improvement of virtual reality

Facebook has taken advantage of the spread of 5G networks. Facebook

took over a 5G company, Inovi, and partnered with a startup company,

Common Networks, to help power home use of 5G.

Facebook had already invested in Oculus, with the idea that Virtual Reality and 5G will innovate social media usage. Mark Zuckerberg and Facebook have already incorporated VR into applications such as VR chat, Facebook spaces,

and Oculus Home. Users can communicate with one another through avatars

and specialized 3D audio technology, play virtual games, watch content

together, and visit virtual space stations.

E-commerce

E-commerce stands for electronic commerce and pertains to buying and trading goods through electronic mediums. It enables shoppers to do their shopping online at home instead of going into physical stores. With the development of and better access to the Internet, e-commerce has allowed larger and smaller businesses to grow at a faster rate, and it cuts down expenses in retail shopping.

History and development of e-commerce

The idea of e-commerce can be traced back to the 1960s with the development of Electronic Data Interchange, which enabled data exchange through digital transactions without human interaction. Early forms of e-commerce date to 1979, when Michael Aldrich connected a TV to a transaction-processing computer using a telephone line and called it teleshopping.

In 1992, Book Stacks Unlimited became the first store to host an e-commerce site, and additional companies like Dell followed suit by adopting related e-commerce models. The development of Secure Sockets Layer (SSL), an encryption protocol, provided better security for data transmission over the Internet. This gave online shoppers fewer hesitations and concerns while shopping online.

Impact on the retail industry

E-commerce was a stepping stone toward cutting costs for customers and bringing in more profit for businesses.

A traditional business selling shirts would have to go through

warehouses and distributors before the product ends up in the store,

which all bring additional costs to the business.

Internet access allowed individuals to do their shopping on their computers or phones. The change that e-commerce brought to the shopping industry had negative impacts on the retail sector. Businesses closed stores to cut the costs of selling their products, which led to employees losing their jobs, since businesses found it easier and cheaper to sell their products online. With the decline in physical retail stores, customers are unable to go to a store and try a product before buying it; this creates a bigger hassle for customers when a new item does not fit or work and has to be shipped back.

Role of e-commerce in 21st century

Amazon.com, created by Jeff Bezos, started out as a bookstore; today, Amazon.com offers a wide range of products on its website. Amazon.com is known for its Prime service with 2-day delivery, which attracted shoppers since they were able to get different items delivered to their front doorstep. In some cases, groceries and household supplies can also be delivered through Amazon.com, making shopping easier because shoppers do not have to leave their homes.

Brands like Nike and Adidas

would promote their Cyber Monday deals to encourage people to buy their

products online without having to deal with the long lines when

shopping during Black Friday.

Smaller businesses were able to sell their products online and promote them using Internet advertisements.

The creation of applications like Shopify

allows users to develop their own website to sell their product and

build a reputable brand with the help of tutorials and instructions. The

developments of PayPal and other payment methods gave customers an easier and faster way of paying by signing into their accounts.

5G and Online Shopping

Augmented reality,

Virtual reality and 5G networks have given rise to revolutionary online

shopping practices. By using AR to achieve a hyper-realistic virtual

presentation of the physical world, online shopping stores have immersed

their consumers into the digital future of trying and buying products

online. Facebook has also started to test AR advertisements on its platform, and has collaborated with businesses to advertise using AR on Facebook Messenger since 2018. These ads are distinguished by their "tap and try" feature, with companies virtually demonstrating their product or service to prospective buyers with Facebook as the middleman.

The use of 5G allows brands to utilize big data for hyper-personalized advertising. Because consumers use the Internet so often, large amounts of data allow brands to micro-segment their target audiences, a form of digital marketing not seen before.

Internet Privacy

NSA disclosures

In 2013, Edward Snowden, a former intelligence contractor for Booz Allen Hamilton in Hawaii, leaked classified documents from the National Security Agency

(NSA) to journalists Glenn Greenwald and Laura Poitras. These documents

revealed information including the fact that the NSA collected millions

of Verizon customers' telephone records and used a program called Prism

to access data from Internet companies such as Google and Facebook.

When people became more aware of the mass surveillance being done by

the NSA, Americans became more disapproving of the government's

surveillance program, which served as an anti-terrorism effort, and the

majority of Americans started to believe that having control of who is

able to access their personal and private information is important.

Internet privacy became an important concept for companies when it came to reassuring their customers after the information Snowden leaked.

Facebook is an example of one of these companies, which was shown during

Facebook's F8 developer conference in 2019, where Zuckerberg stated

"The future is private...Over time, I believe that a private social

platform will be even more important to our lives than our digital town

squares. So today, we’re going to start talking about what this could

look like as a product, what it means to have your social experience be

more intimate, and how we need to change the way we run this company in

order to build this."

Facebook scandal

In 2018, the Cambridge Analytica scandal drew attention to what was being done with Facebook users' information. The scandal centered on the election and on how data had been harvested from Facebook users and used for advertising.

The well-known social media platform was sued for a personal data breach. The case argued that data had been taken without consent, which constituted a failure to comply with legal obligations under the Data Protection Act of 1998.

The Cambridge Analytica scandal did not change Facebook so much as the way individuals see social media applications. Cambridge Analytica wanted Facebook to fix the privacy issues within its application; however, Facebook was not taking the steps that Cambridge Analytica wanted it to take. Even so, the scandal opened the eyes of Facebook users, because they saw what was being done with the data they give to Facebook.

![{\displaystyle {\begin{aligned}\Psi &={\frac {i\Psi '}{z\lambda }}\int _{-{\frac {a}{2}}}^{\frac {a}{2}}\int _{-\infty }^{\infty }e^{-ik\left[z+{\frac {\left(x-x'\right)^{2}+y^{\prime 2}}{2z}}\right]}\,dy'\,dx'\\&={\frac {i\Psi ^{\prime }}{z\lambda }}e^{-ikz}\int _{-{\frac {a}{2}}}^{\frac {a}{2}}e^{-ik\left[{\frac {\left(x-x'\right)^{2}}{2z}}\right]}\,dx^{\prime }\int _{-\infty }^{\infty }e^{-ik\left[{\frac {y^{\prime 2}}{2z}}\right]}\,dy'\\&=\Psi ^{\prime }{\sqrt {\frac {i}{z\lambda }}}e^{\frac {-ikx^{2}}{2z}}\int _{-{\frac {a}{2}}}^{\frac {a}{2}}e^{\frac {ikxx'}{z}}e^{\frac {-ikx^{\prime 2}}{2z}}\,dx'\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/28dd79cf3a40895f3ebabb7dee02ba60f66e8ee3)

![{\displaystyle \Psi =aC{\frac {\sin {\frac {ka\sin \theta }{2}}}{\frac {ka\sin \theta }{2}}}=aC\left[\operatorname {sinc} \left({\frac {ka\sin \theta }{2}}\right)\right]}](https://wikimedia.org/api/rest_v1/media/math/render/svg/f79356554dbbba3ea663d9f2fa6d6aba14867908)

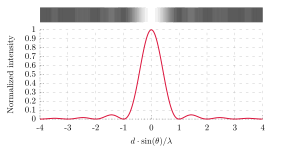

![{\displaystyle I(\theta )=I_{0}{\left[\operatorname {sinc} \left({\frac {\pi a}{\lambda }}\sin \theta \right)\right]}^{2}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/46e0810dc7aa8ca2487a4f2244139665f0f1d4ea)

![{\displaystyle {\begin{aligned}\Psi &=aC{\frac {\sin {\frac {ka\sin \theta }{2}}}{\frac {ka\sin \theta }{2}}}\left({\frac {1-e^{iNkd\sin \theta }}{1-e^{ikd\sin \theta }}}\right)\\[1ex]&=aC{\frac {\sin {\frac {ka\sin \theta }{2}}}{\frac {ka\sin \theta }{2}}}\left({\frac {e^{-iNkd{\frac {\sin \theta }{2}}}-e^{iNkd{\frac {\sin \theta }{2}}}}{e^{-ikd{\frac {\sin \theta }{2}}}-e^{ikd{\frac {\sin \theta }{2}}}}}\right)\left({\frac {e^{iNkd{\frac {\sin \theta }{2}}}}{e^{ikd{\frac {\sin \theta }{2}}}}}\right)\\[1ex]&=aC{\frac {\sin {\frac {ka\sin \theta }{2}}}{\frac {ka\sin \theta }{2}}}{\frac {\frac {e^{-iNkd{\frac {\sin \theta }{2}}}-e^{iNkd{\frac {\sin \theta }{2}}}}{2i}}{\frac {e^{-ikd{\frac {\sin \theta }{2}}}-e^{ikd{\frac {\sin \theta }{2}}}}{2i}}}\left(e^{i(N-1)kd{\frac {\sin \theta }{2}}}\right)\\[1ex]&=aC{\frac {\sin \left({\frac {ka\sin \theta }{2}}\right)}{\frac {ka\sin \theta }{2}}}{\frac {\sin \left({\frac {Nkd\sin \theta }{2}}\right)}{\sin \left({\frac {kd\sin \theta }{2}}\right)}}e^{i\left(N-1\right)kd{\frac {\sin \theta }{2}}}\end{aligned}}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/7477cf078ea2140bba1611474083e5460b9e2b29)

![{\displaystyle I\left(\theta \right)=I_{0}\left[\operatorname {sinc} \left({\frac {\pi a}{\lambda }}\sin \theta \right)\right]^{2}\cdot \left[{\frac {\sin \left({\frac {N\pi d}{\lambda }}\sin \theta \right)}{\sin \left({\frac {\pi d}{\lambda }}\sin \theta \right)}}\right]^{2}}](https://wikimedia.org/api/rest_v1/media/math/render/svg/817c1f8594184fc46dc4a12c31e54b59e644c4f8)