Logical failures occur when the hard drive is functional but the user or the operating system cannot retrieve or access the data stored on it. Logical failures can occur due to corruption of the engineering chip, lost partitions, firmware failure, or failures during formatting or re-installation.

Data recovery can range from a very simple task to a demanding technical challenge, which is why some software companies specialize in this field.

About

The most common data recovery scenarios involve an operating system failure, malfunction of a storage device, logical failure of storage devices, or accidental damage or deletion (typically on a single-drive, single-partition, single-OS system), in which case the ultimate goal is simply to copy all important files from the damaged media to another new drive. This can be accomplished by booting from a Live CD, Live DVD, or USB drive instead of the corrupted drive in question. Many Live CDs or DVDs provide a means to mount the system drive and backup drives or removable media, and to move the files from the system drive to the backup media with a file manager or optical disc authoring software. Such cases can often be mitigated by disk partitioning and consistently storing valuable data files (or copies of them) on a different partition from the replaceable OS system files.

Another scenario involves a drive-level failure, such as a compromised file system or drive partition, or a hard disk drive failure. In any of these cases, the data is not easily read from the media devices. Depending on the situation, solutions include repairing the logical file system, partition table, or master boot record; updating the firmware; or applying drive recovery techniques that range from software-based recovery of corrupted data, to hardware- and software-based recovery of damaged service areas (also known as the hard disk drive's "firmware"), to hardware replacement on a physically damaged drive so that data can be extracted to a new drive. If a drive recovery is necessary, the drive itself has typically failed permanently, and the focus is rather on a one-time recovery, salvaging whatever data can be read.

In a third scenario, files have been accidentally "deleted" from a storage medium by the users. Typically, the contents of deleted files are not removed immediately from the physical drive; instead, references to them in the directory structure are removed, and the space the deleted data occupied is made available for later overwriting. From the end user's point of view, deleted files are no longer discoverable through a standard file manager, but the deleted data still technically exists on the physical drive. In the meantime, the original file contents remain, often in several disconnected fragments, and may be recoverable if not overwritten by other data files.
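The behavior described above can be illustrated with a toy model. The following is a minimal sketch, not a real file system: the `ToyVolume` class, its block size, and its allocation policy are all hypothetical and chosen only to show that deletion removes the directory reference while the block contents survive until they are reused.

```python
class ToyVolume:
    """Toy volume: fixed-size blocks plus a directory mapping names to block lists."""

    def __init__(self, num_blocks=8, block_size=4):
        self.block_size = block_size
        self.blocks = [b"\x00" * block_size for _ in range(num_blocks)]
        self.free = list(range(num_blocks))  # block numbers available for allocation
        self.directory = {}                  # file name -> list of block numbers

    def write_file(self, name, data):
        size = self.block_size
        chunks = [data[i:i + size] for i in range(0, len(data), size)]
        if len(chunks) > len(self.free):
            raise OSError("volume full")
        used = []
        for chunk in chunks:
            block_no = self.free.pop(0)
            self.blocks[block_no] = chunk.ljust(size, b"\x00")
            used.append(block_no)
        self.directory[name] = used

    def delete_file(self, name):
        # Only the directory reference is dropped; the block contents are untouched
        # and the blocks are merely marked as available for reuse.
        self.free.extend(self.directory.pop(name))

    def raw_scan(self):
        """Return the raw contents of the whole volume, ignoring the directory."""
        return b"".join(self.blocks)


vol = ToyVolume()
vol.write_file("note.txt", b"secret42")
vol.delete_file("note.txt")

visible_after_delete = "note.txt" in vol.directory          # what a file manager sees
recoverable_after_delete = b"secret42" in vol.raw_scan()    # what a raw scan sees

vol.write_file("new.txt", b"A" * 32)                        # reuses every block
recoverable_after_overwrite = b"secret42" in vol.raw_scan()

print(visible_after_delete, recoverable_after_delete, recoverable_after_overwrite)
```

In this model the deleted file disappears from the directory at once, stays readable in a raw scan, and is lost only after new data reuses its blocks.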

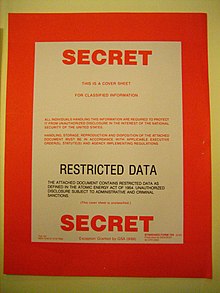

The term "data recovery" is also used in the context of forensic applications or espionage, where data which have been encrypted, hidden, or deleted, rather than damaged, are recovered. Sometimes data on a computer are encrypted or hidden by causes such as virus attacks, and can then be recovered only by computer forensic experts.

Physical damage

A wide variety of failures can cause physical damage to storage media, which may result from human errors and natural disasters. CD-ROMs can have their metallic substrate or dye layer scratched off; hard disks can suffer from a multitude of mechanical failures, such as head crashes, PCB failure, and failed motors; tapes can simply break.

Physical damage to a hard drive, even in cases where a head crash has occurred, does not necessarily mean there will be a permanent loss of data. The techniques employed by many professional data recovery companies can typically salvage most, if not all, of the data that had been lost when the failure occurred.

Of course, there are exceptions to this, such as cases where severe damage to the hard drive platters may have occurred. However, if the hard drive can be repaired and a full image or clone created, then the logical file structure can be rebuilt in most instances.

Most physical damage cannot be repaired by end users. For example, opening a hard disk drive in a normal environment can allow airborne dust to settle on the platter and become caught between the platter and the read/write head. During normal operation, read/write heads float 3 to 6 nanometers above the platter surface, and the average dust particles found in a normal environment are typically around 30,000 nanometers in diameter. When these dust particles get caught between the read/write heads and the platter, they can cause new head crashes that further damage the platter and thus compromise the recovery process. Furthermore, end users generally do not have the hardware or technical expertise required to make these repairs. Consequently, data recovery companies are often employed to salvage important data with the more reputable ones using class 100 dust- and static-free cleanrooms.
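To put the figures above in proportion, the short calculation below compares the quoted dust particle diameter with the quoted head flying height. It uses only the numbers given in the text; the variable names are illustrative.

```python
FLY_HEIGHTS_NM = (3, 6)      # head-to-platter gap quoted above, in nanometers
DUST_DIAMETER_NM = 30_000    # typical dust particle diameter quoted above

# How many times larger a dust particle is than the gap it would have to pass through.
ratios = {gap: DUST_DIAMETER_NM // gap for gap in FLY_HEIGHTS_NM}

for gap, ratio in ratios.items():
    print(f"{gap} nm gap: particle is {ratio:,} times larger")
```

A single particle is therefore roughly 5,000 to 10,000 times larger than the gap between head and platter.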

Recovery techniques

Recovering data from physically damaged hardware can involve multiple techniques. Some damage can be repaired by replacing parts in the hard disk. This alone may make the disk usable, but there may still be logical damage. A specialized disk-imaging procedure is used to recover every readable bit from the surface. Once this image is acquired and saved on a reliable medium, the image can be safely analyzed for logical damage and will possibly allow much of the original file system to be reconstructed.

Hardware repair

A common misconception is that a damaged printed circuit board (PCB) may be simply replaced during recovery procedures by an identical PCB from a healthy drive. While this may work in rare circumstances on hard disk drives manufactured before 2003, it will not work on newer drives. Electronics boards of modern drives usually contain drive-specific adaptation data (generally a map of bad sectors and tuning parameters) and other information required to properly access data on the drive. Replacement boards often need this information to effectively recover all of the data. The replacement board may need to be reprogrammed. Some manufacturers (Seagate, for example) store this information on a serial EEPROM chip, which can be removed and transferred to the replacement board.

Each hard disk drive has what is called a system area or service area; this portion of the drive, which is not directly accessible to the end user, usually contains the drive's firmware and adaptive data that help the drive operate within normal parameters. One function of the system area is to log defective sectors within the drive, essentially telling the drive where it can and cannot write data.

The sector lists are also stored on various chips attached to the PCB, and they are unique to each hard disk drive. If the data on the PCB do not match what is stored on the platter, then the drive will not calibrate properly. In most cases the drive heads will click because they are unable to find the data matching what is stored on the PCB.

Logical damage

The term "logical damage" refers to situations in which the error is not a problem in the hardware and requires software-level solutions.

Corrupt partitions and file systems, media errors

In some cases, data on a hard disk drive can be unreadable due to damage to the partition table or file system, or to (intermittent) media errors. In the majority of these cases, at least a portion of the original data can be recovered by repairing the damaged partition table or file system using specialized data recovery software such as TestDisk; software like ddrescue can image media despite intermittent errors, and image raw data when there is partition table or file system damage. This type of data recovery can be performed by people without expertise in drive hardware, as it requires no special physical equipment or access to platters.
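The idea of imaging media despite read errors can be sketched as follows. This is a simplified illustration and not ddrescue's actual algorithm: the `FlakyDevice` class is a hypothetical stand-in for a failing drive, and `image_device` simply falls back from chunked reads to sector-by-sector reads, leaving unreadable sectors zero-filled and recording their numbers.

```python
SECTOR = 512  # assumed sector size in bytes


class FlakyDevice:
    """Hypothetical failing drive: any read touching a bad sector raises OSError."""

    def __init__(self, data, bad_sectors):
        self.data = data
        self.bad = set(bad_sectors)

    def read_sectors(self, start, count):
        if any(s in self.bad for s in range(start, start + count)):
            raise OSError("read error")
        return self.data[start * SECTOR:(start + count) * SECTOR]


def image_device(dev, total_sectors, chunk=8):
    """Copy the device into memory; return (image, list of unreadable sectors)."""
    image = bytearray(total_sectors * SECTOR)  # unreadable areas stay zero-filled
    unreadable = []
    for start in range(0, total_sectors, chunk):
        count = min(chunk, total_sectors - start)
        try:
            # Fast pass: read the whole chunk in one request.
            image[start * SECTOR:(start + count) * SECTOR] = dev.read_sectors(start, count)
        except OSError:
            # Slow pass: retry sector by sector to salvage the readable ones.
            for s in range(start, start + count):
                try:
                    image[s * SECTOR:(s + 1) * SECTOR] = dev.read_sectors(s, 1)
                except OSError:
                    unreadable.append(s)
    return bytes(image), unreadable


# 32 sectors, each filled with a distinct non-zero byte; sectors 5 and 20 are bad.
data = b"".join(bytes([i + 1]) * SECTOR for i in range(32))
image, unreadable = image_device(FlakyDevice(data, bad_sectors=[5, 20]), 32)
print(unreadable)
```

The list of unreadable sectors plays the role of a map of the damage, so later passes or analysis tools know which parts of the image cannot be trusted.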

Sometimes data can be recovered using relatively simple methods and tools; more serious cases can require expert intervention, particularly if parts of files are irrecoverable. Data carving is the recovery of parts of damaged files using knowledge of their structure.
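As a minimal sketch of data carving, the function below scans raw bytes for the JPEG start-of-image marker (`FF D8 FF`) and the next end-of-image marker (`FF D9`) and extracts everything in between. The function name and the sample buffer are illustrative. Real carving tools must also handle fragmented files and cases where the end marker appears inside the file body (for example in an embedded thumbnail), which this sketch ignores.

```python
JPEG_START = b"\xff\xd8\xff"  # JPEG start-of-image marker plus first marker byte
JPEG_END = b"\xff\xd9"        # JPEG end-of-image marker


def carve_jpegs(raw):
    """Return (offset, bytes) pairs for every contiguous JPEG-like span in raw."""
    found = []
    pos = 0
    while True:
        start = raw.find(JPEG_START, pos)
        if start == -1:
            break
        end = raw.find(JPEG_END, start + len(JPEG_START))
        if end == -1:
            break  # header without footer: truncated or fragmented, skip it
        end += len(JPEG_END)
        found.append((start, raw[start:end]))
        pos = end
    return found


# Synthetic "disk image": two fake JPEG spans surrounded by unrelated bytes.
first = b"\xff\xd8\xff\xe0" + b"fake-jpeg-body" + b"\xff\xd9"
second = b"\xff\xd8\xff\xe1" + b"second" + b"\xff\xd9"
raw = b"\x00" * 10 + first + b"\x11" * 7 + second + b"\x00" * 3

carved = carve_jpegs(raw)
print([(offset, len(blob)) for offset, blob in carved])
```

No file system metadata is consulted: the files are located purely from knowledge of their internal structure.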

Overwritten data

After data has been physically overwritten on a hard disk drive, it is generally assumed that the previous data can no longer be recovered. In 1996, Peter Gutmann, a computer scientist, presented a paper that suggested overwritten data could be recovered through the use of magnetic force microscopy. In 2001, he presented another paper on a similar topic. To guard against this type of data recovery, Gutmann and Colin Plumb designed a method of irreversibly scrubbing data, known as the Gutmann method and used by several disk-scrubbing software packages.

Substantial criticism has followed, primarily dealing with the lack of any concrete examples of significant amounts of overwritten data being recovered. Gutmann's article contains a number of errors and inaccuracies, particularly regarding information about how data is encoded and processed on hard drives. Although Gutmann's theory may be correct, there is no practical evidence that overwritten data can be recovered, while research supports the conclusion that overwritten data cannot be recovered.

Solid-state drives (SSD) overwrite data differently from hard disk drives (HDD), which makes at least some of their data easier to recover. Most SSDs use flash memory to store data in pages and blocks, referenced by logical block addresses (LBA) which are managed by the flash translation layer (FTL). When the FTL modifies a sector, it writes the new data to another location and updates the map so the new data appear at the target LBA. This leaves the pre-modification data in place, possibly in many generations, and recoverable by data recovery software.
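The remapping behavior described above can be modeled with a toy flash translation layer. This is a sketch under simplifying assumptions: the `ToyFTL` class is hypothetical, pages are written strictly in sequence, and there is no garbage collection or TRIM handling, both of which would eventually erase stale pages on a real drive.

```python
class ToyFTL:
    """Toy flash translation layer: every write goes to a fresh physical page."""

    def __init__(self, num_pages=8):
        self.pages = [None] * num_pages  # physical pages, each holds (lba, data)
        self.map = {}                    # LBA -> index of the current physical page
        self.next_free = 0

    def write(self, lba, data):
        if self.next_free >= len(self.pages):
            raise OSError("no free pages (a real FTL would garbage-collect here)")
        self.pages[self.next_free] = (lba, data)
        self.map[lba] = self.next_free   # remap; the old page is left as it was
        self.next_free += 1

    def read(self, lba):
        return self.pages[self.map[lba]][1]

    def stale_versions(self, lba):
        """Older generations of an LBA that are still present in flash."""
        current = self.map.get(lba)
        return [page[1] for i, page in enumerate(self.pages)
                if page is not None and page[0] == lba and i != current]


ftl = ToyFTL()
for version in (b"v1", b"v2", b"v3"):
    ftl.write(7, version)    # "overwrite" the same logical sector three times

current = ftl.read(7)
stale = ftl.stale_versions(7)
print(current, stale)
```

A normal read of the logical address returns only the latest version, while the two earlier generations remain in physical pages that the map no longer points to.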

Lost, deleted, and formatted data

Sometimes, data present on physical drives (internal or external hard disks, pen drives, etc.) is lost, deleted, or formatted due to circumstances such as a virus attack, accidental deletion, or accidental use of SHIFT+DELETE. In these cases, data recovery software is used to recover or restore the data files.

Logical bad sector

Among the logical failures of hard disks, a logical bad sector is the most common fault that renders data unreadable. Sometimes it is possible to sidestep error detection even in software, and perhaps with repeated reading and statistical analysis recover at least some of the underlying stored data. Sometimes prior knowledge of the data stored and the error detection and correction codes can be used to recover even erroneous data. However, if the underlying physical drive is degraded badly enough, at least the hardware surrounding the data must be replaced, or it might even be necessary to apply laboratory techniques to the physical recording medium. Each of the approaches is progressively more expensive, and as such progressively more rarely sought.

Eventually, if the final, physical storage medium has indeed been disturbed badly enough, recovery will not be possible using any means; the information has irreversibly been lost.
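The "repeated reading and statistical analysis" approach mentioned above can be sketched as a per-byte majority vote over several raw reads of the same sector. This is one simple form of such analysis, assumed here for illustration; the function name and the sample reads are hypothetical, and the method only helps when errors are intermittent and differ between reads.

```python
from collections import Counter


def majority_vote(reads):
    """Combine several equal-length reads by taking the most common byte per position."""
    if not reads or len({len(r) for r in reads}) != 1:
        raise ValueError("need at least one read, all of equal length")
    # zip(*reads) yields, for each byte position, the values seen across all reads.
    return bytes(Counter(column).most_common(1)[0][0] for column in zip(*reads))


# Five simulated reads of the same sector, each with at most one flipped byte.
reads = [
    b"HELLO WORLD",
    b"HEXLO WORLD",
    b"HELLO WQRLD",
    b"HELLO WORLD",
    b"JELLO WORLD",
]
recovered = majority_vote(reads)
print(recovered)
```

Because every position is correct in most of the reads, the vote reconstructs the original contents even though three of the five individual reads are wrong.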

Remote data recovery

Recovery experts do not always need to have physical access to the damaged hardware. When the lost data can be recovered by software techniques, they can often perform the recovery using remote access software over the Internet, LAN or other connection to the physical location of the damaged media. The process is essentially no different from what the end user could perform by themselves.

Remote recovery requires a stable connection with an adequate bandwidth. However, it is not applicable where access to the hardware is required, as in cases of physical damage.

Four phases of data recovery

Usually, there are four phases when it comes to successful data recovery, though that can vary depending on the type of data corruption and recovery required.

- Phase 1

- Repair the hard disk drive

- The hard drive is repaired in order to get it running in some form, or at least in a state suitable for reading the data from it. For example, if heads are bad they need to be changed; if the PCB is faulty then it needs to be fixed or replaced; if the spindle motor is bad the platters and heads should be moved to a new drive.

- Phase 2

- Image the drive to a new drive or a disk image file

- When a hard disk drive fails, getting the data off the drive is the top priority. The longer a faulty drive is used, the more likely further data loss is to occur. Creating an image of the drive ensures that there is a secondary copy of the data on another device, on which it is safe to perform testing and recovery procedures without harming the source.

- Phase 3

- Logical recovery of files, partition, MBR and filesystem structures

- After the drive has been cloned to a new drive, it is safe to attempt the retrieval of lost data. A drive can fail logically for a number of reasons. Using the clone, it may be possible to repair the partition table or master boot record (MBR) in order to read the file system's data structure and retrieve stored data.

- Phase 4

- Repair damaged files that were retrieved

- Data damage can be caused when, for example, a file is written to a sector on the drive that has been damaged. This is the most common cause of damaged files on a failing drive, and it means that the data needs to be reconstructed to become readable. Corrupted documents can be recovered by several software methods or by manually reconstructing the document using a hex editor.
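Phase 3 above mentions repairing the partition table or master boot record. Before anything can be repaired, a tool has to read what is currently there. The sketch below parses the classic MBR layout, in which four 16-byte partition entries start at byte offset 446 and the boot signature `55 AA` occupies the last two bytes of the 512-byte sector. The function name and the sample sector are illustrative, and GPT-partitioned disks are not handled.

```python
import struct

ENTRY_FORMAT = "<B3xB3xII"  # status, (CHS skipped), type, (CHS skipped), LBA start, sector count


def parse_mbr(sector):
    """Return the non-empty partition entries of a 512-byte MBR sector."""
    if len(sector) != 512:
        raise ValueError("an MBR sector is 512 bytes")
    if sector[510:512] != b"\x55\xaa":
        raise ValueError("missing 0x55AA boot signature")
    partitions = []
    for index in range(4):
        entry = sector[446 + 16 * index:446 + 16 * (index + 1)]
        status, ptype, lba_start, num_sectors = struct.unpack(ENTRY_FORMAT, entry)
        if ptype != 0:  # partition type 0 marks an unused slot
            partitions.append({
                "index": index,
                "bootable": status == 0x80,
                "type": ptype,
                "lba_start": lba_start,
                "num_sectors": num_sectors,
            })
    return partitions


# Build a synthetic MBR with one bootable partition of type 0x07 starting at LBA 2048.
sector = bytearray(512)
sector[446:462] = struct.pack(ENTRY_FORMAT, 0x80, 0x07, 2048, 204800)
sector[510:512] = b"\x55\xaa"

partitions = parse_mbr(bytes(sector))
print(partitions)
```

Working on the clone rather than the original drive, as the phases above recommend, means a mistaken repair of these 64 bytes can always be undone by re-copying the sector from the image.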

Restore disk

The Windows operating system can be reinstalled on a computer that is already licensed for it. The reinstallation can be done by downloading the operating system or by using a "restore disk" provided by the computer manufacturer. Eric Lundgren was fined and sentenced to U.S. federal prison in April 2018 for producing 28,000 restore disks and intending to distribute them for about 25 cents each as a convenience to computer repair shops.

List of data recovery software

Bootable

Data recovery cannot always be done on a running system. As a result, a boot disk, live CD, live USB, or any other type of live distro containing a minimal operating system is used.

- BartPE: a lightweight variant of Microsoft Windows XP or Windows Server 2003 32-bit operating systems, similar to a Windows Preinstallation Environment, which can be run from a live CD or live USB drive. Discontinued.

- Finnix: a Debian-based Live CD with a focus on being small and fast, useful for computer and data rescue

- Disk Drill Basic: capable of creating bootable Mac OS X USB drives for data recovery

- Knoppix: contains utilities for data recovery under Linux

- SystemRescueCD: an Arch Linux based live CD, useful for repairing unbootable computer systems and retrieving data after a system crash

- Windows Preinstallation Environment (WinPE): A customizable Windows Boot DVD (made by Microsoft and distributed for free). Can be modified to boot to any of the programs listed.

Consistency checkers

- CHKDSK: a consistency checker for DOS and Windows systems

- Disk First Aid: a consistency checker for Mac OS 9

- Disk Utility: a consistency checker for Mac OS X

- fsck: a consistency checker for UNIX

- gparted: a GUI for GNU parted, the GNU partition editor, capable of calling fsck

File recovery

- CDRoller: recovers data from optical disc

- Disk Drill Basic: data recovery application for Mac OS X and Windows

- DMDE: multi-platform data recovery and disk editing tool

- dvdisaster: generates error-correction data for optical discs

- GetDataBack: a Windows recovery program

- Hetman Partition Recovery: data drive recovery solution

- IsoBuster: recovers data from optical discs, USB sticks, flash drives and hard drives

- Mac Data Recovery Guru: Mac OS X data recovery program which works on USB sticks, optical media, and hard drives

- MiniTool Partition Wizard: for Windows 7 and later; includes data recovery

- Norton Utilities: a suite of utilities that has a file recovery component

- PhotoRec: advanced multi-platform program with text-based user interface used to recover files

- Recover My Files: proprietary software for Windows 2000 and later—FAT, NTFS and HFS

- Recovery Toolbox: freeware and shareware tools plus online services for various Windows 2000 and later programs

- Recuva: proprietary software for Windows 2000 and later—FAT and NTFS

- Stellar Data Recovery: data recovery utility for Windows and macOS

- TestDisk: free, open-source, multi-platform tool that recovers files and lost partitions

- TuneUp Utilities: a suite of utilities that has a file recovery component for Windows XP and later

- Windows File Recovery: a command-line utility from Microsoft to recover deleted files for Windows 10 version 2004 and later

Forensics

- Foremost: an open-source command-line file recovery program, originally developed by the U.S. Air Force Office of Special Investigations and NPS Center for Information Systems Security Studies and Research

- Forensic Toolkit: by AccessData, used by law enforcement

- Open Computer Forensics Architecture: An open-source program for Linux

- The Coroner's Toolkit: a suite of utilities for assisting in forensic analysis of a UNIX system after a break-in

- The Sleuth Kit: also known as TSK, a suite of forensic analysis tools developed by Brian Carrier for UNIX, Linux and Windows systems. TSK includes the Autopsy forensic browser.

Imaging tools

- Clonezilla: a free disk cloning, disk imaging, data recovery, and deployment boot disk

- dd: common byte-to-byte cloning tool found on Unix-like systems

- ddrescue: an open-source tool similar to dd but with the ability to skip over and subsequently retry bad blocks on failing storage devices

- Team Win Recovery Project: a free and open-source recovery system for Android devices