Data sanitization involves the secure and permanent erasure of sensitive data from datasets and media to guarantee that no residual data can be recovered even through extensive forensic analysis. Data sanitization has a wide range of applications but is mainly used for clearing out end-of-life electronic devices or for the sharing and use of large datasets that contain sensitive information. The main strategies for erasing personal data from devices are physical destruction, cryptographic erasure, and data erasure. While the term data sanitization may lead some to believe that it only includes data on electronic media, the term also broadly covers physical media, such as paper copies. These data types are termed soft for electronic files and hard for physical media paper copies. Data sanitization methods are also applied for the cleaning of sensitive data, such as through heuristic-based methods, machine-learning based methods, and k-source anonymity.

This erasure is necessary as an increasing amount of data is moving to online storage, which poses a privacy risk when a storage device is resold to another individual. The importance of data sanitization has risen in recent years as private information is increasingly stored in an electronic format and larger, more complex datasets are being utilized to distribute private information. Electronic storage has expanded and enabled more private data to be stored. It therefore requires more advanced and thorough data sanitization techniques to ensure that no data is left on a device once it is no longer in use. Technological tools that enable the transfer of large amounts of data also allow more private data to be shared. Especially with the increasing popularity of cloud-based information sharing and storage, ensuring that all shared data is cleaned has become a significant concern. It is therefore sensible for governments and private industry to create and enforce data sanitization policies to prevent data loss or other security incidents.

Data sanitization policy in public and private sectors

While the practice of data sanitization is common knowledge in most technical fields, it is not consistently understood across all levels of business and government. Thus, a comprehensive data sanitization policy is required in government contracting and private industry in order to avoid the possible loss of data, leaking of state secrets to adversaries, disclosure of proprietary technologies, and possibly being barred from contract competition by government agencies.

With the increasingly connected world, it has become even more critical that governments, companies, and individuals follow specific data sanitization protocols to ensure that the confidentiality of information is sustained throughout its lifecycle. This step is critical to the core Information Security triad of Confidentiality, Integrity, and Availability. This CIA Triad is especially relevant to those who operate as government contractors or handle other sensitive private information. To this end, government contractors must follow specific data sanitization policies and use these policies to enforce the National Institute of Standards and Technology recommended guidelines for Media Sanitization covered in NIST Special Publication 800-88. This is especially prevalent for any government work which requires CUI (Controlled Unclassified Information) or above and is required by DFARS Clause 252.204-7012, Safeguarding Covered Defense Information and Cyber Incident Reporting. While private industry may not be required to follow NIST 800-88 standards for data sanitization, it is typically considered to be a best practice across industries with sensitive data. To further compound the issue, the ongoing shortage of cyber specialists and confusion on proper cyber hygiene has created a skill and funding gap for many government contractors.

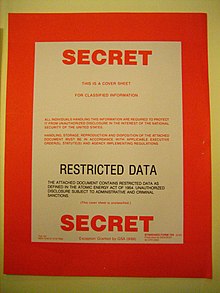

However, failure to follow these recommended sanitization policies may result in severe consequences, including losing data, leaking state secrets to adversaries, losing proprietary technologies, and being barred from contract competition by government agencies. Therefore, the government contractor community must ensure its data sanitization policies are well defined and follow NIST guidelines for data sanitization. Additionally, while data sanitization may seem to focus on electronic “soft copy” data, other data sources such as “hard copy” documents must be addressed in the same sanitization policies.

Data sanitization trends

To examine existing data sanitization policies and determine the impacts of not developing, utilizing, or following these policy guidelines and recommendations, research data was gathered not only from the government contracting sector but also from other critical industries such as Defense, Energy, and Transportation. These were selected as they typically also fall under government regulations, and therefore NIST (National Institute of Standards and Technology) guidelines and policies would also apply in the United States. The primary data comes from a study performed by the independent research company Coleman Parkes Research in August 2019. This research project targeted senior cyber executives and policy makers, surveying over 1,800 senior stakeholders. The data from Coleman Parkes shows that 96% of organizations have a data sanitization policy in place; however, in the United States, only 62% of respondents felt that the policy is communicated well across the business. Additionally, it reveals that remote and contract workers were the least likely to comply with data sanitization policies. This trend has become a more pressing issue as many government contractors and private companies have been working remotely due to the Covid-19 pandemic, and remote work is likely to continue after the return to normal working conditions.

On June 26, 2021, a basic Google search for “data lost due to non-sanitization” returned over 20 million results. These included articles on data breaches and the loss of business, losses of military secrets and proprietary data, PHI (Protected Health Information), and PII (Personally Identifiable Information), as well as many articles on performing essential data sanitization. Many of these articles also point to existing data sanitization and security policies of companies and government entities, such as the U.S. Environmental Protection Agency's "Sample Policy and Guidance Language for Federal Media Sanitization". Based on these articles and NIST 800-88 recommendations, depending on its data security level or categorization, data should be:

- Cleared – Provide a basic level of data sanitization by overwriting data sectors to remove any previous data remnants that a basic format would not include. Again, the focus is on electronic media. This method is typically utilized if the media is going to be re-used within the organization at a similar data security level.

- Purged – May use physical (degaussing) or logical methods (sector overwrite) to make the target media unreadable. Typically utilized when media is no longer needed and holds data at a lower security level.

- Destroyed – Permanently renders the data irretrievable and is commonly used when media is leaving an organization or has reached its end of life, i.e., paper shredding or hard drive/media crushing and incineration. This method is typically utilized for media containing highly sensitive information and state secrets which could cause grave damage to national security or to the privacy and safety of individuals.

Data sanitization road blocks

The International Information Systems Security Certification Consortium 2020 Cyber Workforce study shows that the global cybersecurity industry still has over 3.12 million unfilled positions due to a skills shortage. Therefore, those with the correct skillset to implement NIST 800-88 in policies may come at a premium labor rate. In addition, staffing and funding need to adjust to meet policy needs to properly implement these sanitization methods in tandem with appropriate Data level categorization to improve data security outcomes and reduce data loss. In order to ensure the confidentiality of customer and client data, government and private industry must create and follow concrete data sanitization policies which align with best practices, such as those outlined in NIST 800-88. Without consistent and enforced policy requirements, the data will be at increased risk of compromise. To achieve this, entities must allow for a cybersecurity wage premium to attract qualified talent. In order to prevent the loss of data and therefore Proprietary Data, Personal Information, Trade Secrets, and Classified Information, it is only logical to follow best practices.

Data sanitization policy best practices

Any data sanitization policy must be comprehensive: it should cover all forms of media, including both soft and hard copy data, and it should define data levels together with their corresponding sanitization methods. Categories of data should be defined so that every level of data can be matched to the appropriate sanitization method. For example, controlled unclassified information on electronic storage devices may be cleared or purged, but devices storing secret or top secret classified materials should be physically destroyed.
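The mapping from data category to sanitization method can be sketched as a small lookup table. The example below is illustrative only: the category names, the `MINIMUM_METHOD` table, and the rule that a stronger method always satisfies a weaker requirement are assumptions made for this sketch, not requirements quoted from NIST 800-88 or from any particular organization's policy.

```python
from enum import IntEnum


class Method(IntEnum):
    """Sanitization methods, ordered from least to most thorough."""
    CLEAR = 1
    PURGE = 2
    DESTROY = 3


# Hypothetical policy table: data category -> minimum acceptable method.
# It mirrors the example in the text (CUI may be cleared or purged,
# classified material must be destroyed).
MINIMUM_METHOD = {
    "cui": Method.CLEAR,
    "secret": Method.DESTROY,
    "top_secret": Method.DESTROY,
}


def minimum_method(category):
    """Return the minimum method for a category.

    Unknown categories fall back to DESTROY (an assumption of this sketch:
    uncategorized data is treated as the most sensitive).
    """
    return MINIMUM_METHOD.get(category.lower(), Method.DESTROY)


def is_compliant(category, method):
    """Check whether the method used meets the policy minimum.

    Assumes a stronger method always satisfies a weaker requirement.
    """
    return method >= minimum_method(category)
```

A policy audit could then call `is_compliant("cui", Method.PURGE)` for each disposal record; whether a stronger method should always count as compliant is itself a decision the policy would need to state.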

Any data sanitization policy should be enforceable and show which department and management structure is responsible for ensuring data is sanitized accordingly. This policy will require a high-level management champion (typically the Chief Information Security Officer or another C-suite equivalent) to own the process and to define responsibilities and penalties for parties at all levels. The policy champion's duties include defining roles such as the Information System Owner and Information Owner, which establish the chain of responsibility for data creation and eventual sanitization. The CISO or other policy champion should also ensure funding is allocated to additional cybersecurity workers to implement and enforce policy compliance. Auditing requirements are also typically included to prove media destruction and should be managed by these additional staff. For small businesses and those without a broad cyber background, resources are available in the form of editable data sanitization policy templates. Many groups such as the IDSC (International Data Sanitization Consortium) provide these free of charge on their website https://www.datasanitization.org/.

Without training in data security and sanitization principles, it is unfeasible to expect users to comply with the policy. Therefore, the Sanitization Policy should include a matrix of instruction and frequency by job category to ensure that users, at every level, understand their part in complying with the policy. This task should be easy to accomplish as most government contractors are already required to perform annual Information Security training for all employees. Therefore, additional content can be added to ensure data sanitization policy compliance.

Sanitizing devices

The primary use of data sanitization is for the complete clearing of devices and destruction of all sensitive data once the storage device is no longer in use or is transferred to another Information system. This is an essential stage in the Data Security Lifecycle (DSL) and Information Lifecycle Management (ILM). Both are approaches for ensuring privacy and data management throughout the usage of an electronic device, as it ensures that all data is destroyed and unrecoverable when devices reach the end of their lifecycle.

There are three main methods of data sanitization for complete erasure of data: physical destruction, cryptographic erasure, and data erasure. All three erasure methods aim to ensure that deleted data cannot be accessed even through advanced forensic methods, which maintains the privacy of individuals’ data even after the mobile device is no longer in use.

Physical destruction

Physical destruction involves the manual destruction of stored data. This method uses mechanical shredders to break devices, such as phones, computers, hard drives, and printers, into small individual pieces, or degaussers to render magnetic media unreadable. Different data security levels require different levels of destruction.

Degaussing is most commonly used on hard disk drives (HDDs), and involves the use of high-energy magnetic fields to permanently disrupt the functionality and memory storage of the device. When data is exposed to this strong magnetic field, any memory storage is neutralized and cannot be recovered or used again. Degaussing does not apply to solid state disks (SSDs) as the data is not stored using magnetic methods. When particularly sensitive data is involved, it is typical to utilize processes such as paper pulp, special burn, and solid state conversion. This will ensure proper destruction of all sensitive media, including paper, hard and soft copy media, optical media, and specialized computing hardware.

Physical destruction often ensures that data is completely erased and cannot be used again. However, the by-products of mechanical shredding can be damaging to the environment, although a recent trend of increasing the amount of e-waste material recovered through e-cycling has helped to minimize the environmental impact. Furthermore, once a device is physically destroyed, it can no longer be resold or used again.

Cryptographic erasure

Cryptographic erasure involves the destruction of the secure key or passphrase that is used to protect stored information. Data encryption involves the development of a secure key that enables only authorized parties to gain access to the data that is stored. The permanent erasure of this key ensures that the private data stored can no longer be accessed. Cryptographic erasure is commonly implemented by the manufacturer of the device itself, as encryption software is often built into the device. Encryption with key erasure involves encrypting all sensitive material in a way that requires a secure key to decrypt the information when it needs to be used. When the information needs to be deleted, the secure key can be erased. This provides greater ease of use, and a speedier data wipe, than other software methods because it involves one deletion of secure information rather than the deletion of each individual file.
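The idea of encryption with key erasure can be illustrated with a toy model. The sketch below is not how a self-encrypting drive is implemented: the `ToyEncryptedStore` class is hypothetical, and the cipher is a simple HMAC-SHA256 keystream chosen only so that the example runs with the Python standard library. It should not be used to protect real data. What it shows is that after the key is erased, the ciphertext is still physically present but can no longer be read.

```python
import hashlib
import hmac
import secrets


def _keystream(key, nonce, length):
    """Derive `length` pseudo-random bytes from key and nonce (toy construction)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        block_input = nonce + counter.to_bytes(8, "big")
        out.extend(hmac.new(key, block_input, hashlib.sha256).digest())
        counter += 1
    return bytes(out[:length])


def _xor(data, stream):
    return bytes(a ^ b for a, b in zip(data, stream))


class ToyEncryptedStore:
    """Hypothetical store that only ever keeps ciphertext on its 'media'."""

    def __init__(self):
        self._key = bytearray(secrets.token_bytes(32))  # media encryption key
        self._nonce = b""
        self.ciphertext = b""

    def write(self, plaintext):
        if self._key is None:
            raise PermissionError("key has been erased")
        self._nonce = secrets.token_bytes(16)  # fresh nonce for every write
        stream = _keystream(bytes(self._key), self._nonce, len(plaintext))
        self.ciphertext = _xor(plaintext, stream)

    def read(self):
        if self._key is None:
            raise PermissionError("key has been erased")
        stream = _keystream(bytes(self._key), self._nonce, len(self.ciphertext))
        return _xor(self.ciphertext, stream)

    def crypto_erase(self):
        """Zero the key in place, then drop it; the ciphertext is left untouched."""
        for i in range(len(self._key)):
            self._key[i] = 0
        self._key = None
```

Note that the erase step touches only 32 bytes regardless of how much data is stored, which is why this approach is faster than overwriting every sector.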

Cryptographic erasure is often used for data storage that does not contain as much private information, since there is a possibility that errors can occur due to manufacturing failures or human error during the process of key destruction. This creates a wider range of possible results of data erasure. This method allows the encrypted data to remain on the device and does not require that the device be completely erased. This way, the device can be resold to another individual or company since the physical integrity of the device itself is maintained. However, this assumes that the level of data encryption on the device is resistant to future cryptographic attacks. For instance, a hard drive utilizing cryptographic erasure with a 128-bit AES key may be secure now, but in 5 years it may be common to break this level of encryption. Therefore, the level of data security should be declared in a data sanitization policy to future-proof the process.

Data erasure

The process of data erasure involves masking all information at the byte level through the insertion of random 0s and 1s on all sectors of the electronic equipment that is no longer in use. This software-based method ensures that all previously stored data is completely hidden and unrecoverable, which ensures full data sanitization. The efficacy and accuracy of this sanitization method can also be analyzed through auditable reports.
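As a rough illustration of overwriting followed by verification, the sketch below overwrites a single file with random bytes and returns a small report. It is a simplification made for this example: real data erasure tools operate on whole devices and sectors rather than individual files, and overwriting a file in place does not guarantee that the underlying blocks are overwritten on SSDs, copy-on-write filesystems, or journaling filesystems. The function names and the report fields are hypothetical.

```python
import os
import secrets
import tempfile


def overwrite_file(path, passes=1, chunk_size=1 << 20):
    """Overwrite the file's contents in place with random bytes."""
    size = os.path.getsize(path)
    with open(path, "r+b") as f:
        for _ in range(passes):
            f.seek(0)
            remaining = size
            while remaining > 0:
                n = min(chunk_size, remaining)
                f.write(secrets.token_bytes(n))
                remaining -= n
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push the data to the device
    return {"path": path, "bytes_overwritten": size, "passes": passes}


def contains(path, marker):
    """Verification step: report whether a known marker is still readable."""
    with open(path, "rb") as f:
        return marker in f.read()


def demo():
    """Write a marker to a temporary file, erase it, and verify the result."""
    marker = b"SSN 123-45-6789"
    fd, path = tempfile.mkstemp()
    try:
        os.write(fd, marker * 100)
        os.close(fd)
        report = overwrite_file(path)
        report["marker_found_after"] = contains(path, marker)
        return report
    finally:
        os.remove(path)
```

The returned dictionary stands in for the kind of auditable report mentioned above; a production tool would typically record device identifiers, timestamps, and the verification method as well.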

Data erasure often ensures complete sanitization while also maintaining the physical integrity of the electronic equipment so that the technology can be resold or reused. This ability to recycle technological devices makes data erasure a more environmentally sound version of data sanitization. This method is also the most accurate and comprehensive since the efficacy of the data masking can be tested afterwards to ensure complete deletion. However, data erasure through software based mechanisms requires more time compared to other methods.

Secure erase

A number of storage command sets support a command that, when passed to the device, causes it to perform a built-in sanitization procedure. The following command sets define such a standard command:

- ATA (including SATA) defines a Security Erase command. Two levels of thoroughness are defined.

- SCSI (including SAS and other physical connections) defines a SANITIZE command.

- NVMe defines formatting with secure erase.

- Opal Storage Specification specifies a command set for self-encrypting drives and cryptographic erase, available in addition to command-set methods.

The drive usually performs fast cryptographic erasure when data is encrypted, and a slower data erasure by overwriting otherwise. SCSI allows for asking for a specific type of erasure.

If implemented correctly, the built-in sanitization feature is sufficient to render data unrecoverable, and NIST approves of the use of this feature. There have been a few reported instances of failures to erase some or all data due to buggy firmware, sometimes readily apparent in a sector editor.

Necessity of data sanitization

Mobile devices, Internet of Things (IoT) technologies, cloud-based storage systems, portable electronic devices, and various other electronic methods are increasingly used to store sensitive information. Implementing effective erasure methods once a device is no longer in use has therefore become crucial to protecting sensitive data. Due to the increased usage of electronic devices in general and the increased storage of private information on these devices, the need for data sanitization has become much more urgent in recent years.

There are also specific methods of sanitization that do not fully clean devices of private data, which can prove to be problematic. For example, some remote wiping methods on mobile devices are vulnerable to outside attacks, and their efficacy depends on the particular software installed on each device. Remote wiping involves sending a wireless command to the device when it has been lost or stolen that directs the device to completely wipe out all data. While this method can be very beneficial, it also has several drawbacks. For example, the remote wiping method can be manipulated by attackers to signal the process when it is not yet necessary. This results in incomplete data sanitization. If attackers do gain access to the storage on the device, the user risks exposing all private information that was stored.

Cloud computing and storage have become an increasingly popular method of data storage and transfer. However, there are certain privacy challenges associated with cloud computing that have not been fully explored. Cloud computing is vulnerable to various attacks, such as code injection, path traversal, and resource depletion, because of the shared pool structure of these new techniques. These cloud storage models require specific data sanitization methods to combat these issues. If data is not properly removed from cloud storage models, it opens up the possibility for security breaches at multiple levels.

Risks posed by inadequate data-set sanitization

Inadequate data sanitization methods can result in two main problems: a breach of private information and compromises to the integrity of the original dataset. If data sanitization methods are unsuccessful at removing all sensitive information, there is a risk of leaking this information to attackers. Numerous studies have been conducted to optimize ways of protecting sensitive information. Some data sanitization methods are highly sensitive to isolated points that lie far from the rest of the data. This type of data sanitization is very precise and can detect anomalies even if the poisoned data point is relatively close to true data. Another method of data sanitization also removes outliers in data, but does so in a more general way. It detects the general trend of the data and discards any data that strays from it, and it is able to target anomalies even when they are inserted as a group. In general, data sanitization techniques use algorithms to detect anomalies and remove any suspicious points that may be poisoned data or sensitive information.
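The general approach of estimating the trend of the data and discarding points that stray from it can be sketched with a simple robust filter. The example below is one common statistical technique (a modified z-score based on the median absolute deviation), chosen here for illustration; it is not one of the specific methods from the studies mentioned above, and the function name and the threshold of 3.5 are assumptions of this sketch.

```python
from statistics import median


def remove_outliers(values, threshold=3.5):
    """Drop points whose modified z-score exceeds the threshold.

    The median and the median absolute deviation (MAD) are used instead of
    the mean and standard deviation because they are harder for a few
    injected points to shift.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # Spread cannot be estimated; return the data unchanged rather than guess.
        return list(values)
    return [v for v in values if 0.6745 * abs(v - med) / mad <= threshold]


readings = [10, 11, 9, 10, 12, 10, 95]
cleaned = remove_outliers(readings)  # 95 is far from the trend and is discarded
```

A filter like this illustrates the trade-off discussed next: a lower threshold removes more suspicious points but also discards more legitimate data.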

Furthermore, data sanitization methods may remove useful, non-sensitive information, which renders the sanitized dataset less useful and alters it from the original. There have been iterations of common data sanitization techniques that attempt to correct this loss of original dataset integrity. In particular, Liu, Xuan, Wen, and Song offered a new algorithm for data sanitization called the Improved Minimum Sensitive Itemsets Conflict First Algorithm (IMSICF). Much emphasis is usually placed on protecting the privacy of users, so this method brings a new perspective that also focuses on protecting the integrity of the data. It has three main advantages: it optimizes the process of sanitization by only cleaning the item with the highest conflict count, it keeps the parts of the dataset with the highest utility, and it analyzes the conflict degree of the sensitive material. Robust research was conducted on the efficacy and usefulness of this technique to reveal the ways in which it can help maintain the integrity of the dataset. The technique first pinpoints the specific parts of the dataset that are possibly poisoned data, and then weighs how useful each part is before deciding whether it should be removed. This way of sanitizing data takes the utility of the data into account rather than immediately discarding it.
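The idea of cleaning only the item with the highest conflict count can be illustrated with a much-simplified greedy sketch. This is not the IMSICF algorithm itself: the function names are hypothetical, the "conflict count" is taken here to mean the number of sensitive itemsets an item appears in, and the sketch removes every occurrence of a sensitive itemset rather than lowering its support below a threshold, as published methods typically do.

```python
from collections import Counter


def conflict_counts(sensitive_itemsets):
    """Count how many sensitive itemsets each item appears in."""
    counts = Counter()
    for itemset in sensitive_itemsets:
        counts.update(itemset)
    return counts


def sanitize(transactions, sensitive_itemsets):
    """Hide sensitive itemsets by deleting as few items as possible.

    For each transaction that still contains a sensitive itemset, remove the
    item shared by the most sensitive itemsets, so that one deletion can hide
    several itemsets at once.
    """
    counts = conflict_counts(sensitive_itemsets)
    sanitized = []
    for transaction in transactions:
        items = set(transaction)
        while True:
            present = [s for s in sensitive_itemsets if s <= items]
            if not present:
                break
            candidates = sorted(set().union(*present))  # sorted for determinism
            victim = max(candidates, key=lambda item: counts[item])
            items.discard(victim)
        sanitized.append(items)
    return sanitized


transactions = [{"a", "b", "c"}, {"a", "b"}, {"b", "c", "d"}, {"a", "d"}]
sensitive = [frozenset({"a", "b"}), frozenset({"b", "c"})]
result = sanitize(transactions, sensitive)
```

In the first transaction, removing the single item `b` hides both sensitive itemsets, whereas removing `a` and `c` separately would cost two deletions; this is the kind of utility saving the conflict-count heuristic aims for.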

Applications of data sanitization

Data sanitization methods are also implemented for privacy preserving data mining, association rule hiding, and blockchain-based secure information sharing. These methods involve the transfer and analysis of large datasets that contain private information. This private information needs to be sanitized before being made available online so that sensitive material is not exposed. Data sanitization is used to ensure privacy is maintained in the dataset, even when it is being analyzed.

Privacy preserving data mining

Privacy Preserving Data Mining (PPDM) is the process of data mining while maintaining privacy of sensitive material. Data mining involves analyzing large datasets to gain new information and draw conclusions. PPDM has a wide range of uses and is an integral step in the transfer or use of any large data set containing sensitive material.

Data sanitization is an integral step to privacy preserving data mining because private datasets need to be sanitized before they can be utilized by individuals or companies for analysis. The aim of privacy preserving data mining is to ensure that private information cannot be leaked or accessed by attackers and sensitive data is not traceable to individuals that have submitted the data. Privacy preserving data mining aims to maintain this level of privacy for individuals while also maintaining the integrity and functionality of the original dataset. In order for the dataset to be used, necessary aspects of the original data need to be protected during the process of data sanitization. This balance between privacy and utility has been the primary goal of data sanitization methods.

One approach to achieving this balance of privacy and utility is encrypting and decrypting sensitive information using a process called key generation. After the data is sanitized, key generation is used to ensure that this data is secure and cannot be tampered with. Approaches such as the Rider Optimization Algorithm (ROA), also called Randomized ROA (RROA), use these key generation strategies to find the optimal key so that data can be transferred without leaking sensitive information.

Some versions of key generation have also been optimized to fit larger datasets. For example, a novel, method-based Privacy Preserving Distributed Data Mining strategy is able to increase privacy and hide sensitive material through key generation. This version of sanitization allows large amounts of material to be sanitized. For companies that are seeking to share information with several different groups, this methodology may be preferred over original methods that take much longer to process.

Certain models of data sanitization delete or add information to the original database in an effort to preserve the privacy of each subject. These heuristic-based algorithms are becoming more popular, especially in the field of association rule mining. Heuristic methods involve specific algorithms that use pattern hiding, rule hiding, and sequence hiding to keep specific information hidden. This type of data hiding can be used to cover wide patterns in data, but is not as effective for protecting specific information. Heuristic-based methods are not as suited to sanitizing large datasets; however, recent developments in the field have analyzed ways to tackle this problem. An example is the MR-OVnTSA approach, a heuristics-based sensitive pattern hiding approach for big data, introduced by Shivani Sharma and Durga Toshniwal. Its full name is the ‘MapReduce Based Optimum Victim Item and Transaction Selection Approach’, and it aims to reduce the loss of important data while removing and hiding sensitive information. It takes advantage of algorithms that compare steps and optimize sanitization.

An important goal of PPDM is to strike a balance between maintaining the privacy of users that have submitted the data while also enabling developers to make full use of the dataset. Many measures of PPDM directly modify the dataset and create a new version that makes the original unrecoverable. It strictly erases any sensitive information and makes it inaccessible for attackers.

Association rule mining

One type of data sanitization is rule-based PPDM, which uses defined computer algorithms to clean datasets. Association rule hiding is the process of data sanitization as applied to transactional databases. Transactional databases are the general term for data storage used to record transactions as organizations conduct their business. Examples include shipping payments, credit card payments, and sales orders. One review of the field analyzes fifty-four different methods of data sanitization and presents four major findings on their trends.

Certain new methods of data sanitization rely on machine learning and deep learning. There are various weaknesses in the current use of data sanitization. Many methods are not intricate or detailed enough to protect against more specific data attacks. This effort to maintain privacy while mining important data is referred to as privacy-preserving data mining. Machine learning develops methods that are more adapted to different types of attacks and can learn to face a broader range of situations. Deep learning is able to simplify the data sanitization methods and run these protective measures in a more efficient and less time-consuming way.

There have also been hybrid models that utilize both rule-based and deep learning methods to achieve a balance between the two techniques.

Blockchain-based secure information sharing

Browser backed cloud storage systems are heavily reliant on data sanitization and are becoming an increasingly popular route of data storage. Furthermore, the ease of usage is important for enterprises and workplaces that use cloud storage for communication and collaboration.

Blockchain is used to record and transfer information in a secure way, and data sanitization techniques are required to ensure that this data is transferred more securely and accurately. It is especially applicable for those working in supply chain management and may be useful for those looking to optimize the supply chain process. For example, the Whale Optimization Algorithm (WOA) uses a method of secure key generation to ensure that information is shared securely through the blockchain technique. The need to improve blockchain methods is becoming increasingly relevant as the global level of development increases and becomes more electronically dependent.