From Wikipedia, the free encyclopedia

Artist's rendering of the accretion disk in ULAS J1120+0641, a very distant quasar powered by a black hole with a mass two billion times that of the Sun.[1] Credit: ESO/M. Kornmesser

A quasar (also quasi-stellar object or QSO) is an active galactic nucleus of very high luminosity. A quasar consists of a supermassive black hole surrounded by an orbiting accretion disk of gas. As gas in the accretion disk falls toward the black hole, energy is released in the form of electromagnetic radiation. Quasars emit energy across the electromagnetic spectrum and can be observed at radio, infrared, visible, ultraviolet, and X-ray wavelengths. The most powerful quasars have luminosities exceeding 10^41 W, thousands of times greater than the luminosity of a large galaxy such as the Milky Way.[2]

The term "quasar" originated as a

contraction

of "quasi-stellar radio source", because quasars were first identified

as sources of radio-wave emission, and in photographic images at visible

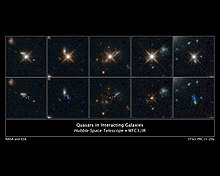

wavelengths they resembled point-like stars. High-resolution images of

quasars, particularly from the

Hubble Space Telescope, have demonstrated that quasars occur in the centers of galaxies, and that some quasar host galaxies are strongly

interacting or

merging galaxies.

[3]

Quasars are found over a very broad range of distances (corresponding to redshifts ranging from z < 0.1 for the nearest quasars to z > 7 for the most distant known quasars), and quasar discovery surveys have demonstrated that quasar activity was more common in the distant past. The peak epoch of quasar activity in the Universe corresponds to redshifts around 2, or approximately 10 billion years ago.[4] As of 2011, the most distant known quasar is at redshift z = 7.085; light observed from this quasar was emitted when the Universe was only 770 million years old.[5]
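The link between redshift and cosmic time quoted above can be checked with a short calculation. The following is a minimal sketch, assuming a flat universe containing only matter and a cosmological constant; the parameter values (H0 = 70 km/s/Mpc, matter density 0.3) are illustrative assumptions rather than figures from the cited sources, so the results agree with the article's numbers only approximately.

```python
import math

# Assumed cosmological parameters (illustrative, not from the article).
H0_KM_S_MPC = 70.0
OMEGA_M = 0.3
OMEGA_L = 1.0 - OMEGA_M          # flatness assumed

KM_PER_MPC = 3.0857e19
SECONDS_PER_GYR = 3.15576e16     # 1e9 Julian years
HUBBLE_TIME_GYR = KM_PER_MPC / H0_KM_S_MPC / SECONDS_PER_GYR


def age_at_redshift_gyr(z):
    """Age of a flat matter + Lambda universe at redshift z, in Gyr.

    Uses the closed-form solution of the Friedmann equation for this
    model (radiation is neglected).
    """
    x = math.sqrt(OMEGA_L / OMEGA_M) * (1.0 + z) ** -1.5
    return HUBBLE_TIME_GYR * 2.0 / (3.0 * math.sqrt(OMEGA_L)) * math.asinh(x)


def lookback_time_gyr(z):
    """Time elapsed between redshift z and today, in Gyr."""
    return age_at_redshift_gyr(0.0) - age_at_redshift_gyr(z)


if __name__ == "__main__":
    print(f"Age at z = 7.085: {age_at_redshift_gyr(7.085):.2f} Gyr")
    print(f"Lookback time to z = 2: {lookback_time_gyr(2.0):.1f} Gyr")
```

Under these assumed parameters the age at z = 7.085 comes out at roughly three quarters of a billion years, and the lookback time to z = 2 at roughly 10 billion years, in line with the figures in the text.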

Overview

Because quasars are distant objects, any light that reaches Earth is redshifted due to the metric expansion of space.[6]

Quasars inhabit the very center of active, young galaxies, and are among the most luminous, powerful, and energetic objects known in the universe, emitting up to a thousand times the energy output of the Milky Way, which contains 200–400 billion stars. This radiation is emitted across the electromagnetic spectrum, almost uniformly, from X-rays to the far-infrared with a peak in the ultraviolet-optical bands, with some quasars also being strong sources of radio emission and of gamma-rays.

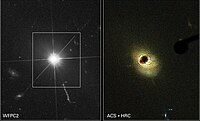

Hubble images of quasar 3C 273. At right, a coronagraph is used to block the quasar's light, making it easier to detect the surrounding host galaxy.

Quasar QSO-160913+653228 is so distant that its light has taken nine billion years to reach the telescope that took this photo, two thirds of the time that has elapsed since the Big Bang.[7]

In early optical images, quasars appeared as point sources, indistinguishable from stars, except for their peculiar spectra. With infrared telescopes and the Hubble Space Telescope, the "host galaxies" surrounding the quasars have been detected in some cases.[8] These galaxies are normally too dim to be seen against the glare of the quasar, except with special techniques. Most quasars, with the exception of 3C 273, whose average apparent magnitude is 12.9, cannot be seen with small telescopes.

The luminosity of some quasars changes rapidly in the optical range and even more rapidly in the X-ray range. Because these changes occur very rapidly, they define an upper limit on the volume of a quasar; quasars are not much larger than the Solar System.[9] This implies an extremely high power density.[10] The mechanism of brightness changes probably involves relativistic beaming of astrophysical jets pointed nearly directly toward Earth. The highest-redshift quasar known (as of June 2011) is ULAS J1120+0641, with a redshift of 7.085, which corresponds to a comoving distance of approximately 29 billion light-years from Earth (see metric expansion of space for more discussion of how cosmological distances can be greater than the light-travel time).
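The comoving distance quoted for ULAS J1120+0641 can be reproduced approximately by integrating the expansion history numerically. This is a sketch under assumed parameters (flat universe, H0 = 70 km/s/Mpc, matter density 0.3, radiation neglected); these values and the function names are illustrative assumptions, not taken from the article's sources.

```python
import math

# Assumed cosmological parameters (illustrative, not from the article).
H0_KM_S_MPC = 70.0
OMEGA_M = 0.3
OMEGA_L = 1.0 - OMEGA_M
C_KM_S = 299_792.458
LY_PER_MPC = 3.2616e6


def inverse_e(z):
    """1 / E(z), where E(z) = H(z) / H0 for flat matter + Lambda."""
    return 1.0 / math.sqrt(OMEGA_M * (1.0 + z) ** 3 + OMEGA_L)


def comoving_distance_gly(z, steps=1000):
    """Line-of-sight comoving distance in billions of light-years.

    Integrates c/H0 * dz/E(z) from 0 to z with Simpson's rule
    (steps must be even).
    """
    h = z / steps
    total = inverse_e(0.0) + inverse_e(z)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * inverse_e(i * h)
    integral = total * h / 3.0
    distance_mpc = C_KM_S / H0_KM_S_MPC * integral
    return distance_mpc * LY_PER_MPC / 1e9


if __name__ == "__main__":
    print(f"Comoving distance at z = 7.085: {comoving_distance_gly(7.085):.1f} Gly")
```

With these assumptions the result is close to 28 billion light-years, more than twice the distance light could cover in the roughly 13 billion years it has been travelling, because space has continued to expand behind it.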

Quasars are believed to be powered by accretion of material into supermassive black holes in the nuclei of distant galaxies, making these luminous versions of the general class of objects known as active galaxies. Since light cannot escape the black holes, the escaping energy is actually generated outside the event horizon by gravitational stresses and immense friction on the incoming material.[11] Central masses of 10^5 to 10^9 solar masses have been measured in quasars by using reverberation mapping.

Several dozen nearby large galaxies, with no sign of a quasar nucleus, have been shown to contain a similar central black hole in their nuclei, so it is thought that all large galaxies have one, but only a small fraction are active (with enough accretion to power radiation), and it is the activity of these black holes that is seen as quasars. The matter accreting onto the black hole is unlikely to fall directly in, but will have some angular momentum around the black hole that will cause the matter to collect into an accretion disc.

Quasars may also be ignited or re-ignited when normal galaxies merge and the black hole is infused with a fresh source of matter. In fact, it has been suggested that a quasar could form as the Andromeda Galaxy collides with our own Milky Way galaxy in approximately 3–5 billion years.[11][12][13]

Properties

The Chandra X-ray image is of the quasar PKS 1127-145, a highly luminous source of X-rays and visible light about 10 billion light years from Earth. An enormous X-ray jet extends at least a million light years from the quasar. Image is 60 arcsec on a side. RA 11h 30m 7.10s, Dec -14° 49' 27" in Crater. Observation date: May 28, 2000. Instrument: ACIS.

More than 200,000 quasars are known, most from the Sloan Digital Sky Survey. All observed quasar spectra have redshifts between 0.056 and 7.085. Applying Hubble's law to these redshifts, it can be shown that they are between 600 million[14] and 28.85 billion light-years away (in terms of comoving distance). Because of the great distances to the farthest quasars and the finite velocity of light, they and their surrounding space appear as they existed in the very early universe.

The power of quasars originates from supermassive black holes that are believed to exist at the core of all galaxies. The Doppler shifts of stars near the cores of galaxies indicate that they are orbiting tremendous masses with very steep gravity gradients, suggesting black holes.

Although quasars appear faint when viewed from Earth, they are visible from extreme distances, being the most luminous objects in the known universe. The brightest quasar in the sky is 3C 273 in the constellation of Virgo. It has an average apparent magnitude of 12.8 (bright enough to be seen through a medium-size amateur telescope), but it has an absolute magnitude of −26.7.[15] From a distance of about 33 light-years, this object would shine in the sky about as brightly as our sun. This quasar's luminosity is, therefore, about 4 trillion (4 × 10^12) times that of the Sun, or about 100 times that of the total light of giant galaxies like the Milky Way.[15] This assumes the quasar is radiating energy in all directions, but the active galactic nucleus is believed to be radiating preferentially in the direction of its jet.
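The luminosity and distance figures for 3C 273 follow from the standard magnitude relations. The sketch below assumes visual magnitudes for the Sun of 4.83 (absolute) and −26.74 (apparent); these solar values and the function names are assumptions introduced for illustration, and the calculation ignores the beaming caveat mentioned above.

```python
# Assumed solar reference values (visual band), not taken from the article.
SUN_ABSOLUTE_MAG = 4.83
SUN_APPARENT_MAG = -26.74
LY_PER_PARSEC = 3.2616


def luminosity_in_suns(absolute_mag):
    """Luminosity relative to the Sun from an absolute magnitude.

    Every 5 magnitudes corresponds to a factor of 100 in brightness.
    """
    return 10 ** (0.4 * (SUN_ABSOLUTE_MAG - absolute_mag))


def distance_for_apparent_mag_ly(absolute_mag, apparent_mag):
    """Distance (light-years) at which an object of the given absolute
    magnitude would show the given apparent magnitude.

    Inverts the distance modulus m - M = 5 * log10(d / 10 pc).
    """
    distance_pc = 10.0 * 10 ** ((apparent_mag - absolute_mag) / 5.0)
    return distance_pc * LY_PER_PARSEC


if __name__ == "__main__":
    m_abs = -26.7  # absolute magnitude of 3C 273 quoted in the text
    print(f"L / L_sun = {luminosity_in_suns(m_abs):.2e}")
    print(f"Sun-like brightness at {distance_for_apparent_mag_ly(m_abs, SUN_APPARENT_MAG):.0f} ly")
```

With these inputs the luminosity ratio comes out near 4 × 10^12 and the distance near 32 light-years, consistent with the rounded values in the text.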

In a universe containing hundreds of billions of galaxies, most of which had active nuclei billions of years ago but are only seen today, it is statistically certain that thousands of energy jets should be pointed toward the Earth, some more directly than others. In many cases it is likely that the brighter the quasar, the more directly its jet is aimed at the Earth.

The hyperluminous quasar APM 08279+5255 was, when discovered in 1998, given an absolute magnitude of −32.2. High resolution imaging with the Hubble Space Telescope and the 10 m Keck Telescope revealed that this system is gravitationally lensed. A study of the gravitational lensing of this system suggests that the light emitted has been magnified by a factor of ~10. It is still substantially more luminous than nearby quasars such as 3C 273.

Quasars were much more common in the early universe than they are today. This discovery by Maarten Schmidt in 1967 was early strong evidence against the Steady State cosmology of Fred Hoyle, and in favor of the Big Bang cosmology. Quasars show the locations where massive black holes are growing rapidly (via accretion). These black holes grow in step with the mass of stars in their host galaxy in a way not understood at present. One idea is that jets, radiation and winds created by the quasars shut down the formation of new stars in the host galaxy, a process called 'feedback'. The jets that produce strong radio emission in some quasars at the centers of clusters of galaxies are known to have enough power to prevent the hot gas in those clusters from cooling and falling onto the central galaxy.

Quasars' luminosities are variable, with time scales that range from months to hours. This means that quasars generate and emit their energy from a very small region, since each part of the quasar would have to be in contact with other parts on such a time scale as to allow the coordination of the luminosity variations. This would mean that a quasar varying on a time scale of a few weeks cannot be larger than a few light-weeks across. The emission of large amounts of power from a small region requires a power source far more efficient than the nuclear fusion that powers stars. The release of gravitational energy[16] by matter falling towards a massive black hole is the only process known that can produce such high power continuously. Stellar explosions (supernovas and gamma-ray bursts) can do likewise, but only for a few weeks. Black holes were considered too exotic by some astronomers in the 1960s. These astronomers also suggested that the redshifts arose from some other (unknown) process, so that the quasars were not really so distant as the Hubble law implied. This "redshift controversy" lasted for many years. Many lines of evidence (optical viewing of host galaxies, finding 'intervening' absorption lines, gravitational lensing) now demonstrate that the quasar redshifts are due to the Hubble expansion, and quasars are in fact as powerful as first thought.[17]
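The size limit in the variability argument above is simply the distance light travels during one variation. A minimal sketch of that light-crossing estimate follows; the function name is hypothetical, and the calculation ignores relativistic beaming, which can change the inferred size.

```python
C_M_S = 299_792_458.0        # speed of light in m/s
AU_M = 1.495978707e11        # astronomical unit in metres
SECONDS_PER_DAY = 86_400.0


def max_size_au(variability_days):
    """Upper limit on the emitting region's size, in astronomical units.

    A source cannot vary coherently faster than light can cross it,
    so its size is at most c multiplied by the variability time scale.
    """
    return C_M_S * variability_days * SECONDS_PER_DAY / AU_M


if __name__ == "__main__":
    for label, days in [("1 hour", 1.0 / 24.0), ("1 day", 1.0), ("3 weeks", 21.0)]:
        print(f"Variability of {label}: size <= {max_size_au(days):.0f} AU")
```

A one-day variation limits the region to about 173 AU, only a few times the diameter of Neptune's orbit, which is the sense in which the emitting region is comparable in size to the Solar System.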

Gravitationally lensed quasar HE 1104-1805.[18]

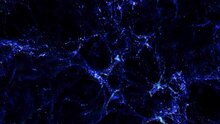

Animation shows the alignments between the spin axes of quasars and the large-scale structures that they inhabit.

Quasars have all the properties of other active galaxies such as Seyfert galaxies, but are more powerful: their radiation is partially 'nonthermal' (i.e., not due to black body radiation), and approximately 10 percent are observed to also have jets and lobes like those of radio galaxies that also carry significant (but poorly understood) amounts of energy in the form of particles moving at relativistic speeds. Extremely high energies might be explained by several mechanisms (see Fermi acceleration and Centrifugal mechanism of acceleration). Quasars can be detected over the entire observable electromagnetic spectrum including radio, infrared, visible light, ultraviolet, X-ray and even gamma rays. Most quasars are brightest in their rest-frame near-ultraviolet, at the 121.6 nm Lyman-alpha emission line of hydrogen, but due to the tremendous redshifts of these sources, that peak luminosity has been observed as far to the red as 900.0 nm, in the near infrared. A minority of quasars show strong radio emission, which is generated by jets of matter moving close to the speed of light. When viewed nearly along the jet axis, these appear as blazars and often have regions that seem to move away from the center faster than the speed of light (superluminal expansion). This is an optical illusion due to the properties of special relativity.
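The apparent faster-than-light motion described above can be illustrated with the standard formula for the apparent transverse speed of a blob moving at speed β (in units of c) at an angle θ to the line of sight. The jet speed and angles used below are arbitrary illustrative values, not measurements of any particular quasar.

```python
import math


def apparent_transverse_speed(beta, angle_deg):
    """Apparent transverse speed in units of c.

    beta      -- true speed of the emitting blob as a fraction of c
    angle_deg -- angle between the jet and the line of sight, in degrees

    The blob nearly keeps up with its own light, which compresses the
    observed time between successive positions and inflates the
    apparent speed.
    """
    theta = math.radians(angle_deg)
    return beta * math.sin(theta) / (1.0 - beta * math.cos(theta))


if __name__ == "__main__":
    for angle in (90.0, 30.0, 10.0):
        speed = apparent_transverse_speed(0.99, angle)
        print(f"beta = 0.99, angle = {angle:4.1f} deg -> apparent speed = {speed:.2f} c")
```

For a jet at 99% of the speed of light seen 10 degrees from the line of sight, the apparent speed is several times c, while the same jet seen side-on (90 degrees) appears to move at just under c. Nothing in the jet actually exceeds the speed of light.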

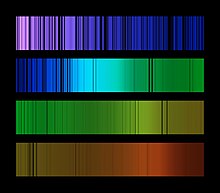

Quasar redshifts are measured from the strong spectral lines that dominate their visible and ultraviolet spectra. These lines are brighter than the continuous spectrum, so they are called 'emission' lines. They have widths of several percent of the speed of light. These widths are due to Doppler shifts caused by the high speeds of the gas emitting the lines. Fast motions strongly indicate a large mass. Emission lines of hydrogen (mainly of the Lyman series and Balmer series), helium, carbon, magnesium, iron and oxygen are the brightest lines. The atoms emitting these lines range from neutral to highly ionized (that is, highly charged). This wide range of ionization shows that the gas is highly irradiated by the quasar, not merely hot, and not by stars, which cannot produce such a wide range of ionization.

Iron quasars, such as IRAS 18508-7815, show strong emission lines resulting from low-ionization iron (Fe II).

Emission generation

This view, taken with infrared light, is a false-color image of a quasar-starburst tandem with the most luminous starburst ever seen in such a combination.

Since quasars exhibit properties common to all active galaxies, the emission from quasars can be readily compared to those of smaller active galaxies powered by smaller supermassive black holes. To create a luminosity of 10^40 watts (the typical brightness of a quasar), a super-massive black hole would have to consume the material equivalent of 10 stars per year. The brightest known quasars devour 1000 solar masses of material every year. The largest known is estimated to consume matter equivalent to 600 Earths per minute. Quasar luminosities can vary considerably over time, depending on their surroundings. Since it is difficult to fuel quasars for many billions of years, after a quasar finishes accreting the surrounding gas and dust, it becomes an ordinary galaxy.
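The accretion rates quoted above can be related to luminosity through L = η Ṁ c², where η is the fraction of the infalling rest mass converted to radiation. The sketch below assumes η = 0.1, a commonly quoted order-of-magnitude value for accretion onto a black hole; that efficiency, the physical constants, and the function names are assumptions introduced for illustration.

```python
C_M_S = 299_792_458.0          # speed of light in m/s
SOLAR_MASS_KG = 1.989e30
EARTH_MASS_KG = 5.972e24
SECONDS_PER_YEAR = 3.15576e7   # Julian year


def accretion_rate_msun_per_year(luminosity_w, efficiency=0.1):
    """Mass accretion rate (solar masses per year) needed to sustain a
    luminosity, from L = efficiency * mdot * c**2.

    The default efficiency of 0.1 is an assumed typical value.
    """
    mdot_kg_s = luminosity_w / (efficiency * C_M_S ** 2)
    return mdot_kg_s * SECONDS_PER_YEAR / SOLAR_MASS_KG


def earths_per_minute_to_msun_per_year(earths_per_minute):
    """Convert an accretion rate in Earth masses per minute to solar
    masses per year."""
    kg_per_s = earths_per_minute * EARTH_MASS_KG / 60.0
    return kg_per_s * SECONDS_PER_YEAR / SOLAR_MASS_KG


if __name__ == "__main__":
    print(f"L = 1e40 W needs about {accretion_rate_msun_per_year(1e40):.0f} solar masses/yr")
    print(f"600 Earths/min is about {earths_per_minute_to_msun_per_year(600):.0f} solar masses/yr")
```

With the assumed 10% efficiency, a 10^40 W quasar needs on the order of ten to twenty solar masses per year, the same order of magnitude as the figure in the text, and 600 Earth masses per minute works out to a little under 1000 solar masses per year, matching the rate quoted for the brightest quasars.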

Quasars also provide some clues as to the end of the Big Bang's reionization. The oldest known quasars (redshift ≥ 6) display a Gunn-Peterson trough and have absorption regions in front of them indicating that the intergalactic medium at that time was neutral gas. More recent quasars show no absorption region but rather their spectra contain a spiky area known as the Lyman-alpha forest; this indicates that the intergalactic medium has undergone reionization into plasma, and that neutral gas exists only in small clouds.

Quasars show evidence of elements heavier than helium, indicating that galaxies underwent a massive phase of star formation, creating population III stars between the time of the Big Bang and the first observed quasars. Light from these stars may have been observed in 2005 using NASA's Spitzer Space Telescope,[19] although this observation remains to be confirmed.

Like all (unobscured) active galaxies, quasars can be strong X-ray sources. Radio-loud quasars can also produce X-rays and gamma rays by inverse Compton scattering of lower-energy photons by the radio-emitting electrons in the jet.[20]

History of observation

Picture shows a cosmic mirage known as the Einstein Cross. Four apparent images are actually from the same quasar.

The first quasars (3C 48 and 3C 273) were discovered in the late 1950s, as radio sources in all-sky radio surveys.[21][22][23][24] They were first noted as radio sources with no corresponding visible object. Using small telescopes and the Lovell Telescope as an interferometer, they were shown to have a very small angular size.[25] Hundreds of these objects were recorded by 1960 and published in the Third Cambridge Catalogue as astronomers scanned the skies for their optical counterparts. In 1963, a definite identification of the radio source 3C 48 with an optical object was published by Allan Sandage and Thomas A. Matthews. Astronomers had detected what appeared to be a faint blue star at the location of the radio source and obtained its spectrum. Containing many unknown broad emission lines, the anomalous spectrum defied interpretation; a claim by John Bolton of a large redshift was not generally accepted.

In 1962 a breakthrough was achieved. Another radio source, 3C 273, was predicted to undergo five occultations by the Moon. Measurements taken by Cyril Hazard and John Bolton during one of the occultations using the Parkes Radio Telescope allowed Maarten Schmidt to optically identify the object and obtain an optical spectrum using the 200-inch Hale Telescope on Mount Palomar. This spectrum revealed the same strange emission lines. Schmidt realized that these were actually spectral lines of hydrogen redshifted by 15.8 percent. This discovery showed that 3C 273 was receding at a rate of 47,000 km/s.[26]
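The step from a 15.8 percent redshift to a recession speed of about 47,000 km/s can be sketched as follows. The H-beta rest wavelength of 486.1 nm is used as an example of a hydrogen line; the function names are hypothetical, and the special-relativistic Doppler formula is shown only for comparison, since the quoted figure corresponds to the simple product c × z.

```python
C_KM_S = 299_792.458  # speed of light in km/s


def observed_wavelength_nm(rest_nm, z):
    """Observed wavelength of a line emitted at rest_nm from redshift z."""
    return rest_nm * (1.0 + z)


def naive_velocity_km_s(z):
    """Low-redshift approximation v = c * z."""
    return C_KM_S * z


def relativistic_velocity_km_s(z):
    """Recession speed from the special-relativistic Doppler formula."""
    ratio = (1.0 + z) ** 2
    return C_KM_S * (ratio - 1.0) / (ratio + 1.0)


if __name__ == "__main__":
    z = 0.158  # redshift of 3C 273 from the text
    print(f"H-beta (486.1 nm) observed at {observed_wavelength_nm(486.1, z):.1f} nm")
    print(f"c * z         = {naive_velocity_km_s(z):,.0f} km/s")
    print(f"Doppler speed = {relativistic_velocity_km_s(z):,.0f} km/s")
```

The product c × z gives about 47,400 km/s, matching the rounded value in the text, while the relativistic Doppler formula gives a somewhat lower figure of about 43,700 km/s.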

This discovery revolutionized quasar observation and allowed other astronomers to find redshifts from the emission lines from other radio sources. As predicted earlier by Bolton, 3C 48 was found to have a redshift corresponding to a recession velocity of 37% of the speed of light.

The term "quasar" was coined by Chinese-born U.S. astrophysicist Hong-Yee Chiu in May 1964, in Physics Today, to describe these puzzling objects:

So far, the clumsily long name 'quasi-stellar radio sources' is used to describe these objects. Because the nature of these objects is entirely unknown, it is hard to prepare a short, appropriate nomenclature for them so that their essential properties are obvious from their name. For convenience, the abbreviated form 'quasar' will be used throughout this paper.

Later it was found that not all quasars have strong radio emission; in fact only about 10% are "radio-loud". Hence the name 'QSO' (quasi-stellar object) is used (in addition to "quasar") to refer to these objects, including the 'radio-loud' and the 'radio-quiet' classes.

The discovery of the quasar had large implications for the field of astronomy in the 1960s, including drawing physics and astronomy closer together.[28]

One great topic of debate during the 1960s was whether quasars were nearby objects or distant objects as implied by their redshift. It was suggested, for example, that the redshift of quasars was not due to the expansion of space but rather to light escaping a deep gravitational well. However, a star of sufficient mass to form such a well would be unstable and in excess of the Hayashi limit.[29] Quasars also show forbidden spectral emission lines which were previously only seen in hot gaseous nebulae of low density, which would be too diffuse to both generate the observed power and fit within a deep gravitational well.[30]

There were also serious concerns regarding the idea of cosmologically distant quasars. One strong argument against them was that they implied energies that were far in excess of known energy conversion processes, including nuclear fusion. At this time, there were some suggestions that quasars were made of some hitherto unknown form of stable antimatter and that this might account for their brightness.[citation needed] Others speculated that quasars were a white hole end of a wormhole.[31][32] However, when accretion disc energy-production mechanisms were successfully modeled in the 1970s, the argument that quasars were too luminous became moot and today the cosmological distance of quasars is accepted by almost all researchers.

In 1979 the gravitational lens effect predicted by Einstein's General Theory of Relativity was confirmed observationally for the first time with images of the double quasar 0957+561.[33]

In the 1980s, unified models were developed in which quasars were classified as a particular kind of active galaxy, and a consensus emerged that in many cases it is simply the viewing angle that distinguishes them from other classes, such as blazars and radio galaxies.[34]

The huge luminosity of quasars results from the accretion discs of central supermassive black holes, which can convert on the order of 10% of the mass of an object into energy, compared with 0.7% for the p-p chain nuclear fusion process that dominates the energy production in Sun-like stars.

Bright halos around 18 distant quasars.[35]

This mechanism also explains why quasars were more common in the early universe, as this energy production ends when the supermassive black hole consumes all of the gas and dust near it. This means that it is possible that most galaxies, including the Milky Way, have gone through an active stage, appearing as a quasar or some other class of active galaxy that depended on the black hole mass and the accretion rate, and are now quiescent because they lack a supply of matter to feed into their central black holes to generate radiation.

Role in celestial reference systems

The energetic radiation of the quasar makes dark galaxies glow, helping astronomers to understand the obscure early stages of galaxy formation.[36]

Because quasars are extremely distant, bright, and small in apparent size, they are useful reference points in establishing a measurement grid on the sky.[37] The International Celestial Reference System (ICRS) is based on hundreds of extra-galactic radio sources, mostly quasars, distributed around the entire sky. Because they are so distant, they appear stationary to current technology, yet their positions can be measured with the utmost accuracy by Very Long Baseline Interferometry (VLBI). The positions of most are known to 0.001 arcsecond or better, which is orders of magnitude more precise than the best optical measurements.

Multiple quasars

A multiple-image quasar is a quasar whose light undergoes gravitational lensing, resulting in double, triple or quadruple images of the same quasar. The first such gravitational lens to be discovered was the double-imaged quasar Q0957+561 (or Twin Quasar) in 1979.[38]

A grouping of two or more quasars can result from a chance alignment, physical proximity, actual close physical interaction, or effects of gravity bending the light of a single quasar into two or more images.

As quasars are rare objects, the probability of three or more separate quasars being found near the same location is very low. The first true triple quasar was found in 2007 by observations at the W. M. Keck Observatory on Mauna Kea, Hawaii.[39] LBQS 1429-008 (or QQQ J1432−0106) was first observed in 1989 and was found to be a double quasar, itself a rare occurrence. When astronomers discovered the third member, they confirmed that the sources were separate and not the result of gravitational lensing. This triple quasar has a redshift of z = 2.076, which is equivalent to 10.5 billion light years.[40] The components are separated by an estimated 30–50 kpc, which is typical of interacting galaxies.[41] An example of a triple quasar that is formed by lensing is PG1115+08.[42]

Quasars in interacting galaxies.[43]

In 2013, the second true quasar triplet, QQQ J1519+0627, was found at redshift z = 1.51 (approximately 9 billion light years) by an international team of astronomers led by Farina of the University of Insubria. The whole system is well accommodated within 25′′ (i.e., 200 kpc in projected distance). The team accessed data from observations collected at the La Silla Observatory with the New Technology Telescope (NTT) of the European Southern Observatory (ESO) and at the Calar Alto Observatory with the 3.5 m telescope of the Centro Astronómico Hispano Alemán (CAHA).[44][45]
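The conversion from an angular extent of 25′′ to a projected size of about 200 kpc at z = 1.51 depends on the angular diameter distance. The sketch below assumes a flat universe with H0 = 70 km/s/Mpc and matter density 0.3; these parameters and the function names are illustrative assumptions, not necessarily those used by the discovery team.

```python
import math

# Assumed cosmological parameters (illustrative, not from the article).
H0_KM_S_MPC = 70.0
OMEGA_M = 0.3
OMEGA_L = 1.0 - OMEGA_M
C_KM_S = 299_792.458


def comoving_distance_mpc(z, steps=1000):
    """Line-of-sight comoving distance in Mpc (Simpson's rule, even steps)."""
    def inverse_e(zz):
        return 1.0 / math.sqrt(OMEGA_M * (1.0 + zz) ** 3 + OMEGA_L)

    h = z / steps
    total = inverse_e(0.0) + inverse_e(z)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * inverse_e(i * h)
    return C_KM_S / H0_KM_S_MPC * total * h / 3.0


def projected_size_kpc(angle_arcsec, z):
    """Physical size subtended by a small angle at redshift z, in kpc.

    In a flat universe the angular diameter distance is the comoving
    distance divided by (1 + z).
    """
    angular_diameter_distance_mpc = comoving_distance_mpc(z) / (1.0 + z)
    angle_rad = math.radians(angle_arcsec / 3600.0)
    return angular_diameter_distance_mpc * angle_rad * 1000.0


if __name__ == "__main__":
    print(f"25 arcsec at z = 1.51 spans about {projected_size_kpc(25.0, 1.51):.0f} kpc")
```

Under these assumptions 25′′ corresponds to a little over 200 kpc, consistent with the rounded projected distance given in the text.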

The first quadruple quasar was discovered in 2015.[46]

When two quasars are so nearly in the same direction as seen from Earth that they appear to be a single quasar but may be separated by the use of telescopes, they are referred to as a "double quasar", such as the Twin Quasar.[47] These are two different quasars, and not the same quasar that is gravitationally lensed. This configuration is similar to the optical double star.

Two quasars, a "quasar pair", may be closely related in time and space, and be gravitationally bound to one another. These may take the form of two quasars in the same galaxy cluster. This configuration is similar to two prominent stars in a star cluster. A "binary quasar" may be closely linked gravitationally and form a pair of interacting galaxies. This configuration is similar to that of a binary star system.
