The efficient coding hypothesis was proposed by Horace Barlow in 1961 as a theoretical model of sensory coding in the brain. Within the brain, neurons communicate with one another by sending electrical impulses referred to as action potentials or spikes. One goal of sensory neuroscience is to decipher the meaning of these spikes in order to understand how the brain represents and processes information about the outside world.

Barlow hypothesized that the spikes in the sensory system formed a neural code for efficiently representing sensory information. Efficient here means that the code minimizes the number of spikes needed to transmit a given signal. This is somewhat analogous to transmitting information across the internet, where different file formats can be used to transmit a given image. Different file formats require different numbers of bits for representing the same image at a given distortion level, and some are better suited for representing certain classes of images than others. According to this model, the brain is thought to use a code suited to representing visual and auditory information that is representative of an organism's natural environment.

Efficient coding and information theory

The development of Barlow's hypothesis was influenced by information theory, introduced by Claude Shannon only a decade before. Information theory provides a mathematical framework for analyzing communication systems. It formally defines concepts such as information, channel capacity, and redundancy. Barlow's model treats the sensory pathway as a communication channel where neuronal spiking is an efficient code for representing sensory signals. The spiking code aims to make the fullest use of the available channel capacity by minimizing the redundancy between representational units. Barlow was not the first to introduce the idea; it already appears in a 1954 article written by F. Attneave.
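As a rough illustration of what "redundancy between representational units" can mean, the sketch below computes the quantity H(X) + H(Y) - H(X, Y) for two binary units, which is zero when the units are statistically independent. The two joint probability tables and the function names are made up for illustration and are not taken from Barlow's or Attneave's work.

```python
import math

def entropy_bits(probs):
    """Shannon entropy H = -sum(p * log2 p), in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def redundancy_bits(joint):
    """H(X) + H(Y) - H(X, Y) for a 2-D joint probability table."""
    px = [sum(row) for row in joint]          # marginal of unit X
    py = [sum(col) for col in zip(*joint)]    # marginal of unit Y
    pxy = [p for row in joint for p in row]   # flattened joint table
    return entropy_bits(px) + entropy_bits(py) - entropy_bits(pxy)

# Hypothetical joint distributions over (X fires?, Y fires?).
independent = [[0.25, 0.25], [0.25, 0.25]]
correlated = [[0.45, 0.05], [0.05, 0.45]]

print(redundancy_bits(independent))  # no shared information
print(redundancy_bits(correlated))   # roughly half a bit is duplicated
```

In the correlated case each unit still carries one bit on its own, but part of that bit repeats what the other unit already signals, which is the kind of waste an efficient code would try to remove.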

A key prediction of the efficient coding hypothesis is that sensory processing in the brain should be adapted to natural stimuli. Neurons in the visual (or auditory) system should be optimized for coding images (or sounds) representative of those found in nature. Researchers have shown that optimizing filters for coding natural images yields filters which resemble the receptive fields of simple cells in V1. In the auditory domain, optimizing a network for coding natural sounds leads to filters which resemble the impulse response of cochlear filters found in the inner ear.

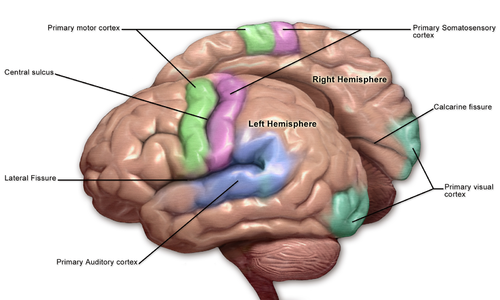

Constraints on the visual system

Due to constraints on the visual system such as the number of neurons and the metabolic energy required for "neural activities", the visual processing system must have an efficient strategy for transmitting as much information as possible. Information must be compressed as it travels from the retina back to the visual cortex. While the retinal receptors can receive information at 10^9 bit/s, the optic nerve, which is composed of 1 million ganglion cells each transmitting at 1 bit/s, only has a transmission capacity of 10^6 bit/s. Further reduction limits the overall transmission to 40 bit/s, which results in inattentional blindness. Thus, the hypothesis states that neurons should encode information as efficiently as possible in order to make the most of limited neural resources. For example, it has been shown that visual data can be compressed up to 20-fold without noticeable information loss.
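The reduction factors implied by these figures can be worked out directly. The short sketch below only restates the arithmetic of the numbers quoted above; the variable names are illustrative.

```python
# Figures quoted in the text (bit/s).
retina_bps = 1e9                 # retinal receptors
ganglion_cells = 1_000_000       # cells in the optic nerve
bits_per_cell_per_s = 1          # rate assumed per ganglion cell
attention_bps = 40               # rate after further reduction

optic_nerve_bps = ganglion_cells * bits_per_cell_per_s

retina_to_nerve = retina_bps / optic_nerve_bps        # first bottleneck
nerve_to_attention = optic_nerve_bps / attention_bps  # second bottleneck
overall = retina_bps / attention_bps

print(f"retina -> optic nerve: {retina_to_nerve:,.0f}x")
print(f"optic nerve -> 40 bit/s: {nerve_to_attention:,.0f}x")
print(f"overall: {overall:,.0f}x")
```

The second stage discards far more than the 20-fold compression mentioned above could absorb, which is consistent with the text's point that information is lost (selected) rather than merely recoded.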

Evidence suggests that our visual processing system engages in bottom-up selection. For example, inattentional blindness suggests that there must be data deletion early on in the visual pathway. This bottom-up approach allows us to respond to unexpected and salient events more quickly and is often directed by attentional selection. This also gives our visual system the property of being goal-directed. Many have suggested that the visual system is able to work efficiently by breaking images down into distinct components. Additionally, it has been argued that the visual system takes advantage of redundancies in inputs in order to transmit as much information as possible while using the fewest resources.

Evolution-based neural system

Simoncelli and Olshausen outline the three major concepts that are assumed to be involved in the development of systems neuroscience:

- an organism has specific tasks to perform

- neurons have capabilities and limitations

- an organism is in a particular environment.

One assumption used in testing the Efficient Coding Hypothesis is that neurons must be evolutionarily and developmentally adapted to the natural signals in their environment. The idea is that perceptual systems will be the quickest when responding to "environmental stimuli". The visual system should cut out any redundancies in the sensory input.

Natural images and statistics

Central to Barlow's hypothesis is information theory, which when applied to neuroscience, argues that an efficiently coding neural system "should match the statistics of the signals they represent". Therefore, it is important to be able to determine the statistics of the natural images that are producing these signals. Researchers have looked at various components of natural images including luminance contrast, color, and how images are registered over time. They can analyze the properties of natural scenes via digital cameras, spectrophotometers, and range finders.

Researchers look at how luminance contrasts are spatially distributed in an image: the luminance contrasts of two pixels are highly correlated when the pixels are close together and less correlated the farther apart they are. Independent component analysis (ICA) is an algorithm that attempts to "linearly transform given (sensory) inputs into independent outputs (synaptic currents)". ICA eliminates the redundancy by decorrelating the pixels in a natural image. Thus the individual components that make up the natural image are rendered statistically independent. However, researchers have thought that ICA is limited because it assumes that the neural response is linear, and therefore insufficiently describes the complexity of natural images. They argue that, despite what is assumed under ICA, the components of the natural image have a "higher-order structure" that involves correlations among components. Instead, researchers have now developed temporal independent component analysis (TICA), which better represents the complex correlations that occur between components in a natural image. Additionally, a "hierarchical covariance model" developed by Karklin and Lewicki expands on sparse coding methods and can represent additional components of natural images such as "object location, scale, and texture".
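The distance dependence of pixel correlations, and the effect of removing it, can be illustrated with a toy one-dimensional "scanline". The sketch below is not ICA: it uses a synthetic first-order autoregressive signal (an assumption standing in for real image data, with a made-up coupling constant `A`) and removes only second-order correlations by subtracting a linear prediction from the neighbouring pixel.

```python
import random

def correlation(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def lag_correlation(signal, lag):
    """Correlation between values that are `lag` positions apart."""
    return correlation(signal[:-lag], signal[lag:])

rng = random.Random(0)
A = 0.9  # hypothetical neighbour-to-neighbour coupling

# Synthetic scanline: each pixel is mostly predictable from its neighbour.
scanline = [0.0]
for _ in range(20000):
    scanline.append(A * scanline[-1] + rng.gauss(0.0, 1.0))

near = lag_correlation(scanline, 1)    # adjacent pixels
far = lag_correlation(scanline, 20)    # distant pixels

# Redundancy reduction: transmit only what the neighbour does not predict.
residual = [b - A * a for a, b in zip(scanline, scanline[1:])]
residual_near = lag_correlation(residual, 1)

print(f"raw, lag 1: {near:.2f}   raw, lag 20: {far:.2f}")
print(f"residual, lag 1: {residual_near:.2f}")
```

The raw signal shows high correlation between neighbours that decays with distance, while the residual is close to uncorrelated. ICA goes further by seeking statistical independence rather than just zero correlation.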

The chromatic spectrum as it comes from natural light, but also as it is reflected off "natural materials", can be easily characterized with principal components analysis (PCA). Because the cones absorb a specific number of photons from the natural image, researchers can use cone responses as a way of describing the natural image. Researchers have found that the three classes of cone receptors in the retina can accurately code natural images and that color is decorrelated already in the LGN. Time has also been modeled. Natural images transform over time, and we can use these transformations to see how the visual input changes over time.

A pedagogical review of efficient coding in visual processing (efficient spatial coding, color coding, temporal/motion coding, stereo coding, and their combination) is in chapter 3 of the book "Understanding vision: theory, models, and data". It explains how efficient coding is realized when input noise makes redundancy reduction no longer adequate, and how efficient coding methods in different situations are related to each other or different from each other.

Hypotheses for testing the efficient coding hypothesis

If neurons are encoding according to the efficient coding hypothesis then individual neurons must be expressing their full output capacity. Before testing this hypothesis it is necessary to define what is considered to be a neural response. Simoncelli and Olshausen suggest that an efficient neuron needs to be given a maximal response value so that we can measure whether the neuron is efficiently meeting the maximum level. Secondly, a population of neurons must not be redundant in transmitting signals and must be statistically independent. If the efficient coding hypothesis is accurate, researchers should observe sparsity in the neuron responses: that is, only a few neurons at a time should fire for an input.
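The sparsity prediction can be made measurable with a sparseness index. The sketch below uses an index of the form S = (1 - (mean r)^2 / mean(r^2)) / (1 - 1/n), a form used in the sparse coding literature; its use here, and the example firing rates, are assumptions for illustration rather than something specified by the text above.

```python
def sparseness(rates):
    """Sparseness index in [0, 1] for non-negative firing rates.

    0 means every unit responds equally (dense code);
    1 means a single unit carries all of the response (maximally sparse).
    """
    n = len(rates)
    if n < 2:
        raise ValueError("need at least two responses")
    mean = sum(rates) / n
    mean_sq = sum(r * r for r in rates) / n
    if mean_sq == 0:
        raise ValueError("all responses are zero")
    activity = (mean * mean) / mean_sq
    return (1.0 - activity) / (1.0 - 1.0 / n)

# Hypothetical population responses (spikes/s) to one stimulus.
dense_population = [5.0] * 10
sparse_population = [50.0] + [0.0] * 9
mixed_population = [20.0, 10.0, 5.0, 2.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0]

for name, rates in [("dense", dense_population),
                    ("mixed", mixed_population),
                    ("sparse", sparse_population)]:
    print(name, round(sparseness(rates), 3))
```

All three example populations have different response profiles, and the index orders them from dense to sparse as expected.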

Methodological approaches for testing the hypotheses

One approach is to design a model for early sensory processing based on the statistics of a natural image and then compare this predicted model to how real neurons actually respond to the natural image. The second approach is to measure a neural system responding to a natural environment, and analyze the results to see if there are any statistical properties to this response. A third approach is to derive the necessary and sufficient conditions under which an observed neural computation is efficient, and test whether empirical stimulus statistics satisfy them.

Examples of these approaches

1. Predicted model approach

In one study by Doi et al. in 2012, the researchers created a predicted response model of the retinal ganglion cells based on the statistics of the natural images used, while considering noise and biological constraints. They then compared the actual information transmission as observed in real retinal ganglion cells to this optimal model to determine the efficiency. They found that the information transmission in the retinal ganglion cells had an overall efficiency of about 80% and concluded that "the functional connectivity between cones and retinal ganglion cells exhibits unique spatial structure...consistent with coding efficiency".

A study by van Hateren and Ruderman in 1998 used ICA to analyze video-sequences and compared how a computer analyzed the independent components of the image to data for visual processing obtained from a cat in DeAngelis et al. 1993. The researchers described the independent components obtained from a video sequence as the "basic building blocks of a signal", with the independent component filter (ICF) measuring "how strongly each building block is present". They hypothesized that if simple cells are organized to pick out the "underlying structure" of images over time then cells should act like the independent component filters. They found that the ICFs determined by the computer were similar to the "receptive fields" that were observed in actual neurons.

2. Analyzing actual neural system in response to natural images

In a report in Science from 2000, William E. Vinje and Jack Gallant outlined a series of experiments used to test elements of the efficient coding hypothesis, including a theory that the non-classical receptive field (nCRF) decorrelates projections from the primary visual cortex. To test this, they took recordings from the V1 neurons in awake macaques during "free viewing of natural images and conditions" that simulated natural vision conditions. The researchers hypothesized that the V1 uses sparse code, which is minimally redundant and "metabolically more efficient".

They also hypothesized that interactions between the classical receptive field (CRF) and the nCRF produced this pattern of sparse coding during the viewing of these natural scenes. The CRF was defined as the circular area surrounding the locations where stimuli evoked action potentials. To test this, they created eye-scan paths and extracted patches that ranged in size from 1 to 4 times the diameter of the CRF. They found that the sparseness of the coding increased with the size of the patch. Because larger patches encompassed more of the nCRF, this indicates that interactions between the two regions created the sparse code, and it suggests that V1 uses a sparse code when natural images span the entire visual field. They also tested whether stimulation of the nCRF increased the independence of the responses of V1 neurons by randomly selecting pairs of neurons. They found that the neurons were indeed more strongly decoupled upon stimulation of the nCRF.

In conclusion, the experiments of Vinje and Gallant showed that V1 uses a sparse code by employing both the CRF and nCRF when viewing natural images, with the nCRF showing a definitive decorrelating effect on neurons which may increase their efficiency by increasing the amount of independent information they carry. They propose that the cells may represent the individual components of a given natural scene, which may contribute to pattern recognition.

Another study by Baddeley et al. showed that firing-rate distributions of cat visual area V1 neurons and monkey inferotemporal (IT) neurons were exponential under naturalistic conditions, which implies optimal information transmission for a fixed average rate of firing. A subsequent study of monkey IT neurons found that only a minority were well described by an exponential firing distribution. De Polavieja later argued that this discrepancy was due to the fact that the exponential solution is correct only for the noise-free case, and showed that by taking noise into consideration, one could account for the observed results.
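The link between an exponential firing-rate distribution and optimal transmission rests on a standard result: among continuous distributions on non-negative values with a fixed mean, the exponential has the largest entropy. The sketch below compares closed-form differential entropies (in nats) for three distributions with the same mean. The choice of comparison distributions is arbitrary, and the comparison illustrates the result for the noise-free case rather than proving it.

```python
import math

def entropy_exponential(mean):
    """Differential entropy of an exponential distribution: 1 + ln(mean)."""
    return 1.0 + math.log(mean)

def entropy_uniform(mean):
    """Uniform on [0, 2 * mean], which has the requested mean: ln(2 * mean)."""
    return math.log(2.0 * mean)

def entropy_half_normal(mean):
    """Half-normal with scale sigma, where mean = sigma * sqrt(2 / pi)."""
    sigma_sq = mean * mean * math.pi / 2.0
    return 0.5 * math.log(math.pi * math.e * sigma_sq / 2.0)

mean_rate = 10.0  # hypothetical average firing rate (spikes/s)
print("exponential:", round(entropy_exponential(mean_rate), 3))
print("half-normal:", round(entropy_half_normal(mean_rate), 3))
print("uniform:    ", round(entropy_uniform(mean_rate), 3))
```

The ordering does not depend on the chosen mean, since changing the mean shifts all three entropies by the same amount.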

A study by Dan, Atick, and Reid in 1996 used natural images to test the hypothesis that early on in the visual pathway, incoming visual signals will be decorrelated to optimize efficiency. This decorrelation can be observed as the "'whitening' of the temporal and spatial power spectra of the neuronal signals". The researchers played natural image movies in front of cats and used a multielectrode array to record neural signals. To do this, the cats' eyes were refracted and then fitted with contact lenses. They found that in the LGN, the natural images were decorrelated and concluded, "the early visual pathway has specifically adapted for efficient coding of natural visual information during evolution and/or development".

Extensions

One of the implications of the efficient coding hypothesis is that the neural coding depends upon the statistics of the sensory signals. These statistics are a function of not only the environment (e.g., the statistics of the natural environment), but also the organism's behavior (e.g., how it moves within that environment). However, perception and behavior are closely intertwined in the perception-action cycle. For example, the process of vision involves various kinds of eye movements. An extension to the efficient coding hypothesis called active efficient coding (AEC) extends efficient coding to active perception. It hypothesizes that biological agents optimize not only their neural coding, but also their behavior to contribute to an efficient sensory representation of the environment. Along these lines, models for the development of active binocular vision, active visual tracking, and accommodation control have been proposed.

The brain has limited resources to process information; in vision this is manifested as the visual attentional bottleneck. The bottleneck forces the brain to select only a small fraction of visual input information for further processing, as merely coding information efficiently is no longer sufficient. A subsequent theory, the V1 Saliency Hypothesis, has been developed on exogenous attentional selection of visual input information for further processing guided by a bottom-up saliency map in the primary visual cortex.

Criticisms

Researchers should consider how the visual information is used: The hypothesis does not explain how the information from a visual scene is used—which is the main purpose of the visual system. It seems necessary to understand why we are processing image statistics from the environment because this may be relevant to how this information is ultimately processed. However, some researchers may see the irrelevance of the purpose of vision in Barlow's theory as an advantage for designing experiments.

Some experiments show correlations between neurons: When considering multiple neurons at a time, recordings "show correlation, synchronization, or other forms of statistical dependency between neurons". However, most of these experiments did not use natural stimuli to provoke these responses, so they may not bear directly on the efficient coding hypothesis, which is concerned with natural image statistics. In his review article, Simoncelli notes that redundancy in the efficient coding hypothesis can perhaps be interpreted a bit differently: he argues that statistical dependency could be reduced over "successive stages of processing", and not just in one area of the sensory pathway. Yet, recordings by Hung et al. at the end of the visual pathway also show strong layer-dependent correlations to naturalistic objects and in ongoing activity. They showed that redundancy of neighboring neurons (i.e. a 'manifold' representation) benefits learning of complex shape features and that network anisotropy/inhomogeneity is a stronger predictor than noise redundancy of encoding/decoding efficiency.

Observed redundancy: A comparison of the number of retinal ganglion cells to the number of neurons in the primary visual cortex shows an increase in the number of sensory neurons in the cortex as compared to the retina. Simoncelli notes that one major argument of critics is that higher up in the sensory pathway there are greater numbers of neurons handling the processing of sensory information, which would seem to produce redundancy. However, this observation may not be fully relevant because neurons at different stages may use different neural codes. In his review, Simoncelli notes "cortical neurons tend to have lower firing rates and may use a different form of code as compared to retinal neurons". Cortical neurons may also have the ability to encode information over longer periods of time than their retinal counterparts. Experiments done in the auditory system have confirmed that redundancy is decreased.

Difficult to test: Estimation of information-theoretic quantities requires enormous amounts of data, and is thus impractical for experimental verification. Additionally, informational estimators are known to be biased. However, some experimental success has occurred.
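The bias of information estimators can be seen in a small simulation. The sketch below applies the simplest estimator, the "plug-in" estimate that substitutes observed frequencies for probabilities, to samples from a uniform source over 8 symbols whose true entropy is 3 bits. The source, sample sizes, and trial counts are arbitrary choices for illustration.

```python
import math
import random
from collections import Counter

def plugin_entropy_bits(samples):
    """Plug-in entropy estimate: use observed frequencies as probabilities."""
    n = len(samples)
    counts = Counter(samples)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

SYMBOLS = 8                      # uniform source, true entropy = 3 bits
TRUE_ENTROPY = math.log2(SYMBOLS)
rng = random.Random(1)

def mean_estimate(n_samples, n_trials):
    """Average plug-in estimate over repeated simulated experiments."""
    total = 0.0
    for _ in range(n_trials):
        samples = [rng.randrange(SYMBOLS) for _ in range(n_samples)]
        total += plugin_entropy_bits(samples)
    return total / n_trials

small_data = mean_estimate(n_samples=10, n_trials=2000)
large_data = mean_estimate(n_samples=1000, n_trials=200)

print(f"true entropy:          {TRUE_ENTROPY:.3f} bits")
print(f"estimate, 10 samples:  {small_data:.3f} bits")
print(f"estimate, 1000 samples:{large_data:.3f} bits")
```

With few samples the estimate falls well below the true value on average, and the gap shrinks only as the amount of data grows, which is why large recordings are needed for information-theoretic tests.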

Need well-defined criteria for what to measure: This criticism illustrates one of the most fundamental issues of the hypothesis. Here, assumptions are made about the definitions of both the inputs and the outputs of the system. The inputs into the visual system are not completely defined, but they are assumed to be encompassed in a collection of natural images. The output must be defined to test the hypothesis, but variability can occur here too, depending on which type of neurons are measured, where they are located, and which type of response, such as firing rate or spike times, is measured.

How to take noise into account: Some argue that experiments that ignore noise, or other physical constraints on the system are too simplistic. However, some researchers have been able to incorporate these elements into their analyses, thus creating more sophisticated systems.

However, with appropriate formulations, efficient coding can also address some of the issues raised above. For example, a quantifiable degree of redundancy in neural representations of sensory inputs (manifested as correlations in neural responses) is predicted to occur when efficient coding is applied to noisy sensory inputs. Falsifiable theoretical predictions can also be made, and some of them have subsequently been tested.

Biomedical applications

Possible applications of the efficient coding hypothesis include cochlear implant design. These neuroprosthetic devices stimulate the auditory nerve with electrical impulses, which restores some hearing to people who have hearing impairments or are even deaf. The implants are considered to be successful and efficient and are the only ones currently in use. Using frequency-place mappings in the efficient coding algorithm may benefit the use of cochlear implants in the future. Changes in design based on this hypothesis could increase speech intelligibility in hearing-impaired patients. Research using vocoded speech processed by different filters showed that humans had greater accuracy in deciphering the speech when it was processed using an efficient-code filter as opposed to a cochleotropic filter or a linear filter. This shows that efficient coding of noise data offered perceptual benefits and provided the listeners with more information. More research is needed to translate current findings into medically relevant changes to cochlear implant design.