Quantum pseudo-telepathy describes the use of quantum entanglement to eliminate the need for classical communication. A nonlocal game is said to display quantum pseudo-telepathy if players who can use entanglement can win it with certainty while players without it cannot. The prefix pseudo refers to the fact that quantum pseudo-telepathy does not involve the exchange of information between any parties. Instead, it removes the need for parties to exchange information in some circumstances.

Quantum pseudo-telepathy is generally used as a thought experiment to demonstrate the non-local characteristics of quantum mechanics. However, quantum pseudo-telepathy is a real-world phenomenon which can be verified experimentally. It is thus an especially striking example of an experimental confirmation of Bell inequality violations.

The magic square game

A simple magic square game demonstrating nonclassical correlations was introduced by P. K. Aravind, based on a series of papers by N. David Mermin, Asher Peres, and Adán Cabello that developed simplified demonstrations of Bell's theorem. The game has been reformulated to demonstrate quantum pseudo-telepathy.

Game rules

This is a cooperative game featuring two players, Alice and Bob, and a referee. The referee asks Alice to fill in one row, and Bob one column, of a 3×3 table with plus and minus signs. Their answers must respect the following constraints: Alice's row must contain an even number of minus signs, Bob's column must contain an odd number of minus signs, and they both must assign the same sign to the cell where the row and column intersect. If they manage to do so, they win; otherwise they lose.

Alice and Bob are allowed to elaborate a strategy together, but crucially are not allowed to communicate after they know which row and column they will need to fill in (as otherwise the game would be trivial).

Classical strategy

It is easy to see that if Alice and Bob can come up with a classical strategy where they always win, they can represent it as a 3×3 table encoding their answers. But this is not possible, as the number of minus signs in this hypothetical table would need to be even and odd at the same time: every row must contain an even number of minus signs, making the total number of minus signs even, and every column must contain an odd number of minus signs, making the total number of minus signs odd.

With a little further analysis one can see that the best possible classical strategy can be represented by a table in which each cell contains both Alice's and Bob's answers, which may differ. It is possible to make their answers equal in 8 out of 9 cells while respecting the parity of Alice's rows and Bob's columns. If the referee asks for a row and column whose intersection is one of the cells where their answers match, they win; otherwise they lose. Under the usual assumption that the referee chooses the row and column uniformly at random, the best classical winning probability is 8/9.
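The 8/9 bound can be checked by exhaustive search. The sketch below is illustrative only: the names `ROWS`, `COLS`, and `best_classical_wins` are hypothetical, and it considers deterministic strategies, on the assumption that a randomized classical strategy is a mixture of deterministic ones and so cannot do better than the best of them.

```python
from itertools import product

# Alice's valid answers: sign triples with an even number of minus signs.
ROWS = [r for r in product((1, -1), repeat=3) if r[0] * r[1] * r[2] == 1]
# Bob's valid answers: sign triples with an odd number of minus signs.
COLS = [c for c in product((1, -1), repeat=3) if c[0] * c[1] * c[2] == -1]


def best_classical_wins():
    """Return the largest number of the 9 (row, column) questions that any
    deterministic strategy answers correctly."""
    best = 0
    # A deterministic strategy fixes one valid answer per possible question.
    for alice in product(ROWS, repeat=3):    # alice[i]: answer for row i
        for bob in product(COLS, repeat=3):  # bob[j]: answer for column j
            # They win on question (i, j) if they agree on the shared cell.
            wins = sum(alice[i][j] == bob[j][i]
                       for i in range(3) for j in range(3))
            best = max(best, wins)
    return best


print(best_classical_wins(), "out of 9")
```

The search covers 64 × 64 strategy pairs and finds that no pair wins on all 9 questions, in agreement with the parity argument above.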

Pseudo-telepathic strategies

Use of quantum pseudo-telepathy would enable Alice and Bob to win the game 100% of the time without any communication once the game has begun.

This requires Alice and Bob to possess two pairs of particles in entangled states. These particles must have been prepared before the start of the game. One particle of each pair is held by Alice and the other by Bob, so they each have two particles. When Alice and Bob learn which row and column they must fill, each uses that information to select which measurements to make on their particles. The result of the measurements will appear to each of them to be random (and the observed marginal probability distribution of either player's results will be independent of the measurement performed by the other party), so no real "communication" takes place.

However, the process of measuring the particles imposes sufficient structure on the joint probability distribution of the results of the measurement such that if Alice and Bob choose their actions based on the results of their measurement, then there will exist a set of strategies and measurements allowing the game to be won with probability 1.

Note that Alice and Bob could be light years apart from one another, and the entangled particles will still enable them to coordinate their actions sufficiently well to win the game with certainty.

Each round of this game uses up one entangled state. Playing N rounds requires that N entangled states (2N independent Bell pairs, see below) be shared in advance. This is because each round requires two bits of information to be measured (the third entry is determined by the first two, so measuring it is not necessary), and the measurement destroys the entanglement. Measurement results from earlier rounds cannot be reused.

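The trick is for Alice and Bob to share an entangled quantum state and to use specific measurements on their components of the entangled state to derive the table entries. A suitable correlated state consists of a pair of entangled Bell states:

$$|\varphi\rangle = \frac{1}{\sqrt{2}}\bigl(|+\rangle_a \otimes |+\rangle_b + |-\rangle_a \otimes |-\rangle_b\bigr) \otimes \frac{1}{\sqrt{2}}\bigl(|+\rangle_c \otimes |+\rangle_d + |-\rangle_c \otimes |-\rangle_d\bigr)$$

Here $|+\rangle$ and $|-\rangle$ are eigenstates of the Pauli operator $S_x$ with eigenvalues +1 and −1, respectively, whilst the subscripts a, b, c, and d identify the components of each Bell state, with a and c going to Alice, and b and d going to Bob. The symbol $\otimes$ represents a tensor product.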

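Observables for these components can be written as products of the Pauli matrices:

$$X = \begin{pmatrix} 0 & 1 \\ 1 & 0 \end{pmatrix}, \quad Y = \begin{pmatrix} 0 & -i \\ i & 0 \end{pmatrix}, \quad Z = \begin{pmatrix} 1 & 0 \\ 0 & -1 \end{pmatrix}$$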

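Products of these Pauli spin operators can be used to fill the 3×3 table such that each row and each column contains a mutually commuting set of observables with eigenvalues +1 and −1, with the product of the observables in each row being the identity operator and the product of the observables in each column being minus the identity operator. This is a so-called Mermin–Peres magic square. One such arrangement is shown in the table below, where the first factor of each entry acts on a player's first particle and the second factor on their second particle.

|  | Column 1 | Column 2 | Column 3 |
|---|---|---|---|
| Row 1 | $+I \otimes Z$ | $+Z \otimes I$ | $+Z \otimes Z$ |
| Row 2 | $+X \otimes I$ | $+I \otimes X$ | $+X \otimes X$ |
| Row 3 | $-X \otimes Z$ | $-Z \otimes X$ | $+Y \otimes Y$ |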

Effectively, while it is not possible to construct a 3×3 table with entries +1 and −1 such that the product of the elements in each row equals +1 and the product of elements in each column equals −1, it is possible to do so with the richer algebraic structure based on spin matrices.
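The algebraic properties claimed for the square can be verified numerically. The following is a minimal sketch using NumPy; the particular arrangement in `SQUARE` is one common form of the Mermin–Peres square and is an assumption here, as are the helper names `commute` and `check_square`.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
I4 = np.eye(4, dtype=complex)

# Assumed arrangement of the Mermin-Peres square (two-qubit observables).
SQUARE = [
    [np.kron(I2, Z), np.kron(Z, I2), np.kron(Z, Z)],
    [np.kron(X, I2), np.kron(I2, X), np.kron(X, X)],
    [-np.kron(X, Z), -np.kron(Z, X), np.kron(Y, Y)],
]


def commute(a, b):
    """True if the matrices a and b commute."""
    return np.allclose(a @ b, b @ a)


def check_square(sq):
    """True if every row multiplies to +I, every column multiplies to -I,
    and the observables within each row and column commute pairwise."""
    ok = True
    for k in range(3):
        row = sq[k]
        col = [sq[r][k] for r in range(3)]
        ok &= np.allclose(row[0] @ row[1] @ row[2], I4)
        ok &= np.allclose(col[0] @ col[1] @ col[2], -I4)
        for line in (row, col):
            ok &= all(commute(line[i], line[j])
                      for i in range(3) for j in range(i + 1, 3))
    return bool(ok)


print(check_square(SQUARE))
```

Replacing the operator-valued entries with plain numbers ±1 can never pass the same check, which is the parity contradiction from the classical analysis.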

The play proceeds by having each player make one measurement on their part of the entangled state per round of play. Each of Alice's measurements will give her the values for a row, and each of Bob's measurements will give him the values for a column. This is possible because all observables in a given row or column commute, so there exists a basis in which they can be measured simultaneously. For her first row Alice needs to measure both her particles in the $Z$ basis, for the second row she needs to measure them in the $X$ basis, and for the third row she needs to measure them in an entangled basis. For his first column Bob needs to measure his first particle in the $X$ basis and the second in the $Z$ basis, for the second column he needs to measure his first particle in the $Z$ basis and the second in the $X$ basis, and for his third column he needs to measure both his particles in a different entangled basis, the Bell basis. As long as the table above is used, the measurement results are guaranteed to always multiply out to +1 for Alice along her row, and −1 for Bob down his column. Of course, each new round requires a new entangled state, as different rows and columns are not compatible with each other.
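The remaining winning condition, that Alice and Bob assign the same sign to the shared cell, can also be checked numerically. The sketch below assumes one common arrangement of the square (`LABELS`) and writes the two Bell pairs in the qubit order (a, c, b, d), so that Alice's particles come first. All names are hypothetical. Because each observable has outcomes ±1, an expectation value of +1 for the product of Alice's and Bob's observables means their outcomes always coincide.

```python
import numpy as np

PAULI = {
    "I": np.eye(2, dtype=complex),
    "X": np.array([[0, 1], [1, 0]], dtype=complex),
    "Y": np.array([[0, -1j], [1j, 0]], dtype=complex),
    "Z": np.array([[1, 0], [0, -1]], dtype=complex),
}

# Assumed square: (sign, Pauli on first particle, Pauli on second particle).
LABELS = [
    [(+1, "I", "Z"), (+1, "Z", "I"), (+1, "Z", "Z")],
    [(+1, "X", "I"), (+1, "I", "X"), (+1, "X", "X")],
    [(-1, "X", "Z"), (-1, "Z", "X"), (+1, "Y", "Y")],
]

# (|++> + |-->)/sqrt(2) equals (|00> + |11>)/sqrt(2), so two Bell pairs on
# (a, b) and (c, d), reordered as (a, c, b, d), give sum_k |k>|k> / 2,
# where k runs over the four two-qubit basis states.
PSI = np.eye(4, dtype=complex).reshape(16) / 2.0


def cell_operator(sign, p, q):
    """Two-qubit observable for one cell of the square."""
    return sign * np.kron(PAULI[p], PAULI[q])


def agreement(row, col):
    """Expectation of (Alice's entry) times (Bob's entry) for a shared cell."""
    m = cell_operator(*LABELS[row][col])
    joint = np.kron(m, m)  # Alice's copy acts on (a, c), Bob's on (b, d)
    return float(np.real(np.vdot(PSI, joint @ PSI)))


for r in range(3):
    print([round(agreement(r, c), 6) for c in range(3)])
```

Together with the row and column product identities, perfect agreement on every cell implies a winning probability of 1 for every question pair.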

Current research

It has been demonstrated that the above-described game is the simplest two-player game of its type in which quantum pseudo-telepathy allows a win with probability one. Other games in which quantum pseudo-telepathy occurs have been studied, including larger magic square games, graph colouring games giving rise to the notion of quantum chromatic number, and multiplayer games involving more than two participants.

In July 2022 a study reported an experimental demonstration of quantum pseudo-telepathy by playing the nonlocal version of the Mermin–Peres magic square game.

Greenberger–Horne–Zeilinger game

The Greenberger–Horne–Zeilinger (GHZ) game is another example of quantum pseudo-telepathy. Classically, the best achievable winning probability is 0.75. With a quantum strategy, however, the players can achieve a winning probability of 1, meaning they always win.

In the game there are three players, Alice, Bob, and Carol, playing against a referee. The referee poses a binary question to each player (either 0 or 1). The three players each respond with an answer, again in the form of either 0 or 1. When the game is played, the three questions of the referee x, y, z are drawn from the 4 options $\{(0,0,0), (1,1,0), (1,0,1), (0,1,1)\}$. For example, if the question triple $(0,1,1)$ is chosen, then Alice receives bit 0, Bob receives bit 1, and Carol receives bit 1 from the referee. Based on the question bit received, Alice, Bob, and Carol each respond with an answer a, b, c, also in the form of 0 or 1. The players can formulate a strategy together prior to the start of the game. However, no communication is allowed during the game itself.

The players win if $a \oplus b \oplus c = x \lor y \lor z$, where $\lor$ denotes the logical OR and $\oplus$ denotes addition of the answers modulo 2. In other words, the sum of the three answers has to be even if $x = y = z = 0$. Otherwise, the sum of the answers has to be odd.

| $x$ | $y$ | $z$ | $a \oplus b \oplus c$ |
|---|---|---|---|
| 0 | 0 | 0 | 0 mod 2 |
| 1 | 1 | 0 | 1 mod 2 |
| 1 | 0 | 1 | 1 mod 2 |
| 0 | 1 | 1 | 1 mod 2 |

Classical strategy

Classically, Alice, Bob, and Carol can employ a deterministic strategy that always ends up with an odd sum (e.g. Alice always outputs 1, while Bob and Carol always output 0). The players win 75% of the time and only lose if the questions are $(0,0,0)$.

This is the best classical strategy: only 3 out of the 4 winning conditions can be satisfied simultaneously. Let $a_0, a_1$ be Alice's responses to questions 0 and 1 respectively, $b_0, b_1$ be Bob's responses to questions 0 and 1, and $c_0, c_1$ be Carol's responses to questions 0 and 1. The winning conditions can then be written as

$$\begin{aligned} a_0 + b_0 + c_0 &= 0 \pmod 2 \\ a_1 + b_1 + c_0 &= 1 \pmod 2 \\ a_1 + b_0 + c_1 &= 1 \pmod 2 \\ a_0 + b_1 + c_1 &= 1 \pmod 2 \end{aligned}$$

Suppose that there is a classical strategy that satisfies all four winning conditions, so that all four equations hold. Adding the four equations, each of the six terms appears exactly twice on the left-hand side, so the sum of the left-hand sides is 0 mod 2. However, the sum of the right-hand sides is 3, which is 1 mod 2. This contradiction shows that the four winning conditions cannot all be satisfied simultaneously.
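The same conclusion can be reached by enumerating all deterministic classical strategies. The sketch below is illustrative: the names `QUESTIONS`, `wins`, and `best_classical_ghz` are hypothetical, and a strategy is represented as three pairs of answer bits, one pair per player, indexed by the question bit.

```python
from itertools import product

# The four question triples (x, y, z) the referee may ask.
QUESTIONS = [(0, 0, 0), (1, 1, 0), (1, 0, 1), (0, 1, 1)]


def wins(strategy, question):
    """True if the answers satisfy a XOR b XOR c == x OR y OR z."""
    alice, bob, carol = strategy
    x, y, z = question
    a, b, c = alice[x], bob[y], carol[z]
    return (a ^ b ^ c) == (x | y | z)


def best_classical_ghz():
    """Largest number of the 4 questions any deterministic strategy wins."""
    best = 0
    for bits in product((0, 1), repeat=6):
        # bits = (a0, a1, b0, b1, c0, c1)
        strategy = (bits[0:2], bits[2:4], bits[4:6])
        best = max(best, sum(wins(strategy, q) for q in QUESTIONS))
    return best


print(best_classical_ghz(), "out of 4")
```

Only 64 strategies need to be checked, and none of them wins on all four questions.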

Quantum strategy

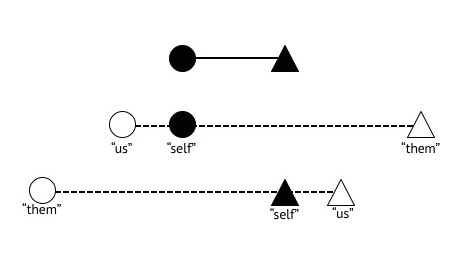

When Alice, Bob, and Carol decide to adopt a quantum strategy they share a tripartite entangled state $|\psi\rangle = \frac{1}{\sqrt{2}}\bigl(|000\rangle + |111\rangle\bigr)$, known as the GHZ state.

If question 0 is received, the player makes a measurement in the X basis $\{|+\rangle, |-\rangle\}$. If question 1 is received, the player makes a measurement in the Y basis $\left\{\tfrac{1}{\sqrt{2}}(|0\rangle + i|1\rangle), \tfrac{1}{\sqrt{2}}(|0\rangle - i|1\rangle)\right\}$. In both cases, the players give answer 0 if the result of the measurement is the first state of the pair, and answer 1 if the result is the second state of the pair. With this strategy the players win the game with probability 1.
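That this strategy always wins can be checked numerically. The sketch below assumes the GHZ state $(|000\rangle + |111\rangle)/\sqrt{2}$ and identifies answer 0 with the +1 eigenvalue and answer 1 with the −1 eigenvalue of the measured Pauli operator. The expectation value of the product of the three measured operators then equals the expectation of $(-1)^{a+b+c}$. The function name `parity_expectation` is hypothetical.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)

# GHZ state (|000> + |111>)/sqrt(2) in the computational basis.
GHZ = np.zeros(8, dtype=complex)
GHZ[0] = GHZ[7] = 1 / np.sqrt(2)

QUESTIONS = [(0, 0, 0), (1, 1, 0), (1, 0, 1), (0, 1, 1)]


def parity_expectation(question):
    """Expectation of (-1)**(a + b + c) when each player measures X on
    question bit 0 and Y on question bit 1."""
    ops = [Y if bit else X for bit in question]
    joint = np.kron(np.kron(ops[0], ops[1]), ops[2])
    return float(np.real(np.vdot(GHZ, joint @ GHZ)))


for q in QUESTIONS:
    required = (-1) ** (q[0] | q[1] | q[2])  # +1: even sum, -1: odd sum
    print(q, round(parity_expectation(q), 6), "required:", required)
```

An expectation value of exactly ±1 means the parity of the answers is fixed with certainty, so matching the required sign on all four question triples corresponds to a winning probability of 1.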