Gestalt psychology or gestaltism is a school of psychology that emerged in Austria and Germany in the early twentieth century based on work by Max Wertheimer, Wolfgang Köhler, and Kurt Koffka. As used in Gestalt psychology, the German word Gestalt (meaning "form") is interpreted as "pattern" or "configuration". Gestalt psychologists emphasized that organisms perceive entire patterns or configurations, not merely individual components. The view is sometimes summarized using the adage, "the whole is more than the sum of its parts."

Gestalt principles (proximity, similarity, figure-ground, continuity, closure, and connection) determine how humans perceive visuals in relation to different objects and environments.

Origin and history

Max Wertheimer (1880–1943), Kurt Koffka (1886–1941), and Wolfgang Köhler (1887–1967) founded Gestalt psychology in the early 20th century. The dominant view in psychology at the time was structuralism, exemplified by the work of Hermann von Helmholtz (1821–1894), Wilhelm Wundt (1832–1920), and Edward B. Titchener (1867–1927). Structuralism was rooted firmly in British empiricism and was based on three closely interrelated theories: (1) "atomism," also known as "elementalism," the view that all knowledge, even complex abstract ideas, is built from simple, elementary constituents; (2) "sensationalism," the view that the simplest constituents (the atoms of thought) are elementary sense impressions; and (3) "associationism," the view that more complex ideas arise from the association of simpler ideas. Together, these three theories give rise to the view that the mind constructs all perceptions and even abstract thoughts strictly from lower-level sensations that are related solely by being associated closely in space and time.

The Gestaltists took issue with this widespread "atomistic" view that the aim of psychology should be to break consciousness down into putative basic elements. In contrast, the Gestalt psychologists believed that breaking psychological phenomena down into smaller parts would not lead to an understanding of psychology. They believed, instead, that the most fruitful way to view psychological phenomena is as organized, structured wholes. They argued that the psychological "whole" has priority and that the "parts" are defined by the structure of the whole, rather than vice versa. One could say that the approach was based on a macroscopic view of psychology rather than a microscopic one. Gestalt theories of perception rest on the human inclination to understand objects as whole structures rather than as the sums of their parts.

Wertheimer had been a student of the Austrian philosopher Christian von Ehrenfels (1859–1932), a member of the School of Brentano. Von Ehrenfels introduced the concept of Gestalt to philosophy and psychology in 1890, before the advent of Gestalt psychology as such.

Von Ehrenfels observed that a perceptual experience, such as perceiving a melody or a shape, is more than the sum of its sensory components. He claimed that, in addition to the sensory elements of the perception, there is something extra. Although in some sense derived from the organization of the component sensory elements, this further quality is an element in its own right. He called it Gestalt-qualität or "form-quality." For instance, when one hears a melody, one hears the notes plus something in addition to them that binds them together into a tune: the Gestalt-qualität. It is this Gestalt-qualität that, according to von Ehrenfels, allows a tune to be transposed to a new key, using completely different notes, while still retaining its identity. The idea of a Gestalt-qualität has roots in theories by David Hume, Johann Wolfgang von Goethe, Immanuel Kant, David Hartley, and Ernst Mach. Both von Ehrenfels and Edmund Husserl seem to have been inspired by Mach's work Beiträge zur Analyse der Empfindungen (Contributions to the Analysis of Sensations, 1886) in formulating their very similar concepts of gestalt and figural moment, respectively.

By 1914, the first published references to Gestalt theory could be found in a footnote of Gabriele von Wartensleben's application of Gestalt theory to personality. She was a student at the Frankfurt Academy for Social Sciences and interacted closely with Wertheimer and Köhler.

Through a series of experiments, Wertheimer discovered that a person observing a pair of alternating bars of light can, under the right conditions, experience the illusion of movement between one location and the other. He noted that this was a perception of motion absent any moving object; that is, it was pure phenomenal motion. He dubbed it phi ("phenomenal") motion. Wertheimer's publication of these results in 1912 marks the beginning of Gestalt psychology.

In comparison to von Ehrenfels and others who had used the term "gestalt" earlier in various ways, Wertheimer's unique contribution was to insist that the "gestalt" is perceptually primary. The gestalt defines the parts from which it is composed, rather than being a secondary quality that emerges from those parts.

Wertheimer took the more radical position that "what is given me by the melody does not arise ... as a secondary process from the sum of the pieces as such. Instead, what takes place in each single part already depends upon what the whole is" (1925/1938). In other words, one hears the melody first and only then may perceptually divide it up into notes. Similarly, in vision, one sees the form of the circle first: it is given "im-mediately" (i.e., its apprehension is not mediated by a process of part-summation). Only after this primary apprehension might one notice that it is made up of lines or dots or stars.

The two men who served as Wertheimer's subjects in the phi experiments were Köhler and Koffka. Köhler was an expert in physical acoustics, having studied under physicist Max Planck (1858–1947), but had taken his degree in psychology under Carl Stumpf (1848–1936). Koffka was also a student of Stumpf's, having studied movement phenomena and psychological aspects of rhythm. In 1917, Köhler (1917/1925) published the results of four years of research on learning in chimpanzees. Köhler showed, contrary to the claims of most other learning theorists, that animals can learn by "sudden insight" into the "structure" of a problem, over and above the associative and incremental manner of learning that Ivan Pavlov (1849–1936) and Edward Lee Thorndike (1874–1949) had demonstrated with dogs and cats, respectively.

The terms "structure" and "organization" were focal for the Gestalt psychologists. Stimuli were said to have a certain structure, to be organized in a certain way, and it is to this structural organization, rather than to individual sensory elements, that the organism responds. When an animal is conditioned, it does not simply respond to the absolute properties of a stimulus, but to its properties relative to its surroundings. To use a favorite example of Köhler's, if conditioned to respond in a certain way to the lighter of two gray cards, the animal generalizes the relation between the two stimuli rather than the absolute properties of the conditioned stimulus: it will respond to the lighter of two cards in subsequent trials even if the darker card in the test trial is of the same intensity as the lighter one in the original training trials.
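Köhler's gray-card example contrasts two ways an animal could encode what it learned. The Python sketch below is a toy model, not a procedure from Köhler: the brightness values are hypothetical numbers on a 0 (black) to 1 (white) scale, and `absolute_choice` and `relational_choice` are names invented for illustration.

```python
# Toy contrast between two response rules for Köhler's gray-card example.
# Brightness values are hypothetical, on a 0 (black) to 1 (white) scale.

def absolute_choice(pair, trained_value):
    """Pick the card whose brightness is closest to the value that was rewarded."""
    return min(pair, key=lambda b: abs(b - trained_value))

def relational_choice(pair):
    """Pick whichever card is lighter, ignoring absolute brightness."""
    return max(pair)

training_pair = (0.4, 0.6)               # the lighter card (0.6) was rewarded
rewarded = relational_choice(training_pair)

test_pair = (0.6, 0.8)                   # the old "lighter" card is now the darker one
print(absolute_choice(test_pair, rewarded))   # 0.6
print(relational_choice(test_pair))           # 0.8
```

Under these assumptions only the relational rule picks the 0.8 card on the test trial, which matches the behavior described above.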

In 1921, Koffka published a Gestalt-oriented text on developmental psychology, Growth of the Mind. With the help of American psychologist Robert Ogden, Koffka introduced the Gestalt point of view to an American audience in 1922 by way of a paper in Psychological Bulletin.

The paper criticized then-current explanations of a number of problems of perception and presented the alternatives offered by the Gestalt school. Koffka moved to the United States in 1924, eventually settling at Smith College in 1927. In 1935, Koffka published his Principles of Gestalt Psychology. This textbook laid out the Gestalt vision of the scientific enterprise as a whole. Science, he said, is not the simple accumulation of facts. What makes research scientific is the incorporation of facts into a theoretical structure. The goal of the Gestaltists was to integrate the facts of inanimate nature, life, and mind into a single scientific structure. This meant that science would have to accommodate not only what Koffka called the quantitative facts of physical science but the facts of two other "scientific categories": questions of order and questions of Sinn, a German word which has been variously translated as significance, value, and meaning. Koffka believed that, without incorporating the meaning of experience and behavior, science would doom itself to trivialities in its investigation of human beings.

By 1935, under pressure from the Nazi regime, all the core members of the Gestalt movement who were still in Germany had left for the United States. Köhler published another book, Dynamics in Psychology, in 1940, but thereafter the Gestalt movement suffered a series of setbacks. Koffka died in 1941 and Wertheimer in 1943. Wertheimer's long-awaited book on mathematical problem-solving, Productive Thinking, was published posthumously in 1945, but Köhler was left to guide the movement without his two long-time colleagues.

Gestalt therapy

Gestalt psychology should not be confused with Gestalt therapy, which is only peripherally linked to Gestalt psychology. The founders of Gestalt therapy, Fritz and Laura Perls, had worked with Kurt Goldstein, a neurologist who had applied principles of Gestalt psychology to the functioning of the organism. Laura Perls had been a Gestalt psychologist before she became a psychoanalyst and before she began developing Gestalt therapy together with Fritz Perls.

The extent to which Gestalt psychology influenced Gestalt therapy is disputed, however. In any case, Gestalt therapy is not identical with Gestalt psychology. On the one hand, Laura Perls preferred not to use the term "Gestalt" to name the emerging new therapy, because she thought that the Gestalt psychologists would object to it; on the other hand, Fritz and Laura Perls clearly adopted some of Goldstein's work. Thus, though recognizing the historical connection and the influence, most Gestalt psychologists emphasize that Gestalt therapy is not a form of Gestalt psychology.

Mary Henle noted in her presidential address to Division 24 at the meeting of the American Psychological Association (1975): "What Perls has done has been to take a few terms from Gestalt psychology, stretch their meaning beyond recognition, mix them with notions—often unclear and often incompatible—from the depth psychologies, existentialism, and common sense, and he has called the whole mixture gestalt therapy. His work has no substantive relation to scientific Gestalt psychology. To use his own language, Fritz Perls has done 'his thing'; whatever it is, it is not Gestalt psychology."

In her analysis, however, she explicitly restricts herself to three of Perls's books from 1969 and 1972, leaving out Perls's earlier work and Gestalt therapy in general as a psychotherapy method.

Clinical applications of Gestalt psychology in the psychotherapeutic field existed long before Perlsian Gestalt therapy: in group psychoanalysis (Foulkes), in Adlerian individual psychology, among Gestalt psychologists working in psychotherapy such as Erwin Levy and Abraham S. Luchins, and among Gestalt-oriented psychoanalysts in Italy (Canestrari and others). There have also been newer developments, above all in Europe. For example, a strictly Gestalt psychology-based therapeutic method is Gestalt Theoretical Psychotherapy, developed by the German Gestalt psychologist and psychotherapist Hans-Jürgen Walter and his colleagues in Germany, Austria (Gerhard Stemberger and colleagues) and Switzerland. Other countries, especially Italy, have seen similar developments.

Contributions

Gestalt psychology made many contributions to the body of psychology. The Gestaltists were the first to demonstrate empirically and document many facts about perception, including facts about the perception of movement, the perception of contour, perceptual constancy, and perceptual illusions. Wertheimer's discovery of the phi phenomenon is one example of such a contribution.

In addition to discovering perceptual phenomena, the contributions of Gestalt psychology include: (a) a unique theoretical framework and methodology, (b) a set of perceptual principles, (c) a well-known set of perceptual grouping laws, (d) a theory of problem solving based on insight, and (e) a theory of memory. The following subsections discuss these contributions in turn.

Theoretical framework and methodology

The Gestalt psychologists practiced a set of theoretical and methodological principles that attempted to redefine the approach to psychological research. This is in contrast to investigations developed at the beginning of the 20th century, based on traditional scientific methodology, which divided the object of study into a set of elements that could be analyzed separately with the objective of reducing the complexity of this object.

The theoretical principles are the following:

- Principle of Totality—Conscious experience must be considered globally (by taking into account all the physical and mental aspects of the individual simultaneously) because the nature of the mind demands that each component be considered as part of a system of dynamic relationships. Wertheimer described holism as fundamental to Gestalt psychology, writing "There are wholes, the behavior of which is not determined by that of their individual elements, but where the part-processes are themselves determined by the intrinsic nature of the whole." In other words, a perceptual whole is different from what one would predict based on only its individual parts. Moreover, the nature of a part depends upon the whole in which it is embedded. Köhler, for example, writes "In psychology...we have wholes which, instead of being the sum of parts existing independently, give their parts specific functions or properties that can only be defined in relation to the whole in question." Thus, the maxim that the whole is more than the sum of its parts is not a precise description of the Gestaltist view. Rather, "The whole is something else than the sum of its parts, because summing is a meaningless procedure, whereas the whole-part relationship is meaningful."

- Principle of psychophysical isomorphism – Köhler hypothesized that there is a correlation between conscious experience and cerebral activity.

Based on the principles above, the following methodological principles are defined:

- Phenomenon experimental analysis—In relation to the Totality Principle any psychological research should take phenomena as a starting point and not be solely focused on sensory qualities.

- Biotic experiment—The Gestalt psychologists established a need to conduct real experiments that sharply contrasted with and opposed classic laboratory experiments. This signified experimenting in natural situations, developed in real conditions, in which it would be possible to reproduce, with higher fidelity, what would be habitual for a subject.

Properties

Reification

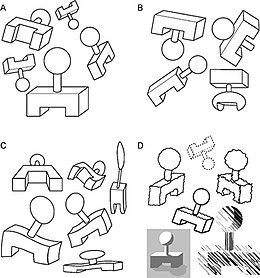


Reification is the constructive or generative aspect of perception, by which the experienced percept contains more explicit spatial information than the sensory stimulus on which it is based.

For instance, a triangle is perceived in picture A, though no triangle is there. In pictures B and D the eye recognizes disparate shapes as "belonging" to a single shape, in C a complete three-dimensional shape is seen, where in actuality no such thing is drawn.

Progress in the study of illusory contours, which the visual system treats as "real" contours, has helped to explain reification.

Multistability

Multistability (or multistable perception) is the tendency of ambiguous perceptual experiences to pop back and forth unstably between two or more alternative interpretations. This is seen, for example, in the Necker cube and Rubin's Figure/Vase illusion. Other examples include the three-legged blivet, artist M. C. Escher's artwork, and the appearance of flashing marquee lights moving first in one direction and then suddenly in the other. Gestalt psychology does not explain how images appear multistable, only that they do.

Invariance


Invariance is the property of perception whereby simple geometrical objects are recognized independent of rotation, translation, and scale, as well as several other variations such as elastic deformations, different lighting, and different component features. For example, the objects in A in the figure are all immediately recognized as the same basic shape, which are immediately distinguishable from the forms in B. They are even recognized despite perspective and elastic deformations as in C, and when depicted using different graphic elements as in D. Computational theories of vision, such as those by David Marr, have provided alternative explanations of how perceived objects are classified.
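The rigid part of invariance (rotation, translation, and scale) can be made concrete with a shape "signature" that those transformations leave unchanged. The Python sketch below is a toy computational analogue under that narrow assumption; it is not a Gestalt model, not Marr's theory, and does not handle elastic deformations or changed component features. `shape_signature` and `transform` are hypothetical helper names.

```python
import math
from itertools import combinations

def shape_signature(points, ndigits=6):
    """Sorted pairwise distances divided by the largest one.

    Unchanged by translation, rotation, and uniform scaling, so similar
    point sets get the same signature. Rounding absorbs float noise.
    """
    dists = sorted(math.dist(p, q) for p, q in combinations(points, 2))
    longest = dists[-1]
    return tuple(round(d / longest, ndigits) for d in dists)

def transform(points, angle, scale, dx, dy):
    """Rotate by `angle` radians, scale uniformly, then translate each point."""
    c, s = math.cos(angle), math.sin(angle)
    return [(scale * (c * x - s * y) + dx, scale * (s * x + c * y) + dy)
            for x, y in points]

triangle = [(0, 0), (4, 0), (0, 3)]
moved = transform(triangle, angle=math.pi / 5, scale=2.5, dx=-7, dy=11)
other = [(0, 0), (4, 0), (2, 3)]

print(shape_signature(triangle) == shape_signature(moved))  # True
print(shape_signature(triangle) == shape_signature(other))  # False
```

Because the signature ignores which distance belongs to which pair of points, it also treats mirror images as identical and can, in rare cases, assign the same signature to genuinely different point sets.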

Emergence, reification, multistability, and invariance are not necessarily separable modules to model individually, but they could be different aspects of a single unified dynamic mechanism.

Figure-Ground Organization

The perceptual field (what an organism perceives) is organized, and figure-ground organization is one form of perceptual organization. It is the interpretation of perceptual elements in terms of their shapes and relative locations in the layout of surfaces in the 3-D world. Figure-ground organization structures the perceptual field into a figure (standing out at the front of the perceptual field) and a background (receding behind the figure). Pioneering work on figure-ground organization was carried out by the Danish psychologist Edgar Rubin.

The Gestalt psychologists demonstrated that we tend to perceive as figures those parts of our perceptual fields that are convex, symmetric, small, and enclosed.

Prägnanz

Like figure-ground organization, perceptual grouping (sometimes called perceptual segregation) is a form of perceptual organization. Organisms perceive some parts of their perceptual fields as "hanging together" more tightly than others. They use this information for object detection. Perceptual grouping is the process that determines what these "pieces" of the perceptual field are.

The Gestaltists were the first psychologists to systematically study perceptual grouping. According to Gestalt psychologists, the fundamental principle of perceptual grouping is the law of Prägnanz. (The law of Prägnanz is also known as the law of good Gestalt.) Prägnanz is a German word that directly translates to "pithiness" and implies salience, conciseness, and orderliness.

The law of Prägnanz says that we tend to experience things as regular, orderly, symmetrical, and simple. As Koffka put it, "Of several geometrically possible organizations that one will actually occur which possesses the best, simplest and most stable shape."

The law of Prägnanz implies that, as individuals perceive the world, they eliminate complexity and unfamiliarity so they can observe reality in its simplest form. Eliminating extraneous stimuli helps the mind create meaning. This meaning created by perception implies a global regularity, which is often mentally prioritized over spatial relations. The law of good Gestalt focuses on the idea of conciseness, on which all of Gestalt theory is based.

A major aspect of Gestalt psychology is that it implies that the mind understands external stimuli as wholes rather than as the sums of their parts. The wholes are structured and organized using grouping laws.

Gestalt psychologists attempted to discover refinements of the law of Prägnanz, and this involved writing down laws that, hypothetically, allow us to predict the interpretation of sensation; these are often called "gestalt laws".

Wertheimer defined a few principles that explain the ways humans perceive objects. Those principles were based on similarity, proximity, and continuity. The Gestalt concept is based on perceiving reality in its simplest form. The various laws are called laws or principles, depending on the paper where they appear, but for simplicity's sake, this article uses the term laws.

These laws took several forms, such as the grouping of similar, or proximate, objects together, within this global process. These laws deal with the sensory modality of vision. However, there are analogous laws for other sensory modalities, including auditory, tactile, gustatory and olfactory (Bregman – GP). The visual Gestalt principles of grouping were introduced in Wertheimer (1923). Through the 1930s and '40s, Wertheimer, Köhler and Koffka formulated many of the laws of grouping through the study of visual perception.

Law of Proximity


The law of proximity states that when an individual perceives an assortment of objects, they perceive objects that are close to each other as forming a group. For example, in the figure illustrating the law of proximity, there are 72 circles, but we perceive the collection of circles in groups. Specifically, we perceive that there is a group of 36 circles on the left side of the image, and three groups of 12 circles on the right side of the image. This law is often used in advertising logos to emphasize which aspects of events are associated.
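Grouping by proximity has a rough computational analogue in single-linkage clustering: any two elements closer than some distance end up in the same group, and chains of near neighbours merge. The Python sketch below assumes a fixed distance threshold of 1.5 and a grid layout invented to loosely mimic the 72-circle figure; `group_by_proximity` is a hypothetical name, and human grouping depends on relative rather than fixed distances.

```python
import math

def group_by_proximity(points, threshold):
    """Single-linkage grouping: points within `threshold` share a group.

    Uses union-find so that chains of near neighbours merge into one group.
    """
    parent = list(range(len(points)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) <= threshold:
                parent[find(i)] = find(j)

    groups = {}
    for i, p in enumerate(points):
        groups.setdefault(find(i), []).append(p)
    return list(groups.values())

# Assumed layout: 6 rows; a 6-column block on the left, then three
# 2-column pairs separated by wider gaps (72 circles in total).
columns = list(range(6)) + [8, 9, 12, 13, 16, 17]
circles = [(x, y) for x in columns for y in range(6)]

sizes = sorted(len(g) for g in group_by_proximity(circles, threshold=1.5))
print(len(circles), sizes)  # 72 [12, 12, 12, 36]
```

With unit spacing inside each cluster and gaps of 3 between clusters, the threshold recovers one group of 36 and three groups of 12, matching the description of the figure.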

Law of Similarity


The law of similarity states that elements within an assortment of objects are perceptually grouped together if they are similar to each other. This similarity can occur in the form of shape, colour, shading or other qualities. For example, the figure illustrating the law of similarity portrays 36 circles, all an equal distance apart from one another, forming a square. In this depiction, 18 of the circles are shaded dark, and 18 of the circles are shaded light. We perceive the dark circles as grouped together and the light circles as grouped together, forming six horizontal lines within the square of circles. This perception of lines is due to the law of similarity.
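When spacing is uniform, proximity alone cannot separate the circles, so the grouping has to come from a shared attribute. The Python sketch below models that with a flood fill that links neighbouring circles only when their shades match. It assumes the six lines arise from rows that alternate dark and light (an arrangement consistent with, but not stated by, the description); `group_by_similarity` is a hypothetical name.

```python
def group_by_similarity(grid):
    """Flood-fill grouping: 4-adjacent cells with the same shade form one group."""
    rows, cols = len(grid), len(grid[0])
    seen = set()
    groups = []
    for r in range(rows):
        for c in range(cols):
            if (r, c) in seen:
                continue
            shade, stack, members = grid[r][c], [(r, c)], []
            seen.add((r, c))
            while stack:
                y, x = stack.pop()
                members.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    # Link only neighbours that look alike (same shade).
                    if (0 <= ny < rows and 0 <= nx < cols
                            and (ny, nx) not in seen and grid[ny][nx] == shade):
                        seen.add((ny, nx))
                        stack.append((ny, nx))
            groups.append((shade, members))
    return groups

# Assumed 6x6 arrangement: rows alternate dark ("D") and light ("L").
grid = [["D" if r % 2 == 0 else "L"] * 6 for r in range(6)]
groups = group_by_similarity(grid)
print(len(groups), [len(m) for _, m in groups])  # 6 [6, 6, 6, 6, 6, 6]
```

Vertical neighbours always differ in shade under this assumption, so each row becomes its own group: six horizontal lines.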

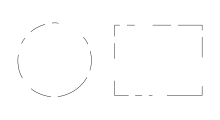

Law of Closure


Gestalt psychologists believed that humans tend to perceive objects as complete rather than focusing on the gaps that the object might contain. For example, a circle has good Gestalt in terms of completeness. However, we will also perceive an incomplete circle as a complete circle. That tendency to complete shapes and figures is called closure. The law of closure states that individuals perceive objects such as shapes, letters, pictures, etc., as being whole when they are not complete. Specifically, when parts of a whole picture are missing, our perception fills in the visual gap. Research shows that the reason the mind completes a regular figure that is not perceived through sensation is to increase the regularity of surrounding stimuli. For example, the figure that depicts the law of closure portrays what we perceive as a circle on the left side of the image and a rectangle on the right side of the image. However, gaps are present in the shapes. If the law of closure did not exist, the image would depict an assortment of different lines with different lengths, rotations, and curvatures—but with the law of closure, we perceptually combine the lines into whole shapes.

Law of Symmetry


The law of symmetry states that the mind perceives objects as being symmetrical and forming around a center point. It is perceptually pleasing to divide objects into an even number of symmetrical parts. Therefore, when two symmetrical elements are unconnected, the mind perceptually connects them to form a coherent shape. Similarities between symmetrical objects increase the likelihood that objects are grouped to form a combined symmetrical object. For example, the figure depicting the law of symmetry shows a configuration of square and curly brackets. When the image is perceived, we tend to observe three pairs of symmetrical brackets rather than six individual brackets.

Law of Common Fate

The law of common fate states that objects are perceived as lines that move along the smoothest path. Experiments using the visual sensory modality found that the movement of an object's elements produces paths that individuals perceive the object to be on. We perceive elements of objects to have trends of motion, which indicate the path that the object is on. The law of common fate implies the grouping together of objects that have the same trend of motion and are therefore on the same path. For example, if there is an array of dots and half the dots are moving upward while the other half are moving downward, we would perceive the upward-moving dots and the downward-moving dots as two distinct units.
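The dot example can be sketched by keying each element on its direction of motion alone. In the Python code below, the velocities are hypothetical, speed differences are deliberately ignored, and `group_by_common_fate` is an invented name; the sketch does not model how common fate interacts with proximity or other cues.

```python
import math
from collections import defaultdict

def group_by_common_fate(velocities, ndigits=3):
    """Group element indices by direction of motion (speed is ignored)."""
    groups = defaultdict(list)
    for i, (vx, vy) in enumerate(velocities):
        speed = math.hypot(vx, vy)
        if speed == 0:
            key = (0.0, 0.0)  # stationary elements form their own group
        else:
            # Unit direction vector, rounded so tiny numeric noise does not split groups.
            key = (round(vx / speed, ndigits), round(vy / speed, ndigits))
        groups[key].append(i)
    return dict(groups)

# Ten dots in a row: even-indexed dots move up at slightly different speeds,
# odd-indexed dots move down.
velocities = [(0, 1 + 0.1 * i) if i % 2 == 0 else (0, -2) for i in range(10)]
groups = group_by_common_fate(velocities)
print(sorted(groups.values()))  # [[0, 2, 4, 6, 8], [1, 3, 5, 7, 9]]
```

The interleaved dots split into an "upward" unit and a "downward" unit even though neighbouring dots are adjacent in space.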

Law of Continuity


The law of continuity (also known as the law of good continuation) states that elements of objects tend to be grouped together, and therefore integrated into perceptual wholes if they are aligned within an object. In cases where there is an intersection between objects, individuals tend to perceive the two objects as two single uninterrupted entities. Stimuli remain distinct even with overlap. We are less likely to group elements with sharp abrupt directional changes as being one object.
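The last point, that sharp changes of direction break an element into separate objects, can be illustrated by splitting a path wherever its heading turns too abruptly. The Python sketch below is a toy under stated assumptions: the 45-degree cutoff is arbitrary, and `turning_angle` and `split_at_sharp_turns` are hypothetical names, not part of any Gestalt formalism.

```python
import math

def turning_angle(a, b, c):
    """Absolute change of heading, in degrees, at b when travelling a -> b -> c."""
    h1 = math.atan2(b[1] - a[1], b[0] - a[0])
    h2 = math.atan2(c[1] - b[1], c[0] - b[0])
    diff = math.degrees(h2 - h1)
    return abs((diff + 180) % 360 - 180)  # wrap into [0, 180]

def split_at_sharp_turns(path, max_turn=45):
    """Break a polyline into segments wherever the heading changes by more
    than `max_turn` degrees; gentle bends stay in one segment."""
    segments = [[path[0], path[1]]]
    for a, b, c in zip(path, path[1:], path[2:]):
        if turning_angle(a, b, c) > max_turn:
            segments.append([b, c])  # sharp turn: start a new segment at b
        else:
            segments[-1].append(c)
    return segments

# A smooth quarter-circle arc (10-degree steps) versus an L-shaped path.
arc = [(math.cos(math.radians(10 * k)), math.sin(math.radians(10 * k)))
       for k in range(10)]
l_shape = [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]

print(len(split_at_sharp_turns(arc)))      # 1
print(len(split_at_sharp_turns(l_shape)))  # 2
```

The arc turns only about 10 degrees at each point and stays one segment, while the right-angle corner of the L splits it into two.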

Law of Past Experience

The law of past experience implies that under some circumstances visual stimuli are categorized according to past experience. If two objects tend to be observed within close proximity, or small temporal intervals, the objects are more likely to be perceived together. For example, the English language contains 26 letters that are grouped to form words using a set of rules. If an individual reads an English word they have never seen, they use the law of past experience to interpret the letters "L" and "I" as two letters beside each other, rather than using the law of closure to combine the letters and interpret the object as an uppercase U.

Music

A musical sequence is an example of Gestalt perception at work, as it is both a process and a result. People are able to recognise a sequence of perhaps six or seven notes even when it is transposed into a different tuning or key.
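One simple way to see why a transposed melody keeps its identity is to describe it by the steps between notes rather than by the notes themselves. The Python sketch below assumes MIDI note numbers (60 = middle C) and uses the opening of "Twinkle, Twinkle, Little Star" as an example; `intervals` is a hypothetical helper, and interval sequences are only a rough stand-in for von Ehrenfels's Gestalt-qualität.

```python
def intervals(midi_notes):
    """Successive pitch differences in semitones: the melody's 'shape'."""
    return [b - a for a, b in zip(midi_notes, midi_notes[1:])]

# Opening of "Twinkle, Twinkle, Little Star" in C major (MIDI note numbers).
melody_in_c = [60, 60, 67, 67, 69, 69, 67]
melody_in_f = [n + 5 for n in melody_in_c]  # transposed up a perfect fourth

print(intervals(melody_in_c))                         # [0, 7, 0, 2, 0, -2]
print(intervals(melody_in_f))                         # [0, 7, 0, 2, 0, -2]
print(sorted(set(melody_in_c) & set(melody_in_f)))    # []
```

The two versions share no notes at all, yet their interval sequences are identical, which mirrors the observation that a tune survives transposition.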

Problem solving and insight

Gestalt psychology contributed to the scientific study of problem solving. In fact, the early experimental work of the Gestaltists in Germany marks the beginning of the scientific study of problem solving. Later this experimental work continued through the 1960s and early 1970s with research conducted on relatively simple (but novel for participants) laboratory tasks of problem solving.

Given Gestalt psychology's focus on the whole, it was natural for Gestalt psychologists to study problem solving from the perspective of insight, seeking to understand the process by which organisms sometimes suddenly transition from having no idea how to solve a problem to instantly understanding the whole problem and its solution.

In a famous set of experiments, Köhler gave chimpanzees some boxes and placed food high off the ground; after some time, the chimpanzees appeared to suddenly realize that they could stack the boxes on top of each other to reach the food.

Max Wertheimer distinguished two kinds of thinking: productive thinking and reproductive thinking. Productive thinking is solving a problem based on insight—a quick, creative, unplanned response to situations and environmental interaction. Reproductive thinking is solving a problem deliberately based on previous experience and knowledge. Reproductive thinking proceeds algorithmically—a problem solver reproduces a series of steps from memory, knowing that they will lead to a solution—or by trial and error.

Karl Duncker, another Gestalt psychologist who studied problem solving, coined the term functional fixedness to describe the difficulties in both visual perception and problem solving that arise from the fact that one element of a whole situation already has a (fixed) function that has to be changed in order to perceive something or find the solution to a problem.

Abraham Luchins also studied problem solving from the perspective of Gestalt psychology. He is well known for his research on the role of mental set (Einstellung effect), which he demonstrated using a series of problems having to do with refilling water jars.
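The logic of the water-jar task can be checked with simple arithmetic. In the Python sketch below, the jar capacities follow the pattern usually described for Luchins's task, but treat them as illustrative; `einstellung_formula` and `direct_formulas` are hypothetical names. The point is that a "critical" problem can be solved both by the long, practiced recipe (fill B, pour off A once and C twice) and by a much shorter route that a mentally set solver tends to overlook.

```python
def einstellung_formula(a, b, c):
    """The long 'set' solution: fill B, pour off A once and C twice."""
    return b - a - 2 * c

def direct_formulas(a, b, c):
    """Shorter alternatives a solver without the set might notice."""
    return {"A - C": a - c, "A + C": a + c}

# Illustrative problems: (capacity A, capacity B, capacity C, goal amount).
training = (21, 127, 3, 100)
critical = (23, 49, 3, 20)

a, b, c, goal = training
print(einstellung_formula(a, b, c) == goal)  # True

a, b, c, goal = critical
print(einstellung_formula(a, b, c) == goal)  # True
print([name for name, v in direct_formulas(a, b, c).items() if v == goal])  # ['A - C']
```

Both routes reach the goal on the critical problem; the Einstellung effect is the tendency, after several training problems, to keep using the longer one.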

Another Gestalt psychologist, Perkins, holds that insight involves three processes:

- An unconscious leap in thinking.

- Increased speed of mental processing.

- Short-circuiting of the steps that occur in normal reasoning.

Views that run counter to the Gestalt account of insight include:

- Nothing-special view

- Neo-gestalt view

- The Three-Process View

Fuzzy-trace theory of memory

Fuzzy-trace theory, a dual process model of memory and reasoning, was also derived from Gestalt psychology. Fuzzy-trace theory posits that we encode information into two separate traces: verbatim and gist. Information stored in verbatim is exact memory for detail (the individual parts of a pattern, for example), while information stored in gist is semantic and conceptual (what we perceive the pattern to be). The effects seen in Gestalt psychology can be attributed to the way we encode information as gist.

Legacy

Gestalt psychology struggled to precisely define terms like Prägnanz, to make specific behavioral predictions, and to articulate testable models of underlying neural mechanisms. It was criticized as being merely descriptive. These shortcomings led, by the mid-20th century, to growing dissatisfaction with Gestaltism and a subsequent decline in its impact on psychology.

Despite this decline, Gestalt psychology has formed the basis of much further research into the perception of patterns and objects and of research into behavior, thinking, problem solving and psychopathology.

Support from cybernetics and neurology

In the 1940s and 1950s, laboratory research in neurology and what became known as cybernetics on the mechanism of frogs' eyes indicated that perception of 'gestalts' (in particular gestalts in motion) is perhaps more primitive and fundamental than 'seeing' as such:

- A frog hunts on land by vision... He has no fovea, or region of greatest acuity in vision, upon which he must center a part of the image... The frog does not seem to see or, at any rate, is not concerned with the detail of stationary parts of the world around him. He will starve to death surrounded by food if it is not moving. His choice of food is determined only by size and movement. He will leap to capture any object the size of an insect or worm, providing it moves like one. He can be fooled easily not only by a piece of dangled meat but by any moving small object... He does remember a moving thing provided it stays within his field of vision and he is not distracted.

- The lowest-level concepts related to visual perception for a human being probably differ little from the concepts of a frog. In any case, the structure of the retina in mammals and in human beings is the same as in amphibians. The phenomenon of distortion of perception of an image stabilized on the retina gives some idea of the concepts of the subsequent levels of the hierarchy. This is a very interesting phenomenon. When a person looks at an immobile object, "fixes" it with his eyes, the eyeballs do not remain absolutely immobile; they make small involuntary movements. As a result the image of the object on the retina is constantly in motion, slowly drifting and jumping back to the point of maximum sensitivity. The image "marks time" in the vicinity of this point.

Quantum cognition modeling

Similarities between Gestalt phenomena and quantum mechanics have been pointed out by, among others, chemist Anton Amann, who commented that "similarities between Gestalt perception and quantum mechanics are on a level of a parable" yet may give useful insight nonetheless. Physicist Elio Conte and co-workers have proposed abstract mathematical models that describe the time dynamics of cognitive associations using tools borrowed from quantum mechanics, and have discussed psychology experiments in this context. A similar approach has been suggested by physicists David Bohm and Basil Hiley and philosopher Paavo Pylkkänen, with the notion that mind and matter both emerge from an "implicate order". The models involve non-commutative mathematics; such models account for situations in which the outcome of two measurements performed one after the other can depend on the order in which they are performed. This is a pertinent feature for psychological processes, as an experiment performed on a conscious person may influence the outcome of a subsequent experiment by changing that person's state of mind.
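The order dependence that non-commutative models capture can be illustrated with a minimal sketch. This is a generic toy example of quantum probability, not the specific model of Conte and co-workers or of Bohm, Hiley and Pylkkänen. It assumes that a "state of mind" is a unit vector in a two-dimensional real space and that answering "yes" to each of two hypothetical questions, A and B, projects that state onto a line at a chosen angle. The function names and angles are illustrative assumptions.

```python
import math


def projector(theta):
    """Rank-1 projector onto the unit vector at angle `theta` in the plane."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c * c, c * s], [c * s, s * s]]


def apply(matrix, vector):
    """Multiply a 2x2 matrix by a 2-vector."""
    return [
        matrix[0][0] * vector[0] + matrix[0][1] * vector[1],
        matrix[1][0] * vector[0] + matrix[1][1] * vector[1],
    ]


def squared_norm(vector):
    """Squared Euclidean length of a 2-vector."""
    return vector[0] ** 2 + vector[1] ** 2


def sequential_yes(state, first, second):
    """Probability of 'yes' to `first` followed by 'yes' to `second`.

    Each measurement projects the state (Lüders rule), so the joint
    probability is ||P_second P_first |state>||^2.
    """
    return squared_norm(apply(second, apply(first, state)))


# Hypothetical numbers chosen only for illustration: the initial state lies
# along the x-axis, and the "yes" answers to questions A and B correspond
# to directions at 45 and 60 degrees.
psi = [1.0, 0.0]
P_A = projector(math.pi / 4)
P_B = projector(math.pi / 3)

p_ab = sequential_yes(psi, P_A, P_B)  # ask A first, then B
p_ba = sequential_yes(psi, P_B, P_A)  # ask B first, then A
print(f"P(yes to A, then yes to B) = {p_ab:.4f}")
print(f"P(yes to B, then yes to A) = {p_ba:.4f}")
```

With these angles the two orderings give roughly 0.47 and 0.23. Because the projectors P_A and P_B do not commute, asking the same two questions in a different order changes the joint probability, which is the kind of order effect the models are meant to describe.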

Use in contemporary social psychology

The halo effect can be explained through the application of Gestalt theories to social information processing.

The constructive theories of social cognition are applied through the expectations of individuals: once a person has been perceived in a positive manner, whoever is judging that person tends to continue viewing them in that positive manner.

Gestalt theories of perception hold that individuals tend to perceive actions and characteristics as a whole rather than as isolated parts; humans are therefore inclined to build a coherent and consistent impression of objects and behaviors in order to achieve an acceptable shape and form. The halo effect, classified as a cognitive bias that occurs during impression formation, is what forms these patterns for individuals. It can also be affected by physical characteristics, social status and many other characteristics.

The halo effect can also have real repercussions, positive or negative, on an individual's perception of reality, leading them to construct positive or negative images of other individuals or situations; this could lead to self-fulfilling prophecies, stereotyping, or even discrimination.

Contemporary cognitive and perceptual psychology

Some of the central criticisms of Gestaltism are based on the preference Gestaltists are deemed to have for theory over data, and on a lack of quantitative research supporting Gestalt ideas. This is not necessarily a fair criticism, as highlighted by a recent collection of quantitative research on Gestalt perception. Researchers continue to test hypotheses about the mechanisms underlying Gestalt principles such as the principle of similarity.

Other important criticisms concern the lack of definition of, and support for, the many physiological assumptions made by Gestaltists, and the lack of theoretical coherence in modern Gestalt psychology.

In some scholarly communities, such as cognitive psychology and computational neuroscience, Gestalt theories of perception are criticized for being descriptive rather than explanatory in nature. For this reason, they are viewed by some as redundant or uninformative. For example, a textbook on visual perception states: "The physiological theory of the gestaltists has fallen by the wayside, leaving us with a set of descriptive principles, but without a model of perceptual processing. Indeed, some of their 'laws' of perceptual organisation today sound vague and inadequate. What is meant by a 'good' or 'simple' shape, for example?"

One historian of psychology has argued that Gestalt psychologists first discovered many principles later championed by cognitive psychology, including schemas and prototypes. Another psychologist has argued that the Gestalt psychologists made a lasting contribution by showing how the study of illusions can help scientists understand essential aspects of how the visual system normally functions, not merely how it breaks down.

Use in design

The gestalt laws are used in user interface design. The laws of similarity and proximity can, for example, be used as guides for placing radio buttons.

They may also be used in designing computers and software for more intuitive human use. Examples include the design and layout of a desktop's shortcuts in rows and columns.