Neural networks have garnered all the headlines, but a much more powerful approach is waiting in the wings.

by Emerging Technology from the arXivJuly 18, 2018

Original link: https://www.technologyreview.com/s/611568/evolutionary-algorithm-outperforms-deep-learning-machines-at-video-games/

With all the excitement over neural networks and deep-learning techniques, it’s easy to imagine that the world of computer science consists of little else. Neural networks, after all, have begun to outperform humans in tasks such as object and face recognition and in games such as chess, Go, and various arcade video games.

These networks are based on the way the human brain works. Nothing could have more potential than that, right?

Not quite. An entirely different type of computing has the potential to be significantly more powerful than neural networks and deep learning. This technique is based on the process that created the human brain—evolution. In other words, a sequence of iterative change and selection that produced the most complex and capable machines known to humankind—the eye, the wing, the brain, and so on. The power of evolution is a wonder to behold.

But in the last few years, this area of computer science has had to play second fiddle to deep-learning machines and their huge success.

Today, the tables look set to turn thanks to the work of Dennis Wilson and a few colleagues at the University of Toulouse in France. These guys have shown how evolutionary computing can match the performance of deep-learning machines at the emblematic task that first powered them to fame in 2013—the ability to outperform humans at arcade video games such as Pong, Breakout, and Space Invaders. The work suggests that evolutionary computing should be feted just as widely as its deep-learning-based relations.

Evolutionary computing works in an entirely different way than neural networks. The goal is to create computer code that solves a specific problem using an approach that is somewhat counterintuitive.

The conventional way to create code is to write it from first principles with a specific goal in mind.

Evolutionary computing uses a different approach. It starts with code generated entirely at random. And not just one version of it, but lots of versions, sometimes hundreds of thousands of randomly assembled pieces of code.

Each of these codes is tested to see whether it achieves the required goal. And of course, all the code is awful because it is randomly generated.

But just by chance, some pieces of code are a little better than others. These pieces are then reproduced in a new generation of code, which includes more copies of the better codes.

However, the next generation cannot be an identical copy of the first. Instead, it must change in some way. These changes can involve switching two terms in the code—a kind of point mutation. Or they can involve two codes that are cut in half and the halves exchanged—like sexual recombination.

Each of the new generation is then tested to see how well it works. The best pieces of code are preferentially reproduced in another generation, and so on.

In this way, the code evolves. Over time, it becomes better, and after many generations, if conditions are right, it can become better than any human coder can design.

Computer scientists have successfully applied evolutionary approaches to problems ranging from designing robots to building aircraft parts.

But it has fallen out of favor because of the huge interest in deep learning. So an important question is whether it can match the performance of deep-learning machines. To find out, Wilson and co used the approach to develop code that could control arcade computer games dating from the 1980s and 1990s.

These games are available in a database called the Arcade Learning Environment, which is increasingly being used to test the learning behavior of algorithms of various kinds. The database consists of 61 Atari games, such as Pong, Space Invaders, Breakout, and Kung Fu Master.

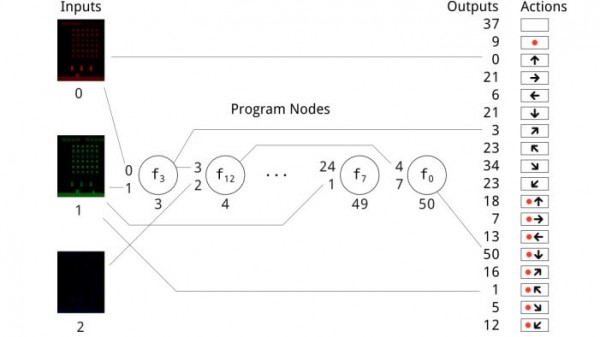

The task is to create an algorithm that can play a game like Pong by looking only at the output from the screen, the same way that humans play. So the algorithm must analyze each game position and then decide how to move to maximize its score.

The controls for all games are the same. These correspond to the eight directions the controller can be moved (up, down, left, and right plus four diagonal directions), a button press, the same eight movements combined with a button press, and doing nothing at all. Not all games use all 18 possible combinations, and some use as few as four.

The code first has to be created. The evolutionary approach requires a vocabulary of terms that can be concatenated to form computer code. The terms range from simple actions such as ADD (x+y)/2 to more complex ones, such as “return the 1-element x-vector if x is a scalar.”

The choice of terms that make up this vocabulary is important, and Wilson and co use a set already defined for Cartesian genetic programming (as their technique is called).

The process begins by randomly creating a code containing 40 terms. This is the “genome” of the program. This genome is then tested to see how well it plays the game, as judged by the score. Depending on how well it performs, the genome is then reproduced with mutations and tested again, and so on. In total, the team tested 10,000 genomes in this way.

The results make for interesting reading. At first, the genomes are terrible at playing the game. But over time, they get better. And after many generations, they play well, sometimes better than humans.

Many genomes ended up playing entirely new gaming strategies, often complex ones. But they sometimes found simple ones that humans had overlooked.

For example, when playing Kung Fu Master, the evolutionary algorithm discovered that the most valuable attack was a crouch-punch. Crouching is safer because it dodges half the bullets aimed at the player and also attacks anything nearby. The algorithm’s strategy was to repeatedly use this maneuver with no other actions. In hindsight, using the crouch-punch exclusively makes sense.

That surprised the human players involved in the study. “Employing this strategy by hand achieved a better score than playing the game normally, and the author now uses crouching punches exclusively when attacking in this game,” say Wilson and co.

Overall, the evolved code played many of the games well, even outperforming humans in games such as Kung Fu Master. Just as significantly, the evolved code is just as good as many deep-learning approaches and outperforms them in games like Asteroids, Defender, and Kung Fu Master.

It also produces a result more quickly. “While the programs are relatively small, many controllers are competitive with state-of-the-art methods for the Atari benchmark set and require less training time,” say Wilson and co.

The evolved code has another advantage. Because it is small, it is easy to see how it works. By contrast, a well-known problem with deep-learning techniques is that it is sometimes impossible to know why they have made particular decisions, and this can have practical and legal ramifications.

Overall, this is interesting work that should suggest to computer scientists who are focusing exclusively on deep learning that they may be missing a trick. The evolutionary approach is a powerful alternative that can be applied in a wide set of situations.

Indeed, some researchers have begun to use it to evolve better deep-learning machines. What could possibly go wrong?

Ref: https://arxiv.org/abs/1806.05695: Evolving Simple Programs for Playing Atari Games