An agent-based model (ABM) is a class of computational models for simulating the actions and interactions of autonomous agents (either individual or collective entities such as organizations or groups) with a view to assessing their effects on the system as a whole. It combines elements of game theory, complex systems, emergence, computational sociology, multi-agent systems, and evolutionary programming. Monte Carlo methods are used to introduce randomness. Particularly within ecology, ABMs are also called individual-based models (IBMs), and individuals within IBMs may be simpler than fully autonomous agents within ABMs. A review of recent literature on individual-based models, agent-based models, and multiagent systems shows that ABMs are used in non-computing-related scientific domains, including biology, ecology and social science. Agent-based modeling is related to, but distinct from, the concept of multi-agent systems or multi-agent simulation, in that the goal of ABM is to search for explanatory insight into the collective behavior of agents obeying simple rules, typically in natural systems, rather than to design agents or solve specific practical or engineering problems.

Agent-based models are a kind of microscale model that simulate the simultaneous operations and interactions of multiple agents in an attempt to re-create and predict the appearance of complex phenomena. The process is one of emergence from the lower (micro) level of systems to a higher (macro) level. As such, a key notion is that simple behavioral rules generate complex behavior. This principle, known as K.I.S.S. ("Keep it simple, stupid"), is extensively adopted in the modeling community. Another central tenet is that the whole is greater than the sum of the parts. Individual agents are typically characterized as boundedly rational, presumed to be acting in what they perceive as their own interests, such as reproduction, economic benefit, or social status, using heuristics or simple decision-making rules. ABM agents may also undergo "learning", adaptation, and reproduction.

Most agent-based models are composed of: (1) numerous agents specified at various scales (typically referred to as agent-granularity); (2) decision-making heuristics; (3) learning rules or adaptive processes; (4) an interaction topology; and (5) an environment. ABMs are typically implemented as computer simulations, either as custom software or via ABM toolkits, and this software can then be used to test how changes in individual behaviors affect the system's emergent overall behavior.

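The five components listed above can be made concrete with a minimal sketch. Everything in it is a hypothetical illustration rather than a standard model: agents hold integer wealth (1), the decision heuristic is "give one unit to a neighbor if solvent and a coin flip agrees" (2), the adaptive rule nudges each agent's giving probability up or down with its wealth (3), the interaction topology is a ring (4), and the environment is an external source of occasional income (5). The names `Agent`, `ring_topology`, and `run` are assumptions made for this example.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    uid: int
    wealth: int = 5
    give_prob: float = 0.5  # adaptive parameter, changed by the learning rule

    def decide(self, rng: random.Random) -> bool:
        # (2) Decision heuristic: give one unit only if solvent and a coin flip agrees.
        return self.wealth > 0 and rng.random() < self.give_prob

    def learn(self) -> None:
        # (3) Adaptive rule: more cautious when broke, more generous when rich.
        if self.wealth == 0:
            self.give_prob = max(0.1, self.give_prob - 0.1)
        elif self.wealth > 10:
            self.give_prob = min(0.9, self.give_prob + 0.1)


def ring_topology(n: int) -> dict[int, list[int]]:
    # (4) Interaction topology: each agent interacts only with its two ring neighbors.
    return {i: [(i - 1) % n, (i + 1) % n] for i in range(n)}


def run(n_agents: int = 20, steps: int = 100, seed: int = 0) -> list[Agent]:
    rng = random.Random(seed)
    agents = [Agent(uid=i) for i in range(n_agents)]  # (1) the agent population
    neighbors = ring_topology(n_agents)
    environment = {"income_prob": 0.05}  # (5) environment: occasional external income
    for _ in range(steps):
        for agent in rng.sample(agents, len(agents)):  # random activation order each tick
            if rng.random() < environment["income_prob"]:
                agent.wealth += 1
            if agent.decide(rng):
                target = agents[rng.choice(neighbors[agent.uid])]
                agent.wealth -= 1
                target.wealth += 1
            agent.learn()
    return agents


if __name__ == "__main__":
    population = run()
    wealths = sorted(a.wealth for a in population)
    # The macro-level observable (the wealth distribution) emerges from micro-level rules.
    print("min/median/max wealth:", wealths[0], wealths[len(wealths) // 2], wealths[-1])
```

Changing a single individual-level rule (for example the thresholds in `learn`) and re-running is the kind of experiment described above: the question is how the aggregate distribution responds.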

History

The idea of agent-based modeling was developed as a relatively simple concept in the late 1940s. Since it requires computation-intensive procedures, it did not become widespread until the 1990s.

Early developments

The history of the agent-based model can be traced back to the von Neumann machine, a theoretical machine capable of reproduction. The device von Neumann proposed would follow precisely detailed instructions to fashion a copy of itself. The concept was then built upon by von Neumann's friend Stanislaw Ulam, also a mathematician; Ulam suggested that the machine be built on paper, as a collection of cells on a grid. The idea intrigued von Neumann, who drew it up, creating the first of the devices later termed cellular automata.

Another advance was introduced by the mathematician John Conway, who constructed the well-known Game of Life. Unlike von Neumann's machine, Conway's Game of Life operated by extremely simple rules in a virtual world in the form of a two-dimensional checkerboard.
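Conway's rules fit in a few lines: a dead cell with exactly three live neighbors becomes alive, a live cell with two or three live neighbors survives, and every other cell is dead in the next generation. The sketch below stores only the live cells as a set of `(x, y)` coordinates, an illustrative choice that makes the board effectively unbounded; `life_step` is a hypothetical helper name.

```python
from collections import Counter


def life_step(live: set[tuple[int, int]]) -> set[tuple[int, int]]:
    """Advance Conway's Game of Life by one generation on an unbounded grid."""
    # For every cell adjacent to a live cell, count its live neighbors (Moore neighborhood).
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbors; survival on 2 or 3; everything else dies or stays dead.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


if __name__ == "__main__":
    blinker = {(0, 1), (1, 1), (2, 1)}  # a horizontal bar of three cells
    print(sorted(life_step(blinker)))
```

Run on the three-cell "blinker", the horizontal bar turns into the vertical bar `[(1, 0), (1, 1), (1, 2)]` and back again on the next step, a small example of structure arising from purely local rules.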

1970s and 1980s: the first models

One of the earliest agent-based models in concept was Thomas Schelling's segregation model, which was discussed in his paper "Dynamic Models of Segregation" in 1971. Though Schelling originally used coins and graph paper rather than computers, his models embodied the basic concept of agent-based models as autonomous agents interacting in a shared environment with an observed aggregate, emergent outcome.
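A minimal computer version of a Schelling-style model might look like the following sketch. It assumes a square grid that wraps around at the edges, two agent types `"A"` and `"B"`, a fixed share of empty cells, and a rule that an agent moves to a random empty cell when fewer than `threshold` of its occupied neighbors share its type. These choices, and all function names, are illustrative assumptions rather than Schelling's original coin-and-graph-paper procedure.

```python
import random

EMPTY = None


def neighbors(grid, r, c):
    """Yield the eight Moore-neighborhood cells around (r, c) on a wrap-around grid."""
    n = len(grid)
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if (dr, dc) != (0, 0):
                yield grid[(r + dr) % n][(c + dc) % n]


def like_fraction(grid, r, c):
    """Share of occupied neighbors with the same type as (r, c); None if isolated."""
    occupied = [x for x in neighbors(grid, r, c) if x is not EMPTY]
    if not occupied:
        return None
    return sum(x == grid[r][c] for x in occupied) / len(occupied)


def is_unhappy(grid, r, c, threshold):
    frac = like_fraction(grid, r, c)
    return frac is not None and frac < threshold


def schelling(n=20, empty_frac=0.1, threshold=0.3, max_rounds=100, seed=0):
    rng = random.Random(seed)
    n_empty = int(n * n * empty_frac)  # assumed > 0 so unhappy agents can move
    n_agents = n * n - n_empty
    cells = ["A"] * (n_agents // 2) + ["B"] * (n_agents - n_agents // 2) + [EMPTY] * n_empty
    rng.shuffle(cells)
    grid = [cells[i * n:(i + 1) * n] for i in range(n)]

    for rnd in range(max_rounds):
        # Agents found unhappy at the start of the round all get to move this round.
        unhappy = [(r, c) for r in range(n) for c in range(n)
                   if grid[r][c] is not EMPTY and is_unhappy(grid, r, c, threshold)]
        if not unhappy:
            return grid, rnd  # every agent is satisfied: a stable configuration
        empties = [(r, c) for r in range(n) for c in range(n) if grid[r][c] is EMPTY]
        rng.shuffle(unhappy)
        for r, c in unhappy:
            # Relocate to a random empty cell; the vacated cell becomes available.
            i = rng.randrange(len(empties))
            er, ec = empties[i]
            grid[er][ec], grid[r][c] = grid[r][c], EMPTY
            empties[i] = (r, c)
    return grid, max_rounds


def mean_like_fraction(grid):
    """Aggregate segregation measure: average like-neighbor share over non-isolated agents."""
    n = len(grid)
    fracs = [like_fraction(grid, r, c) for r in range(n) for c in range(n)
             if grid[r][c] is not EMPTY]
    fracs = [f for f in fracs if f is not None]
    return sum(fracs) / len(fracs)
```

Comparing `mean_like_fraction` on the initial random grid (around 0.5 for two equal groups) with its value after `schelling` returns illustrates Schelling's point: even a mild individual preference such as `threshold=0.3` typically produces a noticeably more segregated aggregate pattern.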

In the early 1980s, Robert Axelrod hosted a tournament of Prisoner's Dilemma strategies and had them interact in an agent-based manner to determine a winner. Axelrod would go on to develop many other agent-based models in the field of political science that examine phenomena ranging from ethnocentrism to the dissemination of culture.
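The tournament idea can be sketched as a round-robin of iterated Prisoner's Dilemma matches. The payoffs (temptation 5, reward 3, punishment 1, sucker 0) and 200-move matches follow the values usually reported for Axelrod's first tournament, but the four strategies are a small, hypothetical field chosen for illustration, and self-play is omitted for simplicity.

```python
from itertools import combinations

# (my move, their move) -> (my payoff, their payoff); "C" = cooperate, "D" = defect
PAYOFF = {("C", "C"): (3, 3), ("C", "D"): (0, 5), ("D", "C"): (5, 0), ("D", "D"): (1, 1)}


def always_cooperate(my_hist, their_hist):
    return "C"


def always_defect(my_hist, their_hist):
    return "D"


def tit_for_tat(my_hist, their_hist):
    # Cooperate first, then copy the opponent's previous move.
    return their_hist[-1] if their_hist else "C"


def grim_trigger(my_hist, their_hist):
    # Cooperate until the opponent defects once, then defect forever.
    return "D" if "D" in their_hist else "C"


def play_match(s1, s2, rounds=200):
    h1, h2 = [], []
    score1 = score2 = 0
    for _ in range(rounds):
        m1, m2 = s1(h1, h2), s2(h2, h1)  # each strategy sees its own history first
        p1, p2 = PAYOFF[(m1, m2)]
        score1 += p1
        score2 += p2
        h1.append(m1)
        h2.append(m2)
    return score1, score2


def tournament(strategies, rounds=200):
    """Round-robin without self-play; returns total score per strategy name."""
    totals = {s.__name__: 0 for s in strategies}
    for s1, s2 in combinations(strategies, 2):
        a, b = play_match(s1, s2, rounds)
        totals[s1.__name__] += a
        totals[s2.__name__] += b
    return totals


if __name__ == "__main__":
    field = [always_cooperate, always_defect, tit_for_tat, grim_trigger]
    for name, score in sorted(tournament(field).items(), key=lambda kv: -kv[1]):
        print(f"{name:>16}: {score}")
```

In this tiny field `always_defect` totals 1408 against 1399 for both `tit_for_tat` and `grim_trigger`, purely because it exploits `always_cooperate`: the ranking depends on which strategies are entered. In Axelrod's much larger tournaments, tit-for-tat came out on top.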

By the late 1980s, Craig Reynolds' work on flocking models contributed to the development of some of the first biological agent-based models that contained social characteristics. He tried to model the behavior of living biological agents, an approach known as artificial life, a term coined by Christopher Langton.
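Reynolds' flocking agents ("boids") are usually described by three local steering rules: separation (avoid crowding neighbors), alignment (match neighbors' velocity), and cohesion (move toward neighbors' center). The sketch below is a minimal 2D version; the neighborhood radius, rule weights, speed cap, and the `polarization` order parameter are illustrative assumptions, not Reynolds' published parameters.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float


def flock_step(boids, radius=10.0, sep_dist=2.0, w_sep=0.05, w_align=0.05,
               w_coh=0.01, max_speed=2.0):
    """One synchronous update: each boid reacts to the previous state of the others."""
    new_boids = []
    for b in boids:
        near = [o for o in boids
                if o is not b and math.hypot(o.x - b.x, o.y - b.y) < radius]
        ax = ay = 0.0
        if near:
            k = len(near)
            # Cohesion: steer toward the local center of mass.
            ax += w_coh * (sum(o.x for o in near) / k - b.x)
            ay += w_coh * (sum(o.y for o in near) / k - b.y)
            # Alignment: steer toward the neighbors' average velocity.
            ax += w_align * (sum(o.vx for o in near) / k - b.vx)
            ay += w_align * (sum(o.vy for o in near) / k - b.vy)
            # Separation: push away from neighbors that are too close.
            for o in near:
                d = math.hypot(o.x - b.x, o.y - b.y)
                if 0 < d < sep_dist:
                    ax += w_sep * (b.x - o.x) / d
                    ay += w_sep * (b.y - o.y) / d
        vx, vy = b.vx + ax, b.vy + ay
        speed = math.hypot(vx, vy)
        if speed > max_speed:  # cap the speed so the flock stays numerically stable
            vx, vy = vx * max_speed / speed, vy * max_speed / speed
        new_boids.append(Boid(b.x + vx, b.y + vy, vx, vy))
    return new_boids


def polarization(boids):
    """Order parameter in [0, 1]: 1 when every boid heads in the same direction."""
    ux = uy = 0.0
    for b in boids:
        s = math.hypot(b.vx, b.vy) or 1.0  # a stationary boid contributes nothing
        ux += b.vx / s
        uy += b.vy / s
    return math.hypot(ux, uy) / len(boids)


if __name__ == "__main__":
    rng = random.Random(0)
    flock = [Boid(rng.uniform(0, 20), rng.uniform(0, 20), rng.uniform(-1, 1), rng.uniform(-1, 1))
             for _ in range(30)]
    print("initial polarization:", round(polarization(flock), 2))
    for _ in range(200):
        flock = flock_step(flock)
    print("final polarization:", round(polarization(flock), 2))
```

Note that no boid is told to form a flock; any rise in `polarization` comes only from the three local rules.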

The first use of the word "agent", with a definition as it is used today, is hard to track down. One candidate appears to be John Holland and John H. Miller's 1991 paper "Artificial Adaptive Agents in Economic Theory", based on an earlier conference presentation of theirs.

At the same time, during the 1980s, social scientists, mathematicians, operations researchers, and a scattering of people from other disciplines developed Computational and Mathematical Organization Theory (CMOT). This field grew as a special interest group of The Institute of Management Sciences (TIMS) and its sister society, the Operations Research Society of America (ORSA).

1990s: expansion

With the appearance of StarLogo in 1990, Swarm and NetLogo in the mid-1990s, RePast and AnyLogic in 2000, and GAMA in 2007, as well as some custom-designed code, modeling software became widely available and the range of domains to which ABM was applied grew. Bonabeau (2002) is a good survey of the potential of agent-based modeling as of that time.

The 1990s were especially notable for the expansion of ABM within the social sciences. One notable effort was the large-scale ABM Sugarscape, developed by Joshua M. Epstein and Robert Axtell to simulate and explore the role of social phenomena such as seasonal migrations, pollution, sexual reproduction, combat, and the transmission of disease and even culture. Other notable 1990s developments included the ABM of Kathleen Carley at Carnegie Mellon University, which explored the co-evolution of social networks and culture.

During the 1990s, Nigel Gilbert published the first textbook on social simulation, Simulation for the Social Scientist (1999), and established a journal from the perspective of the social sciences: the Journal of Artificial Societies and Social Simulation (JASSS). Besides JASSS, agent-based models of any discipline are within the scope of the SpringerOpen journal Complex Adaptive Systems Modeling (CASM).

Through the mid-1990s, the social sciences thread of ABM began to focus on such issues as designing effective teams, understanding the communication required for organizational effectiveness, and the behavior of social networks. CMOT, later renamed Computational Analysis of Social and Organizational Systems (CASOS), incorporated more and more agent-based modeling. Samuelson (2000) is a good brief overview of the early history, and Samuelson (2005) and Samuelson and Macal (2006) trace the more recent developments.

In the late 1990s, the merger of TIMS and ORSA to form INFORMS, and the move by INFORMS from two meetings each year to one, helped to spur the CMOT group to form a separate society, the North American Association for Computational Social and Organizational Sciences (NAACSOS). Kathleen Carley was a major contributor, especially to models of social networks, obtaining National Science Foundation funding for the annual conference and serving as the first President of NAACSOS. She was succeeded by David Sallach of the University of Chicago and Argonne National Laboratory, and then by Michael Prietula of Emory University. At about the same time NAACSOS began, the European Social Simulation Association (ESSA) and the Pacific Asian Association for Agent-Based Approach in Social Systems Science (PAAA), counterparts of NAACSOS, were organized.

As of 2013, these three organizations collaborate internationally. The First World Congress on Social Simulation was held under their joint sponsorship in Kyoto, Japan, in August 2006. The Second World Congress was held in the northern Virginia suburbs of Washington, D.C., in July 2008, with George Mason University taking the lead role in local arrangements.

2000s and later

More recently, Ron Sun developed methods for basing agent-based simulation on models of human cognition, known as cognitive social simulation. Bill McKelvey, Suzanne Lohmann, Dario Nardi, Dwight Read and others at UCLA have also made significant contributions in organizational behavior and decision-making. Since 2001, UCLA has arranged a conference at Lake Arrowhead, California, that has become another major gathering point for practitioners in this field. In 2014, Sadegh Asgari from Columbia University and his colleagues developed an agent-based model of competitive bidding in construction. While the model was used to analyze low-bid lump-sum construction bids, it could be applied to other bidding methods with few modifications.

Theory

Most computational modeling research describes systems in equilibrium or as moving between equilibria. Agent-based modeling, however, using simple rules, can result in different sorts of complex and interesting behavior. The three ideas central to agent-based models are agents as objects, emergence, and complexity.

Agent-based models consist of dynamically interacting rule-based agents. The systems within which they interact can create real-world-like complexity. Typically agents are situated in space and time and reside in networks or in lattice-like neighborhoods. The location of the agents and their responsive behavior are encoded in algorithmic form in computer programs. In some cases, though not always, the agents may be considered intelligent and purposeful. In ecological ABM (often referred to as "individual-based models" in ecology), agents may, for example, be trees in a forest, and would not be considered intelligent, although they may be "purposeful" in the sense of optimizing access to a resource (such as water).

The modeling process is best described as inductive. The modeler makes the assumptions thought most relevant to the situation at hand and then watches phenomena emerge from the agents' interactions. Sometimes the result is an equilibrium. Sometimes it is an emergent pattern. Sometimes, however, it is an unintelligible mangle.

In some ways, agent-based models complement traditional analytic methods. Where analytic methods enable humans to characterize the equilibria of a system, agent-based models allow the possibility of generating those equilibria. This generative contribution may be the most mainstream of the potential benefits of agent-based modeling.

Agent-based models can explain the emergence of higher-order patterns: network structures of terrorist organizations and the Internet, power-law distributions in the sizes of traffic jams, wars, and stock-market crashes, and social segregation that persists despite populations of tolerant people. Agent-based models also can be used to identify lever points, defined as moments in time at which interventions have extreme consequences, and to distinguish among types of path dependency.

Rather than focusing on stable states, many models consider a system's robustness: the ways that complex systems adapt to internal and external pressures so as to maintain their functionalities. The task of harnessing that complexity requires consideration of the agents themselves: their diversity, connectedness, and level of interactions.

Framework

Recent work on the modeling and simulation of complex adaptive systems has demonstrated the need for combining agent-based and complex network-based models. One proposed framework consists of four levels of developing models of complex adaptive systems, illustrated using several multidisciplinary case studies:

- Complex Network Modeling Level for developing models using interaction data of various system components.

- Exploratory Agent-based Modeling Level for developing agent-based models for assessing the feasibility of further research. This can be useful, for example, for developing proof-of-concept models for funding applications without requiring an extensive learning curve for the researchers.

- Descriptive Agent-based Modeling (DREAM) for developing descriptions of agent-based models by means of templates and complex network-based models. Building DREAM models allows model comparison across scientific disciplines.

- Validated agent-based modeling using Virtual Overlay Multiagent system (VOMAS) for the development of verified and validated models in a formal manner.

Other methods of describing agent-based models include code templates and text-based methods such as the ODD (Overview, Design concepts, and Details) protocol.
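To show what a text-based template such as ODD asks a modeler to supply, the sketch below encodes the seven elements usually listed for the protocol (purpose; entities, state variables, and scales; process overview and scheduling; design concepts; initialization; input data; submodels), grouped under Overview, Design concepts, and Details. The class and method names are hypothetical; the point is only that an ODD description is a fixed checklist that can be checked for gaps and rendered in a standard order.

```python
from dataclasses import dataclass, fields


@dataclass
class ODDDescription:
    # Overview
    purpose: str = ""
    entities_state_variables_and_scales: str = ""
    process_overview_and_scheduling: str = ""
    # Design concepts
    design_concepts: str = ""
    # Details
    initialization: str = ""
    input_data: str = ""
    submodels: str = ""

    def missing(self) -> list[str]:
        """Names of elements that have not been filled in yet."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def render(self) -> str:
        """Plain-text description with the elements in their fixed ODD order."""
        parts = []
        for i, f in enumerate(fields(self), start=1):
            title = f.name.replace("_", " ").capitalize()
            body = getattr(self, f.name) or "(not yet described)"
            parts.append(f"{i}. {title}\n{body}")
        return "\n\n".join(parts)


if __name__ == "__main__":
    draft = ODDDescription(purpose="Explain how mild preferences can yield strong segregation.")
    print("still missing:", ", ".join(draft.missing()))
    print(draft.render())
```

Because every description follows the same order, two models written up this way can be compared element by element, which is the main motivation for such templates.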

The role of the environment where agents live, both macro and micro, is also becoming an important factor in agent-based modeling and simulation work. A simple environment affords simple agents, but complex environments generate diversity of behavior.

Applications

In biology

Agent-based modeling has been used extensively in biology, including the analysis of the spread of epidemics and the threat of biowarfare; biological applications including population dynamics, vegetation ecology, landscape diversity, the growth and decline of ancient civilizations, evolution of ethnocentric behavior, forced displacement/migration, language choice dynamics, and cognitive modeling; and biomedical applications including modeling 3D breast tissue formation/morphogenesis, the effects of ionizing radiation on mammary stem cell sub-population dynamics, inflammation, and the human immune system. Agent-based models have also been used for developing decision support systems, such as for breast cancer.

Agent-based models are increasingly being used to model pharmacological systems in early-stage and pre-clinical research to aid in drug development and to gain insights into biological systems that would not be possible a priori. Military applications have also been evaluated. Moreover, agent-based models have recently been employed to study molecular-level biological systems.

In business, technology and network theory

Agent-based models have been used since the mid-1990s to solve a variety of business and technology problems. Examples of applications include the modeling of organizational behavior and cognition, team working, supply chain optimization and logistics, modeling of consumer behavior (including word of mouth and social network effects), distributed computing, workforce management, and portfolio management. They have also been used to analyze traffic congestion.

Recently, agent-based modeling and simulation has been applied to various domains, such as studying the impact of researchers' choice of publication venue (journals versus conferences) in the computer science domain. In addition, ABMs have been used to simulate information delivery in ambient assisted environments. A November 2016 article in arXiv analyzed an agent-based simulation of how posts spread in the Facebook online social network.

In the domain of peer-to-peer, ad hoc and other self-organizing and complex networks, the usefulness of agent-based modeling and simulation has been shown. The use of a computer science-based formal specification framework coupled with wireless sensor networks and an agent-based simulation has recently been demonstrated.

Agent-based evolutionary search (agent-based evolutionary algorithms) is a new research topic for solving complex optimization problems.

In economics and social sciences

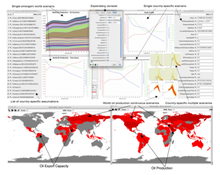

Graphic user interface for an agent-based modeling tool.

Both before and in the wake of the financial crisis, interest has grown in ABMs as possible tools for economic analysis. ABMs do not assume that the economy can achieve equilibrium, and "representative agents" are replaced by agents with diverse, dynamic, and interdependent behavior, including herding.

ABMs take a "bottom-up" approach and can generate extremely complex and volatile simulated economies. ABMs can represent unstable systems with crashes and booms that develop out of non-linear (disproportionate) responses to comparatively small changes. A July 2010 article in The Economist looked at ABMs as alternatives to DSGE models. The journal Nature also encouraged agent-based modeling with an editorial suggesting that ABMs can do a better job of representing financial markets and other economic complexities than standard models, along with an essay by J. Doyne Farmer and Duncan Foley that argued ABMs could fulfill both the desire of Keynes to represent a complex economy and that of Robert Lucas to construct models based on microfoundations.

Farmer and Foley pointed to progress that has been made using ABMs to model parts of an economy, but argued for the creation of a very large model that incorporates low-level models.

By modeling a complex system of analysts based on three distinct behavioral profiles (imitating, anti-imitating, and indifferent), financial markets were simulated to high accuracy. Results showed a correlation between network morphology and the stock market index.
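The model behind that result is not reproduced here; the following is only a toy sketch, under illustrative assumptions, of how the three profiles might be encoded. Analysts sit on a ring lattice, hold a bullish (+1) or bearish (-1) view, and update it by following (imitating), opposing (anti-imitating), or ignoring (indifferent) their neighbors' majority. The ring network, the profile mix, and the "index" (cumulative net sentiment) are hypothetical choices, as is the name `simulate_market`.

```python
import random


def simulate_market(n=100, k=4, steps=2000, mix=(0.6, 0.2, 0.2), seed=0):
    """Toy market of analysts with imitating, anti-imitating and indifferent profiles."""
    rng = random.Random(seed)
    half = k // 2  # k assumed even: each analyst watches k/2 neighbors on each side
    neigh = {i: [(i + d) % n for d in range(-half, half + 1) if d != 0] for i in range(n)}
    profiles = rng.choices(["imitating", "anti-imitating", "indifferent"], weights=mix, k=n)
    opinion = [rng.choice((-1, 1)) for _ in range(n)]  # +1 bullish, -1 bearish
    index = [0.0]
    for _ in range(steps):
        i = rng.randrange(n)  # asynchronous update: one analyst per step
        signal = sum(opinion[j] for j in neigh[i])
        if profiles[i] == "imitating" and signal != 0:
            opinion[i] = 1 if signal > 0 else -1  # follow the local majority
        elif profiles[i] == "anti-imitating" and signal != 0:
            opinion[i] = -1 if signal > 0 else 1  # go against the local majority
        elif profiles[i] == "indifferent":
            opinion[i] = rng.choice((-1, 1))  # ignore neighbors entirely
        # Hypothetical index: accumulates net sentiment (a crude excess-demand proxy).
        index.append(index[-1] + sum(opinion) / n)
    return index, profiles, opinion


if __name__ == "__main__":
    idx, _, _ = simulate_market()
    print("final index value:", round(idx[-1], 2))
```

Varying `mix`, or replacing the ring with another network, and comparing the resulting index series is the kind of experiment the text describes; this toy makes no claim to reproduce the reported accuracy.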

Since the beginning of the 21st century, ABMs have been deployed in architecture and urban planning to evaluate designs and to simulate pedestrian flow in the urban environment.

There is also a growing field of socio-economic analysis of infrastructure investment that uses ABM's ability to discern systemic impacts upon a socio-economic network.

Organizational ABM: agent-directed simulation

The agent-directed simulation (ADS) metaphor distinguishes between two categories, namely "Systems for Agents" and "Agents for Systems." Systems for Agents (sometimes referred to as agent systems) are systems implementing agents for use in engineering, human and social dynamics, military applications, and others. Agents for Systems are divided into two subcategories. Agent-supported systems deal with the use of agents as a support facility to enable computer assistance in problem solving or to enhance cognitive capabilities. Agent-based systems focus on the use of agents for the generation of model behavior in a system evaluation (system studies and analyses).

Implementation

Much agent-based modeling software is designed for serial von Neumann computer architectures. This limits the speed and scalability of these systems. A recent development is the use of data-parallel algorithms on graphics processing units (GPUs) for ABM simulation. The extreme memory bandwidth combined with the sheer number-crunching power of multi-processor GPUs has enabled simulation of millions of agents at tens of frames per second.

Verification and validation

Verification and validation (V&V) of simulation models is extremely important. Verification involves debugging the model to ensure it works correctly, whereas validation ensures that the right model has been built. Face validation, sensitivity analysis, calibration and statistical validation have also been demonstrated. A discrete-event simulation framework approach for the validation of agent-based systems has been proposed. A comprehensive resource on empirical validation of agent-based models is also available.

As an example of a V&V technique, consider VOMAS (virtual overlay multi-agent system), a software-engineering-based approach in which a virtual overlay multi-agent system is developed alongside the agent-based model. The agents in the multi-agent system are able to gather data by generating logs, as well as provide run-time validation and verification support through watch agents and agents that check for any violation of invariants at run time. These are set by the Simulation Specialist with help from the SME (subject-matter expert). Muazi et al. also provide an example of using VOMAS for verification and validation of a forest fire simulation model.

VOMAS provides a formal way of validation and verification. To develop a VOMAS, one must design VOMAS agents along with the agents in the actual simulation, preferably from the start. In essence, by the time the simulation model is complete, one can consider it to be one model containing two models:

- An agent-based model of the intended system

- An agent-based model of the VOMAS

Unlike previous work on verification and validation, VOMAS agents ensure that the simulations are validated in-simulation, i.e., even during execution. In case of any exceptional situations, which are programmed on the directive of the Simulation Specialist (SS), the VOMAS agents can report them. In addition, the VOMAS agents can be used to log key events for the sake of debugging and subsequent analysis of simulations. In other words, VOMAS allows for flexible use of any given technique for the verification and validation of an agent-based model in any domain.
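To make the overlay idea concrete, the sketch below runs a simple forest-fire cellular model (echoing the forest fire example mentioned earlier) alongside a small set of watch agents. Each watch agent checks one invariant at every tick and logs the ticks at which it is violated, without altering the model. This is a hypothetical illustration of the VOMAS principle, not the published VOMAS implementation; `WatchAgent`, `default_watchers`, the fire rules, and the invariants are all assumptions.

```python
import logging
import random
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable

EMPTY, TREE, BURNING, BURNT = 0, 1, 2, 3


@dataclass
class WatchAgent:
    """Hypothetical VOMAS-style overlay agent that checks one invariant every tick."""
    name: str
    invariant: Callable[[dict], bool]
    violations: list[int] = field(default_factory=list)

    def observe(self, tick: int, state: dict) -> None:
        if not self.invariant(state):
            self.violations.append(tick)  # kept for debugging and later analysis
            logging.warning("tick %d: invariant %r violated", tick, self.name)


def default_watchers() -> list[WatchAgent]:
    # Illustrative invariants a simulation specialist and SME might agree on.
    return [
        WatchAgent("cell count conserved", lambda s: sum(s["counts"].values()) == s["n_cells"]),
        WatchAgent("trees never regrow", lambda s: s["counts"][TREE] <= s["prev"][TREE]),
        WatchAgent("burnt cells never revert", lambda s: s["counts"][BURNT] >= s["prev"][BURNT]),
    ]


def forest_fire(n=30, density=0.6, steps=40, seed=0, watchers=()):
    """Simple forest-fire model; the watchers observe it without changing it."""
    rng = random.Random(seed)
    grid = [[TREE if rng.random() < density else EMPTY for _ in range(n)] for _ in range(n)]
    for r in range(n):  # ignite the left edge
        if grid[r][0] == TREE:
            grid[r][0] = BURNING
    prev = Counter(v for row in grid for v in row)
    for tick in range(1, steps + 1):
        new = [row[:] for row in grid]
        for r in range(n):
            for c in range(n):
                if grid[r][c] == BURNING:
                    new[r][c] = BURNT  # a burning cell burns out after one tick
                    for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        rr, cc = r + dr, c + dc
                        if 0 <= rr < n and 0 <= cc < n and grid[rr][cc] == TREE:
                            new[rr][cc] = BURNING  # fire spreads to adjacent trees
        grid = new
        counts = Counter(v for row in grid for v in row)
        state = {"n_cells": n * n, "counts": counts, "prev": prev}
        for w in watchers:  # in-simulation (run-time) validation
            w.observe(tick, state)
        prev = counts
    return grid


if __name__ == "__main__":
    ws = default_watchers()
    forest_fire(watchers=ws)
    print({w.name: w.violations for w in ws})
```

An empty violation list for every watcher means the invariants held throughout the run; a deliberately buggy rule (for example, letting burnt cells turn back into trees) would be flagged at the exact tick it first misbehaves.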

Details of validated agent-based modeling using VOMAS, along with several case studies, are given in a thesis that also covers "exploratory agent-based modeling", "descriptive agent-based modeling" and "validated agent-based modeling", using several worked case-study examples.

Complex systems modelling

Mathematical models of complex systems are of three types: black-box (phenomenological), white-box (mechanistic, based on first principles) and grey-box (mixtures of phenomenological and mechanistic models).

In black-box models, the individual-based (mechanistic) mechanisms of a complex dynamic system remain hidden.

Mathematical models for complex systems

Black-box models are completely non-mechanistic. They are phenomenological and ignore the composition and internal structure of a complex system, so the interactions of subsystems cannot be investigated in such a non-transparent model. A white-box model of a complex dynamic system has "transparent walls" and directly shows the underlying mechanisms. All events at the micro-, meso- and macro-levels of a dynamic system are directly visible at all stages of its white-box model's evolution. In most cases, mathematical modelers use heavy black-box mathematical methods, which cannot produce mechanistic models of complex dynamic systems. Grey-box models are intermediate and combine black-box and white-box approaches.

Logical deterministic individual-based cellular automata model of single species population growth

Creating a white-box model of a complex system requires a priori basic knowledge of the modeling subject. Deterministic logical cellular automata are a necessary but not sufficient condition for a white-box model. The second necessary prerequisite of a white-box model is a physical ontology of the object under study. White-box modeling represents an automatic hyper-logical inference from first principles because it is completely based on deterministic logic and the axiomatic theory of the subject. The purpose of white-box modeling is to derive from the basic axioms more detailed, more concrete mechanistic knowledge about the dynamics of the object under study. The necessity to formulate an intrinsic axiomatic system of the subject before creating its white-box model distinguishes white-box cellular automata models from cellular automata models based on arbitrary logical rules. If cellular automata rules have not been formulated from the first principles of the subject, then such a model may have weak relevance to the real problem.